ai-no-jimaku-gumi

AI no jimaku gumi (AIの字幕組), a subtitle maker for video using AI.

Stars: 130

AI no jimaku gumi is a command-line utility designed to assist in video translation. It supports translating subtitles using AI models and provides options for different translation and subtitle sources. Users can easily set up the tool by following the installation steps and use it to translate videos to different languages with customizable settings. The tool currently supports DeepL and llm translation backends and SRT subtitle export. It aims to simplify the process of adding subtitles to videos by leveraging AI technology.

README:

AI no jimaku gumi is a CLI utility that helps with translating videos and making subtitles for them.

To get started with AI no jimaku gumi, follow these steps:

- Clone the repository:

git clone https://github.com/Inokinoki/ai-no-jimaku-gumi.git

- Navigate to the project directory:

cd ai-no-jimaku-gumi

- Install build dependencies:

Using Homebrew:

brew install cmake ffmpeg

Ubuntu:

apt-get install -y clang cmake make pkg-config \
libavcodec-dev libavdevice-dev libavfilter-dev libavformat-dev \
libavutil-dev libpostproc-dev libswresample-dev libswscale-dev

Fedora:

dnf install clang cmake ffmpeg-free-devel make pkgconf-pkg-config

Arch Linux:

pacman -S clang cmake ffmpeg make pkgconf

Please look for clang, cmake, make, pkgconfig and ffmpeg packages in your distribution, if it's not one of the above.

You might need to install some other packages to enable GPU/NPU acceleration.

TODO

- Build with cargo:

cargo build

- Download a whisper model (other models are available at https://huggingface.co/ggerganov/whisper.cpp):

wget https://huggingface.co/ggerganov/whisper.cpp/resolve/main/ggml-tiny.bin

- Run it with your video path after --input-video-path and the target language after --target-language.
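A minimal sketch of a first run, assuming the debug binary ends up at ./target/debug/ainojimakugumi (the path used in the llm example further down) and a placeholder input file named video.mp4. The helper `jimaku_cmd` is hypothetical and only prints the command so it can be inspected before running; the flags come from the tool's help text.

```shell
#!/bin/sh
# Hypothetical helper (not part of the tool): print the command for a basic run.
# $1 = input video path, $2 = target language (defaults to "en", as in the help text).
jimaku_cmd() {
  video="$1"
  target="${2:-en}"
  echo "./target/debug/ainojimakugumi" \
    "--input-video-path $video" \
    "--target-language $target" \
    "--ggml-model-path ggml-tiny.bin" \
    "--subtitle-output-path output.srt"
}

# video.mp4 is a placeholder file name.
# The default translator backend is deepl, which expects DEEPL_API_KEY to be set.
jimaku_cmd video.mp4 zh
```

Once the printed line looks right, it can be pasted into the shell as-is. Paths containing spaces would need quoting, which this sketch does not handle.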

To use AI no jimaku gumi, refer to this help:

aI NO jimaKu gumI, a subtitle maker using AI.

Usage: ainojimakugumi [OPTIONS] --input-video-path <INPUT_VIDEO_PATH>

Options:

-i, --input-video-path <INPUT_VIDEO_PATH>

Path to the input video

--source-language <SOURCE_LANGUAGE>

Which language to translate from (default: "ja") (possible values: "en", "es", "fr", "de", "it", "ja", "ko", "pt", "ru", "zh") (example: "ja") [default: ja]

--target-language <TARGET_LANGUAGE>

Which language to translate to (default: "en") (possible values: "en", "es", "fr", "de", "it", "ja", "ko", "pt", "ru", "zh") (example: "en") [default: en]

--start-time <START_TIME>

Video start time (not used yet) [default: 0]

--end-time <END_TIME>

Video end time (not used yet) [default: 0]

--subtitle-source <SUBTITLE_SOURCE>

Subtitle source (default: "audio") (possible values: "audio", "container", "ocr") (example: "audio") (long_about: "Subtitle source to use") [default: audio]

--ggml-model-path <GGML_MODEL_PATH>

ggml model path (default: "ggml-tiny.bin") (example: "ggml-tiny.bin", ggml-small.bin") (long_about: "Path to the ggml model") [default: ggml-tiny.bin]

--only-extract-audio

Only extract the audio (default: false) (long_about: "Only extract the audio, if subtitle source is audio, but do not transcribe (Debug purpose)") (example: true)

--only-transcript

Only save the transcripted subtitle (default: false) (long_about: "Only save the transcripted subtitle but do not translate (Debug purpose)") (example: true)

--original-subtitle-path <ORIGINAL_SUBTITLE_PATH>

Original subtitle SRT file path (default: "") (example: "origin.srt") (long_about: "Original subtitle path to save the transcripted subtitle as SRT") [default: ]

--only-translate

Only translate the subtitle (default: false) (long_about: "Only translate the subtitle but do not export (Debug purpose)")

-s, --subtitle-backend <SUBTITLE_BACKEND>

Subtitle backend (default: "srt") (possible values: "srt", "container", "embedded") (example: "srt") (long_about: "Subtitle backend to use") [default: srt]

--subtitle-output-path <SUBTITLE_OUTPUT_PATH>

Subtitle output path (if srt) (default: "output.srt") (example: "output.srt") (long_about: "Subtitle output path (if srt)") [default: output.srt]

-t, --translator-backend <TRANSLATOR_BACKEND>

Translator backend (default: "deepl") (possible values: "deepl", "google", "llm", "whisper") (example: "google") (long_about: "Translator backend to use") [default: deepl]

--llm-model-name <LLM_MODEL_NAME>

Model name (if llm) (default: "gpt-4o") (example: "gpt-4o") (long_about: "Model name (if using llm for translation)") [default: gpt-4o]

--llm-api-base <LLM_API_BASE>

API base (if llm) (default: "https://api.openai.com") (example: "https://api.openai.com") (long_about: "API base used in `genai` crate (if using llm for translation)") [default: https://api.openai.com]

--llm-prompt <LLM_PROMPT>

Prompt (if llm) (default: "") (example: "Translate the following text to English") (long_about: "Prompt (if using llm for translation)") [default: ]

-h, --help

Print help

-V, --version

Print version

We currently support only the deepl, llm and whisper translator backends and srt export.
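For readers who have not seen the srt format before, here is a minimal sketch of its layout: a cue index, a `start --> end` line with `HH:MM:SS,mmm` timestamps, the subtitle text, then a blank line. The helpers `srt_time` and `srt_cue` are made up for illustration and are not how the tool itself writes its output.

```shell
#!/bin/sh
# Illustrative helper: convert milliseconds to an SRT timestamp (HH:MM:SS,mmm).
srt_time() {
  ms="$1"
  printf '%02d:%02d:%02d,%03d' \
    $((ms / 3600000)) $((ms / 60000 % 60)) $((ms / 1000 % 60)) $((ms % 1000))
}

# Illustrative helper: emit one cue.
# $1 = index, $2 = start (ms), $3 = end (ms), $4 = text.
srt_cue() {
  printf '%s\n%s --> %s\n%s\n\n' "$1" "$(srt_time "$2")" "$(srt_time "$3")" "$4"
}

# Two sample cues, as they would appear in a file such as output.srt.
srt_cue 1 0 2500 'Hello'
srt_cue 2 2500 5000 'How are you?'
```

Opening the file produced by --subtitle-output-path in a text editor should show the same overall structure, which makes it easy to spot-check timings and translations by hand.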

You might need to follow the specific instructions to use a translator backend:

- deepl (default): provide your own DeepL API key in the DEEPL_API_KEY environment variable, and set DEEPL_API_URL=https://api.deepl.com if you are using the paid API version.

- llm: if you are using llm translation, refer to the rust-genai repository for more detail. An example:

export CUSTOM_API_KEY=sk-xxxxxxxxxxxxxxxxxxxxxxx

./target/debug/ainojimakugumi --input-video-path one.webm \
--translator-backend llm \
--llm-api-base https://sssss.com/v1/ \
--llm-prompt 'translate this to English' \
--llm-model-name 'gpt-4o-mini' \
--ggml-model-path ggml-small.bin

- whisper (experimental): uses Whisper.cpp to directly output translated subtitles from audio (audio only, English only).
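The backend notes above can be turned into a small pre-flight check. This is only a sketch: `check_backend_env` is a hypothetical helper, and the llm branch assumes the CUSTOM_API_KEY variable from the example, which may differ depending on how rust-genai is configured for your provider.

```shell
#!/bin/sh
# Hypothetical pre-flight check (not part of the tool).
# Returns 0 if the environment looks ready for the given translator backend.
check_backend_env() {
  case "$1" in
    deepl)
      # DeepL needs an API key; DEEPL_API_URL is only needed for the paid API.
      [ -n "${DEEPL_API_KEY:-}" ] || { echo "DEEPL_API_KEY is not set" >&2; return 1; }
      ;;
    llm)
      # Assumption: the key variable matches the README example.
      [ -n "${CUSTOM_API_KEY:-}" ] || { echo "CUSTOM_API_KEY is not set" >&2; return 1; }
      ;;
    whisper)
      # The README lists no key for the whisper backend.
      return 0
      ;;
    *)
      echo "unknown or unsupported backend: $1" >&2
      return 2
      ;;
  esac
}

check_backend_env whisper && echo "whisper: environment looks ready"
```

Calling `check_backend_env deepl` before a long transcription run gives an early failure instead of an error after the audio has already been processed.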

Alternative AI tools for ai-no-jimaku-gumi

Similar Open Source Tools

AIGODLIKE-ComfyUI-Translation

A plugin for multilingual translation of ComfyUI. It translates the resident menu bar, search bar, right-click context menu, nodes, etc.

shell-ai

Shell-AI (`shai`) is a CLI utility that enables users to input commands in natural language and receive single-line command suggestions. It leverages natural language understanding and interactive CLI tools to enhance command line interactions. Users can describe tasks in plain English and receive corresponding command suggestions, making it easier to execute commands efficiently. Shell-AI supports cross-platform usage and is compatible with Azure OpenAI deployments, offering a user-friendly and efficient way to interact with the command line.

llm-sandbox

LLM Sandbox is a lightweight and portable sandbox environment designed to securely execute large language model (LLM) generated code in a safe and isolated manner using Docker containers. It provides an easy-to-use interface for setting up, managing, and executing code in a controlled Docker environment, simplifying the process of running code generated by LLMs. The tool supports multiple programming languages, offers flexibility with predefined Docker images or custom Dockerfiles, and allows scalability with support for Kubernetes and remote Docker hosts.

openmacro

Openmacro is a multimodal personal agent that allows users to run code locally. It acts as a personal agent capable of completing and automating tasks autonomously via self-prompting. The tool provides a CLI natural-language interface for completing and automating tasks, analyzing and plotting data, browsing the web, and manipulating files. Currently, it supports API keys for models powered by SambaNova, with plans to add support for other hosts like OpenAI and Anthropic in future versions.

LLMVoX

LLMVoX is a lightweight 30M-parameter, LLM-agnostic, autoregressive streaming Text-to-Speech (TTS) system designed to convert text outputs from Large Language Models into high-fidelity streaming speech with low latency. It achieves significantly lower Word Error Rate compared to speech-enabled LLMs while operating at comparable latency and speech quality. Key features include being lightweight & fast with only 30M parameters, LLM-agnostic for easy integration with existing models, multi-queue streaming for continuous speech generation, and multilingual support for easy adaptation to new languages.

ruby-openai

Use the OpenAI API with Ruby! Stream text with GPT-4, transcribe and translate audio with Whisper, or create images with DALL·E.

matchlock

Matchlock is a CLI tool designed for running AI agents in isolated and disposable microVMs with network allowlisting and secret injection capabilities. It ensures that your secrets never enter the VM, providing a secure environment for AI agents to execute code without risking access to your machine. The tool offers features such as sealing the network to only allow traffic to specified hosts, injecting real credentials in-flight by the host, and providing a full Linux environment for the agent's operations while maintaining isolation from the host machine. Matchlock supports quick booting of Linux environments, sandbox lifecycle management, image building, and SDKs for Go and Python for embedding sandboxes in applications.

crush

Crush is a versatile tool designed to enhance coding workflows in your terminal. It offers support for multiple LLMs, allows for flexible switching between models, and enables session-based work management. Crush is extensible through MCPs and works across various operating systems. It can be installed using package managers like Homebrew and NPM, or downloaded directly. Crush supports various APIs like Anthropic, OpenAI, Groq, and Google Gemini, and allows for customization through environment variables. The tool can be configured locally or globally, and supports LSPs for additional context. Crush also provides options for ignoring files, allowing tools, and configuring local models. It respects `.gitignore` files and offers logging capabilities for troubleshooting and debugging.

typst-mcp

Typst MCP Server is an implementation of the Model Context Protocol (MCP) that facilitates interaction between AI models and Typst, a markup-based typesetting system. The server offers tools for converting between LaTeX and Typst, validating Typst syntax, and generating images from Typst code. It provides functions such as listing documentation chapters, retrieving specific chapters, converting LaTeX snippets to Typst, validating Typst syntax, and rendering Typst code to images. The server is designed to assist large language models (LLMs) in handling Typst-related tasks efficiently and accurately.

promptic

Promptic is a tool designed for LLM app development, providing a productive and pythonic way to build LLM applications. It leverages LiteLLM, allowing flexibility to switch LLM providers easily. Promptic focuses on building features by providing type-safe structured outputs, easy-to-build agents, streaming support, automatic prompt caching, and built-in conversation memory.

bosquet

Bosquet is a tool designed for LLMOps in large language model-based applications. It simplifies building AI applications by managing LLM and tool services, integrating with Selmer templating library for prompt templating, enabling prompt chaining and composition with Pathom graph processing, defining agents and tools for external API interactions, handling LLM memory, and providing features like call response caching. The tool aims to streamline the development process for AI applications that require complex prompt templates, memory management, and interaction with external systems.

aiavatarkit

AIAvatarKit is a tool for building AI-based conversational avatars quickly. It supports various platforms like VRChat and cluster, along with real-world devices. The tool is extensible, allowing unlimited capabilities based on user needs. It requires VOICEVOX API, Google or Azure Speech Services API keys, and Python 3.10. Users can start conversations out of the box and enjoy seamless interactions with the avatars.

functionary

Functionary is a language model that interprets and executes functions/plugins. It determines when to execute functions, whether in parallel or serially, and understands their outputs. Function definitions are given as JSON Schema Objects, similar to OpenAI GPT function calls. It offers documentation and examples on functionary.meetkai.com. The newest model, meetkai/functionary-medium-v3.1, is ranked 2nd in the Berkeley Function-Calling Leaderboard. Functionary supports models with different context lengths and capabilities for function calling and code interpretation. It also provides grammar sampling for accurate function and parameter names. Users can deploy Functionary models serverlessly using Modal.com.

firecrawl

Firecrawl is an API service that takes a URL, crawls it, and converts it into clean markdown. It crawls all accessible subpages and provides clean markdown for each, without requiring a sitemap. The API is easy to use and can be self-hosted. It also integrates with Langchain and Llama Index. The Python SDK makes it easy to crawl and scrape websites in Python code.

For similar tasks

gpt-subtrans

GPT-Subtrans is an open-source subtitle translator that utilizes large language models (LLMs) as translation services. It supports translation between any language pairs that the language model supports. Note that GPT-Subtrans requires an active internet connection, as subtitles are sent to the provider's servers for translation, and their privacy policy applies.

auto-subs

Auto-subs is a tool designed to automatically transcribe editing timelines using OpenAI Whisper and Stable-TS for extreme accuracy. It generates subtitles in a custom style, is completely free, and runs locally within Davinci Resolve. It works on Mac, Linux, and Windows, supporting both Free and Studio versions of Resolve. Users can jump to positions on the timeline using the Subtitle Navigator and translate from any language to English. The tool provides a user-friendly interface for creating and customizing subtitles for video content.

VideoLingo

VideoLingo is an all-in-one video translation and localization dubbing tool designed to generate Netflix-level high-quality subtitles. It aims to eliminate stiff machine translation, multiple lines of subtitles, and can even add high-quality dubbing, allowing knowledge from around the world to be shared across language barriers. Through an intuitive Streamlit web interface, the entire process from video link to embedded high-quality bilingual subtitles and even dubbing can be completed with just two clicks, easily creating Netflix-quality localized videos. Key features and functions include using yt-dlp to download videos from Youtube links, using WhisperX for word-level timeline subtitle recognition, using NLP and GPT for subtitle segmentation based on sentence meaning, summarizing intelligent term knowledge base with GPT for context-aware translation, three-step direct translation, reflection, and free translation to eliminate strange machine translation, checking single-line subtitle length and translation quality according to Netflix standards, using GPT-SoVITS for high-quality aligned dubbing, and integrating package for one-click startup and one-click output in streamlit.

voice-pro

Voice-Pro is an integrated solution for subtitles, translation, and TTS. It offers features like multilingual subtitles, live translation, vocal remover, and supports OpenAI Whisper and Open-Source Translator. The tool provides a Studio tab for various functions, Whisper Caption tab for subtitle creation, Translate tab for translation, TTS tab for text-to-speech, Live Translation tab for real-time voice recognition, and Batch tab for processing multiple files. Users can download YouTube videos, improve voice recognition accuracy, create automatic subtitles, and produce multilingual videos with ease. The tool is easy to install with one-click and offers a Web-UI for user convenience.

youwee

Youwee is a modern YouTube video downloader tool built with Tauri and React. It offers features like downloading videos from various platforms, following channels, fetching metadata, live stream support, AI video summary and processing, time range download, batch and playlist downloads, audio extraction, subtitle support, subtitle workshop, post-processing, SponsorBlock, speed limit control, download library, multiple themes, and is fast and lightweight.

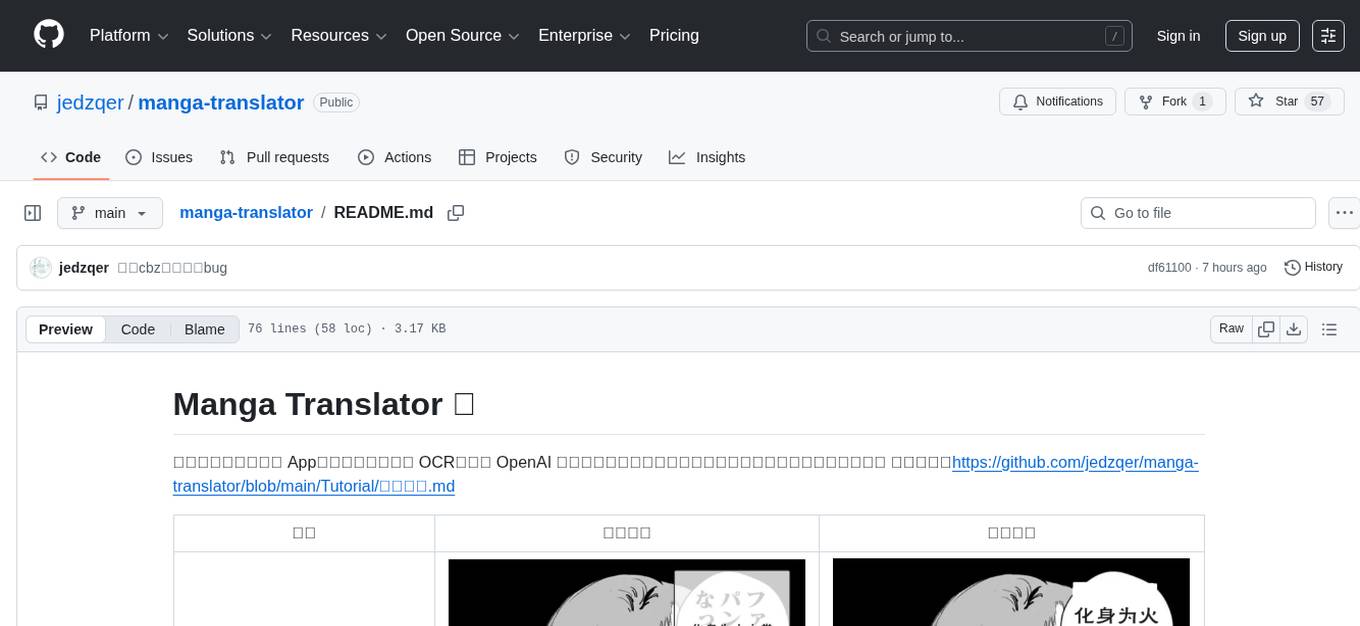

manga-translator

Manga Translator is a tool designed to help users translate manga pages from Japanese to English. It utilizes optical character recognition (OCR) technology to extract text from images and provides a user-friendly interface for translating and editing the text. The tool supports various manga formats and allows users to customize the translation process by adjusting settings such as language preferences and text alignment. With Manga Translator, users can easily translate manga pages for personal use or sharing with others.

chatgpt-subtitle-translator

This tool utilizes the OpenAI ChatGPT API to translate text, with a focus on line-based translation, particularly for SRT subtitles. It optimizes token usage by removing SRT overhead and grouping text into batches, allowing for arbitrary length translations without excessive token consumption while maintaining a one-to-one match between line input and output.

For similar jobs

promptflow

**Prompt flow** is a suite of development tools designed to streamline the end-to-end development cycle of LLM-based AI applications, from ideation, prototyping, testing, evaluation to production deployment and monitoring. It makes prompt engineering much easier and enables you to build LLM apps with production quality.

deepeval

DeepEval is a simple-to-use, open-source LLM evaluation framework specialized for unit testing LLM outputs. It incorporates various metrics such as G-Eval, hallucination, answer relevancy, RAGAS, etc., and runs locally on your machine for evaluation. It provides a wide range of ready-to-use evaluation metrics, allows for creating custom metrics, integrates with any CI/CD environment, and enables benchmarking LLMs on popular benchmarks. DeepEval is designed for evaluating RAG and fine-tuning applications, helping users optimize hyperparameters, prevent prompt drifting, and transition from OpenAI to hosting their own Llama2 with confidence.

MegaDetector

MegaDetector is an AI model that identifies animals, people, and vehicles in camera trap images (which also makes it useful for eliminating blank images). This model is trained on several million images from a variety of ecosystems. MegaDetector is just one of many tools that aim to make conservation biologists more efficient with AI. If you want to learn about other ways to use AI to accelerate camera trap workflows, check out our survey of the field, affectionately titled "Everything I know about machine learning and camera traps".

leapfrogai

LeapfrogAI is a self-hosted AI platform designed to be deployed in air-gapped resource-constrained environments. It brings sophisticated AI solutions to these environments by hosting all the necessary components of an AI stack, including vector databases, model backends, API, and UI. LeapfrogAI's API closely matches that of OpenAI, allowing tools built for OpenAI/ChatGPT to function seamlessly with a LeapfrogAI backend. It provides several backends for various use cases, including llama-cpp-python, whisper, text-embeddings, and vllm. LeapfrogAI leverages Chainguard's apko to harden base python images, ensuring the latest supported Python versions are used by the other components of the stack. The LeapfrogAI SDK provides a standard set of protobuffs and python utilities for implementing backends and gRPC. LeapfrogAI offers UI options for common use-cases like chat, summarization, and transcription. It can be deployed and run locally via UDS and Kubernetes, built out using Zarf packages. LeapfrogAI is supported by a community of users and contributors, including Defense Unicorns, Beast Code, Chainguard, Exovera, Hypergiant, Pulze, SOSi, United States Navy, United States Air Force, and United States Space Force.

llava-docker

This Docker image for LLaVA (Large Language and Vision Assistant) provides a convenient way to run LLaVA locally or on RunPod. LLaVA is a powerful AI tool that combines natural language processing and computer vision capabilities. With this Docker image, you can easily access LLaVA's functionalities for various tasks, including image captioning, visual question answering, text summarization, and more. The image comes pre-installed with LLaVA v1.2.0, Torch 2.1.2, xformers 0.0.23.post1, and other necessary dependencies. You can customize the model used by setting the MODEL environment variable. The image also includes a Jupyter Lab environment for interactive development and exploration. Overall, this Docker image offers a comprehensive and user-friendly platform for leveraging LLaVA's capabilities.

carrot

The 'carrot' repository on GitHub provides a list of free and user-friendly ChatGPT mirror sites for easy access. The repository includes sponsored sites offering various GPT models and services. Users can find and share sites, report errors, and access stable and recommended sites for ChatGPT usage. The repository also includes a detailed list of ChatGPT sites, their features, and accessibility options, making it a valuable resource for ChatGPT users seeking free and unlimited GPT services.

TrustLLM

TrustLLM is a comprehensive study of trustworthiness in LLMs, including principles for different dimensions of trustworthiness, an established benchmark, evaluation and analysis of trustworthiness for mainstream LLMs, and discussion of open challenges and future directions. Specifically, we first propose a set of principles for trustworthy LLMs that span eight different dimensions. Based on these principles, we further establish a benchmark across six dimensions including truthfulness, safety, fairness, robustness, privacy, and machine ethics. We then present a study evaluating 16 mainstream LLMs in TrustLLM, consisting of over 30 datasets. The document explains how to use the trustllm python package to help you assess the performance of your LLM in trustworthiness more quickly. For more details about TrustLLM, please refer to the project website.

AI-YinMei

AI-YinMei is a development tool for AI virtual anchors (VTubers), in an "N card" (NVIDIA GPU) version. It supports knowledge-base chat through fastgpt, with a complete LLM stack of [fastgpt] + [one-api] + [Xinference]; replying to bilibili live-stream barrage comments and welcoming viewers who enter the stream; speech synthesis with Microsoft edge-tts, Bert-VITS2 and GPT-SoVITS; expression control through Vtuber Studio; painting with stable-diffusion-webui and outputting the result to the OBS live room; NSFW image detection with public-NSFW-y-distinguish; search and image search through duckduckgo (requires "magic" Internet access) and image search through Baidu (no "magic" Internet access needed); an AI reply chat box [html plug-in]; AI singing with Auto-Convert-Music; a playlist [html plug-in]; dancing, expression video playback, head-touching and gift-smashing actions; automatically starting to dance while singing, and automatically cycling swing actions while chatting and singing; switching between multiple scenes and background music, with automatic day and night scene switching; and open-ended singing and painting requests, where the AI judges the content automatically.