mlx-vlm

MLX-VLM is a package for inference and fine-tuning of Vision Language Models (VLMs) on your Mac using MLX.

Stars: 2173

MLX-VLM is a package for running vision LLMs on Mac systems using MLX. It installs with pip and can be used from the command line, from a Python script, or through a local server, which makes it a convenient starting point for vision-related projects built on MLX.

README:

MLX-VLM is a package for inference and fine-tuning of Vision Language Models (VLMs) and Omni Models (VLMs with audio and video support) on your Mac using MLX.

- Installation

- Usage

- Activation Quantization (CUDA)

- Multi-Image Chat Support

- Model-Specific Documentation

- Fine-tuning

Some models have detailed documentation with prompt formats, examples, and best practices:

| Model | Documentation |
|---|---|
| DeepSeek-OCR | Docs |
| DeepSeek-OCR-2 | Docs |
| DOTS-OCR | Docs |
| GLM-OCR | Docs |

The easiest way to get started is to install the mlx-vlm package using pip:

pip install -U mlx-vlm

Some models (e.g., Qwen2-VL) require additional dependencies from the torch extra. Quote the package name so that shells such as zsh do not treat the square brackets as a glob pattern:

pip install -U "mlx-vlm[torch]"

This installs torch, torchvision, and other dependencies needed by certain model processors.
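Before loading a model that needs the torch extra, it can help to check whether those modules are importable. The sketch below uses only the standard library; the helper name `missing_modules` is illustrative and not part of MLX-VLM, and the module list covers only the two packages this README names.

```python
import importlib.util


def missing_modules(names):
    """Return the top-level module names that cannot be found in this environment."""
    return [name for name in names if importlib.util.find_spec(name) is None]


# Modules this README says the torch extra installs (other dependencies are not listed).
TORCH_EXTRA_MODULES = ["torch", "torchvision"]

missing = missing_modules(TORCH_EXTRA_MODULES)
if missing:
    print("Missing:", ", ".join(missing), '- try: pip install -U "mlx-vlm[torch]"')
else:
    print("torch extra dependencies found")
```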

Generate output from a model using the CLI:

# Text generation
mlx_vlm.generate --model mlx-community/Qwen2-VL-2B-Instruct-4bit --max-tokens 100 --prompt "Hello, how are you?"

# Image generation
mlx_vlm.generate --model mlx-community/Qwen2-VL-2B-Instruct-4bit --max-tokens 100 --temperature 0.0 --image http://images.cocodataset.org/val2017/000000039769.jpg

# Audio generation (New)
mlx_vlm.generate --model mlx-community/gemma-3n-E2B-it-4bit --max-tokens 100 --prompt "Describe what you hear" --audio /path/to/audio.wav

# Multi-modal generation (Image + Audio)
mlx_vlm.generate --model mlx-community/gemma-3n-E2B-it-4bit --max-tokens 100 --prompt "Describe what you see and hear" --image /path/to/image.jpg --audio /path/to/audio.wav

Launch a chat interface using Gradio:

mlx_vlm.chat_ui --model mlx-community/Qwen2-VL-2B-Instruct-4bit

Here's an example of how to use MLX-VLM in a Python script:

import mlx.core as mx
from mlx_vlm import load, generate
from mlx_vlm.prompt_utils import apply_chat_template
from mlx_vlm.utils import load_config

# Load the model
model_path = "mlx-community/Qwen2-VL-2B-Instruct-4bit"
model, processor = load(model_path)
config = load_config(model_path)

# Prepare input
image = ["http://images.cocodataset.org/val2017/000000039769.jpg"]
# image = [Image.open("...")] can also be used with PIL.Image.Image objects
prompt = "Describe this image."

# Apply chat template
formatted_prompt = apply_chat_template(
    processor, config, prompt, num_images=len(image)
)

# Generate output
output = generate(model, processor, formatted_prompt, image, verbose=False)
print(output)

from mlx_vlm import load, generate

from mlx_vlm.prompt_utils import apply_chat_template
from mlx_vlm.utils import load_config

# Load model with audio support
model_path = "mlx-community/gemma-3n-E2B-it-4bit"
model, processor = load(model_path)
config = model.config

# Prepare audio input
audio = ["/path/to/audio1.wav", "/path/to/audio2.mp3"]
prompt = "Describe what you hear in these audio files."

# Apply chat template with audio
formatted_prompt = apply_chat_template(
    processor, config, prompt, num_audios=len(audio)
)

# Generate output with audio
output = generate(model, processor, formatted_prompt, audio=audio, verbose=False)
print(output)

from mlx_vlm import load, generate

from mlx_vlm.prompt_utils import apply_chat_template
from mlx_vlm.utils import load_config

# Load multi-modal model
model_path = "mlx-community/gemma-3n-E2B-it-4bit"
model, processor = load(model_path)
config = model.config

# Prepare inputs
image = ["/path/to/image.jpg"]
audio = ["/path/to/audio.wav"]
prompt = ""

# Apply chat template
formatted_prompt = apply_chat_template(
    processor, config, prompt,
    num_images=len(image),
    num_audios=len(audio)
)

# Generate output
output = generate(model, processor, formatted_prompt, image, audio=audio, verbose=False)
print(output)

Start the server:

mlx_vlm.server --port 8080

# With trust remote code enabled (required for some models)
mlx_vlm.server --trust-remote-code

- `--host`: Host address (default: `0.0.0.0`)
- `--port`: Port number (default: `8080`)
- `--trust-remote-code`: Trust remote code when loading models from Hugging Face Hub
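When the server is started from a script rather than a terminal, the options above map directly onto an argument list. This is a minimal sketch: `build_server_command` is a hypothetical helper, not part of MLX-VLM, and it uses only the three flags documented above.

```python
import shlex


def build_server_command(host="0.0.0.0", port=8080, trust_remote_code=False):
    """Assemble the mlx_vlm.server argument list from the documented options."""
    command = ["mlx_vlm.server", "--host", host, "--port", str(port)]
    if trust_remote_code:
        command.append("--trust-remote-code")
    return command


command = build_server_command(port=8080, trust_remote_code=True)
print(shlex.join(command))
# To launch it for real (requires mlx-vlm to be installed):
#   import subprocess
#   server = subprocess.Popen(command)
```

Keeping the command as a list rather than a single string avoids shell quoting issues when it is passed to `subprocess`.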

You can also set trust remote code via environment variable:

MLX_TRUST_REMOTE_CODE=true mlx_vlm.server

The server provides multiple endpoints for different use cases and supports dynamic model loading/unloading with caching (one model at a time).

- `/models`: List models available locally
- `/chat/completions`: OpenAI-compatible chat-style interaction endpoint with support for images, audio, and text
- `/responses`: OpenAI-compatible responses endpoint
- `/health`: Check server status
- `/unload`: Unload current model from memory
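The endpoints listed above can be called from any HTTP client. The following is a minimal Python sketch using only the standard library. It assumes the server is running locally on the default port, and the content-part field names (`input_image`, `image_url`, `input_audio`) follow the request examples in this README. The helper names are illustrative, and the shape of the response JSON is deliberately not assumed.

```python
import json
import urllib.request

# Assumption: the server was started locally on the default port.
BASE_URL = "http://localhost:8080"


def build_chat_request(model, prompt, image=None, audio=None, max_tokens=100, stream=False):
    """Build a JSON-serializable body for POST /chat/completions."""
    content = [{"type": "text", "text": prompt}]
    if image is not None:
        content.append({"type": "input_image", "image_url": image})
    if audio is not None:
        content.append({"type": "input_audio", "input_audio": audio})
    return {
        "model": model,
        "messages": [{"role": "user", "content": content}],
        "max_tokens": max_tokens,
        "stream": stream,
    }


def post_json(path, payload, base_url=BASE_URL, timeout=300):
    """POST a JSON payload and return the decoded JSON reply (non-streaming requests only)."""
    request = urllib.request.Request(
        base_url + path,
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )
    with urllib.request.urlopen(request, timeout=timeout) as response:
        return json.loads(response.read().decode("utf-8"))


payload = build_chat_request(
    "mlx-community/Qwen2-VL-2B-Instruct-4bit",
    "What is in this image?",
    image="/path/to/image.jpg",
)
print(json.dumps(payload, indent=2))
# With a server running: reply = post_json("/chat/completions", payload)
```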

curl "http://localhost:8080/models"

curl -X POST "http://localhost:8080/chat/completions" \
  -H "Content-Type: application/json" \
  -d '{
    "model": "mlx-community/Qwen2-VL-2B-Instruct-4bit",
    "messages": [
      {
        "role": "user",
        "content": "Hello, how are you"
      }
    ],
    "stream": true,
    "max_tokens": 100
  }'

curl -X POST "http://localhost:8080/chat/completions" \
  -H "Content-Type: application/json" \
  -d '{
    "model": "mlx-community/Qwen2.5-VL-32B-Instruct-8bit",
    "messages": [
      {
        "role": "system",
        "content": "You are a helpful assistant."
      },
      {
        "role": "user",
        "content": [
          {
            "type": "text",
            "text": "This is the chart of energy demand in California today. Can you provide an analysis of the chart and comment on the implications for renewable energy in California?"
          },
          {
            "type": "input_image",
            "image_url": "/path/to/repo/examples/images/renewables_california.png"
          }
        ]
      }
    ],
    "stream": true,
    "max_tokens": 1000
  }'

curl -X POST "http://localhost:8080/generate" \
  -H "Content-Type: application/json" \
  -d '{
    "model": "mlx-community/gemma-3n-E2B-it-4bit",
    "messages": [
      {
        "role": "user",
        "content": [
          { "type": "text", "text": "Describe what you hear in these audio files" },
          { "type": "input_audio", "input_audio": "/path/to/audio1.wav" },
          { "type": "input_audio", "input_audio": "https://example.com/audio2.mp3" }
        ]
      }
    ],
    "stream": true,
    "max_tokens": 500
  }'

curl -X POST "http://localhost:8080/generate" \
  -H "Content-Type: application/json" \
  -d '{
    "model": "mlx-community/gemma-3n-E2B-it-4bit",
    "messages": [
      {
        "role": "user",
        "content": [
          {"type": "input_image", "image_url": "/path/to/image.jpg"},
          {"type": "input_audio", "input_audio": "/path/to/audio.wav"}
        ]
      }
    ],
    "max_tokens": 100
  }'

curl -X POST "http://localhost:8080/responses" \
  -H "Content-Type: application/json" \
  -d '{
    "model": "mlx-community/Qwen2-VL-2B-Instruct-4bit",
    "messages": [
      {
        "role": "user",
        "content": [
          {"type": "input_text", "text": "What is in this image?"},
          {"type": "input_image", "image_url": "/path/to/image.jpg"}
        ]
      }
    ],
    "max_tokens": 100
  }'

- `model`: Model identifier (required)
- `messages`: Chat messages for chat/OpenAI endpoints
- `max_tokens`: Maximum tokens to generate
- `temperature`: Sampling temperature
- `top_p`: Top-p sampling parameter
- `stream`: Enable streaming responses
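When `stream` is enabled, the client has to reassemble the reply from incremental chunks. This README does not specify the wire format, so the sketch below is an assumption: it supposes the server follows the usual OpenAI-style server-sent events convention (`data: {json}` lines, a final `data: [DONE]`, and text under `choices[0].delta.content`). Check the actual server output before relying on it. The helper name `iter_stream_text` is illustrative.

```python
import json


def iter_stream_text(lines):
    """Yield text fragments from OpenAI-style SSE lines (assumed format, see note above)."""
    for raw in lines:
        line = raw.strip()
        if not line.startswith("data:"):
            continue  # skip blank keep-alive lines and non-data fields
        data = line[len("data:"):].strip()
        if data == "[DONE]":
            break  # conventional end-of-stream marker
        chunk = json.loads(data)
        for choice in chunk.get("choices", []):
            text = (choice.get("delta") or {}).get("content")
            if text:
                yield text


# Hand-written sample lines in the assumed format, not captured server output.
sample = [
    'data: {"choices": [{"delta": {"content": "Hel"}}]}',
    "",
    'data: {"choices": [{"delta": {"content": "lo"}}]}',
    "data: [DONE]",
]
print("".join(iter_stream_text(sample)))  # prints "Hello"
```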

When running on NVIDIA GPUs with MLX CUDA, models quantized with mxfp8 or nvfp4 modes require activation quantization to work properly. This converts QuantizedLinear layers to QQLinear layers which quantize both weights and activations.

Use the -qa or --quantize-activations flag:

mlx_vlm.generate --model /path/to/mxfp8-model --prompt "Describe this image" --image /path/to/image.jpg -qa

Pass quantize_activations=True to the load function:

from mlx_vlm import load, generate

# Load with activation quantization enabled
model, processor = load(
    "path/to/mxfp8-quantized-model",
    quantize_activations=True
)

# Generate as usual
output = generate(model, processor, "Describe this image", image=["image.jpg"])

Quantization modes that need activation quantization on CUDA:

- `mxfp8`: 8-bit MX floating point
- `nvfp4`: 4-bit NVIDIA floating point

Note: This feature is required for mxfp/nvfp quantized models on CUDA. On Apple Silicon (Metal), these models work without the flag.
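The rule in the note above can be written as a small predicate, which is handy when one script targets both CUDA and Apple Silicon. This is a sketch: `needs_activation_quantization` is a hypothetical helper, and the backend string is supplied by the caller because this README does not describe how to detect the backend.

```python
# Quantization modes that, per this README, need activation quantization on CUDA.
ACTIVATION_QUANT_MODES = {"mxfp8", "nvfp4"}


def needs_activation_quantization(quant_mode, backend):
    """Return True when the model's quantization mode requires the flag on this backend."""
    return backend.lower() == "cuda" and quant_mode.lower() in ACTIVATION_QUANT_MODES


for mode, backend in [("mxfp8", "cuda"), ("nvfp4", "metal"), ("4bit", "cuda")]:
    print(mode, backend, needs_activation_quantization(mode, backend))

# Possible use with the documented load() argument:
#   model, processor = load(path, quantize_activations=needs_activation_quantization("mxfp8", "cuda"))
```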

MLX-VLM supports analyzing multiple images simultaneously with select models. This feature enables more complex visual reasoning tasks and comprehensive analysis across multiple images in a single conversation.

from mlx_vlm import load, generate
from mlx_vlm.prompt_utils import apply_chat_template
from mlx_vlm.utils import load_config

model_path = "mlx-community/Qwen2-VL-2B-Instruct-4bit"
model, processor = load(model_path)
config = model.config

images = ["path/to/image1.jpg", "path/to/image2.jpg"]
prompt = "Compare these two images."

formatted_prompt = apply_chat_template(
    processor, config, prompt, num_images=len(images)
)

output = generate(model, processor, formatted_prompt, images, verbose=False)
print(output)

mlx_vlm.generate --model mlx-community/Qwen2-VL-2B-Instruct-4bit --max-tokens 100 --prompt "Compare these images" --image path/to/image1.jpg path/to/image2.jpg

MLX-VLM also supports video analysis such as captioning, summarization, and more, with select models.

The following models support video chat:

- Qwen2-VL

- Qwen2.5-VL

- Idefics3

- LLaVA

With more coming soon.

mlx_vlm.video_generate --model mlx-community/Qwen2-VL-2B-Instruct-4bit --max-tokens 100 --prompt "Describe this video" --video path/to/video.mp4 --max-pixels 224 224 --fps 1.0

These examples demonstrate how to use multiple images and video with MLX-VLM for more complex visual reasoning tasks.

MLX-VLM supports fine-tuning models with LoRA and QLoRA.

To learn more about LoRA, please refer to the LoRA.md file.
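As a rough illustration of why LoRA makes fine-tuning practical on a Mac, the sketch below compares trainable parameter counts for a single weight matrix. It relies only on the standard LoRA formulation (a rank-r update written as the product of a d_out x r matrix and an r x d_in matrix). The layer size and rank are arbitrary example values, not MLX-VLM defaults, and the function names are illustrative.

```python
def full_param_count(d_in, d_out):
    """Trainable parameters when the whole d_out x d_in weight matrix is updated."""
    return d_in * d_out


def lora_param_count(d_in, d_out, rank):
    """Trainable parameters for a rank-r LoRA update: B (d_out x r) plus A (r x d_in)."""
    return rank * (d_in + d_out)


# Example values chosen for illustration only.
d_in, d_out, rank = 4096, 4096, 8
full = full_param_count(d_in, d_out)
lora = lora_param_count(d_in, d_out, rank)
print(f"full: {full:,}  lora: {lora:,}  fraction trained: {lora / full:.4%}")
```

QLoRA applies the same low-rank update on top of a quantized base model, so the trainable-parameter count stays the same while the frozen weights take less memory.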


Similar Open Source Tools

mlx-vlm

MLX-VLM is a package designed for running Vision LLMs on Mac systems using MLX. It provides a convenient way to install and utilize the package for processing large language models related to vision tasks. The tool simplifies the process of running LLMs on Mac computers, offering a seamless experience for users interested in leveraging MLX for vision-related projects.

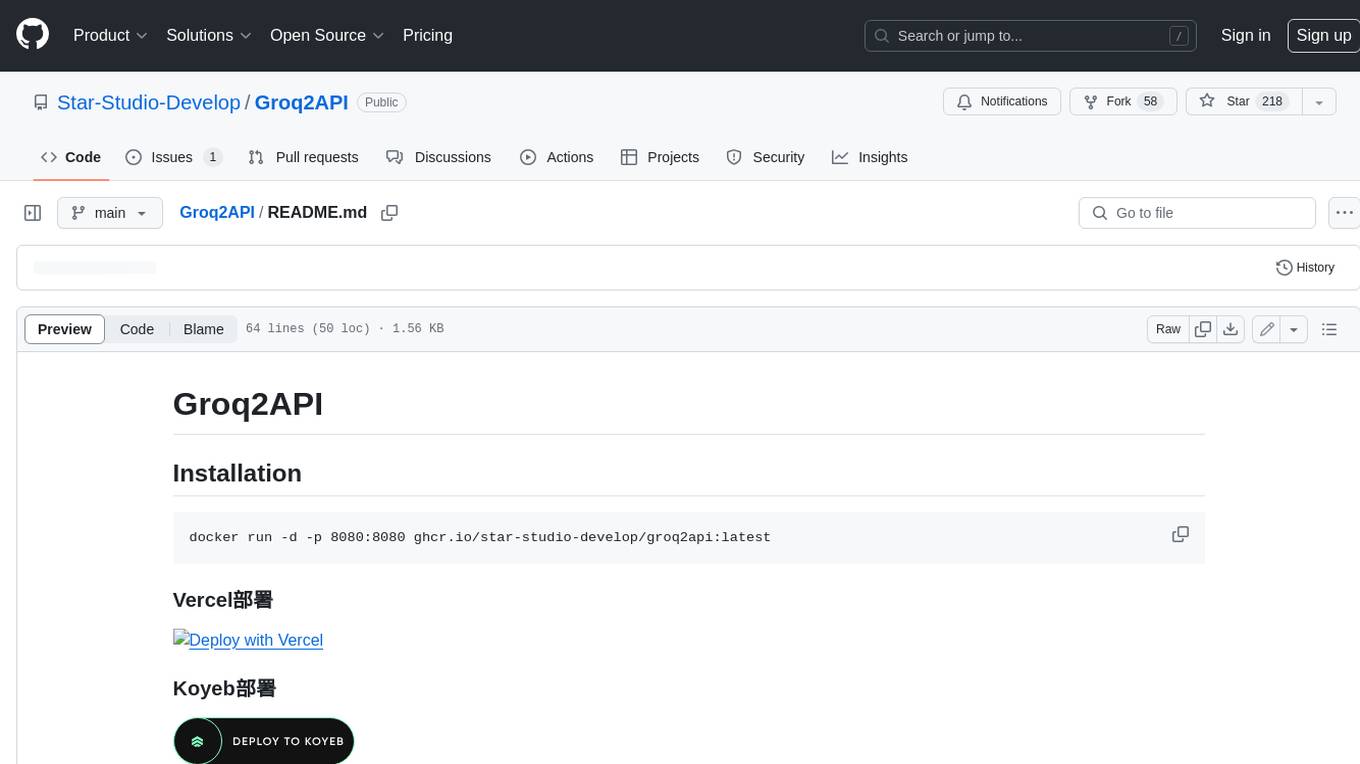

Groq2API

Groq2API is a REST API wrapper around the Groq2 model, a large language model trained by Google. The API allows you to send text prompts to the model and receive generated text responses. The API is easy to use and can be integrated into a variety of applications.

LLMVoX

LLMVoX is a lightweight 30M-parameter, LLM-agnostic, autoregressive streaming Text-to-Speech (TTS) system designed to convert text outputs from Large Language Models into high-fidelity streaming speech with low latency. It achieves significantly lower Word Error Rate compared to speech-enabled LLMs while operating at comparable latency and speech quality. Key features include being lightweight & fast with only 30M parameters, LLM-agnostic for easy integration with existing models, multi-queue streaming for continuous speech generation, and multilingual support for easy adaptation to new languages.

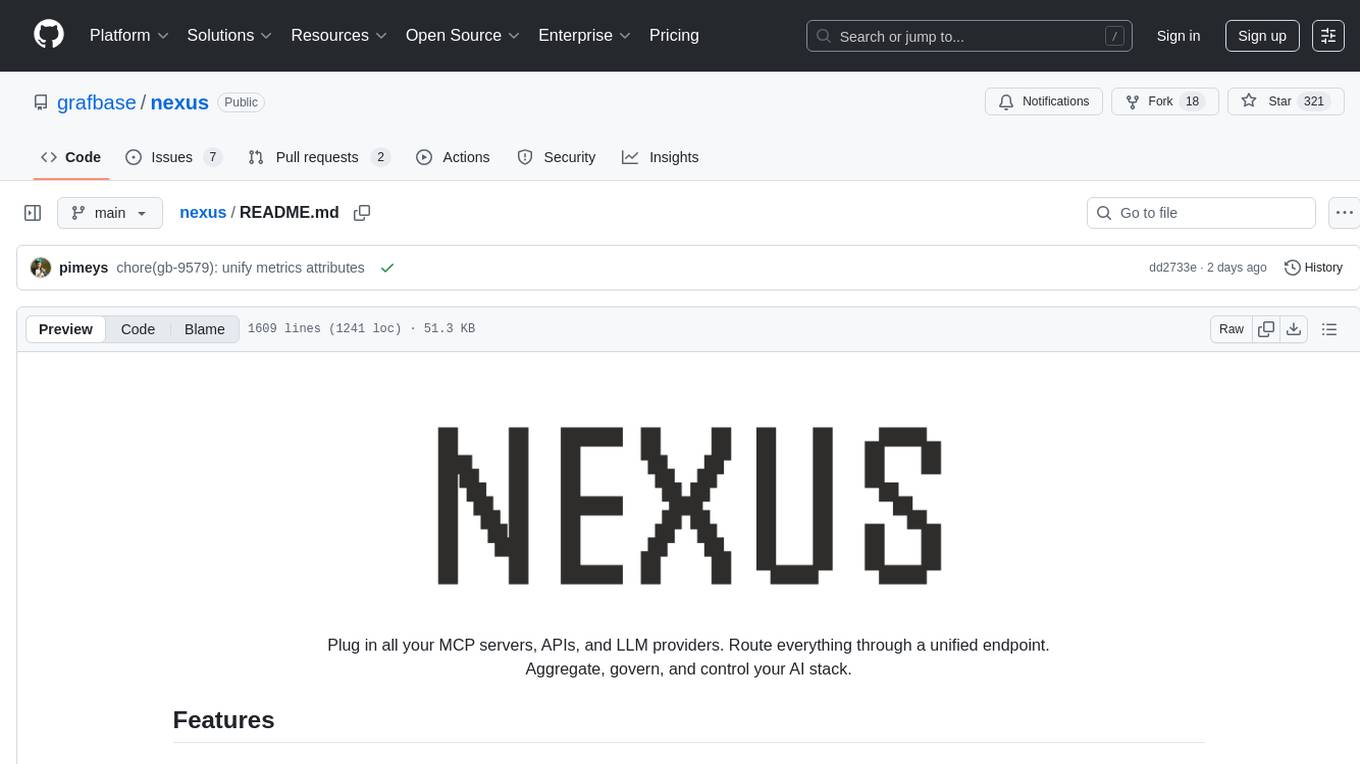

nexus

Nexus is a tool that acts as a unified gateway for multiple LLM providers and MCP servers. It allows users to aggregate, govern, and control their AI stack by connecting multiple servers and providers through a single endpoint. Nexus provides features like MCP Server Aggregation, LLM Provider Routing, Context-Aware Tool Search, Protocol Support, Flexible Configuration, Security features, Rate Limiting, and Docker readiness. It supports tool calling, tool discovery, and error handling for STDIO servers. Nexus also integrates with AI assistants, Cursor, Claude Code, and LangChain for seamless usage.

Bindu

Bindu is an operating layer for AI agents that provides identity, communication, and payment capabilities. It delivers a production-ready service with a convenient API to connect, authenticate, and orchestrate agents across distributed systems using open protocols: A2A, AP2, and X402. Built with a distributed architecture, Bindu makes it fast to develop and easy to integrate with any AI framework. Transform any agent framework into a fully interoperable service for communication, collaboration, and commerce in the Internet of Agents.

LocalAGI

LocalAGI is a powerful, self-hostable AI Agent platform that allows you to design AI automations without writing code. It provides a complete drop-in replacement for OpenAI's Responses APIs with advanced agentic capabilities. With LocalAGI, you can create customizable AI assistants, automations, chat bots, and agents that run 100% locally, without the need for cloud services or API keys. The platform offers features like no-code agents, web-based interface, advanced agent teaming, connectors for various platforms, comprehensive REST API, short & long-term memory capabilities, planning & reasoning, periodic tasks scheduling, memory management, multimodal support, extensible custom actions, fully customizable models, observability, and more.

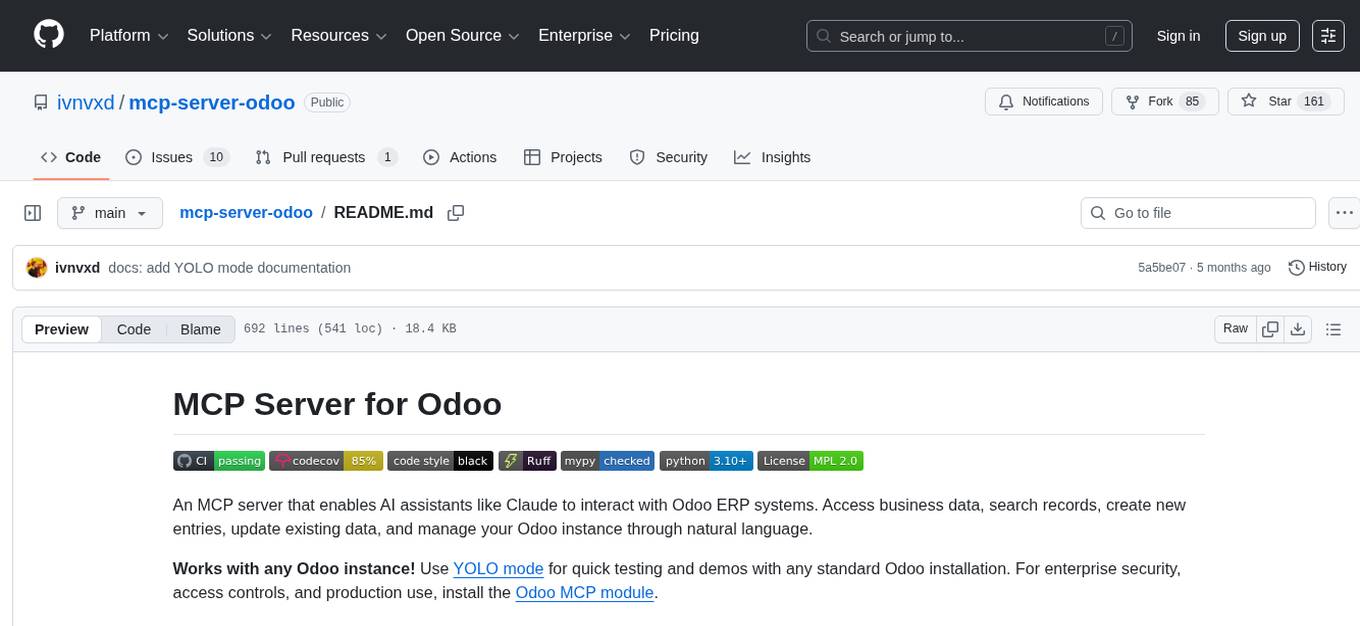

mcp-server-odoo

The MCP Server for Odoo is a tool that enables AI assistants like Claude to interact with Odoo ERP systems. Users can access business data, search records, create new entries, update existing data, and manage their Odoo instance through natural language. The server works with any Odoo instance and offers features like search and retrieve, create new records, update existing data, delete records, browse multiple records, count records, inspect model fields, secure access, smart pagination, LLM-optimized output, and YOLO Mode for quick access. Installation and configuration instructions are provided for different environments, along with troubleshooting tips. The tool supports various tasks such as searching and retrieving records, creating and managing records, listing models, updating records, deleting records, and accessing Odoo data through resource URIs.

llama_ros

This repository provides a set of ROS 2 packages to integrate llama.cpp into ROS 2. By using the llama_ros packages, you can easily incorporate the powerful optimization capabilities of llama.cpp into your ROS 2 projects by running GGUF-based LLMs and VLMs.

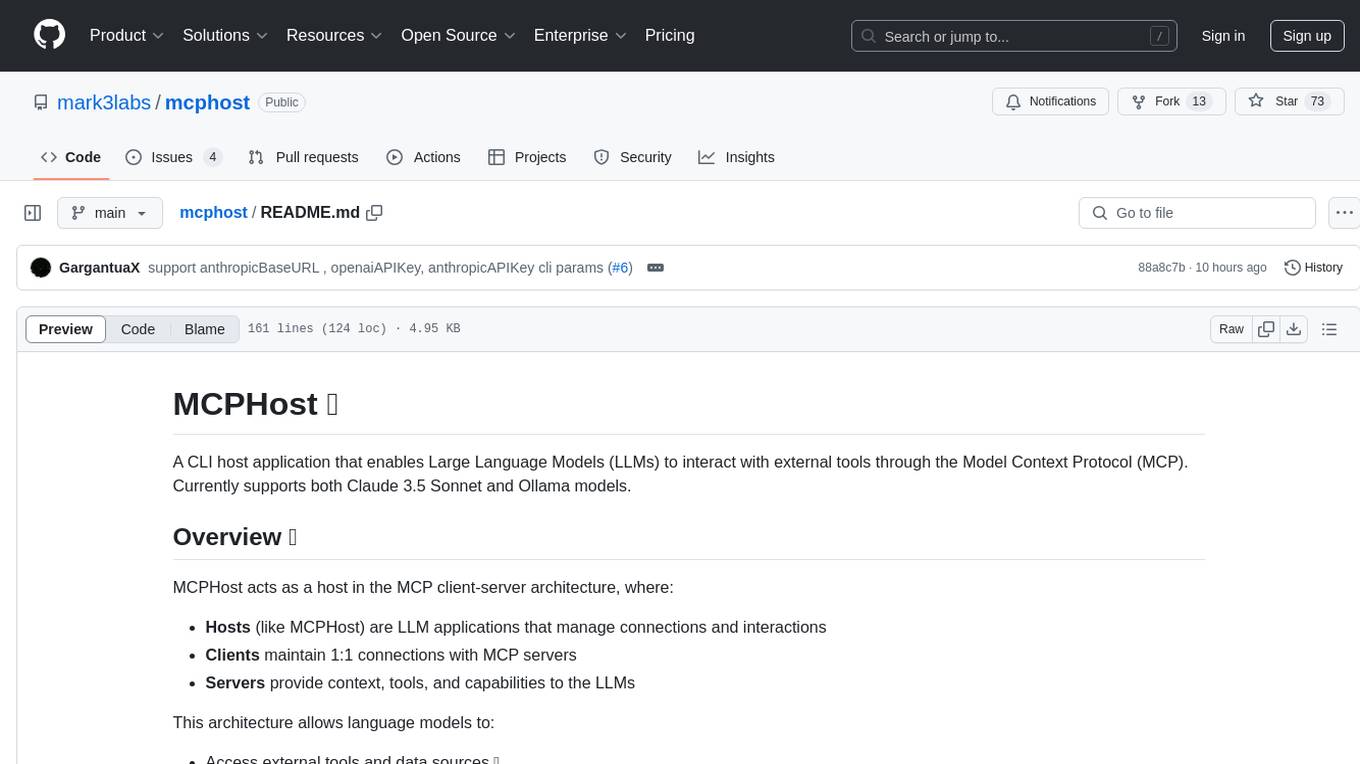

mcphost

MCPHost is a CLI host application that enables Large Language Models (LLMs) to interact with external tools through the Model Context Protocol (MCP). It acts as a host in the MCP client-server architecture, allowing language models to access external tools and data sources, maintain consistent context across interactions, and execute commands safely. The tool supports interactive conversations with Claude 3.5 Sonnet and Ollama models, multiple concurrent MCP servers, dynamic tool discovery and integration, configurable server locations and arguments, and a consistent command interface across model types.

mLLMCelltype

mLLMCelltype is a multi-LLM consensus framework for automated cell type annotation in single-cell RNA sequencing (scRNA-seq) data. The tool integrates multiple large language models to improve annotation accuracy through consensus-based predictions. It offers advantages over single-model approaches by combining predictions from models like OpenAI GPT-5.2, Anthropic Claude-4.6/4.5, Google Gemini-3, and others. Researchers can incorporate mLLMCelltype into existing workflows without the need for reference datasets.

firecrawl

Firecrawl is an API service that empowers AI applications with clean data from any website. It features advanced scraping, crawling, and data extraction capabilities. The repository is still in development, integrating custom modules into the mono repo. Users can run it locally but it's not fully ready for self-hosted deployment yet. Firecrawl offers powerful capabilities like scraping, crawling, mapping, searching, and extracting structured data from single pages, multiple pages, or entire websites with AI. It supports various formats, actions, and batch scraping. The tool is designed to handle proxies, anti-bot mechanisms, dynamic content, media parsing, change tracking, and more. Firecrawl is available as an open-source project under the AGPL-3.0 license, with additional features offered in the cloud version.

crush

Crush is a versatile tool designed to enhance coding workflows in your terminal. It offers support for multiple LLMs, allows for flexible switching between models, and enables session-based work management. Crush is extensible through MCPs and works across various operating systems. It can be installed using package managers like Homebrew and NPM, or downloaded directly. Crush supports various APIs like Anthropic, OpenAI, Groq, and Google Gemini, and allows for customization through environment variables. The tool can be configured locally or globally, and supports LSPs for additional context. Crush also provides options for ignoring files, allowing tools, and configuring local models. It respects `.gitignore` files and offers logging capabilities for troubleshooting and debugging.

Webscout

Webscout is an all-in-one Python toolkit for web search, AI interaction, digital utilities, and more. It provides access to diverse search engines, cutting-edge AI models, temporary communication tools, media utilities, developer helpers, and powerful CLI interfaces through a unified library. With features like comprehensive search leveraging Google and DuckDuckGo, AI powerhouse for accessing various AI models, YouTube toolkit for video and transcript management, GitAPI for GitHub data extraction, Tempmail & Temp Number for privacy, Text-to-Speech conversion, GGUF conversion & quantization, SwiftCLI for CLI interfaces, LitPrinter for styled console output, LitLogger for logging, LitAgent for user agent generation, Text-to-Image generation, Scout for web parsing and crawling, Awesome Prompts for specialized tasks, Weather Toolkit, and AI Search Providers.

llm-sandbox

LLM Sandbox is a lightweight and portable sandbox environment designed to securely execute large language model (LLM) generated code in a safe and isolated manner using Docker containers. It provides an easy-to-use interface for setting up, managing, and executing code in a controlled Docker environment, simplifying the process of running code generated by LLMs. The tool supports multiple programming languages, offers flexibility with predefined Docker images or custom Dockerfiles, and allows scalability with support for Kubernetes and remote Docker hosts.

sparrow

Sparrow is an innovative open-source solution for efficient data extraction and processing from various documents and images. It seamlessly handles forms, invoices, receipts, and other unstructured data sources. Sparrow stands out with its modular architecture, offering independent services and pipelines all optimized for robust performance. One of Sparrow's critical features is its pluggable architecture: you can easily integrate and run data extraction pipelines using tools and frameworks like LlamaIndex, Haystack, or Unstructured. Sparrow enables local LLM data extraction pipelines through Ollama or Apple MLX. Sparrow also provides an API that helps process and transform your data into structured output, ready to be integrated with custom workflows. With Sparrow Agents, you can build independent LLM agents and use the API to invoke them from your system.

**List of available agents:**

* **llamaindex** - RAG pipeline with LlamaIndex for PDF processing
* **vllamaindex** - RAG pipeline with LlamaIndex multimodal for image processing
* **vprocessor** - RAG pipeline with OCR and LlamaIndex for image processing
* **haystack** - RAG pipeline with Haystack for PDF processing
* **fcall** - Function call pipeline
* **unstructured-light** - RAG pipeline with Unstructured and LangChain, supports PDF and image processing
* **unstructured** - RAG pipeline with Weaviate vector DB query, Unstructured and LangChain, supports PDF and image processing
* **instructor** - RAG pipeline with Unstructured and Instructor libraries, supports PDF and image processing. Works great for JSON response generation

For similar tasks

HPT

Hyper-Pretrained Transformers (HPT) is a novel multimodal LLM framework from HyperGAI, trained for vision-language models capable of understanding both textual and visual inputs. The repository contains the open-source implementation of inference code to reproduce the evaluation results of HPT Air on different benchmarks. HPT has achieved competitive results with state-of-the-art models on various multimodal LLM benchmarks. It offers models like HPT 1.5 Air and HPT 1.0 Air, providing efficient solutions for vision-and-language tasks.

learnopencv

LearnOpenCV is a repository containing code for Computer Vision, Deep learning, and AI research articles shared on the blog LearnOpenCV.com. It serves as a resource for individuals looking to enhance their expertise in AI through various courses offered by OpenCV. The repository includes a wide range of topics such as image inpainting, instance segmentation, robotics, deep learning models, and more, providing practical implementations and code examples for readers to explore and learn from.

spark-free-api

The Spark AI Free service provides high-speed streaming output, multi-turn dialogue support, AI drawing support, long document interpretation, and image parsing. It offers zero-configuration deployment, multi-token support, and automatic session trace cleaning. It is fully compatible with the ChatGPT interface. The repository includes multiple free-api projects for various AI services. Users can access the API for tasks such as chat completions, AI drawing, document interpretation, image analysis, and ssoSessionId live checking. The project also provides guidelines for deployment using Docker, Docker-compose, Render, Vercel, and native deployment methods. It recommends using custom clients for faster and simpler access to the free-api series projects.


clarifai-python-grpc

This is the official Clarifai gRPC Python client for interacting with their recognition API. Clarifai offers a platform for data scientists, developers, researchers, and enterprises to utilize artificial intelligence for image, video, and text analysis through computer vision and natural language processing. The client allows users to authenticate, predict concepts in images, and access various functionalities provided by the Clarifai API. It follows a versioning scheme that aligns with the backend API updates and includes specific instructions for installation and troubleshooting. Users can explore the Clarifai demo, sign up for an account, and refer to the documentation for detailed information.

horde-worker-reGen

This repository provides the latest implementation for the AI Horde Worker, allowing users to utilize their graphics card(s) to generate, post-process, or analyze images for others. It offers a platform where users can create images and earn 'kudos' in return, granting priority for their own image generations. The repository includes important details for setup, recommendations for system configurations, instructions for installation on Windows and Linux, basic usage guidelines, and information on updating the AI Horde Worker. Users can also run the worker with multiple GPUs and receive notifications for updates through Discord. Additionally, the repository contains models that are licensed under the CreativeML OpenRAIL License.

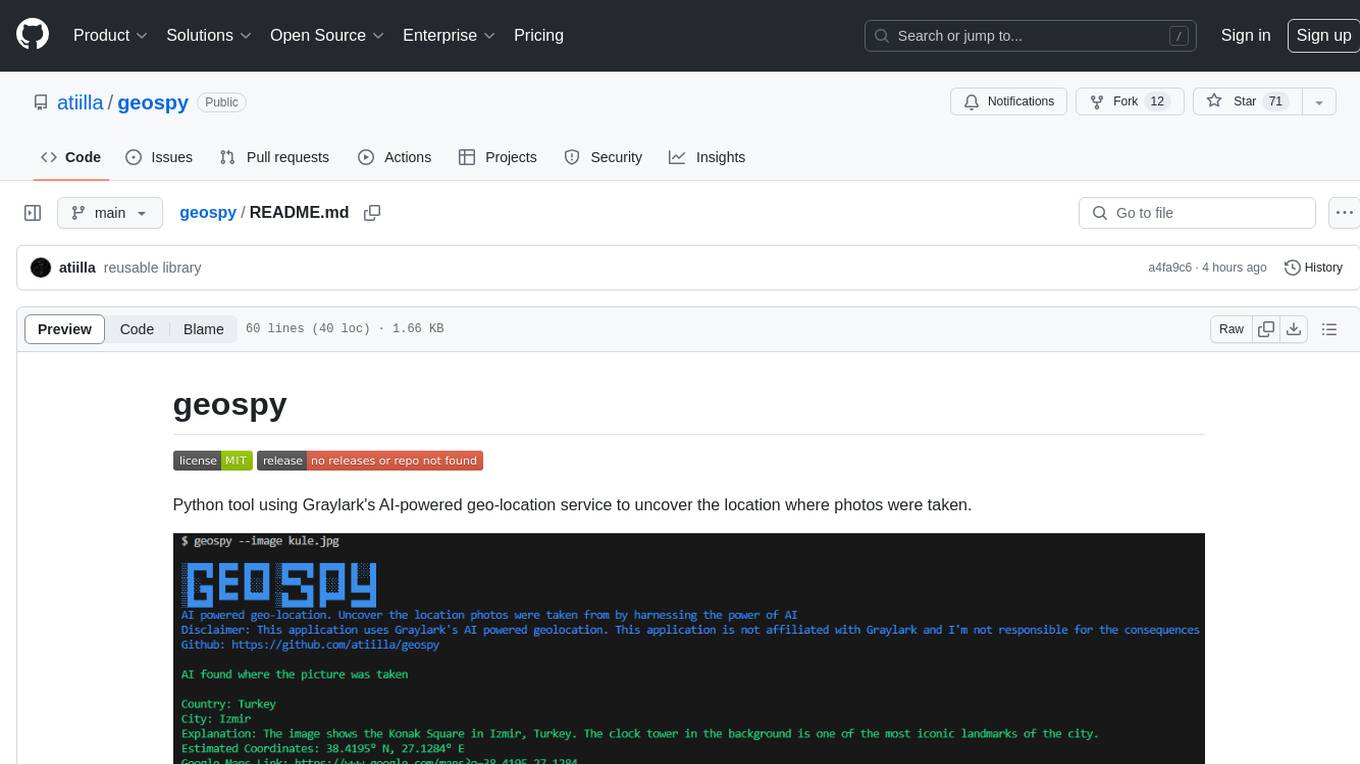

geospy

Geospy is a Python tool that utilizes Graylark's AI-powered geolocation service to determine the location where photos were taken. It allows users to analyze images and retrieve information such as country, city, explanation, coordinates, and Google Maps links. The tool provides a seamless way to integrate geolocation services into various projects and applications.

Awesome-Colorful-LLM

Awesome-Colorful-LLM is a meticulously assembled anthology of vibrant multimodal research focusing on advancements propelled by large language models (LLMs) in domains such as Vision, Audio, Agent, Robotics, and Fundamental Sciences like Mathematics. The repository contains curated collections of works, datasets, benchmarks, projects, and tools related to LLMs and multimodal learning. It serves as a comprehensive resource for researchers and practitioners interested in exploring the intersection of language models and various modalities for tasks like image understanding, video pretraining, 3D modeling, document understanding, audio analysis, agent learning, robotic applications, and mathematical research.

For similar jobs

promptflow

**Prompt flow** is a suite of development tools designed to streamline the end-to-end development cycle of LLM-based AI applications, from ideation, prototyping, testing, evaluation to production deployment and monitoring. It makes prompt engineering much easier and enables you to build LLM apps with production quality.

deepeval

DeepEval is a simple-to-use, open-source LLM evaluation framework specialized for unit testing LLM outputs. It incorporates various metrics such as G-Eval, hallucination, answer relevancy, RAGAS, etc., and runs locally on your machine for evaluation. It provides a wide range of ready-to-use evaluation metrics, allows for creating custom metrics, integrates with any CI/CD environment, and enables benchmarking LLMs on popular benchmarks. DeepEval is designed for evaluating RAG and fine-tuning applications, helping users optimize hyperparameters, prevent prompt drifting, and transition from OpenAI to hosting their own Llama2 with confidence.

MegaDetector

MegaDetector is an AI model that identifies animals, people, and vehicles in camera trap images (which also makes it useful for eliminating blank images). This model is trained on several million images from a variety of ecosystems. MegaDetector is just one of many tools that aims to make conservation biologists more efficient with AI. If you want to learn about other ways to use AI to accelerate camera trap workflows, check out our of the field, affectionately titled "Everything I know about machine learning and camera traps".

leapfrogai

LeapfrogAI is a self-hosted AI platform designed to be deployed in air-gapped resource-constrained environments. It brings sophisticated AI solutions to these environments by hosting all the necessary components of an AI stack, including vector databases, model backends, API, and UI. LeapfrogAI's API closely matches that of OpenAI, allowing tools built for OpenAI/ChatGPT to function seamlessly with a LeapfrogAI backend. It provides several backends for various use cases, including llama-cpp-python, whisper, text-embeddings, and vllm. LeapfrogAI leverages Chainguard's apko to harden base python images, ensuring the latest supported Python versions are used by the other components of the stack. The LeapfrogAI SDK provides a standard set of protobuffs and python utilities for implementing backends and gRPC. LeapfrogAI offers UI options for common use-cases like chat, summarization, and transcription. It can be deployed and run locally via UDS and Kubernetes, built out using Zarf packages. LeapfrogAI is supported by a community of users and contributors, including Defense Unicorns, Beast Code, Chainguard, Exovera, Hypergiant, Pulze, SOSi, United States Navy, United States Air Force, and United States Space Force.

llava-docker

This Docker image for LLaVA (Large Language and Vision Assistant) provides a convenient way to run LLaVA locally or on RunPod. LLaVA is a powerful AI tool that combines natural language processing and computer vision capabilities. With this Docker image, you can easily access LLaVA's functionalities for various tasks, including image captioning, visual question answering, text summarization, and more. The image comes pre-installed with LLaVA v1.2.0, Torch 2.1.2, xformers 0.0.23.post1, and other necessary dependencies. You can customize the model used by setting the MODEL environment variable. The image also includes a Jupyter Lab environment for interactive development and exploration. Overall, this Docker image offers a comprehensive and user-friendly platform for leveraging LLaVA's capabilities.

carrot

The 'carrot' repository on GitHub provides a list of free and user-friendly ChatGPT mirror sites for easy access. The repository includes sponsored sites offering various GPT models and services. Users can find and share sites, report errors, and access stable and recommended sites for ChatGPT usage. The repository also includes a detailed list of ChatGPT sites, their features, and accessibility options, making it a valuable resource for ChatGPT users seeking free and unlimited GPT services.

TrustLLM

TrustLLM is a comprehensive study of trustworthiness in LLMs, including principles for different dimensions of trustworthiness, established benchmark, evaluation, and analysis of trustworthiness for mainstream LLMs, and discussion of open challenges and future directions. Specifically, we first propose a set of principles for trustworthy LLMs that span eight different dimensions. Based on these principles, we further establish a benchmark across six dimensions including truthfulness, safety, fairness, robustness, privacy, and machine ethics. We then present a study evaluating 16 mainstream LLMs in TrustLLM, consisting of over 30 datasets. The document explains how to use the trustllm python package to help you assess the performance of your LLM in trustworthiness more quickly. For more details about TrustLLM, please refer to project website.

AI-YinMei

AI-YinMei is an AI virtual anchor Vtuber development tool (N card version). It supports fastgpt knowledge base chat dialogue, a complete set of solutions for LLM large language models: [fastgpt] + [one-api] + [Xinference], supports docking bilibili live broadcast barrage reply and entering live broadcast welcome speech, supports Microsoft edge-tts speech synthesis, supports Bert-VITS2 speech synthesis, supports GPT-SoVITS speech synthesis, supports expression control Vtuber Studio, supports painting stable-diffusion-webui output OBS live broadcast room, supports painting picture pornography public-NSFW-y-distinguish, supports search and image search service duckduckgo (requires magic Internet access), supports image search service Baidu image search (no magic Internet access), supports AI reply chat box [html plug-in], supports AI singing Auto-Convert-Music, supports playlist [html plug-in], supports dancing function, supports expression video playback, supports head touching action, supports gift smashing action, supports singing automatic start dancing function, chat and singing automatic cycle swing action, supports multi scene switching, background music switching, day and night automatic switching scene, supports open singing and painting, let AI automatically judge the content.