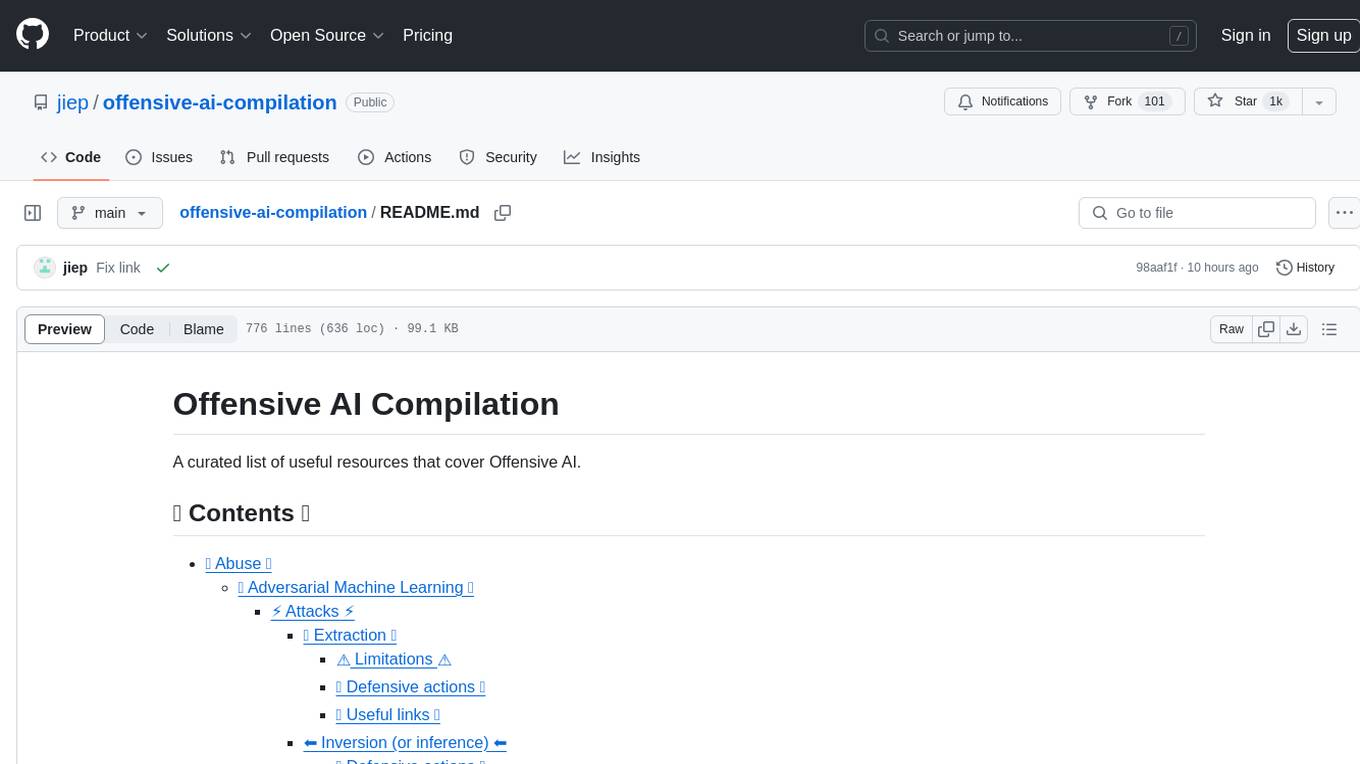

Awesome-Colorful-LLM

Recent advancements propelled by large language models (LLMs), encompassing an array of domains including Vision, Audio, Agent, Robotics, Fundamental Sciences such as Mathematics, and Ominous.

Stars: 106

Awesome-Colorful-LLM is a meticulously assembled anthology of vibrant multimodal research focusing on advancements propelled by large language models (LLMs) in domains such as Vision, Audio, Agent, Robotics, and Fundamental Sciences like Mathematics. The repository contains curated collections of works, datasets, benchmarks, projects, and tools related to LLMs and multimodal learning. It serves as a comprehensive resource for researchers and practitioners interested in exploring the intersection of language models and various modalities for tasks like image understanding, video pretraining, 3D modeling, document understanding, audio analysis, agent learning, robotic applications, and mathematical research.

README:

Welcome to our meticulously assembled anthology of vibrant multimodal research, encompassing an array of domains including Vision, Audio, Agent, Robotics, Fundamental Sciences such as Mathematics, and Ominous (anything else you want). Our collection primarily focuses on the advancements propelled by large language models (LLMs), complemented by an assortment of related collections.

Collection of works about Image + LLMs, Diffusion, see Image for details

- Image Understanding
  - Reading List
  - Datasets & Benchmarks
- Image Generation
  - Reading List
  - Open-source Projects

Related Collections (Understanding)

- VLM_survey, This is the repository of "Vision Language Models for Vision Tasks: a Survey", a systematic survey of VLM studies in various visual recognition tasks including image classification, object detection, semantic segmentation, etc.
- Awesome-Multimodal-Large-Language-Models, A curated list of Multimodal Large Language Models (MLLMs), including datasets, multimodal instruction tuning, multimodal in-context learning, multimodal chain-of-thought, llm-aided visual reasoning, foundation models, and others. This list will be updated in real time.
- LLM-in-Vision, Recent LLM (Large Language Models)-based CV and multi-modal works
- Awesome-Transformer-Attention, This repo contains a comprehensive paper list of Vision Transformer & Attention, including papers, codes, and related websites
- Multimodal-AND-Large-Language-Models, Paper list about multimodal and large language models, only used to record papers I read in the daily arxiv for personal needs.
- Efficient_Foundation_Model_Survey, This repo contains the paper list and figures for A Survey of Resource-efficient LLM and Multimodal Foundation Models.
- CVinW_Readings, A collection of papers on the topic of Computer Vision in the Wild (CVinW)
- Awesome-Vision-and-Language, A curated list of awesome vision and language resources
- Awesome-Multimodal-Research, This repo is reorganized from Awesome-Multimodal-ML
- Awesome-Multimodal-ML, Reading list for research topics in multimodal machine learning
- Awesome-Referring-Image-Segmentation, A collection of referring image (video, 3D) segmentation papers and datasets.
- Awesome-Prompting-on-Vision-Language-Model, This repo lists relevant papers summarized in our survey paper: A Systematic Survey of Prompt Engineering on Vision-Language Foundation Models.
- Mamba-in-CV, A paper list of some recent Mamba-based CV works. If you find some ignored papers, please open issues or pull requests.
- Efficient-Multimodal-LLMs-Survey, Efficient Multimodal Large Language Models: A Survey

Related Collections (Evaluation)

- Awesome-MLLM-Hallucination, A curated list of resources dedicated to hallucination of multimodal large language models (MLLM)
- awesome-Large-MultiModal-Hallucination

Related Collections (Generation)

- Awesome-VQVAE, A collection of resources and papers on Vector Quantized Variational Autoencoder (VQ-VAE) and its application
- Awesome-Diffusion-Models, This repository contains a collection of resources and papers on Diffusion Models
- Awesome-Controllable-Diffusion, Collection of papers and resources on Controllable Generation using Diffusion Models, including ControlNet, DreamBooth, and others.
- Awesome-LLMs-meet-Multimodal-Generation, A curated list of papers on LLMs-based multimodal generation (image, video, 3D and audio).

Tutorials

- [CVPR2024 Tutorial] Recent Advances in Vision Foundation Models
  - Large Multimodal Models: Towards Building General-Purpose Multimodal Assistant, Chunyuan Li
  - Methods, Analysis & Insights from Multimodal LLM Pre-training, Zhe Gan
  - LMMs with Fine-Grained Grounding Capabilities, Haotian Zhang
  - A Close Look at Vision in Large Multimodal Models, Jianwei Yang
  - Multimodal Agents, Linjie Li
  - Recent Advances in Image Generative Foundation Models, Zhengyuan Yang
  - Video and 3D Generation, Kevin Lin
- [CVPR2023 Tutorial] Recent Advances in Vision Foundation Models
  - Opening Remarks & Visual and Vision-Language Pre-training, Zhe Gan
  - From Representation to Interface: The Evolution of Foundation for Vision Understanding, Jianwei Yang
  - Alignments in Text-to-Image Generation, Zhengyuan Yang
  - Large Multimodal Models, Chunyuan Li
  - Multimodal Agents: Chaining Multimodal Experts with LLMs, Linjie Li
- [CVPR2022 Tutorial] Recent Advances in Vision-and-Language Pre-training
- [CVPR2021 Tutorial] From VQA to VLN: Recent Advances in Vision-and-Language Research
- [CVPR2020 Tutorial] Recent Advances in Vision-and-Language Research

Collection of works about Video-Language Pretraining, Video + LLMs, see Video for details

- Video Understanding
  - Reading List
  - Pretraining Tasks
  - Datasets
    - Pretraining Corpora
    - Video Instructions
  - Benchmarks
    - Common Downstream Tasks
    - Advanced Downstream Tasks
    - Task-Specific Benchmarks
    - Multifaceted Benchmarks
  - Metrics
  - Projects & Tools
- Video Generation
  - Reading List
  - Metrics
  - Projects

Related Collections (datasets)

Related Collections (understanding)

- Awesome-LLMs-for-Video-Understanding, Latest Papers, Codes and Datasets on Vid-LLMs.
- Awesome Long-Term Video Understanding, Awesome papers & datasets specifically focused on long-term videos.

Related Collections (generation)

- i2vgen-xl, VGen is an open-source video synthesis codebase developed by the Tongyi Lab of Alibaba Group, featuring state-of-the-art video generative models.

Collection of works about 3D+LLM, see 3D for details

- Reading List

Related Collections

- awesome-3D-gaussian-splatting, A curated list of papers and open-source resources focused on 3D Gaussian Splatting, intended to keep pace with the anticipated surge of research in the coming months
- Awesome-LLM-3D, a curated list of Multi-modal Large Language Model in 3D world Resources
- Awesome-3D-Vision-and-Language, A curated list of research papers in 3D visual grounding
- awesome-scene-understanding, A list of awesome scene understanding papers.

Related Collections

- Awesome Document Understanding, A curated list of resources for Document Understanding (DU) topic related to Intelligent Document Processing (IDP), which is related to Robotic Process Automation (RPA) from unstructured data, especially from Visually Rich Documents (VRDs).

Collection of existing popular vision encoders, see Vision Encoder for details

- Image Encoder

- Video Encoder

- Audio Encoder

Collection of works about audio+LLM, see Audio for details

- Reading List

Related Collections

- awesome-large-audio-models, Collection of resources on the applications of Large Language Models (LLMs) in Audio AI.
- speech-trident, Awesome speech/audio LLMs, representation learning, and codec models
- Audio-AI-Timeline, Here we will keep track of the latest AI models for waveform based audio generation, starting in 2023!

Collection of works about agent learning, see Agent for details

- Reading List

- Datasets & Benchmarks

- Projects

- Applications

Related Collections

- LLM-Agent-Paper-Digest, For benefiting the research community and promoting LLM-powered agent direction, we organize papers related to LLM-powered agents that were recently published at top conferences
- LLMAgentPapers, Must-read Papers on Large Language Model Agents.
- LLM-Agent-Paper-List, In this repository, we provide a systematic and comprehensive survey on LLM-based agents, and list some must-read papers.
- XLang Paper Reading, Paper collection on building and evaluating language model agents via executable language grounding
- Awesome-LLMOps, An awesome & curated list of best LLMOps tools for developers
- Awesome LLM-Powered Agent, Awesome things about LLM-powered agents. Papers / Repos / Blogs / ...
- Awesome LMs with Tools, Language models (LMs) are powerful yet mostly for text-generation tasks. Tools have substantially enhanced their performance for tasks that require complex skills.
- ToolLearningPapers, Must-read papers on tool learning with foundation models
- Awesome-ALM, This repo collects research papers about leveraging the capabilities of language models, which can be a good reference for building upper-layer applications
- LLM-powered Autonomous Agents, Lil'Log, Overview: planning, memory, tool use
- World Model Papers, Paper collections of the continuous effort starting from World Models

Collection of works about robotics+LLM, see Robotic for details

- Reading List

Related Collections (Robotics)

- Awesome-Robotics-Foundation-Models, This is the partner repository for the survey paper "Foundation Models in Robotics: Applications, Challenges, and the Future". The authors hope this repository can act as a quick reference for roboticists who wish to read the relevant papers and implement the associated methods.
- Awesome-LLM-Robotics, This repo contains a curated list of papers using Large Language/Multi-Modal Models for Robotics/RL
- Simulately, a website where we gather useful information on physics simulators for cutting-edge robot learning research. It is still under active development, so stay tuned!
- Awesome-Temporal-Action-Detection-Temporal-Action-Proposal-Generation, Temporal Action Detection & Weakly Supervised & Semi Supervised Temporal Action Detection & Temporal Action Proposal Generation & Open-Vocabulary Temporal Action Detection.
- Awesome-TimeSeries-SpatioTemporal-LM-LLM, A professionally curated list of Large (Language) Models and Foundation Models (LLM, LM, FM) for Temporal Data (Time Series, Spatio-temporal, and Event Data) with awesome resources (paper, code, data, etc.), which aims to comprehensively and systematically summarize the recent advances to the best of our knowledge.
- PromptCraft-Robotics, The PromptCraft-Robotics repository serves as a community for people to test and share interesting prompting examples for large language models (LLMs) within the robotics domain
- Awesome-Robotics, A curated list of awesome links and software libraries that are useful for robots

Related Collections (embodied)

- Embodied_AI_Paper_List, Awesome Paper list for Embodied AI and its related projects and applications
- Awesome-Embodied-AI, A curated list of awesome papers on Embodied AI and related research/industry-driven resources
- awesome-embodied-vision, Reading list for research topics in embodied vision

Related Collections (autonomous driving)

- Awesome-LLM4AD, A curated list of awesome LLM for Autonomous Driving resources (continually updated)

Collection of works about Mathematics + LLMs, see AI4Math for details

- Reading List

Related Collections

- Awesome-Scientific-Language-Models, A curated list of pre-trained language models in scientific domains (e.g., mathematics, physics, chemistry, biology, medicine, materials science, and geoscience), covering different model sizes (from <100M to 70B parameters) and modalities (e.g., language, vision, molecule, protein, graph, and table)

Collection of works about LLM + ominous modality, see Ominous for details

- Reading List

Feel free to create a pull request or drop me an email: [email protected]


Alternative AI tools for Awesome-Colorful-LLM

Similar Open Source Tools

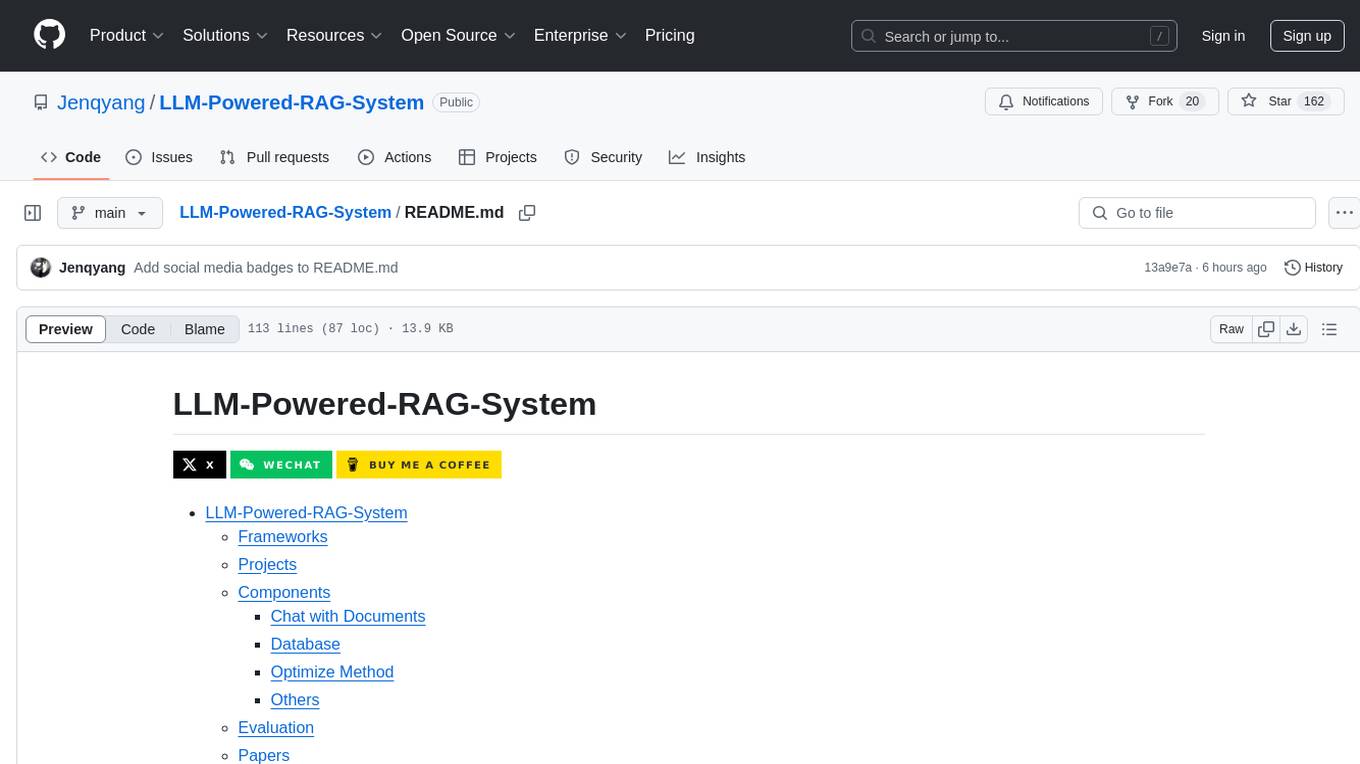

LLM-Powered-RAG-System

LLM-Powered-RAG-System is a comprehensive repository containing frameworks, projects, components, evaluation tools, papers, blogs, and other resources related to Retrieval-Augmented Generation (RAG) systems powered by Large Language Models (LLMs). The repository includes various frameworks for building applications with LLMs, data frameworks, modular graph-based RAG systems, dense retrieval models, and efficient retrieval augmentation and generation frameworks. It also features projects such as personal productivity assistants, knowledge-based platforms, chatbots, question and answer systems, and code assistants. Additionally, the repository provides components for interacting with documents, databases, and optimization methods using ML and LLM technologies. Evaluation frameworks, papers, blogs, and other resources related to RAG systems are also included.

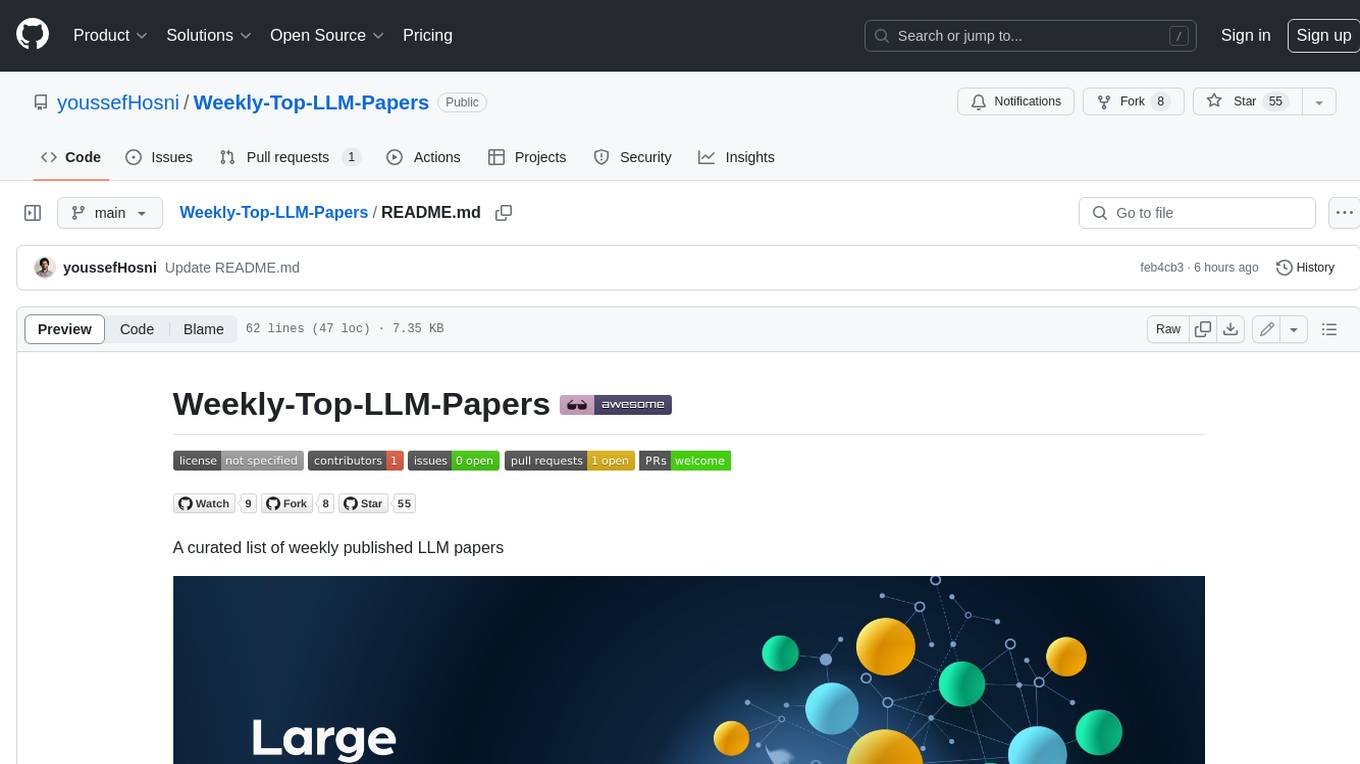

Weekly-Top-LLM-Papers

This repository provides a curated list of weekly published Large Language Model (LLM) papers. It includes top important LLM papers for each week, organized by month and year. The papers are categorized into different time periods, making it easy to find the most recent and relevant research in the field of LLM.

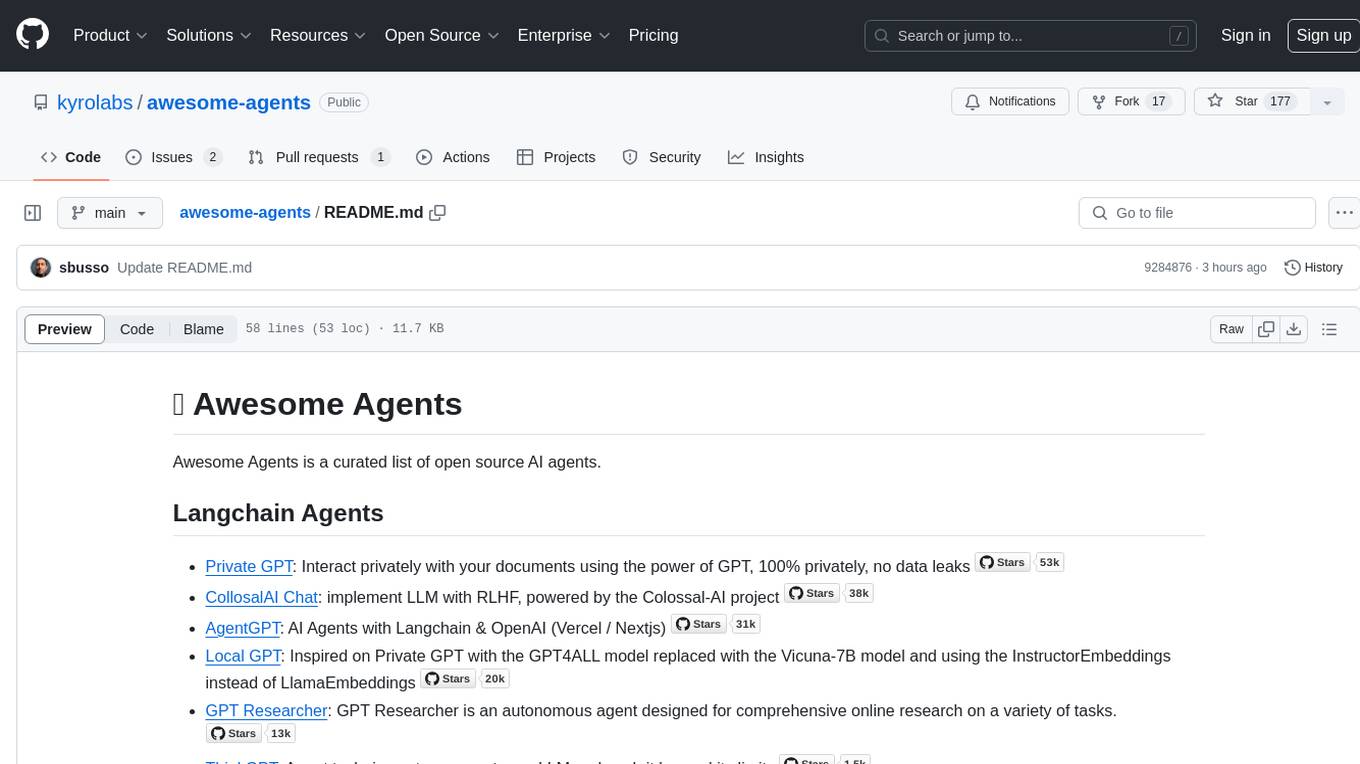

Awesome-AI-Agents

Awesome-AI-Agents is a curated list of projects, frameworks, benchmarks, platforms, and related resources focused on autonomous AI agents powered by Large Language Models (LLMs). The repository showcases a wide range of applications, multi-agent task solver projects, agent society simulations, and advanced components for building and customizing AI agents. It also includes frameworks for orchestrating role-playing, evaluating LLM-as-Agent performance, and connecting LLMs with real-world applications through platforms and APIs. Additionally, the repository features surveys, paper lists, and blogs related to LLM-based autonomous agents, making it a valuable resource for researchers, developers, and enthusiasts in the field of AI.

databend

Databend is an open-source cloud data warehouse that serves as a cost-effective alternative to Snowflake. With its focus on fast query execution and data ingestion, it's designed for complex analysis of the world's largest datasets.

intel-extension-for-transformers

Intel® Extension for Transformers is an innovative toolkit designed to accelerate GenAI/LLM everywhere with the optimal performance of Transformer-based models on various Intel platforms, including Intel Gaudi2, Intel CPU, and Intel GPU. The toolkit provides the following key features and examples:

- Seamless user experience of model compressions on Transformer-based models by extending [Hugging Face transformers](https://github.com/huggingface/transformers) APIs and leveraging [Intel® Neural Compressor](https://github.com/intel/neural-compressor)
- Advanced software optimizations and unique compression-aware runtime (released with NeurIPS 2022's paper [Fast Distilbert on CPUs](https://arxiv.org/abs/2211.07715) and [QuaLA-MiniLM: a Quantized Length Adaptive MiniLM](https://arxiv.org/abs/2210.17114), and NeurIPS 2021's paper [Prune Once for All: Sparse Pre-Trained Language Models](https://arxiv.org/abs/2111.05754))
- Optimized Transformer-based model packages such as [Stable Diffusion](examples/huggingface/pytorch/text-to-image/deployment/stable_diffusion), [GPT-J-6B](examples/huggingface/pytorch/text-generation/deployment), [GPT-NEOX](examples/huggingface/pytorch/language-modeling/quantization#2-validated-model-list), [BLOOM-176B](examples/huggingface/pytorch/language-modeling/inference#BLOOM-176B), [T5](examples/huggingface/pytorch/summarization/quantization#2-validated-model-list), [Flan-T5](examples/huggingface/pytorch/summarization/quantization#2-validated-model-list), and end-to-end workflows such as [SetFit-based text classification](docs/tutorials/pytorch/text-classification/SetFit_model_compression_AGNews.ipynb) and [document level sentiment analysis (DLSA)](workflows/dlsa)
- [NeuralChat](intel_extension_for_transformers/neural_chat), a customizable chatbot framework to create your own chatbot within minutes by leveraging a rich set of [plugins](https://github.com/intel/intel-extension-for-transformers/blob/main/intel_extension_for_transformers/neural_chat/docs/advanced_features.md) such as [Knowledge Retrieval](./intel_extension_for_transformers/neural_chat/pipeline/plugins/retrieval/README.md), [Speech Interaction](./intel_extension_for_transformers/neural_chat/pipeline/plugins/audio/README.md), [Query Caching](./intel_extension_for_transformers/neural_chat/pipeline/plugins/caching/README.md), and [Security Guardrail](./intel_extension_for_transformers/neural_chat/pipeline/plugins/security/README.md). This framework supports Intel Gaudi2/CPU/GPU.
- [Inference](https://github.com/intel/neural-speed/tree/main) of Large Language Model (LLM) in pure C/C++ with weight-only quantization kernels for Intel CPU and Intel GPU (TBD), supporting [GPT-NEOX](https://github.com/intel/neural-speed/tree/main/neural_speed/models/gptneox), [LLAMA](https://github.com/intel/neural-speed/tree/main/neural_speed/models/llama), [MPT](https://github.com/intel/neural-speed/tree/main/neural_speed/models/mpt), [FALCON](https://github.com/intel/neural-speed/tree/main/neural_speed/models/falcon), [BLOOM-7B](https://github.com/intel/neural-speed/tree/main/neural_speed/models/bloom), [OPT](https://github.com/intel/neural-speed/tree/main/neural_speed/models/opt), [ChatGLM2-6B](https://github.com/intel/neural-speed/tree/main/neural_speed/models/chatglm), [GPT-J-6B](https://github.com/intel/neural-speed/tree/main/neural_speed/models/gptj), and [Dolly-v2-3B](https://github.com/intel/neural-speed/tree/main/neural_speed/models/gptneox). Supports the AMX, VNNI, AVX512F, and AVX2 instruction sets. We've boosted the performance of Intel CPUs, with a particular focus on the 4th generation Intel Xeon Scalable processor, codenamed [Sapphire Rapids](https://www.intel.com/content/www/us/en/products/docs/processors/xeon-accelerated/4th-gen-xeon-scalable-processors.html).

ST-LLM

ST-LLM is a temporal-sensitive video large language model that incorporates joint spatial-temporal modeling, dynamic masking strategy, and global-local input module for effective video understanding. It has achieved state-of-the-art results on various video benchmarks. The repository provides code and weights for the model, along with demo scripts for easy usage. Users can train, validate, and use the model for tasks like video description, action identification, and reasoning.

awesome-agents

Awesome Agents is a curated list of open source AI agents designed for various tasks such as private interactions with documents, chat implementations, autonomous research, human-behavior simulation, code generation, HR queries, domain-specific research, and more. The agents leverage Large Language Models (LLMs) and other generative AI technologies to provide solutions for complex tasks and projects. The repository includes a diverse range of agents for different use cases, from conversational chatbots to AI coding engines, and from autonomous HR assistants to vision task solvers.

awesome-langchain

LangChain is an amazing framework to get LLM projects done in no time, and the ecosystem is growing fast. Here is an attempt to keep track of the initiatives around LangChain. Subscribe to the newsletter to stay informed about Awesome LangChain. We send a couple of emails per month about the articles, videos, projects, and tools that grabbed our attention. Contributions welcome. Add links through pull requests or create an issue to start a discussion. Please read the contribution guidelines before contributing.

awesome-saas

The Alchemyst Platform Cookbook is a comprehensive guide for developers and builders to bring their AI ideas to life. It provides cutting-edge AI tools and templates to empower users in creating innovative projects. The platform offers API documentation, quick start guides, official and community templates for various projects. Users can contribute to the platform by forking the repository, adding the topic 'alchemyst-awesome-saas', making their repository public, and submitting a pull request. Troubleshooting guidelines are provided for contributors. The platform is actively maintained by the Alchemyst AI Team.

RAG-Driven-Generative-AI

RAG-Driven Generative AI provides a roadmap for building effective LLM, computer vision, and generative AI systems that balance performance and costs. This book offers a detailed exploration of RAG and how to design, manage, and control multimodal AI pipelines. By connecting outputs to traceable source documents, RAG improves output accuracy and contextual relevance, offering a dynamic approach to managing large volumes of information. This AI book also shows you how to build a RAG framework, providing practical knowledge on vector stores, chunking, indexing, and ranking. You'll discover techniques to optimize your project's performance and better understand your data, including using adaptive RAG and human feedback to refine retrieval accuracy, balancing RAG with fine-tuning, implementing dynamic RAG to enhance real-time decision-making, and visualizing complex data with knowledge graphs. You'll be exposed to a hands-on blend of frameworks like LlamaIndex and Deep Lake, vector databases such as Pinecone and Chroma, and models from Hugging Face and OpenAI. By the end of this book, you will have acquired the skills to implement intelligent solutions, keeping you competitive in fields ranging from production to customer service across any project.

Awesome-LLM

Awesome-LLM is a curated list of resources related to large language models, focusing on papers, projects, frameworks, tools, tutorials, courses, opinions, and other useful resources in the field. It covers trending LLM projects, milestone papers, other papers, open LLM projects, LLM training frameworks, LLM evaluation frameworks, tools for deploying LLM, prompting libraries & tools, tutorials, courses, books, and opinions. The repository provides a comprehensive overview of the latest advancements and resources in the field of large language models.

Open-Sora-Plan

Open-Sora-Plan is a project that aims to create a simple and scalable repo to reproduce Sora (OpenAI, but we prefer to call it "ClosedAI"). The project is still in its early stages, but the team is working hard to improve it and make it more accessible to the open-source community. The project is currently focused on training an unconditional model on a landscape dataset, but the team plans to expand the scope of the project in the future to include text2video experiments, training on video2text datasets, and controlling the model with more conditions.

UMOE-Scaling-Unified-Multimodal-LLMs

Uni-MoE is a MoE-based unified multimodal model that can handle diverse modalities including audio, speech, image, text, and video. The project focuses on scaling Unified Multimodal LLMs with a Mixture of Experts framework. It offers enhanced functionality for training across multiple nodes and GPUs, as well as parallel processing at both the expert and modality levels. The model architecture involves three training stages: building connectors for multimodal understanding, developing modality-specific experts, and incorporating multiple trained experts into LLMs using the LoRA technique on mixed multimodal data. The tool provides instructions for installation, weights organization, inference, training, and evaluation on various datasets.

stable-pi-core

Stable-Pi-Core is a next-generation decentralized ecosystem integrating blockchain, quantum AI, IoT, edge computing, and AR/VR for secure, scalable, and personalized solutions in payments, governance, and real-world applications. It features a Dual-Value System, cross-chain interoperability, AI-powered security, and a self-healing network. The platform empowers seamless payments, decentralized governance via DAO, and real-world applications across industries, bridging digital and physical worlds with innovative features like robotic process automation, machine learning personalization, and a dynamic cross-chain bridge framework.

For similar tasks

HPT

Hyper-Pretrained Transformers (HPT) is a novel multimodal LLM framework from HyperGAI, trained for vision-language models capable of understanding both textual and visual inputs. The repository contains the open-source implementation of inference code to reproduce the evaluation results of HPT Air on different benchmarks. HPT has achieved competitive results with state-of-the-art models on various multimodal LLM benchmarks. It offers models like HPT 1.5 Air and HPT 1.0 Air, providing efficient solutions for vision-and-language tasks.

learnopencv

LearnOpenCV is a repository containing code for Computer Vision, Deep learning, and AI research articles shared on the blog LearnOpenCV.com. It serves as a resource for individuals looking to enhance their expertise in AI through various courses offered by OpenCV. The repository includes a wide range of topics such as image inpainting, instance segmentation, robotics, deep learning models, and more, providing practical implementations and code examples for readers to explore and learn from.

spark-free-api

Spark AI Free service provides high-speed streaming output, multi-turn dialogue support, AI drawing support, long document interpretation, and image parsing. It offers zero-configuration deployment, multi-token support, and automatic session trace cleaning. It is fully compatible with the ChatGPT interface. The repository includes multiple free-api projects for various AI services. Users can access the API for tasks such as chat completions, AI drawing, document interpretation, image analysis, and ssoSessionId live checking. The project also provides guidelines for deployment using Docker, Docker-compose, Render, Vercel, and native deployment methods. It recommends using custom clients for faster and simpler access to the free-api series projects.

mlx-vlm

MLX-VLM is a package designed for running Vision LLMs on Mac systems using MLX. It provides a convenient way to install and utilize the package for processing large language models related to vision tasks. The tool simplifies the process of running LLMs on Mac computers, offering a seamless experience for users interested in leveraging MLX for vision-related projects.

clarifai-python-grpc

This is the official Clarifai gRPC Python client for interacting with their recognition API. Clarifai offers a platform for data scientists, developers, researchers, and enterprises to utilize artificial intelligence for image, video, and text analysis through computer vision and natural language processing. The client allows users to authenticate, predict concepts in images, and access various functionalities provided by the Clarifai API. It follows a versioning scheme that aligns with the backend API updates and includes specific instructions for installation and troubleshooting. Users can explore the Clarifai demo, sign up for an account, and refer to the documentation for detailed information.

horde-worker-reGen

This repository provides the latest implementation for the AI Horde Worker, allowing users to utilize their graphics card(s) to generate, post-process, or analyze images for others. It offers a platform where users can create images and earn 'kudos' in return, granting priority for their own image generations. The repository includes important details for setup, recommendations for system configurations, instructions for installation on Windows and Linux, basic usage guidelines, and information on updating the AI Horde Worker. Users can also run the worker with multiple GPUs and receive notifications for updates through Discord. Additionally, the repository contains models that are licensed under the CreativeML OpenRAIL License.

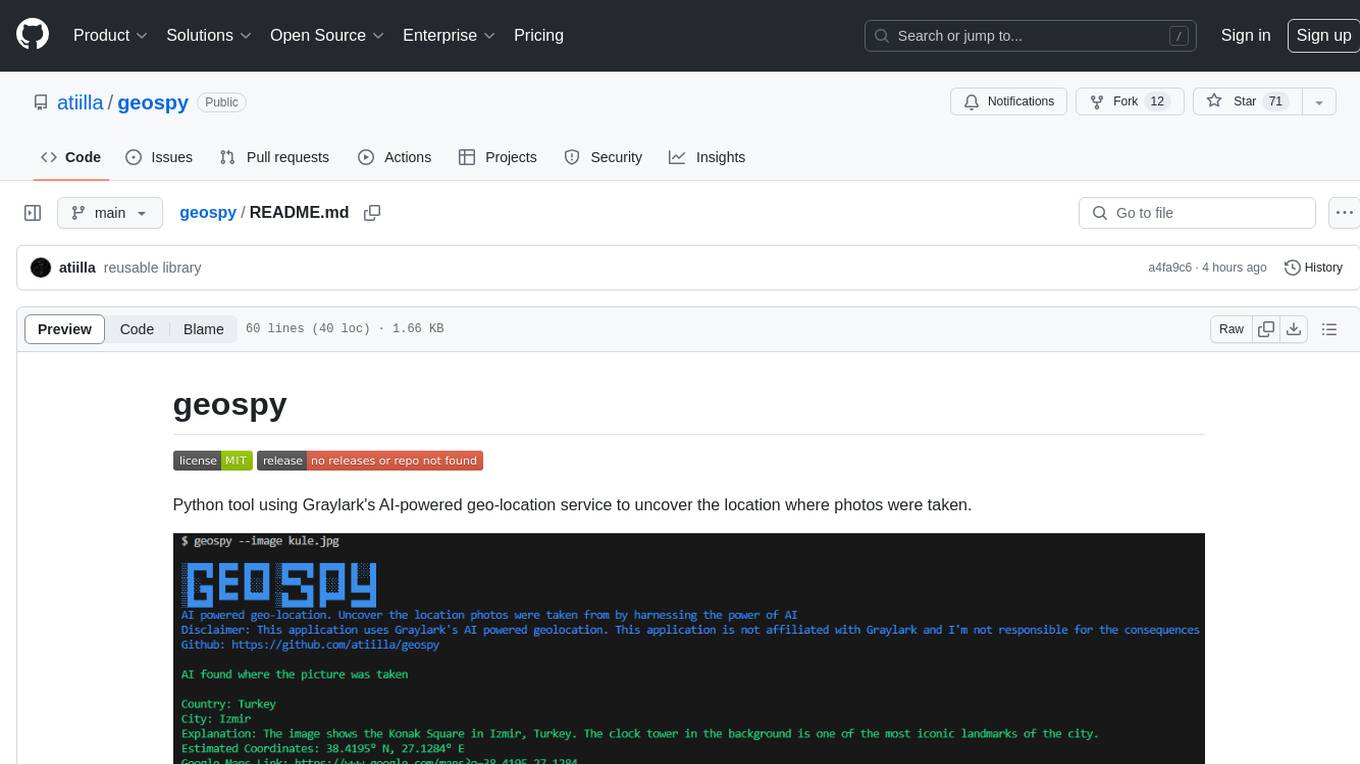

geospy

Geospy is a Python tool that utilizes Graylark's AI-powered geolocation service to determine the location where photos were taken. It allows users to analyze images and retrieve information such as country, city, explanation, coordinates, and Google Maps links. The tool provides a seamless way to integrate geolocation services into various projects and applications.

For similar jobs

weave

Weave is a toolkit for developing Generative AI applications, built by Weights & Biases. With Weave, you can log and debug language model inputs, outputs, and traces; build rigorous, apples-to-apples evaluations for language model use cases; and organize all the information generated across the LLM workflow, from experimentation to evaluations to production. Weave aims to bring rigor, best-practices, and composability to the inherently experimental process of developing Generative AI software, without introducing cognitive overhead.

LLMStack

LLMStack is a no-code platform for building generative AI agents, workflows, and chatbots. It allows users to connect their own data, internal tools, and GPT-powered models without any coding experience. LLMStack can be deployed to the cloud or on-premise and can be accessed via HTTP API or triggered from Slack or Discord.

VisionCraft

The VisionCraft API is a free API for using over 100 different AI models, from images to sound.

kaito

Kaito is an operator that automates the AI/ML inference model deployment in a Kubernetes cluster. It manages large model files using container images, avoids tuning deployment parameters to fit GPU hardware by providing preset configurations, auto-provisions GPU nodes based on model requirements, and hosts large model images in the public Microsoft Container Registry (MCR) if the license allows. Using Kaito, the workflow of onboarding large AI inference models in Kubernetes is largely simplified.

PyRIT

PyRIT is an open access automation framework designed to empower security professionals and ML engineers to red team foundation models and their applications. It automates AI Red Teaming tasks to allow operators to focus on more complicated and time-consuming tasks and can also identify security harms such as misuse (e.g., malware generation, jailbreaking), and privacy harms (e.g., identity theft). The goal is to allow researchers to have a baseline of how well their model and entire inference pipeline is doing against different harm categories and to be able to compare that baseline to future iterations of their model. This allows them to have empirical data on how well their model is doing today, and detect any degradation of performance based on future improvements.

tabby

Tabby is a self-hosted AI coding assistant, offering an open-source and on-premises alternative to GitHub Copilot. It boasts several key features:

- Self-contained, with no need for a DBMS or cloud service.
- OpenAPI interface, easy to integrate with existing infrastructure (e.g., Cloud IDE).
- Supports consumer-grade GPUs.

spear

SPEAR (Simulator for Photorealistic Embodied AI Research) is a powerful tool for training embodied agents. It features 300 unique virtual indoor environments with 2,566 unique rooms and 17,234 unique objects that can be manipulated individually. Each environment is designed by a professional artist and features detailed geometry, photorealistic materials, and a unique floor plan and object layout. SPEAR is implemented as Unreal Engine assets and provides an OpenAI Gym interface for interacting with the environments via Python.

Magick

Magick is a groundbreaking visual AIDE (Artificial Intelligence Development Environment) for no-code data pipelines and multimodal agents. Magick can connect to other services and comes with nodes and templates well-suited for intelligent agents, chatbots, complex reasoning systems and realistic characters.