awesome-langchain

😎 Awesome list of tools and projects with the awesome LangChain framework

Stars: 7965

LangChain is an amazing framework to get LLM projects done in no time, and the ecosystem is growing fast. Here is an attempt to keep track of the initiatives around LangChain.

Subscribe to the newsletter to stay informed about Awesome LangChain. We send a couple of emails per month about the articles, videos, projects, and tools that grabbed our attention.

Contributions are welcome. Add links through pull requests or create an issue to start a discussion. Please read the contribution guidelines before contributing.

README:

Curated list of tools and projects using LangChain.


- LangChain: the original 🐍

- LangChain.js: the JS brother ✨

- Concepts: LangChain concepts doc

- Twitter account: follow to get fresh updates

- YouTube Channel

- LangChain Blog: The official LangChain blog

- LangServe: LangServe helps developers deploy LangChain runnables and chains as a REST API.

List of non-official ports of LangChain to other languages.

- Langchain Go: Golang Langchain

- LangchainRb: Ruby Langchain

- LangChain4j: LangChain for Java

- LangChainDart: Build powerful LLM-based Dart/Flutter applications.

- Flowise: Drag & drop UI to build your customized LLM flow using LangchainJS

- Langflow: LangFlow is a UI for LangChain

- GPTCache: A library for creating a semantic cache for LLM queries

- Gorilla: An API store for LLMs

- LlamaHub: a library of data loaders for LLMs made by the community

- Auto-evaluator: a lightweight evaluation tool for question-answering using Langchain

- Langchain visualizer: visualization and debugging tool for LangChain workflows

- LLM Strategy: implementing the Strategy Pattern using LLMs

- datasetGPT: A command-line interface to generate textual and conversational datasets with LLMs.

- Auto Evaluator: Langchain auto evaluator

- Jina: LangChain apps in production with Jina

- Dify: One API for plugins and datasets, one interface for prompt engineering and visual operation, all for creating powerful AI applications.

- Chainlit: Build Python LLM apps in minutes ⚡️

- Zep: A long-term memory store for LLM / Chatbot applications

- Langchain Decorators: a layer on top of LangChain that provides syntactic sugar 🍭 for writing custom LangChain prompts and chains

- AilingBot: Quickly integrate applications built on Langchain into IM platforms such as Slack, WeChat Work, Feishu, and DingTalk.

- Llama2 Embedding Server: Llama2 Embeddings FastAPI Service using LangChain

- ChatAbstractions: LangChain chat model abstractions for dynamic failover, load balancing, chaos engineering, and more!

- MindSQL: A Python package for text-to-SQL with self-hosting functionality and RESTful APIs, compatible with proprietary as well as open-source LLMs.

- Llama-github: Llama-github is a Python library built with the LangChain framework that helps you retrieve the most relevant code snippets, issues, and repository information from GitHub

- CopilotKit: A framework for building custom AI Copilots 🤖: in-app AI chatbots, in-app AI Agents, & AI-powered Textareas

- LangFair: LangFair is a Python library for conducting use-case-specific LLM bias and fairness assessments

- Private GPT: Interact privately with your documents using the power of GPT, 100% privately, no data leaks

- ColossalAI Chat: implements an LLM with RLHF, powered by the Colossal-AI project

- CrewAI: Cutting-edge framework for orchestrating role-playing, autonomous AI agents.

- AgentGPT: AI Agents with Langchain & OpenAI (Vercel / Nextjs)

- Local GPT: Inspired by Private GPT, with the GPT4ALL model replaced by the Vicuna-7B model and using InstructorEmbeddings instead of LlamaEmbeddings

- GPT Researcher: GPT Researcher is an autonomous agent designed for comprehensive online research on a variety of tasks.

- ThinkGPT: Agent techniques to augment your LLM and push it beyond its limits

- Camel-AutoGPT: role-playing approach for LLMs and auto-agents like BabyAGI & AutoGPT

- RasaGPT: RasaGPT is the first headless LLM chatbot platform built on top of Rasa and Langchain.

- SkyAGI: Emerging human-behavior simulation capability in LLM agents

- PyCodeAGI: A small AGI experiment to generate a Python app given what app the user wants to build

- BabyAGI UI: Makes it easier to run and develop with BabyAGI in a web app, like ChatGPT

- SuperAgent: Deploy LLM Agents to production

- Voyager: An Open-Ended Embodied Agent with Large Language Models

- ix: Autonomous GPT-4 agent platform

- DuetGPT: A conversational semi-autonomous developer assistant, AI pair programming without the copypasta.

- Multi-Modal LangChain agents in Production: Deploy LangChain Agents and connect them to Telegram

- DemoGPT: DemoGPT enables you to create quick demos just by using prompts. It applies the ToT (Tree of Thoughts) approach to the LangChain documentation tree.

- SuperAGI: A dev-first open source autonomous AI agent framework

- Autonomous HR Chatbot: An autonomous agent that can answer HR-related queries using the tools it has on hand

- BlockAGI: BlockAGI conducts iterative, domain-specific research, and outputs detailed narrative reports to showcase its findings

- waggledance.ai: An opinionated, concurrent system of AI Agents. It implements Plan-Validate-Solve with data and tools for general goal-solving.

- AI: Vercel template to build AI-powered applications with React, Svelte, and Vue, with first-class support for LangChain

- create-t3-turbo-ai: t3-based, LangChain-friendly boilerplate for building type-safe, full-stack, LLM-powered web apps with Next.js and Prisma

- LangChain.js LLM Template: LangChain LLM template that allows you to train your own custom AI LLM model.

- Streamlit Template: template for deploying a LangChain app on Streamlit

- Codespaces Template: a Codespaces template for getting up and running with LangChain in seconds!

- Gradio Template: template for deploying a LangChain app on Gradio

- AI Getting Started: A JavaScript AI getting-started stack for weekend projects, including image/text models, vector stores, auth, and deployment configs

- Embedchain: Framework to easily create LLM-powered bots over any dataset.

- Openllmetry: Open-source observability for your LLM application, based on OpenTelemetry

- Quiver: Dump your brain into your GenerativeAI Vault

- DocsGPT: GPT-powered chat for documentation search & assistance.

- Chaindesk: The no-code platform for semantic search and document retrieval

- Knowledge GPT: Accurate answers and instant citations for your documents.

- Knowledge: Knowledge is a tool for saving, searching, accessing, and exploring all of your favorite websites, documents and files.

- Anything LLM: A full-stack application that turns any documents into an intelligent chatbot with a sleek UI and an easier way to manage your workspaces.

- DocNavigator: AI-powered chatbot builder that is designed to improve the user experience on product documentation/support websites

- ChatFiles: Upload your document and then chat with it. Powered by GPT / Embedding / TS / NextJS.

- DataChad: A Streamlit app that lets you chat with any data source. Supports both OpenAI and local mode with GPT4All.

- Second Brain AI Agent: A Streamlit app to dialog with your second-brain notes using OpenAI and ChromaDB locally.

- examor: A website application that allows you to take exams based on your knowledge notes, helping you truly remember what you have learned and written.

- Repochat: Chatbot assistant enabling GitHub repository interaction using LLMs with Retrieval Augmented Generation

- SolidGPT: Chat about everything in your code repository, ask repository-level code questions, and discuss your requirements

- Minima: Chat with local documents, connect your local environment to ChatGPT or Claude

- DB GPT: Interact with your data and environment using a local GPT, no data leaks, 100% private, 100% secure

- AudioGPT: Understanding and Generating Speech, Music, Sound, and Talking Head

- Paper QA: LLM Chain for answering questions from documents with citations

- Chat Langchain: locally hosted chatbot specifically focused on question answering over the LangChain documentation

- Langchain Chat: another Next.js frontend for LangChain Chat.

- Book GPT: drop a book, start asking questions.

- Chat LangchainJS: NextJS version of Chat Langchain

- Doc Search: converse with a book, built with GPT-3

- Fact Checker: fact-checking LLM outputs with LangChain

- MM ReAct: Multi Modal ReAct Design

- QABot: Query local or remote files or databases with natural language queries powered by LangChain and OpenAI

- GPT Automator: Your voice-controlled Mac assistant.

- Teams LangchainJS: Demonstration of LangChainJS with Teams / Bot Framework bots

- ChatGPT: ChatGPT & LangChain example for Node.js & Docker

- FlowGPT: Generate diagrams with AI

- langchain-text-summarizer: A sample Streamlit application summarizing text using LangChain

- Langchain Chat Websocket: LangChain LLM chat with streaming responses over websockets

- langchain_yt_tools: Langchain tools to search/extract/transcribe text transcripts of YouTube videos

- SmartPilot: A Python program leveraging OpenAI's language models to generate, analyze, and select the best answer to a given question

- Howdol: a helpful chatbot that can answer questions

- MrsStax: QA Slack Bot

- ThoughtSource⚡: A framework for the science of machine thinking

- ChatGPT Langchain: ChatGPT clone using LangChain on Hugging Face

- Chat Math Techniques: LangChain chat with math techniques on Hugging Face

- Notion QA: Notion Question-Answering Bot

- QNimGPT: Play Nim against an IBM Quantum Computer simulator or OpenAI GPT-3.5

- ChatPDF: ChatGPT + Enterprise data with Azure OpenAI

- Chat with Scanned Documents: A demo chatting with documents scanned with Dynamic Web TWAIN.

- snowChat ❄️: Chat with your Snowflake database

- Airtable-QnA: 🌟 a question-answering tool for your Airtable content

- WingmanAI: tool for interacting with real-time transcription of both system and microphone audio

- TutorGPT: Dynamic few-shot metaprompting for the task of tutoring.

- Cheshire Cat: Custom AGI boT with ready-to-use chat integration and a plugin development platform.

- Got Chaat Bot: Repo for creating GoT Chatbots (e.g., talk with Tyrion Lannister)

- Dialoqbase: web application that allows you to create custom chatbots with your own knowledge base

- CSV-AI 🧠: CSV-AI is the ultimate app powered by LangChain that allows you to unlock hidden insights in your CSV files.

- MindGeniusAI: Auto-generate mind maps with ChatGPT

- Robby-Chatbot: AI chatbot 🤖 for chatting with CSV, PDF, TXT files 📄 and YTB videos 🎥 | using Langchain🦜 | OpenAI | Streamlit ⚡.

- AI Chatbot: A full-featured, hackable Next.js AI chatbot built by Vercel Labs

- Instrukt: A fully-fledged AI environment in the terminal. Build, test and instruct agents.

- OpenChat: LLMs custom-chatbots console ⚡.

- Twitter Agent: Scrape tweets, summarize them and chat with them in an interactive terminal.

- GPT Migrate: Easily migrate your codebase from one framework or language to another.

- Code Interpreter API: Open source implementation of the ChatGPT Code Interpreter

- Recommender: Create captivating email marketing campaigns tailored to your business needs

- Autonomous HR Chatbot: An autonomous HR agent that can answer user queries using tools

- Lobe Chat: An open-source, extensible (Function Calling), high-performance chatbot framework

- Funcchain: write prompts, pythonic

- PersonalityChatbot: LangChain chatbot with a personality, using Langchain🦜 | LangSmith | MongoDB.

- XAgent: An Autonomous LLM Agent for Complex Task Solving

- MemFree: Open-source hybrid AI search engine. Instantly get accurate answers from the Internet, bookmarks, notes, and docs. Supports one-click deployment.

- Langchain Tutorials: overview and tutorial of the LangChain Library

- LangChain Chinese Getting Started Guide: Chinese LangChain Tutorial for Beginners

- Flan5 LLM: PDF QA using LangChain for chain of thought and multi-task instructions, Flan5 on Hugging Face

- LangChain Handbook: Pinecone / James Briggs' LangChain handbook

- Query the YouTube video transcripts: Query the YouTube video transcripts, returning timestamps as sources to legitimize the answers

- llm-lobbyist: Large Language Models as Corporate Lobbyists

- Langchain Semantic Search: Search and index your own Google Drive files using GPT3, LangChain, and Python

- GPT Political Compass

- llm-grovers-search-party: Leveraging Qiskit, OpenAI and LangChain to demonstrate Grover's algorithm

- TextWorld ReAct Agent

- LangChain <> Wolfram Alpha

- BYO Knowledge Graph

- Large Language Models Course

- LangChain Series by Sam Witteveen

- LangChain Tutorials Playlist

- LangChain James Briggs' Playlist

- Greg Kamradt Playlist

- Transformers Agents: Provides a natural language API on top of transformers

- LlamaIndex: provides a central interface to connect your LLMs with external data.

- Botpress: The building blocks for building chatbots

- Haystack: NLP framework to interact with your data using Transformer models and LLMs

- Semantic Kernel: Microsoft C# SDK to integrate cutting-edge LLM technology quickly and easily into your apps

- Promptify: Prompt Engineering | Use GPT or other prompt-based models to get structured output.

- PromptSource: Toolkit for creating, sharing and using natural language prompts.

- Agent-LLM: An Artificial Intelligence Automation Platform.

- LLM Agents: Build agents which are controlled by LLMs

- MiniChain: A tiny library for coding with large language models.

- Griptape: Python framework for AI workflows and pipelines with chain of thought reasoning, external tools, and memory.

- llm-chain: a powerful Rust crate for building chains in LLMs, allowing you to summarise text and complete complex tasks.

- OpenLM: a drop-in OpenAI-compatible library that can call LLMs from any other hosted inference API. Also available in TypeScript.

- Dust: Design and Deploy Large Language Model Apps

- e2b: Open-source platform for building & deploying virtual developers’ agents

- SuperAGI: A dev-first open source autonomous AI agent framework.

- SmartGPT: A program that provides LLMs with the ability to complete complex tasks using plugins.

- TermGPT: Giving LLMs like GPT-4 the ability to plan and execute terminal commands

- ReLLM: Regular Expressions for Language Model Completions.

- OpenDAN: open source Personal AI OS, which consolidates various AI modules in one place for your personal use.

- OpenLLM: An open platform for operating large language models (LLMs) in production. Fine-tune, serve, deploy, and monitor any LLMs with ease using OpenLLM.

- FlagAI: FlagAI (Fast LArge-scale General AI models) is a fast, easy-to-use and extensible toolkit for large-scale models.

- AI.JSX: The AI Application Framework for JavaScript

- Outlines: Generative Model Programming (Python)

- AI Utils: TypeScript-first library for building AI apps, chatbots, and agents.

- MetaGPT: The Multi-Agent Meta Programming Framework: given a one-line requirement, return a PRD, design, tasks, repo, and CI

- Hyv: Probably the easiest way to use any AI Model in Node.js and create complex interactions with ease.

- Autochain: Build lightweight, extensible, and testable LLM Agents with AutoChain.

- TypeChat: TypeChat is a library that makes it easy to build natural language interfaces using types.

- Marvin: ✨ Build AI interfaces that spark joy

- LMQL: A programming language for large language models.

- LLMFlow: Simple, Explicit and Transparent LLM Apps

- Ax: A comprehensive AI framework for TypeScript

- TextAI: 💡 All-in-one open-source embeddings database for semantic search, LLM orchestration and language model workflows.

- AgentFlow: Complex LLM Workflows from Simple JSON.

- Outlines: Fast and reliable neural text generation.

- SimpleAIChat: Python package for easily interfacing with chat apps, with robust features and minimal code complexity.

- LLFn: A light-weight framework for creating applications using LLMs

- LLMStack: No code platform for building LLM-powered applications with custom data.

- Lagent: A lightweight framework for building LLM-based agents

- Embedbase: The native Software 3.0 stack for building AI-powered applications.

- Rivet: An IDE for creating complex AI agents and prompt chains, and embedding them in your application.

- Promptfoo: Test your prompts. Evaluate and compare LLM outputs, catch regressions, and improve prompt quality.

- RestGPT: An LLM-based autonomous agent controlling real-world applications via RESTful APIs

- LangStream: Framework for building and running event-driven LLM applications using no-code and Python (including LangChain-based) agents.

- Magentic: Seamlessly integrate LLMs as Python functions

- Autogen: Enable Next-Gen Large Language Model Applications.

- AgentVerse: Provides a flexible framework that simplifies the process of building custom multi-agent environments for LLMs

- Flappy: Production-Ready LLM Agent SDK for Every Developer

- MemGPT: Teaching LLMs memory management for unbounded context

- Agentlabs: Universal AI Agent Frontend. Build your backend, we handle the rest.

- axflow: The TypeScript framework for AI development

- bondai: AI-powered assistant with a lightweight, versatile API for seamless integration into your own applications

- Chidori: A reactive runtime for building durable AI agents

- Langroid: an intuitive, lightweight, extensible and principled Python framework to easily build LLM-powered applications.

- Langstream: Build robust LLM applications with true composability 🔗

- Agency: 🕵️‍♂️ Library designed for developers eager to explore the potential of Large Language Models (LLMs) and other generative AI through a clean, effective, and Go-idiomatic approach

- TaskWeaver: A code-first agent framework for seamlessly planning and executing data analytics tasks.

- MicroAgent: Agents Capable of Self-Editing Their Prompts / Python Code

- Casibase: Open-source AI LangChain-like RAG (Retrieval-Augmented Generation) knowledge database with web UI and Enterprise SSO⚡️, supports OpenAI, Azure, LLaMA, Google Gemini, HuggingFace, Claude, Grok, etc.

- Fructose: Fructose is a Python package to create a dependable, strongly-typed interface around an LLM call.

- R2R: A framework for rapid development and deployment of production-ready RAG systems

- uAgents: A fast and lightweight framework for creating decentralized agents with ease.

- Codel: ✨ Fully autonomous AI Agent that can perform complicated tasks and projects using terminal, browser, and editor.

- LLocalSearch: LLocalSearch is a completely locally running search aggregator using LLM Agents. The user can ask a question and the system will use a chain of LLMs to find the answer. The user can see the progress of the agents and the final answer. No OpenAI or Google API keys are needed.

- Plandex: An AI coding engine for complex tasks

- Maestro: A framework for Claude Opus to intelligently orchestrate subagents.

- GPT Pilot: GPT Pilot is the core technology for the Pythagora VS Code extension that aims to provide the first real AI developer companion.

- SWE Agent: SWE-agent takes a GitHub issue and tries to automatically fix it, using GPT-4, or your LM of choice.

- Gateway: A Blazing Fast AI Gateway. Route to 100+ LLMs with 1 fast & friendly API.

- AgentRun: The easiest and fastest way to run AI-generated Python code safely

- LLama Cpp Agent: The llama-cpp-agent framework is a tool designed for easy interaction with Large Language Models

- FinRobot: An Open-Source AI Agent Platform for Financial Applications using LLMs

- Groq Ruby: Groq Cloud runs LLM models fast and cheap. This is a convenience client library for Ruby.

- AgentScope: Start building LLM-empowered multi-agent applications in an easier way.

- Memary: Long-term Memory for Autonomous Agents.

- Llmware: Providing an enterprise-grade LLM-based development framework, tools, and fine-tuned models.

- Pipecat: Open Source framework for voice and multimodal conversational AI.

- Phidata: Build AI Assistants with memory, knowledge and tools.

- Rigging: Lightweight LLM Interaction Framework (Rust)

- Vision agent: Vision Agent is a library that helps you utilize agent frameworks to generate code to solve your vision task.

- llama-agents: llama-agents is an async-first framework for building, iterating, and productionizing multi-agent systems, including multi-agent communication, distributed tool execution, human-in-the-loop, and more

- Claude Engineer: Claude Engineer is an interactive command-line interface (CLI) that leverages the power of Anthropic's Claude-3.5-Sonnet model to assist with software development tasks.

- AI Scientist: The AI Scientist: Towards Fully Automated Open-Ended Scientific Discovery

- DSPy: The framework for programming—not prompting—foundation models

- Open LLMs: A list of open LLMs available for commercial use

- Awesome LLM: a curated list of Large Language Model resources.

- LLaMA Cult and More: Keeping Track of Affordable LLMs, 🦙 Cult and More

- Awesome Language Agents: List of language agents based on the paper "Cognitive Architectures for Language Agents"


Alternative AI tools for awesome-langchain

Similar Open Source Tools


LLM-Powered-RAG-System

LLM-Powered-RAG-System is a comprehensive repository containing frameworks, projects, components, evaluation tools, papers, blogs, and other resources related to Retrieval-Augmented Generation (RAG) systems powered by Large Language Models (LLMs). The repository includes various frameworks for building applications with LLMs, data frameworks, modular graph-based RAG systems, dense retrieval models, and efficient retrieval augmentation and generation frameworks. It also features projects such as personal productivity assistants, knowledge-based platforms, chatbots, question and answer systems, and code assistants. Additionally, the repository provides components for interacting with documents, databases, and optimization methods using ML and LLM technologies. Evaluation frameworks, papers, blogs, and other resources related to RAG systems are also included.

Awesome-Colorful-LLM

Awesome-Colorful-LLM is a meticulously assembled anthology of vibrant multimodal research focusing on advancements propelled by large language models (LLMs) in domains such as Vision, Audio, Agent, Robotics, and Fundamental Sciences like Mathematics. The repository contains curated collections of works, datasets, benchmarks, projects, and tools related to LLMs and multimodal learning. It serves as a comprehensive resource for researchers and practitioners interested in exploring the intersection of language models and various modalities for tasks like image understanding, video pretraining, 3D modeling, document understanding, audio analysis, agent learning, robotic applications, and mathematical research.

TEN-Agent

TEN Agent is an open-source multimodal agent powered by the world’s first real-time multimodal framework, TEN Framework. It offers high-performance real-time multimodal interactions, multi-language and multi-platform support, edge-cloud integration, flexibility beyond model limitations, and real-time agent state management. Users can easily build complex AI applications through drag-and-drop programming, integrating audio-visual tools, databases, RAG, and more.

yolo-ios-app

The Ultralytics YOLO iOS App GitHub repository offers an advanced object detection tool leveraging YOLOv8 models for iOS devices. Users can transform their devices into intelligent detection tools to explore the world in a new and exciting way. The app provides real-time detection capabilities with multiple AI models to choose from, ranging from 'nano' to 'x-large'. Contributors are welcome to participate in this open-source project, and licensing options include AGPL-3.0 for open-source use and an Enterprise License for commercial integration. Users can easily set up the app by following the provided steps, including cloning the repository, adding YOLOv8 models, and running the app on their iOS devices.

brainwave

Brainwave is a modern animated SaaS generative AI landing page tool built using React JS, Vite JS, Javascript, and Tailwind CSS. It provides a visually appealing interface with features like AI chatbot, generative AI, modern animations, and onboarding section. Users can easily deploy the tool on Netlify and contribute to the project. The tool utilizes framer-motion, react-just-parallax, react-router-dom, scroll-lock, typewriter-effect, and other dependencies for enhanced functionality and user experience.

ollama4j

Ollama4j is a Java library that serves as a wrapper or binding for the Ollama server. It facilitates communication with the Ollama server and provides models for deployment. The tool requires Java 11 or higher and can be installed locally or via Docker. Users can integrate Ollama4j into Maven projects by adding the specified dependency. The tool offers API specifications and supports various development tasks such as building, running unit tests, and integration tests. Releases are automated through GitHub Actions CI workflow. Areas of improvement include adhering to Java naming conventions, updating deprecated code, implementing logging, using lombok, and enhancing request body creation. Contributions to the project are encouraged, whether reporting bugs, suggesting enhancements, or contributing code.

SuperAGI

SuperAGI is an open-source framework designed to build, manage, and run autonomous AI agents. It enables developers to create production-ready and scalable agents, extend agent capabilities with toolkits, and interact with agents through a graphical user interface. The framework allows users to connect to multiple Vector DBs, optimize token usage, store agent memory, utilize custom fine-tuned models, and automate tasks with predefined steps. SuperAGI also provides a marketplace for toolkits that enable agents to interact with external systems and third-party plugins.

awesome-saas

The Alchemyst Platform Cookbook is a comprehensive guide for developers and builders to bring their AI ideas to life. It provides cutting-edge AI tools and templates to empower users in creating innovative projects. The platform offers API documentation, quick start guides, official and community templates for various projects. Users can contribute to the platform by forking the repository, adding the topic 'alchemyst-awesome-saas', making their repository public, and submitting a pull request. Troubleshooting guidelines are provided for contributors. The platform is actively maintained by the Alchemyst AI Team.

genius-ai

Genius is a modern Next.js 14 SaaS AI platform that provides a comprehensive folder structure for app development. It offers features like authentication, dashboard management, landing pages, API integration, and more. The platform is built using React JS, Next JS, TypeScript, Tailwind CSS, and integrates with services like Netlify, Prisma, MySQL, and Stripe. Genius enables users to create AI-powered applications with functionalities such as conversation generation, image processing, code generation, and more. It also includes features like Clerk authentication, OpenAI integration, Replicate API usage, Aiven database connectivity, and Stripe API/webhook setup. The platform is fully configurable and provides a seamless development experience for building AI-driven applications.

duolingo-clone

Lingo is an interactive platform for language learning that provides a modern UI/UX experience. It offers features like courses, quests, and a shop for users to engage with. The tech stack includes React JS, Next JS, Typescript, Tailwind CSS, Vercel, and Postgresql. Users can contribute to the project by submitting changes via pull requests. The platform utilizes resources from CodeWithAntonio, Kenney Assets, Freesound, Elevenlabs AI, and Flagpack. Key dependencies include @clerk/nextjs, @neondatabase/serverless, @radix-ui/react-avatar, and more. Users can follow the project creator on GitHub and Twitter, as well as subscribe to their YouTube channel for updates. To learn more about Next.js, users can refer to the Next.js documentation and interactive tutorial.

assistant-ui

assistant-ui is a set of React components for AI chat, providing wide model provider support out of the box and the ability to integrate custom APIs. It includes integrations with Langchain, Vercel AI SDK, TailwindCSS, shadcn-ui, react-markdown, react-syntax-highlighter, React Hook Form, and more. The tool allows users to quickly create AI chat applications with pre-configured templates and easy setup steps.

stars

Ultralytics Analytics & Star Tracking is a tool to track GitHub stars, contributors, and PyPI downloads for Ultralytics projects. It provides real-time analytics updated daily, including total stars, forks, issues, pull requests, contributors, and public repositories. Users can access the analytics API for GitHub and PyPI downloads. The tool also offers historical star tracking for GitHub repositories, allowing users to analyze star growth over time. With a REST API and Python usage examples, users can easily retrieve and display analytics data. The repository structure includes scripts for unified analytics fetching, historical star tracking, and shared utilities. Contributions to the open-source community are encouraged, and the tool is available under AGPL-3.0 License for collaboration and an Enterprise License for commercial applications.

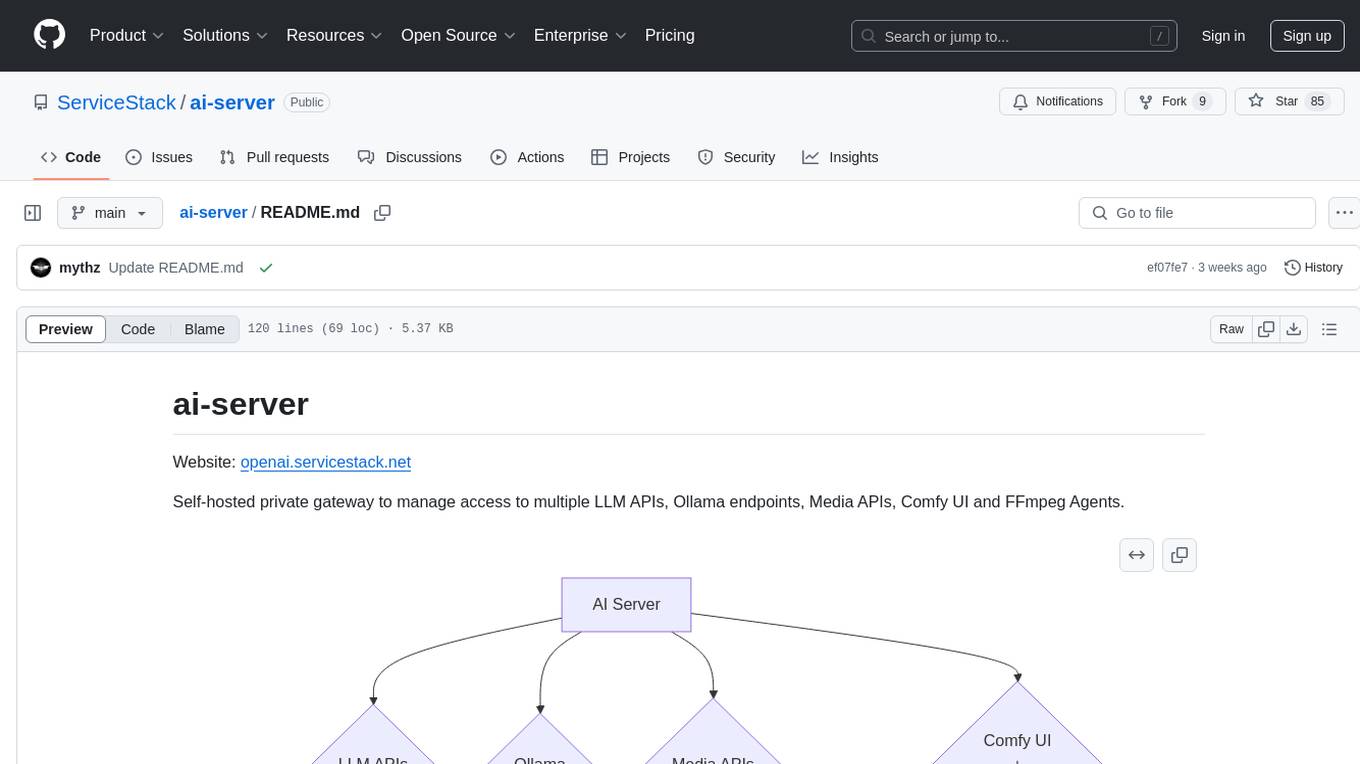

ai-server

AI Server is a self-hosted private gateway that orchestrates AI requests through a single integration, allowing control over AI providers like LLM, Diffusion, and image transformation. It dynamically delegates requests across various providers, including LLM APIs, Media APIs, and Comfy UI with FFmpeg Agents. The tool also offers built-in UIs for tasks like chat, text-to-image, image-to-text, image upscaling, speech-to-text, and text-to-speech. Additionally, it provides admin UIs for managing AI and media providers, API key access, and monitoring background jobs and AI requests.

FinVeda

FinVeda is a dynamic financial literacy app that aims to solve the problem of low financial literacy rates in India by providing a platform for financial education. It features an AI chatbot, finance blogs, market trends analysis, SIP calculator, and finance quiz to help users learn finance with finesse. The app is free and open-source, licensed under the GNU General Public License v3.0. FinVeda was developed at IIT Jammu's Udyamitsav'24 Hackathon, where it won first place in the GenAI track and third place overall.

nacos

Nacos is an easy-to-use platform for dynamic service discovery, configuration, and service management that makes it easier to build cloud-native applications and microservice platforms. It provides service discovery, health checks, dynamic configuration management, dynamic DNS service, and service metadata management.
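
Service discovery with health checks can be pictured as a registry where instances register, send periodic heartbeats, and drop out of discovery results once their last heartbeat is older than a TTL. The toy below illustrates that concept only; `ServiceRegistry` and its methods are hypothetical, and Nacos's actual client API, protocol, and timeouts are different.

```python
import time
from dataclasses import dataclass
from typing import Callable


@dataclass
class Instance:
    ip: str
    port: int
    last_beat: float   # timestamp of the most recent heartbeat


class ServiceRegistry:
    """Toy in-memory registry: instances register, heartbeat, and expire after a TTL."""

    def __init__(self, ttl_seconds: float = 15.0, clock: Callable[[], float] = time.monotonic):
        self.ttl = ttl_seconds
        self.clock = clock   # injectable so tests can control time
        self.services: dict[str, dict[tuple[str, int], Instance]] = {}

    def register(self, service: str, ip: str, port: int) -> None:
        instances = self.services.setdefault(service, {})
        instances[(ip, port)] = Instance(ip, port, self.clock())

    def heartbeat(self, service: str, ip: str, port: int) -> None:
        # Refresh the timestamp; raises KeyError if the instance was never registered.
        self.services[service][(ip, port)].last_beat = self.clock()

    def discover(self, service: str) -> list[tuple[str, int]]:
        # Health check: return only instances whose last heartbeat is within the TTL.
        now = self.clock()
        return [
            key for key, inst in self.services.get(service, {}).items()
            if now - inst.last_beat <= self.ttl
        ]
```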

For similar tasks

LLMStack

LLMStack is a no-code platform for building generative AI agents, workflows, and chatbots. It allows users to connect their own data, internal tools, and GPT-powered models without any coding experience. LLMStack can be deployed to the cloud or on-premise and can be accessed via HTTP API or triggered from Slack or Discord.

ai-guide

This guide is dedicated to Large Language Models (LLMs) that you can run on your home computer. It assumes your PC is a lower-end, non-gaming setup.
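
A quick way to judge whether a model fits a lower-end PC is to estimate how much memory its weights need: parameter count times bits per weight, divided by 8 to get bytes. The sketch below is a rule of thumb only; it ignores the KV cache and context length, and the 1.2 overhead multiplier is an assumed fudge factor, not a measured value.

```python
def weight_memory_gb(params_billion: float, bits_per_weight: float, overhead: float = 1.2) -> float:
    """Rough RAM/VRAM (decimal GB) needed to hold a model's weights.

    params_billion: parameter count in billions (e.g. 7 for a 7B model)
    bits_per_weight: 16 for fp16, 8 or 4 for common quantized formats
    overhead: assumed multiplier for runtime buffers (a guess, not a measurement)
    """
    bytes_total = params_billion * 1e9 * bits_per_weight / 8
    return bytes_total * overhead / 1e9


if __name__ == "__main__":
    for bits in (16, 8, 4):
        print(f"7B model @ {bits}-bit: ~{weight_memory_gb(7, bits):.1f} GB")
```

This arithmetic is why 4-bit quantized models are the usual choice on machines with 8 GB of RAM or less: a 7B model drops from about 14 GB of raw fp16 weights to about 3.5 GB.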

onnxruntime-genai

ONNX Runtime Generative AI is a library that provides the generative AI loop for ONNX models, including inference with ONNX Runtime, logits processing, search and sampling, and KV cache management. Users can call a high-level `generate()` method or run each iteration of the model in a loop. It supports greedy and beam search as well as top-p and top-k sampling to generate token sequences, has built-in logits processing such as repetition penalties, and makes custom scoring easy.

jupyter-ai

Jupyter AI connects generative AI with Jupyter notebooks. It provides a user-friendly and powerful way to explore generative AI models in notebooks and improve your productivity in JupyterLab and the Jupyter Notebook. Specifically, Jupyter AI offers:

- An `%%ai` magic that turns the Jupyter notebook into a reproducible generative AI playground. This works anywhere the IPython kernel runs (JupyterLab, Jupyter Notebook, Google Colab, Kaggle, VS Code, etc.).
- A native chat UI in JupyterLab that enables you to work with generative AI as a conversational assistant.
- Support for a wide range of generative model providers, including AI21, Anthropic, AWS, Cohere, Gemini, Hugging Face, NVIDIA, and OpenAI.
- Local model support through GPT4All, enabling use of generative AI models on consumer-grade machines with ease and privacy.

khoj

Khoj is an open-source, personal AI assistant that extends your capabilities by creating always-available AI agents. You can share your notes and documents to extend your digital brain, and your AI agents have access to the internet, allowing you to incorporate real-time information. Khoj is accessible on Desktop, Emacs, Obsidian, Web, and WhatsApp, and you can share PDF, Markdown, org-mode, and Notion files as well as GitHub repositories. You get fast, accurate semantic search on top of your docs, and your agents can create deeply personal images and understand your speech. Khoj is self-hostable and always will be.
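
Search over your own documents ranks each document by the similarity between its vector and the query's vector. To keep the sketch below dependency-free, bag-of-words term counts stand in for the dense embeddings a semantic search system would use, so it only matches shared words; swap `embed` for a real embedding model to get semantic matching. The function names are hypothetical and not part of Khoj.

```python
import math
import re
from collections import Counter


def embed(text: str) -> Counter:
    """Stand-in for an embedding model: a bag-of-words term-count vector."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    dot = sum(a[t] * b[t] for t in a.keys() & b.keys())
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0   # empty vectors have no similarity


def search(query: str, docs: dict[str, str], top_n: int = 3) -> list[tuple[str, float]]:
    """Rank documents by cosine similarity to the query, best first."""
    q = embed(query)
    scored = [(name, cosine(q, embed(body))) for name, body in docs.items()]
    return sorted(scored, key=lambda item: item[1], reverse=True)[:top_n]
```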

langchain_dart

LangChain.dart is a Dart port of the popular LangChain Python framework created by Harrison Chase. LangChain provides a set of ready-to-use components for working with language models and a standard interface for chaining them together to formulate more advanced use cases (e.g. chatbots, Q&A with RAG, agents, summarization, extraction, etc.). The components can be grouped into a few core modules:

- **Model I/O:** LangChain offers a unified API for interacting with various LLM providers (e.g. OpenAI, Google, Mistral, Ollama, etc.), allowing developers to switch between them with ease. Additionally, it provides tools for managing model inputs (prompt templates and example selectors) and parsing the resulting model outputs (output parsers).
- **Retrieval:** assists in loading user data (via document loaders), transforming it (with text splitters), extracting its meaning (using embedding models), storing (in vector stores) and retrieving it (through retrievers) so that it can be used to ground the model's responses (i.e. Retrieval-Augmented Generation or RAG).
- **Agents:** "bots" that leverage LLMs to make informed decisions about which available tools (such as web search, calculators, database lookup, etc.) to use to accomplish the designated task.

The different components can be composed together using the LangChain Expression Language (LCEL).
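
The composition idea behind LCEL can be sketched with a tiny wrapper whose `|` operator chains steps so that `(a | b).invoke(x)` equals `b.invoke(a.invoke(x))`. This is a hypothetical Python illustration of the concept only, not the LangChain or LangChain.dart API; `Step` and the fake model are assumptions, and no real LLM provider is called.

```python
from typing import Any, Callable


class Step:
    """Hypothetical minimal 'runnable': wraps a function and composes with |."""

    def __init__(self, fn: Callable[[Any], Any]):
        self.fn = fn

    def __or__(self, other: "Step") -> "Step":
        # (a | b).invoke(x) == b.invoke(a.invoke(x))
        return Step(lambda x: other.invoke(self.invoke(x)))

    def invoke(self, x: Any) -> Any:
        return self.fn(x)


# Prompt template: fills input variables into a string.
prompt = Step(lambda variables: f"Summarize in one word: {variables['text']}")
# Fake model: stands in for an LLM call and returns padded, upper-cased text.
fake_model = Step(lambda p: "  " + p.split(":")[-1].split()[0].upper() + "  ")
# Output parser: normalizes the raw model output.
parser = Step(lambda raw: raw.strip().lower())

chain = prompt | fake_model | parser

if __name__ == "__main__":
    print(chain.invoke({"text": "Dart is great"}))
```

Because every step shares the same `invoke` interface, swapping the fake model for a real provider client would not change how the chain is assembled.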

danswer

Danswer is an open-source Gen-AI Chat and Unified Search tool that connects to your company's docs, apps, and people. It provides a Chat interface and plugs into any LLM of your choice. Danswer can be deployed anywhere and at any scale: on a laptop, on-premises, or in the cloud. Since you own the deployment, your user data and chats are fully in your own control. Danswer is MIT licensed and designed to be modular and easily extensible. The system also comes fully ready for production usage with user authentication, role management (admin/basic users), chat persistence, and a UI for configuring Personas (AI Assistants) and their Prompts. Danswer also serves as a Unified Search across all common workplace tools such as Slack, Google Drive, Confluence, etc. By combining LLMs and team-specific knowledge, Danswer becomes a subject matter expert for the team. Imagine ChatGPT if it had access to your team's unique knowledge! It enables questions such as "A customer wants feature X, is this already supported?" or "Where's the pull request for feature Y?"

infinity

Infinity is an AI-native database designed for LLM applications, offering fast full-text and vector search. It supports a wide range of data types, including vectors, full text, and structured data, and offers a fused search feature that combines multiple embeddings with full-text search. Infinity is easy to use, with an intuitive Python API and a single-binary architecture that simplifies deployment. The project reports 0.1-millisecond query latency on million-scale vector datasets and up to 15K QPS.
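
Fused search has to merge several ranked lists (for example, full-text hits and vector hits) into one ranking. Reciprocal rank fusion (RRF) is one common way to do that, and the sketch below implements it; whether Infinity uses RRF or another fusion method is not stated here, so treat this as an illustration of the general idea. The function name is hypothetical, and `k = 60` is a commonly used default.

```python
from collections import defaultdict


def reciprocal_rank_fusion(rankings: list[list[str]], k: int = 60) -> list[tuple[str, float]]:
    """Fuse several ranked result lists into one.

    Each document scores sum(1 / (k + rank)) over the lists it appears in,
    with ranks starting at 1; higher fused scores come first.
    """
    scores: dict[str, float] = defaultdict(float)
    for ranking in rankings:
        for rank, doc_id in enumerate(ranking, start=1):
            scores[doc_id] += 1.0 / (k + rank)
    return sorted(scores.items(), key=lambda item: item[1], reverse=True)


if __name__ == "__main__":
    full_text_hits = ["d1", "d2", "d3"]
    vector_hits = ["d3", "d1", "d4"]
    for doc_id, score in reciprocal_rank_fusion([full_text_hits, vector_hits]):
        print(doc_id, round(score, 5))
```

RRF only looks at ranks, so it sidesteps the problem that BM25 scores and vector distances live on incomparable scales.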

For similar jobs

weave

Weave is a toolkit for developing Generative AI applications, built by Weights & Biases. With Weave, you can log and debug language model inputs, outputs, and traces; build rigorous, apples-to-apples evaluations for language model use cases; and organize all the information generated across the LLM workflow, from experimentation to evaluations to production. Weave aims to bring rigor, best-practices, and composability to the inherently experimental process of developing Generative AI software, without introducing cognitive overhead.
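
Logging the inputs, outputs, and traces of language model calls typically means wrapping each operation so every call is recorded. The decorator below is a minimal, dependency-free sketch of that pattern; `traced`, `TRACE`, and `fake_llm` are hypothetical names, this is not Weave's API, and a real tool would send records to a backend instead of an in-memory list.

```python
import functools
import time
from typing import Any, Callable

TRACE: list[dict[str, Any]] = []   # in-memory sink for call records


def traced(fn: Callable) -> Callable:
    """Record inputs, output or error, and latency for every call of fn."""
    @functools.wraps(fn)
    def wrapper(*args, **kwargs):
        record: dict[str, Any] = {"op": fn.__name__, "inputs": {"args": args, "kwargs": kwargs}}
        start = time.perf_counter()
        try:
            record["output"] = fn(*args, **kwargs)
            return record["output"]
        except Exception as exc:
            record["error"] = repr(exc)   # keep the failure in the trace, then re-raise
            raise
        finally:
            record["latency_s"] = time.perf_counter() - start
            TRACE.append(record)
    return wrapper


@traced
def fake_llm(prompt: str) -> str:
    return prompt[::-1]   # stand-in for a model call
```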

agentcloud

AgentCloud is an open-source platform that enables companies to build and deploy private LLM chat apps, empowering teams to securely interact with their data. It comprises three main components: Agent Backend, Webapp, and Vector Proxy. To run this project locally, clone the repository, install Docker, and start the services. The project is licensed under the GNU Affero General Public License, version 3 only. Contributions and feedback are welcome from the community.

oss-fuzz-gen

This framework uses various Large Language Models (LLMs) to generate fuzz targets for real-world `C`/`C++` projects and benchmarks them on the `OSS-Fuzz` platform. It has successfully used LLMs to generate valid fuzz targets (ones that yield a non-zero coverage increase) for 160 C/C++ projects, with a maximum line-coverage increase of 29% over the existing human-written targets.

VisionCraft

The VisionCraft API is a free API for using over 100 different AI models, covering everything from images to sound.

kaito

Kaito is an operator that automates AI/ML inference model deployment in a Kubernetes cluster. It manages large model files using container images, provides preset configurations so that deployment parameters do not have to be tuned to fit the GPU hardware, auto-provisions GPU nodes based on model requirements, and hosts large model images in the public Microsoft Container Registry (MCR) if the license allows. Kaito greatly simplifies the workflow of onboarding large AI inference models in Kubernetes.
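
Auto-provisioning GPU nodes from model requirements involves, at its core, a sizing calculation: how many nodes of a given GPU type are needed to hold the model. The sketch below shows that arithmetic under stated assumptions: the node types are hypothetical, the 0.9 headroom factor is a guess, and Kaito's real presets and provisioning logic are more involved.

```python
import math
from dataclasses import dataclass


@dataclass(frozen=True)
class GpuSku:
    name: str         # hypothetical node type label
    gpu_mem_gb: int   # memory per GPU
    gpus: int         # GPUs per node


def nodes_needed(model_mem_gb: float, sku: GpuSku, headroom: float = 0.9) -> int:
    """Nodes of `sku` needed to hold the model, using only `headroom` of each GPU's memory."""
    usable_per_node = sku.gpu_mem_gb * sku.gpus * headroom
    return math.ceil(model_mem_gb / usable_per_node)


def pick_sku(model_mem_gb: float, skus: list[GpuSku]) -> tuple[GpuSku, int]:
    """Prefer the SKU needing the fewest nodes; break ties by smaller total GPU memory."""
    return min(
        ((s, nodes_needed(model_mem_gb, s)) for s in skus),
        key=lambda pair: (pair[1], pair[0].gpu_mem_gb * pair[0].gpus),
    )
```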

PyRIT

PyRIT is an open-access automation framework designed to empower security professionals and ML engineers to red-team foundation models and their applications. It automates AI red-teaming tasks so that operators can focus on more complicated and time-consuming work, and it can identify security harms such as misuse (e.g., malware generation, jailbreaking) and privacy harms (e.g., identity theft). The goal is to give researchers a baseline of how well their model and entire inference pipeline perform against different harm categories, and to let them compare that baseline with future iterations of the model. This gives them empirical data on how well the model is doing today and lets them detect any performance degradation introduced by future changes.
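
Comparing a baseline against later iterations can be as simple as computing a per-category attack success rate and flagging categories where it rose. The sketch below assumes each red-team result has already been scored as harmful or not; the function names and the 0.02 tolerance are hypothetical and are not part of PyRIT's API.

```python
def attack_success_rates(results: list[tuple[str, bool]]) -> dict[str, float]:
    """Per harm category, the fraction of red-team prompts that produced a harmful response."""
    totals: dict[str, int] = {}
    hits: dict[str, int] = {}
    for category, harmful in results:
        totals[category] = totals.get(category, 0) + 1
        hits[category] = hits.get(category, 0) + int(harmful)
    return {c: hits[c] / totals[c] for c in totals}


def regressions(baseline: dict[str, float], current: dict[str, float],
                tolerance: float = 0.02) -> dict[str, float]:
    """Categories whose success rate rose by more than `tolerance` versus the baseline."""
    return {
        c: current[c] - baseline[c]
        for c in current
        if c in baseline and current[c] - baseline[c] > tolerance
    }
```

A positive entry in the result means the newer model is easier to attack in that category than the baseline was.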

Azure-Analytics-and-AI-Engagement

The Azure-Analytics-and-AI-Engagement repository provides packaged Industry Scenario DREAM Demos with ARM templates (containing a demo web application, Power BI reports, Synapse resources, AML notebooks, etc.) that can be deployed in a customer's subscription using the CAPE tool within a few hours. Partners can also deploy DREAM Demos in their own subscriptions using DPoC.