vim-airline

lean & mean status/tabline for vim that's light as air

Stars: 17696

Vim-airline is a lean and mean status/tabline plugin for Vim that provides a nice statusline at the bottom of each Vim window. It consists of several sections displaying information such as mode, environment status, filename, filetype, file encoding, and current position in the file. The plugin is highly customizable and integrates with various plugins, providing a tiny core with extensibility in mind. It is optimized for speed, supports multiple themes, and integrates seamlessly with other plugins. Vim-airline is written in 100% Vimscript, eliminating the need for Python. The plugin aims to be stable and includes a unit testing suite for reliability.

README:

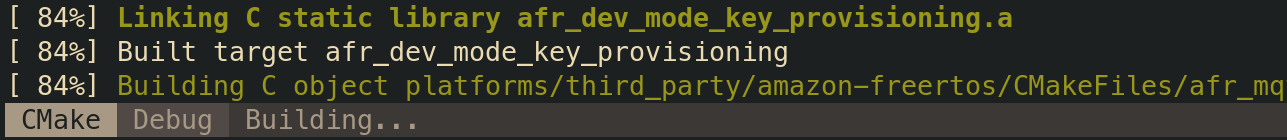

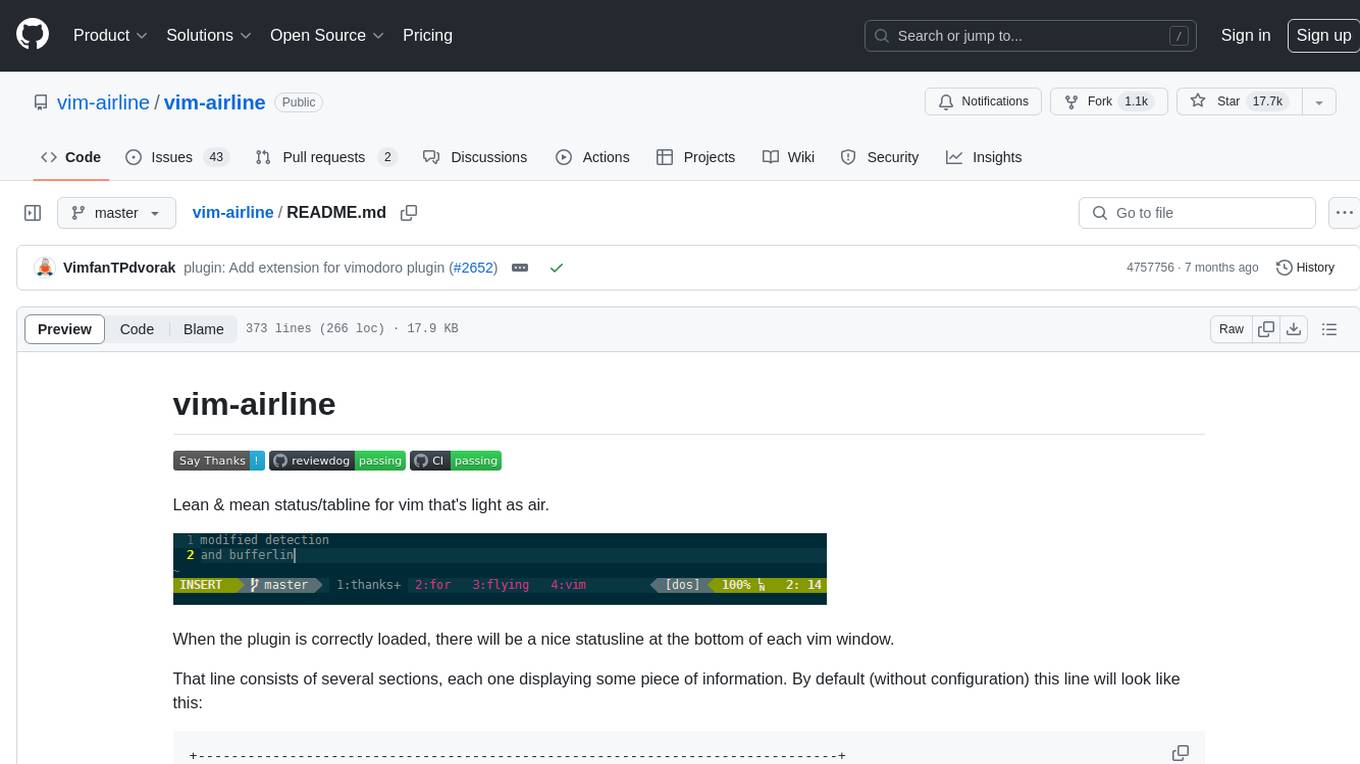

Lean & mean status/tabline for vim that's light as air.

When the plugin is correctly loaded, there will be a nice statusline at the bottom of each vim window.

That line consists of several sections, each one displaying some piece of information. By default (without configuration) this line will look like this:

    +-----------------------------------------------------------------------------+
    |~                                                                            |
    |~                                                                            |
    |~              VIM - Vi IMproved                                             |
    |~                                                                            |
    |~                version 8.2                                                 |
    |~             by Bram Moolenaar et al.                                       |
    |~           Vim is open source and freely distributable                      |
    |~                                                                            |
    |~           type :h :q<Enter>          to exit                               |
    |~           type :help<Enter> or <F1>  for on-line help                      |
    |~           type :help version8<Enter> for version info                      |
    |~                                                                            |
    |~                                                                            |
    +-----------------------------------------------------------------------------+
    | A | B |                     C                            X | Y | Z |  [...] |
    +-----------------------------------------------------------------------------+

The statusline is the colored line at the bottom, which contains the sections (possibly in different colors):

| section | meaning (example) |
|---|---|
| A | displays the mode + additional flags like crypt/spell/paste (INSERT) |
| B | environment status (VCS information - branch, hunk summary (master), battery level) |
| C | filename + read-only flag (~/.vim/vimrc RO) |
| X | filetype (vim) |
| Y | file encoding[fileformat] (utf-8[unix]) |
| Z | current position in the file |
| [...] | additional sections (warning/errors/statistics) from external plugins (e.g. YCM, syntastic, ...) |

The information in Section Z looks like this:

10% ☰ 10/100 ln : 20

This means:

- 10% - 10 percent down from the top of the file
- ☰ 10 - current line 10
- /100 ln - of 100 lines
- : 20 - current column 20

For a better look, those sections can be colored differently depending on various conditions (e.g. the mode, or whether the current file is modified).

- Tiny core written with extensibility in mind (open/closed principle).

- Integrates with a variety of plugins, including: vim-bufferline, fugitive, flog, unite, ctrlp, minibufexpl, gundo, undotree, nerdtree, tagbar, vim-gitgutter, vim-signify, quickfixsigns, syntastic, eclim, lawrencium, virtualenv, tmuxline, taboo.vim, ctrlspace, vim-bufmru, vimagit, denite, vim.battery and more.

- Looks good with regular fonts and provides configuration points so you can use unicode or powerline symbols.

- Optimized for speed - loads in under a millisecond.

- Extensive suite of themes for popular color schemes including solarized (dark and light), tomorrow (all variants), base16 (all variants), molokai, jellybeans and others. Note these are now external to this plugin. More details can be found in the themes repository.

- Supports 7.2 as the minimum Vim version.

- The master branch tries to be as stable as possible, and new features are merged in only after they have gone through a full regression test.

- Unit testing suite.

This plugin follows the standard runtime path structure, and as such it can be installed with a variety of plugin managers:

| Plugin Manager | Install with... |
|---|---|
| Pathogen | `git clone https://github.com/vim-airline/vim-airline ~/.vim/bundle/vim-airline`, then remember to run `:Helptags` to generate help tags |
| NeoBundle | `NeoBundle 'vim-airline/vim-airline'` |
| Vundle | `Plugin 'vim-airline/vim-airline'` |
| Plug | `Plug 'vim-airline/vim-airline'` |
| VAM | `call vam#ActivateAddons([ 'vim-airline' ])` |
| Dein | `call dein#add('vim-airline/vim-airline')` |
| minpac | `call minpac#add('vim-airline/vim-airline')` |
| pack feature (native Vim 8 package feature) | `git clone https://github.com/vim-airline/vim-airline ~/.vim/pack/dist/start/vim-airline`, then remember to run `:helptags ~/.vim/pack/dist/start/vim-airline/doc` to generate help tags |
| manual | copy all of the files into your `~/.vim` directory |

If you don't like the defaults, you can replace all sections with standard statusline syntax. Give your statusline that you've built over the years a face lift.
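As a minimal sketch of that idea, the snippet below overrides two sections with ordinary statusline expressions. It assumes the g:airline_section_b and g:airline_section_y variables described in :help airline; the chosen contents (a timestamp and a buffer number) are purely illustrative.

```vim
" Illustrative only: show the current date and time in section B.
let g:airline_section_b = '%{strftime("%c")}'

" Illustrative only: show the current buffer number in section Y.
let g:airline_section_y = 'BN: %{bufnr("%")}'
```

Any item accepted by Vim's 'statusline' option should work inside these strings, so an existing hand-built statusline can usually be split across sections with little change.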

Themes have moved to another repository as of this commit.

Install the themes as you would this plugin (Vundle example):

Plugin 'vim-airline/vim-airline'

Plugin 'vim-airline/vim-airline-themes'

See vim-airline-themes for more.

Sections and parts within sections can be configured to automatically hide when the window size shrinks.
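One way this might be configured is through the g:airline#extensions#default#section_truncate_width dictionary. The sketch below assumes that each key names a section and each value is the window width (in columns) below which that section is hidden; the widths are example values, not recommendations, so check :help airline for the authoritative defaults.

```vim
" Hide individual sections once the window becomes narrower than the
" given number of columns (example values only).
let g:airline#extensions#default#section_truncate_width = {
    \ 'b': 79,
    \ 'x': 60,
    \ 'y': 88,
    \ 'z': 45,
    \ 'warning': 80,
    \ 'error': 80,
    \ }
```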

Automatically displays all buffers when there's only one tab open.

This is disabled by default; add the following to your vimrc to enable the extension:

let g:airline#extensions#tabline#enabled = 1

Separators can be configured independently for the tabline, so here is how you can define "straight" tabs:

let g:airline#extensions#tabline#left_sep = ' '

let g:airline#extensions#tabline#left_alt_sep = '|'

In addition, you can also choose which path formatter airline uses. This affects how file paths are displayed in each individual tab as well as the current buffer indicator in the upper right.

To do so, set the formatter field with:

let g:airline#extensions#tabline#formatter = 'default'
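For instance, assuming the unique_tail formatter that ships with the tabline extension is available in your version, the following sketch shows only the tail of each file name and falls back to including the parent directory when two open files share a name.

```vim
" Enable the tabline and pick a more compact path formatter.
" 'unique_tail' is assumed to be one of the bundled formatters.
let g:airline#extensions#tabline#enabled = 1
let g:airline#extensions#tabline#formatter = 'unique_tail'
```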

Here is a complete list of formatters with screenshots:

vim-airline integrates with a variety of plugins out of the box. These extensions will be lazily loaded if and only if you have the other plugins installed (and of course you can turn them off).

hunks (vim-gitgutter, vim-signify, coc-git & gitsigns.nvim)

vim-airline also supplies some supplementary stand-alone extensions. In addition to the tabline extension mentioned earlier, there is also:

The statusline can alternatively be drawn on top, making room for other plugins to use the statusline:

The example shows a custom statusline setting that imitates Vim's default statusline while still allowing custom functions to be called. Use :let g:airline_statusline_ontop=1 to enable it.

Every section is composed of parts, and you can reorder and reconfigure them at will.
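A rough sketch of rebuilding sections from parts is shown below. It assumes the airline#section#create and airline#section#create_left helpers and the AirlineAfterInit user event documented in :help airline; the function name AirlineInit and the chosen parts are illustrative only.

```vim
" Rebuild three sections once airline has finished initializing,
" so that the built-in parts ('mode', 'ffenc', 'file') are defined.
function! AirlineInit()
  " Section A: only the current mode.
  let g:airline_section_a = airline#section#create(['mode'])
  " Section B: file encoding/format followed by the file name.
  let g:airline_section_b = airline#section#create_left(['ffenc', 'file'])
  " Section C: a raw statusline expression showing the working directory.
  let g:airline_section_c = airline#section#create(['%{getcwd()}'])
endfunction

autocmd User AirlineAfterInit call AirlineInit()
```

Deferring the assignments to the AirlineAfterInit event avoids referencing parts before the plugin has registered them.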

Sections can contain accents, which allows for very granular control of visuals (see configuration here).

Completely transform the statusline to your liking. Build out the statusline as you see fit by extracting colors from the current colorscheme's highlight groups.

There's already powerline, why yet another statusline?

- 100% vimscript; no python needed.

What about vim-powerline?

- vim-powerline has been deprecated in favor of the newer, unifying powerline, which is under active development; the new version is written in python at the core and exposes various bindings such that it can style statuslines not only in vim, but also tmux, bash, zsh, and others.

I wrote the initial version on an airplane, and since it's light as air it turned out to be a good name. Thanks for flying vim!

:help airline

For the nice looking powerline symbols to appear, you will need to install a patched font. Instructions can be found in the official powerline documentation. Prepatched fonts can be found in the powerline-fonts repository.

Finally, you can add the convenience variable let g:airline_powerline_fonts = 1 to your vimrc which will automatically populate the g:airline_symbols dictionary with the powerline symbols.
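If installing a patched font is not an option, individual entries of g:airline_symbols can instead be set by hand. The sketch below assumes the linenr, paste and whitespace keys listed in :help airline; the characters themselves are arbitrary Unicode choices.

```vim
" Create the dictionary first so that single keys can be assigned safely.
if !exists('g:airline_symbols')
  let g:airline_symbols = {}
endif

" Example overrides using plain Unicode characters (no patched font needed).
let g:airline_symbols.linenr = '¶'
let g:airline_symbols.paste = 'ρ'
let g:airline_symbols.whitespace = 'Ξ'
```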

Solutions to common problems can be found in the Wiki.

Whoa! Everything got slow all of a sudden...

vim-airline strives to make it easy to use out of the box, which means that by default it will look for all compatible plugins that you have installed and enable the relevant extension.

Many optimizations have been made such that the majority of users will not see any performance degradation, but it can still happen. For example, users who routinely open very large files may want to disable the tagbar extension, as it can be very expensive to scan for the name of the current function.

The minivimrc project has some helper mappings to troubleshoot performance related issues.

If you don't want all the bells and whistles enabled by default, you can define a value for g:airline_extensions. When this variable is defined, only the extensions listed will be loaded; an empty array would effectively disable all extensions (e.g. :let g:airline_extensions = []).
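The two approaches mentioned above could look roughly like this. The extension names in the list are examples, and the per-extension g:airline#extensions#tagbar#enabled switch is assumed to follow the same naming pattern as the tabline setting shown earlier; use one approach or the other rather than both.

```vim
" Option 1: load only the listed extensions (names are examples).
let g:airline_extensions = ['branch', 'tabline']

" Option 2: keep automatic detection, but switch off one expensive
" extension, e.g. tagbar when routinely editing very large files.
" let g:airline#extensions#tagbar#enabled = 0
```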

Also, you can enable caching of the various syntax highlighting groups. This tries to avoid some of the more expensive :hi calls in the Vim core, at the cost of possibly not being one hundred percent correct all the time (especially if you often change highlighting groups yourself using :hi commands). To set this up, do :let g:airline_highlighting_cache = 1. Note, however, that running :AirlineRefresh will clear the cache.

In addition you might want to check out the dark_minimal theme, which does not change highlighting groups once they are defined. Also please check the FAQ for more information on how to diagnose and fix the problem.

A full list of screenshots for various themes can be found in the Wiki.

The project is currently being maintained by Christian Brabandt and Bailey Ling.

If you are interested in becoming a maintainer (we always welcome more maintainers), please go here.

MIT License. Copyright (c) 2013-2021 Bailey Ling & Contributors.
