RWKV-Runner

A RWKV management and startup tool, full automation, only 8MB. And provides an interface compatible with the OpenAI API. RWKV is a large language model that is fully open source and available for commercial use.

Stars: 5751

RWKV Runner is a project designed to simplify the usage of large language models by automating various processes. It provides a lightweight executable program and is compatible with the OpenAI API. Users can deploy the backend on a server and use the program as a client. The project offers features like model management, VRAM configurations, user-friendly chat interface, WebUI option, parameter configuration, model conversion tool, download management, LoRA Finetune, and multilingual localization. It can be used for various tasks such as chat, completion, composition, and model inspection.

README:

This project aims to eliminate the barriers of using large language models by automating everything for you. All you need is a lightweight executable program of just a few megabytes. Additionally, this project provides an interface compatible with the OpenAI API, which means that every ChatGPT client is an RWKV client.

FAQs | Preview | Download | Simple Deploy Example | Server Deploy Examples | MIDI Hardware Input

-

You can deploy backend-python on a server and use this program as a client only. Fill in your server address in the Settings

API URL. -

If you are deploying and providing public services, please limit the request size through API gateway to prevent excessive resource usage caused by submitting overly long prompts. Additionally, please restrict the upper limit of requests' max_tokens based on your actual situation: https://github.com/josStorer/RWKV-Runner/blob/master/backend-python/utils/rwkv.py#L567, the default is set as le=102400, which may result in significant resource consumption for individual responses in extreme cases.

-

Default configs has enabled custom CUDA kernel acceleration, which is much faster and consumes much less VRAM. If you encounter possible compatibility issues (output garbled), go to the Configs page and turn off

Use Custom CUDA kernel to Accelerate, or try to upgrade your gpu driver. -

If Windows Defender claims this is a virus, you can try downloading v1.3.7_win.zip and letting it update automatically to the latest version, or add it to the trusted list (

Windows Security->Virus & threat protection->Manage settings->Exclusions->Add or remove exclusions->Add an exclusion->Folder->RWKV-Runner). -

For different tasks, adjusting API parameters can achieve better results. For example, for translation tasks, you can try setting Temperature to 1 and Top_P to 0.3.

- RWKV model management and one-click startup.

- Front-end and back-end separation, if you don't want to use the client, also allows for separately deploying the front-end service, or the back-end inference service, or the back-end inference service with a WebUI. Simple Deploy Example | Server Deploy Examples

- Compatible with the OpenAI API, making every ChatGPT client an RWKV client. After starting the model, open http://127.0.0.1:8000/docs to view more details.

- Automatic dependency installation, requiring only a lightweight executable program.

- Pre-set multi-level VRAM configs, works well on almost all computers. In Configs page, switch Strategy to WebGPU, it can also run on AMD, Intel, and other graphics cards.

- User-friendly chat, completion, and composition interaction interface included. Also supports chat presets, attachment uploads, MIDI hardware input, and track editing. Preview | MIDI Hardware Input

- Built-in WebUI option, one-click start of Web service, sharing your hardware resources.

- Easy-to-understand and operate parameter configuration, along with various operation guidance prompts.

- Built-in model conversion tool.

- Built-in download management and remote model inspection.

- Built-in one-click LoRA Finetune. (Windows Only)

- Can also be used as an OpenAI ChatGPT, GPT-Playground, Ollama and more clients. (Fill in the API URL and API Key in Settings page)

- Multilingual localization.

- Theme switching.

- Automatic updates.

git clone https://github.com/josStorer/RWKV-Runner

# Then

cd RWKV-Runner

python ./backend-python/main.py #The backend inference service has been started, request /switch-model API to load the model, refer to the API documentation: http://127.0.0.1:8000/docs

# Or

cd RWKV-Runner/frontend

npm ci

npm run build #Compile the frontend

cd ..

python ./backend-python/webui_server.py #Start the frontend service separately

# Or

python ./backend-python/main.py --webui #Start the frontend and backend service at the same time

# Help Info

python ./backend-python/main.py -hab -p body.json -T application/json -c 20 -n 100 -l http://127.0.0.1:8000/chat/completionsbody.json:

{

"messages": [

{

"role": "user",

"content": "Hello"

}

]

}Note: v1.4.0 has improved the quality of embeddings API. The generated results are not compatible with previous versions. If you are using embeddings API to generate knowledge bases or similar, please regenerate.

If you are using langchain, just use OpenAIEmbeddings(openai_api_base="http://127.0.0.1:8000", openai_api_key="sk-")

import numpy as np

import requests

def cosine_similarity(a, b):

return np.dot(a, b) / (np.linalg.norm(a) * np.linalg.norm(b))

values = [

"I am a girl",

"我是个女孩",

"私は女の子です",

"广东人爱吃福建人",

"我是个人类",

"I am a human",

"that dog is so cute",

"私はねこむすめです、にゃん♪",

"宇宙级特大事件!号外号外!"

]

embeddings = []

for v in values:

r = requests.post("http://127.0.0.1:8000/embeddings", json={"input": v})

embedding = r.json()["data"][0]["embedding"]

embeddings.append(embedding)

compared_embedding = embeddings[0]

embeddings_cos_sim = [cosine_similarity(compared_embedding, e) for e in embeddings]

for i in np.argsort(embeddings_cos_sim)[::-1]:

print(f"{embeddings_cos_sim[i]:.10f} - {values[i]}")Tip: You can download https://github.com/josStorer/sgm_plus and unzip it to the program's assets/sound-font directory

to use it as an offline sound source. Please note that if you are compiling the program from source code, do not place

it in the source code directory.

If you don't have a MIDI keyboard, you can use virtual MIDI input software like Virtual Midi Controller 3 LE, along

with loopMIDI, to use a regular

computer keyboard as MIDI input.

- For Mac users who want to use Bluetooth input, please install Bluetooth MIDI Connect, then click the tray icon to connect after launching, afterwards, you can select your input device in the Composition page.

- Windows seems to have implemented Bluetooth MIDI support only for UWP (Universal Windows Platform) apps. Therefore, it requires multiple steps to establish a connection. We need to create a local virtual MIDI device and then launch a UWP application. Through this UWP application, we will redirect Bluetooth MIDI input to the virtual MIDI device, and then this software will listen to the input from the virtual MIDI device.

- So, first, you need to download loopMIDI to create a virtual MIDI device. Click the plus sign in the bottom left corner to create the device.

- Next, you need to download Bluetooth LE Explorer to discover and connect to Bluetooth MIDI devices. Click "Start" to search for devices, and then click "Pair" to bind the MIDI device.

- Finally, you need to install MIDIberry, This UWP application can redirect Bluetooth MIDI input to the virtual MIDI device. After launching it, double-click your actual Bluetooth MIDI device name in the input field, and in the output field, double-click the virtual MIDI device name we created earlier.

- Now, you can select the virtual MIDI device as the input in the Composition page. Bluetooth LE Explorer no longer needs to run, and you can also close the loopMIDI window, it will run automatically in the background. Just keep MIDIberry open.

- RWKV-5-World: https://huggingface.co/BlinkDL/rwkv-5-world/tree/main

- RWKV-4-World: https://huggingface.co/BlinkDL/rwkv-4-world/tree/main

- RWKV-4-Raven: https://huggingface.co/BlinkDL/rwkv-4-raven/tree/main

- ChatRWKV: https://github.com/BlinkDL/ChatRWKV

- RWKV-LM: https://github.com/BlinkDL/RWKV-LM

- RWKV-LM-LoRA: https://github.com/Blealtan/RWKV-LM-LoRA

- RWKV-v5-lora: https://github.com/JL-er/RWKV-v5-lora

- MIDI-LLM-tokenizer: https://github.com/briansemrau/MIDI-LLM-tokenizer

- ai00_rwkv_server: https://github.com/cgisky1980/ai00_rwkv_server

- rwkv.cpp: https://github.com/saharNooby/rwkv.cpp

- web-rwkv-py: https://github.com/cryscan/web-rwkv-py

- web-rwkv: https://github.com/cryscan/web-rwkv

Tip: You can download https://github.com/josStorer/sgm_plus and unzip it to the program's assets/sound-font directory

to use it as an offline sound source. Please note that if you are compiling the program from source code, do not place

it in the source code directory.

For Tasks:

Click tags to check more tools for each tasksFor Jobs:

Alternative AI tools for RWKV-Runner

Similar Open Source Tools

RWKV-Runner

RWKV Runner is a project designed to simplify the usage of large language models by automating various processes. It provides a lightweight executable program and is compatible with the OpenAI API. Users can deploy the backend on a server and use the program as a client. The project offers features like model management, VRAM configurations, user-friendly chat interface, WebUI option, parameter configuration, model conversion tool, download management, LoRA Finetune, and multilingual localization. It can be used for various tasks such as chat, completion, composition, and model inspection.

gemini-next-chat

Gemini Next Chat is an open-source, extensible high-performance Gemini chatbot framework that supports one-click free deployment of private Gemini web applications. It provides a simple interface with image recognition and voice conversation, supports multi-modal models, talk mode, visual recognition, assistant market, support plugins, conversation list, full Markdown support, privacy and security, PWA support, well-designed UI, fast loading speed, static deployment, and multi-language support.

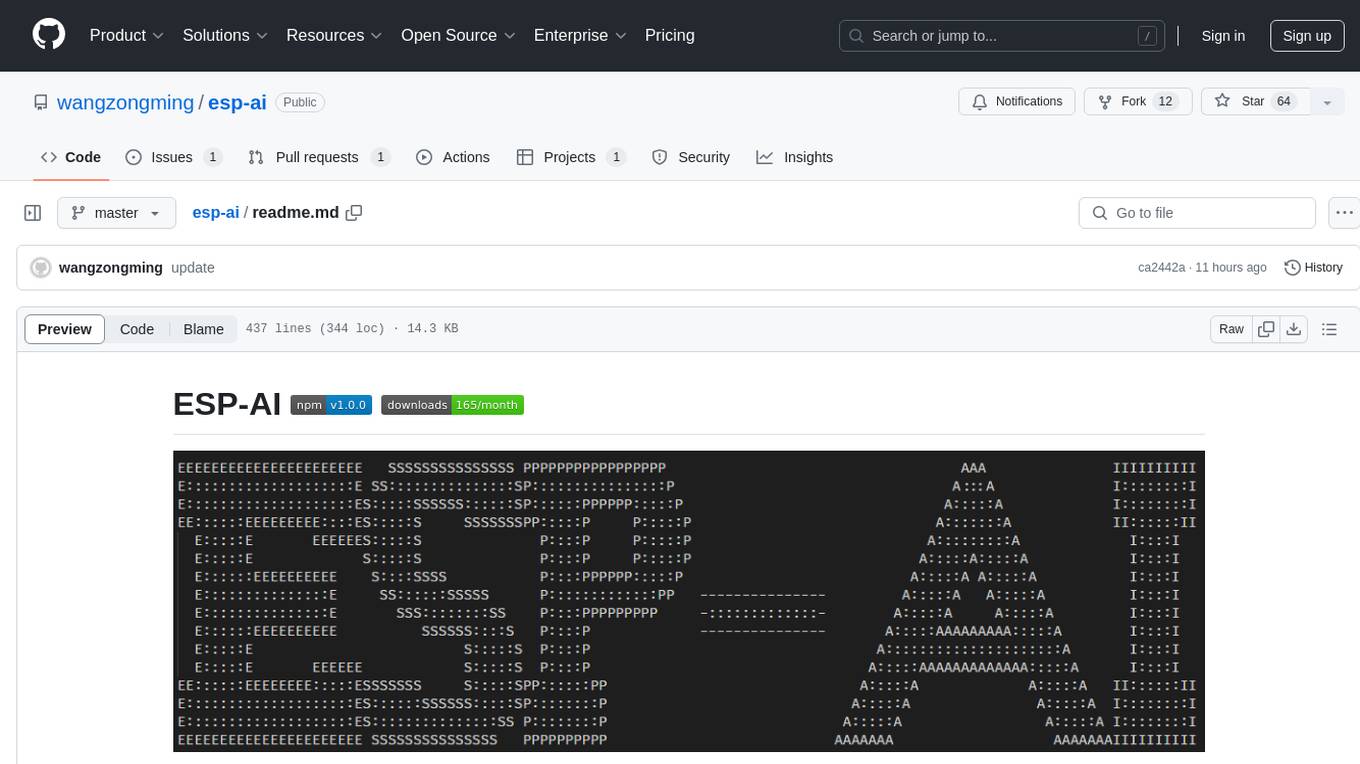

esp-ai

ESP-AI provides a complete AI conversation solution for your development board, including IAT+LLM+TTS integration solutions for ESP32 series development boards. It can be injected into projects without affecting existing ones. By providing keys from platforms like iFlytek, Jiling, and local services, you can run the services without worrying about interactions between services or between development boards and services. The project's server-side code is based on Node.js, and the hardware code is based on Arduino IDE.

Windows-Use

Windows-Use is a powerful automation agent that interacts directly with the Windows OS at the GUI layer. It bridges the gap between AI agents and Windows to perform tasks such as opening apps, clicking buttons, typing, executing shell commands, and capturing UI state without relying on traditional computer vision models. It enables any large language model (LLM) to perform computer automation instead of relying on specific models for it.

ragflow

RAGFlow is an open-source Retrieval-Augmented Generation (RAG) engine that combines deep document understanding with Large Language Models (LLMs) to provide accurate question-answering capabilities. It offers a streamlined RAG workflow for businesses of all sizes, enabling them to extract knowledge from unstructured data in various formats, including Word documents, slides, Excel files, images, and more. RAGFlow's key features include deep document understanding, template-based chunking, grounded citations with reduced hallucinations, compatibility with heterogeneous data sources, and an automated and effortless RAG workflow. It supports multiple recall paired with fused re-ranking, configurable LLMs and embedding models, and intuitive APIs for seamless integration with business applications.

kubewall

kubewall is an open-source, single-binary Kubernetes dashboard with multi-cluster management and AI integration. It provides a simple and rich real-time interface to manage and investigate your clusters. With features like multi-cluster management, AI-powered troubleshooting, real-time monitoring, single-binary deployment, in-depth resource views, browser-based access, search and filter capabilities, privacy by default, port forwarding, live refresh, aggregated pod logs, and clean resource management, kubewall offers a comprehensive solution for Kubernetes cluster management.

Sunshine-AIO

Sunshine-AIO is an all-in-one step-by-step guide to set up Sunshine with all necessary tools for Windows users. It provides a dedicated display for game streaming, virtual monitor switching, automatic resolution adjustment, resource-saving features, game launcher integration, and stream management. The project aims to evolve into an AIO tool as it progresses, welcoming contributions from users.

glide

Glide is a cloud-native LLM gateway that provides a unified REST API for accessing various large language models (LLMs) from different providers. It handles LLMOps tasks such as model failover, caching, key management, and more, making it easy to integrate LLMs into applications. Glide supports popular LLM providers like OpenAI, Anthropic, Azure OpenAI, AWS Bedrock (Titan), Cohere, Google Gemini, OctoML, and Ollama. It offers high availability, performance, and observability, and provides SDKs for Python and NodeJS to simplify integration.

allchat

ALLCHAT is a Node.js backend and React MUI frontend for an application that interacts with the Gemini Pro 1.5 (and others), with history, image generating/recognition, PDF/Word/Excel upload, code run, model function calls and markdown support. It is a comprehensive tool that allows users to connect models to the world with Web Tools, run locally, deploy using Docker, configure Nginx, and monitor the application using a dockerized monitoring solution (Loki+Grafana).

browser-use

Browser Use is a tool designed to make websites accessible for AI agents. It provides an easy way to connect AI agents with the browser, enabling users to perform tasks such as extracting vision and HTML elements, managing multiple tabs, and executing custom actions. The tool supports various language models and allows users to parallelize multiple agents for efficient processing. With features like self-correction and the ability to register custom actions, Browser Use offers a versatile solution for interacting with web content using AI technology.

NextChat

NextChat is a well-designed cross-platform ChatGPT web UI tool that supports Claude, GPT4, and Gemini Pro. It offers a compact client for Linux, Windows, and MacOS, with features like self-deployed LLMs compatibility, privacy-first data storage, markdown support, responsive design, and fast loading speed. Users can create, share, and debug chat tools with prompt templates, access various prompts, compress chat history, and use multiple languages. The tool also supports enterprise-level privatization and customization deployment, with features like brand customization, resource integration, permission control, knowledge integration, security auditing, private deployment, and continuous updates.

mmore

MMORE is an open-source, end-to-end pipeline for ingesting, processing, indexing, and retrieving knowledge from various file types such as PDFs, Office docs, images, audio, video, and web pages. It standardizes content into a unified multimodal format, supports distributed CPU/GPU processing, and offers hybrid dense+sparse retrieval with an integrated RAG service through CLI and APIs.

modelscope-agent

ModelScope-Agent is a customizable and scalable Agent framework. A single agent has abilities such as role-playing, LLM calling, tool usage, planning, and memory. It mainly has the following characteristics: - **Simple Agent Implementation Process**: Simply specify the role instruction, LLM name, and tool name list to implement an Agent application. The framework automatically arranges workflows for tool usage, planning, and memory. - **Rich models and tools**: The framework is equipped with rich LLM interfaces, such as Dashscope and Modelscope model interfaces, OpenAI model interfaces, etc. Built in rich tools, such as **code interpreter**, **weather query**, **text to image**, **web browsing**, etc., make it easy to customize exclusive agents. - **Unified interface and high scalability**: The framework has clear tools and LLM registration mechanism, making it convenient for users to expand more diverse Agent applications. - **Low coupling**: Developers can easily use built-in tools, LLM, memory, and other components without the need to bind higher-level agents.

Devon

Devon is an open-source pair programmer tool designed to facilitate collaborative coding sessions. It provides features such as multi-file editing, codebase exploration, test writing, bug fixing, and architecture exploration. The tool supports Anthropic, OpenAI, and Groq APIs, with plans to add more models in the future. Devon is community-driven, with ongoing development goals including multi-model support, plugin system for tool builders, self-hostable Electron app, and setting SOTA on SWE-bench Lite. Users can contribute to the project by developing core functionality, conducting research on agent performance, providing feedback, and testing the tool.

kantv

KanTV is an open-source project that focuses on studying and practicing state-of-the-art AI technology in real applications and scenarios, such as online TV playback, transcription, translation, and video/audio recording. It is derived from the original ijkplayer project and includes many enhancements and new features, including: * Watching online TV and local media using a customized FFmpeg 6.1. * Recording online TV to automatically generate videos. * Studying ASR (Automatic Speech Recognition) using whisper.cpp. * Studying LLM (Large Language Model) using llama.cpp. * Studying SD (Text to Image by Stable Diffusion) using stablediffusion.cpp. * Generating real-time English subtitles for English online TV using whisper.cpp. * Running/experiencing LLM on Xiaomi 14 using llama.cpp. * Setting up a customized playlist and using the software to watch the content for R&D activity. * Refactoring the UI to be closer to a real commercial Android application (currently only supports English). Some goals of this project are: * To provide a well-maintained "workbench" for ASR researchers interested in practicing state-of-the-art AI technology in real scenarios on mobile devices (currently focusing on Android). * To provide a well-maintained "workbench" for LLM researchers interested in practicing state-of-the-art AI technology in real scenarios on mobile devices (currently focusing on Android). * To create an Android "turn-key project" for AI experts/researchers (who may not be familiar with regular Android software development) to focus on device-side AI R&D activity, where part of the AI R&D activity (algorithm improvement, model training, model generation, algorithm validation, model validation, performance benchmark, etc.) can be done very easily using Android Studio IDE and a powerful Android phone.

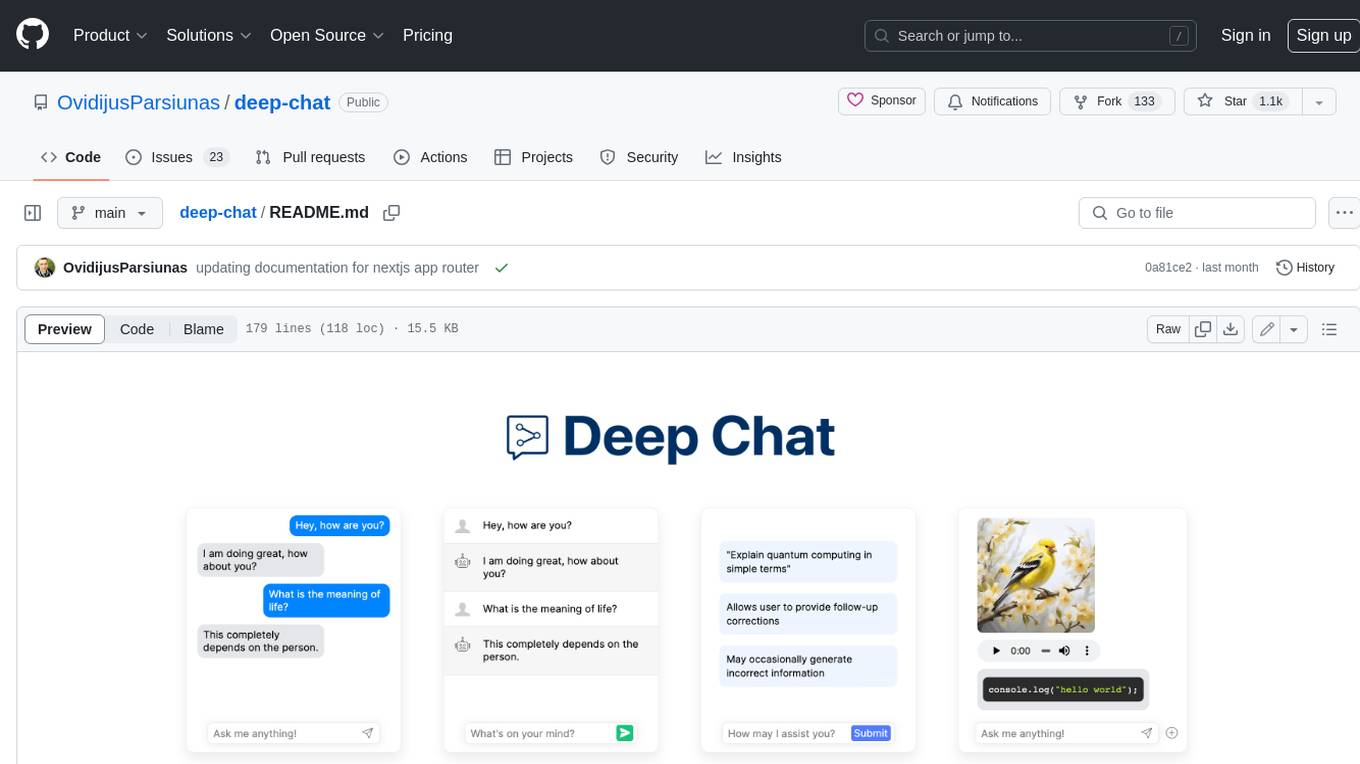

deep-chat

Deep Chat is a fully customizable AI chat component that can be injected into your website with minimal to no effort. Whether you want to create a chatbot that leverages popular APIs such as ChatGPT or connect to your own custom service, this component can do it all! Explore deepchat.dev to view all of the available features, how to use them, examples and more!

For similar tasks

h2ogpt

h2oGPT is an Apache V2 open-source project that allows users to query and summarize documents or chat with local private GPT LLMs. It features a private offline database of any documents (PDFs, Excel, Word, Images, Video Frames, Youtube, Audio, Code, Text, MarkDown, etc.), a persistent database (Chroma, Weaviate, or in-memory FAISS) using accurate embeddings (instructor-large, all-MiniLM-L6-v2, etc.), and efficient use of context using instruct-tuned LLMs (no need for LangChain's few-shot approach). h2oGPT also offers parallel summarization and extraction, reaching an output of 80 tokens per second with the 13B LLaMa2 model, HYDE (Hypothetical Document Embeddings) for enhanced retrieval based upon LLM responses, a variety of models supported (LLaMa2, Mistral, Falcon, Vicuna, WizardLM. With AutoGPTQ, 4-bit/8-bit, LORA, etc.), GPU support from HF and LLaMa.cpp GGML models, and CPU support using HF, LLaMa.cpp, and GPT4ALL models. Additionally, h2oGPT provides Attention Sinks for arbitrarily long generation (LLaMa-2, Mistral, MPT, Pythia, Falcon, etc.), a UI or CLI with streaming of all models, the ability to upload and view documents through the UI (control multiple collaborative or personal collections), Vision Models LLaVa, Claude-3, Gemini-Pro-Vision, GPT-4-Vision, Image Generation Stable Diffusion (sdxl-turbo, sdxl) and PlaygroundAI (playv2), Voice STT using Whisper with streaming audio conversion, Voice TTS using MIT-Licensed Microsoft Speech T5 with multiple voices and Streaming audio conversion, Voice TTS using MPL2-Licensed TTS including Voice Cloning and Streaming audio conversion, AI Assistant Voice Control Mode for hands-free control of h2oGPT chat, Bake-off UI mode against many models at the same time, Easy Download of model artifacts and control over models like LLaMa.cpp through the UI, Authentication in the UI by user/password via Native or Google OAuth, State Preservation in the UI by user/password, Linux, Docker, macOS, and Windows support, Easy Windows Installer for Windows 10 64-bit (CPU/CUDA), Easy macOS Installer for macOS (CPU/M1/M2), Inference Servers support (oLLaMa, HF TGI server, vLLM, Gradio, ExLLaMa, Replicate, OpenAI, Azure OpenAI, Anthropic), OpenAI-compliant, Server Proxy API (h2oGPT acts as drop-in-replacement to OpenAI server), Python client API (to talk to Gradio server), JSON Mode with any model via code block extraction. Also supports MistralAI JSON mode, Claude-3 via function calling with strict Schema, OpenAI via JSON mode, and vLLM via guided_json with strict Schema, Web-Search integration with Chat and Document Q/A, Agents for Search, Document Q/A, Python Code, CSV frames (Experimental, best with OpenAI currently), Evaluate performance using reward models, and Quality maintained with over 1000 unit and integration tests taking over 4 GPU-hours.

serverless-chat-langchainjs

This sample shows how to build a serverless chat experience with Retrieval-Augmented Generation using LangChain.js and Azure. The application is hosted on Azure Static Web Apps and Azure Functions, with Azure Cosmos DB for MongoDB vCore as the vector database. You can use it as a starting point for building more complex AI applications.

react-native-vercel-ai

Run Vercel AI package on React Native, Expo, Web and Universal apps. Currently React Native fetch API does not support streaming which is used as a default on Vercel AI. This package enables you to use AI library on React Native but the best usage is when used on Expo universal native apps. On mobile you get back responses without streaming with the same API of `useChat` and `useCompletion` and on web it will fallback to `ai/react`

LLamaSharp

LLamaSharp is a cross-platform library to run 🦙LLaMA/LLaVA model (and others) on your local device. Based on llama.cpp, inference with LLamaSharp is efficient on both CPU and GPU. With the higher-level APIs and RAG support, it's convenient to deploy LLM (Large Language Model) in your application with LLamaSharp.

gpt4all

GPT4All is an ecosystem to run powerful and customized large language models that work locally on consumer grade CPUs and any GPU. Note that your CPU needs to support AVX or AVX2 instructions. Learn more in the documentation. A GPT4All model is a 3GB - 8GB file that you can download and plug into the GPT4All open-source ecosystem software. Nomic AI supports and maintains this software ecosystem to enforce quality and security alongside spearheading the effort to allow any person or enterprise to easily train and deploy their own on-edge large language models.

ChatGPT-Telegram-Bot

ChatGPT Telegram Bot is a Telegram bot that provides a smooth AI experience. It supports both Azure OpenAI and native OpenAI, and offers real-time (streaming) response to AI, with a faster and smoother experience. The bot also has 15 preset bot identities that can be quickly switched, and supports custom bot identities to meet personalized needs. Additionally, it supports clearing the contents of the chat with a single click, and restarting the conversation at any time. The bot also supports native Telegram bot button support, making it easy and intuitive to implement required functions. User level division is also supported, with different levels enjoying different single session token numbers, context numbers, and session frequencies. The bot supports English and Chinese on UI, and is containerized for easy deployment.

twinny

Twinny is a free and open-source AI code completion plugin for Visual Studio Code and compatible editors. It integrates with various tools and frameworks, including Ollama, llama.cpp, oobabooga/text-generation-webui, LM Studio, LiteLLM, and Open WebUI. Twinny offers features such as fill-in-the-middle code completion, chat with AI about your code, customizable API endpoints, and support for single or multiline fill-in-middle completions. It is easy to install via the Visual Studio Code extensions marketplace and provides a range of customization options. Twinny supports both online and offline operation and conforms to the OpenAI API standard.

agnai

Agnaistic is an AI roleplay chat tool that allows users to interact with personalized characters using their favorite AI services. It supports multiple AI services, persona schema formats, and features such as group conversations, user authentication, and memory/lore books. Agnaistic can be self-hosted or run using Docker, and it provides a range of customization options through its settings.json file. The tool is designed to be user-friendly and accessible, making it suitable for both casual users and developers.

For similar jobs

weave

Weave is a toolkit for developing Generative AI applications, built by Weights & Biases. With Weave, you can log and debug language model inputs, outputs, and traces; build rigorous, apples-to-apples evaluations for language model use cases; and organize all the information generated across the LLM workflow, from experimentation to evaluations to production. Weave aims to bring rigor, best-practices, and composability to the inherently experimental process of developing Generative AI software, without introducing cognitive overhead.

LLMStack

LLMStack is a no-code platform for building generative AI agents, workflows, and chatbots. It allows users to connect their own data, internal tools, and GPT-powered models without any coding experience. LLMStack can be deployed to the cloud or on-premise and can be accessed via HTTP API or triggered from Slack or Discord.

VisionCraft

The VisionCraft API is a free API for using over 100 different AI models. From images to sound.

kaito

Kaito is an operator that automates the AI/ML inference model deployment in a Kubernetes cluster. It manages large model files using container images, avoids tuning deployment parameters to fit GPU hardware by providing preset configurations, auto-provisions GPU nodes based on model requirements, and hosts large model images in the public Microsoft Container Registry (MCR) if the license allows. Using Kaito, the workflow of onboarding large AI inference models in Kubernetes is largely simplified.

PyRIT

PyRIT is an open access automation framework designed to empower security professionals and ML engineers to red team foundation models and their applications. It automates AI Red Teaming tasks to allow operators to focus on more complicated and time-consuming tasks and can also identify security harms such as misuse (e.g., malware generation, jailbreaking), and privacy harms (e.g., identity theft). The goal is to allow researchers to have a baseline of how well their model and entire inference pipeline is doing against different harm categories and to be able to compare that baseline to future iterations of their model. This allows them to have empirical data on how well their model is doing today, and detect any degradation of performance based on future improvements.

tabby

Tabby is a self-hosted AI coding assistant, offering an open-source and on-premises alternative to GitHub Copilot. It boasts several key features: * Self-contained, with no need for a DBMS or cloud service. * OpenAPI interface, easy to integrate with existing infrastructure (e.g Cloud IDE). * Supports consumer-grade GPUs.

spear

SPEAR (Simulator for Photorealistic Embodied AI Research) is a powerful tool for training embodied agents. It features 300 unique virtual indoor environments with 2,566 unique rooms and 17,234 unique objects that can be manipulated individually. Each environment is designed by a professional artist and features detailed geometry, photorealistic materials, and a unique floor plan and object layout. SPEAR is implemented as Unreal Engine assets and provides an OpenAI Gym interface for interacting with the environments via Python.

Magick

Magick is a groundbreaking visual AIDE (Artificial Intelligence Development Environment) for no-code data pipelines and multimodal agents. Magick can connect to other services and comes with nodes and templates well-suited for intelligent agents, chatbots, complex reasoning systems and realistic characters.