smile

Statistical Machine Intelligence & Learning Engine

Stars: 6336

Smile (Statistical Machine Intelligence and Learning Engine) is a comprehensive machine learning, NLP, linear algebra, graph, interpolation, and visualization system in Java and Scala. It covers every aspect of machine learning, including classification, regression, clustering, association rule mining, feature selection, manifold learning, multidimensional scaling, genetic algorithms, missing value imputation, efficient nearest neighbor search, etc. Smile implements major machine learning algorithms and provides interactive shells for Java, Scala, and Kotlin. It supports model serialization, data visualization using SmilePlot and declarative approach, and offers a gallery showcasing various algorithms and visualizations.

README:

SMILE (Statistical Machine Intelligence & Learning Engine) is a fast and comprehensive machine learning framework in Java. SMILE v5.x requires Java 25, v4.x requires Java 21, and all previous versions require Java 8. SMILE also provides APIs in Scala and Kotlin with corresponding language paradigms. With advanced data structures and algorithms, SMILE delivers state-of-art performance. SMILE covers every aspect of machine learning, including deep learning, large language models, classification, regression, clustering, association rule mining, feature selection and extraction, manifold learning, multidimensional scaling, genetic algorithms, missing value imputation, efficient nearest neighbor search, etc. Furthermore, SMILE also provides advanced algorithms for graph, linear algebra, numerical analysis, interpolation, computer algebra system for symbolic manipulations, and data visualization.

SMILE implements the following major machine learning algorithms:

-

LLM: Native Java implementation of Llama 3.1, tiktoken tokenizer, high performance LLM inference server with OpenAI-compatible APIs and SSE-based chat streaming, fully functional frontend.

-

Deep Learning: Deep learning with CPU and GPU. EfficientNet model for image classification.

-

Classification: Support Vector Machines, Decision Trees, AdaBoost, Gradient Boosting, Random Forest, Logistic Regression, Neural Networks, RBF Networks, Maximum Entropy Classifier, KNN, Naïve Bayesian, Fisher/Linear/Quadratic/Regularized Discriminant Analysis.

-

Regression: Support Vector Regression, Gaussian Process, Regression Trees, Gradient Boosting, Random Forest, RBF Networks, OLS, LASSO, ElasticNet, Ridge Regression.

-

Feature Selection: Genetic Algorithm based Feature Selection, Ensemble Learning based Feature Selection, TreeSHAP, Signal Noise ratio, Sum Squares ratio.

-

Clustering: BIRCH, CLARANS, DBSCAN, DENCLUE, Deterministic Annealing, K-Means, X-Means, G-Means, Neural Gas, Growing Neural Gas, Hierarchical Clustering, Sequential Information Bottleneck, Self-Organizing Maps, Spectral Clustering, Minimum Entropy Clustering.

-

Association Rule & Frequent Itemset Mining: FP-growth mining algorithm.

-

Manifold Learning: IsoMap, LLE, Laplacian Eigenmap, t-SNE, UMAP, PCA, Kernel PCA, Probabilistic PCA, GHA, Random Projection, ICA.

-

Multi-Dimensional Scaling: Classical MDS, Isotonic MDS, Sammon Mapping.

-

Nearest Neighbor Search: BK-Tree, Cover Tree, KD-Tree, SimHash, LSH.

-

Sequence Learning: Hidden Markov Model, Conditional Random Field.

-

Natural Language Processing: Sentence Splitter and Tokenizer, Bigram Statistical Test, Phrase Extractor, Keyword Extractor, Stemmer, POS Tagging, Relevance Ranking

SMILE employs a dual license model designed to meet the development and distribution needs of both commercial distributors (such as OEMs, ISVs and VARs) and open source projects. For details, please see LICENSE. To acquire a commercial license, please contact [email protected].

-

Discussion/Questions: If you wish to ask questions about SMILE, we're active on GitHub Discussions and Stack Overflow.

-

Docs: SMILE is well documented and our docs are available online, where you can find tutorial, programming guides, and more information. If you'd like to help improve the docs, they're part of this repository in the

web/srcdirectory. Java Docs, Scala Docs, Kotlin Docs, and Clojure Docs are also available. -

Issues/Feature Requests: Finally, any bugs or features, please report to our issue tracker.

You can use the libraries through Maven central repository by adding the following to your project pom.xml file.

<dependency>

<groupId>com.github.haifengl</groupId>

<artifactId>smile-core</artifactId>

<version>5.2.0</version>

</dependency>

For deep learning and NLP, use the artifactId smile-deep and smile-nlp, respectively.

For Scala API, please add the below into your sbt script.

libraryDependencies += "com.github.haifengl" %% "smile-scala" % "5.2.0"

For Kotlin API, add the below into the dependencies section

of Gradle build script.

implementation("com.github.haifengl:smile-kotlin:5.2.0")

Some algorithms rely on BLAS and LAPACK (e.g. manifold learning,

some clustering algorithms, Gaussian Process regression, MLP, etc.).

To use these algorithms in SMILE v5.x, you should install OpenBLAS and ARPACK

for optimized matrix computation. For Windows, you can find the pre-built

DLL files from the bin directory of release packages. Make sure to add this

directory to PATH environment variable.

To install on Linux (e.g., Ubuntu), run

sudo apt update

sudo apt install libopenblas-dev libarpack2On Mac, we use the BLAS library from the Accelerate framework provided by macOS. But you should install ARPACK by running

brew install arpackHowever, macOS System Integrity Protection (SIP) significantly impacts how JVM handles dynamic library loading by purging dynamic linker (DYLD) environment variables like DYLD_LIBRARY_PATH when launching protected processes. A simple workaround is to copy /opt/homebrew/lib/libarpack.dylib to your working directory so that JVM can successfully load it.

For SMILE v4.x, OpenBLAS and ARPACK libraries can be added to your project with the following dependencies.

libraryDependencies ++= Seq(

"org.bytedeco" % "javacpp" % "1.5.11" classifier "macosx-arm64" classifier "macosx-x86_64" classifier "windows-x86_64" classifier "linux-x86_64",

"org.bytedeco" % "openblas" % "0.3.28-1.5.11" classifier "macosx-arm64" classifier "macosx-x86_64" classifier "windows-x86_64" classifier "linux-x86_64",

"org.bytedeco" % "arpack-ng" % "3.9.1-1.5.11" classifier "macosx-x86_64" classifier "windows-x86_64" classifier "linux-x86_64"

)

In this example, we include all supported 64-bit platforms and filter out 32-bit platforms. The user should include only the needed platforms to save spaces.

SMILE Studio is an interactive desktop application to help you be more productive in building and serving models with SMILE. Similar to Jupyter Notebooks, SMILE Studio is a REPL (Read-Evaluate-Print-Loop) containing an ordered list of input/output cells.

Download pre-packaged SMILE from the

releases page.

After unziping the package and cd into the bin directory of SMILE

in a terminal, type

./smileto enter SMILE Studio. If you work in a headless environment without

graphical interface, you may run ./smile shell to enter SMILE Shell

for Java, which pre-imports all major SMILE packages. If you prefer

Scala, type ./smile scala to enter SMILE Shell for Scala.

By default, the Studio/Shell uses up to 4GB memory. If you need more memory

to handle large data, use the option -J-Xmx or -XX:MaxRAMPercentage.

For example,

./smile -J-Xmx30GYou can also modify the configuration file conf/smile.ini for the

memory and other JVM settings.

Most models support the Java Serializable interface (all classifiers

do support Serializable interface) so that you can serialze a model

and ship it to a production environment for inference. You may also

use serialized models in other systems such as Spark.

A picture is worth a thousand words. In machine learning, we usually handle

high-dimensional data, which is impossible to draw on display directly.

But a variety of statistical plots are tremendously valuable for us to grasp

the characteristics of many data points. SMILE provides data visualization tools

such as plots and maps for researchers to understand information more easily and quickly.

To use smile-plot, add the following to dependencies

<dependency>

<groupId>com.github.haifengl</groupId>

<artifactId>smile-plot</artifactId>

<version>5.2.0</version>

</dependency>

On Swing-based systems, the user may leverage smile.plot.swing package to

create a variety of plots such as scatter plot, line plot, staircase plot,

bar plot, box plot, histogram, 3D histogram, dendrogram, heatmap, hexmap,

QQ plot, contour plot, surface, and wireframe.

This library also support data visualization in declarative approach.

With smile.plot.vega package, we can create a specification

that describes visualizations as mappings from data to properties

of graphical marks (e.g., points or bars). The specification is

based on Vega-Lite. In a web browser,

the Vega-Lite compiler automatically produces visualization components

including axes, legends, and scales. It then determines properties

of these components based on a set of carefully designed rules.

Please read the contributing.md on how to build and test SMILE.

- Haifeng Li (@haifengl)

- Karl Li (@kklioss)

|

||

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

||

For Tasks:

Click tags to check more tools for each tasksFor Jobs:

Alternative AI tools for smile

Similar Open Source Tools

smile

Smile (Statistical Machine Intelligence and Learning Engine) is a comprehensive machine learning, NLP, linear algebra, graph, interpolation, and visualization system in Java and Scala. It covers every aspect of machine learning, including classification, regression, clustering, association rule mining, feature selection, manifold learning, multidimensional scaling, genetic algorithms, missing value imputation, efficient nearest neighbor search, etc. Smile implements major machine learning algorithms and provides interactive shells for Java, Scala, and Kotlin. It supports model serialization, data visualization using SmilePlot and declarative approach, and offers a gallery showcasing various algorithms and visualizations.

starwhale

Starwhale is an MLOps/LLMOps platform that brings efficiency and standardization to machine learning operations. It streamlines the model development lifecycle, enabling teams to optimize workflows around key areas like model building, evaluation, release, and fine-tuning. Starwhale abstracts Model, Runtime, and Dataset as first-class citizens, providing tailored capabilities for common workflow scenarios including Models Evaluation, Live Demo, and LLM Fine-tuning. It is an open-source platform designed for clarity and ease of use, empowering developers to build customized MLOps features tailored to their needs.

dLLM-RL

dLLM-RL is a revolutionary reinforcement learning framework designed for Diffusion Large Language Models. It supports various models with diverse structures, offers inference acceleration, RL training capabilities, and SFT functionalities. The tool introduces TraceRL for trajectory-aware RL and diffusion-based value models for optimization stability. Users can download and try models like TraDo-4B-Instruct and TraDo-8B-Instruct. The tool also provides support for multi-node setups and easy building of reinforcement learning methods. Additionally, it offers supervised fine-tuning strategies for different models and tasks.

infinity

Infinity is an AI-native database designed for LLM applications, providing incredibly fast full-text and vector search capabilities. It supports a wide range of data types, including vectors, full-text, and structured data, and offers a fused search feature that combines multiple embeddings and full text. Infinity is easy to use, with an intuitive Python API and a single-binary architecture that simplifies deployment. It achieves high performance, with 0.1 milliseconds query latency on million-scale vector datasets and up to 15K QPS.

cognee

Cognee is an open-source framework designed for creating self-improving deterministic outputs for Large Language Models (LLMs) using graphs, LLMs, and vector retrieval. It provides a platform for AI engineers to enhance their models and generate more accurate results. Users can leverage Cognee to add new information, utilize LLMs for knowledge creation, and query the system for relevant knowledge. The tool supports various LLM providers and offers flexibility in adding different data types, such as text files or directories. Cognee aims to streamline the process of working with LLMs and improving AI models for better performance and efficiency.

slideflow

Slideflow is a deep learning library for digital pathology, offering a user-friendly interface for model development. It is designed for medical researchers and AI enthusiasts, providing an accessible platform for developing state-of-the-art pathology models. Slideflow offers customizable training pipelines, robust slide processing and stain normalization toolkit, support for weakly-supervised or strongly-supervised labels, built-in foundation models, multiple-instance learning, self-supervised learning, generative adversarial networks, explainability tools, layer activation analysis tools, uncertainty quantification, interactive user interface for model deployment, and more. It supports both PyTorch and Tensorflow, with optional support for Libvips for slide reading. Slideflow can be installed via pip, Docker container, or from source, and includes non-commercial add-ons for additional tools and pretrained models. It allows users to create projects, extract tiles from slides, train models, and provides evaluation tools like heatmaps and mosaic maps.

AIQC

AIQC is an open source Python package that provides a declarative API for end-to-end MLOps in order to make deep learning more accessible to researchers. It utilizes a SQLite object-relational model for machine learning objects and stacks standardized workflows for various analyses, data types, and libraries. The benefits include a 90% reduction in data wrangling, reproducibility, and no need to install and maintain application and database servers for experiment tracking. AIQC is pip-installable and provides a Dash-Plotly UI for real-time experiment tracking.

MInference

MInference is a tool designed to accelerate pre-filling for long-context Language Models (LLMs) by leveraging dynamic sparse attention. It achieves up to a 10x speedup for pre-filling on an A100 while maintaining accuracy. The tool supports various decoding LLMs, including LLaMA-style models and Phi models, and provides custom kernels for attention computation. MInference is useful for researchers and developers working with large-scale language models who aim to improve efficiency without compromising accuracy.

sdialog

SDialog is an MIT-licensed open-source toolkit for building, simulating, and evaluating LLM-based conversational agents end-to-end. It aims to bridge agent construction, user simulation, dialog generation, and evaluation in a single reproducible workflow, enabling the generation of reliable, controllable dialog systems or data at scale. The toolkit standardizes a Dialog schema, offers persona-driven multi-agent simulation with LLMs, provides composable orchestration for precise control over behavior and flow, includes built-in evaluation metrics, and offers mechanistic interpretability. It allows for easy creation of user-defined components and interoperability across various AI platforms.

SeerAttention

SeerAttention is a novel trainable sparse attention mechanism that learns intrinsic sparsity patterns directly from LLMs through self-distillation at post-training time. It achieves faster inference while maintaining accuracy for long-context prefilling. The tool offers features such as trainable sparse attention, block-level sparsity, self-distillation, efficient kernel, and easy integration with existing transformer architectures. Users can quickly start using SeerAttention for inference with AttnGate Adapter and training attention gates with self-distillation. The tool provides efficient evaluation methods and encourages contributions from the community.

mmore

MMORE is an open-source, end-to-end pipeline for ingesting, processing, indexing, and retrieving knowledge from various file types such as PDFs, Office docs, images, audio, video, and web pages. It standardizes content into a unified multimodal format, supports distributed CPU/GPU processing, and offers hybrid dense+sparse retrieval with an integrated RAG service through CLI and APIs.

catalyst

Catalyst is a C# Natural Language Processing library designed for speed, inspired by spaCy's design. It provides pre-trained models, support for training word and document embeddings, and flexible entity recognition models. The library is fast, modern, and pure-C#, supporting .NET standard 2.0. It is cross-platform, running on Windows, Linux, macOS, and ARM. Catalyst offers non-destructive tokenization, named entity recognition, part-of-speech tagging, language detection, and efficient binary serialization. It includes pre-built models for language packages and lemmatization. Users can store and load models using streams. Getting started with Catalyst involves installing its NuGet Package and setting the storage to use the online repository. The library supports lazy loading of models from disk or online. Users can take advantage of C# lazy evaluation and native multi-threading support to process documents in parallel. Training a new FastText word2vec embedding model is straightforward, and Catalyst also provides algorithms for fast embedding search and dimensionality reduction.

pytorch-forecasting

PyTorch Forecasting is a PyTorch-based package designed for state-of-the-art timeseries forecasting using deep learning architectures. It offers a high-level API and leverages PyTorch Lightning for efficient training on GPU or CPU with automatic logging. The package aims to simplify timeseries forecasting tasks by providing a flexible API for professionals and user-friendly defaults for beginners. It includes features such as a timeseries dataset class for handling data transformations, missing values, and subsampling, various neural network architectures optimized for real-world deployment, multi-horizon timeseries metrics, and hyperparameter tuning with optuna. Built on pytorch-lightning, it supports training on CPUs, single GPUs, and multiple GPUs out-of-the-box.

MineStudio

MineStudio is a simple and efficient Minecraft development kit for AI research. It contains tools and APIs for developing Minecraft AI agents, including a customizable simulator, trajectory data structure, policy models, offline and online training pipelines, inference framework, and benchmarking automation. The repository is under development and welcomes contributions and suggestions.

plexe

Plexe is a tool that allows users to create machine learning models by describing them in plain language. Users can explain their requirements, provide a dataset, and the AI-powered system will build a fully functional model through an automated agentic approach. It supports multiple AI agents and model building frameworks like XGBoost, CatBoost, and Keras. Plexe also provides Docker images with pre-configured environments, YAML configuration for customization, and support for multiple LiteLLM providers. Users can visualize experiment results using the built-in Streamlit dashboard and extend Plexe's functionality through custom integrations.

AIOS

AIOS, a Large Language Model (LLM) Agent operating system, embeds large language model into Operating Systems (OS) as the brain of the OS, enabling an operating system "with soul" -- an important step towards AGI. AIOS is designed to optimize resource allocation, facilitate context switch across agents, enable concurrent execution of agents, provide tool service for agents, maintain access control for agents, and provide a rich set of toolkits for LLM Agent developers.

For similar tasks

superagent

Superagent is an open-source AI assistant framework and API that allows developers to add powerful AI assistants to their applications. These assistants use large language models (LLMs), retrieval augmented generation (RAG), and generative AI to help users with a variety of tasks, including question answering, chatbot development, content generation, data aggregation, and workflow automation. Superagent is backed by Y Combinator and is part of YC W24.

Awesome-Segment-Anything

Awesome-Segment-Anything is a powerful tool for segmenting and extracting information from various types of data. It provides a user-friendly interface to easily define segmentation rules and apply them to text, images, and other data formats. The tool supports both supervised and unsupervised segmentation methods, allowing users to customize the segmentation process based on their specific needs. With its versatile functionality and intuitive design, Awesome-Segment-Anything is ideal for data analysts, researchers, content creators, and anyone looking to efficiently extract valuable insights from complex datasets.

simpletransformers

Simple Transformers is a library based on the Transformers library by HuggingFace, allowing users to quickly train and evaluate Transformer models with only 3 lines of code. It supports various tasks such as Information Retrieval, Language Models, Encoder Model Training, Sequence Classification, Token Classification, Question Answering, Language Generation, T5 Model, Seq2Seq Tasks, Multi-Modal Classification, and Conversational AI.

smile

Smile (Statistical Machine Intelligence and Learning Engine) is a comprehensive machine learning, NLP, linear algebra, graph, interpolation, and visualization system in Java and Scala. It covers every aspect of machine learning, including classification, regression, clustering, association rule mining, feature selection, manifold learning, multidimensional scaling, genetic algorithms, missing value imputation, efficient nearest neighbor search, etc. Smile implements major machine learning algorithms and provides interactive shells for Java, Scala, and Kotlin. It supports model serialization, data visualization using SmilePlot and declarative approach, and offers a gallery showcasing various algorithms and visualizations.

pgai

pgai simplifies the process of building search and Retrieval Augmented Generation (RAG) AI applications with PostgreSQL. It brings embedding and generation AI models closer to the database, allowing users to create embeddings, retrieve LLM chat completions, reason over data for classification, summarization, and data enrichment directly from within PostgreSQL in a SQL query. The tool requires an OpenAI API key and a PostgreSQL client to enable AI functionality in the database. Users can install pgai from source, run it in a pre-built Docker container, or enable it in a Timescale Cloud service. The tool provides functions to handle API keys using psql or Python, and offers various AI functionalities like tokenizing, detokenizing, embedding, chat completion, and content moderation.

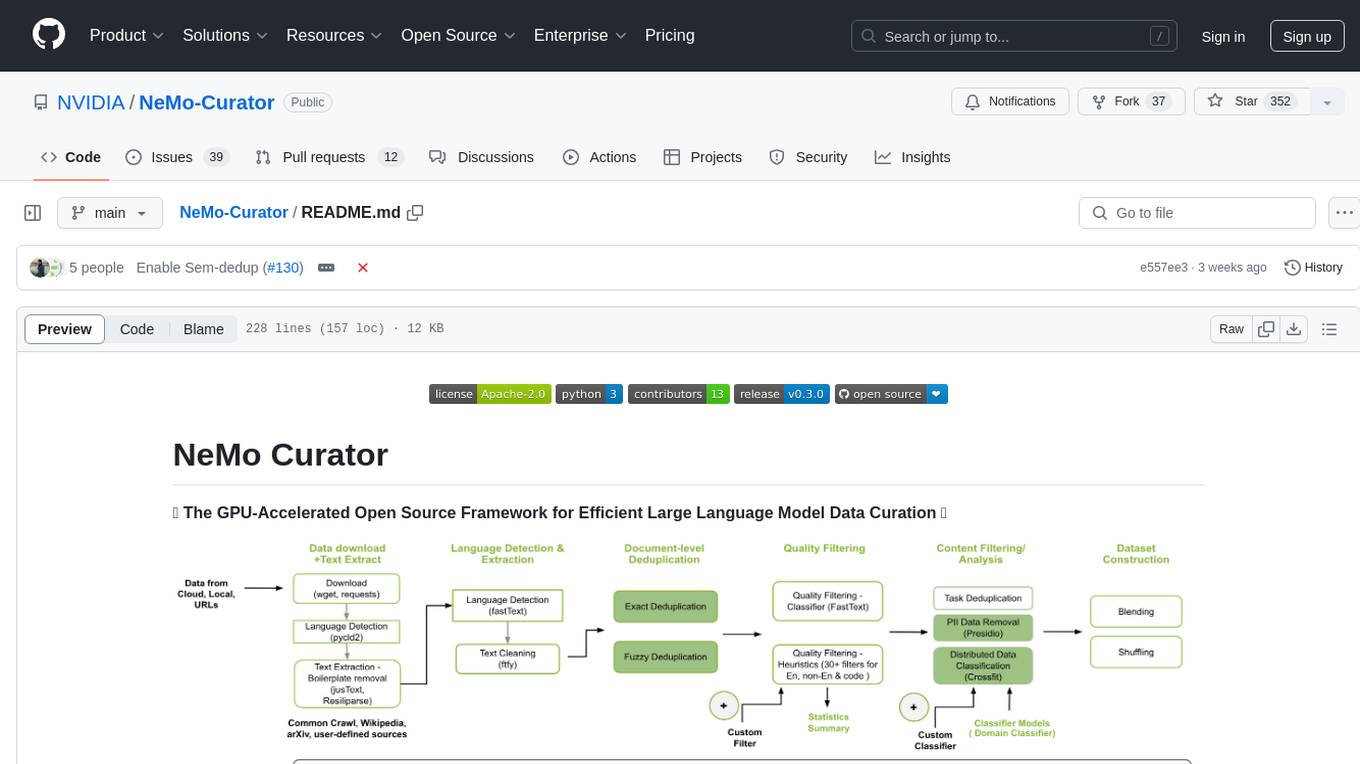

NeMo-Curator

NeMo Curator is a GPU-accelerated open-source framework designed for efficient large language model data curation. It provides scalable dataset preparation for tasks like foundation model pretraining, domain-adaptive pretraining, supervised fine-tuning, and parameter-efficient fine-tuning. The library leverages GPUs with Dask and RAPIDS to accelerate data curation, offering customizable and modular interfaces for pipeline expansion and model convergence. Key features include data download, text extraction, quality filtering, deduplication, downstream-task decontamination, distributed data classification, and PII redaction. NeMo Curator is suitable for curating high-quality datasets for large language model training.

bootcamp_machine-learning

Bootcamp Machine Learning is a one-week program designed by 42 AI to teach the basics of Machine Learning. The curriculum covers topics such as linear algebra, statistics, regression, classification, and regularization. Participants will learn concepts like gradient descent, hypothesis modeling, overfitting detection, logistic regression, and more. The bootcamp is ideal for individuals with prior knowledge of Python who are interested in diving into the field of artificial intelligence.

geoai

geoai is a Python package designed for utilizing Artificial Intelligence (AI) in the context of geospatial data. It allows users to visualize various types of geospatial data such as vector, raster, and LiDAR data. Additionally, the package offers functionalities for segmenting remote sensing imagery using the Segment Anything Model and classifying remote sensing imagery with deep learning models. With a focus on geospatial AI applications, geoai provides a versatile tool for processing and analyzing spatial data with the power of AI.

For similar jobs

weave

Weave is a toolkit for developing Generative AI applications, built by Weights & Biases. With Weave, you can log and debug language model inputs, outputs, and traces; build rigorous, apples-to-apples evaluations for language model use cases; and organize all the information generated across the LLM workflow, from experimentation to evaluations to production. Weave aims to bring rigor, best-practices, and composability to the inherently experimental process of developing Generative AI software, without introducing cognitive overhead.

LLMStack

LLMStack is a no-code platform for building generative AI agents, workflows, and chatbots. It allows users to connect their own data, internal tools, and GPT-powered models without any coding experience. LLMStack can be deployed to the cloud or on-premise and can be accessed via HTTP API or triggered from Slack or Discord.

VisionCraft

The VisionCraft API is a free API for using over 100 different AI models. From images to sound.

kaito

Kaito is an operator that automates the AI/ML inference model deployment in a Kubernetes cluster. It manages large model files using container images, avoids tuning deployment parameters to fit GPU hardware by providing preset configurations, auto-provisions GPU nodes based on model requirements, and hosts large model images in the public Microsoft Container Registry (MCR) if the license allows. Using Kaito, the workflow of onboarding large AI inference models in Kubernetes is largely simplified.

PyRIT

PyRIT is an open access automation framework designed to empower security professionals and ML engineers to red team foundation models and their applications. It automates AI Red Teaming tasks to allow operators to focus on more complicated and time-consuming tasks and can also identify security harms such as misuse (e.g., malware generation, jailbreaking), and privacy harms (e.g., identity theft). The goal is to allow researchers to have a baseline of how well their model and entire inference pipeline is doing against different harm categories and to be able to compare that baseline to future iterations of their model. This allows them to have empirical data on how well their model is doing today, and detect any degradation of performance based on future improvements.

tabby

Tabby is a self-hosted AI coding assistant, offering an open-source and on-premises alternative to GitHub Copilot. It boasts several key features: * Self-contained, with no need for a DBMS or cloud service. * OpenAPI interface, easy to integrate with existing infrastructure (e.g Cloud IDE). * Supports consumer-grade GPUs.

spear

SPEAR (Simulator for Photorealistic Embodied AI Research) is a powerful tool for training embodied agents. It features 300 unique virtual indoor environments with 2,566 unique rooms and 17,234 unique objects that can be manipulated individually. Each environment is designed by a professional artist and features detailed geometry, photorealistic materials, and a unique floor plan and object layout. SPEAR is implemented as Unreal Engine assets and provides an OpenAI Gym interface for interacting with the environments via Python.

Magick

Magick is a groundbreaking visual AIDE (Artificial Intelligence Development Environment) for no-code data pipelines and multimodal agents. Magick can connect to other services and comes with nodes and templates well-suited for intelligent agents, chatbots, complex reasoning systems and realistic characters.