fiftyone

Refine high-quality datasets and visual AI models

Stars: 10399

FiftyOne is an open-source tool designed for building high-quality datasets and computer vision models. It supercharges machine learning workflows by enabling users to visualize datasets, interpret models faster, and improve efficiency. With FiftyOne, users can explore scenarios, identify failure modes, visualize complex labels, evaluate models, find annotation mistakes, and much more. The tool aims to streamline the process of improving machine learning models by providing a comprehensive set of features for data analysis and model interpretation.

README:

The open-source tool for building high-quality datasets and computer vision models

Website • Docs • Try it Now • Getting Started Guides • Tutorials • Blog • Community

We created FiftyOne to supercharge your visual AI projects by enabling you to visualize datasets, analyze models, and improve data quality more efficiently than ever before 🤝

If you're looking to scale to production-grade, collaborative, cloud-native enterprise workloads, check out FiftyOne Enterprise 🚀

As simple as:

pip install fiftyone

FiftyOne supports Python 3.9 - 3.12.
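If you are unsure whether your interpreter falls in that range, a quick check along these lines can help before installing. This is a minimal sketch: the helper name `is_supported` and the two constants are hypothetical and simply encode the 3.9 - 3.12 range stated above.

```python
import sys

# Supported range as stated above: Python 3.9 through 3.12 (inclusive).
# These constants and the helper name are illustrative, not part of FiftyOne.
MIN_SUPPORTED = (3, 9)
MAX_SUPPORTED = (3, 12)


def is_supported(version=None):
    """Return True if the (major, minor) version is inside the supported range."""
    major, minor = (version or sys.version_info)[:2]
    return MIN_SUPPORTED <= (major, minor) <= MAX_SUPPORTED


if not is_supported():
    current = f"{sys.version_info.major}.{sys.version_info.minor}"
    print(f"Python {current} is outside the supported 3.9 - 3.12 range")
```

Passing an explicit tuple, for example `is_supported((3, 8))`, lets you test a version other than the one currently running.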

For most users, we recommend installing the latest release version of FiftyOne via pip as shown above.

If you want to contribute to FiftyOne or install the latest development version, then you can also perform a source install.

See the prerequisites section for system-specific setup information.

We strongly recommend that you install FiftyOne in a virtual environment to maintain a clean workspace.

Consult the installation guide for troubleshooting and other information about getting up-and-running with FiftyOne.

Install from source

Follow the instructions below to install FiftyOne from source and build the App.

You'll need the following tools installed:

- Python (3.9 - 3.12)

- Node.js - on Linux, we recommend using nvm to install an up-to-date version.

- Yarn - once Node.js is installed, you can enable Yarn via corepack enable

We strongly recommend that you install FiftyOne in a virtual environment to maintain a clean workspace.

If you are working in Google Colab, skip to here.

First, clone the repository:

git clone https://github.com/voxel51/fiftyone

cd fiftyone

Then run the install script:

# Mac or Linux

bash install.sh

# Windows

.\install.bat

If you run into issues importing FiftyOne, you may need to add the path to the cloned repository to your PYTHONPATH:

export PYTHONPATH=$PYTHONPATH:/path/to/fiftyone

Note that the install script adds to your nvm settings in your ~/.bashrc or ~/.bash_profile, which is needed for installing and building the App.
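If you cannot change PYTHONPATH in your shell, the same effect can be approximated for a single Python process by adjusting sys.path before importing FiftyOne. The sketch below assumes a hypothetical helper named `ensure_on_path`; `/path/to/fiftyone` is the same placeholder used above and should be replaced with your clone's location.

```python
import os
import sys


def ensure_on_path(repo_dir):
    """Put repo_dir on sys.path for this process if it is not already there.

    Hypothetical helper for illustration. Returns True if the path was
    added and False if it was already present.
    """
    repo_dir = os.path.abspath(repo_dir)
    already = any(os.path.abspath(p) == repo_dir for p in sys.path if p)
    if not already:
        sys.path.insert(0, repo_dir)
    return not already


# Placeholder path from the text above; point this at your cloned repository.
ensure_on_path("/path/to/fiftyone")
```

Unlike the export command, this change lasts only for the current process, so it has to run before `import fiftyone`.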

To upgrade an existing source installation to the bleeding edge, simply pull the latest develop branch and rerun the install script:

git checkout develop

git pull

# Mac or Linux

bash install.sh

# Windows

.\install.bat

When you pull in new changes to the App, you will need to rebuild it, which you can do either by rerunning the install script or just running yarn build in the ./app directory.

If you would like to contribute to FiftyOne, you should perform a developer installation using the -d flag of the install script:

# Mac or Linux

bash install.sh -d

# Windows

.\install.bat -d

Although not required, developers typically prefer to configure their FiftyOne installation to connect to a self-installed and managed instance of MongoDB, which you can do by following these simple steps.

You can install from source in Google Colab by running the following in a cell and then restarting the runtime:

%%shell

git clone --depth 1 https://github.com/voxel51/fiftyone.git

cd fiftyone

# Mac or Linux

bash install.sh

# Windows

.\install.bat

See the docs guide for information on building and contributing to the documentation.

You can uninstall FiftyOne as follows:

pip uninstall fiftyone fiftyone-brain fiftyone-db

Prerequisites for beginners

Follow the instructions for your operating system or environment to perform basic system setup before installing FiftyOne.

If you're an experienced developer, you've likely already done this.

Linux

These steps work on a clean install of Ubuntu Desktop 24.04, and should also work on Ubuntu 24.04 and 22.04, and on Ubuntu Server:

sudo apt-get update

sudo apt-get upgrade

sudo apt-get install python3-venv python3-dev build-essential git-all libgl1-mesa-dev

- On Linux, you will need at least the openssl and libcurl packages

- On Debian-based distributions, you will need to install libcurl4 or libcurl3 instead of libcurl, depending on the age of your distribution

# Ubuntu

sudo apt install libcurl4 openssl

# Fedora

sudo dnf install libcurl openssl

python3 -m venv fiftyone_env

source fiftyone_env/bin/activate

If you plan to work with video datasets, you'll need to install FFmpeg:

sudo apt-get install ffmpeg

MacOS

xcode-select --install

/bin/bash -c "$(curl -fsSL https://raw.githubusercontent.com/Homebrew/install/HEAD/install.sh)"

After running the above command, follow the instructions in your terminal to complete the Homebrew installation.

brew install [email protected]

brew install protobuf

python3 -m venv fiftyone_env

source fiftyone_env/bin/activate

If you plan to work with video datasets, you'll need to install FFmpeg:

brew install ffmpeg

Windows

Download a Python 3.9 - 3.12 installer from python.org. Make sure to pick a 64-bit version. For example, this Python 3.10.11 installer.

Double-click on the installer to run it, and follow the steps in the installer.

- Check the box to add Python to your PATH

- At the end of the installer, there is an option to disable the PATH length limit. It is recommended to click this

Download Microsoft Visual C++ Redistributable. Double-click on the installer to run it, and follow the steps in the installer.

Download Git from this link. Double-click on the installer to run it, and follow the steps in the installer.

- Press Win + R, type cmd, and press Enter. Alternatively, search for Command Prompt in the Start Menu.

- Navigate to your project:

cd C:\path\to\your\project

- Create the environment:

python -m venv fiftyone_env

- Activate the environment by typing this in the command line window:

fiftyone_env\Scripts\activate

- After activation, your command prompt should change to show the name of the virtual environment:

(fiftyone_env) C:\path\to\your\project
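On any platform, you can also confirm from Python itself that the environment is active. The sketch below relies on the standard-library behavior that sys.prefix differs from sys.base_prefix inside an environment created with python -m venv; the helper name `in_virtualenv` is hypothetical, and other environment managers (for example conda) are not detected by this check.

```python
import sys


def in_virtualenv():
    """Return True when the interpreter runs inside a venv-style environment."""
    # Inside a venv, sys.prefix points at the environment directory while
    # sys.base_prefix still points at the base Python installation.
    return sys.prefix != sys.base_prefix


print("virtual environment active:", in_virtualenv())
```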

If you plan to work with video datasets, you'll need to install FFmpeg.

Download an FFmpeg binary from here. Add FFmpeg's path (e.g., C:\ffmpeg\bin) to your PATH environment variable.
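Whichever operating system you use, a quick way to see whether FFmpeg is reachable is to look it up on PATH from Python. This is a minimal sketch using the standard library's shutil.which; the helper name `find_ffmpeg` is hypothetical, and the check only confirms that an executable named ffmpeg can be found, not that FiftyOne can use it.

```python
import shutil


def find_ffmpeg():
    """Return the full path of the ffmpeg executable on PATH, or None."""
    return shutil.which("ffmpeg")


path = find_ffmpeg()
print(f"ffmpeg found at {path}" if path else "ffmpeg is not on PATH")
```

If the lookup fails right after editing PATH on Windows, open a new command prompt so the updated variable is picked up.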

Docker

Refer to these instructions to see how to build and run Docker images containing release or source builds of FiftyOne.

Dive right into FiftyOne by opening a Python shell and running the snippet below, which downloads a small dataset and launches the FiftyOne App so you can explore it:

import fiftyone as fo

import fiftyone.zoo as foz

dataset = foz.load_zoo_dataset("quickstart")

session = fo.launch_app(dataset)

Then check out this Colab notebook to see some common workflows on the quickstart dataset.

Note that if you are running the above code in a script, you must include session.wait() to block execution until you close the App. See this page for more information.
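As a rough illustration of the script case, the quickstart snippet can be wrapped in a function that ends with session.wait(). The function name `main` and the lazy imports are choices made for this sketch, not something the FiftyOne docs prescribe.

```python
def main():
    # Imported inside the function so that merely defining it does not
    # require FiftyOne to be installed (a choice made for this sketch).
    import fiftyone as fo
    import fiftyone.zoo as foz

    dataset = foz.load_zoo_dataset("quickstart")
    session = fo.launch_app(dataset)

    # Block here until the App is closed; without this call the script
    # would reach its end and exit right after launching the App.
    session.wait()
```

In a real script you would call `main()` at the bottom of the file, typically under the usual `if __name__ == "__main__":` guard.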

- Visualize Complex Datasets: Easily explore images, videos, and associated labels in a powerful visual interface.

https://github.com/user-attachments/assets/9dc2db88-967d-43fa-bda0-85e4d5ab6a7a

- Explore Embeddings: Select points of interest and view the corresponding samples/labels.

https://github.com/user-attachments/assets/246faeb7-dcab-4e01-9357-e50f6b106da7

- Analyze and Improve Models: Evaluate model performance, identify failure modes, and fine-tune your models.

https://github.com/user-attachments/assets/8c32d6c4-51e7-4fea-9a3c-2ffd9690f5d6

- Advanced Data Curation: Quickly find and fix data issues, annotation errors, and edge cases.

https://github.com/user-attachments/assets/24fa1960-c2dd-46ae-ae5f-d58b3b84cfe4

- Rich Integrations: Works with popular deep learning libraries like PyTorch, Hugging Face, Ultralytics, and more.

https://github.com/user-attachments/assets/de5f25e1-a967-4362-9e04-616449e745e5

- Open and Extensible: Customize and extend FiftyOne to fit your specific needs.

https://github.com/user-attachments/assets/c7ed496d-0cf7-45d6-9853-e349f1abd6f8
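To make the "Analyze and Improve Models" item above more concrete, here is a hedged sketch of evaluating detections on the quickstart dataset. It assumes the dataset's label fields are named predictions and ground_truth; the function name `evaluate_quickstart`, the 0.75 confidence threshold, and the eval key are illustrative choices, not recommendations.

```python
def evaluate_quickstart(eval_key="eval"):
    # Imported lazily so the function can be defined without FiftyOne
    # installed (a choice made for this sketch).
    import fiftyone.zoo as foz
    from fiftyone import ViewField as F

    dataset = foz.load_zoo_dataset("quickstart")

    # Keep only predictions above an illustrative confidence threshold,
    # while retaining samples that end up with no matching predictions.
    view = dataset.filter_labels(
        "predictions", F("confidence") > 0.75, only_matches=False
    )

    # Match predictions against ground truth and print per-class metrics.
    results = view.evaluate_detections(
        "predictions", gt_field="ground_truth", eval_key=eval_key
    )
    results.print_report()

    # Per-sample false-positive counts are stored under "<eval_key>_fp";
    # sorting by them puts likely failure modes first.
    return view.sort_by(f"{eval_key}_fp", reverse=True)
```

The returned view can be passed to `fo.launch_app(...)` to browse the samples with the most false positives in the App.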

Check out these resources to get up and running with FiftyOne:

| Getting Started Guides | Tutorials | Recipes | User Guide | Examples | API Reference | CLI Reference |
|---|---|---|---|---|---|---|

Full documentation is available at fiftyone.ai.

Want to securely collaborate on billions of samples in the cloud and connect to your compute resources to automate your workflows? Check out FiftyOne Enterprise.

Refer to our common issues page to troubleshoot installation issues. If you're still stuck, check our frequently asked questions page for more answers.

If you encounter an issue that the above resources don't help you resolve, feel free to open an issue on GitHub or contact us on Discord.

Connect with us through your preferred channels:

🎊 Share how FiftyOne makes your visual AI projects a reality on social media and tag us with @Voxel51 and #FiftyOne 🎊

FiftyOne and FiftyOne Brain are open source and community contributions are welcome! Check out the contribution guide to learn how to get involved.

Special thanks to these amazing people for contributing to FiftyOne!

If you use FiftyOne in your research, feel free to cite the project (but only if you love it 😊):

@article{moore2020fiftyone,

title={FiftyOne},

author={Moore, B. E. and Corso, J. J.},

journal={GitHub. Note: https://github.com/voxel51/fiftyone},

year={2020}

}

Alternative AI tools for fiftyone

Similar Open Source Tools


AI_Spectrum

AI_Spectrum is a versatile machine learning library that provides a wide range of tools and algorithms for building and deploying AI models. It offers a user-friendly interface for data preprocessing, model training, and evaluation. With AI_Spectrum, users can easily experiment with different machine learning techniques and optimize their models for various tasks. The library is designed to be flexible and scalable, making it suitable for both beginners and experienced data scientists.

osaurus

Osaurus is a versatile open-source tool designed for data scientists and machine learning engineers. It provides a wide range of functionalities for data preprocessing, feature engineering, model training, and evaluation. With Osaurus, users can easily clean and transform raw data, extract relevant features, build and tune machine learning models, and analyze model performance. The tool supports various machine learning algorithms and techniques, making it suitable for both beginners and experienced practitioners in the field. Osaurus is actively maintained and updated to incorporate the latest advancements in the machine learning domain, ensuring users have access to state-of-the-art tools and methodologies for their projects.

Automodel

Automodel is a Python library for automating the process of building and evaluating machine learning models. It provides a set of tools and utilities to streamline the model development workflow, from data preprocessing to model selection and evaluation. With Automodel, users can easily experiment with different algorithms, hyperparameters, and feature engineering techniques to find the best model for their dataset. The library is designed to be user-friendly and customizable, allowing users to define their own pipelines and workflows. Automodel is suitable for data scientists, machine learning engineers, and anyone looking to quickly build and test machine learning models without the need for manual intervention.

dranet

Dranet is a Python library for analyzing and visualizing data from neural networks. It provides tools for interpreting model predictions, understanding feature importance, and evaluating model performance. With Dranet, users can gain insights into how neural networks make decisions and improve model transparency and interpretability.

datatune

Datatune is a data analysis tool designed to help users explore and analyze datasets efficiently. It provides a user-friendly interface for importing, cleaning, visualizing, and modeling data. With Datatune, users can easily perform tasks such as data preprocessing, feature engineering, model selection, and evaluation. The tool offers a variety of statistical and machine learning algorithms to support data analysis tasks. Whether you are a data scientist, analyst, or researcher, Datatune can streamline your data analysis workflow and help you derive valuable insights from your data.

pdr_ai_v2

pdr_ai_v2 is a Python library for implementing machine learning algorithms and models. It provides a wide range of tools and functionalities for data preprocessing, model training, evaluation, and deployment. The library is designed to be user-friendly and efficient, making it suitable for both beginners and experienced data scientists. With pdr_ai_v2, users can easily build and deploy machine learning models for various applications, such as classification, regression, clustering, and more.

ml-retreat

ML-Retreat is a comprehensive machine learning library designed to simplify and streamline the process of building and deploying machine learning models. It provides a wide range of tools and utilities for data preprocessing, model training, evaluation, and deployment. With ML-Retreat, users can easily experiment with different algorithms, hyperparameters, and feature engineering techniques to optimize their models. The library is built with a focus on scalability, performance, and ease of use, making it suitable for both beginners and experienced machine learning practitioners.

neurons.me

Neurons.me is an open-source tool designed for creating and managing neural network models. It provides a user-friendly interface for building, training, and deploying deep learning models. With Neurons.me, users can easily experiment with different architectures, hyperparameters, and datasets to optimize their neural networks for various tasks. The tool simplifies the process of developing AI applications by abstracting away the complexities of model implementation and training.

God-Level-AI

A drill of scientific methods, processes, algorithms, and systems to build stories & models. An in-depth learning resource for humans. This repository is designed for individuals aiming to excel in the field of Data and AI, providing video sessions and text content for learning. It caters to those in leadership positions, professionals, and students, emphasizing the need for dedicated effort to achieve excellence in the tech field. The content covers various topics with a focus on practical application.

lemonai

LemonAI is a versatile machine learning library designed to simplify the process of building and deploying AI models. It provides a wide range of tools and algorithms for data preprocessing, model training, and evaluation. With LemonAI, users can easily experiment with different machine learning techniques and optimize their models for various tasks. The library is well-documented and beginner-friendly, making it suitable for both novice and experienced data scientists. LemonAI aims to streamline the development of AI applications and empower users to create innovative solutions using state-of-the-art machine learning methods.

phoenix

Phoenix is a tool that provides MLOps and LLMOps insights at lightning speed with zero-config observability. It offers a notebook-first experience for monitoring models and LLM Applications by providing LLM Traces, LLM Evals, Embedding Analysis, RAG Analysis, and Structured Data Analysis. Users can trace through the execution of LLM Applications, evaluate generative models, explore embedding point-clouds, visualize generative application's search and retrieval process, and statistically analyze structured data. Phoenix is designed to help users troubleshoot problems related to retrieval, tool execution, relevance, toxicity, drift, and performance degradation.

AReaL

AReaL (Ant Reasoning RL) is an open-source reinforcement learning system developed at the RL Lab, Ant Research. It is designed for training Large Reasoning Models (LRMs) in a fully open and inclusive manner. AReaL provides reproducible experiments for 1.5B and 7B LRMs, showcasing its scalability and performance across diverse computational budgets. The system follows an iterative training process to enhance model performance, with a focus on mathematical reasoning tasks. AReaL is equipped to adapt to different computational resource settings, enabling users to easily configure and launch training trials. Future plans include support for advanced models, optimizations for distributed training, and exploring research topics to enhance LRMs' reasoning capabilities.

ROGRAG

ROGRAG is a powerful open-source tool designed for data analysis and visualization. It provides a user-friendly interface for exploring and manipulating datasets, making it ideal for researchers, data scientists, and analysts. With ROGRAG, users can easily import, clean, analyze, and visualize data to gain valuable insights and make informed decisions. The tool supports a wide range of data formats and offers a variety of statistical and visualization tools to help users uncover patterns, trends, and relationships in their data. Whether you are working on exploratory data analysis, statistical modeling, or data visualization, ROGRAG is a versatile tool that can streamline your workflow and enhance your data analysis capabilities.

XRAG

XRAG is a powerful open-source tool for analyzing and visualizing data. It provides a user-friendly interface for data exploration, manipulation, and interpretation. With XRAG, users can easily import, clean, and transform data to uncover insights and trends. The tool supports various data formats and offers a wide range of statistical and machine learning algorithms for advanced analysis. XRAG is suitable for data scientists, analysts, researchers, and students looking to gain valuable insights from their data.

deepteam

Deepteam is a powerful open-source tool designed for deep learning projects. It provides a user-friendly interface for training, testing, and deploying deep neural networks. With Deepteam, users can easily create and manage complex models, visualize training progress, and optimize hyperparameters. The tool supports various deep learning frameworks and allows seamless integration with popular libraries like TensorFlow and PyTorch. Whether you are a beginner or an experienced deep learning practitioner, Deepteam simplifies the development process and accelerates model deployment.

For similar tasks

labelbox-python

Labelbox is a data-centric AI platform for enterprises to develop, optimize, and use AI to solve problems and power new products and services. Enterprises use Labelbox to curate data, generate high-quality human feedback data for computer vision and LLMs, evaluate model performance, and automate tasks by combining AI and human-centric workflows. The academic & research community uses Labelbox for cutting-edge AI research.

promptfoo

Promptfoo is a tool for testing and evaluating LLM output quality. With promptfoo, you can build reliable prompts, models, and RAGs with benchmarks specific to your use-case, speed up evaluations with caching, concurrency, and live reloading, score outputs automatically by defining metrics, use as a CLI, library, or in CI/CD, and use OpenAI, Anthropic, Azure, Google, HuggingFace, open-source models like Llama, or integrate custom API providers for any LLM API.

vespa

Vespa is a platform that performs operations such as selecting a subset of data in a large corpus, evaluating machine-learned models over the selected data, organizing and aggregating it, and returning it, typically in less than 100 milliseconds, all while the data corpus is continuously changing. It has been in development for many years and is used on a number of large internet services and apps which serve hundreds of thousands of queries from Vespa per second.

python-aiplatform

The Vertex AI SDK for Python is a library that provides a convenient way to use the Vertex AI API. It offers a high-level interface for creating and managing Vertex AI resources, such as datasets, models, and endpoints. The SDK also provides support for training and deploying custom models, as well as using AutoML models. With the Vertex AI SDK for Python, you can quickly and easily build and deploy machine learning models on Vertex AI.

ScandEval

ScandEval is a framework for evaluating pretrained language models on mono- or multilingual language tasks. It provides a unified interface for benchmarking models on a variety of tasks, including sentiment analysis, question answering, and machine translation. ScandEval is designed to be easy to use and extensible, making it a valuable tool for researchers and practitioners alike.

opencompass

OpenCompass is a one-stop platform for large model evaluation, aiming to provide a fair, open, and reproducible benchmark for large model evaluation. Its main features include:

- Comprehensive support for models and datasets: Pre-support for 20+ HuggingFace and API models, a model evaluation scheme of 70+ datasets with about 400,000 questions, comprehensively evaluating the capabilities of the models in five dimensions.

- Efficient distributed evaluation: One line command to implement task division and distributed evaluation, completing the full evaluation of billion-scale models in just a few hours.

- Diversified evaluation paradigms: Support for zero-shot, few-shot, and chain-of-thought evaluations, combined with standard or dialogue-type prompt templates, to easily stimulate the maximum performance of various models.

- Modular design with high extensibility: Want to add new models or datasets, customize an advanced task division strategy, or even support a new cluster management system? Everything about OpenCompass can be easily expanded!

- Experiment management and reporting mechanism: Use config files to fully record each experiment, and support real-time reporting of results.

flower

Flower is a framework for building federated learning systems. It is designed to be customizable, extensible, framework-agnostic, and understandable. Flower can be used with any machine learning framework, for example, PyTorch, TensorFlow, Hugging Face Transformers, PyTorch Lightning, scikit-learn, JAX, TFLite, MONAI, fastai, MLX, XGBoost, Pandas for federated analytics, or even raw NumPy for users who enjoy computing gradients by hand.

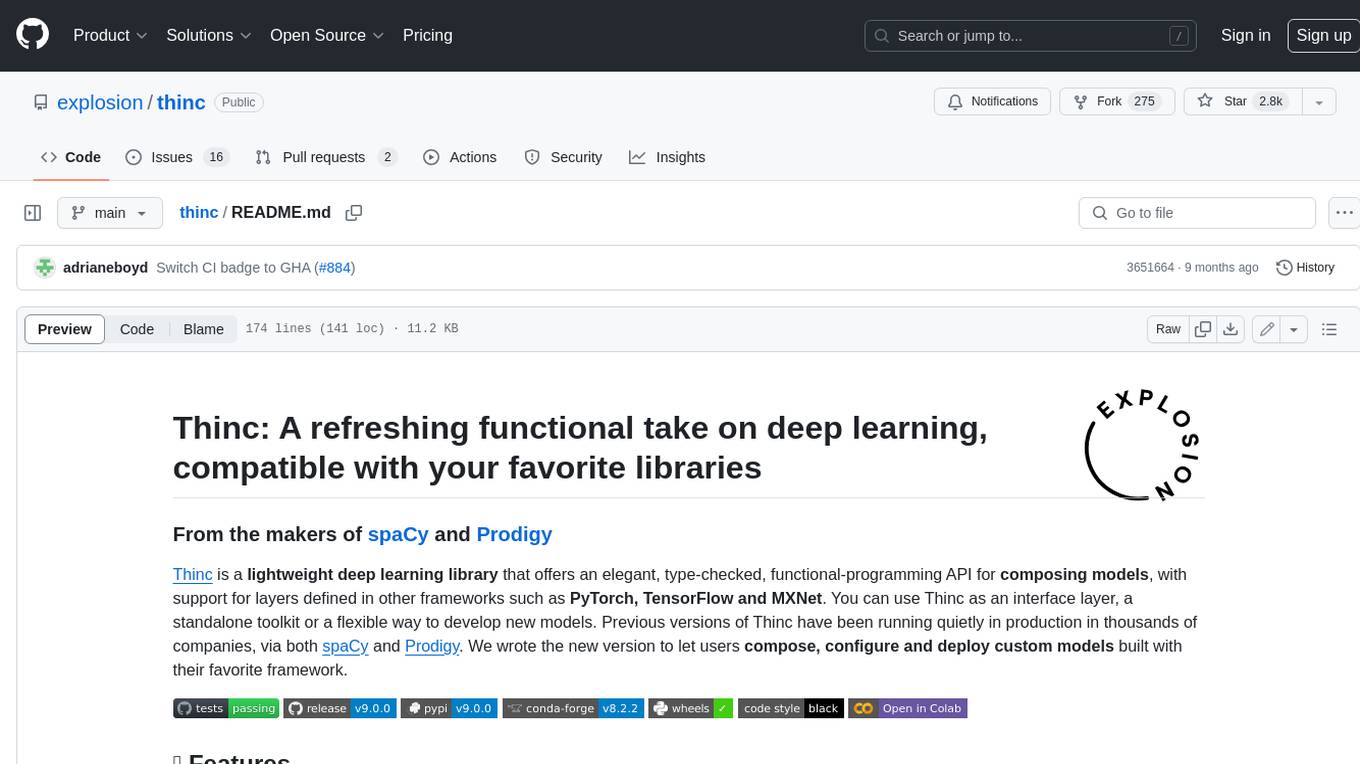

thinc

Thinc is a lightweight deep learning library that offers an elegant, type-checked, functional-programming API for composing models, with support for layers defined in other frameworks such as PyTorch, TensorFlow and MXNet. You can use Thinc as an interface layer, a standalone toolkit or a flexible way to develop new models.

For similar jobs

weave

Weave is a toolkit for developing Generative AI applications, built by Weights & Biases. With Weave, you can log and debug language model inputs, outputs, and traces; build rigorous, apples-to-apples evaluations for language model use cases; and organize all the information generated across the LLM workflow, from experimentation to evaluations to production. Weave aims to bring rigor, best-practices, and composability to the inherently experimental process of developing Generative AI software, without introducing cognitive overhead.

LLMStack

LLMStack is a no-code platform for building generative AI agents, workflows, and chatbots. It allows users to connect their own data, internal tools, and GPT-powered models without any coding experience. LLMStack can be deployed to the cloud or on-premise and can be accessed via HTTP API or triggered from Slack or Discord.

VisionCraft

The VisionCraft API is a free API for using over 100 different AI models. From images to sound.

kaito

Kaito is an operator that automates the AI/ML inference model deployment in a Kubernetes cluster. It manages large model files using container images, avoids tuning deployment parameters to fit GPU hardware by providing preset configurations, auto-provisions GPU nodes based on model requirements, and hosts large model images in the public Microsoft Container Registry (MCR) if the license allows. Using Kaito, the workflow of onboarding large AI inference models in Kubernetes is largely simplified.

PyRIT

PyRIT is an open access automation framework designed to empower security professionals and ML engineers to red team foundation models and their applications. It automates AI Red Teaming tasks to allow operators to focus on more complicated and time-consuming tasks and can also identify security harms such as misuse (e.g., malware generation, jailbreaking), and privacy harms (e.g., identity theft). The goal is to allow researchers to have a baseline of how well their model and entire inference pipeline is doing against different harm categories and to be able to compare that baseline to future iterations of their model. This allows them to have empirical data on how well their model is doing today, and detect any degradation of performance based on future improvements.

tabby

Tabby is a self-hosted AI coding assistant, offering an open-source and on-premises alternative to GitHub Copilot. It boasts several key features:

- Self-contained, with no need for a DBMS or cloud service.

- OpenAPI interface, easy to integrate with existing infrastructure (e.g., Cloud IDE).

- Supports consumer-grade GPUs.

spear

SPEAR (Simulator for Photorealistic Embodied AI Research) is a powerful tool for training embodied agents. It features 300 unique virtual indoor environments with 2,566 unique rooms and 17,234 unique objects that can be manipulated individually. Each environment is designed by a professional artist and features detailed geometry, photorealistic materials, and a unique floor plan and object layout. SPEAR is implemented as Unreal Engine assets and provides an OpenAI Gym interface for interacting with the environments via Python.

Magick

Magick is a groundbreaking visual AIDE (Artificial Intelligence Development Environment) for no-code data pipelines and multimodal agents. Magick can connect to other services and comes with nodes and templates well-suited for intelligent agents, chatbots, complex reasoning systems and realistic characters.