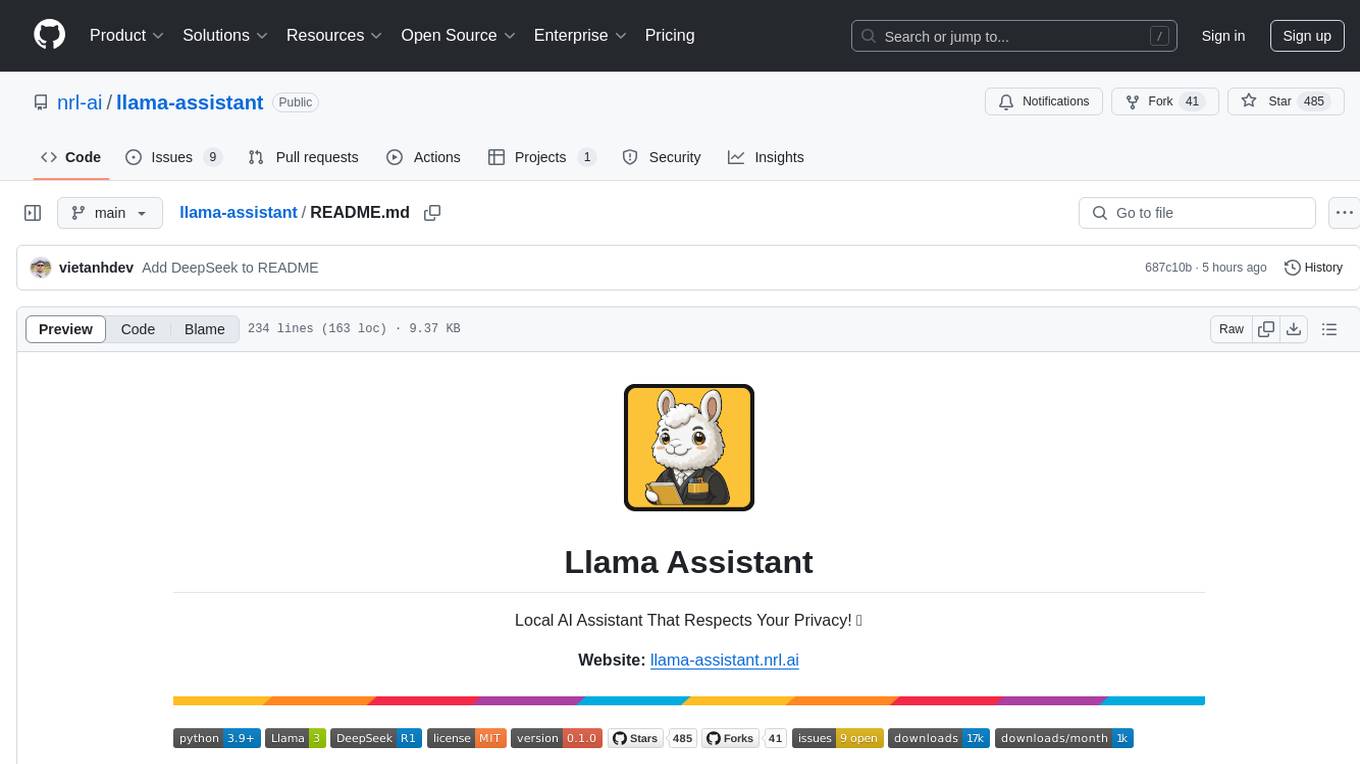

llama-assistant

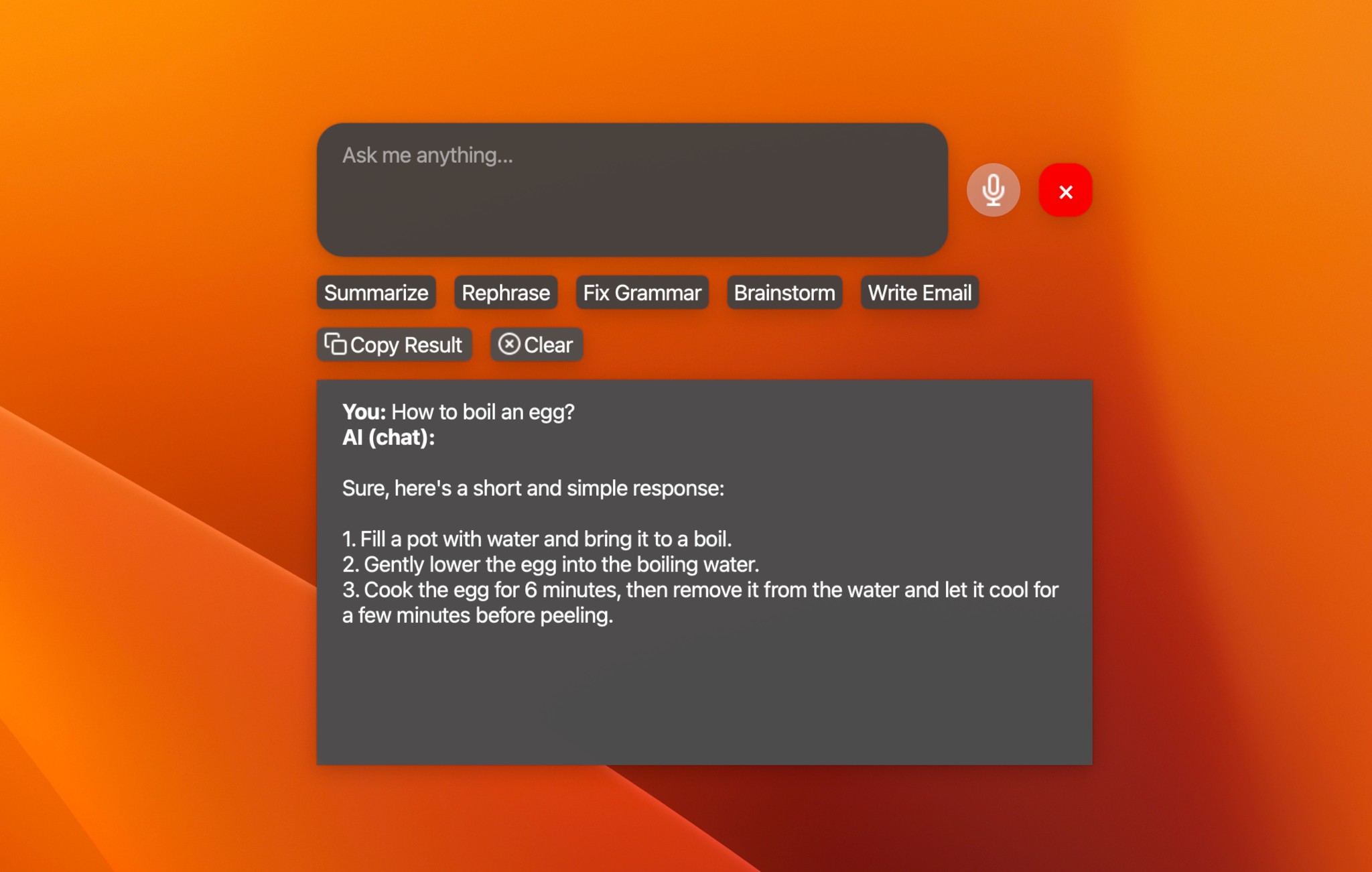

AI-powered assistant to help you with your daily tasks, powered by Llama 3.2. It can recognize your voice, process natural language, and perform various actions based on your commands: summarizing text, rephasing sentences, answering questions, writing emails, and more.

Stars: 300

Llama Assistant is an AI-powered assistant that helps with daily tasks, such as voice recognition, natural language processing, summarizing text, rephrasing sentences, answering questions, and more. It runs offline on your local machine, ensuring privacy by not sending data to external servers. The project is a work in progress with regular feature additions.

README:

Local AI Assistant That Respects Your Privacy! 🔒

Website: llama-assistant.nrl.ai

AI-powered assistant to help you with your daily tasks, powered by Llama 3.2. It can recognize your voice, process natural language, and perform various actions based on your commands: summarizing text, rephrasing sentences, answering questions, writing emails, and more.

This assistant can run offline on your local machine, and it respects your privacy by not sending any data to external servers.

https://github.com/user-attachments/assets/af2c544b-6d46-4c44-87d8-9a051ba213db

-

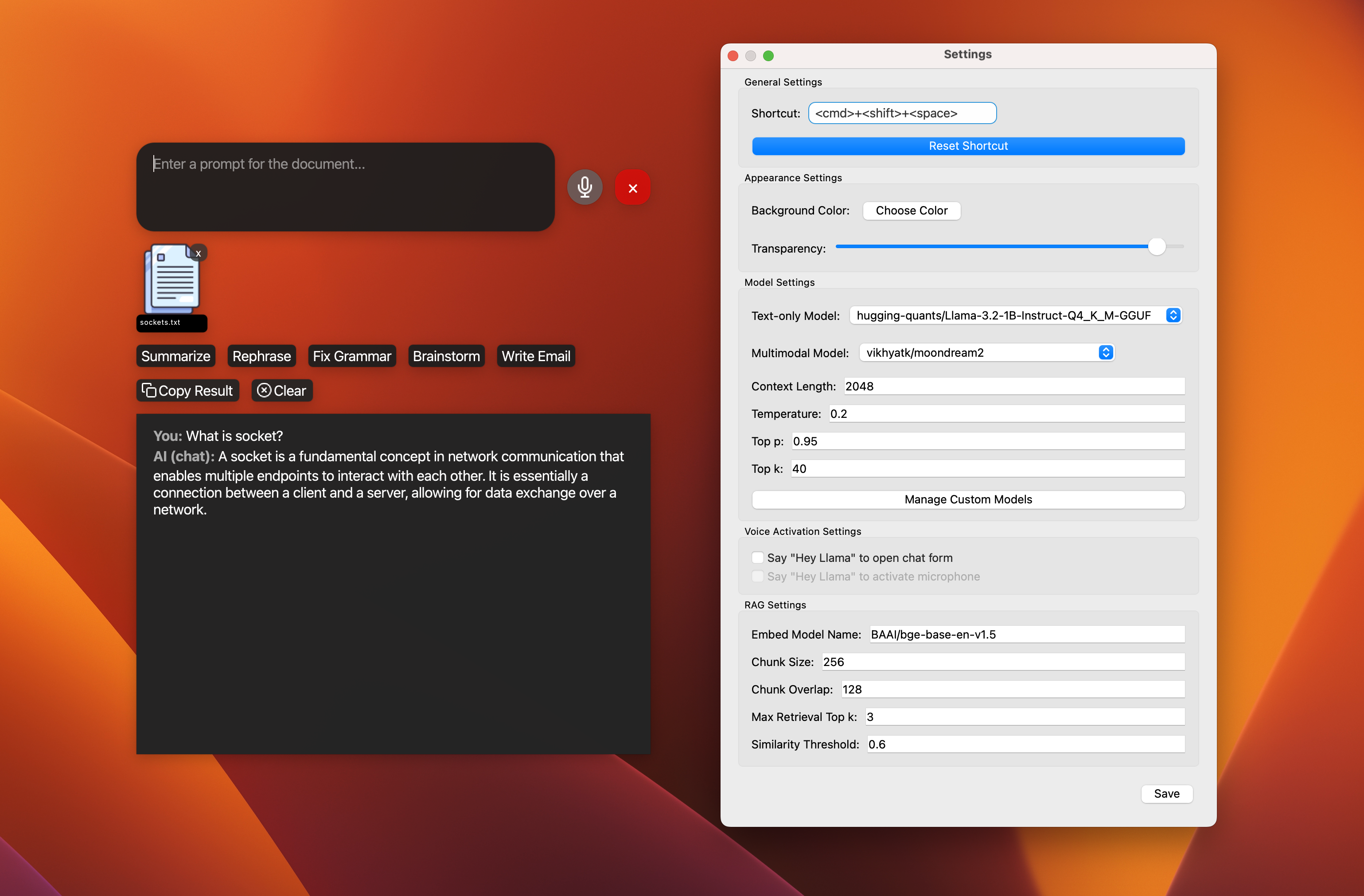

📝 Text-only models:

- Llama 3.2 - 1B, 3B (4/8-bit quantized).

- Qwen2.5-0.5B-Instruct (4-bit quantized).

- Qwen2.5-1.5B-Instruct (4-bit quantized).

- gemma-2-2b-it (4-bit quantized).

- And other models that LlamaCPP supports via custom models. See the list.

-

🖼️ Multimodal models:

- Moondream2.

- MiniCPM-v2.6.

- LLaVA 1.5/1.6.

- Besides supported models, you can try other variants via custom models.

- [x] 🖼️ Support multimodal model: moondream2.

- [x] 🗣️ Add wake word detection: "Hey Llama!".

- [x] 🛠️ Custom models: Add support for custom models.

- [x] 📚 Support 5 other text models.

- [x] 🖼️ Support 5 other multimodal models.

- [x] ⚡ Streaming support for response.

- [x] 🎙️ Add offline STT support: WhisperCPP.

- [ ] 🧠 Knowledge database: Langchain or LlamaIndex?.

- [ ] 🔌 Plugin system for extensibility.

- [ ] 📰 News and weather updates.

- [ ] 📧 Email integration with Gmail and Outlook.

- [ ] 📝 Note-taking and task management.

- [ ] 🎵 Music player and podcast integration.

- [ ] 🤖 Workflow with multiple agents.

- [ ] 🌐 Multi-language support: English, Spanish, French, German, etc.

- [ ] 📦 Package for Windows, Linux, and macOS.

- [ ] 🔄 Automated tests and CI/CD pipeline.

- 🎙️ Voice recognition for hands-free interaction.

- 💬 Natural language processing with Llama 3.2.

- 🖼️ Image analysis capabilities (TODO).

- ⚡ Global hotkey for quick access (Cmd+Shift+Space on macOS).

- 🎨 Customizable UI with adjustable transparency.

Note: This project is a work in progress, and new features are being added regularly.

Recommended Python Version: 3.10.

Install PortAudio:

-

For Mac OS X, you can use

Homebrew_::brew install portaudioNote: if you encounter an error when running

pip installthat indicates it can't findportaudio.h, try runningpip installwith the following flags::pip install --global-option='build_ext' \ --global-option='-I/usr/local/include' \ --global-option='-L/usr/local/lib' \ pyaudio -

For Debian / Ubuntu Linux::

apt-get install portaudio19-dev python3-all-dev -

Windows may work without having to install PortAudio explicitly (it will get installed with PyAudio).

For more details, see the PyAudio installation_ page.

.. _PyAudio: https://people.csail.mit.edu/hubert/pyaudio/ .. _PortAudio: http://www.portaudio.com/ .. _PyAudio installation: https://people.csail.mit.edu/hubert/pyaudio/#downloads .. _Homebrew: http://brew.sh

On Windows: Installing the MinGW-w64 toolchain

Install from PyPI:

pip install pyaudio

pip install git+https://github.com/stlukey/whispercpp.py

pip install llama-assistantOr install from source:

- Clone the repository:

git clone https://github.com/vietanhdev/llama-assistant.git

cd llama-assistant- Install the required dependencies and install the package:

pip install pyaudio

pip install git+https://github.com/stlukey/whispercpp.py

pip install -r requirements.txt

pip install .Speed Hack for Apple Silicon (M1, M2, M3) users: 🔥🔥🔥

- Install Xcode:

# check the path of your xcode install

xcode-select -p

# xcode installed returns

# /Applications/Xcode-beta.app/Contents/Developer

# if xcode is missing then install it... it takes ages;

xcode-select --install- Build

llama-cpp-pythonwith METAL support:

pip uninstall llama-cpp-python -y

CMAKE_ARGS="-DGGML_METAL=on" pip install -U llama-cpp-python --no-cache-dir

# You should now have llama-cpp-python v0.1.62 or higher installed

# llama-cpp-python 0.1.68Run the assistant using the following command:

llama-assistant

# Or with a

python -m llama_assistant.mainUse the global hotkey (default: Cmd+Shift+Space) to quickly access the assistant from anywhere on your system.

The assistant's settings can be customized by editing the settings.json file located in your home directory: ~/llama_assistant/settings.json.

Contributions are welcome! Please feel free to submit a Pull Request.

This project is licensed under the GPLv3 License - see the LICENSE file for details.

- This project uses llama.cpp, llama-cpp-python for running large language models. The default model is Llama 3.2 by Meta AI Research.

- Speech recognition is powered by whisper.cpp and whispercpp.py.

- Viet-Anh Nguyen - vietanhdev, contact form.

- Project Link: https://github.com/vietanhdev/llama-assistant, https://llama-assistant.nrl.ai/.

For Tasks:

Click tags to check more tools for each tasksFor Jobs:

Alternative AI tools for llama-assistant

Similar Open Source Tools

llama-assistant

Llama Assistant is an AI-powered assistant that helps with daily tasks, such as voice recognition, natural language processing, summarizing text, rephrasing sentences, answering questions, and more. It runs offline on your local machine, ensuring privacy by not sending data to external servers. The project is a work in progress with regular feature additions.

llama-assistant

Llama Assistant is a local AI assistant that respects your privacy. It is an AI-powered assistant that can recognize your voice, process natural language, and perform various actions based on your commands. It can help with tasks like summarizing text, rephrasing sentences, answering questions, writing emails, and more. The assistant runs offline on your local machine, ensuring privacy by not sending data to external servers. It supports voice recognition, natural language processing, and customizable UI with adjustable transparency. The project is a work in progress with new features being added regularly.

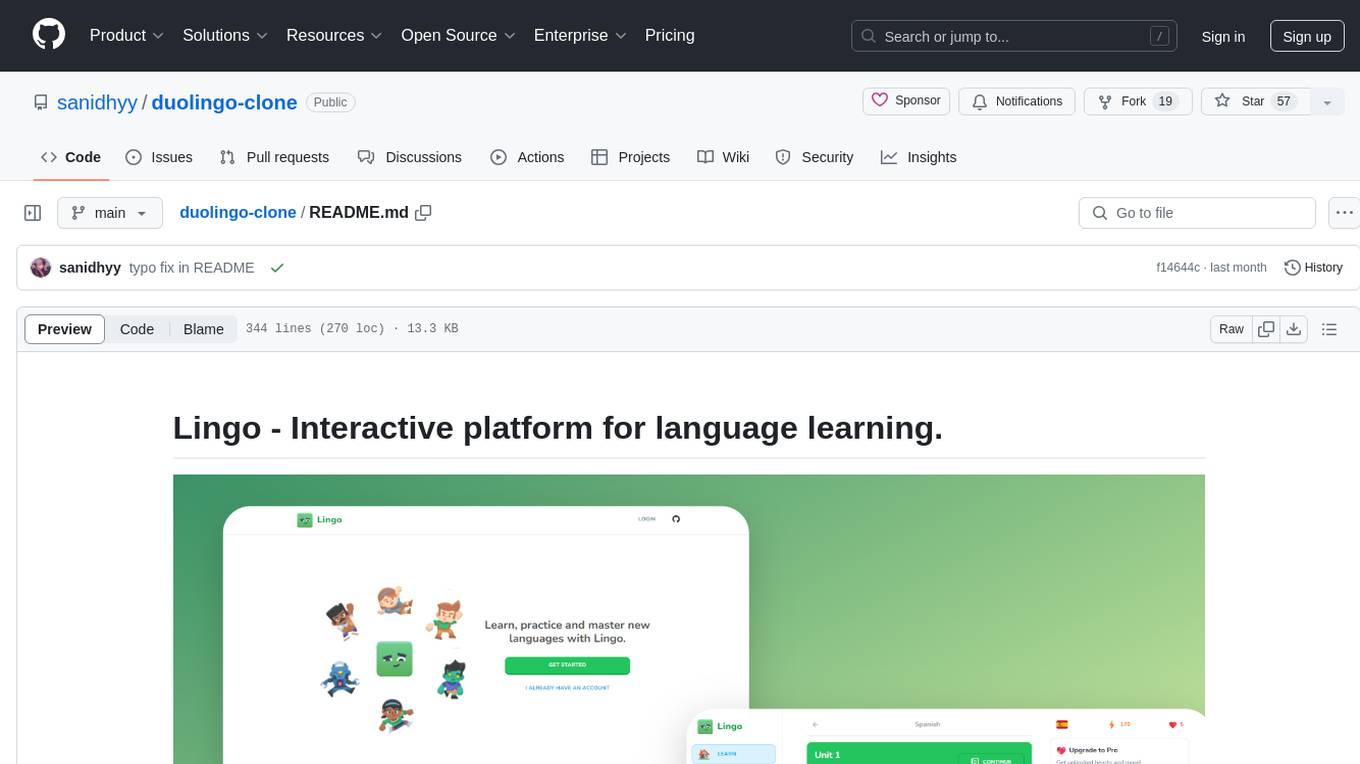

duolingo-clone

Lingo is an interactive platform for language learning that provides a modern UI/UX experience. It offers features like courses, quests, and a shop for users to engage with. The tech stack includes React JS, Next JS, Typescript, Tailwind CSS, Vercel, and Postgresql. Users can contribute to the project by submitting changes via pull requests. The platform utilizes resources from CodeWithAntonio, Kenney Assets, Freesound, Elevenlabs AI, and Flagpack. Key dependencies include @clerk/nextjs, @neondatabase/serverless, @radix-ui/react-avatar, and more. Users can follow the project creator on GitHub and Twitter, as well as subscribe to their YouTube channel for updates. To learn more about Next.js, users can refer to the Next.js documentation and interactive tutorial.

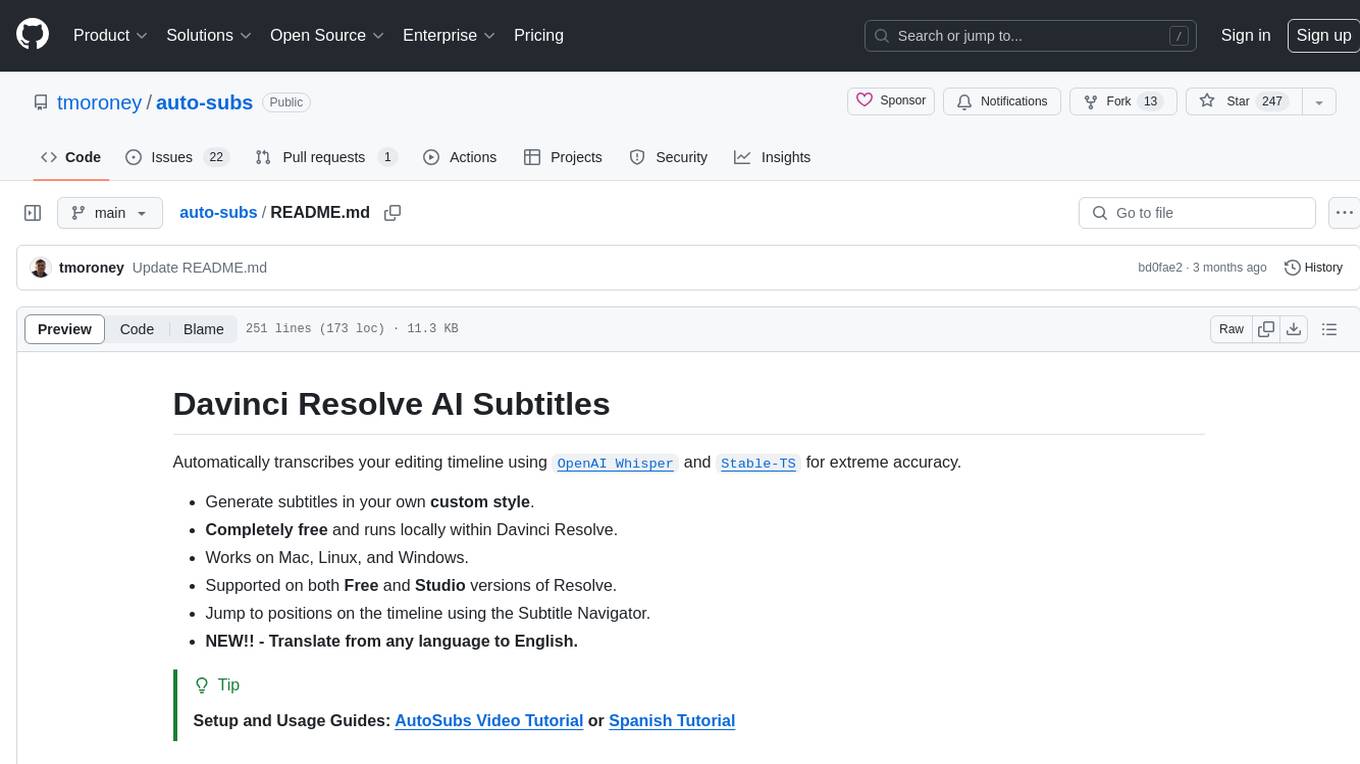

auto-subs

Auto-subs is a tool designed to automatically transcribe editing timelines using OpenAI Whisper and Stable-TS for extreme accuracy. It generates subtitles in a custom style, is completely free, and runs locally within Davinci Resolve. It works on Mac, Linux, and Windows, supporting both Free and Studio versions of Resolve. Users can jump to positions on the timeline using the Subtitle Navigator and translate from any language to English. The tool provides a user-friendly interface for creating and customizing subtitles for video content.

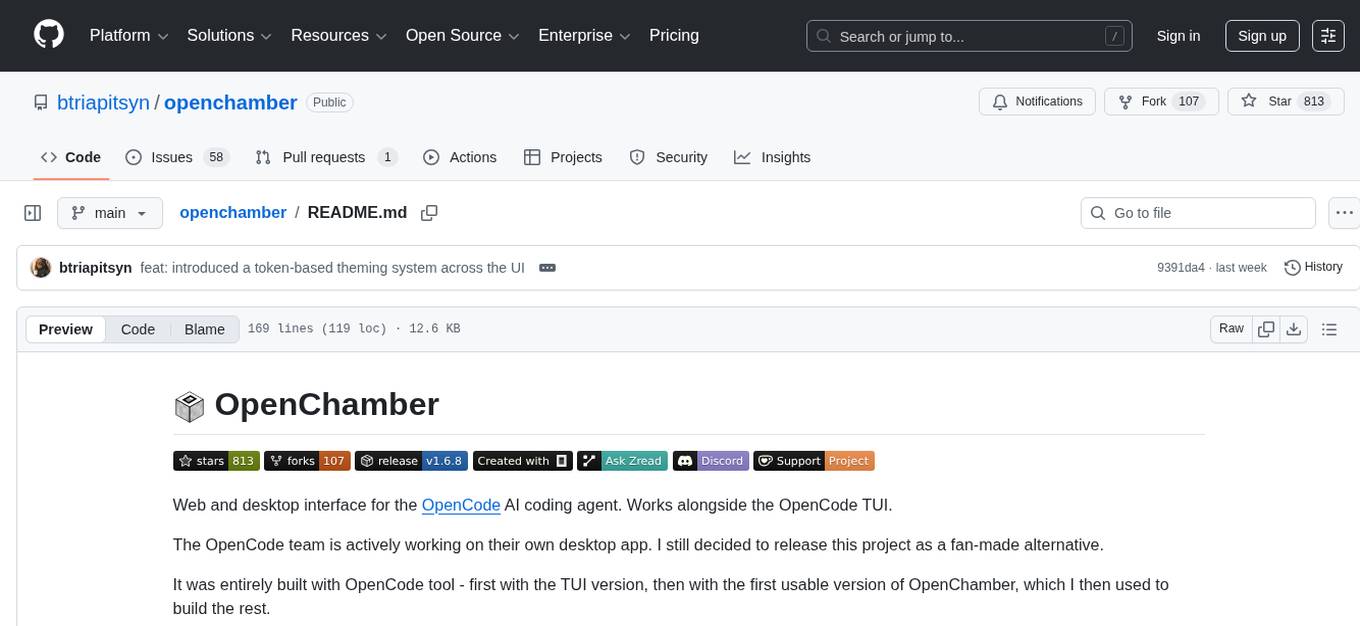

openchamber

OpenChamber is a web and desktop interface for the OpenCode AI coding agent, designed to work alongside the OpenCode TUI. The project was built entirely with AI coding agents under supervision, serving as a proof of concept that AI agents can create usable software. It offers features like integrated terminal, Git operations with AI commit message generation, smart tool visualization, permission management, multi-agent runs, task tracker UI, model selection UX, UI scaling controls, session auto-cleanup, and memory optimizations. OpenChamber provides cross-device continuity, remote access, and a visual alternative for developers preferring GUI workflows.

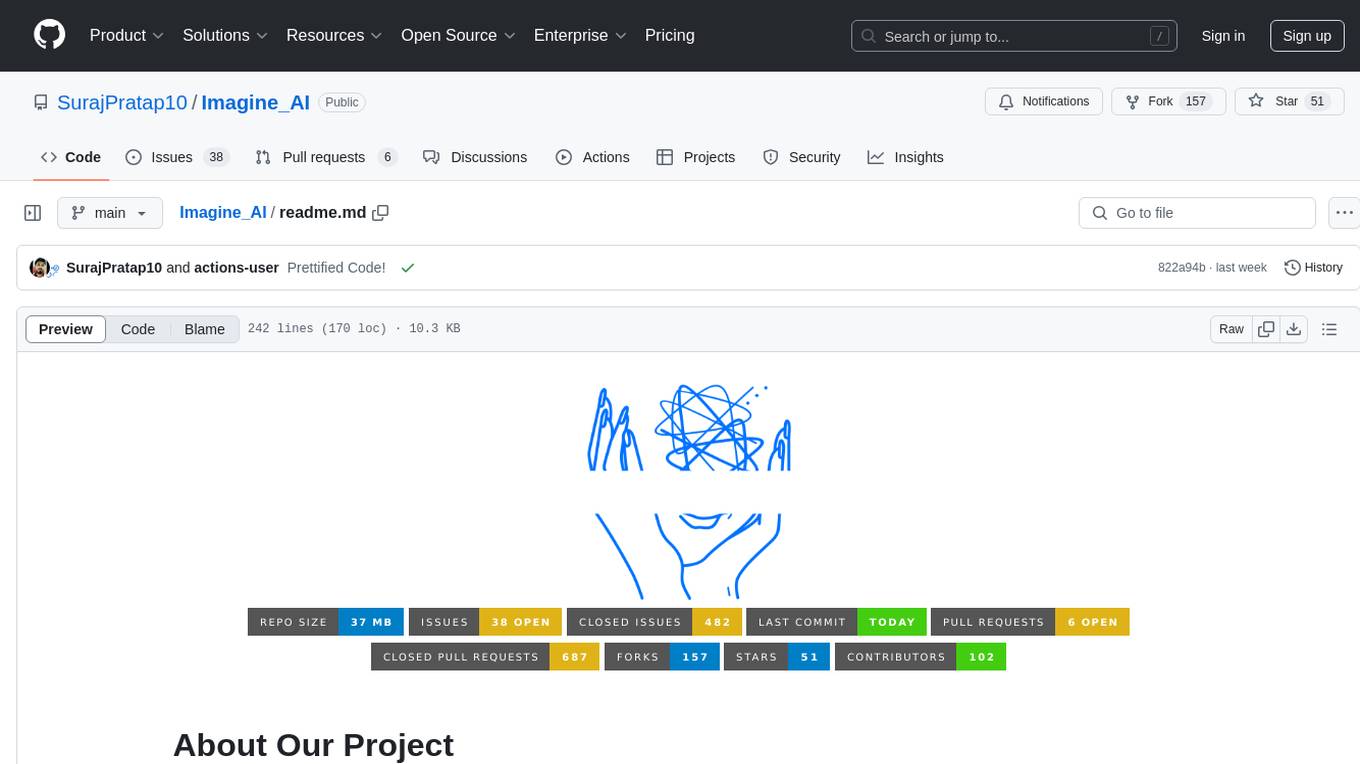

Imagine_AI

IMAGINE - AI is a groundbreaking image generator tool that leverages the power of OpenAI's DALL-E 2 API library to create extraordinary visuals. Developed using Node.js and Express, this tool offers a transformative way to unleash artistic creativity and imagination by generating unique and captivating images through simple prompts or keywords.

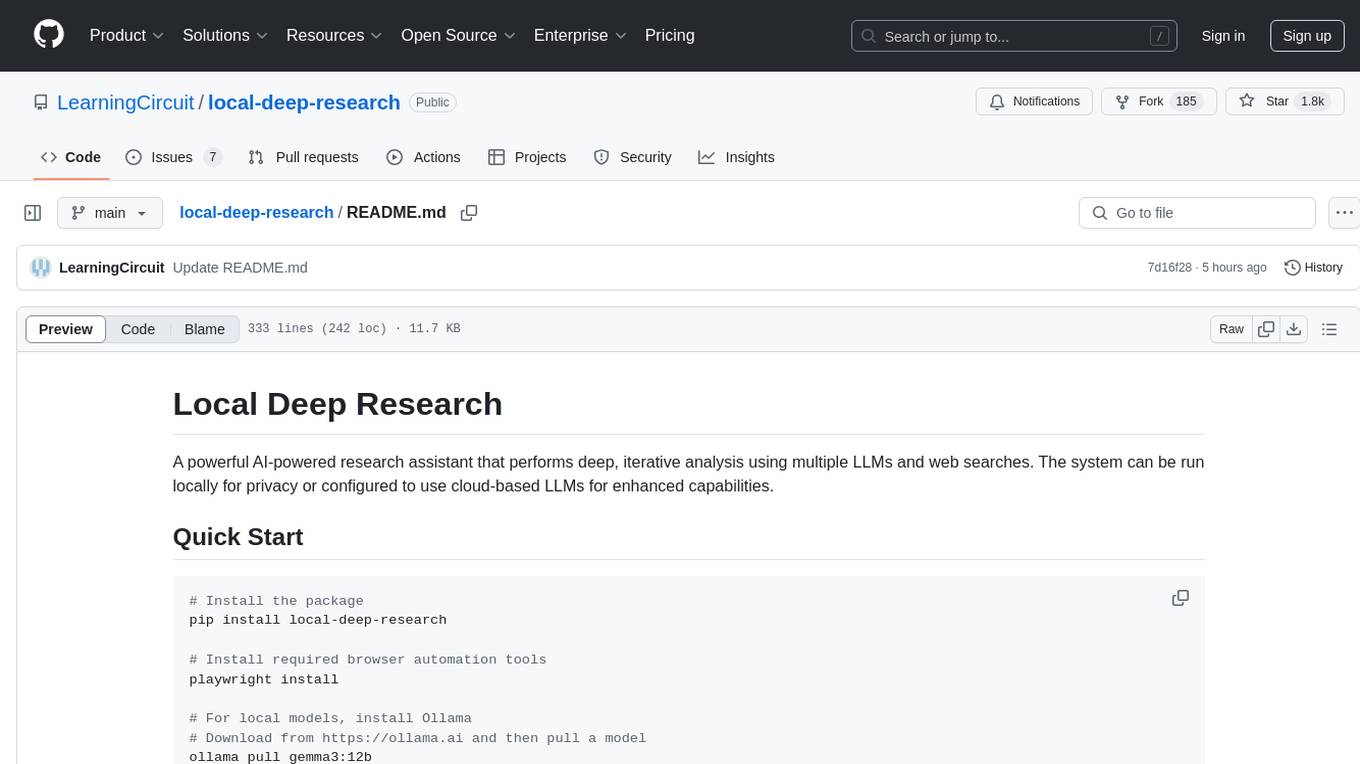

local-deep-research

Local Deep Research is a powerful AI-powered research assistant that performs deep, iterative analysis using multiple LLMs and web searches. It can be run locally for privacy or configured to use cloud-based LLMs for enhanced capabilities. The tool offers advanced research capabilities, flexible LLM support, rich output options, privacy-focused operation, enhanced search integration, and academic & scientific integration. It also provides a web interface, command line interface, and supports multiple LLM providers and search engines. Users can configure AI models, search engines, and research parameters for customized research experiences.

OSA

OSA (Open-Source-Advisor) is a tool designed to improve the quality of scientific open source projects by automating the generation of README files, documentation, CI/CD scripts, and providing advice and recommendations for repositories. It supports various LLMs accessible via API, local servers, or osa_bot hosted on ITMO servers. OSA is currently under development with features like README file generation, documentation generation, automatic implementation of changes, LLM integration, and GitHub Action Workflow generation. It requires Python 3.10 or higher and tokens for GitHub/GitLab/Gitverse and LLM API key. Users can install OSA using PyPi or build from source, and run it using CLI commands or Docker containers.

aderyn

Aderyn is a powerful Solidity static analyzer designed to help protocol engineers and security researchers find vulnerabilities in Solidity code bases. It provides off-the-shelf support for Foundry and Hardhat projects, allows for custom frameworks through a configuration file, and generates reports in Markdown, JSON, and Sarif formats. Users can install Aderyn using Cyfrinup, curl, Homebrew, or npm, and quickly identify vulnerabilities in their Solidity code. The tool also offers a VS Code extension for seamless integration with the IDE.

screenpipe

24/7 Screen & Audio Capture Library to build personalized AI powered by what you've seen, said, or heard. Works with Ollama. Alternative to Rewind.ai. Open. Secure. You own your data. Rust. We are shipping daily, make suggestions, post bugs, give feedback. Building a reliable stream of audio and screenshot data, simplifying life for developers by solving non-trivial problems. Multiple installation options available. Experimental tool with various integrations and features for screen and audio capture, OCR, STT, and more. Open source project focused on enabling tooling & infrastructure for a wide range of applications.

OpenResearcher

OpenResearcher is a fully open agentic large language model designed for long-horizon deep research scenarios. It achieves an impressive 54.8% accuracy on BrowseComp-Plus, surpassing performance of GPT-4.1, Claude-Opus-4, Gemini-2.5-Pro, DeepSeek-R1, and Tongyi-DeepResearch. The tool is fully open-source, providing the training and evaluation recipe—including data, model, training methodology, and evaluation framework for everyone to progress deep research. It offers features like a fully open-source recipe, highly scalable and low-cost generation of deep research trajectories, and remarkable performance on deep research benchmarks.

lingo.dev

Replexica AI automates software localization end-to-end, producing authentic translations instantly across 60+ languages. Teams can do localization 100x faster with state-of-the-art quality, reaching more paying customers worldwide. The tool offers a GitHub Action for CI/CD automation and supports various formats like JSON, YAML, CSV, and Markdown. With lightning-fast AI localization, auto-updates, native quality translations, developer-friendly CLI, and scalability for startups and enterprise teams, Replexica is a top choice for efficient and effective software localization.

ClaudeBar

ClaudeBar is a macOS menu bar application that monitors AI coding assistant usage quotas. It allows users to keep track of their usage of Claude, Codex, Gemini, GitHub Copilot, Antigravity, and Z.ai at a glance. The application offers multi-provider support, real-time quota tracking, multiple themes, visual status indicators, system notifications, auto-refresh feature, and keyboard shortcuts for quick access. Users can customize monitoring by toggling individual providers on/off and receive alerts when quota status changes. The tool requires macOS 15+, Swift 6.2+, and CLI tools installed for the providers to be monitored.

Everywhere

Everywhere is an interactive AI assistant with context-aware capabilities, featuring a sleek, modern UI and powerful integrated functionality. It instantly perceives and understands anything on your screen, providing seamless AI assistant support without the need for screenshots or app switching. The tool offers troubleshooting expertise, quick web summarization, instant translation, and email draft assistance. It supports LLM from various providers, integrates with web browsers, file systems, terminals, and more, and provides an interactive experience with a modern UI, context-aware invocation, keyboard shortcuts, and markdown rendering. Everywhere is available on Windows and macOS, with Linux support coming soon. Language support includes Simplified Chinese, English, German, Spanish, French, Italian, Japanese, Korean, Russian, Turkish, Traditional Chinese, and Traditional Chinese (Hong Kong).

superlinked

Superlinked is a compute framework for information retrieval and feature engineering systems, focusing on converting complex data into vector embeddings for RAG, Search, RecSys, and Analytics stack integration. It enables custom model performance in machine learning with pre-trained model convenience. The tool allows users to build multimodal vectors, define weights at query time, and avoid postprocessing & rerank requirements. Users can explore the computational model through simple scripts and python notebooks, with a future release planned for production usage with built-in data infra and vector database integrations.

DeepResearch

Tongyi DeepResearch is an agentic large language model with 30.5 billion total parameters, designed for long-horizon, deep information-seeking tasks. It demonstrates state-of-the-art performance across various search benchmarks. The model features a fully automated synthetic data generation pipeline, large-scale continual pre-training on agentic data, end-to-end reinforcement learning, and compatibility with two inference paradigms. Users can download the model directly from HuggingFace or ModelScope. The repository also provides benchmark evaluation scripts and information on the Deep Research Agent Family.

For similar tasks

LLMStack

LLMStack is a no-code platform for building generative AI agents, workflows, and chatbots. It allows users to connect their own data, internal tools, and GPT-powered models without any coding experience. LLMStack can be deployed to the cloud or on-premise and can be accessed via HTTP API or triggered from Slack or Discord.

ai-guide

This guide is dedicated to Large Language Models (LLMs) that you can run on your home computer. It assumes your PC is a lower-end, non-gaming setup.

onnxruntime-genai

ONNX Runtime Generative AI is a library that provides the generative AI loop for ONNX models, including inference with ONNX Runtime, logits processing, search and sampling, and KV cache management. Users can call a high level `generate()` method, or run each iteration of the model in a loop. It supports greedy/beam search and TopP, TopK sampling to generate token sequences, has built in logits processing like repetition penalties, and allows for easy custom scoring.

jupyter-ai

Jupyter AI connects generative AI with Jupyter notebooks. It provides a user-friendly and powerful way to explore generative AI models in notebooks and improve your productivity in JupyterLab and the Jupyter Notebook. Specifically, Jupyter AI offers: * An `%%ai` magic that turns the Jupyter notebook into a reproducible generative AI playground. This works anywhere the IPython kernel runs (JupyterLab, Jupyter Notebook, Google Colab, Kaggle, VSCode, etc.). * A native chat UI in JupyterLab that enables you to work with generative AI as a conversational assistant. * Support for a wide range of generative model providers, including AI21, Anthropic, AWS, Cohere, Gemini, Hugging Face, NVIDIA, and OpenAI. * Local model support through GPT4All, enabling use of generative AI models on consumer grade machines with ease and privacy.

khoj

Khoj is an open-source, personal AI assistant that extends your capabilities by creating always-available AI agents. You can share your notes and documents to extend your digital brain, and your AI agents have access to the internet, allowing you to incorporate real-time information. Khoj is accessible on Desktop, Emacs, Obsidian, Web, and Whatsapp, and you can share PDF, markdown, org-mode, notion files, and GitHub repositories. You'll get fast, accurate semantic search on top of your docs, and your agents can create deeply personal images and understand your speech. Khoj is self-hostable and always will be.

langchain_dart

LangChain.dart is a Dart port of the popular LangChain Python framework created by Harrison Chase. LangChain provides a set of ready-to-use components for working with language models and a standard interface for chaining them together to formulate more advanced use cases (e.g. chatbots, Q&A with RAG, agents, summarization, extraction, etc.). The components can be grouped into a few core modules: * **Model I/O:** LangChain offers a unified API for interacting with various LLM providers (e.g. OpenAI, Google, Mistral, Ollama, etc.), allowing developers to switch between them with ease. Additionally, it provides tools for managing model inputs (prompt templates and example selectors) and parsing the resulting model outputs (output parsers). * **Retrieval:** assists in loading user data (via document loaders), transforming it (with text splitters), extracting its meaning (using embedding models), storing (in vector stores) and retrieving it (through retrievers) so that it can be used to ground the model's responses (i.e. Retrieval-Augmented Generation or RAG). * **Agents:** "bots" that leverage LLMs to make informed decisions about which available tools (such as web search, calculators, database lookup, etc.) to use to accomplish the designated task. The different components can be composed together using the LangChain Expression Language (LCEL).

danswer

Danswer is an open-source Gen-AI Chat and Unified Search tool that connects to your company's docs, apps, and people. It provides a Chat interface and plugs into any LLM of your choice. Danswer can be deployed anywhere and for any scale - on a laptop, on-premise, or to cloud. Since you own the deployment, your user data and chats are fully in your own control. Danswer is MIT licensed and designed to be modular and easily extensible. The system also comes fully ready for production usage with user authentication, role management (admin/basic users), chat persistence, and a UI for configuring Personas (AI Assistants) and their Prompts. Danswer also serves as a Unified Search across all common workplace tools such as Slack, Google Drive, Confluence, etc. By combining LLMs and team specific knowledge, Danswer becomes a subject matter expert for the team. Imagine ChatGPT if it had access to your team's unique knowledge! It enables questions such as "A customer wants feature X, is this already supported?" or "Where's the pull request for feature Y?"

infinity

Infinity is an AI-native database designed for LLM applications, providing incredibly fast full-text and vector search capabilities. It supports a wide range of data types, including vectors, full-text, and structured data, and offers a fused search feature that combines multiple embeddings and full text. Infinity is easy to use, with an intuitive Python API and a single-binary architecture that simplifies deployment. It achieves high performance, with 0.1 milliseconds query latency on million-scale vector datasets and up to 15K QPS.

For similar jobs

ChatFAQ

ChatFAQ is an open-source comprehensive platform for creating a wide variety of chatbots: generic ones, business-trained, or even capable of redirecting requests to human operators. It includes a specialized NLP/NLG engine based on a RAG architecture and customized chat widgets, ensuring a tailored experience for users and avoiding vendor lock-in.

anything-llm

AnythingLLM is a full-stack application that enables you to turn any document, resource, or piece of content into context that any LLM can use as references during chatting. This application allows you to pick and choose which LLM or Vector Database you want to use as well as supporting multi-user management and permissions.

ai-guide

This guide is dedicated to Large Language Models (LLMs) that you can run on your home computer. It assumes your PC is a lower-end, non-gaming setup.

classifai

Supercharge WordPress Content Workflows and Engagement with Artificial Intelligence. Tap into leading cloud-based services like OpenAI, Microsoft Azure AI, Google Gemini and IBM Watson to augment your WordPress-powered websites. Publish content faster while improving SEO performance and increasing audience engagement. ClassifAI integrates Artificial Intelligence and Machine Learning technologies to lighten your workload and eliminate tedious tasks, giving you more time to create original content that matters.

mikupad

mikupad is a lightweight and efficient language model front-end powered by ReactJS, all packed into a single HTML file. Inspired by the likes of NovelAI, it provides a simple yet powerful interface for generating text with the help of various backends.

glide

Glide is a cloud-native LLM gateway that provides a unified REST API for accessing various large language models (LLMs) from different providers. It handles LLMOps tasks such as model failover, caching, key management, and more, making it easy to integrate LLMs into applications. Glide supports popular LLM providers like OpenAI, Anthropic, Azure OpenAI, AWS Bedrock (Titan), Cohere, Google Gemini, OctoML, and Ollama. It offers high availability, performance, and observability, and provides SDKs for Python and NodeJS to simplify integration.

onnxruntime-genai

ONNX Runtime Generative AI is a library that provides the generative AI loop for ONNX models, including inference with ONNX Runtime, logits processing, search and sampling, and KV cache management. Users can call a high level `generate()` method, or run each iteration of the model in a loop. It supports greedy/beam search and TopP, TopK sampling to generate token sequences, has built in logits processing like repetition penalties, and allows for easy custom scoring.

firecrawl

Firecrawl is an API service that takes a URL, crawls it, and converts it into clean markdown. It crawls all accessible subpages and provides clean markdown for each, without requiring a sitemap. The API is easy to use and can be self-hosted. It also integrates with Langchain and Llama Index. The Python SDK makes it easy to crawl and scrape websites in Python code.