OpenResearcher

OpenResearcher: A Fully Open Pipeline for Long-Horizon Deep Research Trajectory Synthesis

Stars: 285

OpenResearcher is a fully open agentic large language model designed for long-horizon deep research scenarios. It achieves 54.8% accuracy on BrowseComp-Plus, surpassing the performance of GPT-4.1, Claude-Opus-4, Gemini-2.5-Pro, DeepSeek-R1, and Tongyi-DeepResearch. The project is fully open-source: it releases the complete training and evaluation recipe (data, model, training methodology, and evaluation framework) so that anyone can advance deep research. Its main features are a fully open-source recipe, highly scalable and low-cost generation of deep research trajectories, and strong results on deep research benchmarks.

README:

🤗 HuggingFace | Blog | Slack | WeChat

- [2026.2.12] 🔥 Excited to see OpenResearcher powering deep research trajectory generation in NVIDIA’s NeMo Data Designer!

- [2026.2.10] 🚀 Our X post received 1.2K+ likes! Feel free to check out the post and join the discussion! 💬

OpenResearcher is a fully open agentic large language model (30B-A3B) designed for long-horizon deep research scenarios. It achieves 54.8% accuracy on BrowseComp-Plus, surpassing the performance of GPT-4.1, Claude-Opus-4, Gemini-2.5-Pro, DeepSeek-R1, and Tongyi-DeepResearch. We fully open-source the training and evaluation recipe, including data, model, training methodology, and evaluation framework, so that anyone can advance deep research.

- 🔑 Fully Open-Source Recipe: We fully open-source our dataset of 96K high-quality DeepResearch trajectories with 100+ turns, generated by GPT-OSS-120B with native browser tools; the leading 30B-A3B model trained on it; the distillation recipe; and a lightweight DeepResearch evaluation framework.

- 💰 Highly Scalable and Low-Cost: We generate DeepResearch trajectories at massive scale using a self-built retriever over a dedicated ~11B-token corpus, eliminating the need for external search APIs. This scalable retriever significantly reduces training costs.

- 🚀 Remarkable Performance on Deep Research Benchmarks: OpenResearcher demonstrates leading performance across a range of deep research benchmarks, including BrowseComp-Plus, BrowseComp, GAIA, and xbench-DeepSearch.
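To make the idea of a self-built retriever concrete, here is a minimal sketch of lexical retrieval with Okapi BM25 over an in-memory corpus. It is a toy illustration only: `TinyBM25`, `tokenize`, and the three-sentence corpus are hypothetical and are not taken from the OpenResearcher code, whose retriever runs over the ~11B-token corpus.

```python
import math
from collections import Counter


def tokenize(text: str) -> list[str]:
    """Lowercase whitespace tokenizer; a real system would use a proper analyzer."""
    return text.lower().split()


class TinyBM25:
    """Toy in-memory Okapi BM25 index (hypothetical helper, not from the repo)."""

    def __init__(self, docs: list[str], k1: float = 1.5, b: float = 0.75):
        self.k1, self.b = k1, b
        self.docs = [tokenize(d) for d in docs]
        self.tfs = [Counter(d) for d in self.docs]
        self.avgdl = sum(len(d) for d in self.docs) / len(self.docs)
        # Document frequency: number of documents containing each term.
        self.df = Counter(term for d in self.docs for term in set(d))

    def idf(self, term: str) -> float:
        # Non-negative IDF variant: log(1 + (N - df + 0.5) / (df + 0.5)).
        n, df = len(self.docs), self.df.get(term, 0)
        return math.log(1 + (n - df + 0.5) / (df + 0.5))

    def score(self, query: str, idx: int) -> float:
        tf, dl = self.tfs[idx], len(self.docs[idx])
        total = 0.0
        for term in tokenize(query):
            f = tf.get(term, 0)
            # Term-frequency saturation with document-length normalization.
            norm = f + self.k1 * (1 - self.b + self.b * dl / self.avgdl)
            total += self.idf(term) * f * (self.k1 + 1) / norm
        return total

    def search(self, query: str, top_k: int = 3) -> list[tuple[int, float]]:
        scored = [(i, self.score(query, i)) for i in range(len(self.docs))]
        scored.sort(key=lambda pair: pair[1], reverse=True)
        return scored[:top_k]


corpus = [
    "vLLM serves large language models with tensor parallelism",
    "BM25 is a classic lexical ranking function for search",
    "Dense retrievers embed queries and documents into vectors",
]
index = TinyBM25(corpus)
top = index.search("lexical ranking search", top_k=1)
print(top[0][0])  # prints 1 (the BM25 sentence)
```

Because such an index lives entirely on local hardware, each query costs compute rather than a paid API call, which is the property the second bullet above relies on.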

- 🛠 Environment Setup

- 🔍 Configuration

- 🚀 Quick Start

- 🔬 Benchmark OpenResearcher

- 🤝 Core Contributors

- 🎓 Advisors

- 🙏 Acknowledgements

- ✨ Contributing

- 📚 Citation

We run this repo on the following setup:

- 8 × NVIDIA A100 80GB GPUs

- Linux operating system

Other hardware setups can also work, but remember to adjust the corresponding parameters, such as the GPU IDs and tensor-parallel size passed to the launch scripts.

```bash
sudo apt update
sudo apt install -y openjdk-21-jdk

# install uv
curl -LsSf https://astral.sh/uv/install.sh | sh
uv venv --python 3.12
source .venv/bin/activate

# install tevatron for BrowseComp-Plus
git clone https://github.com/texttron/tevatron.git
cd tevatron
uv pip install -e .
cd ..

# install all dependencies automatically
uv pip install -e .
```

Run the setup script to automatically download the BrowseComp-Plus benchmark. Other benchmarks, including BrowseComp, GAIA, and xbench-DeepSearch, will be set up automatically when they are first used.

```bash
bash setup.sh
```

This script will:

- ✅ Verify the Python 3.12 virtual environment and automatically install any missing dependencies

- ✅ Download the BrowseComp-Plus dataset from HuggingFace and set up the directory structure

For more info about these deep research benchmarks, see benchmarks.md

Copy the template and configure your API keys:

```bash
cp .env.template .env
```

Edit .env:

```bash
# Serper API (for web search when using browser_backend=serper)
SERPER_API_KEY=your_key # Get from: https://serper.dev/

# OpenAI API (for evaluation scoring)
OPENAI_API_KEY=your_key # Get from: https://platform.openai.com/api-keys
```

Prerequisites: Install dependencies and configure API keys (see Environment Setup and Configuration).
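As a quick check on the configuration above, the following sketch reports which API keys are still unset or left at the `your_key` placeholder. It assumes the values are already present in the process environment (how the repo itself loads `.env` is not shown here); `REQUIRED_KEYS` and `missing_keys` are hypothetical names introduced for illustration.

```python
import os

# Which keys each step needs, per the comments in the .env template.
REQUIRED_KEYS = {
    "serper": ["SERPER_API_KEY"],  # web search with browser_backend=serper
    "eval": ["OPENAI_API_KEY"],    # evaluation scoring
}


def missing_keys(purposes, env=None):
    """Return required variables that are unset, empty, or still 'your_key'."""
    env = os.environ if env is None else env
    missing = []
    for purpose in purposes:
        for key in REQUIRED_KEYS[purpose]:
            value = env.get(key, "").strip()
            if not value or value == "your_key":
                missing.append(key)
    return missing


if __name__ == "__main__":
    problems = missing_keys(["serper", "eval"])
    if problems:
        print("Missing or placeholder values:", ", ".join(problems))
    else:
        print("All API keys are set.")
```

Passing an explicit `env` dictionary makes the check usable without touching the real environment, for example in a test.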

- Deploy OpenResearcher-30B-A3B:

```bash
bash scripts/start_nemotron_servers.sh
```

The complete vLLM server logs can be found in the logs directory.

- Run your first task (before proceeding, check the logs in the logs directory to ensure the vLLM server is deployed):

```python
import asyncio

from deploy_agent import run_one, BrowserPool
from utils.openai_generator import OpenAIAsyncGenerator


async def main():
    # Initialize generator and browser
    generator = OpenAIAsyncGenerator(
        base_url="http://localhost:8001/v1",
        model_name="OpenResearcher/OpenResearcher-30B-A3B",
        use_native_tools=True,
    )
    browser_pool = BrowserPool(search_url=None, browser_backend="serper")

    # Run deep research
    await run_one(
        question="What is the latest news about OpenAI?",
        qid="quick_start",
        generator=generator,
        browser_pool=browser_pool,
    )

    # Clean up after the run
    browser_pool.cleanup("quick_start")


if __name__ == "__main__":
    asyncio.run(main())
```

The deep research agent will automatically search the web, browse webpages, and extract relevant information. You'll see the final answer along with all intermediate reasoning steps.
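Instead of reading the logs by eye, server readiness can also be probed over HTTP. The sketch below assumes the deployed server exposes the OpenAI-compatible `/v1/models` route (vLLM's OpenAI-compatible server does); `fetch_json` and `served_models` are hypothetical helpers, not part of this repo.

```python
import json
import urllib.error
import urllib.request


def fetch_json(url: str, timeout: float = 2.0) -> dict:
    """GET a URL and decode the JSON body."""
    with urllib.request.urlopen(url, timeout=timeout) as response:
        return json.load(response)


def served_models(base_url: str, fetch=fetch_json) -> list[str]:
    """List model IDs from an OpenAI-compatible /models endpoint, or [] if unreachable."""
    try:
        payload = fetch(base_url.rstrip("/") + "/models")
    except (urllib.error.URLError, OSError, ValueError):
        # Connection refused, timeout, or a non-JSON body: treat as not ready.
        return []
    return [entry["id"] for entry in payload.get("data", [])]


if __name__ == "__main__":
    models = served_models("http://localhost:8001/v1")
    print("ready:" if models else "not ready yet", models)
```

An empty list means the server is not reachable yet, so a caller can retry after a short sleep before starting a task.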

We benchmark OpenResearcher-30B-A3B on the following deep research benchmarks:

| Benchmark | Dataset Key | Size | Language | Search Backend | Description |
|---|---|---|---|---|---|
| BrowseComp-Plus | browsecomp_plus | 830 | EN | local | Deep-research benchmark from BrowseComp isolating retriever and LLM agent effects |
| BrowseComp | browsecomp | 1,266 | EN | serper | A Simple Yet Challenging Benchmark for Browsing Agents |
| GAIA-text | gaia | 103 | EN | serper | Text-only subset of GAIA benchmark (dev split) |
| xbench-DeepSearch | xbench | 100 | ZH | serper | DeepSearch benchmark with encrypted test cases |

For more info about these deep research benchmarks, see benchmarks.md

Complete evaluation using local dense search with the BrowseComp-Plus corpus and embeddings (note: only applicable to BrowseComp-Plus):

```bash
# Terminal 1: Start local dense search service on port 8000
# Embedding model (Qwen3-Embedding-8B) will be deployed on GPU 7
bash scripts/start_search_service.sh dense 8000

# Terminal 2: Start vLLM servers (requires 4 GPUs)
# TP=2, deploy 2 servers starting from port 8001 on GPUs 0,1,2,3
bash scripts/start_nemotron_servers.sh 2 8001 0,1,2,3

# Terminal 3: Run agent
bash run_agent.sh results/browsecomp_plus/OpenResearcher_dense 8001 2 browsecomp_plus local OpenResearcher/OpenResearcher-30B-A3B
```

What this does:

- Deploys the dense retriever service on port 8000 as the search engine

- Launches 2 vLLM servers (ports 8001, 8002) with TP=2 across 4 GPUs

- Runs the deep research agent with load balancing across both servers
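The arithmetic behind the layout above (4 GPUs at TP=2 giving 2 servers on consecutive ports) can be sketched as follows. This is an illustration under assumptions: the contiguous GPU grouping and the round-robin assignment are guesses at one reasonable scheme, and `plan_servers` and `assign_round_robin` are hypothetical names, not functions from the launch scripts.

```python
from itertools import cycle


def plan_servers(tp_size: int, base_port: int, gpu_ids: list[int]) -> list[dict]:
    """Split GPUs into groups of tp_size and give each group the next port."""
    if tp_size <= 0 or not gpu_ids or len(gpu_ids) % tp_size != 0:
        raise ValueError("GPU count must be a positive multiple of tp_size")
    groups = [gpu_ids[i:i + tp_size] for i in range(0, len(gpu_ids), tp_size)]
    return [
        {
            "port": base_port + n,
            "gpus": group,
            "base_url": f"http://localhost:{base_port + n}/v1",
        }
        for n, group in enumerate(groups)
    ]


def assign_round_robin(qids: list[str], servers: list[dict]) -> dict[str, str]:
    """Map each question ID to a server URL, alternating between servers."""
    urls = cycle(server["base_url"] for server in servers)
    return {qid: next(urls) for qid in qids}


servers = plan_servers(tp_size=2, base_port=8001, gpu_ids=[0, 1, 2, 3])
for server in servers:
    print(server["port"], server["gpus"])
# prints:
# 8001 [0, 1]
# 8002 [2, 3]

assignment = assign_round_robin(["q1", "q2", "q3"], servers)
print(assignment["q3"])  # prints http://localhost:8001/v1
```

On different hardware the same calculation shows what to change: for example, 8 GPUs at TP=2 would yield four servers on ports 8001 through 8004 under these assumptions.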

Run with the Serper Google Search API (note: applicable to all benchmarks except BrowseComp-Plus):

```bash
# Terminal 1: Start vLLM servers (requires 4 GPUs)
bash scripts/start_nemotron_servers.sh 2 8001 0,1,2,3

# Terminal 2: Run agent with serper search backend
bash run_agent.sh results/gaia/OpenResearcher_serper 8001 2 gaia serper OpenResearcher/OpenResearcher-30B-A3B
```

Browser Backend Options:

- local: Use the local BM25/Dense search service (for BrowseComp-Plus)

- serper: Use the Serper Google Search API (for all other benchmarks)
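The pairing of dataset key and browser backend can be captured in a small lookup so the two never get out of sync. In this sketch the meaning of the positional arguments to `run_agent.sh` (output directory, base port, number of servers, dataset key, backend, model) is inferred from the examples in this README; parameter.md remains the authority, and `run_agent_command` is a hypothetical helper.

```python
import shlex

# Dataset key -> search backend, copied from the benchmark table in this README.
BACKEND_FOR_DATASET = {
    "browsecomp_plus": "local",
    "browsecomp": "serper",
    "gaia": "serper",
    "xbench": "serper",
}

MODEL = "OpenResearcher/OpenResearcher-30B-A3B"


def run_agent_command(dataset: str, run_name: str,
                      base_port: int = 8001, num_servers: int = 2) -> list[str]:
    """Build the argv for run_agent.sh, following the argument order in the examples."""
    backend = BACKEND_FOR_DATASET[dataset]  # KeyError for unknown dataset keys
    output_dir = f"results/{dataset}/{run_name}"
    return ["bash", "run_agent.sh", output_dir, str(base_port),
            str(num_servers), dataset, backend, MODEL]


command = run_agent_command("gaia", "OpenResearcher_serper")
print(shlex.join(command))
# prints: bash run_agent.sh results/gaia/OpenResearcher_serper 8001 2 gaia serper OpenResearcher/OpenResearcher-30B-A3B
```

The resulting list can be passed directly to `subprocess.run`, which avoids shell quoting issues.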

For other parameters, refer to parameter.md.

After running experiments, evaluate results:

```bash
# eval on browsecomp_plus
python eval.py --input_dir results/browsecomp_plus/OpenResearcher_dense

# eval on gaia
python eval.py --input_dir results/gaia/OpenResearcher_serper
```

| Scenario | Command |
|---|---|
| BrowseComp-Plus (BM25) | `bash scripts/start_search_service.sh bm25 8000` then `bash scripts/start_nemotron_servers.sh 2 8001 0,1,2,3` then `bash run_agent.sh results/browsecomp-plus/OpenResearcher_bm25 8001 2 browsecomp_plus local OpenResearcher/OpenResearcher-30B-A3B` |
| BrowseComp-Plus (Qwen3-Embedding-8B dense embeddings) | `bash scripts/start_search_service.sh dense 8000` then `bash scripts/start_nemotron_servers.sh 2 8001 0,1,2,3` then `bash run_agent.sh results/browsecomp-plus/OpenResearcher_dense 8001 2 browsecomp_plus local OpenResearcher/OpenResearcher-30B-A3B` |
| BrowseComp | `bash scripts/start_nemotron_servers.sh 2 8001 0,1,2,3` then `bash run_agent.sh results/browsecomp 8001 2 browsecomp serper OpenResearcher/OpenResearcher-30B-A3B` |
| GAIA | `bash scripts/start_nemotron_servers.sh 2 8001 0,1,2,3` then `bash run_agent.sh results/gaia 8001 2 gaia serper OpenResearcher/OpenResearcher-30B-A3B` |
| xbench-DeepSearch | `bash scripts/start_nemotron_servers.sh 2 8001 0,1,2,3` then `bash run_agent.sh results/xbench 8001 2 xbench serper OpenResearcher/OpenResearcher-30B-A3B` |

For script parameter explanation, refer to parameter.md.

Note: Don't forget to evaluate your results using:

```bash
python eval.py --input_dir [INPUT_DIR]
```

Zhuofeng Li | Dongfu Jiang | Xueguang Ma | Haoxiang Zhang | Ping Nie | Wenhu Chen | Yu Zhang

We thank Lambda, Netmind AI and Verdent AI for GPU and API support!

We are truly looking forward to open-source contributions to OpenResearcher! If you’re interested in contributing, collaborating, or reporting issues, please feel free to open an issue or submit a pull request (PR). You can also reach us at [email protected].

We are also looking forward to your feedback and suggestions!

```bibtex
@misc{li2025openresearcher,
  title={OpenResearcher: A Fully Open Pipeline for Long-Horizon Deep Research Trajectory Synthesis},
  author={Zhuofeng Li and Dongfu Jiang and Xueguang Ma and Haoxiang Zhang and Ping Nie and Yuyu Zhang and Kai Zou and Jianwen Xie and Yu Zhang and Wenhu Chen},
  year={2025},
  howpublished={\url{https://www.notion.so/OpenResearcher-A-Fully-Open-Pipeline-for-Long-Horizon-Deep-Research-Trajectory-Synthesis-2f7e290627b5800cb3a0cd7e8d6ec0ea}},
  note={Notion Blog}
}
```

Click tags to check more tools for each tasksFor Jobs:

Alternative AI tools for OpenResearcher

Similar Open Source Tools

OpenResearcher

OpenResearcher is a fully open agentic large language model designed for long-horizon deep research scenarios. It achieves an impressive 54.8% accuracy on BrowseComp-Plus, surpassing performance of GPT-4.1, Claude-Opus-4, Gemini-2.5-Pro, DeepSeek-R1, and Tongyi-DeepResearch. The tool is fully open-source, providing the training and evaluation recipe—including data, model, training methodology, and evaluation framework for everyone to progress deep research. It offers features like a fully open-source recipe, highly scalable and low-cost generation of deep research trajectories, and remarkable performance on deep research benchmarks.

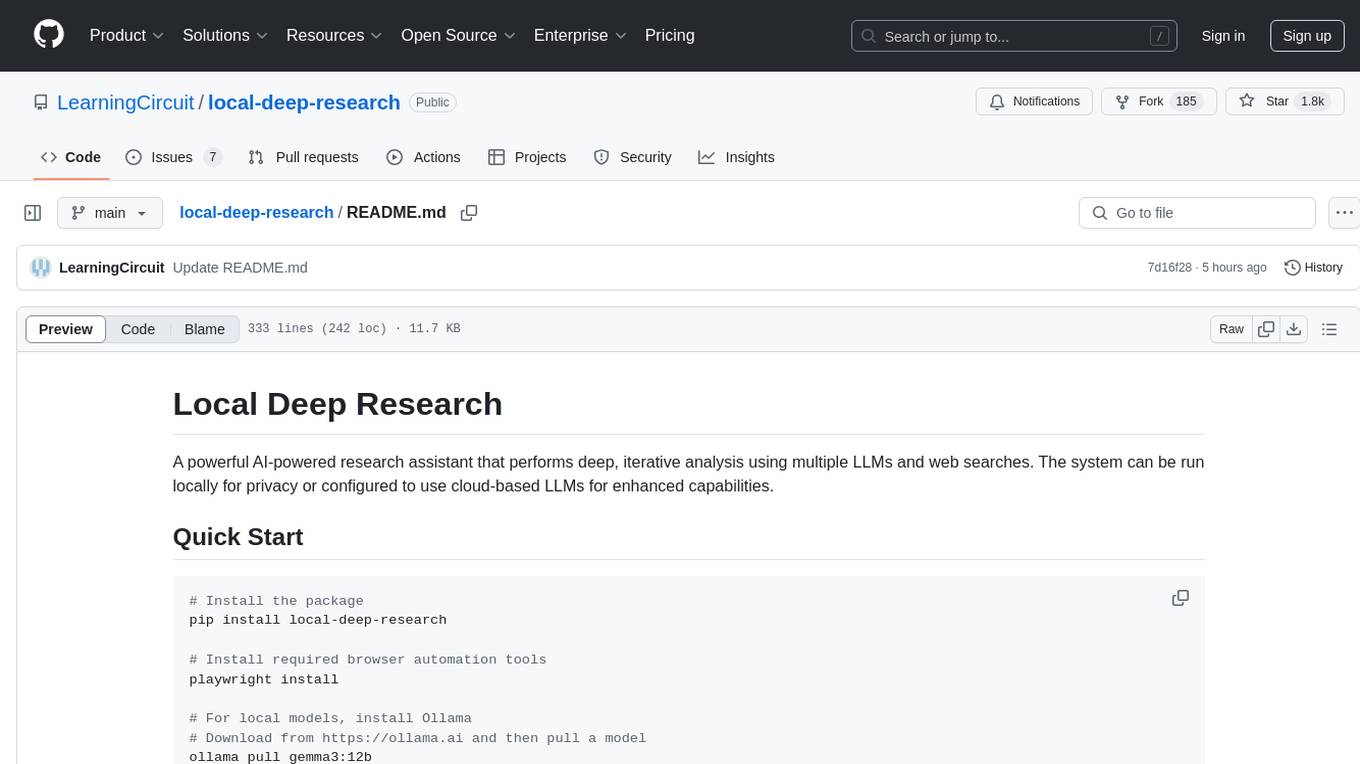

local-deep-research

Local Deep Research is a powerful AI-powered research assistant that performs deep, iterative analysis using multiple LLMs and web searches. It can be run locally for privacy or configured to use cloud-based LLMs for enhanced capabilities. The tool offers advanced research capabilities, flexible LLM support, rich output options, privacy-focused operation, enhanced search integration, and academic & scientific integration. It also provides a web interface, command line interface, and supports multiple LLM providers and search engines. Users can configure AI models, search engines, and research parameters for customized research experiences.

agentscope

AgentScope is a multi-agent platform designed to empower developers to build multi-agent applications with large-scale models. It features three high-level capabilities: Easy-to-Use, High Robustness, and Actor-Based Distribution. AgentScope provides a list of `ModelWrapper` to support both local model services and third-party model APIs, including OpenAI API, DashScope API, Gemini API, and ollama. It also enables developers to rapidly deploy local model services using libraries such as ollama (CPU inference), Flask + Transformers, Flask + ModelScope, FastChat, and vllm. AgentScope supports various services, including Web Search, Data Query, Retrieval, Code Execution, File Operation, and Text Processing. Example applications include Conversation, Game, and Distribution. AgentScope is released under Apache License 2.0 and welcomes contributions.

GraphGen

GraphGen is a framework for synthetic data generation guided by knowledge graphs. It enhances supervised fine-tuning for large language models (LLMs) by generating synthetic data based on a fine-grained knowledge graph. The tool identifies knowledge gaps in LLMs, prioritizes generating QA pairs targeting high-value knowledge, incorporates multi-hop neighborhood sampling, and employs style-controlled generation to diversify QA data. Users can use LLaMA-Factory and xtuner for fine-tuning LLMs after data generation.

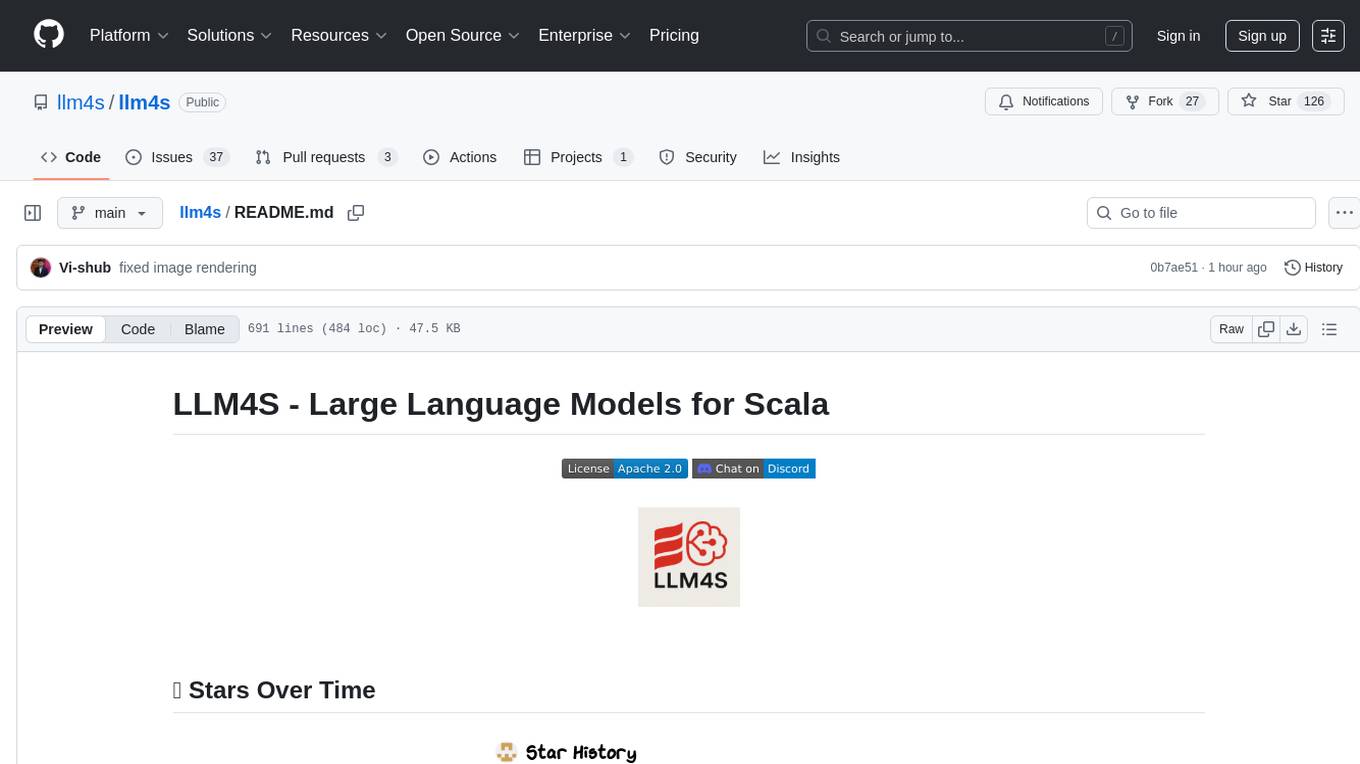

llm4s

LLM4S provides a simple, robust, and scalable framework for building Large Language Models (LLM) applications in Scala. It aims to leverage Scala's type safety, functional programming, JVM ecosystem, concurrency, and performance advantages to create reliable and maintainable AI-powered applications. The framework supports multi-provider integration, execution environments, error handling, Model Context Protocol (MCP) support, agent frameworks, multimodal generation, and Retrieval-Augmented Generation (RAG) workflows. It also offers observability features like detailed trace logging, monitoring, and analytics for debugging and performance insights.

BrowserAI

BrowserAI is a tool that allows users to run large language models (LLMs) directly in the browser, providing a simple, fast, and open-source solution. It prioritizes privacy by processing data locally, is cost-effective with no server costs, works offline after initial download, and offers WebGPU acceleration for high performance. It is developer-friendly with a simple API, supports multiple engines, and comes with pre-configured models for easy use. Ideal for web developers, companies needing privacy-conscious AI solutions, researchers experimenting with browser-based AI, and hobbyists exploring AI without infrastructure overhead.

ClaudeBar

ClaudeBar is a macOS menu bar application that monitors AI coding assistant usage quotas. It allows users to keep track of their usage of Claude, Codex, Gemini, GitHub Copilot, Antigravity, and Z.ai at a glance. The application offers multi-provider support, real-time quota tracking, multiple themes, visual status indicators, system notifications, auto-refresh feature, and keyboard shortcuts for quick access. Users can customize monitoring by toggling individual providers on/off and receive alerts when quota status changes. The tool requires macOS 15+, Swift 6.2+, and CLI tools installed for the providers to be monitored.

agentfield

AgentField is an open-source control plane designed for autonomous AI agents, providing infrastructure for agents to make decisions beyond chatbots. It offers features like scaling infrastructure, routing & discovery, async execution, durable state, observability, trust infrastructure with cryptographic identity, verifiable credentials, and policy enforcement. Users can write agents in Python, Go, TypeScript, or interact via REST APIs. The tool enables the creation of AI backends that reason autonomously within defined boundaries, offering predictability and flexibility. AgentField aims to bridge the gap between AI frameworks and production-ready infrastructure for AI agents.

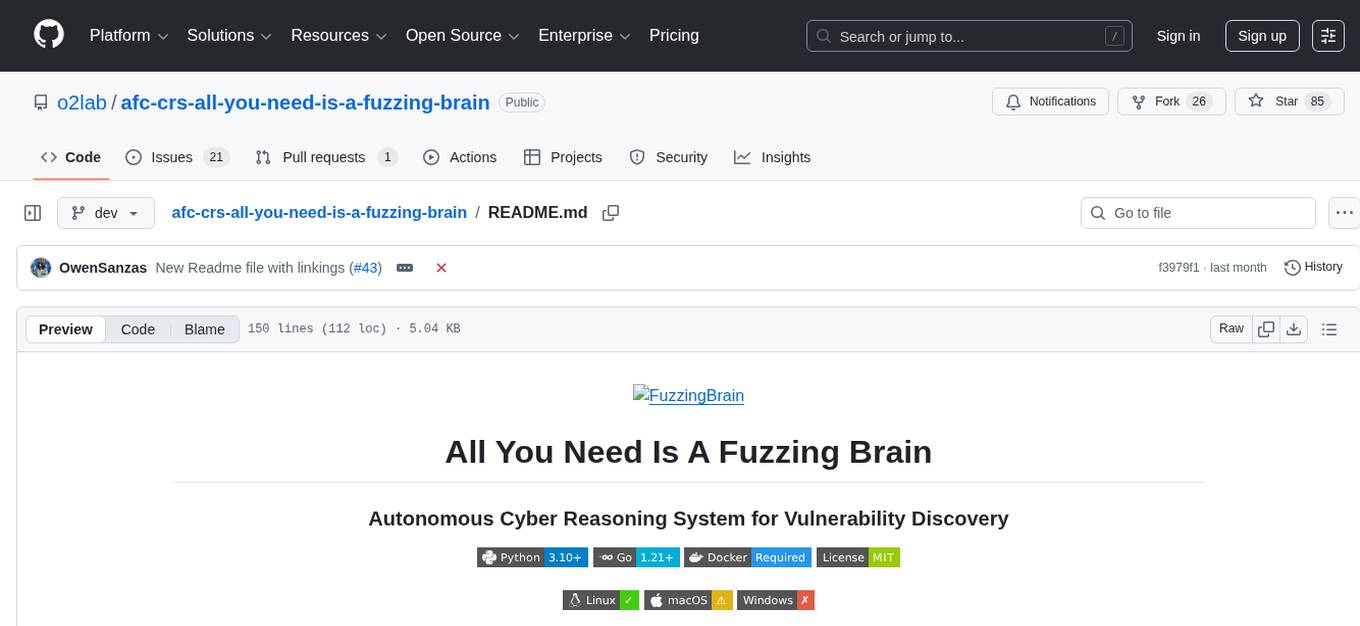

afc-crs-all-you-need-is-a-fuzzing-brain

All You Need Is A Fuzzing Brain is an AI-driven automated vulnerability detection and remediation framework developed for the 2025 DARPA AIxCC finals. It leverages multiple LLM providers for intelligent vulnerability detection, offers 23+ specialized strategies for POV generation and patch synthesis, generates and validates patches automatically, integrates seamlessly with Google's fuzzing infrastructure, and supports vulnerability detection in C/C++ and Java. Users can perform tasks such as Delta Scan, Full Scan, and SARIF Analysis for specific commits, repository-wide analysis, and validation/patching from static analysis reports, respectively. The tool can be used via Docker or by installing from source, and citations are provided for research purposes.

Edit-Banana

Edit Banana is a universal content re-editor that allows users to transform fixed content into fully manipulatable assets. Powered by SAM 3 and multimodal large models, it enables high-fidelity reconstruction while preserving original diagram details and logical relationships. The platform offers advanced segmentation, fixed multi-round VLM scanning, high-quality OCR, user system with credits, multi-user concurrency, and a web interface. Users can upload images or PDFs to get editable DrawIO (XML) or PPTX files in seconds. The project structure includes components for segmentation, text extraction, frontend, models, and scripts, with detailed installation and setup instructions provided. The tool is open-source under the Apache License 2.0, allowing commercial use and secondary development.

trpc-agent-go

A powerful Go framework for building intelligent agent systems with large language models (LLMs), hierarchical planners, memory, telemetry, and a rich tool ecosystem. tRPC-Agent-Go enables the creation of autonomous or semi-autonomous agents that reason, call tools, collaborate with sub-agents, and maintain long-term state. The framework provides detailed documentation, examples, and tools for accelerating the development of AI applications.

mistral.rs

Mistral.rs is a fast LLM inference platform written in Rust. We support inference on a variety of devices, quantization, and easy-to-use application with an Open-AI API compatible HTTP server and Python bindings.

agentscope

AgentScope is an agent-oriented programming tool for building LLM (Large Language Model) applications. It provides transparent development, realtime steering, agentic tools management, model agnostic programming, LEGO-style agent building, multi-agent support, and high customizability. The tool supports async invocation, reasoning models, streaming returns, async/sync tool functions, user interruption, group-wise tools management, streamable transport, stateful/stateless mode MCP client, distributed and parallel evaluation, multi-agent conversation management, and fine-grained MCP control. AgentScope Studio enables tracing and visualization of agent applications. The tool is highly customizable and encourages customization at various levels.

llama-assistant

Llama Assistant is a local AI assistant that respects your privacy. It is an AI-powered assistant that can recognize your voice, process natural language, and perform various actions based on your commands. It can help with tasks like summarizing text, rephrasing sentences, answering questions, writing emails, and more. The assistant runs offline on your local machine, ensuring privacy by not sending data to external servers. It supports voice recognition, natural language processing, and customizable UI with adjustable transparency. The project is a work in progress with new features being added regularly.

R2R

R2R (RAG to Riches) is a fast and efficient framework for serving high-quality Retrieval-Augmented Generation (RAG) to end users. The framework is designed with customizable pipelines and a feature-rich FastAPI implementation, enabling developers to quickly deploy and scale RAG-based applications. R2R was conceived to bridge the gap between local LLM experimentation and scalable production solutions. **R2R is to LangChain/LlamaIndex what NextJS is to React**. A JavaScript client for R2R deployments can be found here. ### Key Features * **🚀 Deploy** : Instantly launch production-ready RAG pipelines with streaming capabilities. * **🧩 Customize** : Tailor your pipeline with intuitive configuration files. * **🔌 Extend** : Enhance your pipeline with custom code integrations. * **⚖️ Autoscale** : Scale your pipeline effortlessly in the cloud using SciPhi. * **🤖 OSS** : Benefit from a framework developed by the open-source community, designed to simplify RAG deployment.

ChatGPT-Next-Web

ChatGPT Next Web is a well-designed cross-platform ChatGPT web UI tool that supports Claude, GPT4, and Gemini Pro models. It allows users to deploy their private ChatGPT applications with ease. The tool offers features like one-click deployment, compact client for Linux/Windows/MacOS, compatibility with self-deployed LLMs, privacy-first approach with local data storage, markdown support, responsive design, fast loading speed, prompt templates, awesome prompts, chat history compression, multilingual support, and more.

For similar tasks

OpenResearcher

OpenResearcher is a fully open agentic large language model designed for long-horizon deep research scenarios. It achieves an impressive 54.8% accuracy on BrowseComp-Plus, surpassing performance of GPT-4.1, Claude-Opus-4, Gemini-2.5-Pro, DeepSeek-R1, and Tongyi-DeepResearch. The tool is fully open-source, providing the training and evaluation recipe—including data, model, training methodology, and evaluation framework for everyone to progress deep research. It offers features like a fully open-source recipe, highly scalable and low-cost generation of deep research trajectories, and remarkable performance on deep research benchmarks.

ml-engineering

This repository provides a comprehensive collection of methodologies, tools, and step-by-step instructions for successful training of large language models (LLMs) and multi-modal models. It is a technical resource suitable for LLM/VLM training engineers and operators, containing numerous scripts and copy-n-paste commands to facilitate quick problem-solving. The repository is an ongoing compilation of the author's experiences training BLOOM-176B and IDEFICS-80B models, and currently focuses on the development and training of Retrieval Augmented Generation (RAG) models at Contextual.AI. The content is organized into six parts: Insights, Hardware, Orchestration, Training, Development, and Miscellaneous. It includes key comparison tables for high-end accelerators and networks, as well as shortcuts to frequently needed tools and guides. The repository is open to contributions and discussions, and is licensed under Attribution-ShareAlike 4.0 International.

distributed-llama

Distributed Llama is a tool that allows you to run large language models (LLMs) on weak devices or make powerful devices even more powerful by distributing the workload and dividing the RAM usage. It uses TCP sockets to synchronize the state of the neural network, and you can easily configure your AI cluster by using a home router. Distributed Llama supports models such as Llama 2 (7B, 13B, 70B) chat and non-chat versions, Llama 3, and Grok-1 (314B).

Awesome-LLMs-for-Video-Understanding

Awesome-LLMs-for-Video-Understanding is a repository dedicated to exploring Video Understanding with Large Language Models. It provides a comprehensive survey of the field, covering models, pretraining, instruction tuning, and hybrid methods. The repository also includes information on tasks, datasets, and benchmarks related to video understanding. Contributors are encouraged to add new papers, projects, and materials to enhance the repository.

Awesome-LLM

Awesome-LLM is a curated list of resources related to large language models, focusing on papers, projects, frameworks, tools, tutorials, courses, opinions, and other useful resources in the field. It covers trending LLM projects, milestone papers, other papers, open LLM projects, LLM training frameworks, LLM evaluation frameworks, tools for deploying LLM, prompting libraries & tools, tutorials, courses, books, and opinions. The repository provides a comprehensive overview of the latest advancements and resources in the field of large language models.

MotionLLM

MotionLLM is a framework for human behavior understanding that leverages Large Language Models (LLMs) to jointly model videos and motion sequences. It provides a unified training strategy, dataset MoVid, and MoVid-Bench for evaluating human behavior comprehension. The framework excels in captioning, spatial-temporal comprehension, and reasoning abilities.

LLMGA

LLMGA (Multimodal Large Language Model-based Generation Assistant) is a tool that leverages Large Language Models (LLMs) to assist users in image generation and editing. It provides detailed language generation prompts for precise control over Stable Diffusion (SD), resulting in more intricate and precise content in generated images. The tool curates a dataset for prompt refinement, similar image generation, inpainting & outpainting, and visual question answering. It offers a two-stage training scheme to optimize SD alignment and a reference-based restoration network to alleviate texture, brightness, and contrast disparities in image editing. LLMGA shows promising generative capabilities and enables wider applications in an interactive manner.

LLMs

LLMs is a Chinese large language model technology stack for practical use. It includes high-availability pre-training, SFT, and DPO preference alignment code framework. The repository covers pre-training data cleaning, high-concurrency framework, SFT dataset cleaning, data quality improvement, and security alignment work for Chinese large language models. It also provides open-source SFT dataset construction, pre-training from scratch, and various tools and frameworks for data cleaning, quality optimization, and task alignment.

For similar jobs

weave

Weave is a toolkit for developing Generative AI applications, built by Weights & Biases. With Weave, you can log and debug language model inputs, outputs, and traces; build rigorous, apples-to-apples evaluations for language model use cases; and organize all the information generated across the LLM workflow, from experimentation to evaluations to production. Weave aims to bring rigor, best-practices, and composability to the inherently experimental process of developing Generative AI software, without introducing cognitive overhead.

LLMStack

LLMStack is a no-code platform for building generative AI agents, workflows, and chatbots. It allows users to connect their own data, internal tools, and GPT-powered models without any coding experience. LLMStack can be deployed to the cloud or on-premise and can be accessed via HTTP API or triggered from Slack or Discord.

VisionCraft

The VisionCraft API is a free API for using over 100 different AI models. From images to sound.

kaito

Kaito is an operator that automates the AI/ML inference model deployment in a Kubernetes cluster. It manages large model files using container images, avoids tuning deployment parameters to fit GPU hardware by providing preset configurations, auto-provisions GPU nodes based on model requirements, and hosts large model images in the public Microsoft Container Registry (MCR) if the license allows. Using Kaito, the workflow of onboarding large AI inference models in Kubernetes is largely simplified.

PyRIT

PyRIT is an open access automation framework designed to empower security professionals and ML engineers to red team foundation models and their applications. It automates AI Red Teaming tasks to allow operators to focus on more complicated and time-consuming tasks and can also identify security harms such as misuse (e.g., malware generation, jailbreaking), and privacy harms (e.g., identity theft). The goal is to allow researchers to have a baseline of how well their model and entire inference pipeline is doing against different harm categories and to be able to compare that baseline to future iterations of their model. This allows them to have empirical data on how well their model is doing today, and detect any degradation of performance based on future improvements.

tabby

Tabby is a self-hosted AI coding assistant, offering an open-source and on-premises alternative to GitHub Copilot. It boasts several key features: * Self-contained, with no need for a DBMS or cloud service. * OpenAPI interface, easy to integrate with existing infrastructure (e.g Cloud IDE). * Supports consumer-grade GPUs.

spear

SPEAR (Simulator for Photorealistic Embodied AI Research) is a powerful tool for training embodied agents. It features 300 unique virtual indoor environments with 2,566 unique rooms and 17,234 unique objects that can be manipulated individually. Each environment is designed by a professional artist and features detailed geometry, photorealistic materials, and a unique floor plan and object layout. SPEAR is implemented as Unreal Engine assets and provides an OpenAI Gym interface for interacting with the environments via Python.

Magick

Magick is a groundbreaking visual AIDE (Artificial Intelligence Development Environment) for no-code data pipelines and multimodal agents. Magick can connect to other services and comes with nodes and templates well-suited for intelligent agents, chatbots, complex reasoning systems and realistic characters.