LLMs

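Focused on Chinese-domain large language models deployed in a specific industry or domain, becoming an industry model or a company-level or industry-level domain model.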

Stars: 97

LLMs is a practical technology stack for Chinese large language models. It includes highly available code frameworks for pre-training, SFT and DPO preference alignment. The repository covers pre-training data cleaning, a high-concurrency framework, SFT dataset cleaning, data quality improvement, and safety alignment for Chinese large language models. It also provides open-source SFT dataset construction, pre-training from scratch, and various tools and frameworks for data cleaning, quality optimization and task alignment.

README:

coming soon...

A viewpoint worth sharing: "Without a well thought-out data model, organizations will be constantly fighting short-term data engineering problems and connecting the business needs with data requirements will be extremely difficult."

The source web page requires a paid account, so it is not cited here.

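The data production pipeline is the full process that turns raw data into trainable data. It includes raw data selection, data cleaning, data embedding, quality scoring, difficulty scoring, data tagging and similar steps. These features are used to stratify the dataset into tiers, and the SFT training set is selected from those tiers. Seeds for preference data are also drawn from them. Dozens of models then generate responses to those seeds, preference relations are constructed among the responses, and the result is the training data for preference alignment.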
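The stratify-then-select step can be pictured with a minimal sketch. It assumes each sample already carries a quality score and a difficulty score in [0, 1]. The `Sample` class, the cut-off values, the tier names, and the choice to draw preference seeds from the hard tier are all hypothetical illustrations, not the repository's actual implementation.

```python
from dataclasses import dataclass

@dataclass
class Sample:
    text: str
    quality: float     # hypothetical quality score in [0, 1]
    difficulty: float  # hypothetical difficulty score in [0, 1]

def tier_of(sample, q_cut=0.7, d_cut=0.5):
    """Map a sample to a tier; the cut-offs are illustrative, not the author's values."""
    if sample.quality < q_cut:
        return "low_quality"
    return "hard" if sample.difficulty >= d_cut else "easy"

def stratify(samples):
    """Group samples into tiers by their quality and difficulty scores."""
    tiers = {"low_quality": [], "easy": [], "hard": []}
    for s in samples:
        tiers[tier_of(s)].append(s)
    return tiers

def select_for_sft(tiers, max_easy=None):
    """Keep all hard high-quality samples plus (optionally capped) easy ones."""
    easy = tiers["easy"] if max_easy is None else tiers["easy"][:max_easy]
    return tiers["hard"] + easy

data = [Sample("a", 0.9, 0.8), Sample("b", 0.9, 0.2), Sample("c", 0.3, 0.9)]
tiers = stratify(data)
sft_set = select_for_sft(tiers)
preference_seeds = tiers["hard"]  # assumption: preference seeds come from the hard tier
```

Low-quality samples are simply dropped here. A real pipeline might also balance tiers by tag or cluster.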

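The training scheme chains SFT and DPO: each round of SFT is immediately followed by a round of DPO, and this is iterated for three rounds. Every round adds new data and constructs harder preference data.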
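The alternating schedule can be written as a small driver loop. This is a minimal sketch under stated assumptions. `sft_step`, `build_prefs` and `dpo_step` are hypothetical caller-supplied callables. Accumulating data across rounds, rather than training only on each round's new data, is also an assumption.

```python
def run_sft_dpo_rounds(model, new_data_per_round, sft_step, build_prefs, dpo_step):
    """Alternate one SFT round and one DPO round, adding new data each round.

    sft_step, build_prefs and dpo_step are hypothetical caller-supplied callables.
    """
    pool = []
    history = []
    for round_idx, new_data in enumerate(new_data_per_round, start=1):
        pool.extend(new_data)                        # assumption: data accumulates
        model = sft_step(model, pool)                # SFT on the current data pool
        prefs = build_prefs(model, pool, round_idx)  # round_idx can raise difficulty
        model = dpo_step(model, prefs)               # DPO immediately after SFT
        history.append((round_idx, len(pool), len(prefs)))
    return model, history

# Usage with stub callables that just record what happened.
model, history = run_sft_dpo_rounds(
    model=[],
    new_data_per_round=[["a"], ["b", "c"], ["d"]],  # three rounds
    sft_step=lambda m, data: m + [f"sft:{len(data)}"],
    build_prefs=lambda m, data, r: [(x, r) for x in data],
    dpo_step=lambda m, prefs: m + [f"dpo:{len(prefs)}"],
)
```

Passing the round index to `build_prefs` is one way to let later rounds construct harder preference pairs.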

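Pre-training, continual pre-training and SFT have been run in practice on 36 A100 machines (288 GPUs), with training on 1.5T tokens. Open-sourcing is pending...

Repository: https://github.com/xubuvd/PhasedSFT

The code has been stress-tested with large-scale SFT on 16 A100, A800 and H100 machines, on up to 2 million samples. Models already trained with it include Llama1, Llama2, Llama3, Qwen1, Qwen1.5, Qwen2 and Mistral. It is stable and usable.

Location: codes_datasets/Postraining_dpo

For usage, see codes_datasets/Postraining_dpo/README.md

The code has been used for training on 16 A100, A800 and H100 machines, with up to 500K DPO preference samples. It is stable and usable.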

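Cleaning of large-scale pre-training datasets, designed for readability and usability, with Python multi-threaded concurrency. It includes a set of cleaning engineering standards, cleaning strategies, a multi-threaded framework, text deduplication code, blacklist dictionaries and more.

2024.08.13: part of the heuristic-rule-based, multi-threaded Python cleaning code has been open-sourced, with good reusability and usability: codes_datasets/DataCleaning

The single entry-point script for the cleaning code: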

nohup bash run_data_cleaning.sh > r.log 2>&1 &

Script to stop the program:

bash stopall.sh
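The cleaning approach described above combines heuristic rules, a blacklist, a thread pool and deduplication. This sketch shows how those pieces fit together. It is not the code in codes_datasets/DataCleaning. The blacklist terms, the `min_chars` threshold and the MD5-based exact-duplicate check are illustrative assumptions.

```python
import hashlib
import re
from concurrent.futures import ThreadPoolExecutor

# Hypothetical blacklist; the repo's real blacklist dictionaries are not reproduced here.
BLACKLIST = {"赌博", "色情"}

def clean_doc(text, min_chars=20):
    """Apply simple heuristic rules; return the cleaned text, or None to drop it."""
    text = re.sub(r"\s+", " ", text).strip()     # collapse runs of whitespace
    if len(text) < min_chars:                    # too short to be a useful passage
        return None
    if any(word in text for word in BLACKLIST):  # contains a blacklisted term
        return None
    return text

def clean_corpus(docs, workers=8):
    """Clean documents on a thread pool, then drop exact duplicates by MD5 digest."""
    with ThreadPoolExecutor(max_workers=workers) as pool:
        cleaned = list(pool.map(clean_doc, docs))  # map keeps input order
    seen, kept = set(), []
    for doc in cleaned:
        if doc is None:
            continue
        digest = hashlib.md5(doc.encode("utf-8")).hexdigest()
        if digest not in seen:
            seen.add(digest)
            kept.append(doc)
    return kept

docs = [
    "这是一段足够长的中文文本，用于演示清洗流程。" * 2,
    "这是一段足够长的中文文本，用于演示清洗流程。" * 2,  # exact duplicate
    "太短",
    "这段文本提到赌博，因此应当被黑名单规则过滤掉。",
]
result = clean_corpus(docs)
```

Python threads share the GIL, so purely CPU-bound rules gain little from a thread pool. Swapping in `ProcessPoolExecutor` is the usual alternative when cleaning is compute-heavy rather than I/O-heavy.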

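🍂step1: Data cleaning (the pre-training data cleaning code can also be reused)

🍂step2: Data quality improvement

1. Instruction quality scoring

2. Instruction difficulty scoring

3. Clustering + semantic deduplication

The code is being organized; open-sourcing is pending...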
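Step 3, clustering plus semantic deduplication, is commonly done by comparing embeddings within each cluster. This is a minimal pure-Python sketch of that idea, not the author's pending code. The toy embeddings, the cluster labels and the 0.95 cosine threshold are hypothetical. In practice the embeddings would come from an embedding model and the labels from a clustering step such as k-means.

```python
import math
from collections import defaultdict

def cosine(u, v):
    """Cosine similarity between two equal-length vectors (0.0 if either is zero)."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    return dot / (norm_u * norm_v) if norm_u and norm_v else 0.0

def greedy_dedup(items, threshold):
    """Keep an item only if its similarity to every kept item is below threshold."""
    kept = []
    for text, emb in items:
        if all(cosine(emb, kept_emb) < threshold for _, kept_emb in kept):
            kept.append((text, emb))
    return [text for text, _ in kept]

def dedup_by_cluster(items, labels, threshold=0.95):
    """Run greedy dedup separately inside each cluster to avoid all-pairs comparison."""
    clusters = defaultdict(list)
    for item, label in zip(items, labels):
        clusters[label].append(item)
    result = []
    for label in sorted(clusters):
        result.extend(greedy_dedup(clusters[label], threshold))
    return result

# Toy embeddings; real ones would come from an embedding model.
items = [
    ("如何学习Python?", [1.0, 0.0, 0.1]),
    ("怎样学Python?", [0.99, 0.0, 0.12]),
    ("今天天气如何?", [0.0, 1.0, 0.0]),
]
labels = [0, 0, 1]  # hypothetical cluster ids
unique = dedup_by_cluster(items, labels)
```

Restricting comparisons to a cluster trades a small chance of missing cross-cluster near-duplicates for a large reduction in pairwise comparisons.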

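Safety instruction dataset (1K samples): codes_datasets/SFTData/cn-Safety-Prompts-gpt12k_baichuan1K.jsonl

Chinese safety instruction dataset (1K samples), covering fraud, deception, personal safety, personal attacks, discriminatory and hateful speech, pornography, gambling, drugs and similar categories: codes_datasets/SFTData/cn-Chinese-harmlessness-1K.jsonl

An open-source, very large high-school exam instruction dataset: 1 million Chinese instruction samples covering Chinese, mathematics, physics, chemistry, geography, history, politics and English.

Uploaded: cn-sft-exams-highSchool-1k.jsonl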

Instruction format: {"id": "26069", "data": ["question", "answer"]}
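Records in this format can be read line by line and converted into prompt/response pairs for SFT. This is a minimal sketch. The output field names `prompt` and `response` are a hypothetical target schema. It also assumes `data` always holds exactly two elements, question then answer.

```python
import json

def parse_qa_lines(lines):
    """Parse JSONL lines shaped like {"id": "...", "data": ["question", "answer"]}."""
    records = []
    for line in lines:
        line = line.strip()
        if not line:                   # skip blank lines
            continue
        obj = json.loads(line)
        question, answer = obj["data"]  # assumes exactly two elements
        records.append({"id": obj["id"], "prompt": question, "response": answer})
    return records

def load_qa_jsonl(path):
    """Read a .jsonl file in the format above."""
    with open(path, encoding="utf-8") as f:
        return parse_qa_lines(f)

records = parse_qa_lines(['{"id": "26069", "data": ["1+1=?", "2"]}', ""])
```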

Data quality and diversity are the two sources of model capability.

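Before optimization: [figure: data distribution]

After optimization: [figure: data distribution]

Code that generates the data-distribution plots above: codes_datasets/utils/tsne_cluster_show.py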

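30,000 civil-service aptitude test (行测) questions in total: logical reasoning problems intended to improve the model's logical reasoning ability.

Uploaded: cn-exam-high-school-5W.jsonl.zip

Instruction format: {"id": "26069", "data": ["question", "answer"]}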

Yes, there is one:

Pre-training framework: built on DeepSpeed + Hugging Face Trainer

Model architecture: LLaMA.

@online{CnSftData,
  author = {XuBu},
  year = {2024},
  title = {Chinese Large Language Models},
  url = {https://github.com/xubuvd/LLMs},
  month = {APR},
  lastaccessed = {APR 19, 2024}
}

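SFT: uses the pre-training framework from the previous step. DeepSpeedChat was dropped because it does not support training on large-scale data and has many problems.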

RLHF framework: training uses an optimized version of DeepSpeedChat.
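Reward-model training in RLHF usually consumes pairwise comparisons. A human ranking of several answers can be expanded into (chosen, rejected) pairs. An example is the four-answer scheme B>A>D>C mentioned in the chatglm-6B_finetuning row of the project table. The sketch below expands every ordered pair from a ranking, as InstructGPT did with its K-choose-2 comparisons. How this repository builds its own pairs is not stated, so treat this as an illustration.

```python
from itertools import combinations

def ranking_to_pairs(ranked):
    """Expand a best-to-worst ranking into (chosen, rejected) pairs.

    A ranking of K answers yields K * (K - 1) / 2 pairs.
    """
    return list(combinations(ranked, 2))  # earlier item is always the preferred one

pairs = ranking_to_pairs(["B", "A", "D", "C"])  # human ranking B > A > D > C
```

The same (chosen, rejected) structure is what DPO consumes directly, without a separate reward model.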

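DPO is very effective. On a 30K-sample preference dataset, tests with 7B, 13B and 70B models showed significant gains.

Compared with the SFT model, the DPO version improves the win rate by more than 10 percentage points, and the gain in human-perceived quality is also clear. Specifically, business accuracy improves by 14.6 percentage points and F1 by 13.5 percentage points over the SFT model.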
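For reference, the per-pair DPO objective is $-\log\sigma\big(\beta[(\log\pi_\theta(y_w) - \log\pi_{\text{ref}}(y_w)) - (\log\pi_\theta(y_l) - \log\pi_{\text{ref}}(y_l))]\big)$. Here $y_w$ is the preferred response and $y_l$ the rejected one. The sketch below computes it from summed sequence log-probabilities. The value $\beta = 0.1$ is only an illustrative default; the training code in codes_datasets/Postraining_dpo may use different settings.

```python
import math

def softplus(x):
    """Numerically stable log(1 + exp(x))."""
    return max(x, 0.0) + math.log1p(math.exp(-abs(x)))

def dpo_loss(policy_chosen_logp, policy_rejected_logp,
             ref_chosen_logp, ref_rejected_logp, beta=0.1):
    """DPO loss for one preference pair, given summed sequence log-probabilities."""
    chosen_logratio = policy_chosen_logp - ref_chosen_logp
    rejected_logratio = policy_rejected_logp - ref_rejected_logp
    margin = beta * (chosen_logratio - rejected_logratio)
    return softplus(-margin)  # -log(sigmoid(m)) == softplus(-m)

# When the policy equals the reference, the margin is 0 and the loss is log 2.
loss_init = dpo_loss(-12.0, -15.0, -12.0, -15.0)
# Raising the chosen response's likelihood and lowering the rejected one reduces the loss.
loss_better = dpo_loss(-10.0, -17.0, -12.0, -15.0)
```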

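Data collection and cleaning are critically important to the final quality of a large language model (LLM).

Data cleaning needs a methodology. Pre-training data has three key criteria: high quality, diversity and large volume.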

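- A long passage of natural-language text should be grammatically coherent and fluent, with no irrelevant words or sentences inserted, and semantically complete;
- Punctuation follows standard writing conventions;
- Coverage of knowledge across general domains and academic disciplines;
- Build a comprehensive "industry domain knowledge system";
- Integrate all of the above sources to ensure broad knowledge coverage;
- Total volume
  - The total pre-training data volume is large: about 1 to 2T tokens, counted with the LLaMA tokenizer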


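- Proportions
  - In terms of diversity, every data type is represented, and their relative sizes roughly follow the natural distribution of data on the internet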

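Following the natural distribution of sources can be turned into a concrete token budget per source. This is a minimal sketch of proportional allocation. The source names and sizes are hypothetical. The 1.5T budget is just one point in the 1 to 2T range above.

```python
def allocate_tokens(source_tokens, total_budget):
    """Split a token budget across sources in proportion to their natural size.

    Integer truncation may leave a few tokens unallocated.
    """
    total = sum(source_tokens.values())
    return {name: int(total_budget * n / total) for name, n in source_tokens.items()}

# Hypothetical source sizes (billions of tokens) and a 1.5T-token budget.
sizes = {"web": 600, "books": 300, "code": 100}
plan = allocate_tokens(sizes, total_budget=1500)
```

Pure proportional allocation is the simplest choice. Many pre-training recipes deliberately up-sample small, high-quality sources instead.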

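| No. | Project | URL | Comments |
|---|---|---|---|
| 1 | LLaMA | https://github.com/facebookresearch/llama | Structure of a transformer block: [figure] |
| 2 | OpenChatKit | https://github.com/togethercomputer/OpenChatKit | Fine-tuned version of GPT-NeoX-20B: 20 billion parameters, 48 layers, trained on a single node with 8 GPUs (6 layers per GPU). Per-layer structure: [figure] |
| 3 | Open-Assistant | https://github.com/LAION-AI/Open-Assistant | Two versions, 12B and LLaMA-7B. Open Assistant end-to-end training details (GPT-3 + RL): https://zhuanlan.zhihu.com/p/609003237 |
| 4 | ChatGLM-6B | https://github.com/THUDM/ChatGLM-6B | ChatGLM-6B is an open-source bilingual (Chinese-English) dialogue language model based on the General Language Model (GLM) architecture, with 6.2 billion parameters. It is a good base model for further development in specific vertical domains. The source code is not released; only the trained model is. |
| 5 | GLM-130B | https://github.com/THUDM/GLM-130B/ | A 130-billion-parameter Chinese/English model. The source code is not released; only the trained model is. |
| 6 | Alpaca 7B | https://crfm.stanford.edu/2023/03/13/alpaca.html | A Strong Open-Source Instruction-Following Model: a model fine-tuned from the LLaMA 7B model on 52K instruction-following demonstrations. |
| 7 | Claude | Trial access by registering with an email address. Product: https://www.anthropic.com/product; application: https://www.anthropic.com/earlyaccess; API docs: https://console.anthropic.com/docs/api | Two versions: Claude and Claude Instant. Claude is the state-of-the-art high-performance model, while Claude Instant is a lighter, cheaper and faster option. |
| 8 | LLama/ChatLLama | https://github.com/nebuly-ai/nebullvm/tree/main/apps/accelerate/chatllama | Poor Chinese support; includes a complete SFT and RLHF training pipeline |
| 9 | chatglm-6B_finetuning | https://github.com/ssbuild/chatglm_finetuning | 1. A fine-tuned version of ChatGLM-6B; RLHF code is being added and released gradually. 28 layers; per-layer structure: [figure]. 2. Two fine-tuning methods, LoRA and full SFT: 28 layers, 5K instruction samples, single node with 8× A100 (80 GB), batch size 8, 1 or 2 epochs (with repetitive-generation issues), finishing in about 20 minutes. 3. Reuses the reinforcement-learning code (PPO, PPO-ptx) from Colossal-AI/Open-Assistant; the Colossal-AI code can be ported over and has been tried in practice. 4. There are many options for the reward model; one can fine-tune a reward model directly from GLM-6B. The hard part is the training data: GPT-3.5 used 33K human-annotated samples to train its reward model. Each question gets four answers A, B, C and D, which annotators rank from best to worst (e.g. B>A>D>C), and the ranked data is used to fine-tune the reward model. On a single node with 8× A100 (80 GB), train_batch_size=4 and max_seq_len=512 were needed to run a 50K-sample fine-tuning set. This code seems questionable and leaves a lot to optimize. |
| 10 | ChatGLM-Tuning | https://github.com/mymusise/ChatGLM-Tuning | Another fine-tuned version of ChatGLM-6B |
| 11 | Luotuo (骆驼), a Chinese language model | https://github.com/LC1332/Chinese-alpaca-lora | Built on LLaMA, Stanford Alpaca, Alpaca LoRA, Japanese-Alpaca-LoRA and others; training and deployment fit on a single GPU |
| 12 | Alpaca-CoT dataset | https://github.com/PhoebusSi/Alpaca-CoT | Chain-of-thought (CoT) dataset for improving LLM reasoning |
| 13 | Bloom | https://huggingface.co/bigscience/bloom | Fairly complete training setup and code |
| 14 | Chinese LLaMA & Alpaca | https://github.com/ymcui/Chinese-LLaMA-Alpaca | Extends the vocabulary of the original LLaMA (7B and 13B) with Chinese tokens and continues pre-training on Chinese data, further improving basic Chinese semantic understanding. On top of Chinese LLaMA, the project also instruction-tunes on Chinese instruction data, significantly improving the model's ability to understand and follow instructions. |
| 15 | Colossal-AI/ColossalChat | https://github.com/hpcaitech/ColossalAI | Fairly complete training setup and code, including RLHF training code; uses LLaMA as the pre-trained base model; 7B and 13B models released |
| 16 | Cerebras-GPT (seven sizes) | Official site: https://www.cerebras.net/blog/cerebras-gpt-a-family-of-open-compute-efficient-large-language-models; GPT page: https://www.cerebras.net/cerebras-gpt; Hugging Face: https://huggingface.co/cerebras | Seven sizes: 111M, 256M, 590M, 1.3B, 2.7B, 6.7B and 13B parameters; GPT-based generative large language models |
| 17 | BloombergGPT (finance) | https://arxiv.org/abs/2303.17564 | The training corpus, FINPILE, is the largest domain-specific dataset built to date. It consists of English financial information: news, filings, press releases, web-crawled financial documents and social media extracts. A 50-billion-parameter language model trained specifically for finance. |
| 18 | dolly-v1-6b | https://github.com/databrickslabs/dolly | 1. Fine-tuned on ~52K instructions (Self-Instruct, generated automatically from ChatGPT); 2. accelerated training with DeepSpeed ZeRO-3; 3. worth borrowing: the DeepSpeed ZeRO-3 acceleration part |
| 19 | ChatDoctor | https://github.com/Kent0n-Li/ChatDoctor | Medical dialogue model fine-tuned from LLaMA-7B in four rounds. Round 1: Alpaca's 52K instruction-following data. Round 2: a 5K patient-doctor dialogue dataset (GenMedGPT-5k, generated with ChatGPT and a disease database). Round 3: real patient-doctor dialogues (HealthCareMagic-200k). Round 4: real patient-doctor dialogues (icliniq-26k). |
| 20 | BELLE, open-source Chinese dialogue model | https://github.com/LianjiaTech/BELLE | BELLE-7B (fine-tuned from BLOOMZ-7B1-mt); BELLE-13B feels decent |
| 21 | InstructGLM | https://github.com/yanqiangmiffy/InstructGLM | Fine-tuned on instruction datasets with ChatGLM-6B + LoRA. As of the afternoon of April 4, InstructGLM had these drawbacks: no multi-GPU support (confirmed by the author in an issue reply); an inactive community, with no code updates for two weeks and very few reported issues (only 11); no DeepSpeed. Running it on three GPUs, the load was unbalanced: [figure] |
| 22 | Cerebras-GPT | https://huggingface.co/cerebras/Cerebras-GPT-13B | 13 billion parameters, comparable in size to Meta's recently released LLaMA-13B. Dataset, model weights and compute-optimal training are all open source, and commercial use is permitted. |
| 23 | Baize (University of California; fine-tuned from LLaMA) | https://github.com/project-baize/baize-chatbot | Dataset generation: ChatGPT chats with itself, simulating both the user and the AI assistant. The generated corpus is a valuable resource for training and evaluating chat models in multi-turn dialogue. By specifying a seed dataset, one can also sample from a particular domain and fine-tune a chat model specialized for it, e.g. healthcare or finance. Parameter-efficient tuning: maximum input length 512, LoRA rank k = 8, 8-bit integer (int8) format, Adam optimizer updating the LoRA parameters, batch size 64, learning rates 2e-4, 1e-4 and 5e-5. The trainable LoRA parameters are fine-tuned for 1 epoch on NVIDIA A100-80GB GPUs. |
| 24 | Open-Llama | https://github.com/s-JoL/Open-Llama | Open-Llama is an open-source project that provides a complete training pipeline for building large language models, from dataset preparation to tokenization, pre-training, instruction tuning and RLHF. Evaluated against GPT-3.5 with the same method as the FastChat project, it reaches 84% of GPT-3.5's level on Chinese questions. |
| 25 | DeepSpeed-Chat | https://github.com/microsoft/DeepSpeed/tree/master/blogs/deepspeed-chat https://github.com/microsoft/DeepSpeedExamples | Fine-tuning framework covering instruction fine-tuning (SFT), reward modeling and reinforcement learning for intent alignment (RLHF) |
| 26 | fairseq | https://github.com/facebookresearch/fairseq | Facebook's open-source pre-training framework for large language models |
| 27 | metaseq | https://github.com/facebookresearch/metaseq | Facebook's open-source framework for pre-training large language models; a newer codebase based on fairseq |
| 28 | MiniGPT-4 | https://github.com/Vision-CAIR/MiniGPT-4 | Multimodal model based on BLIP-2 and Vicuna (LLaMA-7B base), from King Abdullah University of Science and Technology (KAUST) |
| 29 | MOSS (Fudan University) | https://github.com/OpenLMLab/MOSS https://huggingface.co/models?other=moss | moss-13B has been open-sourced; its key contribution is providing a pure base model |
| 30 | RedPajama | Dataset: https://huggingface.co/datasets/togethercomputer/RedPajama-Data-1T; preprocessing repo: https://github.com/togethercomputer/RedPajama-Data | The RedPajama project has three parts: 1. a high-quality, large-scale, high-coverage pre-training dataset; 2. base models trained on that dataset; 3. instruction-tuning datasets and models that are safer and more reliable than the base models. It is an open initiative by Ontocord.AI, ETH Zurich's DS3Lab, Stanford CRFM, Stanford Hazy Research and Mila (Montreal Institute for Learning Algorithms). It aims to produce reproducible, fully open, state-of-the-art language models, open-sourced all the way from scratch to a ChatGPT-style model. |
| 31 | Panda, a Chinese open-source LLM | https://github.com/dandelionsllm/pandallm | Continual pre-training on Chinese data starting from Llama-7B, -13B, -33B and -65B. It performs strongly on Chinese benchmarks, well ahead of comparable Chinese language models. Panda's models and Chinese training data will be released as open source, free for anyone to use and contribute to. |
| 32 | BELLE (LLaMA, Lianjia) | https://github.com/LianjiaTech/BELLE | BELLE-LLaMA-EXT-13B: extends LLaMA-13B with a Chinese vocabulary and trains on 4 million high-quality dialogue samples. |
| 33 | Linly-Chinese-LLaMA | https://github.com/CVI-SZU/Linly | Chinese continued pre-training on LLaMA-7B/13B, context length 2048 |
| 34 | CAMEL | https://github.com/camel-ai/camel | https://www.camel-ai.org/chat |
| 35 | Ziya-LLaMA-13B-v1 | https://huggingface.co/IDEA-CCNL/Ziya-LLaMA-13B-v1 | Ziya (姜子牙) general model V1 is a 13-billion-parameter large-scale pre-trained model based on LLaMA |
| 36 | Aquila (悟道·天鹰) | https://github.com/FlagAI-Open/FlagAI/tree/master/examples/Aquila/Aquila-chat | 1. ZeRO-2 throughput: about 251 TFLOPS; 2. ZeRO-2 vs. ZeRO-3 comparison: see the table below |

| Model | ZeRO stage | GPUs | Batch size | Seq len | GPU memory | GPU utilization | Iter time | TFLOPS | TGS (tokens per GPU per second) |
|---|---|---|---|---|---|---|---|---|---|
| LLaMA-7B | ZeRO-2 | 2 | 50 | 2048 | 90% | 60% | 25 s | 250 | 4096 |
| LLaMA-7B | ZeRO-3 | 2 | 76 | 2048 | 97% | 80% | 30 s | 300 | 5188 |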
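The TGS column in the ZeRO-2 vs. ZeRO-3 table above matches batch size × sequence length ÷ iteration time. This suggests that "Bs" is the per-GPU batch size; that reading is an inference, not something the table states. A small helper reproduces both rows.

```python
def tokens_per_gpu_per_second(batch_size, seq_len, iter_seconds):
    """TGS, assuming batch_size is the per-GPU batch and iter_seconds is one step."""
    return batch_size * seq_len / iter_seconds

zero2 = tokens_per_gpu_per_second(50, 2048, 25)  # 4096.0
zero3 = tokens_per_gpu_per_second(76, 2048, 30)  # about 5188.3
```

Under that reading, ZeRO-3's larger per-GPU batch (76 vs. 50) more than offsets its slower step (30 s vs. 25 s), which is where its roughly 27% higher TGS comes from.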


Alternative AI tools for LLMs

Similar Open Source Tools


awesome-ai-painting

This repository, named 'awesome-ai-painting', is a comprehensive collection of resources related to AI painting. It is curated by a user named 秋风, who is an AI painting enthusiast with a background in the AIGC industry. The repository aims to help more people learn AI painting and also documents the user's goal of creating 100 AI products, with current progress at 4/100. The repository includes information on various AI painting products, tutorials, tools, and models, providing a valuable resource for individuals interested in AI painting and related technologies.

Firefly

Firefly is an open-source large model training project that supports pre-training, fine-tuning, and DPO of mainstream large models. It includes models like Llama3, Gemma, Qwen1.5, MiniCPM, Llama, InternLM, Baichuan, ChatGLM, Yi, Deepseek, Qwen, Orion, Ziya, Xverse, Mistral, Mixtral-8x7B, Zephyr, Vicuna, Bloom, etc. The project supports full-parameter training, LoRA, QLoRA efficient training, and various tasks such as pre-training, SFT, and DPO. Suitable for users with limited training resources, QLoRA is recommended for fine-tuning instructions. The project has achieved good results on the Open LLM Leaderboard with QLoRA training process validation. The latest version has significant updates and adaptations for different chat model templates.

TigerBot

TigerBot is a cutting-edge foundation for building your own LLM, providing a world-class large model for innovative Chinese-language applications. It offers various upgrades and features, such as an enhanced search mode, support for long context lengths, and the ability to play text-based games. TigerBot is suitable for prompt-based game engine development, interactive game design, and real-time feedback for playable games.

crazyai-ml

The 'crazyai-ml' repository is a collection of resources related to machine learning, specifically focusing on explaining artificial intelligence models. It includes articles, code snippets, and tutorials covering various machine learning algorithms, data analysis, model training, and deployment. The content aims to provide a comprehensive guide for beginners in the field of AI, offering practical implementations and insights into popular machine learning packages and model tuning techniques. The repository also addresses the integration of AI models and frontend-backend concepts, making it a valuable resource for individuals interested in AI applications.

AstrBot

AstrBot is a powerful and versatile tool that leverages the capabilities of large language models (LLMs) like GPT-3, GPT-3.5, and GPT-4 to enhance communication and automate tasks. It seamlessly integrates with popular messaging platforms such as QQ, QQ Channel, and Telegram, enabling users to harness the power of AI within their daily conversations and workflows.

Llama-Chinese

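The Llama Chinese community is an advanced technical community focused on optimizing Llama models for Chinese and building on top of them. **Llama2's Chinese capability has been continuously upgraded, starting from pre-training on large-scale Chinese data [Done].** **Continuous upgrading of Llama3's Chinese capability is under way [Doing].** We warmly welcome developers and researchers who are passionate about large language models to join us.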

chat-master

ChatMASTER is a self-hosted backend conversation service built on large-model APIs, supporting synchronous and streaming responses with a smooth typewriter-style output effect. It supports switching between mainstream models such as DeepSeek, Kimi, Doubao, OpenAI, Claude3, Yiyan, Tongyi, Xinghuo, ChatGLM, Shusheng and more. It also supports loading local models and knowledge bases using Ollama and Langchain, as well as online API interfaces like Coze and Gitee AI. The project includes a Java server side, a web client, a mobile client and an admin console for configuration. It provides various assistant types for prompt output and allows custom assistant templates to be created in the admin console. The project uses Spring Boot, Spring Security + JWT, Mybatis-Plus, Lombok, MySQL and Redis. The code is easy to understand, and a JWT-based authentication system provides comprehensive permission control across multiple clients.

MedicalGPT

MedicalGPT trains a medical GPT model using the ChatGPT training pipeline, implementing pre-training, supervised fine-tuning, RLHF (reward modeling and reinforcement learning) and DPO (Direct Preference Optimization).

Chinese-Mixtral-8x7B

Chinese-Mixtral-8x7B is an open-source project that expands the vocabulary of Mistral's Mixtral-8x7B model with Chinese tokens and performs incremental pre-training, aiming to advance research on MoE models in the Chinese natural language processing community. The expanded vocabulary significantly improves the model's encoding and decoding efficiency for Chinese. Incremental pre-training on a large-scale open-source corpus gives the model strong Chinese generation and comprehension capabilities. The project includes the model with the expanded Chinese vocabulary and the incremental pre-training code.

MEGREZ

MEGREZ is a modern and elegant open-source high-performance computing platform that efficiently manages GPU resources. It allows easy creation of container instances and supports multi-node/multi-GPU setups, a modern UI, environment isolation, customizable performance configurations and user data isolation. The platform also comes with pre-installed deep learning environments, supports multiple users, and features a VSCode web version, a resource performance monitoring dashboard, and Jupyter Notebook support.

MiniCPM

MiniCPM is a series of open-source on-device large models jointly developed by ModelBest (面壁智能) and the Natural Language Processing Lab of Tsinghua University. The main language model, MiniCPM-2B, has only 2.4 billion (2.4B) non-embedding parameters and 2.7B parameters in total.
- After SFT, MiniCPM-2B performs similarly to Mistral-7B on public comprehensive benchmarks (better at Chinese, mathematics and code), and outperforms models such as Llama2-13B, MPT-30B and Falcon-40B overall.
- After DPO, MiniCPM-2B also surpasses many representative open-source models such as Llama2-70B-Chat, Vicuna-33B, Mistral-7B-Instruct-v0.1 and Zephyr-7B-alpha on MTBench, the current benchmark closest to real user experience.
- Built on MiniCPM-2B, the on-device multimodal model MiniCPM-V 2.0 achieves the best performance among models below 7B on multiple benchmarks. It surpasses larger models such as Qwen-VL-Chat 9.6B, CogVLM-Chat 17.4B and Yi-VL 34B on the OpenCompass leaderboard. MiniCPM-V 2.0 also shows leading OCR capability, approaching Gemini Pro in scene-text recognition.
- After Int4 quantization, MiniCPM can be deployed for inference on mobile phones, with streaming output slightly faster than human speech. MiniCPM-V also achieves end-to-end deployment of a multimodal large model on mobile phones.
- Parameter-efficient fine-tuning runs on a single 1080/2080, and full-parameter fine-tuning runs on a single 3090/4090. A single machine can continue training MiniCPM, so the cost of secondary development is relatively low.

MindChat

MindChat is a psychological large language model designed to help individuals relieve psychological stress and resolve mental confusion, ultimately improving mental health. It aims to provide a relaxed and open conversation environment in which users can build trust and understanding. MindChat offers private, warm, safe, timely and convenient conversations to help users overcome difficulties and challenges and achieve self-growth and development. The tool is suitable for both work and personal life scenarios, providing comprehensive psychological support and therapeutic assistance while strictly protecting user privacy. It combines psychological knowledge with artificial intelligence technology to contribute to a healthier, more inclusive and more equal society.

llms-from-scratch-cn

This repository provides a detailed tutorial on how to build your own large language model (LLM) from scratch. It includes all the code necessary to create a GPT-like LLM, covering the encoding, pre-training, and fine-tuning processes. The tutorial is written in a clear and concise style, with plenty of examples and illustrations to help you understand the concepts involved. It is suitable for developers and researchers with some programming experience who are interested in learning more about LLMs and how to build them.

Awesome-RL-for-LRMs

This repository contains a collection of awesome resources for reinforcement learning in language models. It includes tutorials, code implementations, research papers, and tools to help researchers and practitioners explore and apply reinforcement learning techniques in natural language processing tasks. Whether you are a beginner or an expert in the field, this repository aims to provide valuable insights and guidance to enhance your understanding and implementation of reinforcement learning in language models.

For similar tasks


Reflection_Tuning

Reflection-Tuning is a project focused on improving the quality of instruction-tuning data through a reflection-based method. It introduces Selective Reflection-Tuning, where the student model can decide whether to accept the improvements made by the teacher model. The project aims to generate high-quality instruction-response pairs by defining specific criteria for the oracle model to follow and respond to. It also evaluates the efficacy and relevance of instruction-response pairs using the r-IFD metric. The project provides code for reflection and selection processes, along with data and model weights for both V1 and V2 methods.

ProX

ProX is an LM-based data refinement framework that automates the cleaning and improvement of data used to pre-train large language models. It offers better performance, domain flexibility, efficiency and cost-effectiveness than traditional methods. The framework has been shown to improve model performance by over 2% and to boost accuracy by up to 20% in tasks like math. ProX is designed to refine data at scale without manual adjustments, making it a valuable tool for data preprocessing in natural language processing tasks.

ml-engineering

This repository provides a comprehensive collection of methodologies, tools, and step-by-step instructions for successful training of large language models (LLMs) and multi-modal models. It is a technical resource suitable for LLM/VLM training engineers and operators, containing numerous scripts and copy-n-paste commands to facilitate quick problem-solving. The repository is an ongoing compilation of the author's experiences training BLOOM-176B and IDEFICS-80B models, and currently focuses on the development and training of Retrieval Augmented Generation (RAG) models at Contextual.AI. The content is organized into six parts: Insights, Hardware, Orchestration, Training, Development, and Miscellaneous. It includes key comparison tables for high-end accelerators and networks, as well as shortcuts to frequently needed tools and guides. The repository is open to contributions and discussions, and is licensed under Attribution-ShareAlike 4.0 International.

distributed-llama

Distributed Llama is a tool that allows you to run large language models (LLMs) on weak devices or make powerful devices even more powerful by distributing the workload and dividing the RAM usage. It uses TCP sockets to synchronize the state of the neural network, and you can easily configure your AI cluster by using a home router. Distributed Llama supports models such as Llama 2 (7B, 13B, 70B) chat and non-chat versions, Llama 3, and Grok-1 (314B).

Awesome-LLMs-for-Video-Understanding

Awesome-LLMs-for-Video-Understanding is a repository dedicated to exploring Video Understanding with Large Language Models. It provides a comprehensive survey of the field, covering models, pretraining, instruction tuning, and hybrid methods. The repository also includes information on tasks, datasets, and benchmarks related to video understanding. Contributors are encouraged to add new papers, projects, and materials to enhance the repository.

Awesome-LLM

Awesome-LLM is a curated list of resources related to large language models, focusing on papers, projects, frameworks, tools, tutorials, courses, opinions, and other useful resources in the field. It covers trending LLM projects, milestone papers, other papers, open LLM projects, LLM training frameworks, LLM evaluation frameworks, tools for deploying LLM, prompting libraries & tools, tutorials, courses, books, and opinions. The repository provides a comprehensive overview of the latest advancements and resources in the field of large language models.

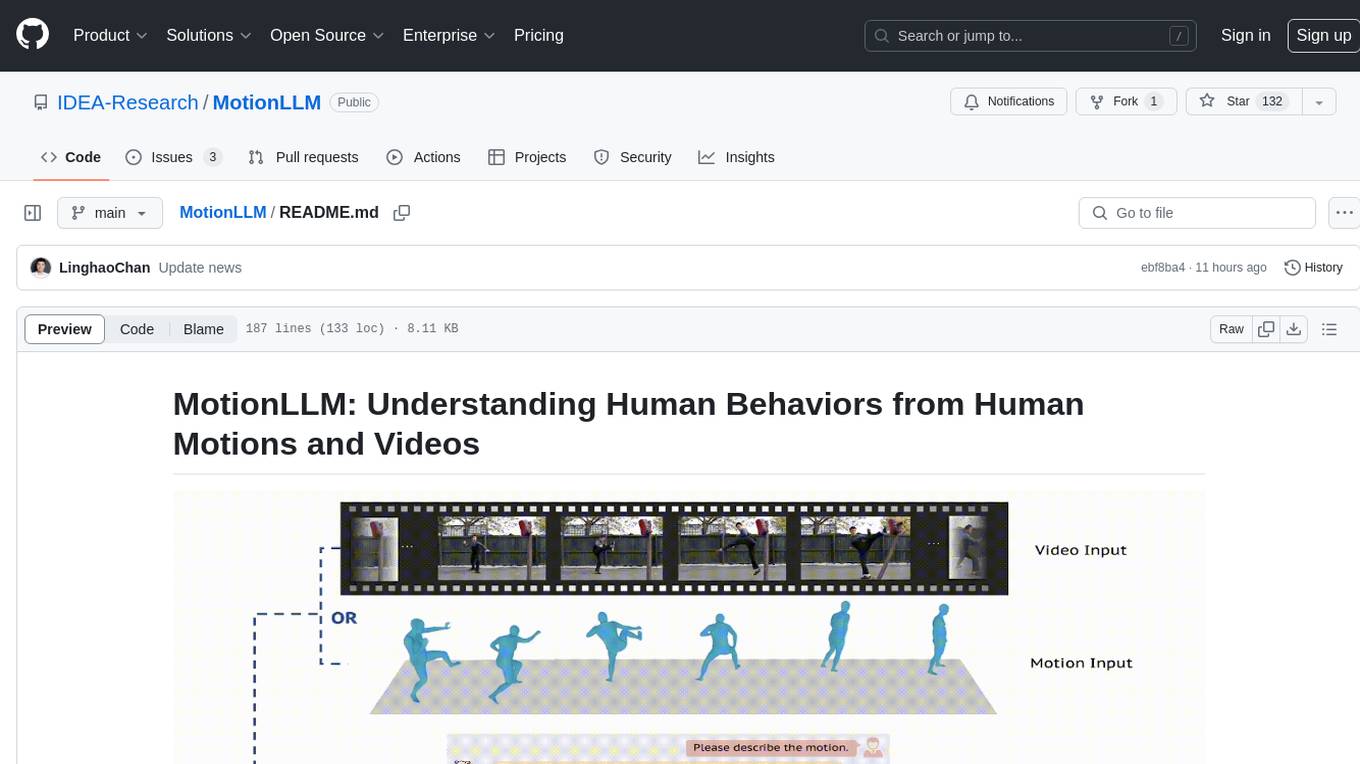

MotionLLM

MotionLLM is a framework for human behavior understanding that leverages Large Language Models (LLMs) to jointly model videos and motion sequences. It provides a unified training strategy, dataset MoVid, and MoVid-Bench for evaluating human behavior comprehension. The framework excels in captioning, spatial-temporal comprehension, and reasoning abilities.

For similar jobs

weave

Weave is a toolkit for developing Generative AI applications, built by Weights & Biases. With Weave, you can log and debug language model inputs, outputs, and traces; build rigorous, apples-to-apples evaluations for language model use cases; and organize all the information generated across the LLM workflow, from experimentation to evaluations to production. Weave aims to bring rigor, best-practices, and composability to the inherently experimental process of developing Generative AI software, without introducing cognitive overhead.

LLMStack

LLMStack is a no-code platform for building generative AI agents, workflows, and chatbots. It allows users to connect their own data, internal tools, and GPT-powered models without any coding experience. LLMStack can be deployed to the cloud or on-premise and can be accessed via HTTP API or triggered from Slack or Discord.

VisionCraft

The VisionCraft API is a free API for using over 100 different AI models, from images to sound.

kaito

Kaito is an operator that automates the AI/ML inference model deployment in a Kubernetes cluster. It manages large model files using container images, avoids tuning deployment parameters to fit GPU hardware by providing preset configurations, auto-provisions GPU nodes based on model requirements, and hosts large model images in the public Microsoft Container Registry (MCR) if the license allows. Using Kaito, the workflow of onboarding large AI inference models in Kubernetes is largely simplified.

PyRIT

PyRIT is an open access automation framework designed to empower security professionals and ML engineers to red team foundation models and their applications. It automates AI Red Teaming tasks to allow operators to focus on more complicated and time-consuming tasks and can also identify security harms such as misuse (e.g., malware generation, jailbreaking), and privacy harms (e.g., identity theft). The goal is to allow researchers to have a baseline of how well their model and entire inference pipeline is doing against different harm categories and to be able to compare that baseline to future iterations of their model. This allows them to have empirical data on how well their model is doing today, and detect any degradation of performance based on future improvements.

tabby

Tabby is a self-hosted AI coding assistant, offering an open-source and on-premises alternative to GitHub Copilot. Key features:
- Self-contained, with no need for a DBMS or cloud service.
- OpenAPI interface, easy to integrate with existing infrastructure (e.g. a cloud IDE).
- Supports consumer-grade GPUs.

spear

SPEAR (Simulator for Photorealistic Embodied AI Research) is a powerful tool for training embodied agents. It features 300 unique virtual indoor environments with 2,566 unique rooms and 17,234 unique objects that can be manipulated individually. Each environment is designed by a professional artist and features detailed geometry, photorealistic materials, and a unique floor plan and object layout. SPEAR is implemented as Unreal Engine assets and provides an OpenAI Gym interface for interacting with the environments via Python.

Magick

Magick is a groundbreaking visual AIDE (Artificial Intelligence Development Environment) for no-code data pipelines and multimodal agents. Magick can connect to other services and comes with nodes and templates well-suited for intelligent agents, chatbots, complex reasoning systems and realistic characters.