oumi

Easily fine-tune, evaluate and deploy gpt-oss, Qwen3, DeepSeek-R1, or any open source LLM / VLM!

Stars: 8853

Oumi is an open-source platform for building state-of-the-art foundation models, offering tools for data preparation, training, evaluation, and deployment. It supports training and fine-tuning models from 10M to 405B parameters, working with text and multimodal models, synthesizing and curating training data, deploying models with inference engines such as vLLM and SGLang, evaluating models across standard benchmarks, and running on laptops, clusters, and clouds. Oumi provides one consistent API, production-grade reliability, and flexibility for research.

README:

- [2026/02] Preview of using the Oumi Platform and Lambda to fine-tune and deploy a 4B model for user intent classification

- [2026/02] Lambda and Oumi partner for end-to-end custom model development

- [2025/12] Oumi v0.6.0 released with Python 3.13 support, the oumi analyze CLI command, TRL 0.26+ support, and more

- [2025/12] WeMakeDevs AI Agents Assemble Hackathon: Oumi webinar on Finetuning for Text-to-SQL

- [2025/12] Oumi co-sponsors WeMakeDevs AI Agents Assemble Hackathon with over 2000 project submissions

- [2025/11] Oumi v0.5.0 released with advanced data synthesis, hyperparameter tuning automation, support for OpenEnv, and more

- [2025/11] Example notebook to perform RLVF fine-tuning with OpenEnv, an open source library from the Meta PyTorch team for creating, deploying, and distributing agentic RL environments

- [2025/10] Oumi v0.4.1 and v0.4.2 released with support for Qwen3-VL and Transformers v4.56, data synthesis documentation and examples, and many bug fixes

- [2025/09] Oumi v0.4.0 released with DeepSpeed support, a Hugging Face Hub cache management tool, and KTO/Vision DPO trainer support

- [2025/08] Training and inference support for OpenAI's gpt-oss-20b and gpt-oss-120b: recipes here

- [2025/08] Aug 14 Webinar - OpenAI's gpt-oss: Separating the Substance from the Hype.

- [2025/08] Oumi v0.3.0 released with model quantization (AWQ), an improved LLM-as-a-Judge API, and Adaptive Inference

- [2025/07] Recipe for Qwen3 235B

- [2025/07] July 24 webinar: "Training a State-of-the-art Agent LLM with Oumi + Lambda"

- [2025/06] Oumi v0.2.0 released with support for GRPO fine-tuning, a plethora of new model support, and much more

- [2025/06] Announcement of Data Curation for Vision Language Models (DCVLR) competition at NeurIPS 2025

- [2025/06] Recipes for training, inference, and eval with the newly released Falcon-H1 and Falcon-E models

- [2025/05] Support and recipes for InternVL3 1B

- [2025/04] Added support for training and inference with Llama 4 models: Scout (17B activated, 109B total) and Maverick (17B activated, 400B total) variants, including full fine-tuning, LoRA, and QLoRA configurations

- [2025/04] Recipes for Qwen3 model family

- [2025/04] Introducing HallOumi: a State-of-the-Art Claim-Verification Model (technical overview)

- [2025/04] Oumi now supports two new Vision-Language models: Phi4 and Qwen 2.5

Oumi is a fully open-source platform that streamlines the entire lifecycle of foundation models - from data preparation and training to evaluation and deployment. Whether you're developing on a laptop, launching large scale experiments on a cluster, or deploying models in production, Oumi provides the tools and workflows you need.

With Oumi, you can:

- 🚀 Train and fine-tune models from 10M to 405B parameters using state-of-the-art techniques (SFT, LoRA, QLoRA, GRPO, and more)

- 🤖 Work with both text and multimodal models (Llama, DeepSeek, Qwen, Phi, and others)

- 🔄 Synthesize and curate training data with LLM judges

- ⚡️ Deploy models efficiently with popular inference engines (vLLM, SGLang)

- 📊 Evaluate models comprehensively across standard benchmarks

- 🌎 Run anywhere - from laptops to clusters to clouds (AWS, Azure, GCP, Lambda, and more)

- 🔌 Integrate with both open models and commercial APIs (OpenAI, Anthropic, Vertex AI, Together, Parasail, ...)

All with one consistent API, production-grade reliability, and all the flexibility you need for research.

Learn more at oumi.ai, or jump right in with the quickstart guide.

Choose the installation method that works best for you:

Using pip (Recommended)

# Basic installation

uv pip install oumi

# With GPU support

uv pip install 'oumi[gpu]'

# Latest development version

uv pip install git+https://github.com/oumi-ai/oumi.git

Don't have uv? Install it or use pip instead.

Using Docker

# Pull the latest image

docker pull ghcr.io/oumi-ai/oumi:latest

# Run oumi commands

docker run --gpus all -it ghcr.io/oumi-ai/oumi:latest oumi --help

# Train with a mounted config

docker run --gpus all -v $(pwd):/workspace -it ghcr.io/oumi-ai/oumi:latest \
  oumi train --config /workspace/my_config.yaml

Quick Install Script (Experimental)

Try Oumi without setting up a Python environment. This installs Oumi in an isolated environment:

curl -LsSf https://oumi.ai/install.sh | bash

For more advanced installation options, see the installation guide.
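After any of the installation methods above, it can be handy to confirm that the package actually landed in the current environment. The following is a minimal sketch using only the Python standard library. It assumes the distribution is named `oumi` (as in `pip install oumi`) and that the CLI entry point is also called `oumi`; the helper names `installed_version` and `cli_on_path` are hypothetical and not part of Oumi.

```python
import shutil
from importlib import metadata


def installed_version(distribution: str = "oumi"):
    """Return the installed version string, or None if the package is absent."""
    try:
        return metadata.version(distribution)
    except metadata.PackageNotFoundError:
        return None


def cli_on_path(command: str = "oumi") -> bool:
    """Report whether the command-line entry point is discoverable on PATH."""
    return shutil.which(command) is not None


if __name__ == "__main__":
    version = installed_version()
    if version is None:
        print("oumi is not installed in this environment")
    else:
        print(f"oumi {version} found; CLI on PATH: {cli_on_path()}")
```

If the package is found but the CLI is not on PATH, the install most likely went into a different environment than the active shell.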

You can quickly use the oumi command to train, evaluate, and infer models using one of the existing recipes:

# Training

oumi train -c configs/recipes/smollm/sft/135m/quickstart_train.yaml

# Evaluation

oumi evaluate -c configs/recipes/smollm/evaluation/135m/quickstart_eval.yaml

# Inference

oumi infer -c configs/recipes/smollm/inference/135m_infer.yaml --interactive

For more advanced options, see the training, evaluation, inference, and llm-as-a-judge guides.
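The three quickstart commands share one shape: `oumi <subcommand> -c <recipe>`. As a rough sketch of how they could be scripted, the snippet below only builds the argument lists and prints them; it does not call into Oumi itself. The recipe paths and the `--interactive` flag are copied from the commands above, while `QUICKSTART_CONFIGS` and `oumi_command` are hypothetical names introduced for illustration.

```python
import shlex

# Recipe paths copied verbatim from the quickstart commands.
QUICKSTART_CONFIGS = {
    "train": "configs/recipes/smollm/sft/135m/quickstart_train.yaml",
    "evaluate": "configs/recipes/smollm/evaluation/135m/quickstart_eval.yaml",
    "infer": "configs/recipes/smollm/inference/135m_infer.yaml",
}


def oumi_command(subcommand: str, *extra_args: str) -> list[str]:
    """Build the argument list for one quickstart command (nothing is executed)."""
    config = QUICKSTART_CONFIGS[subcommand]  # raises KeyError for unknown subcommands
    return ["oumi", subcommand, "-c", config, *extra_args]


if __name__ == "__main__":
    for argv in (
        oumi_command("train"),
        oumi_command("evaluate"),
        oumi_command("infer", "--interactive"),
    ):
        print(shlex.join(argv))
```

To actually run a step, the returned list can be passed to `subprocess.run(argv, check=True)` from a checkout that contains the `configs/` directory.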

You can run jobs remotely on cloud platforms (AWS, Azure, GCP, Lambda, etc.) using the oumi launch command:

# GCP

oumi launch up -c configs/recipes/smollm/sft/135m/quickstart_gcp_job.yaml

# AWS

oumi launch up -c configs/recipes/smollm/sft/135m/quickstart_gcp_job.yaml --resources.cloud aws

# Azure

oumi launch up -c configs/recipes/smollm/sft/135m/quickstart_gcp_job.yaml --resources.cloud azure

# Lambda

oumi launch up -c configs/recipes/smollm/sft/135m/quickstart_gcp_job.yaml --resources.cloud lambda

Note: Oumi is in beta and under active development. The core features are stable, but some advanced features might change as the platform improves.
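The remote-launch commands above all reuse one job config and differ only in the `--resources.cloud` override. A small sketch of that pattern follows. It assumes, based on the file name and the un-overridden GCP command, that the config already targets GCP; `launch_command` and `CLOUDS` are hypothetical helpers, and only the flags shown in the commands above are used.

```python
JOB_CONFIG = "configs/recipes/smollm/sft/135m/quickstart_gcp_job.yaml"

# Cloud names as they appear in the launch commands.
CLOUDS = ("gcp", "aws", "azure", "lambda")


def launch_command(cloud: str, config: str = JOB_CONFIG) -> list[str]:
    """Build the `oumi launch up` argument list for one cloud (nothing is executed)."""
    if cloud not in CLOUDS:
        raise ValueError(f"unsupported cloud in this sketch: {cloud!r}")
    argv = ["oumi", "launch", "up", "-c", config]
    # Assumption: the quickstart config targets GCP, so only other clouds need an override.
    if cloud != "gcp":
        argv += ["--resources.cloud", cloud]
    return argv


if __name__ == "__main__":
    for cloud in CLOUDS:
        print(" ".join(launch_command(cloud)))
```

Keeping a single config and overriding one field per cloud means the training settings stay identical across providers.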

If you need a comprehensive platform for training, evaluating, or deploying models, Oumi is a great choice.

Here are some of the key features that make Oumi stand out:

- 🔧 Zero Boilerplate: Get started in minutes with ready-to-use recipes for popular models and workflows. No need to write training loops or data pipelines.

- 🏢 Enterprise-Grade: Built and validated by teams training models at scale

- 🎯 Research Ready: Perfect for ML research with easily reproducible experiments, and flexible interfaces for customizing each component.

- 🌐 Broad Model Support: Works with most popular model architectures - from tiny models to the largest ones, text-only to multimodal.

- 🚀 SOTA Performance: Native support for distributed training techniques (FSDP, DeepSpeed, DDP) and optimized inference engines (vLLM, SGLang).

- 🤝 Community First: 100% open source with an active community. No vendor lock-in, no strings attached.

Explore the growing collection of ready-to-use configurations for state-of-the-art models and training workflows:

Note: These configurations are not an exhaustive list of what's supported; they are simply examples to get you started. A fuller list of supported models and datasets (supervised fine-tuning, pre-training, preference tuning, and vision-language fine-tuning) is available in the Oumi documentation.

| Model | Example Configurations |

|---|---|

| Qwen3-Next 80B A3B | LoRA • Inference • Inference (Instruct) • Evaluation |

| Qwen3 30B A3B | LoRA • Inference • Evaluation |

| Qwen3 32B | LoRA • Inference • Evaluation |

| Qwen3 14B | LoRA • Inference • Evaluation |

| Qwen3 8B | FFT • Inference • Evaluation |

| Qwen3 4B | FFT • Inference • Evaluation |

| Qwen3 1.7B | FFT • Inference • Evaluation |

| Qwen3 0.6B | FFT • Inference • Evaluation |

| QwQ 32B | FFT • LoRA • QLoRA • Inference • Evaluation |

| Qwen2.5-VL 3B | SFT • LoRA • Inference (vLLM) • Inference |

| Qwen2-VL 2B | SFT • LoRA • Inference (vLLM) • Inference (SGLang) • Inference • Evaluation |

| Model | Example Configurations |

|---|---|

| DeepSeek R1 671B | Inference (Together AI) |

| Distilled Llama 8B | FFT • LoRA • QLoRA • Inference • Evaluation |

| Distilled Llama 70B | FFT • LoRA • QLoRA • Inference • Evaluation |

| Distilled Qwen 1.5B | FFT • LoRA • Inference • Evaluation |

| Distilled Qwen 32B | LoRA • Inference • Evaluation |

| Model | Example Configurations |

|---|---|

| Llama 4 Scout Instruct 17B | FFT • LoRA • QLoRA • Inference (vLLM) • Inference • Inference (Together.ai) |

| Llama 4 Scout 17B | FFT |

| Llama 3.1 8B | FFT • LoRA • QLoRA • Pre-training • Inference (vLLM) • Inference • Evaluation |

| Llama 3.1 70B | FFT • LoRA • QLoRA • Inference • Evaluation |

| Llama 3.1 405B | FFT • LoRA • QLoRA |

| Llama 3.2 1B | FFT • LoRA • QLoRA • Inference (vLLM) • Inference (SGLang) • Inference • Evaluation |

| Llama 3.2 3B | FFT • LoRA • QLoRA • Inference (vLLM) • Inference (SGLang) • Inference • Evaluation |

| Llama 3.3 70B | FFT • LoRA • QLoRA • Inference (vLLM) • Inference • Evaluation |

| Llama 3.2 Vision 11B | SFT • Inference (vLLM) • Inference (SGLang) • Evaluation |

| Model | Example Configurations |

|---|---|

| Falcon-H1 | FFT • Inference • Evaluation |

| Falcon-E (BitNet) | FFT • DPO • Evaluation |

| Model | Example Configurations |

|---|---|

| Gemma 3 4B Instruct | FFT • Inference • Evaluation |

| Gemma 3 12B Instruct | LoRA • Inference • Evaluation |

| Gemma 3 27B Instruct | LoRA • Inference • Evaluation |

| Model | Example Configurations |

|---|---|

| OLMo 3 7B Instruct | FFT • Inference • Evaluation |

| OLMo 3 32B Instruct | LoRA • Inference • Evaluation |

| Model | Example Configurations |

|---|---|

| Llama 3.2 Vision 11B | SFT • LoRA • Inference (vLLM) • Inference (SGLang) • Evaluation |

| LLaVA 7B | SFT • Inference (vLLM) • Inference |

| Phi3 Vision 4.2B | SFT • LoRA • Inference (vLLM) |

| Phi4 Vision 5.6B | SFT • LoRA • Inference (vLLM) • Inference |

| Qwen2-VL 2B | SFT • LoRA • Inference (vLLM) • Inference (SGLang) • Inference • Evaluation |

| Qwen3-VL 2B | Inference |

| Qwen3-VL 4B | Inference |

| Qwen3-VL 8B | Inference |

| Qwen2.5-VL 3B | SFT • LoRA • Inference (vLLM) • Inference |

| SmolVLM-Instruct 2B | SFT • LoRA |

This section lists all the language models that can be used with Oumi. Thanks to the integration with the 🤗 Transformers library, you can easily use any of these models for training, evaluation, or inference.

Models prefixed with a checkmark (✅) have been thoroughly tested and validated by the Oumi community, with ready-to-use recipes available in the configs/recipes directory.

| Model | Size | Paper | HF Hub | License | Open |

|---|---|---|---|---|---|

| ✅ SmolLM-Instruct | 135M/360M/1.7B | Blog | Hub | Apache 2.0 | ✅ |

| ✅ DeepSeek R1 Family | 1.5B/8B/32B/70B/671B | Blog | Hub | MIT | ❌ |

| ✅ Llama 3.1 Instruct | 8B/70B/405B | Paper | Hub | License | ❌ |

| ✅ Llama 3.2 Instruct | 1B/3B | Paper | Hub | License | ❌ |

| ✅ Llama 3.3 Instruct | 70B | Paper | Hub | License | ❌ |

| ✅ Phi-3.5-Instruct | 4B/14B | Paper | Hub | License | ❌ |

| ✅ Qwen3 | 0.6B-32B | Paper | Hub | License | ❌ |

| Qwen2.5-Instruct | 0.5B-70B | Paper | Hub | License | ❌ |

| OLMo 2 Instruct | 7B | Paper | Hub | Apache 2.0 | ✅ |

| ✅ OLMo 3 Instruct | 7B/32B | Paper | Hub | Apache 2.0 | ✅ |

| MPT-Instruct | 7B | Blog | Hub | Apache 2.0 | ✅ |

| Command R | 35B/104B | Blog | Hub | License | ❌ |

| Granite-3.1-Instruct | 2B/8B | Paper | Hub | Apache 2.0 | ❌ |

| Gemma 2 Instruct | 2B/9B | Blog | Hub | License | ❌ |

| ✅ Gemma 3 Instruct | 4B/12B/27B | Blog | Hub | License | ❌ |

| DBRX-Instruct | 130B MoE | Blog | Hub | Apache 2.0 | ❌ |

| Falcon-Instruct | 7B/40B | Paper | Hub | Apache 2.0 | ❌ |

| ✅ Llama 4 Scout Instruct | 17B (Activated) 109B (Total) | Paper | Hub | License | ❌ |

| ✅ Llama 4 Maverick Instruct | 17B (Activated) 400B (Total) | Paper | Hub | License | ❌ |

| Model | Size | Paper | HF Hub | License | Open |

|---|---|---|---|---|---|

| ✅ Llama 3.2 Vision | 11B | Paper | Hub | License | ❌ |

| ✅ LLaVA-1.5 | 7B | Paper | Hub | License | ❌ |

| ✅ Phi-3 Vision | 4.2B | Paper | Hub | License | ❌ |

| ✅ BLIP-2 | 3.6B | Paper | Hub | MIT | ❌ |

| ✅ Qwen2-VL | 2B | Blog | Hub | License | ❌ |

| ✅ Qwen3-VL | 2B/4B/8B | Blog | Hub | License | ❌ |

| ✅ SmolVLM-Instruct | 2B | Blog | Hub | Apache 2.0 | ✅ |

| Model | Size | Paper | HF Hub | License | Open |

|---|---|---|---|---|---|

| ✅ SmolLM2 | 135M/360M/1.7B | Blog | Hub | Apache 2.0 | ✅ |

| ✅ Llama 3.2 | 1B/3B | Paper | Hub | License | ❌ |

| ✅ Llama 3.1 | 8B/70B/405B | Paper | Hub | License | ❌ |

| ✅ GPT-2 | 124M-1.5B | Paper | Hub | MIT | ✅ |

| DeepSeek V2 | 7B/13B | Blog | Hub | License | ❌ |

| Gemma2 | 2B/9B | Blog | Hub | License | ❌ |

| GPT-J | 6B | Blog | Hub | Apache 2.0 | ✅ |

| GPT-NeoX | 20B | Paper | Hub | Apache 2.0 | ✅ |

| Mistral | 7B | Paper | Hub | Apache 2.0 | ❌ |

| Mixtral | 8x7B/8x22B | Blog | Hub | Apache 2.0 | ❌ |

| MPT | 7B | Blog | Hub | Apache 2.0 | ✅ |

| OLMo | 1B/7B | Paper | Hub | Apache 2.0 | ✅ |

| ✅ Llama 4 Scout | 17B (Activated) 109B (Total) | Paper | Hub | License | ❌ |

| Model | Size | Paper | HF Hub | License | Open |

|---|---|---|---|---|---|

| ✅ gpt-oss | 20B/120B | Paper | Hub | Apache 2.0 | ❌ |

| ✅ Qwen3 | 0.6B-32B | Paper | Hub | License | ❌ |

| ✅ Qwen3-Next | 80B-A3B | Blog | Hub | License | ❌ |

| Qwen QwQ | 32B | Blog | Hub | License | ❌ |

| Model | Size | Paper | HF Hub | License | Open |

|---|---|---|---|---|---|

| ✅ Qwen2.5 Coder | 0.5B-32B | Blog | Hub | License | ❌ |

| DeepSeek Coder | 1.3B-33B | Paper | Hub | License | ❌ |

| StarCoder 2 | 3B/7B/15B | Paper | Hub | License | ✅ |

| Model | Size | Paper | HF Hub | License | Open |

|---|---|---|---|---|---|

| DeepSeek Math | 7B | Paper | Hub | License | ❌ |

To learn more about all the platform's capabilities, see the Oumi documentation.

Oumi is a community-first effort. Whether you are a developer, a researcher, or a non-technical user, all contributions are very welcome!

- To contribute to the oumi repository, please check the CONTRIBUTING.md for guidance on how to send your first Pull Request.

- Make sure to join our Discord community to get help, share your experiences, and contribute to the project!

- If you are interested in joining one of the community's open-science efforts, check out our open collaboration page.

Oumi makes use of several libraries and tools from the open-source community. We would like to acknowledge and deeply thank the contributors of these projects! ✨ 🌟 💫

If you find Oumi useful in your research, please consider citing it:

@software{oumi2025,

author = {Oumi Community},

title = {Oumi: an Open, End-to-end Platform for Building Large Foundation Models},

month = {January},

year = {2025},

url = {https://github.com/oumi-ai/oumi}

}

This project is licensed under the Apache License 2.0. See the LICENSE file for details.

Note on the Open column in the model tables: open models are defined as models with fully open weights, training code, and data, and a permissive license. See Open Source Definitions for more information.
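The definition of an open model is a conjunction of four conditions, which explains why a model with a permissive license can still be marked ❌. The sketch below spells that out. The `ModelRelease` class, its field names, and the two example releases are hypothetical and introduced only for illustration; they do not describe any specific model in the tables.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ModelRelease:
    """Hypothetical record of what a model release makes available."""

    name: str
    open_weights: bool
    open_training_code: bool
    open_data: bool
    permissive_license: bool


def is_open(release: ModelRelease) -> bool:
    """A release counts as open only if all four conditions hold."""
    return (
        release.open_weights
        and release.open_training_code
        and release.open_data
        and release.permissive_license
    )


if __name__ == "__main__":
    # Made-up examples: one fully open release, one with weights and license only.
    fully_open = ModelRelease("example-open", True, True, True, True)
    weights_only = ModelRelease("example-weights-only", True, False, False, True)
    for release in (fully_open, weights_only):
        print(release.name, "✅" if is_open(release) else "❌")
```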

Alternative AI tools for oumi

Similar Open Source Tools

langfuse

Langfuse is a powerful tool that helps you develop, monitor, and test your LLM applications. With Langfuse, you can:

- **Develop:** Instrument your app and start ingesting traces to Langfuse, inspect and debug complex logs, and manage, version, and deploy prompts from within Langfuse.

- **Monitor:** Track metrics (cost, latency, quality) and gain insights from dashboards & data exports, collect and calculate scores for your LLM completions, run model-based evaluations, collect user feedback, and manually score observations in Langfuse.

- **Test:** Track and test app behaviour before deploying a new version, test expected input and output pairs and benchmark performance before deploying, and track versions and releases in your application.

Langfuse is easy to get started with and offers a generous free tier. You can sign up for Langfuse Cloud or deploy Langfuse locally or on your own infrastructure. Langfuse also offers a variety of integrations to make it easy to connect to your LLM applications.

MOSS-TTS

MOSS-TTS Family is an open-source speech and sound generation model family designed for high-fidelity, high-expressiveness, and complex real-world scenarios. It includes five production-ready models: MOSS-TTS, MOSS-TTSD, MOSS-VoiceGenerator, MOSS-TTS-Realtime, and MOSS-SoundEffect, each serving specific purposes in speech generation, dialogue, voice design, real-time interactions, and sound effect generation. The models offer features like long-speech generation, fine-grained control over phonemes and duration, multilingual synthesis, voice cloning, and real-time voice agents.

phoenix

Phoenix is a tool that provides MLOps and LLMOps insights at lightning speed with zero-config observability. It offers a notebook-first experience for monitoring models and LLM Applications by providing LLM Traces, LLM Evals, Embedding Analysis, RAG Analysis, and Structured Data Analysis. Users can trace through the execution of LLM Applications, evaluate generative models, explore embedding point-clouds, visualize generative application's search and retrieval process, and statistically analyze structured data. Phoenix is designed to help users troubleshoot problems related to retrieval, tool execution, relevance, toxicity, drift, and performance degradation.

gpupixel

GPUPixel is a real-time, high-performance image and video filter library written in C++11 and based on OpenGL/ES. It incorporates a built-in beauty face filter that achieves commercial-grade beauty effects. The library is extremely easy to compile and integrate with a small size, supporting platforms including iOS, Android, Mac, Windows, and Linux. GPUPixel provides various filters like skin smoothing, whitening, face slimming, big eyes, lipstick, and blush. It supports input formats like YUV420P, RGBA, JPEG, PNG, and output formats like RGBA and YUV420P. The library's performance on devices like iPhone and Android is optimized, with low CPU usage and fast processing times. GPUPixel's lib size is compact, making it suitable for mobile and desktop applications.

visionOS-examples

visionOS-examples is a repository containing accelerators for Spatial Computing. It includes examples such as Local Large Language Model, Chat Apple Vision Pro, WebSockets, Anchor To Head, Hand Tracking, Battery Life, Countdown, Plane Detection, Timer Vision, and PencilKit for visionOS. The repository showcases various functionalities and features for Apple Vision Pro, offering tools for developers to enhance their visionOS apps with capabilities like hand tracking, plane detection, and real-time cryptocurrency prices.

chat-master

ChatMASTER is a self-built backend conversation service based on AI large model APIs, supporting synchronous and streaming responses with perfect printer effects. It supports switching between mainstream models such as DeepSeek, Kimi, Doubao, OpenAI, Claude3, Yiyan, Tongyi, Xinghuo, ChatGLM, Shusheng, and more. It also supports loading local models and knowledge bases using Ollama and Langchain, as well as online API interfaces like Coze and Gitee AI. The project includes Java server-side, web-side, mobile-side, and management background configuration. It provides various assistant types for prompt output and allows creating custom assistant templates in the management background. The project uses technologies like Spring Boot, Spring Security + JWT, Mybatis-Plus, Lombok, Mysql & Redis, with easy-to-understand code and comprehensive permission control using JWT authentication system for multi-terminal support.

Awesome-LLM-Tabular

This repository is a curated list of research papers that explore the integration of Large Language Model (LLM) technology with tabular data. It aims to provide a comprehensive resource for researchers and practitioners interested in this emerging field. The repository includes papers on a wide range of topics, including table-to-text generation, table question answering, and tabular data classification. It also includes a section on related datasets and resources.

TRACE

TRACE is a temporal grounding video model that utilizes causal event modeling to capture videos' inherent structure. It presents a task-interleaved video LLM model tailored for sequential encoding/decoding of timestamps, salient scores, and textual captions. The project includes various model checkpoints for different stages and fine-tuning on specific datasets. It provides evaluation codes for different tasks like VTG, MVBench, and VideoMME. The repository also offers annotation files and links to raw videos preparation projects. Users can train the model on different tasks and evaluate the performance based on metrics like CIDER, METEOR, SODA_c, F1, mAP, Hit@1, etc. TRACE has been enhanced with trace-retrieval and trace-uni models, showing improved performance on dense video captioning and general video understanding tasks.

PocketFlow

Pocket Flow is a 100-line minimalist LLM framework designed for (Multi-)Agents, Workflow, RAG, etc. It provides a core abstraction for LLM projects by focusing on computation and communication through a graph structure and shared store. The framework aims to support the development of LLM Agents, such as Cursor AI, by offering a minimal and low-level approach that is well-suited for understanding and usage. Users can install Pocket Flow via pip or by copying the source code, and detailed documentation is available on the project website.

InternVL

InternVL scales up the ViT to **6B parameters** and aligns it with LLM. It is a vision-language foundation model that can perform various tasks, including:

- **Visual Perception:** Linear-Probe Image Classification, Semantic Segmentation, Zero-Shot Image Classification, Multilingual Zero-Shot Image Classification, Zero-Shot Video Classification

- **Cross-Modal Retrieval:** English Zero-Shot Image-Text Retrieval, Chinese Zero-Shot Image-Text Retrieval, Multilingual Zero-Shot Image-Text Retrieval on XTD

- **Multimodal Dialogue:** Zero-Shot Image Captioning, Multimodal Benchmarks with Frozen LLM, Multimodal Benchmarks with Trainable LLM, Tiny LVLM

InternVL has been shown to achieve state-of-the-art results on a variety of benchmarks. For example, on the MMMU image classification benchmark, InternVL achieves a top-1 accuracy of 51.6%, which is higher than GPT-4V and Gemini Pro. On the DocVQA question answering benchmark, InternVL achieves a score of 82.2%, which is also higher than GPT-4V and Gemini Pro. InternVL is open-sourced and available on Hugging Face. It can be used for a variety of applications, including image classification, object detection, semantic segmentation, image captioning, and question answering.

DataFlow

DataFlow is a data preparation and training system designed to parse, generate, process, and evaluate high-quality data from noisy sources, improving the performance of large language models in specific domains. It constructs diverse operators and pipelines, validated to enhance domain-oriented LLM's performance in fields like healthcare, finance, and law. DataFlow also features an intelligent DataFlow-agent capable of dynamically assembling new pipelines by recombining existing operators on demand.

LLamaTuner

LLamaTuner is a repository for the Efficient Finetuning of Quantized LLMs project, focusing on building and sharing instruction-following Chinese baichuan-7b/LLaMA/Pythia/GLM model tuning methods. The project enables training on a single Nvidia RTX-2080TI and RTX-3090 for multi-round chatbot training. It utilizes bitsandbytes for quantization and is integrated with Huggingface's PEFT and transformers libraries. The repository supports various models, training approaches, and datasets for supervised fine-tuning, LoRA, QLoRA, and more. It also provides tools for data preprocessing and offers models in the Hugging Face model hub for inference and finetuning. The project is licensed under Apache 2.0 and acknowledges contributions from various open-source contributors.

LangBot

LangBot is an open-source large language model native instant messaging robot development platform, aiming to provide a plug-and-play IM robot development experience, with various LLM application functions such as Agent, RAG, MCP, adapting to mainstream instant messaging platforms globally, and providing rich API interfaces to support custom development.

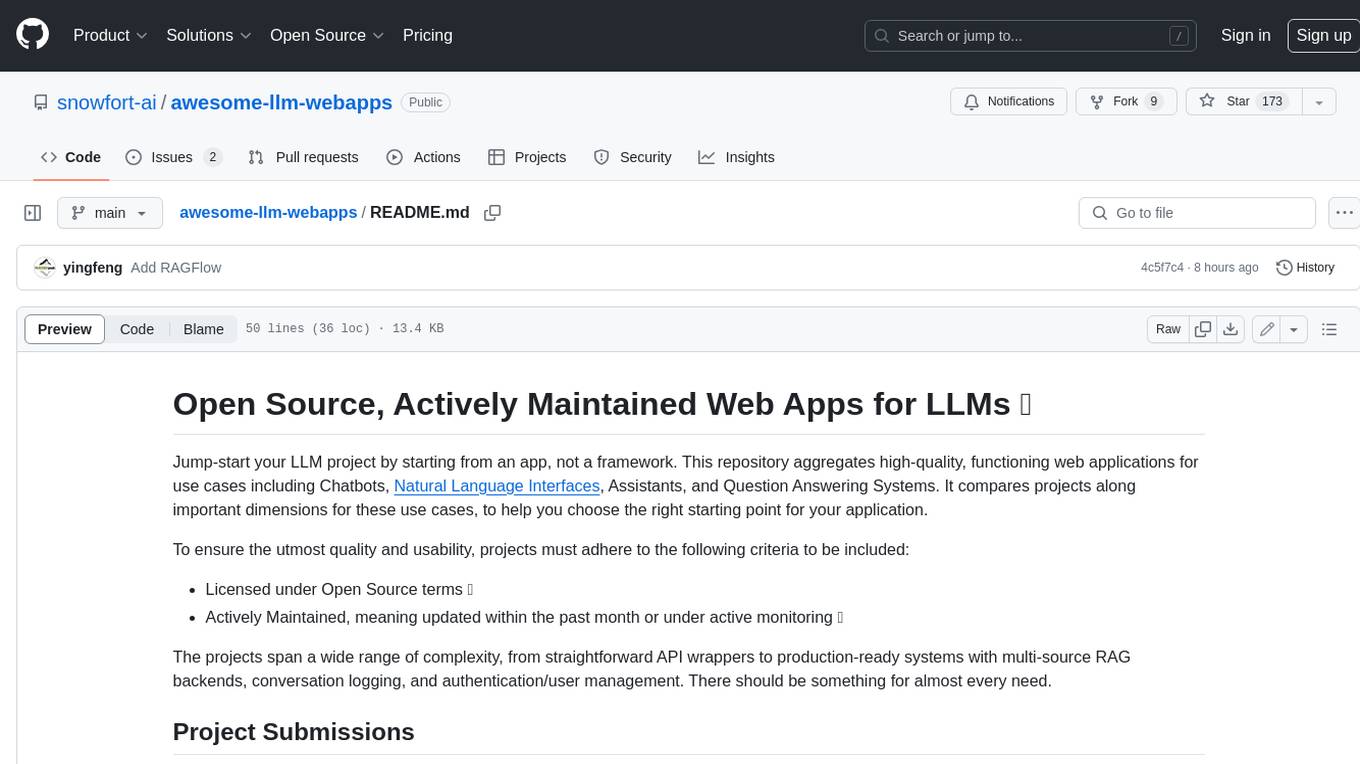

awesome-llm-webapps

This repository is a curated list of open-source, actively maintained web applications that leverage large language models (LLMs) for various use cases, including chatbots, natural language interfaces, assistants, and question answering systems. The projects are evaluated based on key criteria such as licensing, maintenance status, complexity, and features, to help users select the most suitable starting point for their LLM-based applications. The repository welcomes contributions and encourages users to submit projects that meet the criteria or suggest improvements to the existing list.

Steel-LLM

Steel-LLM is a project to pre-train a large Chinese language model from scratch using over 1T of data to achieve a parameter size of around 1B, similar to TinyLlama. The project aims to share the entire process including data collection, data processing, pre-training framework selection, model design, and open-source all the code. The goal is to enable reproducibility of the work even with limited resources. The name 'Steel' is inspired by a band '万能青年旅店' and signifies the desire to create a strong model despite limited conditions. The project involves continuous data collection of various cultural elements, trivia, lyrics, niche literature, and personal secrets to train the LLM. The ultimate aim is to fill the model with diverse data and leave room for individual input, fostering collaboration among users.

For similar tasks

ai-on-gke

This repository contains assets related to AI/ML workloads on Google Kubernetes Engine (GKE). Run optimized AI/ML workloads with Google Kubernetes Engine (GKE) platform orchestration capabilities. A robust AI/ML platform considers the following layers:

- Infrastructure orchestration that supports GPUs and TPUs for training and serving workloads at scale

- Flexible integration with distributed computing and data processing frameworks

- Support for multiple teams on the same infrastructure to maximize utilization of resources

ray

Ray is a unified framework for scaling AI and Python applications. It consists of a core distributed runtime and a set of AI libraries for simplifying ML compute, including Data, Train, Tune, RLlib, and Serve. Ray runs on any machine, cluster, cloud provider, and Kubernetes, and features a growing ecosystem of community integrations. With Ray, you can seamlessly scale the same code from a laptop to a cluster, making it easy to meet the compute-intensive demands of modern ML workloads.

labelbox-python

Labelbox is a data-centric AI platform for enterprises to develop, optimize, and use AI to solve problems and power new products and services. Enterprises use Labelbox to curate data, generate high-quality human feedback data for computer vision and LLMs, evaluate model performance, and automate tasks by combining AI and human-centric workflows. The academic & research community uses Labelbox for cutting-edge AI research.

djl

Deep Java Library (DJL) is an open-source, high-level, engine-agnostic Java framework for deep learning. It is designed to be easy to get started with and simple to use for Java developers. DJL provides a native Java development experience and allows users to integrate machine learning and deep learning models with their Java applications. The framework is deep learning engine agnostic, enabling users to switch engines at any point for optimal performance. DJL's ergonomic API interface guides users with best practices to accomplish deep learning tasks, such as running inference and training neural networks.

mlflow

MLflow is a platform to streamline machine learning development, including tracking experiments, packaging code into reproducible runs, and sharing and deploying models. MLflow offers a set of lightweight APIs that can be used with any existing machine learning application or library (TensorFlow, PyTorch, XGBoost, etc), wherever you currently run ML code (e.g. in notebooks, standalone applications or the cloud). MLflow's current components are:

* `MLflow Tracking

tt-metal

TT-NN is a python & C++ Neural Network OP library. It provides a low-level programming model, TT-Metalium, enabling kernel development for Tenstorrent hardware.

burn

Burn is a new comprehensive dynamic Deep Learning Framework built using Rust with extreme flexibility, compute efficiency and portability as its primary goals.

For similar jobs

sweep

Sweep is an AI junior developer that turns bugs and feature requests into code changes. It automatically handles developer experience improvements like adding type hints and improving test coverage.

teams-ai

The Teams AI Library is a software development kit (SDK) that helps developers create bots that can interact with Teams and Microsoft 365 applications. It is built on top of the Bot Framework SDK and simplifies the process of developing bots that interact with Teams' artificial intelligence capabilities. The SDK is available for JavaScript/TypeScript, .NET, and Python.

ai-guide

This guide is dedicated to Large Language Models (LLMs) that you can run on your home computer. It assumes your PC is a lower-end, non-gaming setup.

classifai

Supercharge WordPress Content Workflows and Engagement with Artificial Intelligence. Tap into leading cloud-based services like OpenAI, Microsoft Azure AI, Google Gemini and IBM Watson to augment your WordPress-powered websites. Publish content faster while improving SEO performance and increasing audience engagement. ClassifAI integrates Artificial Intelligence and Machine Learning technologies to lighten your workload and eliminate tedious tasks, giving you more time to create original content that matters.

chatbot-ui

Chatbot UI is an open-source AI chat app that allows users to create and deploy their own AI chatbots. It is easy to use and can be customized to fit any need. Chatbot UI is perfect for businesses, developers, and anyone who wants to create a chatbot.

BricksLLM

BricksLLM is a cloud native AI gateway written in Go. Currently, it provides native support for OpenAI, Anthropic, Azure OpenAI and vLLM. BricksLLM aims to provide enterprise level infrastructure that can power any LLM production use cases. Here are some use cases for BricksLLM:

- Set LLM usage limits for users on different pricing tiers

- Track LLM usage on a per user and per organization basis

- Block or redact requests containing PIIs

- Improve LLM reliability with failovers, retries and caching

- Distribute API keys with rate limits and cost limits for internal development/production use cases

- Distribute API keys with rate limits and cost limits for students

uAgents

uAgents is a Python library developed by Fetch.ai that allows for the creation of autonomous AI agents. These agents can perform various tasks on a schedule or take action on various events. uAgents are easy to create and manage, and they are connected to a fast-growing network of other uAgents. They are also secure, with cryptographically secured messages and wallets.

griptape

Griptape is a modular Python framework for building AI-powered applications that securely connect to your enterprise data and APIs. It offers developers the ability to maintain control and flexibility at every step. Griptape's core components include Structures (Agents, Pipelines, and Workflows), Tasks, Tools, Memory (Conversation Memory, Task Memory, and Meta Memory), Drivers (Prompt and Embedding Drivers, Vector Store Drivers, Image Generation Drivers, Image Query Drivers, SQL Drivers, Web Scraper Drivers, and Conversation Memory Drivers), Engines (Query Engines, Extraction Engines, Summary Engines, Image Generation Engines, and Image Query Engines), and additional components (Rulesets, Loaders, Artifacts, Chunkers, and Tokenizers). Griptape enables developers to create AI-powered applications with ease and efficiency.