awesome-llm-webapps

A collection of open source, actively maintained web apps for LLM applications

Stars: 173

This repository is a curated list of open-source, actively maintained web applications that leverage large language models (LLMs) for various use cases, including chatbots, natural language interfaces, assistants, and question answering systems. The projects are evaluated based on key criteria such as licensing, maintenance status, complexity, and features, to help users select the most suitable starting point for their LLM-based applications. The repository welcomes contributions and encourages users to submit projects that meet the criteria or suggest improvements to the existing list.

README:

Jump-start your LLM project by starting from an app, not a framework. This repository aggregates high-quality, functioning web applications for use cases including Chatbots, Natural Language Interfaces, Assistants, and Question Answering Systems. It compares projects along important dimensions for these use cases, to help you choose the right starting point for your application.

To ensure the utmost quality and usability, projects must adhere to the following criteria to be included:

- Licensed under Open Source terms 💸

- Actively Maintained, meaning updated within the past month or under active monitoring 🚨
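The two inclusion criteria above can be sketched as a small check. This is only an illustration, not tooling that the repository provides: the `Project` record, its field names, the `OPEN_SOURCE_LICENSES` set, and the 30-day window standing in for "the past month" are all assumptions made for the example.

```python
from dataclasses import dataclass
from datetime import date, timedelta

# Hypothetical, non-exhaustive set of licenses treated as open source in this sketch.
OPEN_SOURCE_LICENSES = {"MIT", "Apache-2.0", "GPL-3.0", "AGPL-3.0", "BSD-3-Clause"}

# Assumption: "updated within the past month" is approximated as 30 days.
MAINTENANCE_WINDOW = timedelta(days=30)


@dataclass
class Project:
    """Hypothetical record describing a candidate project."""
    name: str
    license: str
    last_updated: date
    actively_monitored: bool = False


def meets_criteria(project: Project, today: date) -> bool:
    """Return True if the project is open source and actively maintained."""
    is_open_source = project.license in OPEN_SOURCE_LICENSES
    recently_updated = today - project.last_updated <= MAINTENANCE_WINDOW
    # A project qualifies as maintained if it was updated recently
    # or is flagged as being under active monitoring.
    return is_open_source and (recently_updated or project.actively_monitored)


if __name__ == "__main__":
    today = date(2024, 6, 1)
    fresh = Project("example-chat-ui", "MIT", date(2024, 5, 20))
    stale = Project("example-old-app", "MIT", date(2023, 1, 1))
    print(meets_criteria(fresh, today))
    print(meets_criteria(stale, today))
```

Passing `today` explicitly rather than calling `date.today()` inside the function keeps the check deterministic and easy to test.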

The projects span a wide range of complexity, from straightforward API wrappers to production-ready systems with multi-source RAG backends, conversation logging, and authentication/user management. There should be something for almost every need.

Contributions are the backbone of this list! If you're aware of a project that meets our criteria but isn't listed, we'd love to hear about it. Please also notify us if any of the listed projects becomes unmaintained or changes its licensing. Additionally, if there's a project detail that you'd like to compare that's not currently tracked, submit an issue for it. Finally, if you're the maintainer of a project that's already listed and would like to update or modify the listing, submit it again with the desired modifications.

To submit a project:

- Create an issue.

- Ensure your submission adheres to the listed criteria and includes all relevant details.

- Submissions will be reviewed and the projects list will be updated within a day.

If you'd like to help maintain this project, contact clharman via email.

Currently seeking submissions for:

- Lightweight chatbots

- Projects with advanced prompting

- Non-chatbot interfaces (question answering, etc.)

- Projects with image support

- Projects in other languages, e.g. Python-only projects

| Project | Demo | Brief Description | Architecture | Conversation Context Carry | Conversation History | Authentication | Model Support | Rich Text Support | Image Support | (RAG) Search Engine | (RAG) Show Sources | (RAG) Data Ingestion | Quick Deploy | Other Features |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| Hugging Face Chat UI | 🟢 Link | Full featured chat interface | SvelteKit, MongoDB | 🟢 | 🟢 | 🟢 OpenID | Hugging Face Inference API, local, Amazon SageMaker | 🟢 | 🔴 | 🟢 Google search | 🟢 | 🔴 | 🟢 Hugging Face Spaces | Theme configuration |
| Weaviate Verba | 🟢 Link | Chat interface for RAG applications | React frontend, FastAPI backend, Weaviate | 🔴 | 🔴 | 🔴 | OpenAI | 🟢 | 🔴 | 🟢 Weaviate | 🟢 | 🟢 Via CLI | 🟢 Docker | Semantic caching |
| Microsoft Azure Chat | 🔴 | Azure-based private chat tenant over data and files | Next.js, LangChain.js, CosmosDB | 🟢 | 🟢 | 🟢 NextAuth | OpenAI | 🟢 | 🔴 | 🟢 Azure Cognitive Search | 🔴 | 🟢 UI single-file upload | 🟢 Azure | |
| AWS GenAI LLM Chatbot | 🔴 | AWS-based chatbot with RAG and selectable LLMs | React frontend, LangChain.js, cloud services backend | 🟢 | 🟢 | 🟢 Amazon Cognito | Bedrock, SageMaker, Hugging Face Inference Endpoints, OpenAI, Anthropic, AI21, Cohere | 🔴 | 🔴 | 🟢 Postgres / Kendra / OpenSearch | 🟢 | 🟢 UI file upload | 🟢 AWS | User-selectable model and search backend |
| PrivateGPT | 🔴 | API, pipeline, and UI for RAG applications. Supports private models. | FastAPI, LlamaIndex, Gradio | 🟢 | 🔴 | 🔴 | Local, OpenAI, SageMaker | 🔴 | 🔴 | 🟢 Qdrant, Chroma | 🟢 | 🟢 UI file upload | 🔴 | |
| Ollama Web UI | 🔴 | Full featured GPT clone | SvelteKit, Ollama backend | 🟢 | 🟢 | 🟢 | Local: Any Ollama supported model | 🟢 | 🟢 | 🔴 | 🔴 | 🔴 | 🟢 Docker Compose | |
| Azure GPT-RAG | 🔴 | Enterprise-ready RAG framework | All-Azure services | 🟢 | 🟢 | 🟢 Azure Active Directory | OpenAI | 🟢 | 🔴 | 🟢 Azure Cognitive Search | 🟢 | 🟢 Data source connections | 🟢 Azure | Microsoft Teams bot integration, costs estimator |
| Danswer | 🔴 | Full featured RAG system with prebuilt data connectors for many source systems | FastAPI, Next.js, Vespa, Postgres, Celery | 🟢 | 🟢 | 🟢 | OpenAI, Local | 🟢 | 🔴 | 🟢 Vespa | 🟢 | 🟢 Selection of data connectors | 🟢 Docker Compose, Kubernetes | Slack bot |
| LLM Answer Engine | 🔴 | Perplexity style answer engine with web search | React, LangChain.js, Brave, Serper, OpenAI | 🟢 | 🔴 | 🔴 | Mixtral, Ollama, OpenAI | 🔴 | 🔴 | 🟢 Brave, Serper | 🟢 | 🔴 Web search, not ingestion | 🔴 | |
| Dify | 🔴 | App development platform for GenAI | Next.js, Flask, Postgres | 🟢 | 🟢 | 🟢 | Various | 🟢 | 🟢 | 🟢 Various | 🟢 | 🟢 UI file upload | 🟢 AWS, Kubernetes | Agents, observability |
| Flowise | 🔴 | Drag-and-drop LLM flow builder | React, Node | 🟢 | 🟢 | 🟢 | Various | 🔴 | 🔴 | 🟢 Various | 🟢 | 🟢 UI file upload | 🟢 AWS, Kubernetes | GUI/no-code LLM app logic builder |
| RAGFlow | 🟢 Link | Enterprise-RAG engine based on deep document understanding | React, DeepDoc | 🟢 | 🟢 | 🟢 | Various | 🟢 | 🟢 | 🟢 Elasticsearch/Infinity | 🟢 | 🟢 UI file upload / file management | 🟢 Docker Compose | Document structure recognition / Table structure recognition |


Alternative AI tools for awesome-llm-webapps

Similar Open Source Tools


langfuse

Langfuse is a tool that helps you develop, monitor, and test your LLM applications. With Langfuse, you can:

- **Develop:** Instrument your app and start ingesting traces to Langfuse, inspect and debug complex logs, and manage, version, and deploy prompts from within Langfuse.

- **Monitor:** Track metrics (cost, latency, quality) and gain insights from dashboards and data exports, collect and calculate scores for your LLM completions, run model-based evaluations, collect user feedback, and manually score observations in Langfuse.

- **Test:** Track and test app behaviour before deploying a new version, test expected input and output pairs and benchmark performance before deploying, and track versions and releases in your application.

Langfuse is easy to get started with and offers a generous free tier. You can sign up for Langfuse Cloud or deploy Langfuse locally or on your own infrastructure. Langfuse also offers a variety of integrations to make it easy to connect to your LLM applications.

visionOS-examples

visionOS-examples is a repository containing accelerators for Spatial Computing. It includes examples such as Local Large Language Model, Chat Apple Vision Pro, WebSockets, Anchor To Head, Hand Tracking, Battery Life, Countdown, Plane Detection, Timer Vision, and PencilKit for visionOS. The repository showcases various functionalities and features for Apple Vision Pro, offering tools for developers to enhance their visionOS apps with capabilities like hand tracking, plane detection, and real-time cryptocurrency prices.

oumi

Oumi is an open-source platform for building state-of-the-art foundation models, offering tools for data preparation, training, evaluation, and deployment. It supports training and fine-tuning models with various parameters, working with text and multimodal models, synthesizing and curating training data, deploying models efficiently, evaluating models comprehensively, and running on different platforms. Oumi provides a consistent API, reliability, and flexibility for research purposes.

nntrainer

NNtrainer is a software framework for training neural network models on devices with limited resources. It enables on-device fine-tuning of neural networks using user data for personalization. NNtrainer supports various machine learning algorithms and provides examples for tasks such as few-shot learning, ResNet, VGG, and product rating. It is optimized for embedded devices and utilizes CBLAS and CUBLAS for accelerated calculations. NNtrainer is open source and released under the Apache License version 2.0.

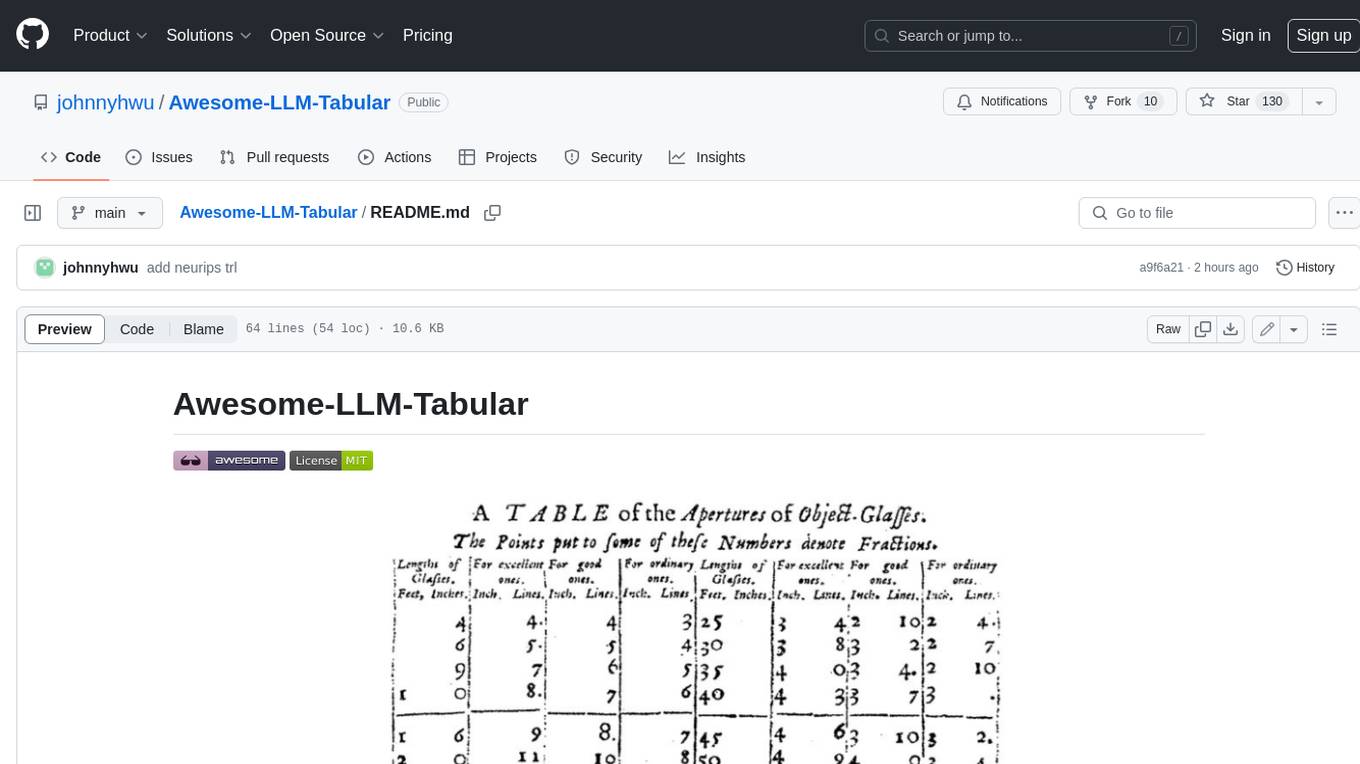

Awesome-LLM-Tabular

This repository is a curated list of research papers that explore the integration of Large Language Model (LLM) technology with tabular data. It aims to provide a comprehensive resource for researchers and practitioners interested in this emerging field. The repository includes papers on a wide range of topics, including table-to-text generation, table question answering, and tabular data classification. It also includes a section on related datasets and resources.

LangBot

LangBot is an open-source, LLM-native platform for developing instant messaging bots. It aims to provide a plug-and-play development experience with LLM application features such as Agent, RAG, and MCP. It adapts to mainstream instant messaging platforms worldwide and provides rich APIs to support custom development.

chat-your-doc

Chat Your Doc is an experimental project exploring various applications based on LLM technology. It goes beyond being just a chatbot project, focusing on researching LLM applications using tools like LangChain and LlamaIndex. The project delves into UX, computer vision, and offers a range of examples in the 'Lab Apps' section. It includes links to different apps, descriptions, launch commands, and demos, aiming to showcase the versatility and potential of LLM applications.

llumen

Llumen is a self-hosted interface optimized for modest hardware like Raspberry Pi, old laptops, and minimal VPS. It offers privacy without complexity, providing essential features with minimal resource demands. Users can enjoy sub-second cold starts, real-time token streaming, various chat modes, rich media support, and a universal API for OpenAI-compatible providers. The tool has a small footprint with a binary size of around 17MB and RAM usage under 128MB. Llumen aims to simplify the setup process and offer a user-friendly experience for individuals seeking a privacy-focused solution.

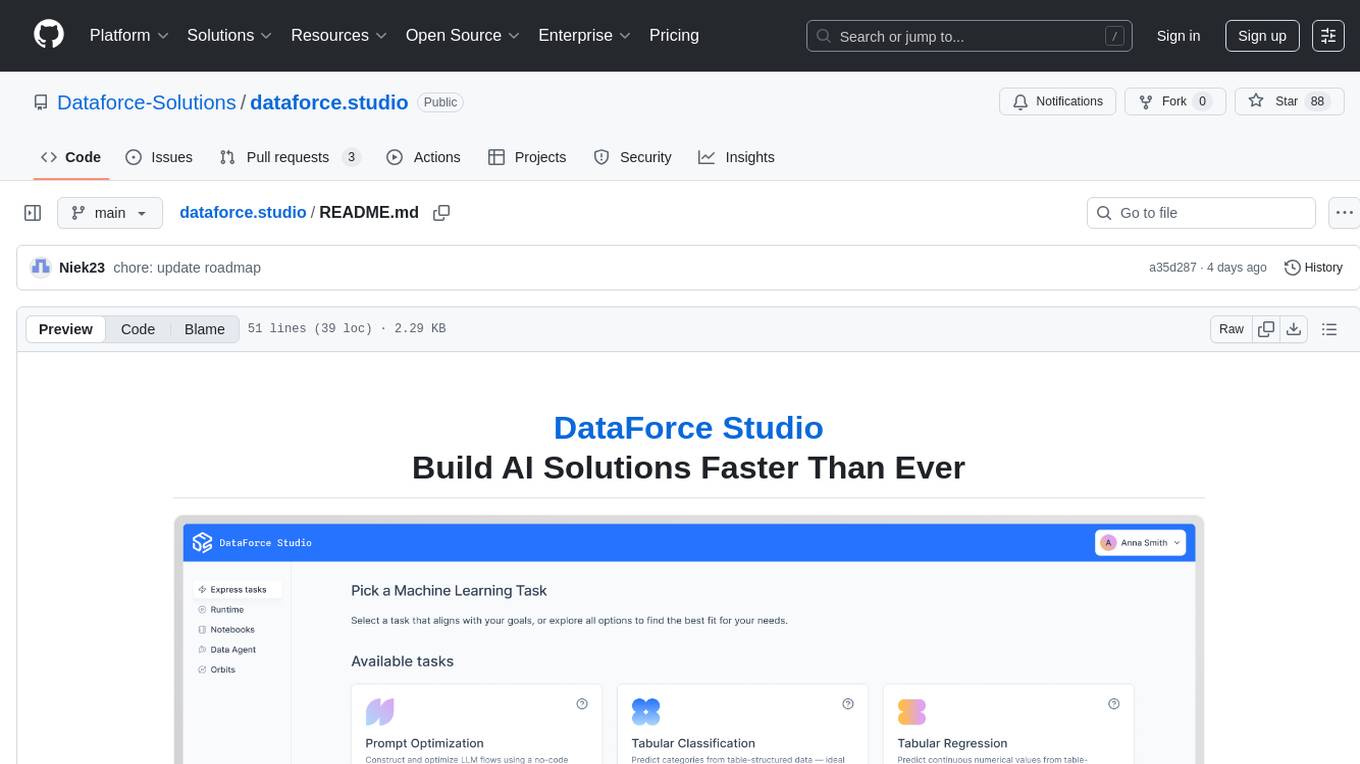

dataforce.studio

DataForce Studio is an open-source MLOps platform designed to help build, manage, and deploy AI/ML models with ease. It supports the entire model lifecycle, from creation to deployment and monitoring, within a user-friendly interface. The platform is in active early development, aiming to provide features like post-deployment monitoring, model deployment, data science agent, experiment snapshots, model cards, Python SDK, model registry, notebooks, in-browser runtime, and express tasks for prompt optimization and tabular data.

openkore

OpenKore is a custom client and intelligent automated assistant for Ragnarok Online. It is a free, open source, and cross-platform program (Linux, Windows, and macOS are supported). To run OpenKore, download and extract it or clone the repository using Git, configure it according to the documentation, and run openkore.pl to start. The project provides a FAQ section for troubleshooting, guidelines for reporting issues, and information about botting status on official servers. OpenKore is developed by a global team, and contributions are welcome through pull requests. Various community resources are available for support and communication. Users are advised to comply with the GNU General Public License when using and distributing the software.

awesome-LangGraph

Awesome LangGraph is a curated list of projects, resources, and tools for building stateful, multi-actor applications with LangGraph. It provides valuable resources for developers at all stages of development, from beginners to those building production-ready systems. The repository covers core ecosystem components, LangChain ecosystem, LangGraph platform, official resources, starter templates, pre-built agents, example applications, development tools, community projects, AI assistants, content & media, knowledge & retrieval, finance & business, sustainability, learning resources, companies using LangGraph, contributing guidelines, and acknowledgments.

llm-app-stack

LLM App Stack, also known as Emerging Architectures for LLM Applications, is a comprehensive list of available tools, projects, and vendors at each layer of the LLM app stack. It covers various categories such as Data Pipelines, Embedding Models, Vector Databases, Playgrounds, Orchestrators, APIs/Plugins, LLM Caches, Logging/Monitoring/Eval, Validators, LLM APIs (proprietary and open source), App Hosting Platforms, Cloud Providers, and Opinionated Clouds. The repository aims to provide a detailed overview of tools and projects for building, deploying, and maintaining enterprise data solutions, AI models, and applications.

rag-web-ui

RAG Web UI is an intelligent dialogue system based on RAG (Retrieval-Augmented Generation) technology. It helps enterprises and individuals build intelligent Q&A systems based on their own knowledge bases. By combining document retrieval and large language models, it delivers accurate and reliable knowledge-based question-answering services. The system is designed with features like intelligent document management, advanced dialogue engine, and a robust architecture. It supports multiple document formats, async document processing, multi-turn contextual dialogue, and reference citations in conversations. The architecture includes a backend stack with Python FastAPI, MySQL + ChromaDB, MinIO, Langchain, JWT + OAuth2 for authentication, and a frontend stack with Next.js, TypeScript, Tailwind CSS, Shadcn/UI, and Vercel AI SDK for AI integration. Performance optimization includes incremental document processing, streaming responses, vector database performance tuning, and distributed task processing. The project is licensed under the Apache-2.0 License and is intended for learning and sharing RAG knowledge only, not for commercial purposes.

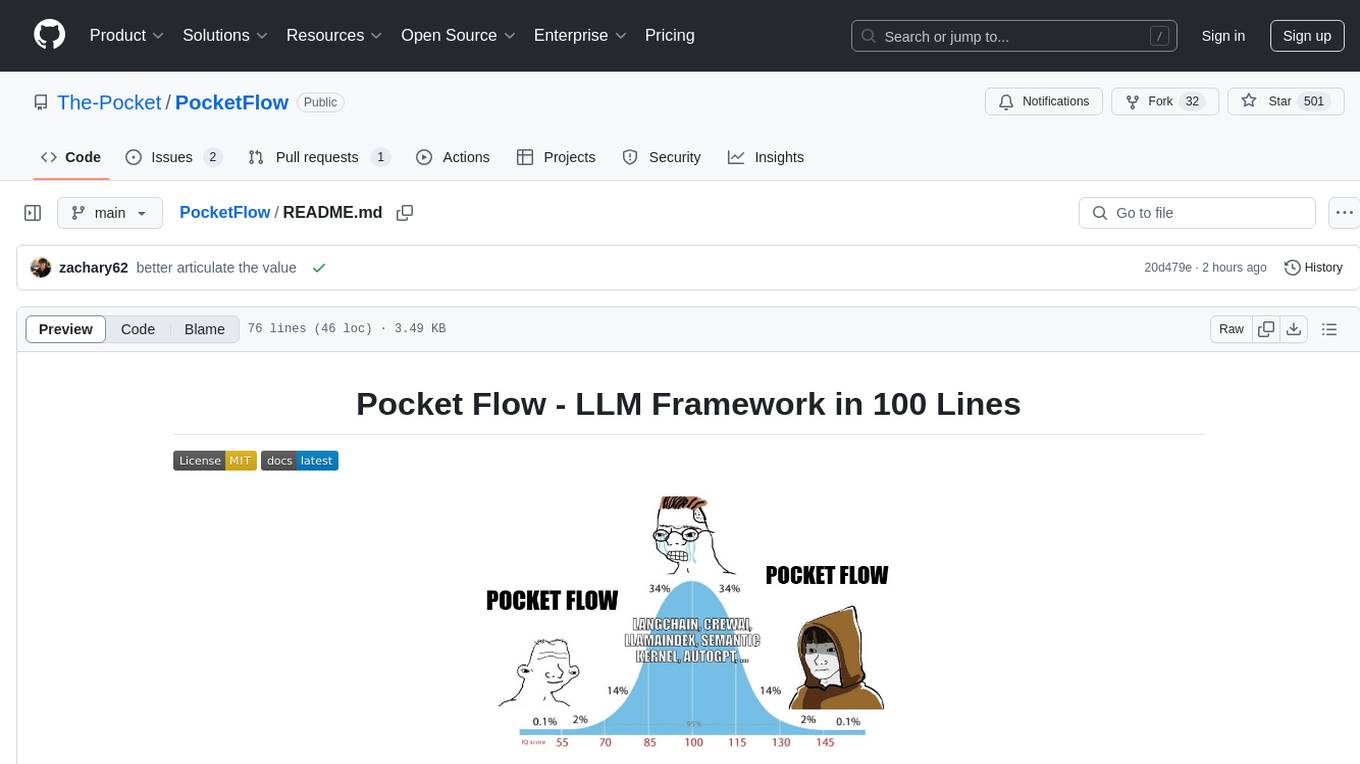

PocketFlow

Pocket Flow is a 100-line minimalist LLM framework designed for (Multi-)Agents, Workflow, RAG, etc. It provides a core abstraction for LLM projects by focusing on computation and communication through a graph structure and shared store. The framework aims to support the development of LLM Agents, such as Cursor AI, by offering a minimal, low-level approach that is easy to understand and use. Users can install Pocket Flow via pip or by copying the source code, and detailed documentation is available on the project website.

For similar tasks

agentcloud

AgentCloud is an open-source platform that enables companies to build and deploy private LLM chat apps, empowering teams to securely interact with their data. It comprises three main components: Agent Backend, Webapp, and Vector Proxy. To run this project locally, clone the repository, install Docker, and start the services. The project is licensed under the GNU Affero General Public License, version 3 only. Contributions and feedback are welcome from the community.

zep-python

Zep is an open-source platform for building and deploying large language model (LLM) applications. It provides a suite of tools and services that make it easy to integrate LLMs into your applications, including chat history memory, embedding, vector search, and data enrichment. Zep is designed to be scalable, reliable, and easy to use, making it a great choice for developers who want to build LLM-powered applications quickly and easily.

lollms

LoLLMs Server is a text generation server based on large language models. It provides a Flask-based API for generating text using various pre-trained language models. This server is designed to be easy to install and use, allowing developers to integrate powerful text generation capabilities into their applications.

LlamaIndexTS

LlamaIndex.TS is a data framework for your LLM application. Use your own data with large language models (LLMs, OpenAI ChatGPT and others) in TypeScript and JavaScript.

semantic-kernel

Semantic Kernel is an SDK that integrates Large Language Models (LLMs) like OpenAI, Azure OpenAI, and Hugging Face with conventional programming languages like C#, Python, and Java. Semantic Kernel achieves this by allowing you to define plugins that can be chained together in just a few lines of code. What makes Semantic Kernel _special_, however, is its ability to _automatically_ orchestrate plugins with AI. With Semantic Kernel planners, you can ask an LLM to generate a plan that achieves a user's unique goal. Afterwards, Semantic Kernel will execute the plan for the user.

botpress

Botpress is a platform for building next-generation chatbots and assistants powered by OpenAI. It provides a range of tools and integrations to help developers quickly and easily create and deploy chatbots for various use cases.

BotSharp

BotSharp is an open-source machine learning framework for building AI bot platforms. It provides a comprehensive set of tools and components for developing and deploying intelligent virtual assistants. BotSharp is designed to be modular and extensible, allowing developers to easily integrate it with their existing systems and applications. With BotSharp, you can quickly and easily create AI-powered chatbots, virtual assistants, and other conversational AI applications.

qdrant

Qdrant is a vector similarity search engine and vector database. It is written in Rust, which makes it fast and reliable even under high load. Qdrant can be used for a variety of applications, including:

- Semantic search

- Image search

- Product recommendations

- Chatbots

- Anomaly detection

Qdrant offers a variety of features, including:

- Payload storage and filtering

- Hybrid search with sparse vectors

- Vector quantization and on-disk storage

- Distributed deployment

- Highlighted features such as query planning, payload indexes, SIMD hardware acceleration, async I/O, and write-ahead logging

Qdrant is available as a fully managed cloud service or as open-source software that can be deployed on-premises.

For similar jobs

weave

Weave is a toolkit for developing Generative AI applications, built by Weights & Biases. With Weave, you can log and debug language model inputs, outputs, and traces; build rigorous, apples-to-apples evaluations for language model use cases; and organize all the information generated across the LLM workflow, from experimentation to evaluations to production. Weave aims to bring rigor, best-practices, and composability to the inherently experimental process of developing Generative AI software, without introducing cognitive overhead.

LLMStack

LLMStack is a no-code platform for building generative AI agents, workflows, and chatbots. It allows users to connect their own data, internal tools, and GPT-powered models without any coding experience. LLMStack can be deployed to the cloud or on-premise and can be accessed via HTTP API or triggered from Slack or Discord.

VisionCraft

The VisionCraft API is a free API for using over 100 different AI models, from images to sound.

kaito

Kaito is an operator that automates the AI/ML inference model deployment in a Kubernetes cluster. It manages large model files using container images, avoids tuning deployment parameters to fit GPU hardware by providing preset configurations, auto-provisions GPU nodes based on model requirements, and hosts large model images in the public Microsoft Container Registry (MCR) if the license allows. Using Kaito, the workflow of onboarding large AI inference models in Kubernetes is largely simplified.

PyRIT

PyRIT is an open access automation framework designed to empower security professionals and ML engineers to red team foundation models and their applications. It automates AI Red Teaming tasks to allow operators to focus on more complicated and time-consuming tasks and can also identify security harms such as misuse (e.g., malware generation, jailbreaking), and privacy harms (e.g., identity theft). The goal is to allow researchers to have a baseline of how well their model and entire inference pipeline is doing against different harm categories and to be able to compare that baseline to future iterations of their model. This allows them to have empirical data on how well their model is doing today, and detect any degradation of performance based on future improvements.

tabby

Tabby is a self-hosted AI coding assistant, offering an open-source and on-premises alternative to GitHub Copilot. It boasts several key features:

- Self-contained, with no need for a DBMS or cloud service.

- OpenAPI interface, easy to integrate with existing infrastructure (e.g., Cloud IDE).

- Supports consumer-grade GPUs.

spear

SPEAR (Simulator for Photorealistic Embodied AI Research) is a powerful tool for training embodied agents. It features 300 unique virtual indoor environments with 2,566 unique rooms and 17,234 unique objects that can be manipulated individually. Each environment is designed by a professional artist and features detailed geometry, photorealistic materials, and a unique floor plan and object layout. SPEAR is implemented as Unreal Engine assets and provides an OpenAI Gym interface for interacting with the environments via Python.

Magick

Magick is a groundbreaking visual AIDE (Artificial Intelligence Development Environment) for no-code data pipelines and multimodal agents. Magick can connect to other services and comes with nodes and templates well-suited for intelligent agents, chatbots, complex reasoning systems and realistic characters.