langfuse-js

🪢 Langfuse JS/TS SDKs - Instrument your LLM app and get detailed tracing/observability. Works with any LLM or framework

Stars: 79

langfuse-js is a modular mono repo for the Langfuse JS/TS client libraries. It includes packages for Langfuse API client, tracing, OpenTelemetry export helpers, OpenAI integration, and LangChain integration. The SDK is currently in version 4 and offers universal JavaScript environments support as well as Node.js 20+. The repository provides documentation, reference materials, and development instructions for managing the monorepo with pnpm. It is licensed under MIT.

README:

Modular mono repo for the Langfuse JS/TS client libraries.

[!IMPORTANT] The SDK was rewritten in v4 and will be released soon. Refer to the v4 migration guide for instructions on updating your code.

| Package | NPM | Description | Environments |

|---|---|---|---|

| @langfuse/client |  |

Langfuse API client for universal JavaScript environments | Universal JS |

| @langfuse/tracing |  |

Langfuse instrumentation methods based on OpenTelemetry | Node.js 20+ |

| @langfuse/otel |  |

Langfuse OpenTelemetry export helpers | Node.js 20+ |

| @langfuse/openai |  |

Langfuse integration for OpenAI SDK | Universal JS |

| @langfuse/langchain |  |

Langfuse integration for LangChain | Universal JS |

This is a monorepo managed with pnpm. See CONTRIBUTING.md for detailed development instructions.

Quick start:

pnpm install # Install dependencies

pnpm build # Build all packages

pnpm test # Run tests

pnpm ci # Run full CI suiteFor Tasks:

Click tags to check more tools for each tasksFor Jobs:

Alternative AI tools for langfuse-js

Similar Open Source Tools

langfuse-js

langfuse-js is a modular mono repo for the Langfuse JS/TS client libraries. It includes packages for Langfuse API client, tracing, OpenTelemetry export helpers, OpenAI integration, and LangChain integration. The SDK is currently in version 4 and offers universal JavaScript environments support as well as Node.js 20+. The repository provides documentation, reference materials, and development instructions for managing the monorepo with pnpm. It is licensed under MIT.

composio

Composio is a production-ready toolset for AI agents that enables users to integrate AI agents with various agentic tools effortlessly. It provides support for over 100 tools across different categories, including popular softwares like GitHub, Notion, Linear, Gmail, Slack, and more. Composio ensures managed authorization with support for six different authentication protocols, offering better agentic accuracy and ease of use. Users can easily extend Composio with additional tools, frameworks, and authorization protocols. The toolset is designed to be embeddable and pluggable, allowing for seamless integration and consistent user experience.

helicone

Helicone is an open-source observability platform designed for Language Learning Models (LLMs). It logs requests to OpenAI in a user-friendly UI, offers caching, rate limits, and retries, tracks costs and latencies, provides a playground for iterating on prompts and chat conversations, supports collaboration, and will soon have APIs for feedback and evaluation. The platform is deployed on Cloudflare and consists of services like Web (NextJs), Worker (Cloudflare Workers), Jawn (Express), Supabase, and ClickHouse. Users can interact with Helicone locally by setting up the required services and environment variables. The platform encourages contributions and provides resources for learning, documentation, and integrations.

bytedesk

Bytedesk is an AI-powered customer service and team instant messaging tool that offers features like enterprise instant messaging, online customer service, large model AI assistant, and local area network file transfer. It supports multi-level organizational structure, role management, permission management, chat record management, seating workbench, work order system, seat management, data dashboard, manual knowledge base, skill group management, real-time monitoring, announcements, sensitive words, CRM, report function, and integrated customer service workbench services. The tool is designed for team use with easy configuration throughout the company, and it allows file transfer across platforms using WiFi/hotspots without the need for internet connection.

ASTRA.ai

Astra.ai is a multimodal agent powered by TEN, showcasing its capabilities in speech, vision, and reasoning through RAG from local documentation. It provides a platform for developing AI agents with features like RTC transportation, extension store, workflow builder, and local deployment. Users can build and test agents locally using Docker and Node.js, with prerequisites including Agora App ID, Azure's speech-to-text and text-to-speech API keys, and OpenAI API key. The platform offers advanced customization options through config files and API keys setup, enabling users to create and deploy their AI agents for various tasks.

ai-playground

The ai-playground repository contains code from tutorials presented on the Code AI with Rok YouTube channel. It includes tutorials on using the OpenAI Assistants API v1 beta to build personal math tutors, customer support chatbots, and more. Additionally, there are tutorials on using Gemini Pro API, Snowflake Cortex LLM functions, LlamaIndex chat streaming app, Fetch.ai uAgents, Milvus Standalone, spaCy for NER, and more. The repository aims to provide practical examples and guides for developers interested in AI-related projects and tools.

supabase

Supabase is an open source Firebase alternative that provides a wide range of features including a hosted Postgres database, authentication and authorization, auto-generated APIs, REST and GraphQL support, realtime subscriptions, functions, file storage, AI and vector/embeddings toolkit, and a dashboard. It aims to offer developers a Firebase-like experience using enterprise-grade open source tools.

ASTRA.ai

ASTRA is an open-source platform designed for developing applications utilizing large language models. It merges the ideas of Backend-as-a-Service and LLM operations, allowing developers to swiftly create production-ready generative AI applications. Additionally, it empowers non-technical users to engage in defining and managing data operations for AI applications. With ASTRA, you can easily create real-time, multi-modal AI applications with low latency, even without any coding knowledge.

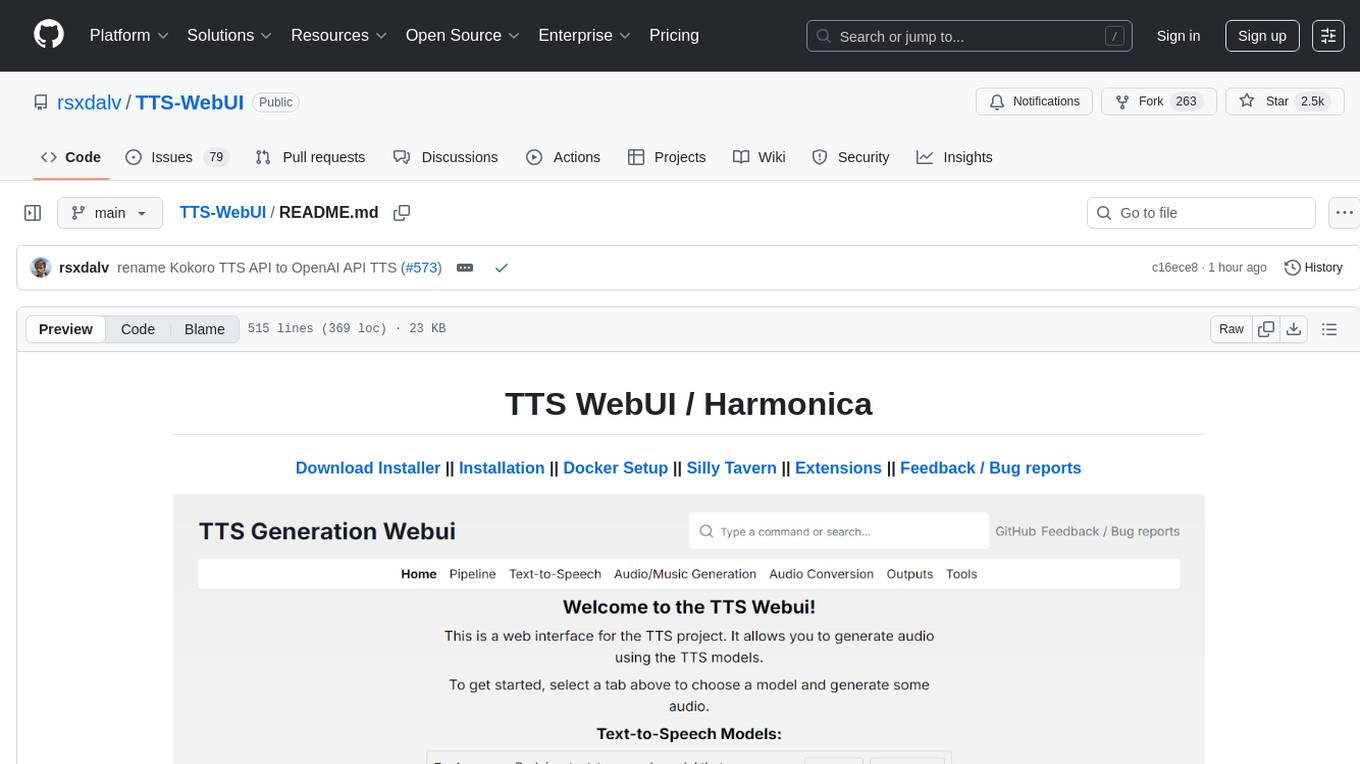

TTS-WebUI

TTS WebUI is a comprehensive tool for text-to-speech synthesis, audio/music generation, and audio conversion. It offers a user-friendly interface for various AI projects related to voice and audio processing. The tool provides a range of models and extensions for different tasks, along with integrations like Silly Tavern and OpenWebUI. With support for Docker setup and compatibility with Linux and Windows, TTS WebUI aims to facilitate creative and responsible use of AI technologies in a user-friendly manner.

PureChat

PureChat is a chat application integrated with ChatGPT, featuring efficient application building with Vite5, screenshot generation and copy support for chat records, IM instant messaging SDK for sessions, automatic light and dark mode switching based on system theme, Markdown rendering, code highlighting, and link recognition support, seamless social experience with GitHub quick login, integration of large language models like ChatGPT Ollama for streaming output, preset prompts, and context, Electron desktop app versions for macOS and Windows, ongoing development of more features. Environment setup requires Node.js 18.20+. Clone code with 'git clone https://github.com/Hyk260/PureChat.git', install dependencies with 'pnpm install', start project with 'pnpm dev', and build with 'pnpm build'.

lemonade

Lemonade is a tool that helps users run local Large Language Models (LLMs) with high performance by configuring state-of-the-art inference engines for their Neural Processing Units (NPUs) and Graphics Processing Units (GPUs). It is used by startups, research teams, and large companies to run LLMs efficiently. Lemonade provides a high-level Python API for direct integration of LLMs into Python applications and a CLI for mixing and matching LLMs with various features like prompting templates, accuracy testing, performance benchmarking, and memory profiling. The tool supports both GGUF and ONNX models and allows importing custom models from Hugging Face using the Model Manager. Lemonade is designed to be easy to use and switch between different configurations at runtime, making it a versatile tool for running LLMs locally.

Home-AssistantConfig

Bear Stone Smart Home Documentation is a live, personal Home Assistant configuration shared for browsing and inspiration. It provides real-world automations, scripts, and scenes for users to reverse-engineer patterns and adapt to their own setups. The repository layout includes reusable config under `config/`, with runtime artifacts hidden by `.gitignore`. The platform runs on Docker/compose, and featured examples cover various smart home scenarios like alarm monitoring, lighting control, and presence awareness. The repository also includes a network diagram and lists Docker add-ons & utilities, gear tied to real automations, and affiliate links for supported hardware.

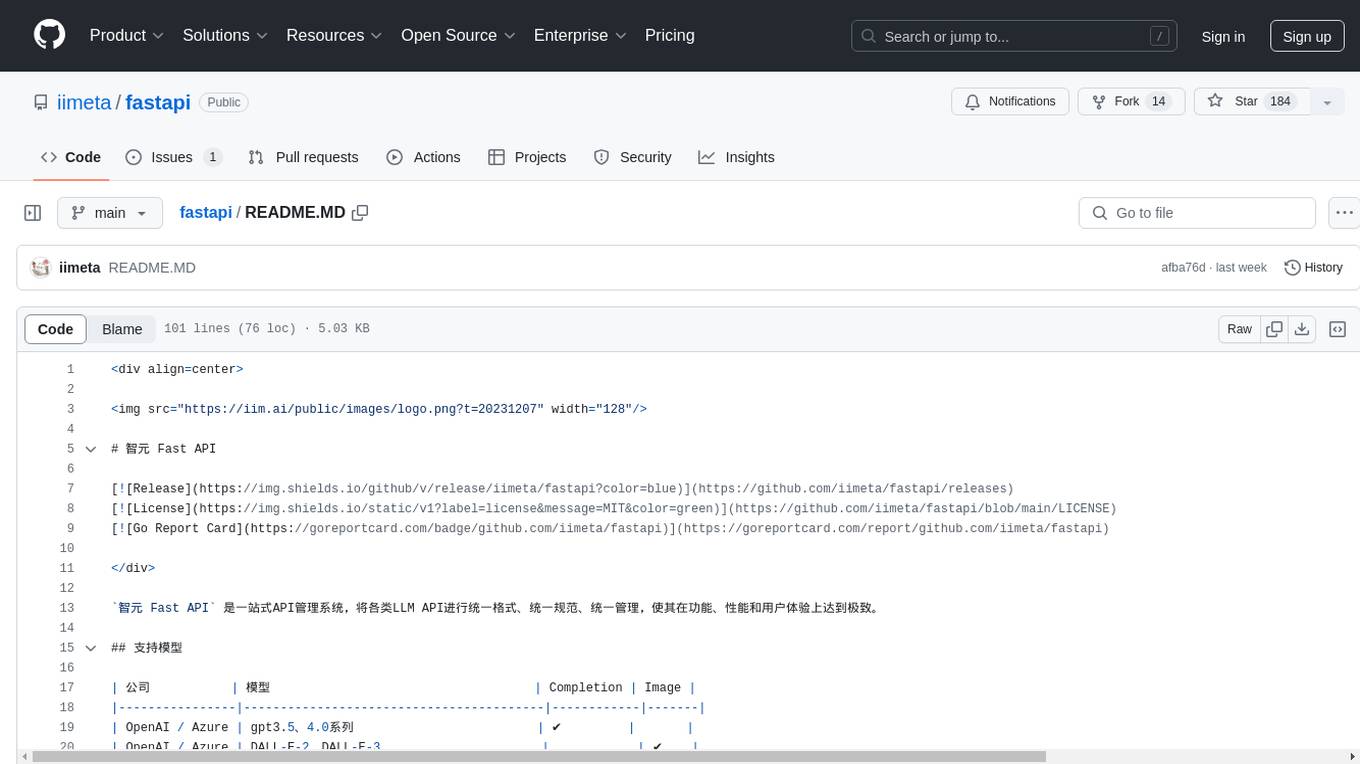

fastapi

智元 Fast API is a one-stop API management system that unifies various LLM APIs in terms of format, standards, and management, achieving the ultimate in functionality, performance, and user experience. It supports various models from companies like OpenAI, Azure, Baidu, Keda Xunfei, Alibaba Cloud, Zhifu AI, Google, DeepSeek, 360 Brain, and Midjourney. The project provides user and admin portals for preview, supports cluster deployment, multi-site deployment, and cross-zone deployment. It also offers Docker deployment, a public API site for registration, and screenshots of the admin and user portals. The API interface is similar to OpenAI's interface, and the project is open source with repositories for API, web, admin, and SDK on GitHub and Gitee.

awesome-LangGraph

Awesome LangGraph is a curated list of projects, resources, and tools for building stateful, multi-actor applications with LangGraph. It provides valuable resources for developers at all stages of development, from beginners to those building production-ready systems. The repository covers core ecosystem components, LangChain ecosystem, LangGraph platform, official resources, starter templates, pre-built agents, example applications, development tools, community projects, AI assistants, content & media, knowledge & retrieval, finance & business, sustainability, learning resources, companies using LangGraph, contributing guidelines, and acknowledgments.

new-api

New API is a next-generation large model gateway and AI asset management system that provides a wide range of features, including a new UI interface, multi-language support, online recharge function, key query for usage quota, compatibility with the original One API database, model charging by usage count, channel weighted randomization, data dashboard, token grouping and model restrictions, support for various authorization login methods, support for Rerank models, OpenAI Realtime API, Claude Messages format, reasoning effort setting, content reasoning, user-specific model rate limiting, request format conversion, cache billing support, and various model support such as gpts, Midjourney-Proxy, Suno API, custom channels, Rerank models, Claude Messages format, Dify, and more.

ai-dev-kit

The AI Dev Kit is a comprehensive toolkit designed to enhance AI-driven development on Databricks. It provides trusted sources for AI coding assistants like Claude Code and Cursor to build faster and smarter on Databricks. The kit includes features such as Spark Declarative Pipelines, Databricks Jobs, AI/BI Dashboards, Unity Catalog, Genie Spaces, Knowledge Assistants, MLflow Experiments, Model Serving, Databricks Apps, and more. Users can choose from different adventures like installing the kit, using the visual builder app, teaching AI assistants Databricks patterns, executing Databricks actions, or building custom integrations with the core library. The kit also includes components like databricks-tools-core, databricks-mcp-server, databricks-skills, databricks-builder-app, and ai-dev-project.

For similar tasks

langfuse-js

langfuse-js is a modular mono repo for the Langfuse JS/TS client libraries. It includes packages for Langfuse API client, tracing, OpenTelemetry export helpers, OpenAI integration, and LangChain integration. The SDK is currently in version 4 and offers universal JavaScript environments support as well as Node.js 20+. The repository provides documentation, reference materials, and development instructions for managing the monorepo with pnpm. It is licensed under MIT.

strictjson

Strict JSON is a framework designed to handle JSON outputs with complex structures, fixing issues that standard json.loads() cannot resolve. It provides functionalities for parsing LLM outputs into dictionaries, supporting various data types, type forcing, and error correction. The tool allows easy integration with OpenAI JSON Mode and offers community support through tutorials and discussions. Users can download the package via pip, set up API keys, and import functions for usage. The tool works by extracting JSON values using regex, matching output values to literals, and ensuring all JSON fields are output by LLM with optional type checking. It also supports LLM-based checks for type enforcement and error correction loops.

hayhooks

Hayhooks is a tool that simplifies the deployment and serving of Haystack pipelines as REST APIs. It allows users to wrap their pipelines with custom logic and expose them via HTTP endpoints, including OpenAI-compatible chat completion endpoints. With Hayhooks, users can easily convert their Haystack pipelines into API services with minimal boilerplate code.

innoshop

InnoShop is an innovative open-source e-commerce system based on Laravel 12. It supports multiple languages, multiple currencies, and is integrated with OpenAI. The system features plugin mechanisms and theme template development for enhanced user experience and system extensibility. It is globally oriented, user-friendly, and based on the latest technology with deep AI integration.

For similar jobs

sweep

Sweep is an AI junior developer that turns bugs and feature requests into code changes. It automatically handles developer experience improvements like adding type hints and improving test coverage.

teams-ai

The Teams AI Library is a software development kit (SDK) that helps developers create bots that can interact with Teams and Microsoft 365 applications. It is built on top of the Bot Framework SDK and simplifies the process of developing bots that interact with Teams' artificial intelligence capabilities. The SDK is available for JavaScript/TypeScript, .NET, and Python.

ai-guide

This guide is dedicated to Large Language Models (LLMs) that you can run on your home computer. It assumes your PC is a lower-end, non-gaming setup.

classifai

Supercharge WordPress Content Workflows and Engagement with Artificial Intelligence. Tap into leading cloud-based services like OpenAI, Microsoft Azure AI, Google Gemini and IBM Watson to augment your WordPress-powered websites. Publish content faster while improving SEO performance and increasing audience engagement. ClassifAI integrates Artificial Intelligence and Machine Learning technologies to lighten your workload and eliminate tedious tasks, giving you more time to create original content that matters.

chatbot-ui

Chatbot UI is an open-source AI chat app that allows users to create and deploy their own AI chatbots. It is easy to use and can be customized to fit any need. Chatbot UI is perfect for businesses, developers, and anyone who wants to create a chatbot.

BricksLLM

BricksLLM is a cloud native AI gateway written in Go. Currently, it provides native support for OpenAI, Anthropic, Azure OpenAI and vLLM. BricksLLM aims to provide enterprise level infrastructure that can power any LLM production use cases. Here are some use cases for BricksLLM: * Set LLM usage limits for users on different pricing tiers * Track LLM usage on a per user and per organization basis * Block or redact requests containing PIIs * Improve LLM reliability with failovers, retries and caching * Distribute API keys with rate limits and cost limits for internal development/production use cases * Distribute API keys with rate limits and cost limits for students

uAgents

uAgents is a Python library developed by Fetch.ai that allows for the creation of autonomous AI agents. These agents can perform various tasks on a schedule or take action on various events. uAgents are easy to create and manage, and they are connected to a fast-growing network of other uAgents. They are also secure, with cryptographically secured messages and wallets.

griptape

Griptape is a modular Python framework for building AI-powered applications that securely connect to your enterprise data and APIs. It offers developers the ability to maintain control and flexibility at every step. Griptape's core components include Structures (Agents, Pipelines, and Workflows), Tasks, Tools, Memory (Conversation Memory, Task Memory, and Meta Memory), Drivers (Prompt and Embedding Drivers, Vector Store Drivers, Image Generation Drivers, Image Query Drivers, SQL Drivers, Web Scraper Drivers, and Conversation Memory Drivers), Engines (Query Engines, Extraction Engines, Summary Engines, Image Generation Engines, and Image Query Engines), and additional components (Rulesets, Loaders, Artifacts, Chunkers, and Tokenizers). Griptape enables developers to create AI-powered applications with ease and efficiency.