imodelsX

Interpret text data using LLMs (scikit-learn compatible).

Stars: 170

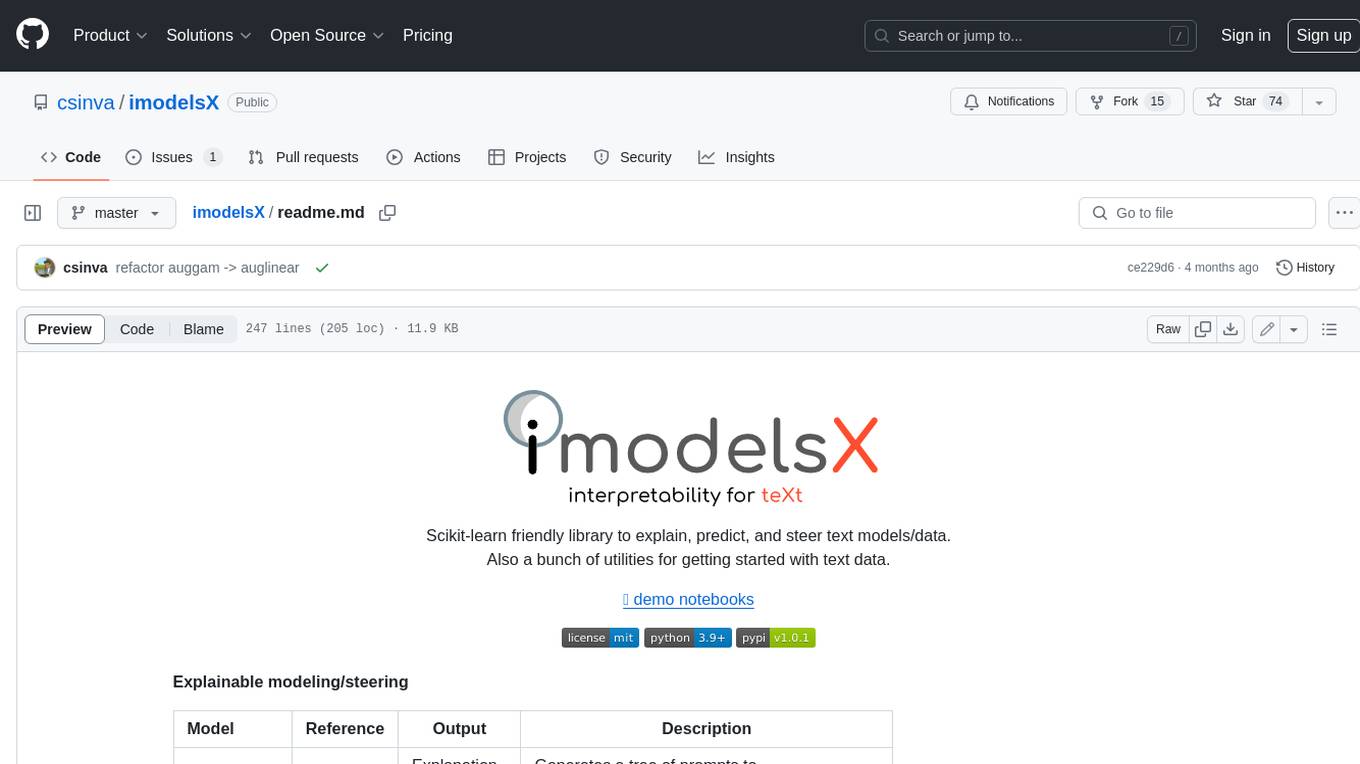

imodelsX is a Scikit-learn friendly library that provides tools for explaining, predicting, and steering text models/data. It also includes a collection of utilities for getting started with text data.

**Explainable modeling/steering**

| Model | Reference | Output | Description |
|---|---|---|---|
| Tree-Prompt | [Reference](https://github.com/microsoft/AugML/tree/main/imodelsX/tree_prompt) | Explanation + Steering | Generates a tree of prompts to steer an LLM (_Official_) |
| iPrompt | [Reference](https://github.com/microsoft/AugML/tree/main/imodelsX/iprompt) | Explanation + Steering | Generates a prompt that explains patterns in data (_Official_) |
| AutoPrompt | [Reference](https://github.com/microsoft/AugML/tree/main/imodelsX/autoprompt) | Explanation + Steering | Find a natural-language prompt using input-gradients (⌛ In progress) |
| D3 | [Reference](https://github.com/microsoft/AugML/tree/main/imodelsX/d3) | Explanation | Explain the difference between two distributions |
| SASC | [Reference](https://github.com/microsoft/AugML/tree/main/imodelsX/sasc) | Explanation | Explain a black-box text module using an LLM (_Official_) |
| Aug-Linear | [Reference](https://github.com/microsoft/AugML/tree/main/imodelsX/aug_linear) | Linear model | Fit better linear model using an LLM to extract embeddings (_Official_) |
| Aug-Tree | [Reference](https://github.com/microsoft/AugML/tree/main/imodelsX/aug_tree) | Decision tree | Fit better decision tree using an LLM to expand features (_Official_) |

**General utilities**

| Utility | Reference | Description |
|---|---|---|
| LLM wrapper | [Reference](https://github.com/microsoft/AugML/tree/main/imodelsX/llm) | Easily call different LLMs |
| Dataset wrapper | [Reference](https://github.com/microsoft/AugML/tree/main/imodelsX/data) | Download minimally processed huggingface datasets |
| Bag of Ngrams | [Reference](https://github.com/microsoft/AugML/tree/main/imodelsX/bag_of_ngrams) | Learn a linear model of ngrams |
| Linear Finetune | [Reference](https://github.com/microsoft/AugML/tree/main/imodelsX/linear_finetune) | Finetune a single linear layer on top of LLM embeddings |

**Related work**

* [imodels package](https://github.com/microsoft/interpretml/tree/main/imodels) (JOSS 2021) - interpretable ML package for concise, transparent, and accurate predictive modeling (sklearn-compatible).
* [Adaptive wavelet distillation](https://arxiv.org/abs/2111.06185) (NeurIPS 2021) - distilling a neural network into a concise wavelet model
* [Transformation importance](https://arxiv.org/abs/1912.04938) (ICLR 2020 workshop) - using simple reparameterizations, allows for calculating disentangled importances to transformations of the input (e.g. assigning importances to different frequencies)
* [Hierarchical interpretations](https://arxiv.org/abs/1807.03343) (ICLR 2019) - extends CD to CNNs / arbitrary DNNs, and aggregates explanations into a hierarchy
* [Interpretation regularization](https://arxiv.org/abs/2006.14340) (ICML 2020) - penalizes CD / ACD scores during training to make models generalize better
* [PDR interpretability framework](https://www.pnas.org/doi/10.1073/pnas.1814225116) (PNAS 2019) - an overarching framework for guiding and framing interpretable machine learning

README:

Scikit-learn friendly library to explain, predict, and steer text models/data.

Also a bunch of utilities for getting started with text data.

Explainable modeling/steering

| Model | Reference | Output | Description |
|---|---|---|---|
| Tree-Prompt | 🗂️, 🔗, 📄, 📖 | Explanation + Steering | Generates a tree of prompts to steer an LLM (Official) |
| iPrompt | 🗂️, 🔗, 📄, 📖 | Explanation + Steering | Generates a prompt that explains patterns in data (Official) |
| AutoPrompt | ㅤㅤ🗂️, 🔗, 📄 | Explanation + Steering | Find a natural-language prompt using input-gradients |
| D3 | 🗂️, 🔗, 📄, 📖 | Explanation | Explain the difference between two distributions |
| SASC | ㅤㅤ🗂️, 🔗, 📄 | Explanation | Explain a black-box text module using an LLM (Official) |
| Aug-Linear | 🗂️, 🔗, 📄, 📖 | Linear model | Fit better linear model using an LLM to extract embeddings (Official) |
| Aug-Tree | 🗂️, 🔗, 📄, 📖 | Decision tree | Fit better decision tree using an LLM to expand features (Official) |
| QAEmb | 🗂️, 🔗, 📄, 📖 | Explainable embedding | Generate interpretable embeddings by asking LLMs questions (Official) |
| KAN | 🗂️, 🔗, 📄, 📖 | Small network | Fit 2-layer Kolmogorov-Arnold network |

📖 Demo notebooks 🗂️ Doc 🔗 Reference code 📄 Research paper

⌛ We plan to support other interpretable algorithms like RLPrompt, CBMs, and NBDT. If you want to contribute an algorithm, feel free to open a PR 😄

General utilities

| Utility | Description |
|---|---|
| 🗂️ LLM wrapper | Easily call different LLMs |
| 🗂️ Dataset wrapper | Download minimally processed huggingface datasets |
| 🗂️ Bag of Ngrams | Learn a linear model of ngrams |
| 🗂️ Linear Finetune | Finetune a single linear layer on top of LLM embeddings |

Installation: pip install imodelsx (or, for more control, clone and install from source)

Demos: see the demo notebooks

Tree-Prompt

```python
from imodelsx import TreePromptClassifier
import datasets
import numpy as np
from sklearn.tree import plot_tree
import matplotlib.pyplot as plt

# set up data
rng = np.random.default_rng(seed=42)
dset_train = datasets.load_dataset('rotten_tomatoes')['train']
dset_train = dset_train.select(rng.choice(
    len(dset_train), size=100, replace=False))
dset_val = datasets.load_dataset('rotten_tomatoes')['validation']
dset_val = dset_val.select(rng.choice(
    len(dset_val), size=100, replace=False))

# set up arguments
prompts = [
    "This movie is",
    " Positive or Negative? The movie was",
    " The sentiment of the movie was",
    " The plot of the movie was really",
    " The acting in the movie was",
]
verbalizer = {0: " Negative.", 1: " Positive."}
checkpoint = "gpt2"

# fit model
m = TreePromptClassifier(
    checkpoint=checkpoint,
    prompts=prompts,
    verbalizer=verbalizer,
    cache_prompt_features_dir=None,  # 'cache_prompt_features_dir/gp2',
)
m.fit(dset_train["text"], dset_train["label"])

# compute accuracy
preds = m.predict(dset_val['text'])
print('\nTree-Prompt acc (val) ->',
      np.mean(preds == dset_val['label']))  # -> 0.7

# compare to accuracy for individual prompts
for i, prompt in enumerate(prompts):
    print(i, prompt, '->', m.prompt_accs_[i])  # -> 0.65, 0.5, 0.5, 0.56, 0.51

# visualize decision tree
plot_tree(
    m.clf_,
    fontsize=10,
    feature_names=m.feature_names_,
    class_names=list(verbalizer.values()),
    filled=True,
)
plt.show()
```

iPrompt

```python
from imodelsx import explain_dataset_iprompt, get_add_two_numbers_dataset

# get a simple dataset of adding two numbers
input_strings, output_strings = get_add_two_numbers_dataset(num_examples=100)
for i in range(5):
    print(repr(input_strings[i]), repr(output_strings[i]))

# explain the relationship between the inputs and outputs
# with a natural-language prompt string
prompts, metadata = explain_dataset_iprompt(
    input_strings=input_strings,
    output_strings=output_strings,
    checkpoint='EleutherAI/gpt-j-6B',  # which language model to use
    num_learned_tokens=3,  # how long of a prompt to learn
    n_shots=3,  # shots per example
    n_epochs=15,  # how many epochs to search
    verbose=0,  # how much to print
    llm_float16=True,  # whether to load the model in float16
)
# prompts is a list of found natural-language prompt strings
```

D3

```python
from imodelsx import explain_dataset_d3

# positive_samples and negative_samples are placeholders: define them with your own data
hypotheses, hypothesis_scores = explain_dataset_d3(
    pos=positive_samples,  # List[str] of positive examples
    neg=negative_samples,  # another List[str]
    num_steps=100,
    num_folds=2,
    batch_size=64,
)
```

SASC

Here, we explain a module rather than a dataset.
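As a rough picture of what explaining a module involves, one can start by asking which inputs make the module respond most strongly. The sketch below shows only that ranking step using the standard library. It is an illustration under assumptions, not the `explain_module_sasc` implementation: `top_ngrams` and `length_module` are hypothetical helpers, and only whitespace-separated unigrams are considered. In SASC, an LLM would then summarize the top-ranked ngrams into a natural-language explanation.

```python
from typing import Callable, List, Sequence, Tuple


def top_ngrams(
    texts: Sequence[str],
    module: Callable[[List[str]], List[float]],
    k: int = 3,
) -> List[Tuple[str, float]]:
    """Return the k unique unigrams with the largest module response."""
    # collect unique unigrams (sorted so the result is deterministic)
    unigrams = sorted({tok for text in texts for tok in text.split()})
    responses = module(unigrams)
    ranked = sorted(zip(unigrams, responses), key=lambda pair: pair[1], reverse=True)
    return ranked[:k]


def length_module(str_list: List[str]) -> List[float]:
    """Toy module (hypothetical): responds to the length of each string."""
    return [float(len(s)) for s in str_list]


texts = ["red", "blue", "x", "1", "2", "hippopotamus", "elephant", "rhinoceros"]
top = top_ngrams(texts, length_module, k=3)
print(top)
```

With this toy module the top-ranked strings are the animal names, which is the pattern a summarizing LLM would be expected to pick up.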

```python
import numpy as np
from imodelsx import explain_module_sasc

# a toy module that responds to the length of a string
mod = lambda str_list: np.array([len(s) for s in str_list])

# a toy dataset where the longest strings are animals
text_str_list = ["red", "blue", "x", "1", "2", "hippopotamus", "elephant", "rhinoceros"]
explanation_dict = explain_module_sasc(
    text_str_list,
    mod,
    ngrams=1,
)
```

Aug-Linear and Aug-Tree

Use these just like a scikit-learn model. During training, they fit better features via LLMs, but at test-time they are extremely fast and completely transparent.
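To make the test-time claim concrete: once fitted, a linear model over ngrams can be read as a lookup table of per-ngram coefficients (the `coefs_dict_` attribute printed in the example that follows), so scoring a text needs no LLM call. The following is a minimal pure-Python sketch of that idea, not the library's implementation. The coefficient values, the whitespace tokenizer, the zero intercept, and the helper names `extract_ngrams` and `linear_score` are all assumptions made for illustration.

```python
from typing import Dict, List


def extract_ngrams(text: str, max_n: int = 2) -> List[str]:
    """Return all word ngrams of length 1..max_n (lowercased, whitespace tokens)."""
    tokens = text.lower().split()
    ngrams = []
    for n in range(1, max_n + 1):
        for i in range(len(tokens) - n + 1):
            ngrams.append(" ".join(tokens[i:i + n]))
    return ngrams


def linear_score(text: str, coefs: Dict[str, float], intercept: float = 0.0) -> float:
    """Sum the coefficients of the ngrams found in the text; unseen ngrams add 0."""
    return intercept + sum(coefs.get(ng, 0.0) for ng in extract_ngrams(text))


# Hypothetical coefficients standing in for a fitted model's coefs_dict_
toy_coefs = {"great": 1.5, "boring": -2.0, "not great": -2.5}

text = "The plot was not great"
score = linear_score(text, toy_coefs)
# per-ngram contributions are the explanation: each one is a single number
contributions = {ng: toy_coefs[ng] for ng in extract_ngrams(text) if ng in toy_coefs}
print(score, contributions)
```

Because every prediction is a sum of a handful of looked-up numbers, the contribution of each ngram can be inspected directly.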

```python
from imodelsx import AugLinearClassifier, AugTreeClassifier, AugLinearRegressor, AugTreeRegressor
import datasets
import numpy as np

# set up data
dset = datasets.load_dataset('rotten_tomatoes')['train']
dset = dset.select(np.random.choice(len(dset), size=300, replace=False))
dset_val = datasets.load_dataset('rotten_tomatoes')['validation']
dset_val = dset_val.select(np.random.choice(len(dset_val), size=300, replace=False))

# fit model
m = AugLinearClassifier(
    checkpoint='textattack/distilbert-base-uncased-rotten-tomatoes',
    ngrams=2,  # use bigrams
)
m.fit(dset['text'], dset['label'])

# predict
preds = m.predict(dset_val['text'])
print('acc_val', np.mean(preds == dset_val['label']))

# interpret
print('Total ngram coefficients: ', len(m.coefs_dict_))
print('Most positive ngrams')
for k, v in sorted(m.coefs_dict_.items(), key=lambda item: item[1], reverse=True)[:8]:
    print('\t', k, round(v, 2))
print('Most negative ngrams')
for k, v in sorted(m.coefs_dict_.items(), key=lambda item: item[1])[:8]:
    print('\t', k, round(v, 2))
```

KAN

```python
import imodelsx
from sklearn.datasets import make_classification, make_regression
from sklearn.metrics import accuracy_score
import numpy as np

X, y = make_classification(n_samples=5000, n_features=5, n_informative=3)
model = imodelsx.KANClassifier(hidden_layer_size=64, device='cpu',
                               regularize_activation=1.0, regularize_entropy=1.0)
model.fit(X, y)
y_pred = model.predict(X)  # note: evaluated on the same data used for fitting
print('Test acc', accuracy_score(y, y_pred))

# now try regression
X, y = make_regression(n_samples=5000, n_features=5, n_informative=3)
model = imodelsx.kan.KANRegressor(hidden_layer_size=64, device='cpu',
                                  regularize_activation=1.0, regularize_entropy=1.0)
model.fit(X, y)
y_pred = model.predict(X)  # note: evaluated on the same data used for fitting
print('Test correlation', np.corrcoef(y, y_pred.flatten())[0, 1])
```

Bag of Ngrams and Linear Finetune

Easy-to-fit baselines that follow the sklearn API.

```python
from imodelsx import LinearFinetuneClassifier, LinearNgramClassifier

# fit a simple one-layer finetune on top of LLM embeddings
# (dset and dset_val are the rotten_tomatoes splits loaded in the AugLinearClassifier example)
m = LinearFinetuneClassifier(
    checkpoint='distilbert-base-uncased',
)
m.fit(dset['text'], dset['label'])
preds = m.predict(dset_val['text'])
acc = (preds == dset_val['label']).mean()
print('validation acc', acc)
```

LLM wrapper

Easy API for calling different language models with caching (much more lightweight than langchain).
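The caching behaviour can be pictured as a prompt-keyed store on disk: the first call for a prompt runs the model, and later calls for the same prompt read the saved result. The sketch below is an illustration under assumptions, not how `imodelsx.llm` is implemented. `CachedLLM`, `fake_llm`, the SHA-256 key, and the one-JSON-file-per-prompt layout are hypothetical choices.

```python
import hashlib
import json
import os
import tempfile
from typing import Callable, List


class CachedLLM:
    """Wrap a prompt -> completion callable with a simple on-disk cache."""

    def __init__(self, generate: Callable[[str], str], cache_dir: str):
        self.generate = generate
        self.cache_dir = cache_dir
        os.makedirs(cache_dir, exist_ok=True)

    def _path(self, prompt: str) -> str:
        # hash the prompt so any string maps to a safe file name
        key = hashlib.sha256(prompt.encode("utf-8")).hexdigest()
        return os.path.join(self.cache_dir, key + ".json")

    def __call__(self, prompt: str) -> str:
        path = self._path(prompt)
        if os.path.exists(path):  # cache hit: skip the model entirely
            with open(path, encoding="utf-8") as f:
                return json.load(f)["completion"]
        completion = self.generate(prompt)  # cache miss: call the model once
        with open(path, "w", encoding="utf-8") as f:
            json.dump({"prompt": prompt, "completion": completion}, f)
        return completion


call_log: List[str] = []  # records every prompt that reaches the underlying model


def fake_llm(prompt: str) -> str:
    """Stand-in for a real model (hypothetical); appends a fixed continuation."""
    call_log.append(prompt)
    return prompt + " with you"


llm_cached = CachedLLM(fake_llm, tempfile.mkdtemp())
first = llm_cached("May the Force be")
second = llm_cached("May the Force be")  # served from the cache
print(first == second, len(call_log))
```

Keying the cache on the prompt alone is the simplest design; a fuller version would also include the checkpoint name and generation settings in the key.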

```python
import imodelsx.llm

# supports any huggingface checkpoint or openai checkpoint (including chat models)
llm = imodelsx.llm.get_llm(
    checkpoint="gpt2-xl",  # text-davinci-003, gpt-3.5-turbo, ...
    CACHE_DIR=".cache",
)
out = llm("May the Force be")
llm("May the Force be")  # when computing the same string again, uses the cache
```

Dataset wrapper

API for loading huggingface datasets with basic preprocessing.

```python
import imodelsx.data

dset, dataset_key_text = imodelsx.data.load_huggingface_dataset('ag_news')
# Ensures that dset has a split named 'train' and 'validation',
# and that the input data is contained for each split in a column given by {dataset_key_text}
```

Related work

- imodels package (JOSS 2021 github) - interpretable ML package for concise, transparent, and accurate predictive modeling (sklearn-compatible).
- Rethinking Interpretability in the Era of Large Language Models (arXiv 2024 pdf) - overview of using LLMs to interpret datasets and yield natural-language explanations
- Experiments in using clinical rule development: github
- Experiments in automatically generating brain explanations: github
- Interpretation regularization (ICML 2020 pdf, github) - penalizes CD / ACD scores during training to make models generalize better
