pytorch-lightning

Pretrain, finetune ANY AI model of ANY size on multiple GPUs, TPUs with zero code changes.

Stars: 30077

PyTorch Lightning is a framework for training and deploying AI models. It provides a high-level API that abstracts away the low-level details of PyTorch, making it easier to write and maintain complex models. Lightning also includes a number of features that make it easy to train and deploy models on multiple GPUs or TPUs, and to track and visualize training progress. PyTorch Lightning is used by a wide range of organizations, including Google, Facebook, and Microsoft. It is also used by researchers at top universities around the world.

Here are some of the benefits of using PyTorch Lightning:

* **Increased productivity:** Lightning's high-level API makes it easy to write and maintain complex models. This can save you time and effort, and allow you to focus on the research or business problem you're trying to solve.
* **Improved performance:** Lightning's optimized training loops and data loading pipelines can help you train models faster and with better performance.
* **Easier deployment:** Lightning makes it easy to deploy models to a variety of platforms, including the cloud, on-premises servers, and mobile devices.
* **Better reproducibility:** Lightning's logging and visualization tools make it easy to track and reproduce training results.

README:

The deep learning framework to pretrain, finetune and deploy AI models.

NEW- Deploying models? Check out LitServe, the PyTorch Lightning for model serving


Training models in plain PyTorch is tedious and error-prone - you have to manually handle things like backprop, mixed precision, multi-GPU, and distributed training, often rewriting code for every new project. PyTorch Lightning organizes PyTorch code to automate those complexities so you can focus on your model and data, while keeping full control and scaling from CPU to multi-node without changing your core code. But if you want control of those things, you can still opt into more DIY.

Fun analogy: If PyTorch is JavaScript, PyTorch Lightning is ReactJS or NextJS.

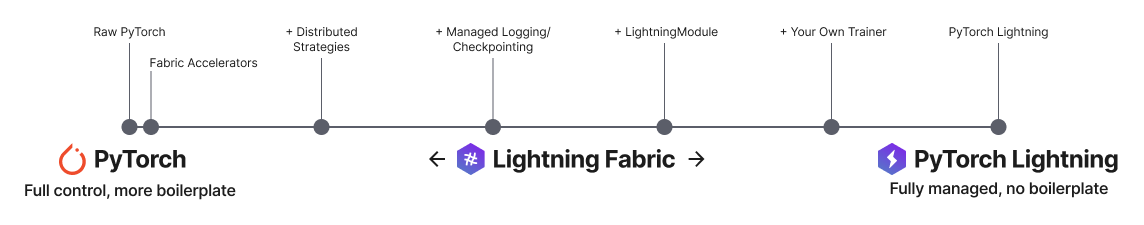

PyTorch Lightning: Train and deploy PyTorch at scale.

Lightning Fabric: Expert control.

Lightning gives you granular control over how much abstraction you want to add over PyTorch.

Install Lightning:

    pip install lightning

Advanced install options

    pip install lightning['extra']
    conda install lightning -c conda-forge

Install future release from the source

    pip install https://github.com/Lightning-AI/lightning/archive/refs/heads/release/stable.zip -U

Install nightly from the source (no guarantees)

    pip install https://github.com/Lightning-AI/lightning/archive/refs/heads/master.zip -U

or from testing PyPI

    pip install -U -i https://test.pypi.org/simple/ pytorch-lightning

Define the training workflow. Here's a toy example (explore real examples):

    # main.py
    # ! pip install torchvision
    import torch, torch.nn as nn, torch.utils.data as data, torchvision as tv, torch.nn.functional as F
    import lightning as L

    # --------------------------------
    # Step 1: Define a LightningModule
    # --------------------------------
    # A LightningModule (nn.Module subclass) defines a full *system*
    # (ie: an LLM, diffusion model, autoencoder, or simple image classifier).


    class LitAutoEncoder(L.LightningModule):
        def __init__(self):
            super().__init__()
            self.encoder = nn.Sequential(nn.Linear(28 * 28, 128), nn.ReLU(), nn.Linear(128, 3))
            self.decoder = nn.Sequential(nn.Linear(3, 128), nn.ReLU(), nn.Linear(128, 28 * 28))

        def forward(self, x):
            # in lightning, forward defines the prediction/inference actions
            embedding = self.encoder(x)
            return embedding

        def training_step(self, batch, batch_idx):
            # training_step defines the train loop. It is independent of forward
            x, _ = batch
            x = x.view(x.size(0), -1)
            z = self.encoder(x)
            x_hat = self.decoder(z)
            loss = F.mse_loss(x_hat, x)
            self.log("train_loss", loss)
            return loss

        def configure_optimizers(self):
            optimizer = torch.optim.Adam(self.parameters(), lr=1e-3)
            return optimizer


    # -------------------
    # Step 2: Define data
    # -------------------
    dataset = tv.datasets.MNIST(".", download=True, transform=tv.transforms.ToTensor())
    train, val = data.random_split(dataset, [55000, 5000])

    # -------------------
    # Step 3: Train
    # -------------------
    autoencoder = LitAutoEncoder()
    trainer = L.Trainer()
    trainer.fit(autoencoder, data.DataLoader(train), data.DataLoader(val))

Run the model on your terminal

    pip install torchvision
    python main.py

PyTorch Lightning is just organized PyTorch - Lightning disentangles PyTorch code to decouple the science from the engineering.
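To make the "science versus engineering" split concrete, here is a dependency-free toy sketch. It is not Lightning's implementation: `ToyModule` and `ToyTrainer` are hypothetical names, and the model is a single scalar weight. It only illustrates the pattern in which the module supplies `training_step` and `configure_optimizers`, while a separate trainer owns the loop that calls them.

```python
# Toy illustration only: none of these classes are part of Lightning.
class ToyModule:
    """Holds the 'science': a single weight w fitted so that w * x ~= y."""

    def __init__(self):
        self.w = 0.0

    def training_step(self, batch):
        # Return the squared error and its gradient with respect to w.
        x, y = batch
        error = self.w * x - y
        return error ** 2, 2 * error * x

    def configure_optimizers(self):
        # Plain SGD with a fixed learning rate, expressed as an update function.
        lr = 0.05

        def step(grad):
            self.w -= lr * grad

        return step


class ToyTrainer:
    """Holds the 'engineering': the loop that drives the module's hooks."""

    def __init__(self, max_epochs):
        self.max_epochs = max_epochs

    def fit(self, module, batches):
        optimizer_step = module.configure_optimizers()
        loss = None
        for _ in range(self.max_epochs):
            for batch in batches:
                loss, grad = module.training_step(batch)
                optimizer_step(grad)
        return loss


module = ToyModule()
final_loss = ToyTrainer(max_epochs=50).fit(module, [(1.0, 2.0), (2.0, 4.0), (3.0, 6.0)])
print(round(module.w, 3))
```

Swapping the loop (more epochs, a different schedule) would only touch `ToyTrainer`, and changing the model would only touch `ToyModule`, which is the separation the sentence above describes.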

Explore various types of training possible with PyTorch Lightning. Pretrain and finetune ANY kind of model to perform ANY task like classification, segmentation, summarization and more:

| Task | Description |
|---|---|
| Hello world | Pretrain - Hello world example |
| Image classification | Finetune - ResNet-34 model to classify images of cars |
| Image segmentation | Finetune - ResNet-50 model to segment images |
| Object detection | Finetune - Faster R-CNN model to detect objects |
| Text classification | Finetune - text classifier (BERT model) |
| Text summarization | Finetune - text summarization (Hugging Face transformer model) |
| Audio generation | Finetune - audio generator (transformer model) |
| LLM finetuning | Finetune - LLM (Meta Llama 3.1 8B) |
| Image generation | Pretrain - Image generator (diffusion model) |
| Recommendation system | Train - recommendation system (factorization and embedding) |
| Time-series forecasting | Train - Time-series forecasting with LSTM |

Lightning has 40+ advanced features designed for professional AI research at scale.

Here are some examples:

Train on 1000s of GPUs without code changes

    from lightning import Trainer

    # 8 GPUs
    # no code changes needed
    trainer = Trainer(accelerator="gpu", devices=8)

    # 256 GPUs (8 per node across 32 nodes)
    trainer = Trainer(accelerator="gpu", devices=8, num_nodes=32)

Train on other accelerators like TPUs without code changes

    # no code changes needed
    trainer = Trainer(accelerator="tpu", devices=8)

16-bit precision

    # no code changes needed
    trainer = Trainer(precision="16-mixed")

Experiment managers

    from lightning.pytorch import loggers

    # tensorboard
    trainer = Trainer(logger=loggers.TensorBoardLogger("logs/"))

    # weights and biases
    trainer = Trainer(logger=loggers.WandbLogger())

    # comet
    trainer = Trainer(logger=loggers.CometLogger())

    # mlflow
    trainer = Trainer(logger=loggers.MLFlowLogger())

    # neptune
    trainer = Trainer(logger=loggers.NeptuneLogger())

    # ... and dozens more

Early Stopping

    from lightning.pytorch.callbacks import EarlyStopping

    es = EarlyStopping(monitor="val_loss")
    trainer = Trainer(callbacks=[es])

Checkpointing

    from lightning.pytorch.callbacks import ModelCheckpoint

    checkpointing = ModelCheckpoint(monitor="val_loss")
    trainer = Trainer(callbacks=[checkpointing])

Export to torchscript (JIT) (production use)

    # torchscript
    autoencoder = LitAutoEncoder()
    torch.jit.save(autoencoder.to_torchscript(), "model.pt")

Export to ONNX (production use)

    # onnx
    import os
    import tempfile

    with tempfile.NamedTemporaryFile(suffix=".onnx", delete=False) as tmpfile:
        autoencoder = LitAutoEncoder()
        # forward() runs the encoder, whose first layer expects 28 * 28 input features
        input_sample = torch.randn((1, 28 * 28))
        autoencoder.to_onnx(tmpfile.name, input_sample, export_params=True)
        os.path.isfile(tmpfile.name)

- Models become hardware agnostic

- Code is clear to read because engineering code is abstracted away

- Easier to reproduce

- Make fewer mistakes because lightning handles the tricky engineering

- Keeps all the flexibility (LightningModules are still PyTorch modules), but removes a ton of boilerplate

- Lightning has dozens of integrations with popular machine learning tools.

- Tested rigorously with every new PR. We test every combination of PyTorch and Python supported versions, every OS, multi GPUs and even TPUs.

- Minimal running speed overhead (about 300 ms per epoch compared with pure PyTorch).
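The `EarlyStopping(monitor="val_loss")` and `ModelCheckpoint(monitor="val_loss")` callbacks in the feature examples above both watch a logged metric. As a rough, dependency-free sketch of the underlying idea (not Lightning's implementation), the hypothetical `PatienceStopper` below remembers the best value seen so far and signals a stop after a number of epochs without improvement. The `patience` and `min_delta` parameters and the "lower is better" comparison are assumptions made for illustration.

```python
class PatienceStopper:
    """Hypothetical helper: stop when a 'lower is better' metric stalls."""

    def __init__(self, patience=3, min_delta=0.0):
        self.patience = patience      # epochs to wait without improvement
        self.min_delta = min_delta    # smallest change that counts as improvement
        self.best = float("inf")      # best metric value seen so far
        self.bad_epochs = 0           # consecutive epochs without improvement

    def should_stop(self, value):
        if value < self.best - self.min_delta:
            # Improvement: remember it (a checkpoint callback would save here).
            self.best = value
            self.bad_epochs = 0
        else:
            self.bad_epochs += 1
        return self.bad_epochs >= self.patience


# Made-up validation losses, one per epoch, purely for demonstration.
val_losses = [0.90, 0.70, 0.65, 0.66, 0.67, 0.65, 0.64]
stopper = PatienceStopper(patience=3)
stopped_at = None
for epoch, loss in enumerate(val_losses):
    if stopper.should_stop(loss):
        stopped_at = epoch
        break
```

With these made-up numbers the loop ends before the final epoch is reached, which shows the trade-off of a small patience: training can stop just before a later improvement.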

Run on any device at any scale with expert-level control over PyTorch training loop and scaling strategy. You can even write your own Trainer.

Fabric is designed for the most complex models of any size: foundation model scaling, LLMs, diffusion, transformers, reinforcement learning, and active learning.

What to change:

    + import lightning as L
      import torch; import torchvision as tv

      dataset = tv.datasets.CIFAR10("data", download=True,
                                    train=True,
                                    transform=tv.transforms.ToTensor())

    + fabric = L.Fabric()
    + fabric.launch()

      model = tv.models.resnet18()
      optimizer = torch.optim.SGD(model.parameters(), lr=0.001)
    - device = "cuda" if torch.cuda.is_available() else "cpu"
    - model.to(device)
    + model, optimizer = fabric.setup(model, optimizer)

      dataloader = torch.utils.data.DataLoader(dataset, batch_size=8)
    + dataloader = fabric.setup_dataloaders(dataloader)

      model.train()
      num_epochs = 10
      for epoch in range(num_epochs):
          for batch in dataloader:
              inputs, labels = batch
    -         inputs, labels = inputs.to(device), labels.to(device)
              optimizer.zero_grad()
              outputs = model(inputs)
              loss = torch.nn.functional.cross_entropy(outputs, labels)
    -         loss.backward()
    +         fabric.backward(loss)
              optimizer.step()
              print(loss.data)

Resulting Fabric Code (copy me!):

    import lightning as L
    import torch; import torchvision as tv

    dataset = tv.datasets.CIFAR10("data", download=True,
                                  train=True,
                                  transform=tv.transforms.ToTensor())

    fabric = L.Fabric()
    fabric.launch()

    model = tv.models.resnet18()
    optimizer = torch.optim.SGD(model.parameters(), lr=0.001)
    model, optimizer = fabric.setup(model, optimizer)

    dataloader = torch.utils.data.DataLoader(dataset, batch_size=8)
    dataloader = fabric.setup_dataloaders(dataloader)

    model.train()
    num_epochs = 10
    for epoch in range(num_epochs):
        for batch in dataloader:
            inputs, labels = batch
            optimizer.zero_grad()
            outputs = model(inputs)
            loss = torch.nn.functional.cross_entropy(outputs, labels)
            fabric.backward(loss)
            optimizer.step()
            print(loss.data)

Easily switch from running on CPU to GPU (Apple Silicon, CUDA, …), TPU, multi-GPU or even multi-node training

    from lightning import Fabric

    # Use your available hardware
    # no code changes needed
    fabric = Fabric()

    # Run on GPUs (CUDA or MPS)
    fabric = Fabric(accelerator="gpu")

    # 8 GPUs
    fabric = Fabric(accelerator="gpu", devices=8)

    # 256 GPUs, multi-node
    fabric = Fabric(accelerator="gpu", devices=8, num_nodes=32)

    # Run on TPUs
    fabric = Fabric(accelerator="tpu")

Use state-of-the-art distributed training strategies (DDP, FSDP, DeepSpeed) and mixed precision out of the box

    # Use state-of-the-art distributed training techniques
    fabric = Fabric(strategy="ddp")
    fabric = Fabric(strategy="deepspeed")
    fabric = Fabric(strategy="fsdp")

    # Switch the precision
    fabric = Fabric(precision="16-mixed")
    fabric = Fabric(precision="64")

All the device logic boilerplate is handled for you

    # no more of this!
    - model.to(device)
    - batch.to(device)

Build your own custom Trainer using Fabric primitives for training checkpointing, logging, and more

    import lightning as L


    class MyCustomTrainer:
        def __init__(self, accelerator="auto", strategy="auto", devices="auto", precision="32-true"):
            self.fabric = L.Fabric(accelerator=accelerator, strategy=strategy, devices=devices, precision=precision)

        # loss_fn is passed in explicitly here; the original snippet used it without defining it
        def fit(self, model, optimizer, dataloader, loss_fn, max_epochs):
            self.fabric.launch()

            model, optimizer = self.fabric.setup(model, optimizer)
            dataloader = self.fabric.setup_dataloaders(dataloader)
            model.train()

            for epoch in range(max_epochs):
                for batch in dataloader:
                    input, target = batch
                    optimizer.zero_grad()
                    output = model(input)
                    loss = loss_fn(output, target)
                    self.fabric.backward(loss)
                    optimizer.step()

You can find a more extensive example in our examples.
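The multi-node settings above (`devices=8, num_nodes=32`, described as 256 GPUs) imply one training process per device. The following stdlib-only sketch shows the arithmetic behind that figure and one simple way a dataset could be split so that each process sees a disjoint slice. The function names are hypothetical, and the strided split is a simplification: real distributed samplers typically also shuffle and pad the index list.

```python
def world_size(devices_per_node, num_nodes):
    # Total number of training processes, one per device.
    return devices_per_node * num_nodes


def global_rank(node_rank, local_rank, devices_per_node):
    # Unique id of a process across all nodes.
    return node_rank * devices_per_node + local_rank


def shard_indices(num_samples, rank, world):
    # Strided split: process `rank` takes every `world`-th sample.
    return list(range(rank, num_samples, world))


world = world_size(devices_per_node=8, num_nodes=32)
last_rank = global_rank(node_rank=31, local_rank=7, devices_per_node=8)
first_shard = shard_indices(num_samples=1024, rank=0, world=world)
all_shards = [shard_indices(1024, r, world) for r in range(world)]
```

Every sample index lands in exactly one shard, so the processes together cover the dataset once per epoch without overlap.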

Lightning is rigorously tested across multiple CPUs, GPUs and TPUs and against major Python and PyTorch versions.

Current build statuses

| System / PyTorch ver. | 1.13 | 2.0 | 2.1 |
|---|---|---|---|
| Linux py3.9 [GPUs] | | | |
| Linux (multiple Python versions) | | | |
| OSX (multiple Python versions) | | | |
| Windows (multiple Python versions) | | | |

The Lightning community is maintained by

- 10+ core contributors, a mix of professional engineers, research scientists, and Ph.D. students from top AI labs.

- 800+ community contributors.

Want to help us build Lightning and reduce boilerplate for thousands of researchers? Learn how to make your first contribution here

Lightning is also part of the PyTorch ecosystem which requires projects to have solid testing, documentation and support.


Alternative AI tools for pytorch-lightning

Similar Open Source Tools


GraphGen

GraphGen is a framework for synthetic data generation guided by knowledge graphs. It enhances supervised fine-tuning for large language models (LLMs) by generating synthetic data based on a fine-grained knowledge graph. The tool identifies knowledge gaps in LLMs, prioritizes generating QA pairs targeting high-value knowledge, incorporates multi-hop neighborhood sampling, and employs style-controlled generation to diversify QA data. Users can use LLaMA-Factory and xtuner for fine-tuning LLMs after data generation.

infinity

Infinity is a high-throughput, low-latency REST API for serving vector embeddings, supporting all sentence-transformer models and frameworks. It is developed under the MIT License and powers inference behind Gradient.ai. The API allows users to deploy models from SentenceTransformers, offers fast inference backends utilizing various accelerators, dynamic batching for efficient processing, correct and tested implementation, and easy-to-use API built on FastAPI with Swagger documentation. Users can embed text, rerank documents, and perform text classification tasks using the tool. Infinity supports various models from Huggingface and provides flexibility in deployment via CLI, Docker, Python API, and cloud services like dstack. The tool is suitable for tasks like embedding, reranking, and text classification.

TempCompass

TempCompass is a benchmark designed to evaluate the temporal perception ability of Video LLMs. It encompasses a diverse set of temporal aspects and task formats to comprehensively assess the capability of Video LLMs in understanding videos. The benchmark includes conflicting videos to prevent models from relying on single-frame bias and language priors. Users can clone the repository, install required packages, prepare data, run inference using examples like Video-LLaVA and Gemini, and evaluate the performance of their models across different tasks such as Multi-Choice QA, Yes/No QA, Caption Matching, and Caption Generation.

libllm

libLLM is an open-source project designed for efficient inference of large language models (LLM) on personal computers and mobile devices. It is optimized to run smoothly on common devices, written in C++14 without external dependencies, and supports CUDA for accelerated inference. Users can build the tool for CPU only or with CUDA support, and run libLLM from the command line. Additionally, there are API examples available for Python and the tool can export Huggingface models.

OpenResearcher

OpenResearcher is a fully open agentic large language model designed for long-horizon deep research scenarios. It achieves an impressive 54.8% accuracy on BrowseComp-Plus, surpassing performance of GPT-4.1, Claude-Opus-4, Gemini-2.5-Pro, DeepSeek-R1, and Tongyi-DeepResearch. The tool is fully open-source, providing the training and evaluation recipe—including data, model, training methodology, and evaluation framework for everyone to progress deep research. It offers features like a fully open-source recipe, highly scalable and low-cost generation of deep research trajectories, and remarkable performance on deep research benchmarks.

unsloth

Unsloth is a tool that allows users to fine-tune large language models (LLMs) 2-5x faster with 80% less memory. It is a free and open-source tool that can be used to fine-tune LLMs such as Gemma, Mistral, Llama 2-5, TinyLlama, and CodeLlama 34b. Unsloth supports 4-bit and 16-bit QLoRA / LoRA fine-tuning via bitsandbytes. It also supports DPO (Direct Preference Optimization), PPO, and Reward Modelling. Unsloth is compatible with Hugging Face's TRL, Trainer, Seq2SeqTrainer, and Pytorch code. It is also compatible with NVIDIA GPUs since 2018+ (minimum CUDA Capability 7.0).

auto-round

AutoRound is an advanced weight-only quantization algorithm for low-bits LLM inference. It competes impressively against recent methods without introducing any additional inference overhead. The method adopts sign gradient descent to fine-tune rounding values and minmax values of weights in just 200 steps, often significantly outperforming SignRound with the cost of more tuning time for quantization. AutoRound is tailored for a wide range of models and consistently delivers noticeable improvements.

ailoy

Ailoy is a lightweight library for building AI applications such as agent systems or RAG pipelines with ease. It enables AI features effortlessly, supporting AI models locally or via cloud APIs, multi-turn conversation, system message customization, reasoning-based workflows, tool calling capabilities, and built-in vector store support. It also supports running native-equivalent functionality in web browsers using WASM. The library is in early development stages and provides examples in the `examples` directory for inspiration on building applications with Agents.

GPULlama3.java

GPULlama3.java powered by TornadoVM is a Java-native implementation of Llama3 that automatically compiles and executes Java code on GPUs via TornadoVM. It supports Llama3, Mistral, Qwen2.5, Qwen3, and Phi3 models in the GGUF format. The repository aims to provide GPU acceleration for Java code, enabling faster execution and high-performance access to off-heap memory. It offers features like interactive and instruction modes, flexible backend switching between OpenCL and PTX, and cross-platform compatibility with NVIDIA, Intel, and Apple GPUs.

VideoRefer

VideoRefer Suite is a tool designed to enhance the fine-grained spatial-temporal understanding capabilities of Video Large Language Models (Video LLMs). It consists of three primary components: Model (VideoRefer) for perceiving, reasoning, and retrieval for user-defined regions at any specified timestamps, Dataset (VideoRefer-700K) for high-quality object-level video instruction data, and Benchmark (VideoRefer-Bench) to evaluate object-level video understanding capabilities. The tool can understand any object within a video.

llama.cpp

llama.cpp is a C++ implementation of LLaMA, a large language model from Meta. It provides a command-line interface for inference and can be used for a variety of tasks, including text generation, translation, and question answering. llama.cpp is highly optimized for performance and can be run on a variety of hardware, including CPUs, GPUs, and TPUs.

retinify

Retinify is an advanced AI-powered stereo vision library designed for robotics, enabling real-time, high-precision 3D perception by leveraging GPU and NPU acceleration. It is open source under Apache-2.0 license, offers high precision 3D mapping and object recognition, runs computations on GPU for fast performance, accepts stereo images from any rectified camera setup, is cost-efficient using minimal hardware, and has minimal dependencies on CUDA Toolkit, cuDNN, and TensorRT. The tool provides a pipeline for stereo matching and supports various image data types independently of OpenCV.

DeepMesh

DeepMesh is an auto-regressive artist-mesh creation tool that utilizes reinforcement learning to generate high-quality meshes conditioned on a given point cloud. It offers pretrained weights and allows users to generate obj/ply files based on specific input parameters. The tool has been tested on Ubuntu 22 with CUDA 11.8 and supports A100, A800, and A6000 GPUs. Users can clone the repository, create a conda environment, install pretrained model weights, and use command line inference to generate meshes.

aim

Aim is an open-source, self-hosted ML experiment tracking tool designed to handle 10,000s of training runs. Aim provides a performant and beautiful UI for exploring and comparing training runs. Additionally, its SDK enables programmatic access to tracked metadata — perfect for automations and Jupyter Notebook analysis. **Aim's mission is to democratize AI dev tools 🎯**

celeste-python

Celeste AI is a type-safe, modality/provider-agnostic tool that offers unified interface for various providers like OpenAI, Anthropic, Gemini, Mistral, and more. It supports multiple modalities including text, image, audio, video, and embeddings, with full Pydantic validation and IDE autocomplete. Users can switch providers instantly, ensuring zero lock-in and a lightweight architecture. The tool provides primitives, not frameworks, for clean I/O operations.

For similar tasks

ai-on-gke

This repository contains assets related to AI/ML workloads on Google Kubernetes Engine (GKE). Run optimized AI/ML workloads with Google Kubernetes Engine (GKE) platform orchestration capabilities. A robust AI/ML platform considers the following layers:

* Infrastructure orchestration that supports GPUs and TPUs for training and serving workloads at scale
* Flexible integration with distributed computing and data processing frameworks
* Support for multiple teams on the same infrastructure to maximize utilization of resources

ray

Ray is a unified framework for scaling AI and Python applications. It consists of a core distributed runtime and a set of AI libraries for simplifying ML compute, including Data, Train, Tune, RLlib, and Serve. Ray runs on any machine, cluster, cloud provider, and Kubernetes, and features a growing ecosystem of community integrations. With Ray, you can seamlessly scale the same code from a laptop to a cluster, making it easy to meet the compute-intensive demands of modern ML workloads.

labelbox-python

Labelbox is a data-centric AI platform for enterprises to develop, optimize, and use AI to solve problems and power new products and services. Enterprises use Labelbox to curate data, generate high-quality human feedback data for computer vision and LLMs, evaluate model performance, and automate tasks by combining AI and human-centric workflows. The academic & research community uses Labelbox for cutting-edge AI research.

djl

Deep Java Library (DJL) is an open-source, high-level, engine-agnostic Java framework for deep learning. It is designed to be easy to get started with and simple to use for Java developers. DJL provides a native Java development experience and allows users to integrate machine learning and deep learning models with their Java applications. The framework is deep learning engine agnostic, enabling users to switch engines at any point for optimal performance. DJL's ergonomic API interface guides users with best practices to accomplish deep learning tasks, such as running inference and training neural networks.

mlflow

MLflow is a platform to streamline machine learning development, including tracking experiments, packaging code into reproducible runs, and sharing and deploying models. MLflow offers a set of lightweight APIs that can be used with any existing machine learning application or library (TensorFlow, PyTorch, XGBoost, etc), wherever you currently run ML code (e.g. in notebooks, standalone applications or the cloud). MLflow's current components are:

* `MLflow Tracking

tt-metal

TT-NN is a python & C++ Neural Network OP library. It provides a low-level programming model, TT-Metalium, enabling kernel development for Tenstorrent hardware.

burn

Burn is a new comprehensive dynamic Deep Learning Framework built using Rust with extreme flexibility, compute efficiency and portability as its primary goals.

awsome-distributed-training

This repository contains reference architectures and test cases for distributed model training with Amazon SageMaker Hyperpod, AWS ParallelCluster, AWS Batch, and Amazon EKS. The test cases cover different types and sizes of models as well as different frameworks and parallel optimizations (Pytorch DDP/FSDP, MegatronLM, NemoMegatron...).

For similar jobs

weave

Weave is a toolkit for developing Generative AI applications, built by Weights & Biases. With Weave, you can log and debug language model inputs, outputs, and traces; build rigorous, apples-to-apples evaluations for language model use cases; and organize all the information generated across the LLM workflow, from experimentation to evaluations to production. Weave aims to bring rigor, best-practices, and composability to the inherently experimental process of developing Generative AI software, without introducing cognitive overhead.

agentcloud

AgentCloud is an open-source platform that enables companies to build and deploy private LLM chat apps, empowering teams to securely interact with their data. It comprises three main components: Agent Backend, Webapp, and Vector Proxy. To run this project locally, clone the repository, install Docker, and start the services. The project is licensed under the GNU Affero General Public License, version 3 only. Contributions and feedback are welcome from the community.

oss-fuzz-gen

This framework generates fuzz targets for real-world `C`/`C++` projects with various Large Language Models (LLM) and benchmarks them via the `OSS-Fuzz` platform. It manages to successfully leverage LLMs to generate valid fuzz targets (which generate non-zero coverage increase) for 160 C/C++ projects. The maximum line coverage increase is 29% from the existing human-written targets.

LLMStack

LLMStack is a no-code platform for building generative AI agents, workflows, and chatbots. It allows users to connect their own data, internal tools, and GPT-powered models without any coding experience. LLMStack can be deployed to the cloud or on-premise and can be accessed via HTTP API or triggered from Slack or Discord.

VisionCraft

The VisionCraft API is a free API for using over 100 different AI models. From images to sound.

kaito

Kaito is an operator that automates the AI/ML inference model deployment in a Kubernetes cluster. It manages large model files using container images, avoids tuning deployment parameters to fit GPU hardware by providing preset configurations, auto-provisions GPU nodes based on model requirements, and hosts large model images in the public Microsoft Container Registry (MCR) if the license allows. Using Kaito, the workflow of onboarding large AI inference models in Kubernetes is largely simplified.

PyRIT

PyRIT is an open access automation framework designed to empower security professionals and ML engineers to red team foundation models and their applications. It automates AI Red Teaming tasks to allow operators to focus on more complicated and time-consuming tasks and can also identify security harms such as misuse (e.g., malware generation, jailbreaking), and privacy harms (e.g., identity theft). The goal is to allow researchers to have a baseline of how well their model and entire inference pipeline is doing against different harm categories and to be able to compare that baseline to future iterations of their model. This allows them to have empirical data on how well their model is doing today, and detect any degradation of performance based on future improvements.

Azure-Analytics-and-AI-Engagement

The Azure-Analytics-and-AI-Engagement repository provides packaged Industry Scenario DREAM Demos with ARM templates (containing a demo web application, Power BI reports, Synapse resources, AML Notebooks, etc.) that can be deployed in a customer’s subscription using the CAPE tool within a few hours. Partners can also deploy DREAM Demos in their own subscriptions using DPoC.