llama.cpp

LLM inference in C/C++

Stars: 87028

The main goal of llama.cpp is to enable LLM inference with minimal setup and state-of-the-art performance on a wide range of hardware - locally and in the cloud. It provides a plain C/C++ implementation without any dependencies, optimized for Apple silicon via ARM NEON, Accelerate and Metal frameworks, and supports x86 instruction sets such as AVX, AVX2, AVX512, and AMX. It offers integer quantization for faster inference, custom CUDA kernels for NVIDIA GPUs, Vulkan and SYCL backend support, and CPU+GPU hybrid inference. llama.cpp is the main playground for developing new features for the ggml library, supporting various models and providing tools and infrastructure for LLM deployment.

README:


- Guide: running gpt-oss with llama.cpp

- [FEEDBACK] Better packaging for llama.cpp to support downstream consumers 🤗

- Support for the gpt-oss model with native MXFP4 format has been added | PR | Collaboration with NVIDIA | Comment

- Hot PRs: All | Open

- Multimodal support arrived in llama-server: #12898 | documentation

- VS Code extension for FIM completions: https://github.com/ggml-org/llama.vscode

- Vim/Neovim plugin for FIM completions: https://github.com/ggml-org/llama.vim

- Introducing GGUF-my-LoRA https://github.com/ggml-org/llama.cpp/discussions/10123

- Hugging Face Inference Endpoints now support GGUF out of the box! https://github.com/ggml-org/llama.cpp/discussions/9669

- Hugging Face GGUF editor: discussion | tool

Getting started with llama.cpp is straightforward. Here are several ways to install it on your machine:

- Install llama.cpp using brew, nix or winget

- Run with Docker - see our Docker documentation

- Download pre-built binaries from the releases page

- Build from source by cloning this repository - check out our build guide

Once installed, you'll need a model to work with. Head to the Obtaining and quantizing models section to learn more.

Example commands:

```sh
# Use a local model file
llama-cli -m my_model.gguf

# Or download and run a model directly from Hugging Face
llama-cli -hf ggml-org/gemma-3-1b-it-GGUF

# Launch OpenAI-compatible API server
llama-server -hf ggml-org/gemma-3-1b-it-GGUF
```

The main goal of llama.cpp is to enable LLM inference with minimal setup and state-of-the-art performance on a wide range of hardware - locally and in the cloud.

- Plain C/C++ implementation without any dependencies

- Apple silicon is a first-class citizen - optimized via ARM NEON, Accelerate and Metal frameworks

- AVX, AVX2, AVX512 and AMX support for x86 architectures

- 1.5-bit, 2-bit, 3-bit, 4-bit, 5-bit, 6-bit, and 8-bit integer quantization for faster inference and reduced memory use

- Custom CUDA kernels for running LLMs on NVIDIA GPUs (support for AMD GPUs via HIP and Moore Threads GPUs via MUSA)

- Vulkan and SYCL backend support

- CPU+GPU hybrid inference to partially accelerate models larger than the total VRAM capacity

The llama.cpp project is the main playground for developing new features for the ggml library.
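To make the integer quantization bullet more concrete, here is a minimal sketch of symmetric block-wise 4-bit quantization: weights are split into blocks, and each block stores one floating-point scale plus one small signed integer per weight. This is a simplified illustration of the general technique, not ggml's actual Q4_0 code; the block size of 32, the 16-bit scale and the rounding rule are assumptions, and ggml's real types differ in how they choose the scale and pack the bits.

```python
from typing import List, Tuple

BLOCK_SIZE = 32  # assumption: weights per block; each block stores its own scale


def quantize_block(block: List[float]) -> Tuple[float, List[int]]:
    """Symmetric 4-bit quantization: map the block onto integers in [-7, 7]."""
    amax = max(abs(x) for x in block)
    scale = amax / 7 if amax > 0 else 1.0  # largest magnitude maps to +/-7
    # Clamp to the signed 4-bit range [-8, 7] as a guard against rounding surprises.
    q = [max(-8, min(7, round(x / scale))) for x in block]
    return scale, q


def quantize(weights: List[float]) -> List[Tuple[float, List[int]]]:
    """Split the weights into fixed-size blocks and quantize each one independently."""
    return [quantize_block(weights[i:i + BLOCK_SIZE]) for i in range(0, len(weights), BLOCK_SIZE)]


def dequantize(blocks: List[Tuple[float, List[int]]]) -> List[float]:
    """Recover approximate weights: value = scale * integer."""
    return [scale * v for scale, q in blocks for v in q]


def bits_per_weight(weight_bits: int = 4, scale_bits: int = 16, block_size: int = BLOCK_SIZE) -> float:
    """Storage cost per weight once the per-block scale is amortized over the block."""
    return weight_bits + scale_bits / block_size


if __name__ == "__main__":
    import random

    random.seed(0)
    w = [random.uniform(-1.0, 1.0) for _ in range(64)]
    restored = dequantize(quantize(w))
    max_err = max(abs(a - b) for a, b in zip(w, restored))
    print(f"max abs error: {max_err:.4f}, bits per weight: {bits_per_weight():.2f}")
```

The per-block scale is the design choice that keeps error local: one outlier only coarsens its own block. Under these assumptions the storage cost is 4 + 16/32 = 4.5 bits per weight, so a quantized file is somewhat larger than a naive "parameters × 4 bits" estimate.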

Models

Typically, finetunes of the base models below are supported as well.

Instructions for adding support for new models: HOWTO-add-model.md

- [X] LLaMA 🦙

- [x] LLaMA 2 🦙🦙

- [x] LLaMA 3 🦙🦙🦙

- [X] Mistral 7B

- [x] Mixtral MoE

- [x] DBRX

- [X] Falcon

- [X] Chinese LLaMA / Alpaca and Chinese LLaMA-2 / Alpaca-2

- [X] Vigogne (French)

- [X] BERT

- [X] Koala

- [X] Baichuan 1 & 2 + derivations

- [X] Aquila 1 & 2

- [X] Starcoder models

- [X] Refact

- [X] MPT

- [X] Bloom

- [x] Yi models

- [X] StableLM models

- [x] Deepseek models

- [x] Qwen models

- [x] PLaMo-13B

- [x] Phi models

- [x] PhiMoE

- [x] GPT-2

- [x] Orion 14B

- [x] InternLM2

- [x] CodeShell

- [x] Gemma

- [x] Mamba

- [x] Grok-1

- [x] Xverse

- [x] Command-R models

- [x] SEA-LION

- [x] GritLM-7B + GritLM-8x7B

- [x] OLMo

- [x] OLMo 2

- [x] OLMoE

- [x] Granite models

- [x] GPT-NeoX + Pythia

- [x] Snowflake-Arctic MoE

- [x] Smaug

- [x] Poro 34B

- [x] Bitnet b1.58 models

- [x] Flan T5

- [x] Open Elm models

- [x] ChatGLM3-6b + ChatGLM4-9b + GLMEdge-1.5b + GLMEdge-4b

- [x] GLM-4-0414

- [x] SmolLM

- [x] EXAONE-3.0-7.8B-Instruct

- [x] FalconMamba Models

- [x] Jais

- [x] Bielik-11B-v2.3

- [x] RWKV-6

- [x] QRWKV-6

- [x] GigaChat-20B-A3B

- [X] Trillion-7B-preview

- [x] Ling models

- [x] LFM2 models

- [x] Hunyuan models

Multimodal

- [x] LLaVA 1.5 models, LLaVA 1.6 models

- [x] BakLLaVA

- [x] Obsidian

- [x] ShareGPT4V

- [x] MobileVLM 1.7B/3B models

- [x] Yi-VL

- [x] Mini CPM

- [x] Moondream

- [x] Bunny

- [x] GLM-EDGE

- [x] Qwen2-VL

- [x] LFM2-VL

Bindings

- Python: ddh0/easy-llama

- Python: abetlen/llama-cpp-python

- Go: go-skynet/go-llama.cpp

- Node.js: withcatai/node-llama-cpp

- JS/TS (llama.cpp server client): lgrammel/modelfusion

- JS/TS (Programmable Prompt Engine CLI): offline-ai/cli

- JavaScript/Wasm (works in browser): tangledgroup/llama-cpp-wasm

- TypeScript/Wasm (nicer API, available on npm): ngxson/wllama

- Ruby: yoshoku/llama_cpp.rb

- Rust (more features): edgenai/llama_cpp-rs

- Rust (nicer API): mdrokz/rust-llama.cpp

- Rust (more direct bindings): utilityai/llama-cpp-rs

- Rust (automated build from crates.io): ShelbyJenkins/llm_client

- C#/.NET: SciSharp/LLamaSharp

- C#/VB.NET (more features - community license): LM-Kit.NET

- Scala 3: donderom/llm4s

- Clojure: phronmophobic/llama.clj

- React Native: mybigday/llama.rn

- Java: kherud/java-llama.cpp

- Java: QuasarByte/llama-cpp-jna

- Zig: deins/llama.cpp.zig

- Flutter/Dart: netdur/llama_cpp_dart

- Flutter: xuegao-tzx/Fllama

- PHP (API bindings and features built on top of llama.cpp): distantmagic/resonance (more info)

- Guile Scheme: guile_llama_cpp

- Swift: srgtuszy/llama-cpp-swift

- Swift: ShenghaiWang/SwiftLlama

- Delphi: Embarcadero/llama-cpp-delphi

UIs

(to have a project listed here, it should clearly state that it depends on llama.cpp)

- AI Sublime Text plugin (MIT)

- cztomsik/ava (MIT)

- Dot (GPL)

- eva (MIT)

- iohub/collama (Apache-2.0)

- janhq/jan (AGPL)

- johnbean393/Sidekick (MIT)

- KanTV (Apache-2.0)

- KodiBot (GPL)

- llama.vim (MIT)

- LARS (AGPL)

- Llama Assistant (GPL)

- LLMFarm (MIT)

- LLMUnity (MIT)

- LMStudio (proprietary)

- LocalAI (MIT)

- LostRuins/koboldcpp (AGPL)

- MindMac (proprietary)

- MindWorkAI/AI-Studio (FSL-1.1-MIT)

- Mobile-Artificial-Intelligence/maid (MIT)

- Mozilla-Ocho/llamafile (Apache-2.0)

- nat/openplayground (MIT)

- nomic-ai/gpt4all (MIT)

- ollama/ollama (MIT)

- oobabooga/text-generation-webui (AGPL)

- PocketPal AI (MIT)

- psugihara/FreeChat (MIT)

- ptsochantaris/emeltal (MIT)

- pythops/tenere (AGPL)

- ramalama (MIT)

- semperai/amica (MIT)

- withcatai/catai (MIT)

- Autopen (GPL)

Tools

- akx/ggify – download PyTorch models from HuggingFace Hub and convert them to GGML

- akx/ollama-dl – download models from the Ollama library to be used directly with llama.cpp

- crashr/gppm – launch llama.cpp instances utilizing NVIDIA Tesla P40 or P100 GPUs with reduced idle power consumption

- gpustack/gguf-parser - review/check the GGUF file and estimate the memory usage

- Styled Lines (proprietary licensed, async wrapper of inference part for game development in Unity3d with pre-built Mobile and Web platform wrappers and a model example)

Infrastructure

- Paddler - Open-source LLMOps platform for hosting and scaling AI in your own infrastructure

- GPUStack - Manage GPU clusters for running LLMs

- llama_cpp_canister - llama.cpp as a smart contract on the Internet Computer, using WebAssembly

- llama-swap - transparent proxy that adds automatic model switching with llama-server

- Kalavai - Crowdsource end-to-end LLM deployment at any scale

- llmaz - ☸️ Easy, advanced inference platform for large language models on Kubernetes.

Games

- Lucy's Labyrinth - A simple maze game where agents controlled by an AI model will try to trick you.

| Backend | Target devices |
|---|---|
| Metal | Apple Silicon |
| BLAS | All |
| BLIS | All |
| SYCL | Intel and Nvidia GPU |
| MUSA | Moore Threads GPU |
| CUDA | Nvidia GPU |
| HIP | AMD GPU |
| Vulkan | GPU |
| CANN | Ascend NPU |
| OpenCL | Adreno GPU |
| IBM zDNN | IBM Z & LinuxONE |
| WebGPU [In Progress] | All |
| RPC | All |

The Hugging Face platform hosts a number of LLMs compatible with llama.cpp:

You can either manually download the GGUF file or directly use any llama.cpp-compatible models from Hugging Face or other model hosting sites, such as ModelScope, by using this CLI argument: -hf <user>/<model>[:quant]. For example:

```sh
llama-cli -hf ggml-org/gemma-3-1b-it-GGUF
```

By default, the CLI downloads from Hugging Face; you can switch to other options with the environment variable MODEL_ENDPOINT. For example, you may opt to download model checkpoints from ModelScope or other model sharing communities by setting the environment variable, e.g. MODEL_ENDPOINT=https://www.modelscope.cn/.
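The -hf <user>/<model>[:quant] syntax and the MODEL_ENDPOINT override can be illustrated with a small parser. This is a hypothetical sketch, not llama.cpp's own argument handling: the helper names are made up, the default hub URL is our assumption, and when the optional quant tag is omitted the sketch simply returns None (which quantization the real tool then picks is not modeled here).

```python
import os
from dataclasses import dataclass
from typing import Mapping, Optional

# Assumption: the hub used when MODEL_ENDPOINT is not set.
DEFAULT_ENDPOINT = "https://huggingface.co/"


@dataclass
class HfSpec:
    user: str
    model: str
    quant: Optional[str]  # None when the optional [:quant] suffix is omitted


def parse_hf_spec(spec: str) -> HfSpec:
    """Split '<user>/<model>[:quant]' into its parts (hypothetical helper)."""
    repo, colon, quant = spec.partition(":")
    user, slash, model = repo.partition("/")
    if not slash or not user or not model or "/" in model:
        raise ValueError(f"expected <user>/<model>[:quant], got {spec!r}")
    if colon and not quant:
        raise ValueError(f"empty quant tag in {spec!r}")
    return HfSpec(user, model, quant or None)


def resolve_endpoint(env: Optional[Mapping[str, str]] = None) -> str:
    """Pick the download host from MODEL_ENDPOINT, falling back to DEFAULT_ENDPOINT."""
    env = os.environ if env is None else env
    endpoint = env.get("MODEL_ENDPOINT") or DEFAULT_ENDPOINT
    return endpoint if endpoint.endswith("/") else endpoint + "/"
```

For example, parse_hf_spec("ggml-org/gemma-3-1b-it-GGUF:Q4_K_M") yields user "ggml-org", model "gemma-3-1b-it-GGUF" and quant "Q4_K_M", and resolve_endpoint picks up MODEL_ENDPOINT=https://www.modelscope.cn/ when it is set in the environment.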

After downloading a model, use the CLI tools to run it locally - see below.

llama.cpp requires the model to be stored in the GGUF file format. Models in other data formats can be converted to GGUF using the convert_*.py Python scripts in this repo.
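Every GGUF file starts with a small fixed-size header, which is a quick way to check that a conversion produced a usable file. The minimal sketch below reads it, assuming the layout used by GGUF version 2 and later (little-endian: the 4-byte magic GGUF, a uint32 version, then uint64 counts of tensors and of metadata key/value pairs); verify against the GGUF specification before relying on it. The helper names are hypothetical and not part of the convert_*.py scripts.

```python
import io
import struct
from typing import BinaryIO, NamedTuple

# Assumed GGUF v2+ header layout, little-endian:
# 4-byte magic b"GGUF", uint32 version, uint64 tensor count, uint64 metadata key/value count.
_HEADER = struct.Struct("<4sIQQ")


class GGUFHeader(NamedTuple):
    version: int
    tensor_count: int
    metadata_kv_count: int


def read_gguf_header(f: BinaryIO) -> GGUFHeader:
    """Read and validate the fixed-size header at the start of a GGUF file."""
    raw = f.read(_HEADER.size)
    if len(raw) < _HEADER.size:
        raise ValueError("file too short to contain a GGUF header")
    magic, version, tensor_count, kv_count = _HEADER.unpack(raw)
    if magic != b"GGUF":
        raise ValueError(f"not a GGUF file (magic bytes {magic!r})")
    return GGUFHeader(version, tensor_count, kv_count)


def parse_header_bytes(data: bytes) -> GGUFHeader:
    """Convenience wrapper for header bytes already in memory."""
    return read_gguf_header(io.BytesIO(data))
```

Usage, with a placeholder file name: open model.gguf in binary mode ("rb") and pass the file object to read_gguf_header. Only the header is parsed; the metadata entries and tensor descriptors that follow it are not.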

The Hugging Face platform provides a variety of online tools for converting, quantizing and hosting models with llama.cpp:

- Use the GGUF-my-repo space to convert to GGUF format and quantize model weights to smaller sizes

- Use the GGUF-my-LoRA space to convert LoRA adapters to GGUF format (more info: https://github.com/ggml-org/llama.cpp/discussions/10123)

- Use the GGUF-editor space to edit GGUF metadata in the browser (more info: https://github.com/ggml-org/llama.cpp/discussions/9268)

- Use the Inference Endpoints to directly host llama.cpp in the cloud (more info: https://github.com/ggml-org/llama.cpp/discussions/9669)

To learn more about model quantization, read this documentation.

- Run in conversation mode

Models with a built-in chat template will automatically activate conversation mode. If this doesn't occur, you can manually enable it by adding -cnv and specifying a suitable chat template with --chat-template NAME.

```sh
llama-cli -m model.gguf

# > hi, who are you?
# Hi there! I'm your helpful assistant! I'm an AI-powered chatbot designed to assist and provide information to users like you. I'm here to help answer your questions, provide guidance, and offer support on a wide range of topics. I'm a friendly and knowledgeable AI, and I'm always happy to help with anything you need. What's on your mind, and how can I assist you today?
#
# > what is 1+1?
# Easy peasy! The answer to 1+1 is... 2!
```

- Run in conversation mode with custom chat template

```sh
# use the "chatml" template (use -h to see the list of supported templates)
llama-cli -m model.gguf -cnv --chat-template chatml

# use a custom template
llama-cli -m model.gguf -cnv --in-prefix 'User: ' --reverse-prompt 'User:'
```

- Run simple text completion

To disable conversation mode explicitly, use -no-cnv.

```sh
llama-cli -m model.gguf -p "I believe the meaning of life is" -n 128 -no-cnv

# I believe the meaning of life is to find your own truth and to live in accordance with it. For me, this means being true to myself and following my passions, even if they don't align with societal expectations. I think that's what I love about yoga – it's not just a physical practice, but a spiritual one too. It's about connecting with yourself, listening to your inner voice, and honoring your own unique journey.
```

- Constrain the output with a custom grammar

```sh
llama-cli -m model.gguf -n 256 --grammar-file grammars/json.gbnf -p 'Request: schedule a call at 8pm; Command:'

# {"appointmentTime": "8pm", "appointmentDetails": "schedule a a call"}
```

The grammars/ folder contains a handful of sample grammars. To write your own, check out the GBNF Guide.

For authoring more complex JSON grammars, check out https://grammar.intrinsiclabs.ai/
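Conceptually, a grammar constrains generation by masking, at every decoding step, the candidate tokens that could no longer lead to a string the grammar accepts. The sketch below shows only that masking idea, using greedy decoding, a toy vocabulary and a hand-written prefix check for a two-word grammar; it is not llama.cpp's GBNF engine, and ALLOWED, toy_scores and the helper names are all hypothetical.

```python
from typing import Callable, Dict

# Hypothetical grammar, roughly: root ::= "yes" | "no"
ALLOWED = ("yes", "no")


def is_valid_prefix(text: str) -> bool:
    """True if text can still be extended into a string the grammar accepts."""
    return any(word.startswith(text) for word in ALLOWED)


def is_complete(text: str) -> bool:
    return text in ALLOWED


def constrained_greedy(scores: Callable[[str], Dict[str, float]], max_steps: int = 8) -> str:
    """Greedy decoding in which tokens that would break the grammar are masked out."""
    out = ""
    for _ in range(max_steps):
        if is_complete(out):
            break
        # Mask: keep only tokens whose addition leaves a valid prefix.
        allowed = {tok: s for tok, s in scores(out).items() if is_valid_prefix(out + tok)}
        if not allowed:
            raise RuntimeError(f"no grammatical continuation after {out!r}")
        out += max(allowed, key=allowed.get)
    return out


def toy_scores(prefix: str) -> Dict[str, float]:
    """Hypothetical model scores (ignores the prefix); unconstrained greedy would pick "Sure"."""
    return {"Sure": 5.0, "!": 3.0, "y": 1.0, "es": 0.9, "n": 0.5, "o": 0.2}
```

Here constrained_greedy(toy_scores) returns "yes" even though the toy model prefers "Sure". Re-checking whole strings is fine for a toy; a practical engine for recursive grammars such as JSON would track parser state incrementally instead.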

llama-server: a lightweight, OpenAI API compatible HTTP server for serving LLMs.
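Because the server follows the OpenAI API shape, a client needs nothing more than an HTTP POST. The sketch below builds (but does not send) a chat completion request with Python's standard library. The base URL and the /v1/chat/completions path match the server's default port 8080; the "model" placeholder, the message content and the max_tokens value are assumptions that follow the OpenAI request schema.

```python
import json
import urllib.request
from typing import Dict, List


def build_chat_request(
    messages: List[Dict[str, str]],
    base_url: str = "http://localhost:8080",
    max_tokens: int = 128,
) -> urllib.request.Request:
    """Build (without sending) an OpenAI-style chat completion request."""
    payload = {
        "model": "local-model",  # placeholder name; assumption: not needed to select a model here
        "messages": messages,
        "max_tokens": max_tokens,
    }
    return urllib.request.Request(
        url=base_url.rstrip("/") + "/v1/chat/completions",
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def send(req: urllib.request.Request, timeout: float = 60.0) -> str:
    """POST the request and return the first choice's message text (OpenAI response shape)."""
    with urllib.request.urlopen(req, timeout=timeout) as resp:
        body = json.load(resp)
    return body["choices"][0]["message"]["content"]
```

With llama-server running on port 8080, send(build_chat_request([{"role": "user", "content": "hi, who are you?"}])) should return the assistant's reply text. Keeping request construction separate from sending makes the payload easy to inspect or test offline.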

- Start a local HTTP server with default configuration on port 8080

```sh
llama-server -m model.gguf --port 8080

# Basic web UI can be accessed via browser: http://localhost:8080
# Chat completion endpoint: http://localhost:8080/v1/chat/completions
```

- Support multiple users and parallel decoding

```sh
# up to 4 concurrent requests, each with 4096 max context
llama-server -m model.gguf -c 16384 -np 4
```

- Enable speculative decoding

```sh
# the draft.gguf model should be a small variant of the target model.gguf
llama-server -m model.gguf -md draft.gguf
```

- Serve an embedding model

```sh
# use the /embedding endpoint
llama-server -m model.gguf --embedding --pooling cls -ub 8192
```

- Serve a reranking model

```sh
# use the /reranking endpoint
llama-server -m model.gguf --reranking
```

- Constrain all outputs with a grammar

```sh
# custom grammar
llama-server -m model.gguf --grammar-file grammar.gbnf

# JSON
llama-server -m model.gguf --grammar-file grammars/json.gbnf
```
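Speculative decoding, enabled with -md draft.gguf, lets a cheap draft model propose several tokens that the target model then verifies. A minimal sketch of the greedy variant follows, with both models replaced by hypothetical next-token functions; it illustrates the "accept the longest agreeing prefix, then take one token from the target" idea and does not attempt to mirror llama-server's implementation or its sampling-based acceptance.

```python
from typing import Callable, List

# A "model" here is a greedy next-token function: context -> next token id.
NextToken = Callable[[List[int]], int]


def speculative_step(target: NextToken, draft: NextToken, context: List[int], k: int = 4) -> List[int]:
    """One round: the draft proposes k tokens, the target keeps the agreeing prefix."""
    # 1. The cheap draft model proposes k tokens autoregressively.
    proposal, ctx = [], list(context)
    for _ in range(k):
        tok = draft(ctx)
        proposal.append(tok)
        ctx.append(tok)
    # 2. The target checks each position (a real engine would batch these checks).
    accepted, ctx = [], list(context)
    for tok in proposal:
        expected = target(ctx)
        if expected != tok:
            accepted.append(expected)  # first mismatch: use the target's token, drop the rest
            return accepted
        accepted.append(tok)
        ctx.append(tok)
    # 3. Every draft token was accepted, so the target contributes one extra token.
    accepted.append(target(ctx))
    return accepted


def generate(target: NextToken, draft: NextToken, prompt: List[int], n_tokens: int, k: int = 4) -> List[int]:
    """Decode n_tokens; the result equals plain greedy decoding with the target alone."""
    out = list(prompt)
    while len(out) - len(prompt) < n_tokens:
        out.extend(speculative_step(target, draft, out, k))
    return out[len(prompt):len(prompt) + n_tokens]


def toy_target(ctx: List[int]) -> int:
    return (ctx[-1] + 1) % 10  # hypothetical model: count upward modulo 10


def toy_draft(ctx: List[int]) -> int:
    # Hypothetical draft that agrees with the target except when len(ctx) is a multiple of 3.
    return toy_target(ctx) if len(ctx) % 3 else 0
```

Each round yields at least one token, and every emitted token is one the target itself would have chosen, so a good draft (a small variant of the target) only changes speed, not output.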

llama-perplexity: a tool for measuring the perplexity [1] (and other quality metrics) of a model over a given text.

- Measure the perplexity over a text file

```sh
llama-perplexity -m model.gguf -f file.txt

# [1]15.2701,[2]5.4007,[3]5.3073,[4]6.2965,[5]5.8940,[6]5.6096,[7]5.7942,[8]4.9297, ...
# Final estimate: PPL = 5.4007 +/- 0.67339
```

- Measure KL divergence

```sh
# TODO
```
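Perplexity is the exponential of the average negative log-likelihood the model assigns to each actual next token of the text, PPL = exp(-(1/N) Σ log p(x_i | x_<i)), so lower is better; a model that is uniformly unsure among k options per token scores k. KL divergence, KL(P || Q) = Σ p_i log(p_i / q_i), measures how far one next-token distribution Q (for example, from a quantized model) drifts from a reference P (for example, the full-precision model). The sketch below implements both textbook definitions on made-up inputs; how llama-perplexity chunks the file and aggregates these values is not reproduced.

```python
import math
from typing import Sequence


def perplexity(token_probs: Sequence[float]) -> float:
    """exp of the mean negative log-likelihood of the observed tokens."""
    if not token_probs:
        raise ValueError("need at least one token probability")
    mean_nll = -sum(math.log(p) for p in token_probs) / len(token_probs)
    return math.exp(mean_nll)


def kl_divergence(p: Sequence[float], q: Sequence[float]) -> float:
    """KL(P || Q) in nats for two distributions over the same vocabulary.

    Terms with p_i == 0 contribute nothing; q_i must be > 0 wherever p_i > 0.
    """
    if len(p) != len(q):
        raise ValueError("distributions must cover the same vocabulary")
    return sum(pi * math.log(pi / qi) for pi, qi in zip(p, q) if pi > 0)
```

For instance, token probabilities of 0.5 and 0.125 give a mean negative log-likelihood of 2 ln 2, hence a perplexity of 4.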

- Run default benchmark

```sh
llama-bench -m model.gguf

# Output:
# | model               |       size |     params | backend    | threads |          test |                  t/s |
# | ------------------- | ---------: | ---------: | ---------- | ------: | ------------: | -------------------: |
# | qwen2 1.5B Q4_0     | 885.97 MiB |     1.54 B | Metal,BLAS |      16 |         pp512 |      5765.41 ± 20.55 |
# | qwen2 1.5B Q4_0     | 885.97 MiB |     1.54 B | Metal,BLAS |      16 |         tg128 |        197.71 ± 0.81 |
#
# build: 3e0ba0e60 (4229)
```
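The t/s column is throughput: tokens processed divided by elapsed seconds, for example 512 prompt tokens for the pp512 row and 128 generated tokens for the tg128 row. Assuming, as the ± suggests, that the tool reports a mean and standard deviation over several repetitions, such a cell can be reproduced from raw timings as sketched below. The timings are made up, and using the sample (rather than population) standard deviation is our assumption.

```python
import statistics
from typing import Sequence, Tuple


def throughput_stats(n_tokens: int, seconds: Sequence[float]) -> Tuple[float, float]:
    """Mean and sample standard deviation of tokens/second over repeated runs."""
    rates = [n_tokens / s for s in seconds]
    mean = statistics.mean(rates)
    std = statistics.stdev(rates) if len(rates) > 1 else 0.0
    return mean, std


def format_cell(n_tokens: int, seconds: Sequence[float]) -> str:
    """Render a t/s cell in the 'mean ± std' style of the table above."""
    mean, std = throughput_stats(n_tokens, seconds)
    return f"{mean:.2f} ± {std:.2f}"
```

Averaging per-run rates rather than dividing total tokens by total time weights every repetition equally, which matches reporting a spread per run.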

llama-run: a comprehensive example for running llama.cpp models. Useful for inferencing. Used with RamaLama [2].

- Run a model with a specific prompt (by default it's pulled from the Ollama registry)

```sh
llama-run granite-code
```

- Basic text completion

```sh
llama-simple -m model.gguf

# Hello my name is Kaitlyn and I am a 16 year old girl. I am a junior in high school and I am currently taking a class called "The Art of
```

- Contributors can open PRs

- Collaborators will be invited based on contributions

- Maintainers can push to branches in the llama.cpp repo and merge PRs into the master branch

- Any help with managing issues, PRs and projects is very appreciated!

- See good first issues for tasks suitable for first contributions

- Read the CONTRIBUTING.md for more information

- Make sure to read this: Inference at the edge

- A bit of backstory for those who are interested: Changelog podcast

If your issue is with model generation quality, then please at least scan the following links and papers to understand the limitations of LLaMA models. This is especially important when choosing an appropriate model size and appreciating both the significant and subtle differences between LLaMA models and ChatGPT:

- LLaMA:

- GPT-3

- GPT-3.5 / InstructGPT / ChatGPT:

The XCFramework is a precompiled version of the library for iOS, visionOS, tvOS, and macOS. It can be used in Swift projects without the need to compile the library from source. For example:

```swift
// swift-tools-version: 5.10
// The swift-tools-version declares the minimum version of Swift required to build this package.

import PackageDescription

let package = Package(
    name: "MyLlamaPackage",
    targets: [
        .executableTarget(
            name: "MyLlamaPackage",
            dependencies: [
                "LlamaFramework"
            ]),
        .binaryTarget(
            name: "LlamaFramework",
            url: "https://github.com/ggml-org/llama.cpp/releases/download/b5046/llama-b5046-xcframework.zip",
            checksum: "c19be78b5f00d8d29a25da41042cb7afa094cbf6280a225abe614b03b20029ab"
        )
    ]
)
```

The above example uses an intermediate build, b5046, of the library. This can be modified to use a different version by changing the URL and checksum.
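When switching builds, the checksum must match the new archive: Swift Package Manager verifies a binaryTarget download against the SHA-256 digest of the .zip, which swift package compute-checksum prints. As an alternative for environments without a Swift toolchain, the sketch below computes the same hex digest in Python, streaming the file so large archives are never held in memory at once. The helper names and the example path are placeholders.

```python
import hashlib
from pathlib import Path
from typing import BinaryIO, Union


def sha256_of_stream(stream: BinaryIO, chunk_size: int = 1 << 20) -> str:
    """Hex SHA-256 of a binary stream, read in chunks to bound memory use."""
    digest = hashlib.sha256()
    for chunk in iter(lambda: stream.read(chunk_size), b""):
        digest.update(chunk)
    return digest.hexdigest()


def sha256_hex(path: Union[str, Path]) -> str:
    """Checksum of a file on disk, e.g. a downloaded XCFramework .zip."""
    with Path(path).open("rb") as f:
        return sha256_of_stream(f)
```

Usage: sha256_hex("path/to/downloaded-xcframework.zip") returns the 64-character hex string to paste into the checksum field.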

Command-line completion is available for some environments.

```sh
$ build/bin/llama-cli --completion-bash > ~/.llama-completion.bash
$ source ~/.llama-completion.bash
```

Optionally, this can be added to your .bashrc or .bash_profile to load it automatically. For example:

```sh
$ echo "source ~/.llama-completion.bash" >> ~/.bashrc
```

- yhirose/cpp-httplib - Single-header HTTP server, used by llama-server - MIT License

- stb-image - Single-header image format decoder, used by the multimodal subsystem - Public domain

- nlohmann/json - Single-header JSON library, used by various tools/examples - MIT License

- minja - Minimal Jinja parser in C++, used by various tools/examples - MIT License

- linenoise.cpp - C++ library that provides readline-like line editing capabilities, used by llama-run - BSD 2-Clause License

- curl - Client-side URL transfer library, used by various tools/examples - CURL License

- miniaudio.h - Single-header audio format decoder, used by the multimodal subsystem - Public domain


Alternative AI tools for llama.cpp

Similar Open Source Tools


llama.cpp

llama.cpp is a C++ implementation of LLaMA, a large language model from Meta. It provides a command-line interface for inference and can be used for a variety of tasks, including text generation, translation, and question answering. llama.cpp is highly optimized for performance and can be run on a variety of hardware, including CPUs, GPUs, and TPUs.

VideoRefer

VideoRefer Suite is a tool designed to enhance the fine-grained spatial-temporal understanding capabilities of Video Large Language Models (Video LLMs). It consists of three primary components: Model (VideoRefer) for perceiving, reasoning, and retrieval for user-defined regions at any specified timestamps, Dataset (VideoRefer-700K) for high-quality object-level video instruction data, and Benchmark (VideoRefer-Bench) to evaluate object-level video understanding capabilities. The tool can understand any object within a video.

achatbot

achatbot is a factory tool that allows users to create chat bots with various functionalities such as llm (language models), asr (automatic speech recognition), tts (text-to-speech), vad (voice activity detection), ocr (optical character recognition), and object detection. The tool provides a structured project with features like chat bots for cmd, grpc, and http servers. It supports various chat bot processors, transport connectors, and AI modules for different tasks. Users can run chat bots locally or deploy them on cloud services like vercel, Cloudflare, AWS Lambda, or Docker. The tool also includes UI components for easy deployment and service architecture diagrams for reference.

LLaMA-Factory

LLaMA Factory is a unified framework for fine-tuning 100+ large language models (LLMs) with various methods, including pre-training, supervised fine-tuning, reward modeling, PPO, DPO and ORPO. It features integrated algorithms like GaLore, BAdam, DoRA, LongLoRA, LLaMA Pro, LoRA+, LoftQ and Agent tuning, as well as practical tricks like FlashAttention-2, Unsloth, RoPE scaling, NEFTune and rsLoRA. LLaMA Factory provides experiment monitors like LlamaBoard, TensorBoard, Wandb, MLflow, etc., and supports faster inference with OpenAI-style API, Gradio UI and CLI with vLLM worker. Compared to ChatGLM's P-Tuning, LLaMA Factory's LoRA tuning offers up to 3.7 times faster training speed with a better Rouge score on the advertising text generation task. By leveraging 4-bit quantization technique, LLaMA Factory's QLoRA further improves the efficiency regarding the GPU memory.

VideoLLaMA2

VideoLLaMA 2 is a project focused on advancing spatial-temporal modeling and audio understanding in video-LLMs. It provides tools for multi-choice video QA, open-ended video QA, and video captioning. The project offers model zoo with different configurations for visual encoder and language decoder. It includes training and evaluation guides, as well as inference capabilities for video and image processing. The project also features a demo setup for running a video-based Large Language Model web demonstration.

intel-extension-for-transformers

Intel® Extension for Transformers is an innovative toolkit designed to accelerate GenAI/LLM everywhere with the optimal performance of Transformer-based models on various Intel platforms, including Intel Gaudi2, Intel CPU, and Intel GPU. The toolkit provides the below key features and examples: * Seamless user experience of model compressions on Transformer-based models by extending [Hugging Face transformers](https://github.com/huggingface/transformers) APIs and leveraging [Intel® Neural Compressor](https://github.com/intel/neural-compressor) * Advanced software optimizations and unique compression-aware runtime (released with NeurIPS 2022's paper [Fast Distilbert on CPUs](https://arxiv.org/abs/2211.07715) and [QuaLA-MiniLM: a Quantized Length Adaptive MiniLM](https://arxiv.org/abs/2210.17114), and NeurIPS 2021's paper [Prune Once for All: Sparse Pre-Trained Language Models](https://arxiv.org/abs/2111.05754)) * Optimized Transformer-based model packages such as [Stable Diffusion](examples/huggingface/pytorch/text-to-image/deployment/stable_diffusion), [GPT-J-6B](examples/huggingface/pytorch/text-generation/deployment), [GPT-NEOX](examples/huggingface/pytorch/language-modeling/quantization#2-validated-model-list), [BLOOM-176B](examples/huggingface/pytorch/language-modeling/inference#BLOOM-176B), [T5](examples/huggingface/pytorch/summarization/quantization#2-validated-model-list), [Flan-T5](examples/huggingface/pytorch/summarization/quantization#2-validated-model-list), and end-to-end workflows such as [SetFit-based text classification](docs/tutorials/pytorch/text-classification/SetFit_model_compression_AGNews.ipynb) and [document level sentiment analysis (DLSA)](workflows/dlsa) * [NeuralChat](intel_extension_for_transformers/neural_chat), a customizable chatbot framework to create your own chatbot within minutes by leveraging a rich set of 
[plugins](https://github.com/intel/intel-extension-for-transformers/blob/main/intel_extension_for_transformers/neural_chat/docs/advanced_features.md) such as [Knowledge Retrieval](./intel_extension_for_transformers/neural_chat/pipeline/plugins/retrieval/README.md), [Speech Interaction](./intel_extension_for_transformers/neural_chat/pipeline/plugins/audio/README.md), [Query Caching](./intel_extension_for_transformers/neural_chat/pipeline/plugins/caching/README.md), and [Security Guardrail](./intel_extension_for_transformers/neural_chat/pipeline/plugins/security/README.md). This framework supports Intel Gaudi2/CPU/GPU. * [Inference](https://github.com/intel/neural-speed/tree/main) of Large Language Model (LLM) in pure C/C++ with weight-only quantization kernels for Intel CPU and Intel GPU (TBD), supporting [GPT-NEOX](https://github.com/intel/neural-speed/tree/main/neural_speed/models/gptneox), [LLAMA](https://github.com/intel/neural-speed/tree/main/neural_speed/models/llama), [MPT](https://github.com/intel/neural-speed/tree/main/neural_speed/models/mpt), [FALCON](https://github.com/intel/neural-speed/tree/main/neural_speed/models/falcon), [BLOOM-7B](https://github.com/intel/neural-speed/tree/main/neural_speed/models/bloom), [OPT](https://github.com/intel/neural-speed/tree/main/neural_speed/models/opt), [ChatGLM2-6B](https://github.com/intel/neural-speed/tree/main/neural_speed/models/chatglm), [GPT-J-6B](https://github.com/intel/neural-speed/tree/main/neural_speed/models/gptj), and [Dolly-v2-3B](https://github.com/intel/neural-speed/tree/main/neural_speed/models/gptneox). Support AMX, VNNI, AVX512F and AVX2 instruction set. We've boosted the performance of Intel CPUs, with a particular focus on the 4th generation Intel Xeon Scalable processor, codenamed [Sapphire Rapids](https://www.intel.com/content/www/us/en/products/docs/processors/xeon-accelerated/4th-gen-xeon-scalable-processors.html).

pytorch-lightning

PyTorch Lightning is a framework for training and deploying AI models. It provides a high-level API that abstracts away the low-level details of PyTorch, making it easier to write and maintain complex models. Lightning also includes a number of features that make it easy to train and deploy models on multiple GPUs or TPUs, and to track and visualize training progress. PyTorch Lightning is used by a wide range of organizations, including Google, Facebook, and Microsoft. It is also used by researchers at top universities around the world. Here are some of the benefits of using PyTorch Lightning: * **Increased productivity:** Lightning's high-level API makes it easy to write and maintain complex models. This can save you time and effort, and allow you to focus on the research or business problem you're trying to solve. * **Improved performance:** Lightning's optimized training loops and data loading pipelines can help you train models faster and with better performance. * **Easier deployment:** Lightning makes it easy to deploy models to a variety of platforms, including the cloud, on-premises servers, and mobile devices. * **Better reproducibility:** Lightning's logging and visualization tools make it easy to track and reproduce training results.

UMOE-Scaling-Unified-Multimodal-LLMs

Uni-MoE is a MoE-based unified multimodal model that can handle diverse modalities including audio, speech, image, text, and video. The project focuses on scaling Unified Multimodal LLMs with a Mixture of Experts framework. It offers enhanced functionality for training across multiple nodes and GPUs, as well as parallel processing at both the expert and modality levels. The model architecture involves three training stages: building connectors for multimodal understanding, developing modality-specific experts, and incorporating multiple trained experts into LLMs using the LoRA technique on mixed multimodal data. The tool provides instructions for installation, weights organization, inference, training, and evaluation on various datasets.

Open-Sora-Plan

Open-Sora-Plan is a project that aims to create a simple and scalable repo to reproduce Sora (OpenAI, but we prefer to call it "ClosedAI"). The project is still in its early stages, but the team is working hard to improve it and make it more accessible to the open-source community. The project is currently focused on training an unconditional model on a landscape dataset, but the team plans to expand the scope of the project in the future to include text2video experiments, training on video2text datasets, and controlling the model with more conditions.

InternLM

InternLM is a powerful language model series with features such as a 200K context window for long-context tasks, outstanding comprehensive performance in reasoning, math, code, chat experience, instruction following, and creative writing, code interpreter & data analysis capabilities, and stronger tool utilization capabilities. It offers models in sizes of 7B and 20B, suitable for research and complex scenarios. The models are recommended for various applications and exhibit better performance than previous generations. InternLM models may match or surpass other open-source models as well as ChatGPT. The tool has been evaluated on various datasets and has shown superior performance in multiple tasks. It requires Python >= 3.8, PyTorch >= 1.12.0, and Transformers >= 4.34 for usage. InternLM can be used for tasks like chat, agent applications, fine-tuning, deployment, and long-context inference.

neural-compressor

Intel® Neural Compressor is an open-source Python library that supports popular model compression techniques such as quantization, pruning (sparsity), distillation, and neural architecture search on mainstream frameworks such as TensorFlow, PyTorch, ONNX Runtime, and MXNet. It provides key features, typical examples, and open collaborations, including support for a wide range of Intel hardware, validation of popular LLMs, and collaboration with cloud marketplaces, software platforms, and open AI ecosystems.

stm32ai-modelzoo

The STM32 AI model zoo is a collection of reference machine learning models optimized to run on STM32 microcontrollers. It provides a large collection of application-oriented models ready for re-training, scripts for easy retraining from user datasets, pre-trained models on reference datasets, and application code examples generated from user AI models. The project offers training scripts for transfer learning or training custom models from scratch. It includes performances on reference STM32 MCU and MPU for float and quantized models. The project is organized by application, providing step-by-step guides for training and deploying models.

gitmesh

GitMesh is an AI-powered Git collaboration network designed to address contributor dropout in open source projects. It offers real-time branch-level insights, intelligent contributor-task matching, and automated workflows. The platform transforms complex codebases into clear contribution journeys, fostering engagement through gamified rewards and integration with open source support programs. GitMesh's mascot, Meshy/Mesh Wolf, symbolizes agility, resilience, and teamwork, reflecting the platform's ethos of efficiency and power through collaboration.

ASTRA.ai

ASTRA is an open-source platform designed for developing applications utilizing large language models. It merges the ideas of Backend-as-a-Service and LLM operations, allowing developers to swiftly create production-ready generative AI applications. Additionally, it empowers non-technical users to engage in defining and managing data operations for AI applications. With ASTRA, you can easily create real-time, multi-modal AI applications with low latency, even without any coding knowledge.

chatgpt-infinity

ChatGPT Infinity is a free and powerful add-on that makes ChatGPT generate infinite answers on any topic. It offers customizable topic selection, multilingual support, adjustable response interval, and auto-scroll feature for a seamless chat experience.

For similar tasks

arena-hard-auto

Arena-Hard-Auto-v0.1 is an automatic evaluation tool for instruction-tuned LLMs. It contains 500 challenging user queries. The tool prompts GPT-4-Turbo as a judge to compare models' responses against a baseline model (default: GPT-4-0314). Arena-Hard-Auto employs an automatic judge as a cheaper and faster approximator to human preference. It has the highest correlation and separability to Chatbot Arena among popular open-ended LLM benchmarks. Users can evaluate their models' performance on Chatbot Arena by using Arena-Hard-Auto.

max

The Modular Accelerated Xecution (MAX) platform is an integrated suite of AI libraries, tools, and technologies that unifies commonly fragmented AI deployment workflows. MAX accelerates time to market for the latest innovations by giving AI developers a single toolchain that unlocks full programmability, unparalleled performance, and seamless hardware portability.

ai-hub

AI Hub Project aims to continuously test and evaluate mainstream large language models, while accumulating and managing various effective model invocation prompts. It has integrated all mainstream large language models in China, including OpenAI GPT-4 Turbo, Baidu ERNIE-Bot-4, Tencent ChatPro, MiniMax abab5.5-chat, and more. The project plans to continuously track, integrate, and evaluate new models. Users can access the models through REST services or Java code integration. The project also provides a testing suite for translation, coding, and benchmark testing.

long-context-attention

Long-Context-Attention (YunChang) is a unified sequence-parallel approach that combines DeepSpeed-Ulysses-Attention and Ring-Attention to provide a versatile, high-performance solution for long-context LLM training and inference. It addresses the limitations of each method: it places no restriction on the number of attention heads, is compatible with advanced parallel strategies, and delivers better benchmark performance. The tool has been verified in Megatron-LM and offers best practices for 4D parallelism, making it applicable to a range of attention mechanisms and parallel computing setups.
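To illustrate why a hybrid layout lifts the head-count restriction: Ulysses-style attention shards attention heads across its process group, so its degree must divide the number of heads, while Ring-Attention shards the sequence and has no such constraint. The sketch below is hypothetical. The helper names `split_sp_degree` and `build_sp_groups` and the contiguous-rank layout are assumptions for illustration, not YunChang's API. It picks the largest Ulysses degree compatible with the head count and assigns the remaining parallelism to the ring dimension.

```python
def split_sp_degree(world_size: int, num_heads: int) -> tuple[int, int]:
    """Return (ulysses_degree, ring_degree) with ulysses_degree * ring_degree == world_size.

    Ulysses shards attention heads, so its degree must divide num_heads;
    Ring-Attention shards the sequence and absorbs the remaining factor.
    """
    for u in range(min(world_size, num_heads), 0, -1):
        if world_size % u == 0 and num_heads % u == 0:
            return u, world_size // u
    raise ValueError("world_size and num_heads must be positive")


def build_sp_groups(world_size: int, ulysses_degree: int) -> tuple[list[list[int]], list[list[int]]]:
    """Lay ranks out on a (ring_degree x ulysses_degree) grid.

    Hypothetical layout for illustration: ranks in the same Ulysses group are
    contiguous, and each ring group takes one rank from every Ulysses group.
    """
    ring_degree = world_size // ulysses_degree
    ulysses_groups = [
        list(range(r * ulysses_degree, (r + 1) * ulysses_degree)) for r in range(ring_degree)
    ]
    ring_groups = [list(range(i, world_size, ulysses_degree)) for i in range(ulysses_degree)]
    return ulysses_groups, ring_groups


if __name__ == "__main__":
    # 8 GPUs, 12 heads: pure Ulysses (degree 8) is impossible because 8 does not divide 12.
    u, r = split_sp_degree(world_size=8, num_heads=12)
    print(u, r)   # 4 2
    uly, ring = build_sp_groups(8, u)
    print(uly)    # [[0, 1, 2, 3], [4, 5, 6, 7]]
    print(ring)   # [[0, 4], [1, 5], [2, 6], [3, 7]]
```

With 8 GPUs and 12 heads, this sketch uses a Ulysses degree of 4 inside each group and a ring of 2 across groups. With 32 heads, it falls back to pure Ulysses (degree 8, ring 1).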

marlin

Marlin is a highly optimized FP16xINT4 matrix-multiplication kernel for large language model (LLM) inference, delivering near-ideal speedups at batch sizes of up to 16-32 tokens. This makes it suitable for larger-scale serving, speculative decoding, and advanced multi-inference schemes such as CoT-Majority. Marlin reaches this performance through a range of optimizations that make full use of GPU compute and memory resources.
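To make the "FP16xINT4" idea concrete: the weights are stored as 4-bit integer codes packed several to a machine word. Each code is expanded to floating point with a scale just before the multiply, while activations stay in floating point. The pure-Python reference below is only a sketch of that storage format under stated assumptions: eight codes per 32-bit word, one scale per row, and a fixed zero point of 8. The helper names are hypothetical, and Marlin's actual GPU kernel uses its own weight layout, scale grouping, and tiling.

```python
def pack_int4(codes: list[int]) -> int:
    """Pack eight unsigned 4-bit codes (0..15) into one 32-bit word, lowest nibble first."""
    if len(codes) != 8 or any(not 0 <= c <= 15 for c in codes):
        raise ValueError("expected eight codes in the range 0..15")
    word = 0
    for i, c in enumerate(codes):
        word |= c << (4 * i)
    return word


def unpack_int4(word: int) -> list[int]:
    """Recover the eight 4-bit codes from a packed word."""
    return [(word >> (4 * i)) & 0xF for i in range(8)]


def dequantize(word: int, scale: float, zero: int = 8) -> list[float]:
    """Map each code q to (q - zero) * scale (assumed symmetric scheme with zero point 8)."""
    return [(q - zero) * scale for q in unpack_int4(word)]


def matvec_int4(packed_rows: list[list[int]], scales: list[float], x: list[float]) -> list[float]:
    """Slow reference y = W @ x, where each row of W is a list of packed words plus one scale."""
    y = []
    for words, scale in zip(packed_rows, scales):
        w = [v for word in words for v in dequantize(word, scale)]
        y.append(sum(wi * xi for wi, xi in zip(w, x)))
    return y


if __name__ == "__main__":
    # Codes 8, 9, 10, 7 with scale 0.5 decode to weights 0.0, 0.5, 1.0, -0.5.
    row = pack_int4([8, 9, 10, 7, 8, 8, 8, 8])
    print(matvec_int4([[row]], [0.5], [1, 2, 3, 4, 5, 6, 7, 8]))  # [2.0]
```

In a real kernel, the benefit comes from reading 4x fewer weight bytes from memory than FP16 (ignoring scales). This is why such kernels help most at small batch sizes, where the matmul is memory-bound.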

MMC

This repository, MMC, advances multimodal chart understanding through large-scale instruction tuning. It introduces a dataset covering a variety of tasks and chart types, a benchmark for evaluating reasoning over charts, and an assistant model (MMCA) that achieves state-of-the-art performance on chart question-answering benchmarks. The repository provides data for chart-text alignment, benchmarking, and instruction tuning, along with the existing datasets used in the experiments. It also offers a Gradio demo of the MMCA model.

Tiktoken

Tiktoken is a high-performance tokenizer implementation focused on counting tokens. It provides encodings such as o200k_base, cl100k_base, r50k_base, p50k_base, and p50k_edit, and its API lets users encode and decode text. The repository also includes a benchmark console app for tracking performance. Contributions via pull requests are welcome.

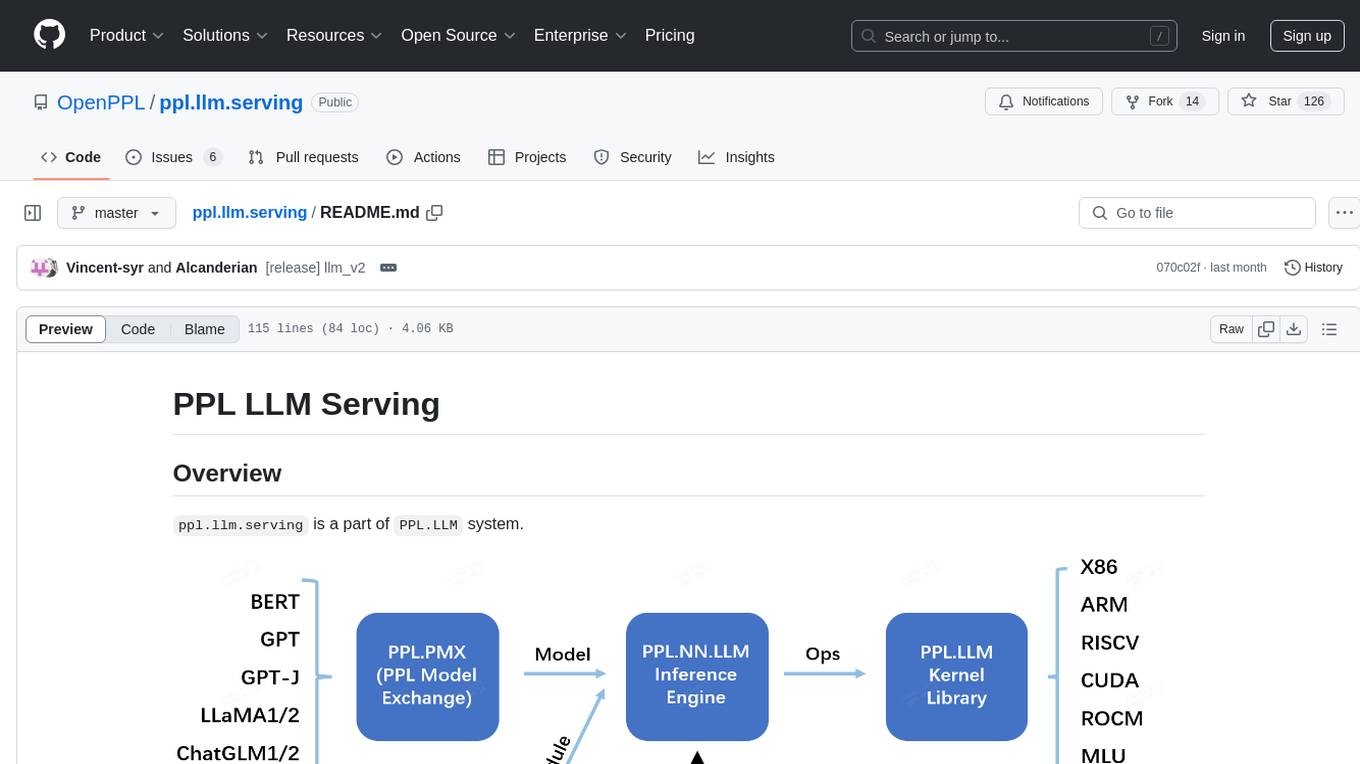

ppl.llm.serving

ppl.llm.serving is a serving component for large language models (LLMs) within the PPL.LLM system. It provides a gRPC-based server and supports LLaMA inference. The repository includes instructions covering prerequisites, a quick-start guide, model export, server setup, client usage, benchmarking, and offline inference. See the LLaMA Guide for more details on using this component.

For similar jobs

weave

Weave is a toolkit for developing generative AI applications, built by Weights & Biases. With Weave, you can log and debug language model inputs, outputs, and traces; build rigorous, apples-to-apples evaluations for language model use cases; and organize all the information generated across the LLM workflow, from experimentation to evaluation to production. Weave aims to bring rigor, best practices, and composability to the inherently experimental process of developing generative AI software without adding cognitive overhead.

LLMStack

LLMStack is a no-code platform for building generative AI agents, workflows, and chatbots. It lets users connect their own data, internal tools, and GPT-powered models without any coding experience. LLMStack can be deployed in the cloud or on-premises and can be accessed via an HTTP API or triggered from Slack or Discord.

VisionCraft

The VisionCraft API is a free API that provides access to more than 100 AI models, spanning modalities from images to sound.

kaito

Kaito is an operator that automates the deployment of AI/ML inference models in a Kubernetes cluster. It manages large model files using container images, and it provides preset configurations so users do not have to tune deployment parameters for their GPU hardware. It also auto-provisions GPU nodes based on model requirements and hosts large model images in the public Microsoft Container Registry (MCR) when the license allows. Kaito greatly simplifies the workflow of onboarding large AI inference models in Kubernetes.

PyRIT

PyRIT is an open-access automation framework that helps security professionals and ML engineers red team foundation models and their applications. It automates AI red-teaming tasks so that operators can focus on more complex and time-consuming work. It can also identify security harms such as misuse (e.g., malware generation, jailbreaking) and privacy harms (e.g., identity theft). The goal is to give researchers a baseline of how well their model and entire inference pipeline perform against different harm categories, and to let them compare that baseline with future iterations of the model. This provides empirical data on how the model performs today and helps detect regressions introduced by future changes.

tabby

Tabby is a self-hosted AI coding assistant, offering an open-source, on-premises alternative to GitHub Copilot. Key features include:
- Self-contained, with no need for a DBMS or cloud service.
- An OpenAPI interface that is easy to integrate with existing infrastructure (e.g., a Cloud IDE).
- Support for consumer-grade GPUs.

spear

SPEAR (Simulator for Photorealistic Embodied AI Research) is a powerful tool for training embodied agents. It features 300 unique virtual indoor environments with 2,566 unique rooms and 17,234 unique objects that can be manipulated individually. Each environment is designed by a professional artist and features detailed geometry, photorealistic materials, and a unique floor plan and object layout. SPEAR is implemented as Unreal Engine assets and provides an OpenAI Gym interface for interacting with the environments via Python.

Magick

Magick is a visual AIDE (Artificial Intelligence Development Environment) for building no-code data pipelines and multimodal agents. It can connect to other services and comes with nodes and templates well suited to intelligent agents, chatbots, complex reasoning systems, and realistic characters.