Best AI tools for Benchmark Performance

20 - AI tool Sites

Perspect

Perspect is an AI-powered platform designed for high-performance software teams. It offers real-time insights into team contributions and impact, helping to optimize developer experience and reward high performers. With 50+ integrations, Perspect lets teams visualize impact and benchmark performance, and it uses machine learning models to identify and eliminate blockers. The platform is deeply integrated with web3 wallets and offers built-in reward mechanisms. Managers can align resources around crucial KPIs, identify top talent, and prevent burnout. Perspect aims to enhance team productivity and employee retention through AI and ML technologies.

Skan AI

Skan AI is an Enterprise Work Context Graph and Process Intelligence Platform that offers a comprehensive solution for observing, distilling, and analyzing how work is done within an organization. It provides a platform that powers transformation and fuels reliable enterprise AI by capturing every action, exception, and workaround to train AI agents effectively. Skan AI enables users to move from pilots to production, offering AI-powered Process Intelligence that observes work continuously, identifies bottlenecks, and helps in fixing critical issues. The platform allows users to govern actions, deploy guardrails, benchmark performance, and build AI agents based on real data and observations.

Clarity AI

Clarity AI is an AI-powered technology platform that offers a Sustainability Tech Kit for sustainable investing, shopping, reporting, and benchmarking. The platform provides built-in sustainability technology with customizable solutions for various needs related to data, methodologies, and tools. It seamlessly integrates into workflows, offering scalable and flexible end-to-end SaaS tools to address sustainability use cases. Clarity AI leverages powerful AI and machine learning to analyze vast amounts of data points, ensuring reliable and transparent data coverage. The platform is designed to empower users to assess, analyze, and report on sustainability aspects efficiently and confidently.

Groq

Groq is a fast AI inference tool that offers GroqCloud™ Platform and GroqRack™ Cluster for developers to build and deploy AI models with ultra-low-latency inference. It provides instant intelligence for openly-available models like Llama 3.1 and is known for its speed and compatibility with other AI providers. Groq powers leading openly-available AI models and has gained recognition in the AI chip industry. The tool has received significant funding and valuation, positioning itself as a strong challenger to established players like Nvidia.

Hailo Community

Hailo Community is an AI tool designed for developers and enthusiasts working with Raspberry Pi and Hailo-8L AI Kit. The platform offers resources, benchmarks, and support for training custom models, optimizing AI tasks, and troubleshooting errors related to Hailo and Raspberry Pi integration.

EarningsCall.ai

EarningsCall.ai is an AI-powered tool that provides stock earnings call summaries and insights, saving users hours of reading transcripts. It allows users to compare competitors, analyze trends, and generate their own insights. The tool is designed for business leaders, financial professionals, consultants, and advisors to track competitor earnings, assess market trends, and optimize investment strategies.

Particl

Particl is an AI-powered platform that automates competitor intelligence for modern retail businesses. It provides real-time sales, pricing, and sentiment data across various e-commerce channels. Particl's AI technology tracks sales, inventory, pricing, assortment, and sentiment to help users quickly identify profitable opportunities in the market. The platform offers features such as benchmarking performance, automated e-commerce intelligence, competitor research, product research, assortment analysis, and promotions monitoring. With easy-to-use tools and robust AI capabilities, Particl aims to elevate team workflows and capabilities in strategic planning, product launches, and market analysis.

ADSoar

ADSoar is a powerful AI-driven Google Ads spy tool that provides official data and AI scoring to help advertisers spy on competitors, scale ads, and win bigger. It offers industry-first waterfall charts to track competitor expansion across 50+ countries, 6-point AI scoring to identify winning ads, and features like ad intelligence, global expansion tracking, audience targeting analysis, and ad performance benchmarking. ADSoar aims to solve specific challenges faced by advertisers by providing real features and insights, not empty promises.

Peec AI

Peec AI is an AI search analytics tool designed for marketing teams to track, analyze, and improve brand performance on AI search platforms. It provides key metrics such as Visibility, Position, and Sentiment to help businesses understand how AI perceives their brand. The platform offers insights on AI visibility, prompts analysis, and competitor tracking to enhance marketing strategies in the era of AI and generative search.

Weavel

Weavel is an AI tool designed to revolutionize prompt engineering for large language models (LLMs). It offers features such as tracing, dataset curation, batch testing, and evaluations to enhance the performance of LLM applications. Weavel enables users to continuously optimize prompts using real-world data, prevent performance regression with CI/CD integration, and engage in human-in-the-loop interactions for scoring and feedback. Ape, the AI prompt engineer, outperforms competitors on benchmark tests and ensures seamless integration and continuous improvement specific to each user's use case. With Weavel, users can effortlessly evaluate LLM applications without the need for pre-existing datasets, streamlining the assessment process and enhancing overall performance.

Junbi.ai

Junbi.ai is an AI-powered insights platform designed for YouTube advertisers. It offers AI-powered creative insights for YouTube ads, allowing users to benchmark their ads, predict performance, and test quickly and easily with fully AI-powered technology. The platform also includes expoze.io API for attention prediction on images or videos, with scientifically valid results and developer-friendly features for easy integration into software applications.

Woven Insights

Woven Insights is an AI-driven Fashion Retail Market & Consumer Insights solution that empowers fashion businesses with data-driven decision-making capabilities. It provides competitive intelligence, performance monitoring analytics, product assortment optimization, market insights, consumer insights, and pricing strategies to help businesses succeed in the retail market. With features like insights-driven competitive benchmarking, real-time market insights, product performance tracking, in-depth market analytics, and sentiment analysis, Woven Insights offers a comprehensive solution for businesses of all sizes. The application also offers bespoke data analysis, AI insights, natural language query, and easy collaboration tools to enhance decision-making processes. Woven Insights aims to democratize fashion intelligence by providing affordable pricing and accessible insights to help businesses stay ahead of the competition.

Unify

Unify is an AI tool that offers a unified platform for accessing and comparing large language models (LLMs) from different providers. It allows users to combine models for faster, cheaper, and better responses, optimizing for quality, speed, and cost-efficiency. Unify simplifies the complex task of selecting the best LLM by providing transparent benchmarks, personalized routing, and performance optimization tools.

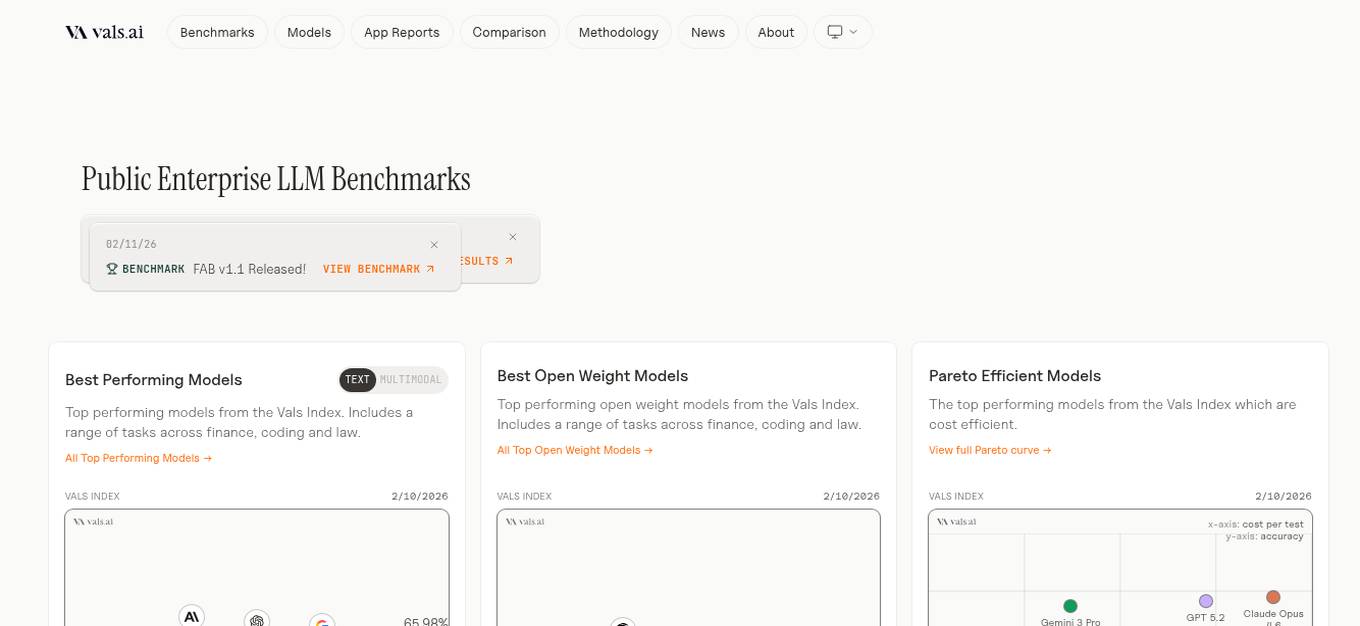

Vals AI

Vals AI is an advanced AI tool that provides benchmark reports and comparisons for various models in the fields of finance, coding, and law. The platform offers insights into the performance of different AI models across different tasks and industries. Vals AI aims to bridge the gap in model benchmarking and provide valuable information for users looking to evaluate and compare AI models for specific tasks.

Quolity AI

Quolity is an AI-powered platform designed for Answer Engine Optimization (AEO) to help brands and businesses enhance their online visibility and credibility in AI search engines. It offers insights, quality scores, and actions based on analyzing AI citations, brand mentions, and competitive link analysis. Quolity tracks brand mentions, provides quality analysis, and offers recommendations to improve AEO performance. The platform caters to startups, B2B SaaS, agencies, and content-driven brands aiming to excel in the evolving landscape of AI search.

Aider

Aider is an AI pair programming tool that allows users to collaborate with large language models (LLMs) to edit code in their local git repository. It supports popular languages like Python, JavaScript, TypeScript, PHP, HTML, and CSS. Aider can handle complex requests, automatically commit changes, and work well in larger codebases by using a map of the entire git repository. Users can edit files while chatting with Aider, add images and URLs to the chat, and even code using their voice. Aider has received positive feedback from users for its productivity-enhancing features and performance on software engineering benchmarks.

Reflection 70B

Reflection 70B is a next-gen open-source LLM powered by Llama 70B, offering groundbreaking self-correction capabilities that outsmart GPT-4. It provides advanced AI-powered conversations, assists with various tasks, and excels in accuracy and reliability. Users can engage in human-like conversations, receive assistance in research, coding, creative writing, and problem-solving, all while benefiting from its innovative self-correction mechanism. Reflection 70B sets new standards in AI performance and is designed to enhance productivity and decision-making across multiple domains.

Legal Benchmarks

Legal Benchmarks is a platform that provides independent, lawyer-led AI evaluations for in-house legal work. The platform evaluates AI assistants on critical legal tasks like contract drafting and information extraction. It offers rankings based on how different AI tools perform on real-world legal tasks, helping legal teams understand and adopt AI solutions. Legal Benchmarks also allows legal AI vendors to submit their tools for evaluation and provides access to customized private reports, insights, and practical breakdowns of AI tools' performance.

WorkViz

WorkViz is an AI-powered performance tool designed for remote teams to visualize productivity, maximize performance, and foresee the team's potential. It offers features such as automated daily reports, employee voice expression through emojis, workload management alerts, productivity solutions, and intelligent summaries. WorkViz ensures data security through guaranteed audit, desensitization, and SSL security protocols. The application has received positive feedback from clients for driving improvements, providing KPIs and benchmarks, and simplifying daily reporting. It helps users track work hours, identify roadblocks, and improve team performance.

Flowtrace

Flowtrace is an AI-powered Company Analytics Productivity tool that focuses on improving meeting culture, collaboration, and engagement within organizations. It analyzes meeting data to provide actionable recommendations for enhancing team performance and productivity. With features like industry benchmarks, integration with common SaaS tools, and personalized insights, Flowtrace aims to help teams work more efficiently and effectively. The tool helps in reducing meeting costs, increasing productivity, removing distractions, and transforming meeting culture for better decision-making and outcomes.

19 - Open Source AI Tools

arena-hard-auto

Arena-Hard-Auto-v0.1 is an automatic evaluation tool for instruction-tuned LLMs. It contains 500 challenging user queries. The tool prompts GPT-4-Turbo as a judge to compare models' responses against a baseline model (default: GPT-4-0314). Arena-Hard-Auto employs an automatic judge as a cheaper and faster approximator of human preference. It has the highest correlation with, and separability relative to, Chatbot Arena among popular open-ended LLM benchmarks. Users can use Arena-Hard-Auto to estimate how their models might perform on Chatbot Arena.
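The pairwise comparison against a baseline described above can be summarised as a win rate. The sketch below is a minimal illustration, not Arena-Hard-Auto's own code: the verdict labels and the `win_rate` helper are hypothetical names introduced for this example, and counting a tie as half a win is an assumed convention.

```python
from collections import Counter

# Hypothetical verdict labels, one per query: did the judge prefer the
# candidate model, the baseline model, or neither?
WIN, TIE, LOSS = "candidate", "tie", "baseline"


def win_rate(verdicts):
    """Return the candidate's win rate against the baseline, in [0, 1].

    Ties count as half a win (an assumption made for this sketch).
    """
    if not verdicts:
        raise ValueError("need at least one verdict")
    counts = Counter(verdicts)
    unknown = set(counts) - {WIN, TIE, LOSS}
    if unknown:
        raise ValueError(f"unknown verdict labels: {sorted(unknown)}")
    return (counts[WIN] + 0.5 * counts[TIE]) / len(verdicts)


# Two wins, one loss, and one tie over four queries.
print(win_rate([WIN, WIN, LOSS, TIE]))  # 0.625
```

A real evaluation would also report uncertainty, for example with bootstrap confidence intervals over the queries; that step is omitted here.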

max

The Modular Accelerated Xecution (MAX) platform is an integrated suite of AI libraries, tools, and technologies that unifies commonly fragmented AI deployment workflows. MAX accelerates time to market for the latest innovations by giving AI developers a single toolchain that unlocks full programmability, unparalleled performance, and seamless hardware portability.

ai-hub

AI Hub Project aims to continuously test and evaluate mainstream large language models, while accumulating and managing various effective model invocation prompts. It integrates mainstream large language models, including OpenAI GPT-4 Turbo, Baidu ERNIE-Bot-4, Tencent ChatPro, MiniMax abab5.5-chat, and more. The project plans to continuously track, integrate, and evaluate new models. Users can access the models through REST services or Java code integration. The project also provides a testing suite for translation, coding, and benchmark testing.

long-context-attention

Long-Context-Attention (YunChang) is a unified sequence parallel approach that combines the strengths of DeepSpeed-Ulysses-Attention and Ring-Attention to provide a versatile and high-performance solution for long-context LLM training and inference. It addresses the limitations of both methods by placing no limit on the number of attention heads, remaining compatible with advanced parallel strategies, and improving benchmark performance. The tool is verified in Megatron-LM and offers best practices for 4D parallelism, making it suitable for various attention mechanisms and parallel computing advancements.

marlin

Marlin is a highly optimized FP16xINT4 matmul kernel designed for large language model (LLM) inference, offering close to ideal speedups up to batch sizes of 16-32 tokens. It is suitable for larger-scale serving, speculative decoding, and advanced multi-inference schemes like CoT-Majority. Marlin achieves optimal performance by utilizing various techniques and optimizations to fully leverage GPU resources, ensuring efficient computation and memory management.
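To make the FP16xINT4 idea concrete, the sketch below shows what such a product computes: weights are stored as packed 4-bit integers, dequantized with a scale, and multiplied by floating-point activations. This is an illustration only and says nothing about Marlin's actual kernel or memory layout. The helper names, the per-row scale, and the fixed zero point of 8 are assumptions, and Python floats stand in for FP16.

```python
def pack_int4(values):
    """Pack unsigned 4-bit integers (0..15) two per byte, low nibble first."""
    if len(values) % 2:
        raise ValueError("need an even number of values")
    if any(not 0 <= v <= 15 for v in values):
        raise ValueError("values must fit in 4 bits")
    return bytes(values[i] | (values[i + 1] << 4) for i in range(0, len(values), 2))


def unpack_int4(packed):
    """Inverse of pack_int4: recover the list of 4-bit integers."""
    out = []
    for byte in packed:
        out.append(byte & 0x0F)
        out.append(byte >> 4)
    return out


def dequantize(packed, scale, zero_point=8):
    """Map stored integers back to real weights: w = (q - zero_point) * scale."""
    return [(q - zero_point) * scale for q in unpack_int4(packed)]


def matvec(x, packed_rows, scales):
    """Compute y[j] = sum_i x[i] * W[j][i], with each row of W stored as INT4."""
    result = []
    for packed, scale in zip(packed_rows, scales):
        row = dequantize(packed, scale)
        result.append(sum(a * w for a, w in zip(x, row)))
    return result


# Two weight rows of four INT4 values each, with one (assumed) scale per row.
rows = [pack_int4([8, 10, 6, 9]), pack_int4([0, 8, 15, 8])]
scales = [0.5, 0.25]
x = [1.0, 2.0, 3.0, 4.0]
print(matvec(x, rows, scales))  # [1.0, 3.25]
```

Storing weights in 4 bits cuts the memory traffic for weights to a quarter of FP16, which is why kernels of this kind help most when inference is limited by memory bandwidth.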

MMC

This repository, MMC, focuses on advancing multimodal chart understanding through large-scale instruction tuning. It introduces a dataset supporting various tasks and chart types, a benchmark for evaluating reasoning capabilities over charts, and an assistant achieving state-of-the-art performance on chart QA benchmarks. The repository provides data for chart-text alignment, benchmarking, and instruction tuning, along with existing datasets used in experiments. Additionally, it offers a Gradio demo for the MMCA model.

Tiktoken

Tiktoken is a high-performance implementation focused on token count operations. It provides various encodings like o200k_base, cl100k_base, r50k_base, p50k_base, and p50k_edit. Users can easily encode and decode text using the provided API. The repository also includes a benchmark console app for performance tracking. Contributions in the form of PRs are welcome.

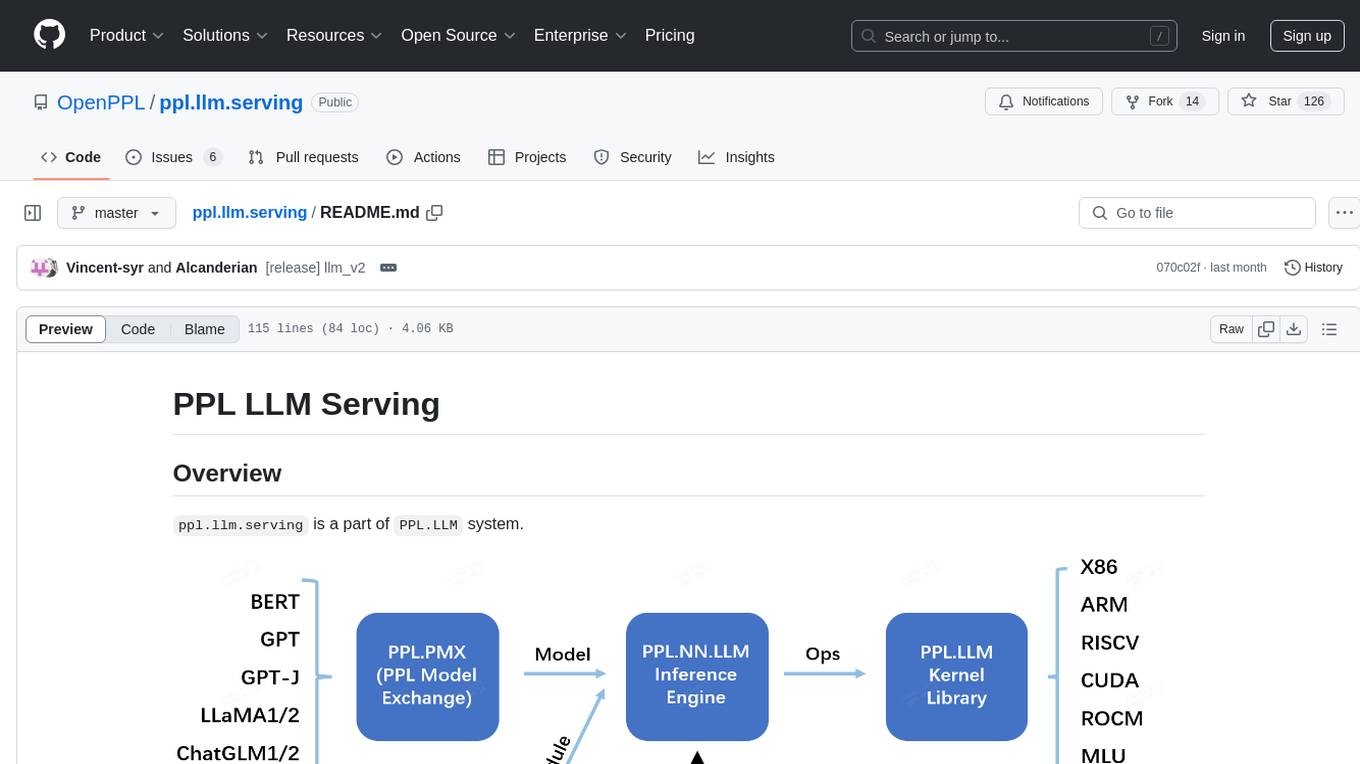

ppl.llm.serving

ppl.llm.serving is a serving component for Large Language Models (LLMs) within the PPL.LLM system. It provides a server based on gRPC and supports inference for LLaMA. The repository includes instructions for prerequisites, quick start guide, model exporting, server setup, client usage, benchmarking, and offline inference. Users can refer to the LLaMA Guide for more details on using this serving component.

llama.cpp

The main goal of llama.cpp is to enable LLM inference with minimal setup and state-of-the-art performance on a wide range of hardware, locally and in the cloud. It provides a plain C/C++ implementation without any dependencies, is optimized for Apple silicon via ARM NEON, Accelerate, and Metal frameworks, and supports x86 instruction set extensions such as AVX, AVX2, AVX512, and AMX. It offers integer quantization for faster inference, custom CUDA kernels for NVIDIA GPUs, Vulkan and SYCL backend support, and CPU+GPU hybrid inference. llama.cpp is the main playground for developing new features for the ggml library, supporting various models and providing tools and infrastructure for LLM deployment.

Tutel

Tutel MoE is an optimized Mixture-of-Experts implementation that offers a parallel solution with 'No-penalty Parallelism/Sparsity/Capacity/Switching' for modern training and inference. It supports the PyTorch framework (version >= 1.10) and both CUDA and ROCm GPUs. The tool enables full-precision inference of the MoE-based DeepSeek R1 671B on AMD MI300. Tutel provides features like all-to-all benchmarking, a tensorcore option, NCCL timeout settings, a Megablocks solution, and dynamically switchable configurations. Users can run Tutel in distributed mode across multiple GPUs and machines. The tool allows for custom MoE implementations and offers detailed usage examples and reference documentation.

nestia

Nestia is a set of helper libraries for NestJS, providing super-fast/easy decorators, advanced WebSocket routes, Swagger generator, SDK library generator for clients, mockup simulator for client applications, automatic E2E test functions generator, test program utilizing e2e test functions, benchmark program using e2e test functions, super A.I. chatbot by Swagger document, Swagger-UI with online TypeScript editor, and a CLI tool. It enhances performance significantly and offers a collection of typed fetch functions with DTO structures like tRPC, along with a mockup simulator that is fully automated.

LLMs-Planning

This repository contains code for three papers related to evaluating large language models on planning and reasoning about change. It includes benchmarking tools and analysis for assessing the planning abilities of large language models. The latest addition evaluates and enhances the planning and scheduling capabilities of a specific language reasoning model. The repository provides a static test set leaderboard showcasing model performance on various tasks with natural language and planning domain prompts.

chitu

Chitu is a high-performance inference framework for large language models, focusing on efficiency, flexibility, and availability. It supports various mainstream large language models, including DeepSeek, LLaMA series, Mixtral, and more. Chitu integrates latest optimizations for large language models, provides efficient operators with online FP8 to BF16 conversion, and is deployed for real-world production. The framework is versatile, supporting various hardware environments beyond NVIDIA GPUs. Chitu aims to enhance output speed per unit computing power, especially in decoding processes dependent on memory bandwidth.

ipex-llm

The `ipex-llm` repository is an LLM acceleration library designed for Intel GPUs, NPUs, and CPUs. It provides seamless integration with various models and tools like llama.cpp, Ollama, HuggingFace transformers, LangChain, LlamaIndex, vLLM, Text-Generation-WebUI, DeepSpeed-AutoTP, FastChat, Axolotl, and more. The library offers optimizations for over 70 models, XPU acceleration, and support for low-bit (FP8/FP6/FP4/INT4) operations. Users can run different models on Intel GPUs, NPUs, and CPUs with support for features like finetuning, inference, serving, and benchmarking.

uzu

uzu is a high-performance inference engine for AI models on Apple Silicon. It features a simple, high-level API, hybrid architecture for GPU kernel computation, unified model configurations, traceable computations, and utilizes unified memory on Apple devices. The tool provides a CLI mode for running models, supports its own model format, and offers prebuilt Swift and TypeScript frameworks for bindings. Users can quickly start by adding the uzu dependency to their Cargo.toml and creating an inference Session with a specific model and configuration. Performance benchmarks show metrics for various models on Apple M2, highlighting the tokens/s speed for each model compared to llama.cpp with bf16/f16 precision.

LTEngine

LTEngine is a free and open-source local AI machine translation API written in Rust. It is self-hosted and compatible with LibreTranslate. LTEngine utilizes large language models (LLMs) via llama.cpp, offering high-quality translations that rival or surpass DeepL for certain languages. It supports various accelerators like CUDA, Metal, and Vulkan, with the largest model 'gemma3-27b' fitting on a single consumer RTX 3090. LTEngine is actively developed, with a roadmap outlining future enhancements and features.

mini-swe-agent

The mini-swe-agent is a lightweight AI agent designed to solve GitHub issues and more. It is a minimal tool with just 100 lines of Python code, suitable for researchers, developers, and engineers looking for a simple, powerful, and deployable solution. The agent is built to be convenient, deployable in various environments, and tested for performance. It focuses on providing a hackable tool that is simple, convenient, and flexible, without unnecessary bloat or complexity. Users can run the agent with any model using bash, making it ideal for benchmarking, fine-tuning, and reinforcement learning tasks.

WaferLLM

WaferLLM is the first wafer-scale Large Language Model (LLM) inference system designed to optimize the utilization of hundreds of thousands of on-chip cores in wafer-scale accelerators. It introduces MeshGEMM and MeshGEMV implementations for effective scaling on wafer-scale architectures, achieving significantly higher accelerator utilization and speedups compared to state-of-the-art methods. Users need the Cerebras SDK to reproduce the results, and the project provides detailed documentation and scripts for running simulations on both simulator and actual hardware.

kvcached

kvcached is a new KV cache management system that supports on-demand KV cache allocation. It implements the concept of GPU virtual memory, allowing applications to reserve virtual address space without immediately committing physical memory. Physical memory is then automatically allocated and mapped as needed at runtime. This capability allows multiple LLMs to run concurrently on a single GPU or a group of GPUs (TP) and flexibly share the GPU memory, significantly improving GPU utilization and reducing memory fragmentation. kvcached is compatible with popular LLM serving engines, including SGLang and vLLM.
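The reserve-now, commit-later idea can be illustrated with a toy page table. The sketch below is a plain-Python simulation, not kvcached's API: `PhysicalPool`, `VirtualKVCache`, and the page counts are hypothetical names and values chosen for this example, and no GPU memory is involved.

```python
class PhysicalPool:
    """A fixed number of physical pages shared by every reservation."""

    def __init__(self, num_pages):
        self.free = list(range(num_pages))

    def allocate(self):
        if not self.free:
            raise MemoryError("physical pool exhausted")
        return self.free.pop()

    def release(self, page):
        self.free.append(page)


class VirtualKVCache:
    """Reserves virtual pages up front; maps physical pages on first use."""

    def __init__(self, pool, reserved_pages):
        self.pool = pool
        self.reserved_pages = reserved_pages  # virtual range, costs nothing yet
        self.page_table = {}  # virtual page -> physical page

    def touch(self, virtual_page):
        """Return the physical page backing virtual_page, mapping it if needed."""
        if not 0 <= virtual_page < self.reserved_pages:
            raise IndexError("outside the reserved virtual range")
        if virtual_page not in self.page_table:
            self.page_table[virtual_page] = self.pool.allocate()
        return self.page_table[virtual_page]

    def free(self, virtual_page):
        """Unmap a virtual page and hand its physical page back to the pool."""
        physical = self.page_table.pop(virtual_page, None)
        if physical is not None:
            self.pool.release(physical)


# Two models each reserve 100 virtual pages but share only 4 physical pages.
pool = PhysicalPool(num_pages=4)
model_a = VirtualKVCache(pool, reserved_pages=100)
model_b = VirtualKVCache(pool, reserved_pages=100)

model_a.touch(0)
model_a.touch(1)
model_b.touch(0)
print(len(pool.free))  # 1

model_a.free(1)  # model_a shrinks, so model_b can grow into the freed page
print(len(pool.free))  # 2
```

Because each model only holds physical pages for the cache entries it actually uses, an idle model leaves memory available to a busy one, which is the sharing behaviour the description refers to.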

10 - OpenAI Gpts

Performance Testing Advisor

Ensures software performance meets organizational standards and expectations.

HVAC Apex

Benchmark HVAC GPT model with unmatched expertise and forward-thinking solutions, powered by OpenAI.

SaaS Navigator

A strategic SaaS analyst for CXOs, with a focus on market trends and benchmarks.

Transfer Pricing Advisor

Guides businesses in managing global tax liabilities efficiently.

Salary Guides

I provide monthly salary data in euros, using a structured format for global job roles.