Groq

Fast AI Inference for Open Models

Groq is an AI inference platform that serves openly available models such as Llama 3.1 with ultra-low latency. It provides inference for cloud deployments and is compatible with other providers like OpenAI. Independent benchmarks are cited as evidence of its speed, and it powers leading openly available models such as Llama, Mixtral, Gemma, and Whisper. The company has gained industry recognition for its high-speed inference compute and has received significant funding to challenge established players like Nvidia.

Alternative AI tools for Groq

Similar sites

Groq

Groq is a fast AI inference provider that offers the GroqCloud™ Platform and GroqRack™ Cluster for developers to build and deploy AI models with ultra-low-latency inference. It serves openly available models like Llama 3.1 and is known for its speed and compatibility with other AI providers. Groq powers leading openly available AI models and has gained recognition in the AI chip industry. Its significant funding and valuation position it as a strong challenger to established players like Nvidia.

GPUX

GPUX is a cloud platform that provides access to GPUs for running AI workloads. It offers a variety of features to make it easy to deploy and run AI models, including a user-friendly interface, pre-built templates, and support for a variety of programming languages. GPUX is also committed to providing a sustainable and ethical platform, and it has partnered with organizations such as the Climate Leadership Council to reduce its carbon footprint.

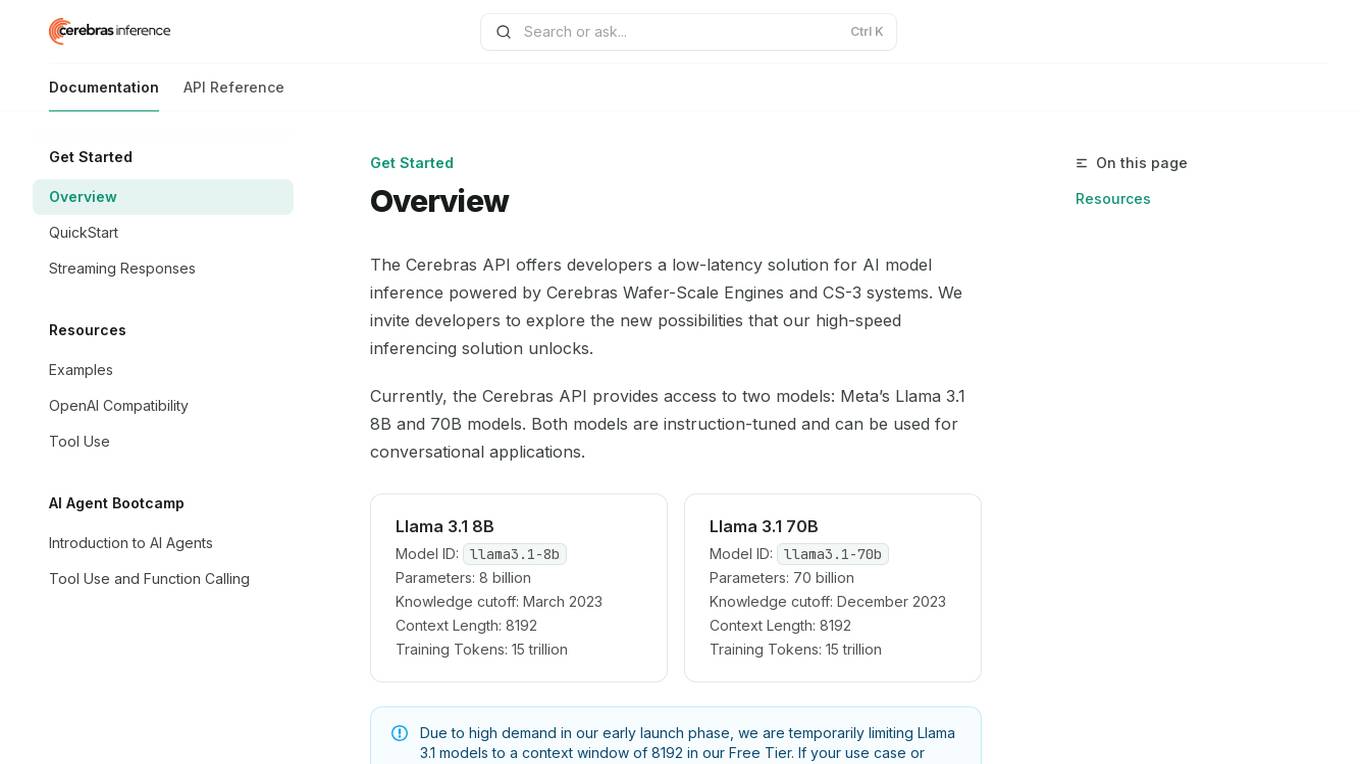

Cerebras API

The Cerebras API is a high-speed inference service powered by Cerebras Wafer-Scale Engines and CS-3 systems. It gives developers access to two models, Meta’s Llama 3.1 8B and 70B, which are instruction-tuned and suited to conversational applications. The API provides low-latency solutions and invites developers to explore new possibilities in AI development.

Tensoic AI

Tensoic AI is an AI tool for fine-tuning and running inference on custom Large Language Models (LLMs). It offers ultra-fast fine-tuning and inference for enterprise-grade LLMs, with a focus on use-case-specific tasks. The tool is efficient, cost-effective, and easy to use, enabling users to outperform general-purpose LLMs using synthetic data. Tensoic AI generates small, powerful models that can run on consumer-grade hardware, making it suitable for a wide range of applications.

fal.ai

fal.ai is a generative media platform designed for developers to build the next generation of creativity. It offers lightning-fast inference with no compromise on quality, providing access to high-quality generative media models optimized by the fal Inference Engine™. The platform allows developers to fine-tune their own models, leverage real-time infrastructure for new user experiences, and scale to thousands of GPUs as needed. With a focus on developer experience, fal.ai aims to be the fastest AI tool for running diffusion models.

Obviously AI

Obviously AI is a no-code AI tool that allows users to build and deploy machine learning models without writing any code. It is designed to be easy to use, even for those with no data science experience. Obviously AI offers a variety of features, including model building, model deployment, model monitoring, and integration with other tools. It also provides expert support from a dedicated data scientist.

Denvr DataWorks AI Cloud

Denvr DataWorks AI Cloud is a cloud-based AI platform that provides end-to-end AI solutions for businesses. It offers a range of features including high-performance GPUs, scalable infrastructure, ultra-efficient workflows, and cost efficiency. Denvr DataWorks is an NVIDIA Elite Partner for Compute, and its platform is used by leading AI companies to develop and deploy innovative AI solutions.

Cerebras

Cerebras is an AI tool that offers products and services related to AI supercomputers, cloud system processors, and applications for various industries. It provides high-performance computing solutions, including large language models, and caters to sectors such as health, energy, government, scientific computing, and financial services. Cerebras specializes in AI model services, offering state-of-the-art models and training services for tasks like multi-lingual chatbots and DNA sequence prediction. The platform also features the Cerebras Model Zoo, an open-source repository of AI models for developers and researchers.

Tune AI

Tune AI is an enterprise Gen AI stack that offers custom models to build competitive advantage. It provides a range of features such as accelerating coding, content creation, indexing patent documents, data audit, automatic speech recognition, and more. The application leverages generative AI to help users solve real-world problems and create custom models on top of industry-leading open source models. With enterprise-grade security and flexible infrastructure, Tune AI caters to developers and enterprises looking to harness the power of AI.

Fifi.ai

Fifi.ai is a managed AI cloud platform that provides users with the infrastructure and tools to deploy and run AI models. The platform is designed to be easy to use, with a focus on plug-and-play functionality. Fifi.ai also offers a range of customization and fine-tuning options, allowing users to tailor the platform to their specific needs. The platform is supported by a team of experts who can provide assistance with onboarding, API integration, and troubleshooting.

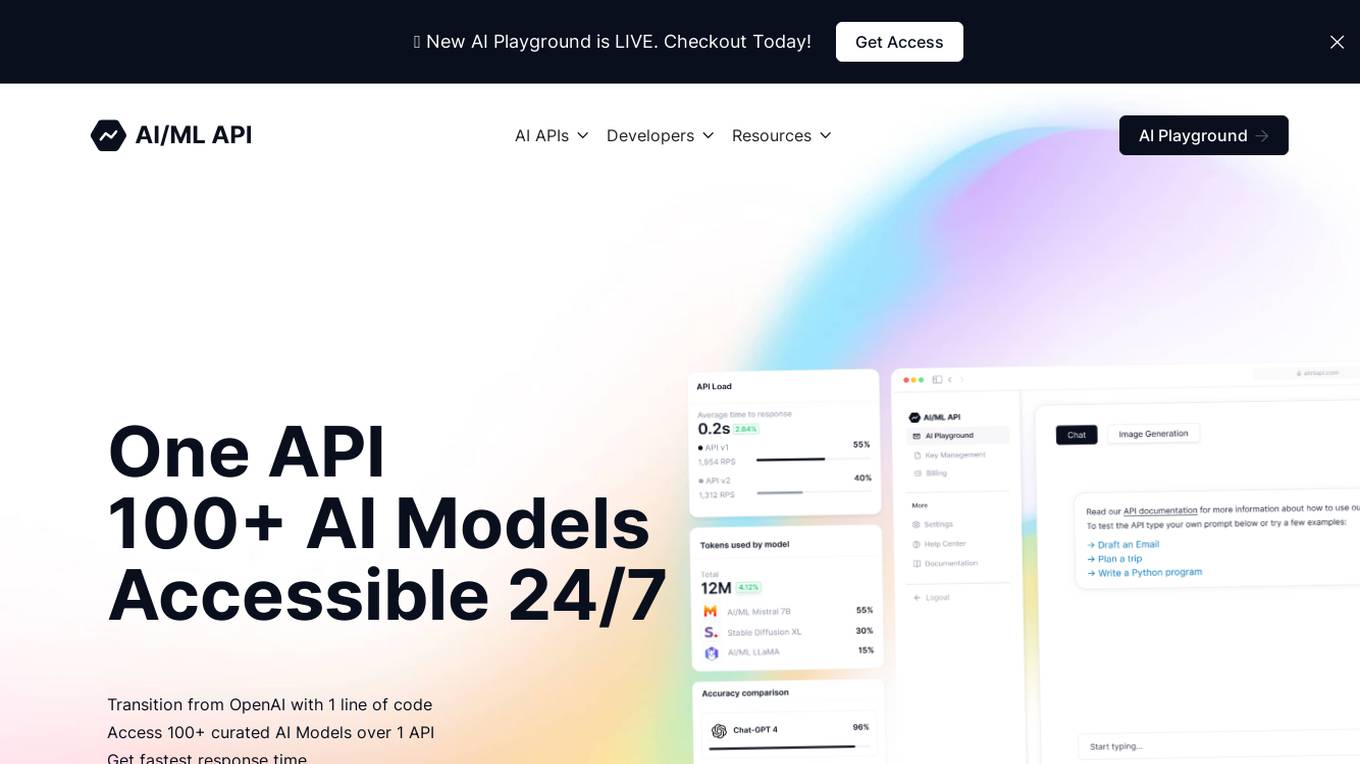

aimlapi.com

aimlapi.com is an AI tool that offers 100+ AI models accessible via one API. It provides developers with a wide range of AI models for various tasks such as chat, language, image generation, and code processing. The platform is designed to be user-friendly, cost-efficient, and scalable, making it suitable for developers of all levels. With a focus on transparency, affordability, and compatibility with OpenAI, aimlapi.com aims to provide high-quality AI solutions to its users.

Together AI

Together AI is an AI Acceleration Cloud platform that offers fast inference, fine-tuning, and training services. It provides self-service NVIDIA GPUs, model deployment on custom hardware, an AI chat app, a code execution sandbox, and tools to find the right model for specific use cases. The platform also includes a model library with open-source models, documentation for developers, and resources for advancing open-source AI. Together AI enables users to leverage pre-trained models, fine-tune them, or build custom models from scratch, catering to various generative AI needs.

Galileo AI

Galileo AI is a platform that automates evaluations for AI applications so that teams can ship reliably and with confidence. It aims to eliminate 80% of evaluation time by replacing manual reviews with high-accuracy metrics, enabling rapid iteration, real-time protection, and end-to-end visibility into agent completions. Galileo also allows developers to take control of AI complexity, de-risk AI in production, and deploy AI applications flexibly across different environments. The platform is trusted by enterprises and loved by developers for its accuracy, low latency, and ability to run on L4 GPUs.

Magick

Magick is a cutting-edge Artificial Intelligence Development Environment (AIDE) that empowers users to rapidly prototype and deploy advanced AI agents and applications without coding. It provides a full-stack solution for building, deploying, maintaining, and scaling AI creations. Magick's open-source, platform-agnostic nature allows for full control and flexibility, making it suitable for users of all skill levels. With its visual node-graph editors, users can code visually and create intuitively. Magick also offers powerful document processing capabilities, enabling effortless embedding and access to complex data. Its real-time and event-driven agents respond to events right in the AIDE, ensuring prompt and efficient handling of tasks. Magick's scalable deployment feature allows agents to handle any number of users, making it suitable for large-scale applications. Additionally, its multi-platform integrations with tools like Discord, Unreal Blueprints, and Google AI provide seamless connectivity and enhanced functionality.

Modal

Modal is a high-performance cloud platform designed for developers and for AI, data, and ML teams. It offers a serverless environment for running generative AI models, large-scale batch jobs, job queues, and more. With Modal, users can bring their own code and leverage the platform's optimized container file system for fast cold boots and seamless autoscaling. The platform is engineered for large-scale workloads, allowing users to scale to hundreds of GPUs, pay only for what they use, and deploy functions to the cloud in seconds without the need for YAML or Dockerfiles. Modal also provides features for job scheduling, web endpoints, observability, and security compliance.

For similar tasks

Rationale

Rationale is a cutting-edge decision-making AI tool that leverages the power of the latest GPT technology and in-context learning. It is designed to assist users in making informed decisions by providing valuable insights and recommendations based on the data provided. With its advanced algorithms and machine learning capabilities, Rationale aims to streamline the decision-making process and enhance overall efficiency.

Talpa.ai

Talpa.ai is an AI-powered platform that offers advanced solutions for data analytics and automation. The platform leverages cutting-edge artificial intelligence technologies to provide businesses with valuable insights and streamline their operations. Talpa.ai helps organizations make data-driven decisions, optimize processes, and enhance overall efficiency. With its user-friendly interface and powerful features, Talpa.ai is a reliable partner for businesses looking to harness the power of AI for growth and success.

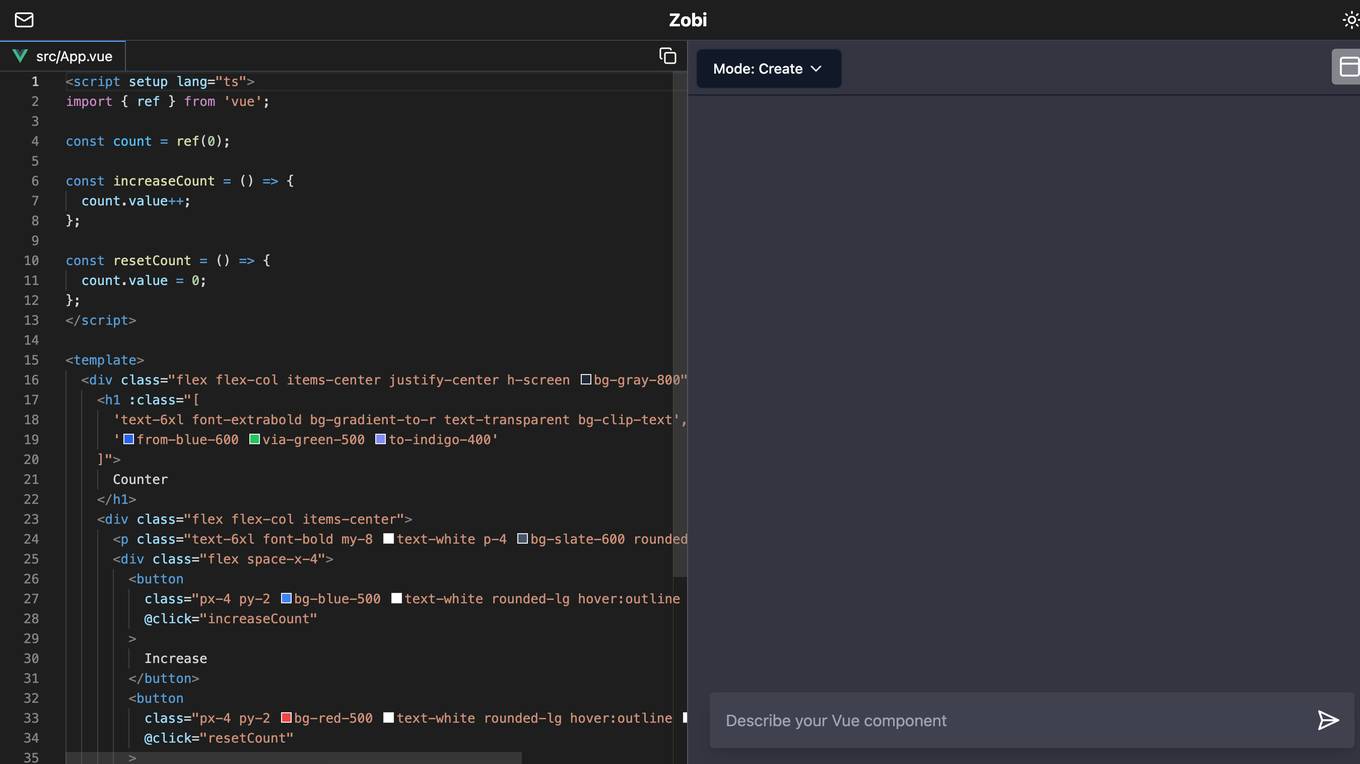

Zobi

Zobi is a web application built around a counter mode: users can increment and reset the count using the provided functions. The interface has a modern, user-friendly layout that makes the application easy to interact with.

Unlearn.ai

Unlearn.ai is an AI-powered platform that streamlines clinical trials by leveraging digital twins of patients. The platform replaces traditional siloed workflows with a unified workspace for trial design, planning, and analysis. By utilizing digital twins, data, and AI, Unlearn.ai helps teams achieve alignment faster, test assumptions earlier, and move forward with confidence in clinical development.

Promptly

Promptly is a generative AI platform designed for enterprises to build custom AI agents, applications, and chatbots without any coding experience. The platform allows users to seamlessly integrate their own data and GPT-powered models, supporting a wide variety of data sources. With features like model chaining, developer-friendly tools, and collaborative app building, Promptly empowers teams to quickly prototype and scale AI applications for various use cases. The platform also offers seamless integrations with popular workflows and tools, ensuring limitless possibilities for AI-powered solutions.

BlockSurvey

BlockSurvey is an AI-powered survey assistant that offers a comprehensive platform for creating, analyzing, and optimizing surveys with advanced AI capabilities. It prioritizes user data privacy and security, providing end-to-end encryption and compliance with industry standards. The platform features AI survey creation, analysis, thematic analysis, sentiment analysis, adaptive questioning, and sample response generation. It also offers data encoding, survey optimization, secure surveys, anonymous surveys, and customization options. BlockSurvey caters to various industries and functions, offering privacy-first surveys with zero-knowledge and decentralized capabilities.

Baseboard

Baseboard is an AI tool designed to help users generate insights from their data quickly and efficiently. With Baseboard, users can create visually appealing charts and visualizations for their websites or publications with the assistance of an AI-powered designer. The tool aims to streamline the data visualization process and provide users with valuable insights to make informed decisions.

Gestualy

Gestualy is an AI tool designed to measure and improve customer satisfaction and mood quickly and easily through gestures. It eliminates the need for cumbersome satisfaction surveys by letting customers or guests respond with gestures, and it uses artificial intelligence to support business decisions. Gestualy generates statistical reports for businesses, covering satisfaction levels, gender, mood, and age, while ensuring data protection and privacy compliance. It offers touchless interaction, immediate feedback, anonymized reports, and services such as gesture recognition, facial analysis, gamification, and alert systems.

Strategy-First AI

Strategy-First AI is an AI tool designed to help businesses elevate their brand using artificial intelligence technology. The tool focuses on implementing strategic AI solutions to enhance brand performance and competitiveness in the market. With a user-friendly interface and advanced AI algorithms, Strategy-First AI provides businesses with valuable insights and recommendations to optimize their branding strategies and achieve business goals.

iListingAI

iListingAI is the UK's leading inventory software designed to streamline property inspection reports using AI technology. The application offers a user-friendly interface that combines vision, voice, and video to generate professional and legally compliant inventories 10x faster. With features like AI analysis, instant report generation, voice-first workflow, and ghost mode comparison, iListingAI revolutionizes the property inspection process for independent clerks and agencies.

Microsoft Azure

Microsoft Azure is a cloud computing service that offers a wide range of products and solutions for businesses and developers. It provides services such as databases, analytics, compute, containers, hybrid cloud, AI, application development, and more. Azure aims to help organizations innovate, modernize, and scale their operations by leveraging the power of the cloud. With a focus on flexibility, performance, and security, Azure is designed to support a variety of workloads and use cases across different industries.

Wizu

Wizu is an AI-powered platform that offers conversational surveys to enhance customer feedback. It helps businesses collect meaningful feedback, analyze AI-powered insights, and take clear actions based on the data. The platform utilizes AI technology to drive engagement, deliver better response rates, and provide richer insights for businesses to make data-driven decisions.

Lexum.ai

Lexum.ai is an AI-powered legal research and summaries tool designed to assist legal professionals in conducting efficient and accurate legal research. The tool utilizes advanced artificial intelligence algorithms to analyze vast amounts of legal data and provide users with comprehensive summaries and insights. By leveraging cutting-edge technology, Lexum.ai aims to streamline the legal research process and enhance the productivity of legal practitioners.

TimeToTok

TimeToTok is an AI Copilot and Agent designed to help creators grow on TikTok. It uses LLM technology, similar to GPT, to analyze large volumes of TikTok data and provide AI-driven insights that help creators improve their content, engagement, and monetization. The platform offers personalized growth strategies, viral content ideas, video optimization suggestions, competitor tracking, and 24/7 support to enhance users' TikTok presence.

Chatsheet

Chatsheet is an AI tool that revolutionizes the way spreadsheets are used. It leverages artificial intelligence to automate data entry, analysis, and visualization tasks, making spreadsheet management more efficient and intuitive. With Chatsheet, users can easily create, edit, and analyze data in a collaborative and interactive environment. The tool offers advanced features such as predictive analytics, natural language processing, and smart data suggestions to streamline decision-making processes. Chatsheet is designed to simplify complex data handling tasks and empower users to make data-driven decisions with ease.

Socialvar

Socialvar Ltd is a leading marketing platform offering a full-stack social media solution, email and SMS marketing services, and WhatsApp automation. The platform helps businesses automate their marketing efforts, reach more customers, and drive sales by simplifying social media post scheduling, email campaigns, and SMS marketing. With user-friendly interfaces and flexible pricing plans, Socialvar caters to businesses of all sizes, providing tools for efficient communication and customer engagement.

ChatCSV

ChatCSV is your personal data analyst that allows you to interact with your spreadsheets in a conversational manner. Simply upload a CSV file and start asking questions to get insights through visualizations. It is designed to assist users across various industries such as retail, finance, banking, marketing, and more, making data analysis more accessible and intuitive.

Lime

Lime is an AI-powered data research assistant that helps users with data research tasks. It offers advanced capabilities to streamline the data research process, providing valuable insights and recommendations. Lime is designed to assist users in making informed decisions based on data analysis and research findings. With its intuitive interface and powerful algorithms, Lime simplifies complex data research tasks and enhances productivity. Whether you are a business professional, researcher, or student, Lime can be a valuable tool to support your data research needs.

OpenAI

OpenAI is an artificial intelligence research laboratory consisting of the for-profit OpenAI LP and the non-profit OpenAI Inc. The organization focuses on creating and promoting friendly AI for the benefit of humanity. OpenAI conducts research in the field of AI and aims to ensure that artificial general intelligence benefits all of humanity. The organization is known for its research in natural language processing, reinforcement learning, and other areas of AI. OpenAI also develops and releases AI models and tools to advance the field of artificial intelligence.

Airwiz

Airwiz is an AI data analyst tool designed to revolutionize the data analysis experience for Airtable users. It offers intuitive AI data analysis without the need for coding skills, providing instant, actionable insights by simply asking questions. Airwiz seamlessly integrates with Airtable, unlocking Python-level data insights and empowering professionals to make informed decisions effortlessly. The tool simplifies complex data analysis tasks, making it a must-have for Operations Coordinators, Financial Analysts, and Product Managers.

Synthreo

Synthreo is an AI tool that empowers businesses to streamline operations, reduce costs, and drive growth through intelligent AI agents. It provides cutting-edge AI agent solutions that automate routine tasks, enhance decision-making, and create seamless collaboration between human teams and digital labor. Synthreo offers transformative advantages for businesses of all sizes, enabling operational efficiency and strategic growth. The platform implements advanced security measures, complies with industry standards, and upholds ethical AI usage. Businesses can leverage AI-powered digital labor to achieve unprecedented efficiency, innovation, and growth.

Rapid Editor

Rapid Editor is an advanced mapping tool that revolutionizes map editing by integrating cutting-edge technology and authoritative geospatial open data. It empowers OpenStreetMap mappers of all levels to quickly make accurate and fresh edits to maps. The tool saves effort by tapping into open data and AI-predicted features to draw map geometry, provides AI-analyzed satellite imagery for a high-level overview of unmapped areas, and displays open map data and machine learning detections in an intuitive user interface. Rapid Editor is designed to help map the world efficiently and is supported by a strong community of humanitarian and community groups.

RestoGPT AI

RestoGPT AI is a Restaurant Marketing and Sales Platform designed to help local restaurants streamline their online ordering and delivery processes. It acts as an AI employee that manages the entire online business, from building a branded website to customer database management, order processing, delivery dispatch, menu maintenance, customer support, and data-driven marketing campaigns. The platform aims to increase customer retention, generate more direct orders, and improve overall efficiency in restaurant operations.

RTutor

RTutor is an AI tool developed by Orditus LLC that leverages OpenAI's large language models to translate natural language into R or Python code for data analysis. Users can upload data in various formats, ask questions, and receive results in seconds. The tool allows for data exploration, basic plots, and model customization. RTutor is designed for traditional statistical data analysis, where rows represent observations and columns represent variables, and it offers a user-friendly chat interface for analyzing data. The tool is free for non-profit organizations, with licensing required for commercial use.

For similar jobs

nunu.ai

nunu.ai is an AI-powered platform designed to revolutionize game testing by leveraging AI agents to conduct end-to-end tests at scale. By automating repetitive tasks, the platform significantly reduces manual QA costs for game studios. With features like human-like testing, multi-platform support, and enterprise-grade security, nunu.ai offers a comprehensive solution for game developers seeking efficient and reliable testing processes.

XenonStack

XenonStack is an AI application that offers a reasoning foundry for agentic enterprises. It provides a unified reasoning foundation that enables seamless orchestration, analytics, infrastructure, and trust across intelligent ecosystems. The platform includes several AI tools: Akira AI for reasoning and agent orchestration, ElixirData for agentic analytics intelligence, NexaStack for agentic infrastructure automation, MetaSecure for trust, compliance, and defense, and Neural AI for agentic intelligence and autonomous innovation. It also offers pre-built autonomous agents for domain-specific intelligence, seamless integrations, and governed enterprise deployment.

Promptly

Promptly is a generative AI platform designed for enterprises to build custom AI agents, applications, and chatbots without any coding experience. The platform allows users to seamlessly integrate their own data and GPT-powered models, supporting a wide variety of data sources. With features like model chaining, developer-friendly tools, and collaborative app building, Promptly empowers teams to quickly prototype and scale AI applications for various use cases. The platform also offers seamless integrations with popular workflows and tools, ensuring limitless possibilities for AI-powered solutions.

Agentic AI

Agentic AI is a platform offering domain-specialized AI agents that aim to drive enterprise-grade cost efficiency and operational turnaround and to unlock valuation multiples with defensible IP. It focuses on driving innovation, efficiency, and growth through agentic AI for intelligent execution. The platform supports a structural upgrade in how work gets done, shifting from legacy, manual workflows to intelligent, self-improving systems. It is designed for secure, scalable transformation tailored to specific domains, data, and workflows.

Google Colab Copilot

Google Colab Copilot is an AI tool that integrates the GitHub Copilot functionality into Google Colab, allowing users to easily generate code suggestions and improve their coding workflow. By following a simple setup guide, users can start using the tool to enhance their coding experience and boost productivity. With features like code generation, auto-completion, and real-time suggestions, Google Colab Copilot is a valuable tool for developers looking to streamline their coding process.

Kapa.ai

Kapa.ai is an AI tool that builds accurate AI agents from technical documentation and various other sources. It helps deploy AI assistants across support, documentation, and internal teams in a matter of hours. Trusted by over 200 leading companies with technical products, Kapa.ai offers pre-built integrations, customer results, and an analytics platform to track user questions and content gaps. The tool focuses on providing grounded answers, connecting existing sources, and ensuring data security and compliance.

MARZ

MARZ is a technology and VFX company specializing in feature-film-quality visual effects for TV productions. With a focus on innovation and proprietary AI solutions, MARZ aims to deliver outstanding VFX on fast timelines while remaining affordable for TV clients. The company completed 128 projects in its first 4 years, has received 2 VES nominations and 2 Emmy nominations, and has a team of 260 staff, including 55 engineers, researchers, and technology experts.

Weaviate

Weaviate is an AI tool designed to empower AI builders to design, build, and ship complete AI experiences. It provides a foundation for search, retrieval augmented generation, and agentic AI. Weaviate offers production-ready AI applications, faster deployment, and seamless model integration. With billion-scale architecture and enterprise-ready deployment options, Weaviate helps AI builders scale seamlessly and meet enterprise requirements. The platform offers AI-first features under one roof, enabling users to write less custom code and build AI apps efficiently.

PaperClip

PaperClip is an AI tool designed to help users keep track of their daily AI paper reviews. It allows users to retain details from papers in machine learning, computer vision, and natural language processing. The tool offers an extension for easily retrieving important findings from AI research papers, ML blog posts, and news. PaperClip's AI runs locally, ensuring data privacy by not sending any information to external servers. Users can save and index their findings locally, with offline support for searching even without an internet connection. The tool also lets users clear their data by resetting saved bits in one click.

cto.new

cto.new is a completely free AI code agent that plans and ships code using the best AI models. It seamlessly integrates with various developer tools without the need for a credit card or API key. The platform is designed to assist developers in efficiently completing tasks, finding and fixing bugs, and working on tickets. Trusted by teams, cto.new aims to streamline the coding process by leveraging AI technology.

Pythagora

Pythagora is the world's first all-in-one AI development platform that allows users to build production apps quickly and efficiently. With Pythagora, users can go from prompt to production seamlessly, with frontend development in minutes and backend development in hours. The platform offers a complete technical stack, smart inline code review, one-click deployment, and full code ownership, making app development faster and smarter.

Questflow

Questflow is an AI-powered platform that offers a range of products to automate tasks, gather user feedback, design and deploy AI agents, and track on-chain transactions in real-time. It empowers teams and innovators worldwide by providing tools to streamline processes and enhance productivity. With a focus on user feedback and continuous improvement, Questflow aims to revolutionize the way businesses operate in the digital age.

Isomeric

Isomeric is an AI tool that utilizes artificial intelligence to semantically understand unstructured text and extract specific data. It transforms messy, unstructured text into machine-readable JSON, enabling users to extract insights, process data, deliver results, and more. From web scraping to browser extensions to general information extraction, Isomeric helps users scale their data gathering pipeline efficiently.

Vectara

Vectara is a conversational search API platform designed for developers, offering best-in-class retrieval and summarization capabilities. It showcases conversational search capabilities and allows users to ask questions about the news, filter by source, and explore various topics. Vectara also supports personalized data queries and offers a free trial for easy access. The platform is built with grounded generation to minimize hallucinations, providing reliable and accurate results for users.

ThirdAI

ThirdAI is an AI platform that offers a production-ready solution for building and deploying AI applications quickly and efficiently. It provides advanced AI/GenAI technology that can run on any infrastructure, reducing barriers to delivering production-grade AI solutions. With features like enterprise SSO, built-in models, no-code interface, and more, ThirdAI empowers users to create AI applications without the need for specialized GPU servers or AI skills. The platform covers the entire workflow of building AI applications end-to-end, allowing for easy customization and deployment in various environments.

AI SDK

The AI SDK is a free open-source library designed to empower developers to build AI-powered products. It offers a unified provider API, generative UI capabilities, framework-agnostic support, and streaming AI responses. The SDK has received high praise from developers for its ease of use, speed of development, and comprehensive documentation.

AnyAPI

AnyAPI is an AI tool that allows users to easily add AI features to their products in minutes. With the ability to craft the perfect GPT-3 prompt using A/B testing, users can quickly generate a live API endpoint to power their next AI feature. The platform offers a range of use cases, including turning emails into tasks, suggesting replies, and accessing plain text JSON. AnyAPI is designed to streamline the integration of AI capabilities into various products and services, offering a user-friendly experience for developers and businesses alike.

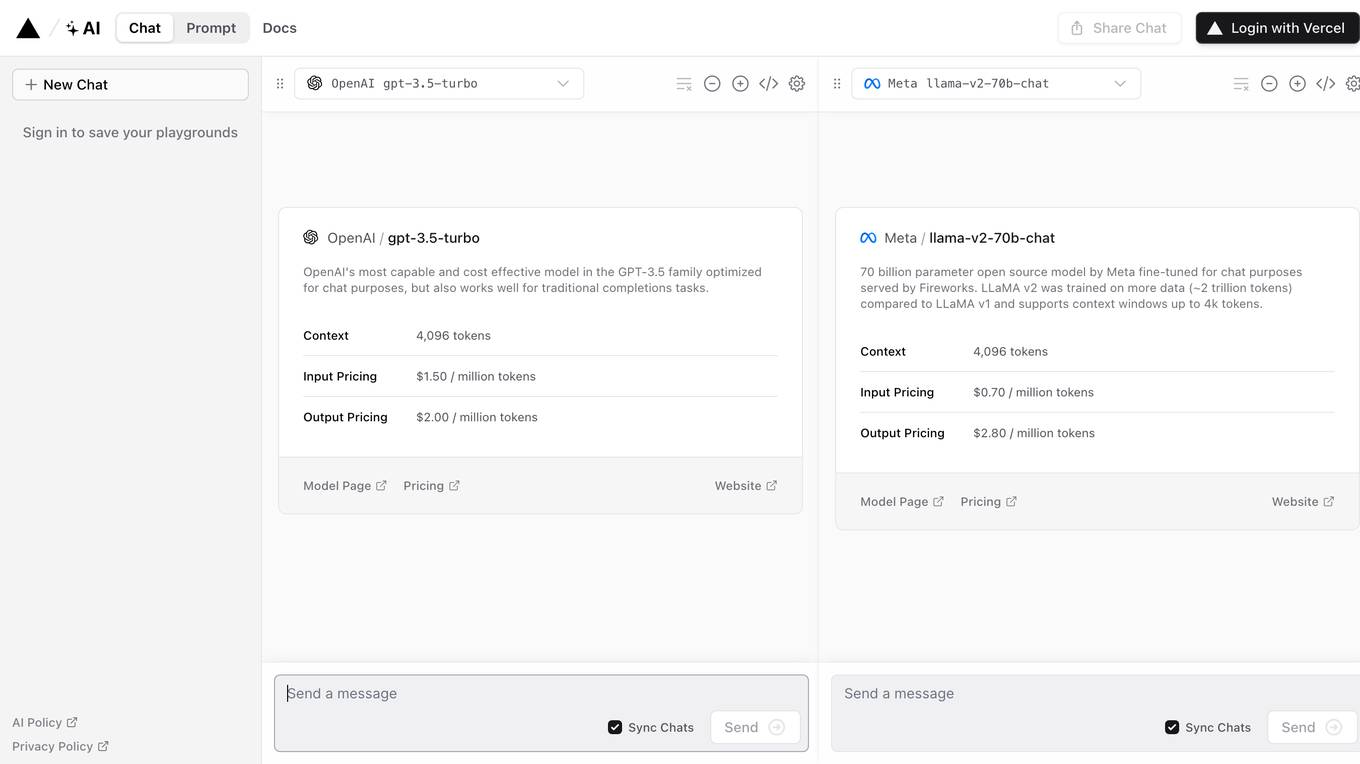

Rawbot

Rawbot is an AI model comparison tool designed to simplify the selection process by enabling users to identify and understand the strengths and weaknesses of various AI models. It allows users to compare AI models based on performance optimization, strengths and weaknesses identification, customization and tuning, cost and efficiency analysis, and informed decision-making. Rawbot is a user-friendly platform that caters to researchers, developers, and business leaders, offering a comprehensive solution for selecting the best AI models tailored to specific needs.

Convai

Convai is a Conversational AI platform that enables users to create intelligent characters with human-like conversation capabilities for games and virtual world applications. It offers an easy-to-use interface to design characters, connect them to assets, and engage in open-ended voice-based conversations. The platform focuses on enhancing user experiences in gaming, learning, and entertainment by providing AI-guided training applications and brand agents for various industries. Convai aims to revolutionize the way users interact with virtual worlds through cutting-edge Generative Conversational AI technology.

OpenAI

OpenAI is an artificial intelligence research laboratory consisting of the for-profit OpenAI LP and the non-profit OpenAI Inc. The organization focuses on creating and promoting friendly AI for the benefit of humanity. OpenAI conducts research in the field of AI and aims to ensure that artificial general intelligence benefits all of humanity. The organization is known for its research in natural language processing, reinforcement learning, and other areas of AI. OpenAI also develops and releases AI models and tools to advance the field of artificial intelligence.

Signature AI

Signature is a private generative AI platform designed for brands and enterprises to enhance content creation capabilities. It offers bespoke AI models tailored to a brand's output, mimicking the processes of creative teams. The platform ensures privacy, safety, and security by deploying locally hosted Foundation Models and transparent licensing frameworks. With a focus on scalability, flexibility, and excellence, Signature enables rapid ideation, prototyping, and full-scale production. It optimizes resource efficiency and cost by streamlining production workflows through AI, reducing operational overhead and traditional photoshoot costs.

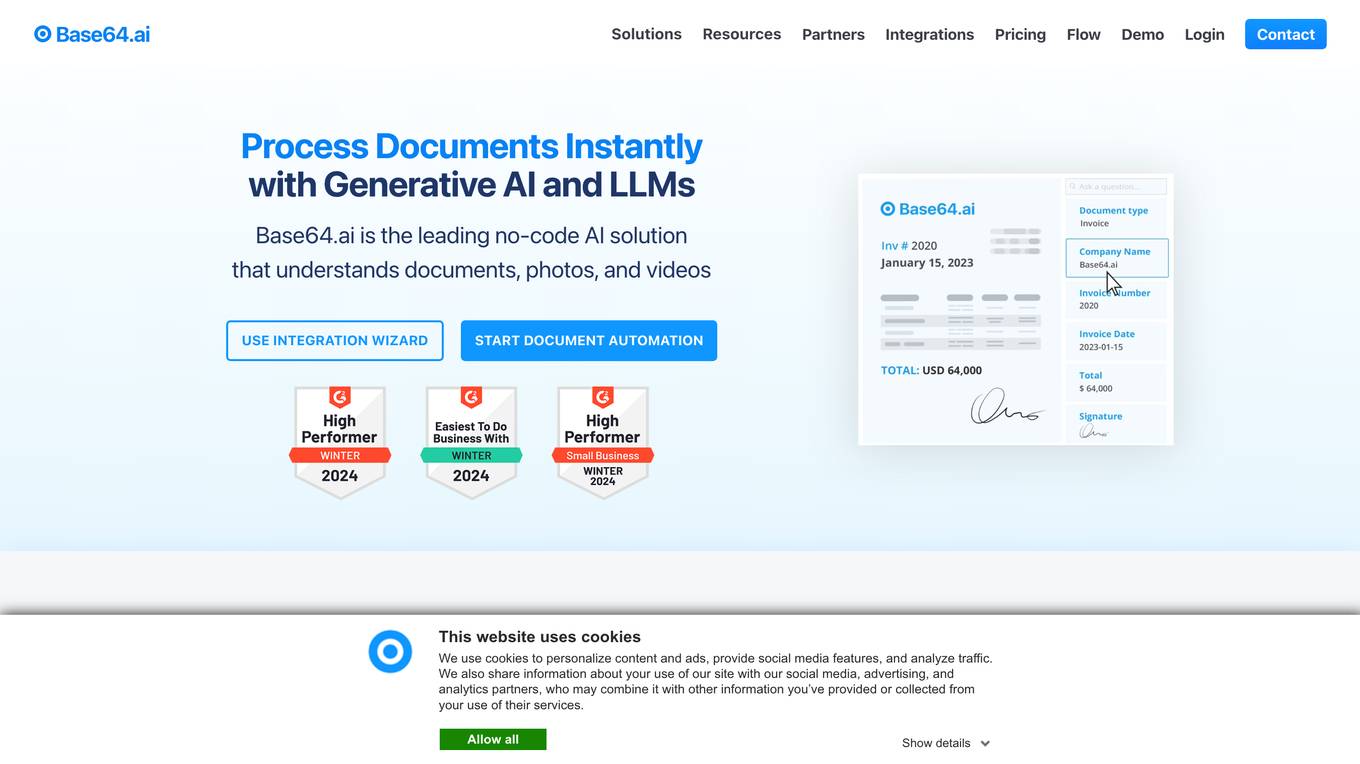

Base64.ai

Base64.ai is an AI-powered document intelligence platform that offers a comprehensive solution for document processing and data extraction. It leverages advanced AI technology to automate business decisions, improve efficiency and accuracy, and support digital transformation. Base64.ai provides features such as GenAI models, Semantic AI, Custom Model Builder, Question & Answer capabilities, and Large Action Models to streamline document processing. The platform supports over 50 file formats and offers integrations with scanners, RPA platforms, and third-party software.

GGPredict.io

GGPredict.io is an AI-powered tool designed to help Counter-Strike: Global Offensive (CS:GO) players improve their skills through personalized challenges and analytics. The platform offers detailed performance analysis, cutting-edge maps for practice, dynamic leaderboards, and AI-led tools to track progress and identify areas for improvement. With endorsements from professional players and coaches, GGPredict.io aims to help players of all levels enhance their gameplay and reach their full potential.

Voqal

Voqal is an intelligent voice coding assistant designed to provide natural speech programming capabilities for software developers. It offers customization options, context extensions, and access to various compute providers. Voqal simplifies coding through intuitive modes and allows developers to code using plain-spoken language. The tool aims to enhance productivity and efficiency in software development by leveraging AI technology.