mini-swe-agent

The 100 line AI agent that solves GitHub issues or helps you in your command line. Radically simple, no huge configs, no giant monorepo—but scores 68% on SWE-bench verified!

Stars: 1721

The mini-swe-agent is a lightweight AI agent designed to solve GitHub issues and more. It is a minimal tool with just 100 lines of Python code, suitable for researchers, developers, and engineers looking for a simple, powerful, and deployable solution. The agent is built to be convenient, deployable in various environments, and tested for performance. It focuses on providing a hackable tool that is simple, convenient, and flexible, without unnecessary bloat or complexity. Users can run the agent with any model using bash, making it ideal for benchmarking, fine-tuning, and reinforcement learning tasks.

README:

📣 New blogpost: Randomly switching between GPT-5 and Sonnet 4 boosts performance

In 2024, SWE-bench & SWE-agent helped kickstart the coding agent revolution.

We now ask: What if SWE-agent was 100x smaller, and still worked nearly as well?

mini is for

- Researchers who want to benchmark, fine-tune or RL without assumptions, bloat, or surprises

- Developers who like their tools like their scripts: short, sharp, and readable

- Engineers who want something trivial to sandbox & to deploy anywhere

Here are some details:

- Minimal: Just 100 lines of python (+100 total for env, model, script) — no fancy dependencies!

- Powerful: Resolves 68% of GitHub issues in the SWE-bench verified benchmark (leaderboard).

- Convenient: Comes with UIs that turn this into your daily dev swiss army knife!

- Deployable: In addition to local envs, you can use docker, podman, singularity, apptainer, and more

- Tested:

- Cutting edge: Built by the Princeton & Stanford team behind SWE-bench and SWE-agent.

More motivation (for research)

SWE-agent jump-started the development of AI agents in 2024. Back then, we placed a lot of emphasis on tools and special interfaces for the agent. However, one year later, as LMs have become more capable, a lot of this is not needed at all to build a useful agent! In fact, mini-SWE-agent

- Does not have any tools other than bash — it doesn't even use the tool-calling interface of the LMs. This means that you can run it with literally any model. When running in sandboxed environments you also don't need to take care of installing a single package — all it needs is bash.

- Has a completely linear history — every step of the agent just appends to the messages and that's it. So there's no difference between the trajectory and the messages that you pass on to the LM. Great for debugging & fine-tuning.

- Executes actions with subprocess.run: every action is completely independent (as opposed to keeping a stateful shell session running). This makes it trivial to execute the actions in sandboxes (literally just switch out subprocess.run with docker exec) and to scale up effortlessly. Seriously, this is a big deal, trust me.

This makes it perfect as a baseline system and for a system that puts the language model (rather than the agent scaffold) in the middle of our attention.

You can see the results on the SWE-bench (bash only) leaderboard, which evaluates the performance of different LMs with mini.
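To make the "every action is completely independent" point concrete, here is a minimal sketch of stateless action execution. The helper names `run_local` and `docker_command` are hypothetical and not taken from the mini-swe-agent code base; the sketch only assumes a POSIX shell and shows how the same command string could be routed to a local process or wrapped for `docker exec`.

```python
import subprocess
from typing import Dict, List, Optional


def run_local(command: str, cwd: Optional[str] = None, timeout: int = 30) -> Dict[str, object]:
    """Run one action in a fresh shell; nothing carries over to the next call."""
    result = subprocess.run(
        command,
        shell=True,                # hand the raw command string to the shell
        cwd=cwd,
        text=True,
        stdout=subprocess.PIPE,
        stderr=subprocess.STDOUT,  # merge stderr into stdout, one observation per action
        timeout=timeout,
    )
    return {"returncode": result.returncode, "output": result.stdout}


def docker_command(container_id: str, command: str) -> List[str]:
    """Build the argv that would run the same action inside a container."""
    return ["docker", "exec", container_id, "bash", "-c", command]


if __name__ == "__main__":
    # Variables exported in one action are gone in the next one.
    run_local("export MINI_DEMO_VAR=1")
    print(run_local('echo "[$MINI_DEMO_VAR]"')["output"])
    print(docker_command("my-container", "ls -la"))
```

Because no shell session has to be kept alive, moving to a sandbox amounts to passing a different argv to `subprocess.run`, which is what the bullet above describes.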

More motivation (as a tool)

Some agents are overfitted research artifacts. Others are UI-heavy frontend monsters.

mini wants to be a hackable tool, not a black box.

- Simple enough to understand at a glance

- Convenient enough to use in daily workflows

- Flexible to extend

Unlike other agents (including our own swe-agent), it is radically simpler, because it:

- Does not have any tools other than bash — it doesn't even use the tool-calling interface of the LMs. Instead of implementing custom tools for every specific thing the agent might want to do, the focus is fully on the LM utilizing the shell to its full potential. Want it to do something specific like opening a PR? Just tell the LM to figure it out rather than spending time to implement it in the agent.

- Executes actions with subprocess.run: every action is completely independent (as opposed to keeping a stateful shell session running). This is a big deal for the stability of the agent, trust me.

- Has a completely linear history: every step of the agent just appends to the messages that are passed to the LM in the next step and that's it. This is great for debugging and understanding what the LM is prompted with.
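The linear-history idea can be sketched in a few lines. This is an illustrative loop, not the project's actual implementation: `run_agent`, `extract_command`, the `query_model` callable, and the convention that the LM wraps its command in a fenced bash block are all assumptions made for this example.

```python
import re
import subprocess
from typing import Callable, Dict, List, Optional

Message = Dict[str, str]
FENCE = "`" * 3  # built programmatically so this example contains no nested fence


def extract_command(reply: str) -> Optional[str]:
    """Return the first fenced bash command in the reply, or None if there is none."""
    pattern = re.escape(FENCE) + r"bash\n(.*?)\n" + re.escape(FENCE)
    match = re.search(pattern, reply, re.DOTALL)
    return match.group(1).strip() if match else None


def run_agent(task: str, query_model: Callable[[List[Message]], str],
              max_steps: int = 10) -> List[Message]:
    """Append-only loop: the returned list is both the trajectory and the prompt."""
    messages: List[Message] = [{"role": "user", "content": task}]
    for _ in range(max_steps):
        reply = query_model(messages)  # the LM sees the full history, unmodified
        messages.append({"role": "assistant", "content": reply})
        command = extract_command(reply)
        if command is None:            # no command means the model is done
            break
        result = subprocess.run(command, shell=True, text=True,
                                stdout=subprocess.PIPE, stderr=subprocess.STDOUT)
        messages.append({"role": "user", "content": result.stdout})
    return messages


if __name__ == "__main__":
    # A scripted stand-in for a real LM, used only to exercise the loop.
    scripted = iter([FENCE + "bash\necho hi\n" + FENCE, "All done."])
    for message in run_agent("Say hi", lambda history: next(scripted)):
        print(message["role"], "->", message["content"].strip())
```

Since nothing is ever rewritten or summarized, saving `messages` to disk gives exactly what the LM was prompted with at each step.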

Should I use SWE-agent or mini-SWE-agent?

You should use mini-swe-agent if

- You want a quick command line tool that works locally

- You want an agent with a very simple control flow

- You want even faster, simpler & more stable sandboxing & benchmark evaluations

- You are doing FT or RL and don't want to overfit to a specific agent scaffold

You should use swe-agent if

- You need specific tools or want to experiment with different tools

- You want to experiment with different history processors

- You want very powerful yaml configuration without touching code

What you get with both

- Excellent performance on SWE-Bench

- A trajectory browser

| Simple UI (mini) | Visual UI (mini -v) |
| --- | --- |
| Batch inference | Trajectory browser |
| Python bindings | More in the docs |

Python bindings:

    agent = DefaultAgent(
        LitellmModel(model_name=...),
        LocalEnvironment(),
    )
    agent.run("Write a sudoku game")

Option 1: Install + run in virtual environment

    pip install uv && uvx mini-swe-agent [-v]
    # or
    pip install pipx && pipx ensurepath && pipx run mini-swe-agent [-v]

Option 2: Install in current environment

    pip install mini-swe-agent && mini [-v]

Option 3: Install from source

    git clone https://github.com/SWE-agent/mini-swe-agent.git
    cd mini-swe-agent
    pip install -e .
    mini [-v]

Read more in our documentation:

- Quick start guide

- More on mini and mini -v

- Global configuration

- Yaml configuration files

- Power up with the cookbook

- FAQ

- Contribute!

If you found this work helpful, please consider citing the SWE-agent paper in your work:

    @inproceedings{yang2024sweagent,
      title={{SWE}-agent: Agent-Computer Interfaces Enable Automated Software Engineering},
      author={John Yang and Carlos E Jimenez and Alexander Wettig and Kilian Lieret and Shunyu Yao and Karthik R Narasimhan and Ofir Press},
      booktitle={The Thirty-eighth Annual Conference on Neural Information Processing Systems},
      year={2024},
      url={https://arxiv.org/abs/2405.15793}
    }

Our other projects:

Alternative AI tools for mini-swe-agent

Similar Open Source Tools

anything-llm

AnythingLLM is a full-stack application that enables you to turn any document, resource, or piece of content into context that any LLM can use as references during chatting. This application allows you to pick and choose which LLM or Vector Database you want to use as well as supporting multi-user management and permissions.

deepchecks

Deepchecks is a holistic open-source solution for AI & ML validation needs, enabling thorough testing of data and models from research to production. It includes components for testing, CI & testing management, and monitoring. Users can install and use Deepchecks for testing and monitoring their AI models, with customizable checks and suites for tabular, NLP, and computer vision data. The tool provides visual reports, pythonic/json output for processing, and a dynamic UI for collaboration and monitoring. Deepchecks is open source, with premium features available under a commercial license for monitoring components.

open-autonomy

Open Autonomy is a framework for creating agent services that run as a multi-agent-system and offer enhanced functionalities on-chain. It enables executing complex operations like machine-learning algorithms in a decentralized, trust-minimized, transparent, and robust manner.

pebble

Pebbling is an open-source protocol for agent-to-agent communication, enabling AI agents to collaborate securely using Decentralised Identifiers (DIDs) and mutual TLS (mTLS). It provides a lightweight communication protocol built on JSON-RPC 2.0, ensuring reliable and secure conversations between agents. Pebbling allows agents to exchange messages safely, connect seamlessly regardless of programming language, and communicate quickly and efficiently. It is designed to pave the way for the next generation of collaborative AI systems, promoting secure and effortless communication between agents across different environments.

gptme

Personal AI assistant/agent in your terminal, with tools for using the terminal, running code, editing files, browsing the web, using vision, and more. A great coding agent that is general-purpose to assist in all kinds of knowledge work, from a simple but powerful CLI. An unconstrained local alternative to ChatGPT with 'Code Interpreter', Cursor Agent, etc. Not limited by lack of software, internet access, timeouts, or privacy concerns if using local models.

agent-zero

Agent Zero is a personal and organic AI framework designed to be dynamic, organically growing, and learning as you use it. It is fully transparent, readable, comprehensible, customizable, and interactive. The framework uses the computer as a tool to accomplish tasks, with no single-purpose tools pre-programmed. It emphasizes multi-agent cooperation, complete customization, and extensibility. Communication is key in this framework, allowing users to give proper system prompts and instructions to achieve desired outcomes. Agent Zero is capable of dangerous actions and should be run in an isolated environment. The framework is prompt-based, highly customizable, and requires a specific environment to run effectively.

Devon

Devon is an open-source pair programmer tool designed to facilitate collaborative coding sessions. It provides features such as multi-file editing, codebase exploration, test writing, bug fixing, and architecture exploration. The tool supports Anthropic, OpenAI, and Groq APIs, with plans to add more models in the future. Devon is community-driven, with ongoing development goals including multi-model support, plugin system for tool builders, self-hostable Electron app, and setting SOTA on SWE-bench Lite. Users can contribute to the project by developing core functionality, conducting research on agent performance, providing feedback, and testing the tool.

aegis-stack

Aegis Stack is a system for creating and evolving modular Python applications quickly, without the need for extensive testing or clean architecture. It allows users to go from idea to working prototype rapidly, using familiar tools. The stack includes a CLI, a built-in system dashboard called Overseer, and an optional conversational interface named Illiana. Users can start with basic components and add or remove features as needed, without being locked into initial choices. Aegis Stack aims to provide a flexible and efficient development environment for Python applications.

lanarky

Lanarky is a Python web framework designed for building microservices using Large Language Models (LLMs). It is LLM-first, fast, modern, supports streaming over HTTP and WebSockets, and is open-source. The framework provides an abstraction layer for developers to easily create LLM microservices. Lanarky guarantees zero vendor lock-in and is free to use. It is built on top of FastAPI and offers features familiar to FastAPI users. The project is now in maintenance mode, with no active development planned, but community contributions are encouraged.

DevoxxGenieIDEAPlugin

Devoxx Genie is a Java-based IntelliJ IDEA plugin that integrates with local and cloud-based LLM providers to aid in reviewing, testing, and explaining project code. It supports features like code highlighting, chat conversations, and adding files/code snippets to context. Users can modify REST endpoints and LLM parameters in settings, including support for cloud-based LLMs. The plugin requires IntelliJ version 2023.3.4 and JDK 17. Building and publishing the plugin is done using Gradle tasks. Users can select an LLM provider, choose code, and use commands like review, explain, or generate unit tests for code analysis.

TaskingAI

TaskingAI brings Firebase's simplicity to **AI-native app development**. The platform enables the creation of GPTs-like multi-tenant applications using a wide range of LLMs from various providers. It features distinct, modular functions such as Inference, Retrieval, Assistant, and Tool, seamlessly integrated to enhance the development process. TaskingAI’s cohesive design ensures an efficient, intelligent, and user-friendly experience in AI application development.

giselle

Giselle is an open source AI tool designed for agentic workflows, facilitating seamless collaboration between humans and AI. It offers cloud hosting with free agent time, self-hosting options, and a Vibe Cording Guide for using AI coding assistants. Giselle is suitable for developers and non-engineers alike, empowering users to leverage AI capabilities without extensive coding knowledge. The tool is actively developed, with a roadmap in progress, and welcomes contributions from the community under the Apache License Version 2.0.

AiR

AiR is an AI tool built entirely in Rust that delivers blazing speed and efficiency. It features accurate translation and seamless text rewriting to supercharge productivity. AiR is designed to assist non-native speakers by automatically fixing errors and polishing language to sound like a native speaker. The tool is under heavy development with more features on the horizon.

AgentGPT

AgentGPT is a platform that allows users to configure and deploy autonomous AI agents. Users can name their own custom AI and set it on any goal. The AI will think of tasks, execute them, and learn from the results to reach the goal. The platform provides a demo experience, automatic setup CLI, and a tech stack including Next.js, FastAPI, Prisma, TailwindCSS, Zod, and more. AgentGPT is designed to help users easily create and deploy AI agents for various tasks.

CopilotKit

CopilotKit is an open-source framework for building, deploying, and operating fully custom AI Copilots, including in-app AI chatbots, AI agents, and AI Textareas. It provides a set of components and entry points that allow developers to easily integrate AI capabilities into their applications. CopilotKit is designed to be flexible and extensible, so developers can tailor it to their specific needs. It supports a variety of use cases, including providing app-aware AI chatbots that can interact with the application state and take action, drop-in replacements for textareas with AI-assisted text generation, and in-app agents that can access real-time application context and take action within the application.

For similar tasks

arena-hard-auto

Arena-Hard-Auto-v0.1 is an automatic evaluation tool for instruction-tuned LLMs. It contains 500 challenging user queries. The tool prompts GPT-4-Turbo as a judge to compare models' responses against a baseline model (default: GPT-4-0314). Arena-Hard-Auto employs an automatic judge as a cheaper and faster approximator to human preference. It has the highest correlation and separability to Chatbot Arena among popular open-ended LLM benchmarks. Users can evaluate their models' performance on Chatbot Arena by using Arena-Hard-Auto.

max

The Modular Accelerated Xecution (MAX) platform is an integrated suite of AI libraries, tools, and technologies that unifies commonly fragmented AI deployment workflows. MAX accelerates time to market for the latest innovations by giving AI developers a single toolchain that unlocks full programmability, unparalleled performance, and seamless hardware portability.

ai-hub

AI Hub Project aims to continuously test and evaluate mainstream large language models, while accumulating and managing various effective model invocation prompts. It has integrated all mainstream large language models in China, including OpenAI GPT-4 Turbo, Baidu ERNIE-Bot-4, Tencent ChatPro, MiniMax abab5.5-chat, and more. The project plans to continuously track, integrate, and evaluate new models. Users can access the models through REST services or Java code integration. The project also provides a testing suite for translation, coding, and benchmark testing.

long-context-attention

Long-Context-Attention (YunChang) is a unified sequence parallel approach that combines the strengths of DeepSpeed-Ulysses-Attention and Ring-Attention to provide a versatile and high-performance solution for long context LLM model training and inference. It addresses the limitations of both methods by offering no limitation on the number of heads, compatibility with advanced parallel strategies, and enhanced performance benchmarks. The tool is verified in Megatron-LM and offers best practices for 4D parallelism, making it suitable for various attention mechanisms and parallel computing advancements.

marlin

Marlin is a highly optimized FP16xINT4 matmul kernel designed for large language model (LLM) inference, offering close to ideal speedups up to batchsizes of 16-32 tokens. It is suitable for larger-scale serving, speculative decoding, and advanced multi-inference schemes like CoT-Majority. Marlin achieves optimal performance by utilizing various techniques and optimizations to fully leverage GPU resources, ensuring efficient computation and memory management.

MMC

This repository, MMC, focuses on advancing multimodal chart understanding through large-scale instruction tuning. It introduces a dataset supporting various tasks and chart types, a benchmark for evaluating reasoning capabilities over charts, and an assistant achieving state-of-the-art performance on chart QA benchmarks. The repository provides data for chart-text alignment, benchmarking, and instruction tuning, along with existing datasets used in experiments. Additionally, it offers a Gradio demo for the MMCA model.

Tiktoken

Tiktoken is a high-performance implementation focused on token count operations. It provides various encodings like o200k_base, cl100k_base, r50k_base, p50k_base, and p50k_edit. Users can easily encode and decode text using the provided API. The repository also includes a benchmark console app for performance tracking. Contributions in the form of PRs are welcome.

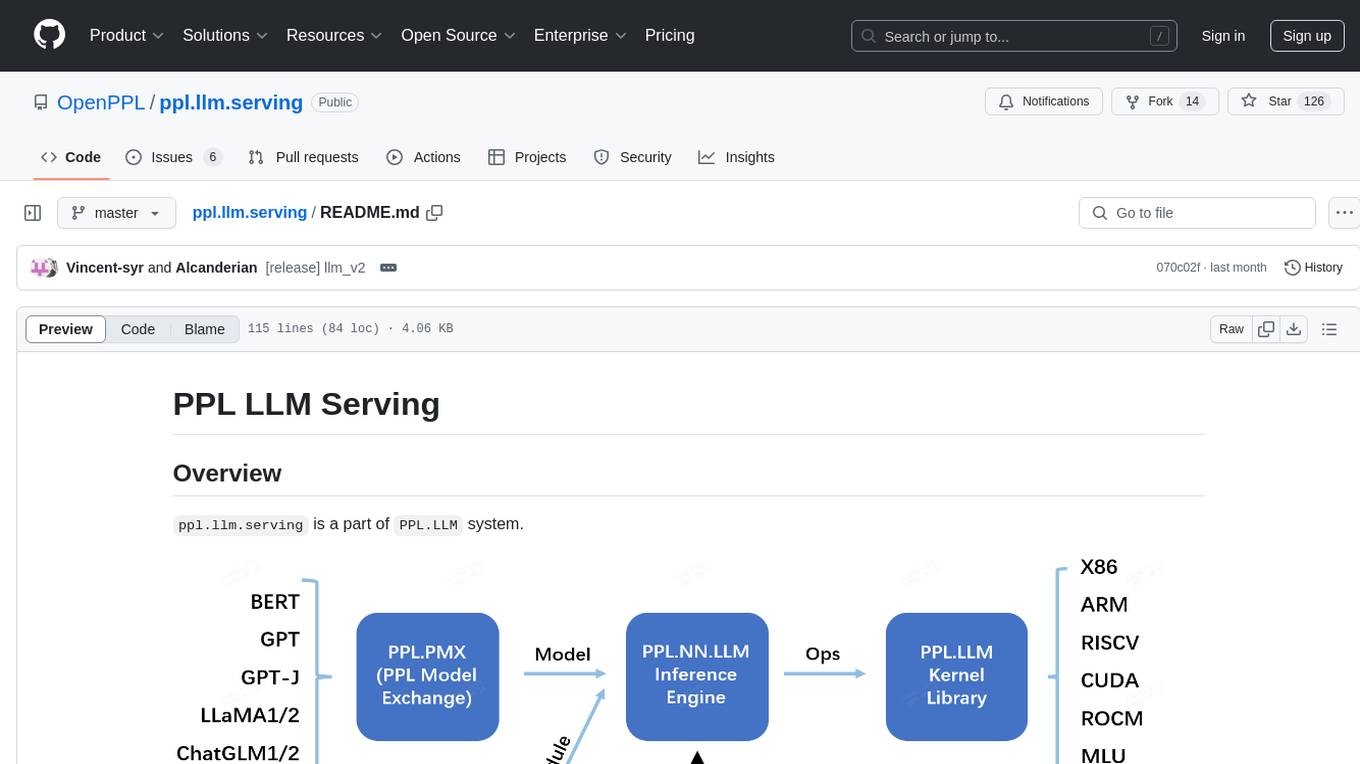

ppl.llm.serving

ppl.llm.serving is a serving component for Large Language Models (LLMs) within the PPL.LLM system. It provides a server based on gRPC and supports inference for LLaMA. The repository includes instructions for prerequisites, quick start guide, model exporting, server setup, client usage, benchmarking, and offline inference. Users can refer to the LLaMA Guide for more details on using this serving component.

For similar jobs

Pichome

PicHome is a powerful open-source cloud storage program that efficiently manages various types of files and excels in image and media file management. Its highlights include robust file sharing features and advanced AI-assisted management tools, providing users with a convenient and intelligent file management experience. The program offers diverse list modes, customizable file information display, enhanced quick file preview, advanced tagging, custom cover and preview images, multiple preview images, and multi-library management. Additionally, PicHome features strong file sharing capabilities, allowing users to share entire libraries, create personalized showcase web pages, and build complete data sharing websites. The AI-assisted management aspect includes AI file renaming, tagging, description writing, batch annotation, and file Q&A services, all aimed at improving file management efficiency. PicHome supports a wide range of file formats and can be applied in various scenarios such as e-commerce, gaming, design, development, enterprises, schools, labs, media, and entertainment institutions.

machine-learning-research

The 'machine-learning-research' repository is a comprehensive collection of resources related to mathematics, machine learning, deep learning, artificial intelligence, data science, and various scientific fields. It includes materials such as courses, tutorials, books, podcasts, communities, online courses, papers, and dissertations. The repository covers topics ranging from fundamental math skills to advanced machine learning concepts, with a focus on applications in healthcare, genetics, computational biology, precision health, and AI in science. It serves as a valuable resource for individuals interested in learning and researching in the fields of machine learning and related disciplines.

Awesome-TimeSeries-SpatioTemporal-LM-LLM

Awesome-TimeSeries-SpatioTemporal-LM-LLM is a curated list of Large (Language) Models and Foundation Models for Temporal Data, including Time Series, Spatio-temporal, and Event Data. The repository aims to summarize recent advances in Large Models and Foundation Models for Time Series and Spatio-Temporal Data with resources such as papers, code, and data. It covers various applications like General Time Series Analysis, Transportation, Finance, Healthcare, Event Analysis, Climate, Video Data, and more. The repository also includes related resources, surveys, and papers on Large Language Models, Foundation Models, and their applications in AIOps.

moon

Moon is a monitoring and alerting platform suitable for multiple domains, supporting various application scenarios such as cloud-native, Internet of Things (IoT), and Artificial Intelligence (AI). It simplifies operational work of cloud-native monitoring, boasts strong IoT and AI support capabilities, and meets diverse monitoring needs across industries. Capable of real-time data monitoring, intelligent alerts, and fault response for various fields.

DownEdit

DownEdit is a fast and powerful program for downloading and editing videos from platforms like TikTok, Douyin, and Kuaishou. It allows users to effortlessly grab videos, make bulk edits, and utilize advanced AI features for generating videos, images, and sounds in bulk. The tool offers features like video, photo, and sound editing, downloading videos without watermarks, bulk AI generation, and AI editing for content enhancement.

ai-trend-publish

AI TrendPublish is an AI-based trend discovery and content publishing system that supports multi-source data collection, intelligent summarization, and automatic publishing to WeChat official accounts. It features data collection from various sources, AI-powered content processing using DeepseekAI Together, key information extraction, intelligent title generation, automatic article publishing to WeChat official accounts with custom templates and scheduled tasks, notification system integration with Bark for task status updates and error alerts. The tool offers multiple templates for content customization and is built using Node.js + TypeScript with AI services from DeepseekAI Together, data sources including Twitter/X API and FireCrawl, and uses node-cron for scheduling tasks and EJS as the template engine.

llm.hunyuan.T1

Hunyuan-T1 is a cutting-edge large-scale hybrid Mamba reasoning model driven by reinforcement learning. It has been officially released as an upgrade to the Hunyuan Thinker-1-Preview model. The model showcases exceptional performance in deep reasoning tasks, leveraging the TurboS base and Mamba architecture to enhance inference capabilities and align with human preferences. With a focus on reinforcement learning training, the model excels in various reasoning tasks across different domains, showcasing superior abilities in mathematical, logical, scientific, and coding reasoning. Through innovative training strategies and alignment with human preferences, Hunyuan-T1 demonstrates remarkable performance in public benchmarks and internal evaluations, positioning itself as a leading model in the field of reasoning.

DownEdit

DownEdit is a fast and powerful program for downloading and editing videos from top platforms like TikTok, Douyin, and Kuaishou. Effortlessly grab videos from user profiles, make bulk edits throughout the entire directory with just one click. Advanced Chat & AI features let you download, edit, and generate videos, images, and sounds in bulk. Exciting new features are coming soon—stay tuned!