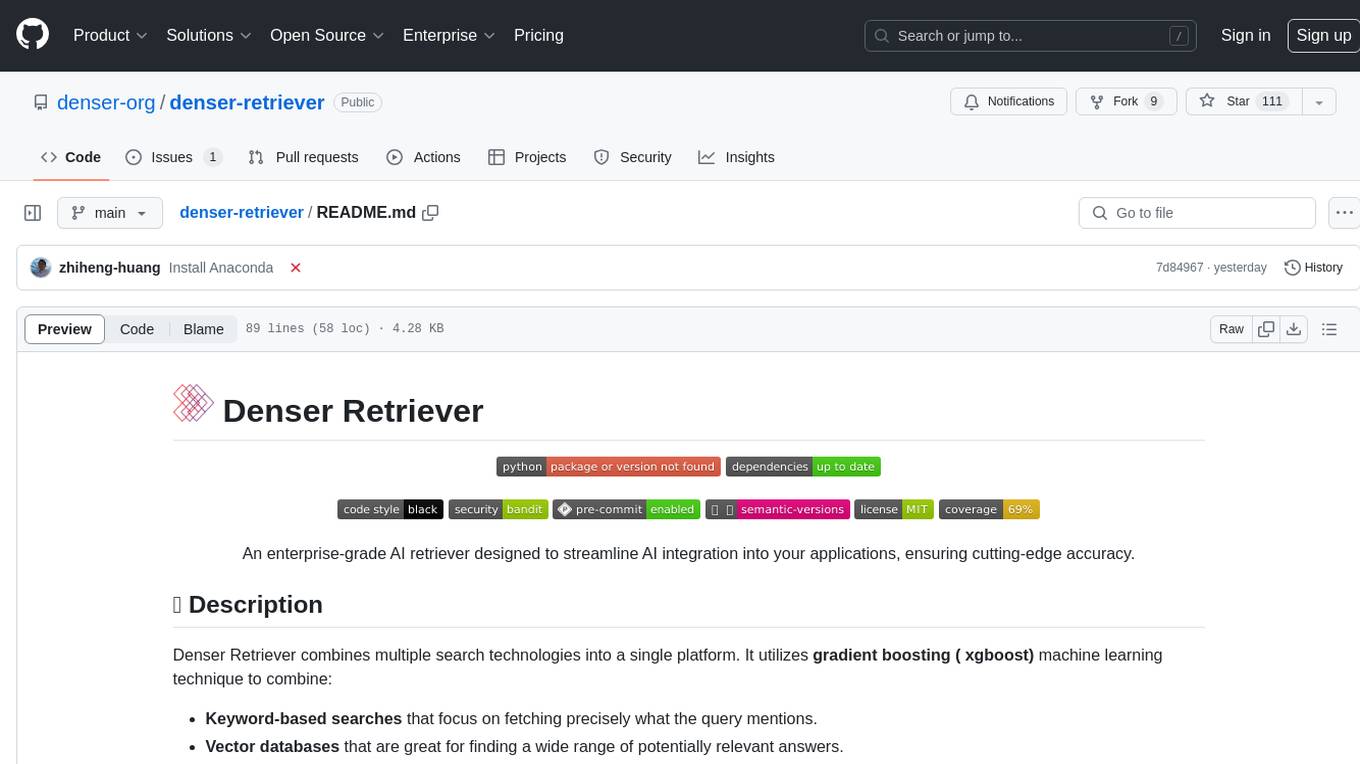

denser-retriever

An enterprise-grade AI retriever designed to streamline AI integration into your applications, ensuring cutting-edge accuracy.

Stars: 119

Denser Retriever is an enterprise-grade AI retriever designed to streamline AI integration into applications, combining keyword-based searches, vector databases, and machine learning rerankers using xgboost. It provides state-of-the-art accuracy on MTEB Retrieval benchmarking and supports various heterogeneous retrievers for end-to-end applications like chatbots and semantic search.

README:

An enterprise-grade AI retriever designed to streamline AI integration into your applications, ensuring cutting-edge accuracy.

Denser Retriever combines multiple search technologies into a single platform. It utilizes gradient boosting ( xgboost) machine learning technique to combine:

- Keyword-based searches that focus on fetching precisely what the query mentions.

- Vector databases that are great for finding a wide range of potentially relevant answers.

- Machine Learning rerankers that fine-tune the results to ensure the most relevant answers top the list.

Our experiments on MTEB datasets show that the combination of keyword search, vector search and a reranker via an xgboost model (denoted as ES+VS+RR_n) can significantly improve the vector search (VS) baseline.

The initial release of Denser Retriever provides the following features.

- Supporting heterogeneous retrievers such as keyword search, vector search, and ML model reranking

- Leveraging xgboost ML technique to effectively combine heterogeneous retrievers

- State-of-the-art accuracy on MTEB Retrieval benchmarking

- Demonstrating how to use Denser retriever to power an end-to-end applications such as chatbot and semantic search

We recommend installing Python via Anaconda, as we have received feedback about issues with Numpy installation when using the installer from https://www.python.org/downloads/. We are working on providing a solution to this problem. To install Denser Retriever, you can run:

pip install git+https://github.com/denser-org/denser-retriever.git#mainpoetry add git+https://github.com/denser-org/denser-retriever.git#mainThe official documentation is hosted on retriever.denser.ai. Click here to get started.

You can start developing Denser Retriever on your local machine.

See DEVELOPMENT.md for more details.

This project is licensed under the terms of the MIT license.

See LICENSE for more details.

@misc{denser-retriever,

author = {denser-org},

title = {An enterprise-grade AI retriever designed to streamline AI integration into your applications, ensuring cutting-edge accuracy.},

year = {2024},

publisher = {GitHub},

journal = {GitHub repository},

howpublished = {\url{https://github.com/denser-org/denser-retriever}}

}For Tasks:

Click tags to check more tools for each tasksFor Jobs:

Alternative AI tools for denser-retriever

Similar Open Source Tools

denser-retriever

Denser Retriever is an enterprise-grade AI retriever designed to streamline AI integration into applications, combining keyword-based searches, vector databases, and machine learning rerankers using xgboost. It provides state-of-the-art accuracy on MTEB Retrieval benchmarking and supports various heterogeneous retrievers for end-to-end applications like chatbots and semantic search.

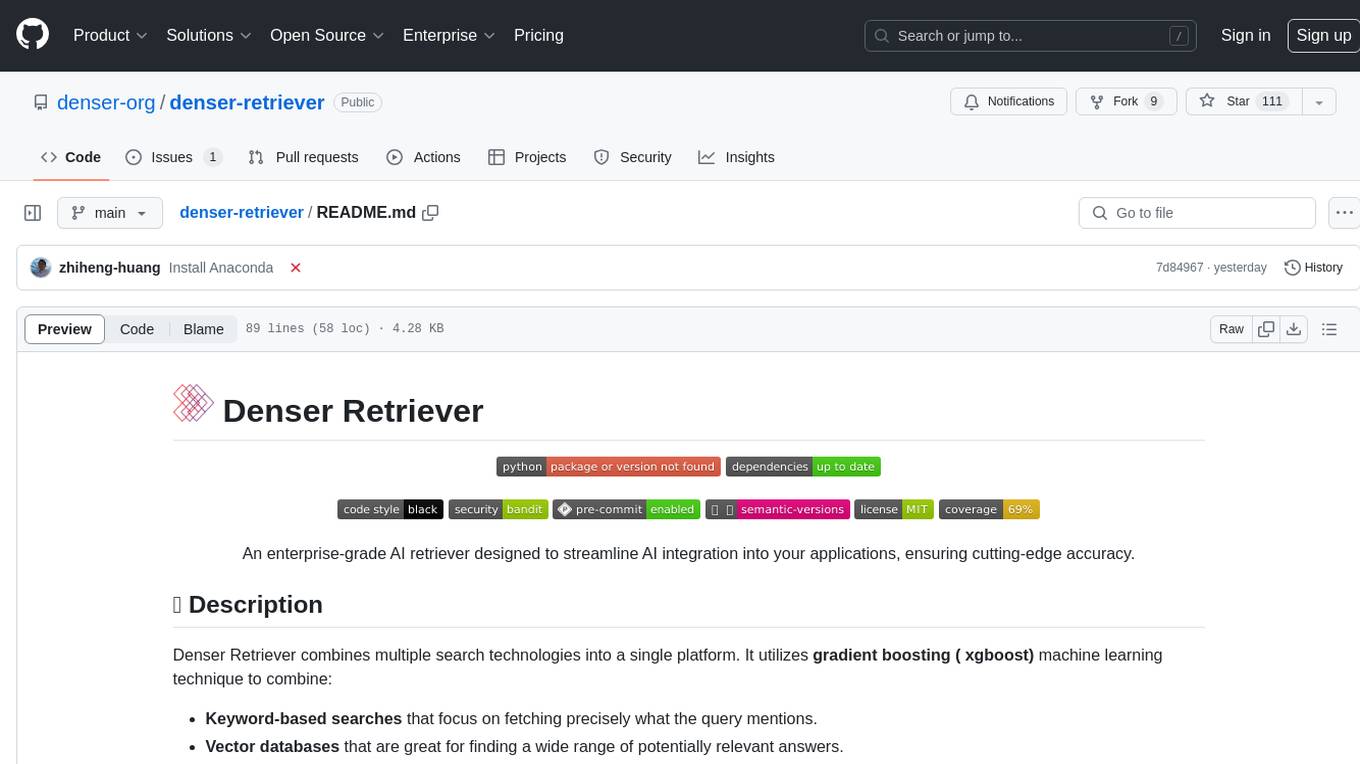

open-autonomy

Open Autonomy is a framework for creating agent services that run as a multi-agent-system and offer enhanced functionalities on-chain. It enables executing complex operations like machine-learning algorithms in a decentralized, trust-minimized, transparent, and robust manner.

pipeshub-ai

Pipeshub-ai is a versatile tool for automating data pipelines in AI projects. It provides a user-friendly interface to design, deploy, and monitor complex data workflows, enabling seamless integration of various AI models and data sources. With Pipeshub-ai, users can easily create end-to-end pipelines for tasks such as data preprocessing, model training, and inference, streamlining the AI development process and improving productivity. The tool supports integration with popular AI frameworks and cloud services, making it suitable for both beginners and experienced AI practitioners.

serverless-rag-demo

The serverless-rag-demo repository showcases a solution for building a Retrieval Augmented Generation (RAG) system using Amazon Opensearch Serverless Vector DB, Amazon Bedrock, Llama2 LLM, and Falcon LLM. The solution leverages generative AI powered by large language models to generate domain-specific text outputs by incorporating external data sources. Users can augment prompts with relevant context from documents within a knowledge library, enabling the creation of AI applications without managing vector database infrastructure. The repository provides detailed instructions on deploying the RAG-based solution, including prerequisites, architecture, and step-by-step deployment process using AWS Cloudshell.

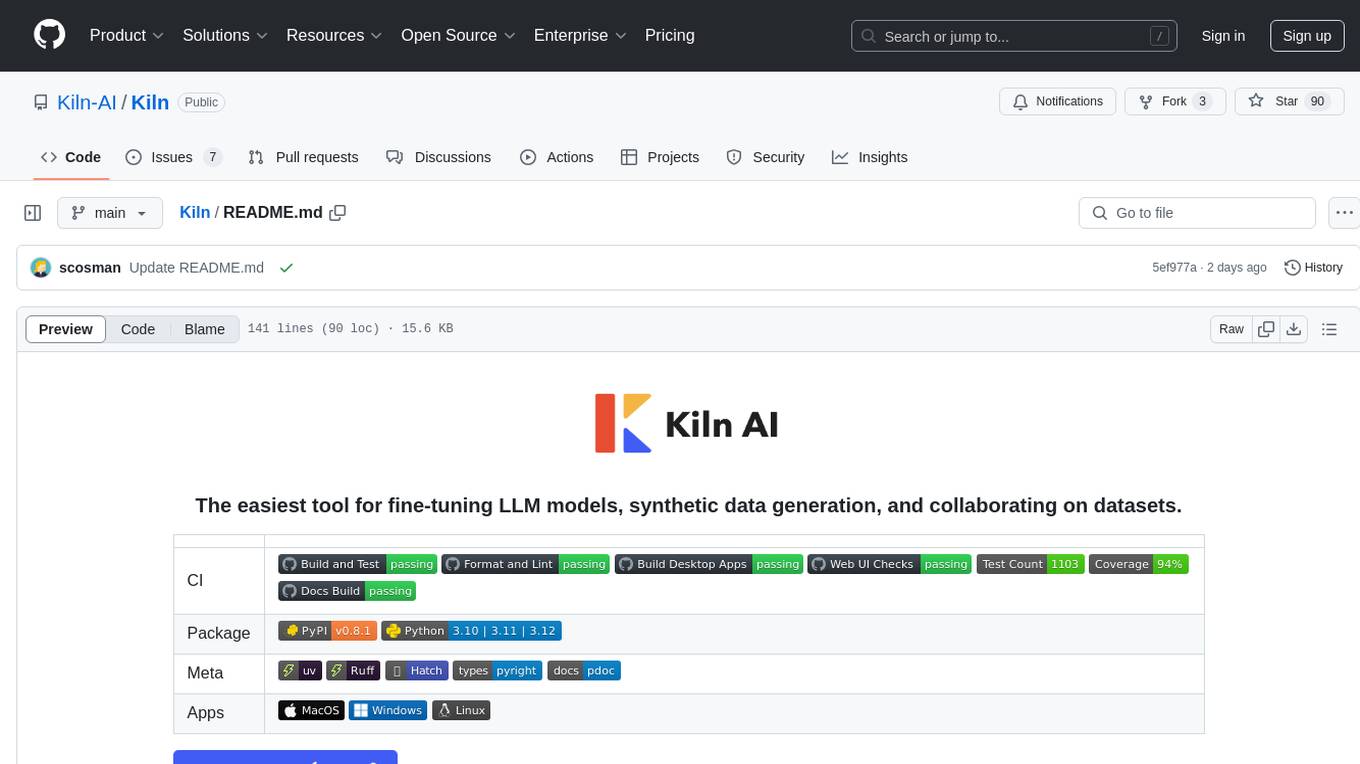

Kiln

Kiln is an intuitive tool for fine-tuning LLM models, generating synthetic data, and collaborating on datasets. It offers desktop apps for Windows, MacOS, and Linux, zero-code fine-tuning for various models, interactive data generation, and Git-based version control. Users can easily collaborate with QA, PM, and subject matter experts, generate auto-prompts, and work with a wide range of models and providers. The tool is open-source, privacy-first, and supports structured data tasks in JSON format. Kiln is free to use and helps build high-quality AI products with datasets, facilitates collaboration between technical and non-technical teams, allows comparison of models and techniques without code, ensures structured data integrity, and prioritizes user privacy.

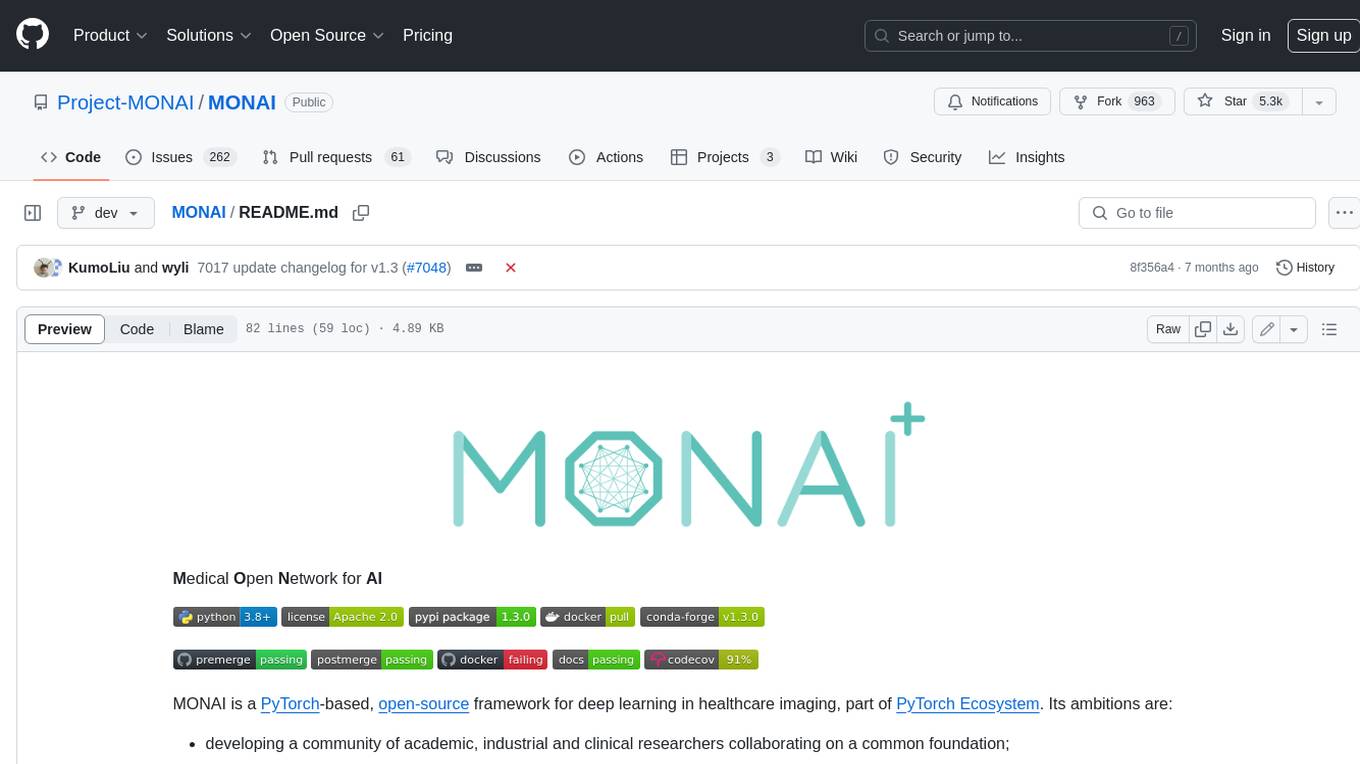

MONAI

MONAI is a PyTorch-based, open-source framework for deep learning in healthcare imaging. It provides a comprehensive set of tools for medical image analysis, including data preprocessing, model training, and evaluation. MONAI is designed to be flexible and easy to use, making it a valuable resource for researchers and developers in the field of medical imaging.

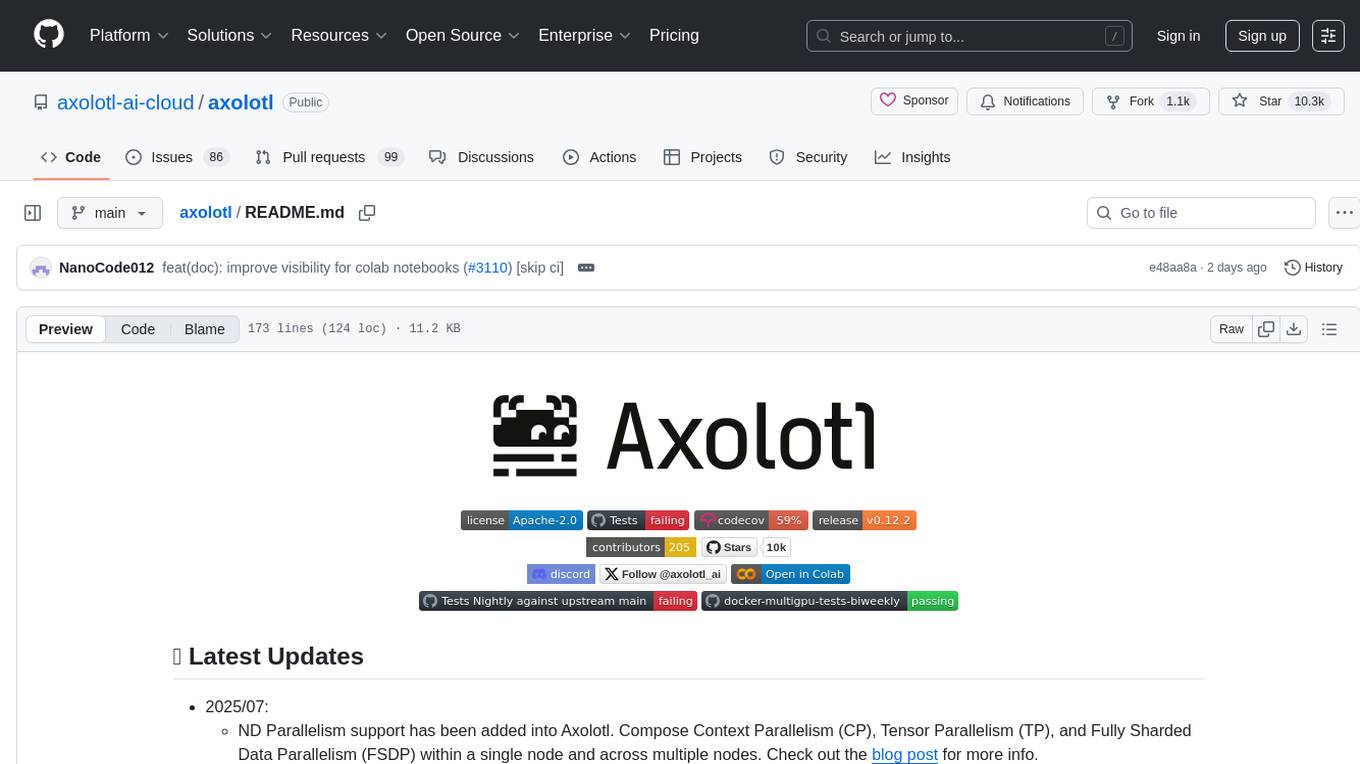

axolotl

Axolotl is a lightweight and efficient tool for managing and analyzing large datasets. It provides a user-friendly interface for data manipulation, visualization, and statistical analysis. With Axolotl, users can easily import, clean, and explore data to gain valuable insights and make informed decisions. The tool supports various data formats and offers a wide range of functions for data processing and modeling. Whether you are a data scientist, researcher, or business analyst, Axolotl can help streamline your data workflows and enhance your data analysis capabilities.

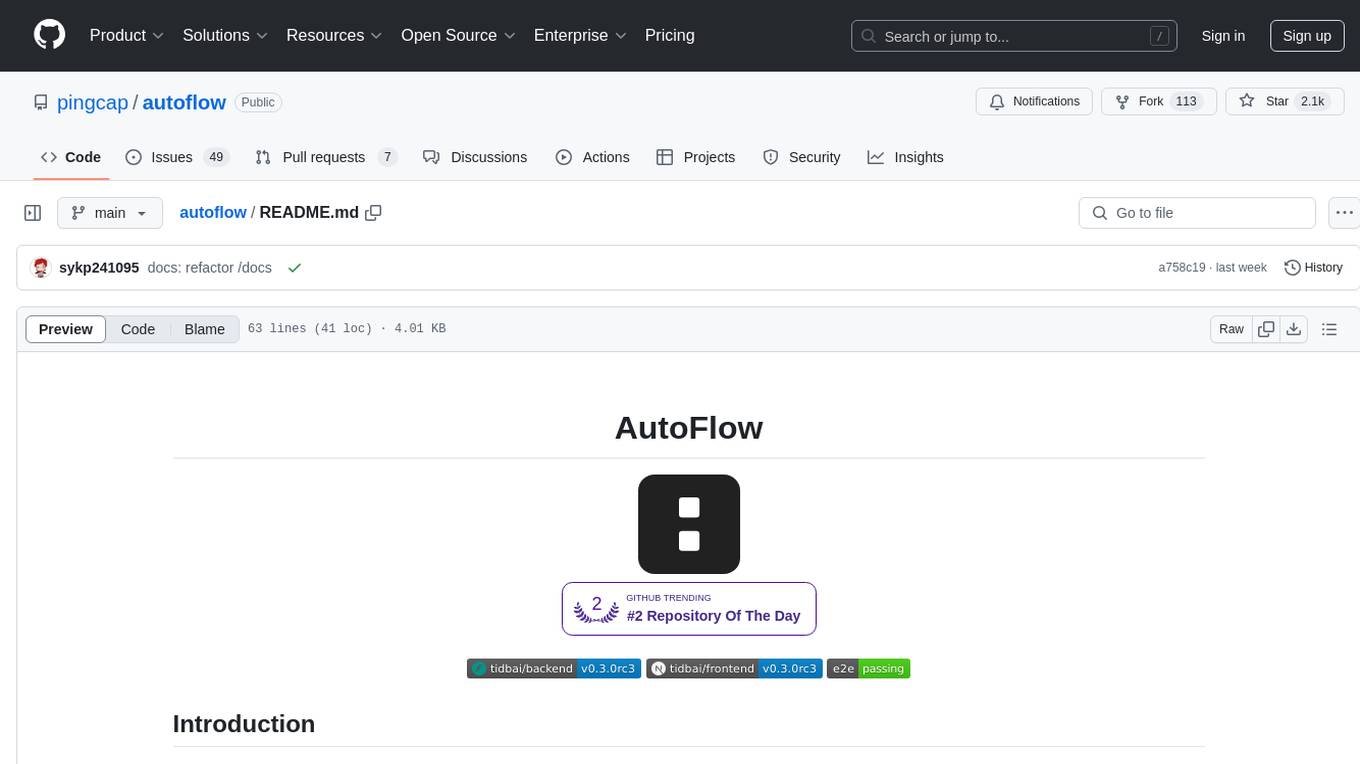

autoflow

AutoFlow is an open source graph rag based knowledge base tool built on top of TiDB Vector and LlamaIndex and DSPy. It features a Perplexity-style Conversational Search page and an Embeddable JavaScript Snippet for easy integration into websites. The tool allows for comprehensive coverage and streamlined search processes through sitemap URL scraping.

deepchat

DeepChat is a versatile chat tool that supports multiple model cloud services and local model deployment. It offers multi-channel chat concurrency support, platform compatibility, complete Markdown rendering, and easy usability with a comprehensive guide. The tool aims to enhance chat experiences by leveraging various AI models and ensuring efficient conversation management.

xpert

Xpert is a powerful tool for data analysis and visualization. It provides a user-friendly interface to explore and manipulate datasets, perform statistical analysis, and create insightful visualizations. With Xpert, users can easily import data from various sources, clean and preprocess data, analyze trends and patterns, and generate interactive charts and graphs. Whether you are a data scientist, analyst, researcher, or student, Xpert simplifies the process of data analysis and visualization, making it accessible to users with varying levels of expertise.

anything-llm

AnythingLLM is a full-stack application that enables you to turn any document, resource, or piece of content into context that any LLM can use as references during chatting. This application allows you to pick and choose which LLM or Vector Database you want to use as well as supporting multi-user management and permissions.

panko-gpt

PankoGPT is an AI companion platform that allows users to easily create and deploy custom AI companions on messaging platforms like WhatsApp, Discord, and Telegram. Users can customize companion behavior, configure settings, and equip companions with various tools without the need for coding. The platform aims to provide contextual understanding and user-friendly interface for creating companions that respond based on context and offer configurable tools for enhanced capabilities. Planned features include expanded functionality, pre-built skills, and optimization for better performance.

mlflow

MLflow is a platform to streamline machine learning development, including tracking experiments, packaging code into reproducible runs, and sharing and deploying models. MLflow offers a set of lightweight APIs that can be used with any existing machine learning application or library (TensorFlow, PyTorch, XGBoost, etc), wherever you currently run ML code (e.g. in notebooks, standalone applications or the cloud). MLflow's current components are:

* `MLflow Tracking

pyspur

PySpur is a graph-based editor designed for LLM (Large Language Models) workflows. It offers modular building blocks, node-level debugging, and performance evaluation. The tool is easy to hack, supports JSON configs for workflow graphs, and is lightweight with minimal dependencies. Users can quickly set up PySpur by cloning the repository, creating a .env file, starting docker services, and accessing the portal. PySpur can also work with local models served using Ollama, with steps provided for configuration. The roadmap includes features like canvas, async/batch execution, support for Ollama, new nodes, pipeline optimization, templates, code compilation, multimodal support, and more.

assistant-ui

assistant-ui is a set of React components for AI chat, providing wide model provider support out of the box and the ability to integrate custom APIs. It includes integrations with Langchain, Vercel AI SDK, TailwindCSS, shadcn-ui, react-markdown, react-syntax-highlighter, React Hook Form, and more. The tool allows users to quickly create AI chat applications with pre-configured templates and easy setup steps.

ai-chatbot-framework

An AI Chatbot framework built in Python. It allows users to easily create Natural Language conversational scenarios with no coding efforts. The tool continuously learns from conversations to improve its capabilities. It can be integrated with various channels like Messenger and Slack. Users can create AI-powered chatbots without expertise in artificial intelligence.

For similar tasks

Flowise

Flowise is a tool that allows users to build customized LLM flows with a drag-and-drop UI. It is open-source and self-hostable, and it supports various deployments, including AWS, Azure, Digital Ocean, GCP, Railway, Render, HuggingFace Spaces, Elestio, Sealos, and RepoCloud. Flowise has three different modules in a single mono repository: server, ui, and components. The server module is a Node backend that serves API logics, the ui module is a React frontend, and the components module contains third-party node integrations. Flowise supports different environment variables to configure your instance, and you can specify these variables in the .env file inside the packages/server folder.

nlux

nlux is an open-source Javascript and React JS library that makes it super simple to integrate powerful large language models (LLMs) like ChatGPT into your web app or website. With just a few lines of code, you can add conversational AI capabilities and interact with your favourite LLM.

generative-ai-go

The Google AI Go SDK enables developers to use Google's state-of-the-art generative AI models (like Gemini) to build AI-powered features and applications. It supports use cases like generating text from text-only input, generating text from text-and-images input (multimodal), building multi-turn conversations (chat), and embedding.

awesome-langchain-zh

The awesome-langchain-zh repository is a collection of resources related to LangChain, a framework for building AI applications using large language models (LLMs). The repository includes sections on the LangChain framework itself, other language ports of LangChain, tools for low-code development, services, agents, templates, platforms, open-source projects related to knowledge management and chatbots, as well as learning resources such as notebooks, videos, and articles. It also covers other LLM frameworks and provides additional resources for exploring and working with LLMs. The repository serves as a comprehensive guide for developers and AI enthusiasts interested in leveraging LangChain and LLMs for various applications.

Large-Language-Model-Notebooks-Course

This practical free hands-on course focuses on Large Language models and their applications, providing a hands-on experience using models from OpenAI and the Hugging Face library. The course is divided into three major sections: Techniques and Libraries, Projects, and Enterprise Solutions. It covers topics such as Chatbots, Code Generation, Vector databases, LangChain, Fine Tuning, PEFT Fine Tuning, Soft Prompt tuning, LoRA, QLoRA, Evaluate Models, Knowledge Distillation, and more. Each section contains chapters with lessons supported by notebooks and articles. The course aims to help users build projects and explore enterprise solutions using Large Language Models.

ai-chatbot

Next.js AI Chatbot is an open-source app template for building AI chatbots using Next.js, Vercel AI SDK, OpenAI, and Vercel KV. It includes features like Next.js App Router, React Server Components, Vercel AI SDK for streaming chat UI, support for various AI models, Tailwind CSS styling, Radix UI for headless components, chat history management, rate limiting, session storage with Vercel KV, and authentication with NextAuth.js. The template allows easy deployment to Vercel and customization of AI model providers.

awesome-local-llms

The 'awesome-local-llms' repository is a curated list of open-source tools for local Large Language Model (LLM) inference, covering both proprietary and open weights LLMs. The repository categorizes these tools into LLM inference backend engines, LLM front end UIs, and all-in-one desktop applications. It collects GitHub repository metrics as proxies for popularity and active maintenance. Contributions are encouraged, and users can suggest additional open-source repositories through the Issues section or by running a provided script to update the README and make a pull request. The repository aims to provide a comprehensive resource for exploring and utilizing local LLM tools.

Awesome-AI-Data-Guided-Projects

A curated list of data science & AI guided projects to start building your portfolio. The repository contains guided projects covering various topics such as large language models, time series analysis, computer vision, natural language processing (NLP), and data science. Each project provides detailed instructions on how to implement specific tasks using different tools and technologies.

For similar jobs

weave

Weave is a toolkit for developing Generative AI applications, built by Weights & Biases. With Weave, you can log and debug language model inputs, outputs, and traces; build rigorous, apples-to-apples evaluations for language model use cases; and organize all the information generated across the LLM workflow, from experimentation to evaluations to production. Weave aims to bring rigor, best-practices, and composability to the inherently experimental process of developing Generative AI software, without introducing cognitive overhead.

LLMStack

LLMStack is a no-code platform for building generative AI agents, workflows, and chatbots. It allows users to connect their own data, internal tools, and GPT-powered models without any coding experience. LLMStack can be deployed to the cloud or on-premise and can be accessed via HTTP API or triggered from Slack or Discord.

VisionCraft

The VisionCraft API is a free API for using over 100 different AI models. From images to sound.

kaito

Kaito is an operator that automates the AI/ML inference model deployment in a Kubernetes cluster. It manages large model files using container images, avoids tuning deployment parameters to fit GPU hardware by providing preset configurations, auto-provisions GPU nodes based on model requirements, and hosts large model images in the public Microsoft Container Registry (MCR) if the license allows. Using Kaito, the workflow of onboarding large AI inference models in Kubernetes is largely simplified.

PyRIT

PyRIT is an open access automation framework designed to empower security professionals and ML engineers to red team foundation models and their applications. It automates AI Red Teaming tasks to allow operators to focus on more complicated and time-consuming tasks and can also identify security harms such as misuse (e.g., malware generation, jailbreaking), and privacy harms (e.g., identity theft). The goal is to allow researchers to have a baseline of how well their model and entire inference pipeline is doing against different harm categories and to be able to compare that baseline to future iterations of their model. This allows them to have empirical data on how well their model is doing today, and detect any degradation of performance based on future improvements.

tabby

Tabby is a self-hosted AI coding assistant, offering an open-source and on-premises alternative to GitHub Copilot. It boasts several key features: * Self-contained, with no need for a DBMS or cloud service. * OpenAPI interface, easy to integrate with existing infrastructure (e.g Cloud IDE). * Supports consumer-grade GPUs.

spear

SPEAR (Simulator for Photorealistic Embodied AI Research) is a powerful tool for training embodied agents. It features 300 unique virtual indoor environments with 2,566 unique rooms and 17,234 unique objects that can be manipulated individually. Each environment is designed by a professional artist and features detailed geometry, photorealistic materials, and a unique floor plan and object layout. SPEAR is implemented as Unreal Engine assets and provides an OpenAI Gym interface for interacting with the environments via Python.

Magick

Magick is a groundbreaking visual AIDE (Artificial Intelligence Development Environment) for no-code data pipelines and multimodal agents. Magick can connect to other services and comes with nodes and templates well-suited for intelligent agents, chatbots, complex reasoning systems and realistic characters.