awesome-local-llms

Compare open-source local LLM inference projects by their metrics to assess popularity and activeness.

Stars: 390

The 'awesome-local-llms' repository is a curated list of open-source tools for local Large Language Model (LLM) inference, covering both proprietary and open-weights LLMs. It notes that these tools generally fall into three categories: LLM inference backend engines, LLM front-end UIs, and all-in-one desktop applications. Because their scopes overlap, it does not label individual projects. Instead, it collects GitHub repository metrics as proxies for popularity and active maintenance. Contributions are encouraged. Users can suggest additional open-source repositories through the Issues section, or run the provided script to update the README and open a pull request. The repository aims to be a comprehensive resource for exploring and using local LLM tools.

README:

There is an overwhelming number of open-source tools for local LLM inference, for both proprietary and open-weights LLMs. These tools generally fall into three categories:

- LLM inference backend engine

- LLM front end UI

- All-in-one desktop application

However, these tools can overlap in scope, and new features are constantly being added, so I have chosen not to manually categorize or label the features of each project.

GitHub repository metrics, like number of stars, contributors, issues, releases, and time since last commit, have been collected as a proxy for popularity and active maintenance.
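As a rough illustration of how such metrics could be gathered, the sketch below pulls the star, fork, open-issue, license, and last-push fields out of a GitHub REST API repository payload (`GET /repos/{owner}/{repo}`). This is not the project's actual script. The `RepoMetrics` class and the `metrics_from_repo_json` helper are hypothetical. The sketch also assumes that `pushed_at` is an acceptable stand-in for the last commit time. It leaves out contributors and releases, which come from separate endpoints.

```python
from dataclasses import dataclass
from datetime import datetime
from typing import Any, Optional


@dataclass
class RepoMetrics:
    """Hypothetical container for the per-repo numbers shown in the table."""
    name: str
    stars: int
    forks: int
    open_issues: int
    license: str
    last_push: datetime


def metrics_from_repo_json(repo: dict[str, Any]) -> RepoMetrics:
    """Extract metrics from a GitHub REST API `GET /repos/{owner}/{repo}` payload."""
    license_info: Optional[dict[str, Any]] = repo.get("license")
    # pushed_at is an ISO 8601 UTC timestamp such as "2024-10-15T08:30:00Z";
    # swapping "Z" for "+00:00" keeps fromisoformat working on older Pythons.
    pushed = datetime.fromisoformat(repo["pushed_at"].replace("Z", "+00:00"))
    return RepoMetrics(
        name=repo["name"],
        stars=repo["stargazers_count"],
        forks=repo["forks_count"],
        # GitHub's open_issues_count also includes open pull requests.
        open_issues=repo["open_issues_count"],
        license=license_info["name"] if license_info else "None",
        last_push=pushed,
    )
```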

Contributions are welcome! Feel free to suggest open-source repos that I have missed, either by opening an issue in this repo or by running the script in the script branch, updating the README, and making a pull request.
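If you update the README by hand, note that the table rows follow a fixed column order and appear sorted by stars in descending order. The following is a hypothetical rendering helper built on those assumptions. It is not the code in the script branch, and `Row` and `render_table` are illustrative names. It formats counts with thousands separators to match the table. It also escapes `|` inside descriptions so that they cannot split a cell.

```python
from dataclasses import dataclass


@dataclass
class Row:
    """Hypothetical record for one table row."""
    repo: str
    about: str
    stars: int
    forks: int
    issues: int
    contributors: int
    releases: int
    license: str
    since_last_commit: str  # preformatted, e.g. "0 days, 1 hrs, 5 mins"


HEADER = ["#", "Repo", "About", "Stars", "Forks", "Issues",
          "Contributors", "Releases", "License", "Time Since Last Commit"]


def render_table(rows: list[Row]) -> str:
    """Render rows as a Markdown table, most-starred repo first."""
    lines = ["| " + " | ".join(HEADER) + " |", "|" + "---|" * len(HEADER)]
    ranked = sorted(rows, key=lambda r: r.stars, reverse=True)
    for rank, r in enumerate(ranked, start=1):
        cells = [
            str(rank),
            r.repo,
            r.about.replace("|", "\\|"),  # escape pipes so they don't split cells
            f"{r.stars:,}", f"{r.forks:,}", f"{r.issues:,}",
            f"{r.contributors:,}", f"{r.releases:,}",
            r.license,
            r.since_last_commit,
        ]
        lines.append("| " + " | ".join(cells) + " |")
    return "\n".join(lines)
```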

For the full table with all metrics, go to this Google Sheet.

For my thoughts on local LLM tooling: https://vinlam.com/posts/local-llm-options/

Note that the condensed table below has two filters applied:

- Repositories need more than 100 stars

- Repositories require a commit within the last 60 days
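The two filters can be combined into a single predicate. The following is a minimal sketch that assumes "within the last 60 days" is inclusive and that commit times are timezone-aware UTC datetimes. The names `passes_filters` and `condense` are illustrative only and are not taken from the repository's script.

```python
from datetime import datetime, timedelta, timezone
from typing import Optional

MIN_STARS = 100                      # keep repos with MORE than 100 stars
MAX_COMMIT_AGE = timedelta(days=60)  # ...and a commit within the last 60 days


def passes_filters(stars: int, last_commit: datetime,
                   now: Optional[datetime] = None) -> bool:
    """Return True if a repo would appear in the condensed table."""
    if now is None:
        now = datetime.now(timezone.utc)
    return stars > MIN_STARS and now - last_commit <= MAX_COMMIT_AGE


def condense(repos: list[tuple[str, int, datetime]],
             now: Optional[datetime] = None) -> list[str]:
    """Keep the names of repos that pass both filters, preserving order."""
    return [name for name, stars, last in repos
            if passes_filters(stars, last, now)]
```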

Last Updated: 15/10/2024

| # | Repo | About | Stars | Forks | Issues | Contributors | Releases | License | Time Since Last Commit |
|---|---|---|---|---|---|---|---|---|---|
| 1 | transformers | 🤗 Transformers: State-of-the-art Machine Learning for Pytorch, TensorFlow, and JAX. | 133,422 | 26,644 | 1,419 | 432 | 167 | Apache License 2.0 | 0 days, 1 hrs, 5 mins |
| 2 | ollama | Get up and running with Llama 3.2, Mistral, Gemma 2, and other large language models. | 93,343 | 7,368 | 1,399 | 304 | 93 | MIT License | 0 days, 14 hrs, 40 mins |
| 3 | ChatGPT-Next-Web | A cross-platform ChatGPT/Gemini UI (Web / PWA / Linux / Win / MacOS). Get your own cross-platform ChatGPT/Gemini app with one click. | 75,708 | 58,871 | 441 | 225 | 72 | MIT License | 0 days, 5 hrs, 18 mins |
| 4 | gpt4all | GPT4All: Run Local LLMs on Any Device. Open-source and available for commercial use. | 70,014 | 7,654 | 603 | 113 | 27 | MIT License | 1 days, 1 hrs, 25 mins |
| 5 | llama.cpp | LLM inference in C/C++ | 66,283 | 9,531 | 556 | 464 | 2,436 | MIT License | 0 days, 1 hrs, 10 mins |
| 6 | gpt_academic | Provides a practical interactive interface for LLMs such as GPT and GLM, with a specially optimized experience for reading, polishing, and writing papers. Modular design, with support for custom shortcut buttons & function plugins, project analysis & self-explanation for Python, C++, and other projects, PDF/LaTeX paper translation & summarization, parallel queries to multiple LLMs, and local models such as chatglm3. Integrates Tongyi Qianwen, deepseekcoder, iFlytek Spark, ERNIE Bot, llama2, rwkv, claude2, moss, and more. | 64,813 | 8,009 | 360 | 90 | 31 | GNU General Public License v3.0 | 0 days, 5 hrs, 37 mins |
| 7 | gpt4free | The official gpt4free repository, various collection of powerful language models | 60,332 | 13,253 | 18 | 215 | 150 | GNU General Public License v3.0 | 0 days, 4 hrs, 54 mins |
| 8 | privateGPT | Interact with your documents using the power of GPT, 100% privately, no data leaks | 53,901 | 7,245 | 235 | 89 | 10 | Apache License 2.0 | 19 days, 0 hrs, 16 mins |
| 9 | open-webui | User-friendly AI Interface (Supports Ollama, OpenAI API, ...) | 42,874 | 5,161 | 136 | 221 | 63 | MIT License | 0 days, 2 hrs, 33 mins |
| 10 | lobe-chat | 🤯 Lobe Chat - an open-source, modern-design AI chat framework. Supports Multi AI Providers( OpenAI / Claude 3 / Gemini / Ollama / Azure / DeepSeek), Knowledge Base (file upload / knowledge management / RAG ), Multi-Modals (Vision/TTS) and plugin system. One-click FREE deployment of your private ChatGPT/ Claude application. | 42,683 | 9,631 | 398 | 153 | 972 | Other | 0 days, 10 hrs, 38 mins |
| 11 | text-generation-webui | A Gradio web UI for Large Language Models. | 40,094 | 5,259 | 278 | 327 | 51 | GNU Affero General Public License v3.0 | 0 days, 1 hrs, 7 mins |
| 12 | vllm | A high-throughput and memory-efficient inference and serving engine for LLMs | 28,414 | 4,214 | 2,165 | 453 | 40 | Apache License 2.0 | 0 days, 8 hrs, 27 mins |
| 13 | anything-llm | The all-in-one Desktop & Docker AI application with built-in RAG, AI agents, and more. | 24,558 | 2,468 | 194 | 77 | 7 | MIT License | 0 days, 13 hrs, 34 mins |
| 14 | LocalAI | 🤖 The free, Open Source alternative to OpenAI, Claude and others. Self-hosted and local-first. Drop-in replacement for OpenAI, running on consumer-grade hardware. No GPU required. Runs gguf, transformers, diffusers and many more models architectures. Features: Generate Text, Audio, Video, Images, Voice Cloning, Distributed inference | 23,933 | 1,837 | 370 | 109 | 62 | MIT License | 0 days, 7 hrs, 5 mins |
| 15 | jan | Jan is an open source alternative to ChatGPT that runs 100% offline on your computer. Multiple engine support (llama.cpp, TensorRT-LLM) | 22,687 | 1,309 | 154 | 54 | 30 | GNU Affero General Public License v3.0 | 0 days, 3 hrs, 2 mins |
| 16 | chatbox | User-friendly Desktop Client App for AI Models/LLMs (GPT, Claude, Gemini, Ollama...) | 21,235 | 2,143 | 394 | 29 | 43 | GNU General Public License v3.0 | 8 days, 1 hrs, 11 mins |
| 17 | localGPT | Chat with your documents on your local device using GPT models. No data leaves your device and 100% private. | 19,998 | 2,230 | 473 | 43 | 0 | Apache License 2.0 | 17 days, 8 hrs, 4 mins |
| 18 | llamafile | Distribute and run LLMs with a single file. | 19,783 | 996 | 132 | 45 | 29 | Other | 1 days, 6 hrs, 31 mins |
| 19 | mlc-llm | Universal LLM Deployment Engine with ML Compilation | 18,914 | 1,545 | 186 | 126 | 1 | Apache License 2.0 | 0 days, 1 hrs, 1 mins |
| 20 | LibreChat | Enhanced ChatGPT Clone: Features Anthropic, AWS, OpenAI, Assistants API, Azure, Groq, o1, GPT-4o, Mistral, OpenRouter, Vertex AI, Gemini, Artifacts, AI model switching, message search, langchain, DALL-E-3, ChatGPT Plugins, OpenAI Functions, Secure Multi-User System, Presets, completely open-source for self-hosting. Actively in public development. | 18,165 | 3,035 | 189 | 158 | 46 | MIT License | 0 days, 17 hrs, 26 mins |
| 21 | ChuanhuChatGPT | GUI for ChatGPT API and many LLMs. Supports agents, file-based QA, GPT finetuning and query with web search. All with a neat UI. | 15,191 | 2,293 | 121 | 51 | 25 | GNU General Public License v3.0 | 20 days, 0 hrs, 38 mins |
| 22 | web-llm | High-performance In-browser LLM Inference Engine | 13,294 | 850 | 71 | 40 | 1 | Apache License 2.0 | 8 days, 1 hrs, 48 mins |
| 23 | h2ogpt | Private chat with local GPT with document, images, video, etc. 100% private, Apache 2.0. Supports oLLaMa, Mixtral, llama.cpp, and more. Demo: https://gpt.h2o.ai/ https://gpt-docs.h2o.ai/ | 11,321 | 1,240 | 283 | 68 | 2 | Apache License 2.0 | 0 days, 5 hrs, 3 mins |
| 24 | OpenLLM | Run any open-source LLMs, such as Llama 3.1, Gemma, as OpenAI compatible API endpoint in the cloud. | 9,879 | 628 | 22 | 31 | 128 | Apache License 2.0 | 0 days, 22 hrs, 19 mins |
| 25 | FlexGen | Running large language models on a single GPU for throughput-oriented scenarios. | 9,168 | 547 | 57 | 19 | 0 | Apache License 2.0 | 7 days, 8 hrs, 17 mins |
| 26 | text-generation-inference | Large Language Model Text Generation Inference | 8,910 | 1,053 | 128 | 117 | 48 | Apache License 2.0 | 0 days, 2 hrs, 32 mins |
| 27 | TensorRT-LLM | TensorRT-LLM provides users with an easy-to-use Python API to define Large Language Models (LLMs) and build TensorRT engines that contain state-of-the-art optimizations to perform inference efficiently on NVIDIA GPUs. TensorRT-LLM also contains components to create Python and C++ runtimes that execute those TensorRT engines. | 8,415 | 949 | 769 | 15 | 9 | Apache License 2.0 | 0 days, 7 hrs, 19 mins |
| 28 | server | The Triton Inference Server provides an optimized cloud and edge inferencing solution. | 8,183 | 1,462 | 613 | 119 | 71 | BSD 3-Clause "New" or "Revised" License | 0 days, 8 hrs, 46 mins |
| 29 | llama-cpp-python | Python bindings for llama.cpp | 7,921 | 944 | 511 | 156 | 276 | MIT License | 5 days, 18 hrs, 36 mins |
| 30 | SillyTavern | LLM Frontend for Power Users. | 7,849 | 2,344 | 261 | 156 | 83 | GNU Affero General Public License v3.0 | 0 days, 6 hrs, 54 mins |
| 31 | chat-ui | Open source codebase powering the HuggingChat app | 7,394 | 1,076 | 270 | 106 | 14 | Apache License 2.0 | 0 days, 4 hrs, 55 mins |
| 32 | big-agi | Generative AI suite powered by state-of-the-art models and providing advanced AI/AGI functions. It features AI personas, AGI functions, multi-model chats, text-to-image, voice, response streaming, code highlighting and execution, PDF import, presets for developers, much more. Deploy on-prem or in the cloud. | 5,373 | 1,222 | 203 | 43 | 16 | MIT License | 0 days, 4 hrs, 23 mins |
| 33 | inference | Replace OpenAI GPT with another LLM in your app by changing a single line of code. Xinference gives you the freedom to use any LLM you need. With Xinference, you're empowered to run inference with any open-source language models, speech recognition models, and multimodal models, whether in the cloud, on-premises, or even on your laptop. | 5,071 | 407 | 205 | 75 | 85 | Apache License 2.0 | 3 days, 4 hrs, 10 mins |
| 34 | koboldcpp | Run GGUF models easily with a KoboldAI UI. One File. Zero Install. | 5,065 | 354 | 235 | 463 | 88 | GNU Affero General Public License v3.0 | 1 days, 0 hrs, 36 mins |
| 35 | lmdeploy | LMDeploy is a toolkit for compressing, deploying, and serving LLMs. | 4,428 | 398 | 307 | 74 | 36 | Apache License 2.0 | 0 days, 1 hrs, 20 mins |
| 36 | llm | Access large language models from the command-line | 4,416 | 241 | 231 | 21 | 27 | Apache License 2.0 | 32 days, 15 hrs, 25 mins |
| 37 | lollms-webui | Lord of Large Language Models Web User Interface | 4,286 | 540 | 155 | 38 | 23 | Apache License 2.0 | 2 days, 0 hrs, 8 mins |
| 38 | exllamav2 | A fast inference library for running LLMs locally on modern consumer-class GPUs | 3,570 | 277 | 87 | 46 | 34 | MIT License | 13 days, 16 hrs, 45 mins |
| 39 | LLamaSharp | A C#/.NET library to run LLM (🦙LLaMA/LLaVA) on your local device efficiently. | 2,583 | 337 | 131 | 54 | 21 | MIT License | 1 days, 19 hrs, 39 mins |
| 40 | nitro | Run and customize Local LLMs. | 1,958 | 109 | 126 | 32 | 135 | Apache License 2.0 | 0 days, 1 hrs, 2 mins |
| 41 | page-assist | Use your locally running AI models to assist you in your web browsing | 1,395 | 135 | 91 | 12 | 19 | MIT License | 1 days, 22 hrs, 19 mins |
| 42 | maid | Maid is a cross-platform Flutter app for interfacing with GGUF / llama.cpp models locally, and with Ollama and OpenAI models remotely. | 1,380 | 146 | 15 | 20 | 31 | MIT License | 3 days, 9 hrs, 5 mins |
| 43 | LLMFarm | llama and other large language models on iOS and MacOS offline using GGML library. | 1,269 | 79 | 18 | 1 | 31 | MIT License | 4 days, 0 hrs, 3 mins |
| 44 | oterm | a text-based terminal client for Ollama | 1,023 | 60 | 7 | 12 | 35 | MIT License | 3 days, 5 hrs, 23 mins |
| 45 | amica | Amica is an open source interface for interactive communication with 3D characters with voice synthesis and speech recognition. | 690 | 112 | 40 | 16 | 4 | MIT License | 0 days, 2 hrs, 16 mins |
| 46 | exui | Web UI for ExLlamaV2 | 430 | 41 | 33 | 8 | 0 | MIT License | 5 days, 16 hrs, 29 mins |
| 47 | ChatterUI | Simple frontend for LLMs built in react-native. | 422 | 25 | 10 | 1 | 44 | GNU Affero General Public License v3.0 | 0 days, 3 hrs, 13 mins |
| 48 | ava | All-in-one desktop app for running LLMs locally. | 410 | 15 | 3 | 3 | 0 | Other | 2 days, 16 hrs, 32 mins |
| 49 | tenere | 🤖 TUI interface for LLMs written in Rust | 314 | 9 | 1 | 7 | 13 | GNU General Public License v3.0 | 40 days, 4 hrs, 8 mins |
| 50 | web-llm-chat | Chat with AI large language models running natively in your browser. Enjoy private, server-free, seamless AI conversations. | 286 | 47 | 10 | 181 | 0 | Apache License 2.0 | 10 days, 18 hrs, 44 mins |
| 51 | mikupad | LLM Frontend in a single html file | 238 | 27 | 23 | 10 | 35 | Creative Commons Zero v1.0 Universal | 8 days, 18 hrs, 44 mins |
| 52 | emeltal | Local ML voice chat using high-end models. | 142 | 8 | 1 | 1 | 0 | MIT License | 2 days, 2 hrs, 44 mins |
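The "Time Since Last Commit" column uses a `D days, H hrs, M mins` format. The following is a minimal sketch of how to produce that string from a `timedelta`. It assumes that leftover seconds are truncated rather than rounded, and the original script may handle this differently. Both `format_since` and `since_last_commit` are hypothetical helper names.

```python
from datetime import datetime, timedelta


def format_since(delta: timedelta) -> str:
    """Format elapsed time like '0 days, 1 hrs, 5 mins'; seconds are dropped."""
    total_minutes = int(delta.total_seconds()) // 60
    days, rem = divmod(total_minutes, 24 * 60)
    hours, minutes = divmod(rem, 60)
    return f"{days} days, {hours} hrs, {minutes} mins"


def since_last_commit(last_commit: datetime, now: datetime) -> str:
    """Elapsed time between a commit and a reference time, in table format."""
    return format_since(now - last_commit)
```

The labels are kept as fixed plurals ("1 days") to match the existing table rather than switching between singular and plural.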

- https://github.com/janhq/awesome-local-ai

- https://huyenchip.com/2024/03/14/ai-oss.html

- https://github.com/mahseema/awesome-ai-tools

- https://github.com/steven2358/awesome-generative-ai

- https://github.com/e2b-dev/awesome-ai-agents

- https://github.com/aimerou/awesome-ai-papers

- https://github.com/DefTruth/Awesome-LLM-Inference

- https://github.com/youssefHosni/Awesome-AI-Data-GitHub-Repos


Alternative AI tools for awesome-local-llms

Similar Open Source Tools

awesome-local-llms

The 'awesome-local-llms' repository is a curated list of open-source tools for local Large Language Model (LLM) inference, covering both proprietary and open weights LLMs. The repository categorizes these tools into LLM inference backend engines, LLM front end UIs, and all-in-one desktop applications. It collects GitHub repository metrics as proxies for popularity and active maintenance. Contributions are encouraged, and users can suggest additional open-source repositories through the Issues section or by running a provided script to update the README and make a pull request. The repository aims to provide a comprehensive resource for exploring and utilizing local LLM tools.

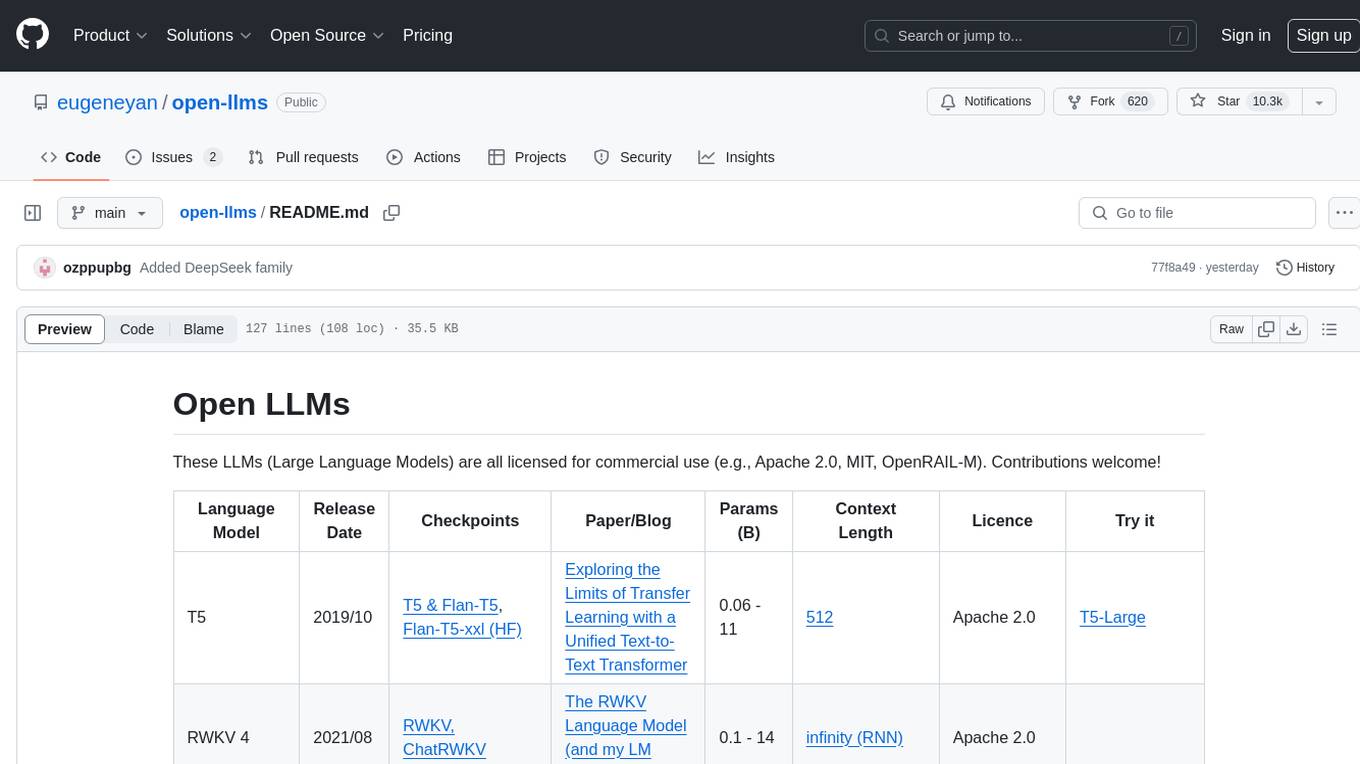

open-llms

Open LLMs is a repository containing various Large Language Models licensed for commercial use. It includes models like T5, GPT-NeoX, UL2, Bloom, Cerebras-GPT, Pythia, Dolly, and more. These models are designed for tasks such as transfer learning, language understanding, chatbot development, code generation, and more. The repository provides information on release dates, checkpoints, papers/blogs, parameters, context length, and licenses for each model. Contributions to the repository are welcome, and it serves as a resource for exploring the capabilities of different language models.

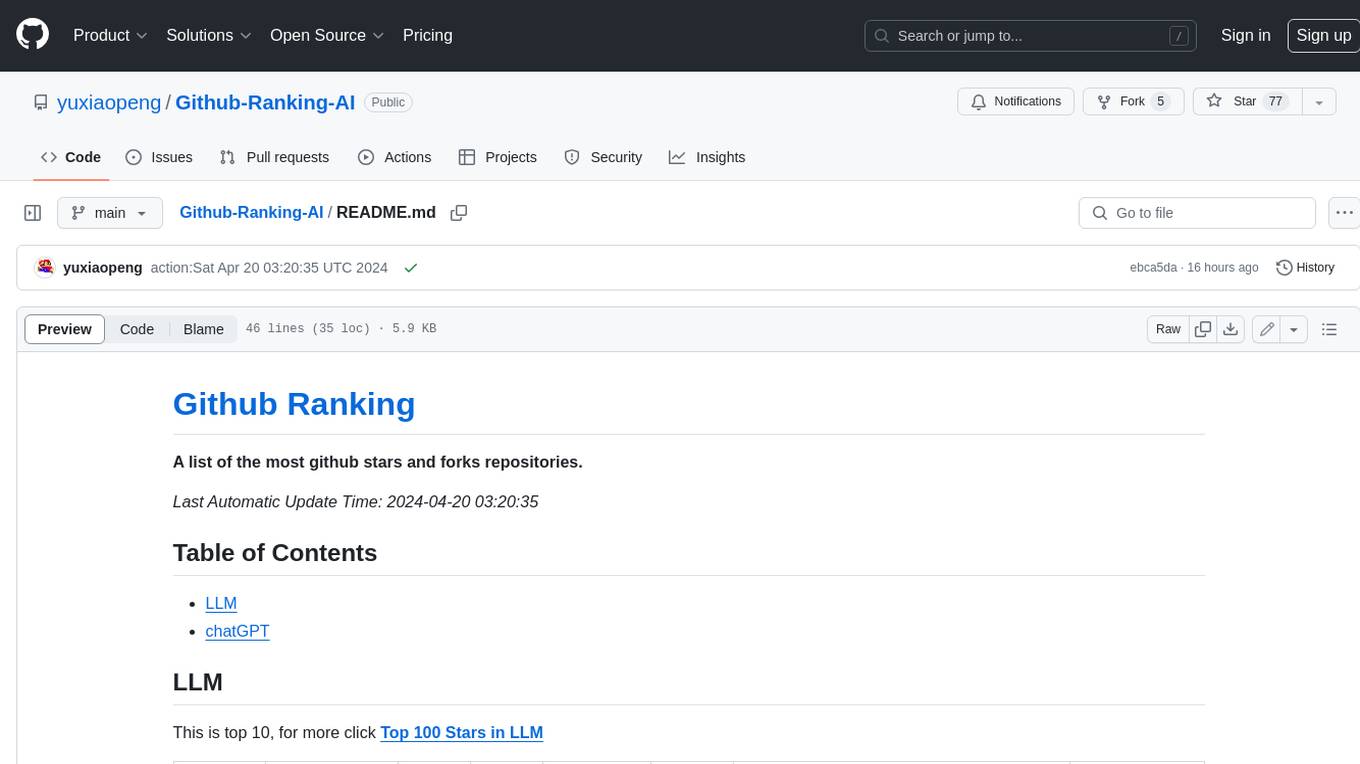

Github-Ranking-AI

This repository provides a list of the most-starred and most-forked repositories on GitHub. It is updated automatically and includes information such as the project name, number of stars, number of forks, language, number of open issues, description, and last commit date. The repository is divided into two sections: LLM and chatGPT. The LLM section includes repositories related to large language models, while the chatGPT section includes repositories related to the ChatGPT chatbot.

are-copilots-local-yet

Current trends and state of the art for using open and local LLMs as copilots to complete code, generate projects, act as shell assistants, automatically fix bugs, and more. This document is a curated list of local Copilots, shell assistants, and related projects, intended to be a resource for those interested in a survey of the existing tools and to help developers discover the state of the art for projects like these.

writing

The LLM Creative Story-Writing Benchmark evaluates large language models based on their ability to incorporate a set of 10 mandatory story elements in a short narrative. It measures constraint satisfaction and literary quality by grading models on character development, plot structure, atmosphere, storytelling impact, authenticity, and execution. The benchmark aims to assess how well models can adapt to rigid requirements, remain original, and produce cohesive stories using all assigned elements.

goodai-ltm-benchmark

This repository contains code and data for replicating experiments on Long-Term Memory (LTM) abilities of conversational agents. It includes a benchmark for testing agents' memory performance over long conversations, evaluating tasks requiring dynamic memory upkeep and information integration. The repository supports various models, datasets, and configurations for benchmarking and reporting results.

llmq

llm.q is an implementation of (quantized) large language model training in CUDA, inspired by llm.c. It is particularly aimed at medium-sized training setups, i.e., a single node with multiple GPUs. The code is written in C++20 and requires CUDA 12 or later. It depends on NCCL for communication and cuDNN for fast attention. Multi-GPU training can run either in multi-process mode (requires OpenMPI) or in multi-thread mode. Additional header-only dependencies are automatically downloaded by CMake during the build process. The tool provides detailed instructions on data preparation, training runs, inspecting logs, evaluations, and a larger example for real training runs. It also offers detailed usage instructions covering model configuration, data configuration, optimization parameters, checkpointing, output, low-bit settings, activation checkpointing/recomputation, multi-GPU settings, offloading, algorithm selection, and Python bindings. The code organization includes directories for kernels, models, training, and utilities. Speed benchmarks for different GPU configurations are provided, along with testing details for recomputation, fixed reference, and Python reference tests.

denodo-ai-sdk

Denodo AI SDK is a tool that enables users to create AI chatbots and agents that provide accurate and context-aware answers using enterprise data. It connects to the Denodo Platform, supports popular LLMs and vector stores, and includes a sample chatbot and simple APIs for quick setup. The tool also offers benchmarks for evaluating LLM performance and provides guidance on configuring DeepQuery for different LLM providers.

CogVLM2

CogVLM2 is a new generation of open-source models that offers significant improvements on benchmarks such as TextVQA and DocVQA. It supports an 8K context length, image resolutions up to 1344 × 1344, and both Chinese and English. The project provides basic calling methods, fine-tuning examples, and OpenAI API-format calling examples to help developers get started with the model quickly.

LLamaTuner

LLamaTuner is a repository for the Efficient Finetuning of Quantized LLMs project, focusing on building and sharing instruction-following Chinese baichuan-7b/LLaMA/Pythia/GLM model tuning methods. The project enables multi-round chatbot training on a single NVIDIA RTX 2080 Ti or RTX 3090. It uses bitsandbytes for quantization and is integrated with Hugging Face's PEFT and transformers libraries. The repository supports various models, training approaches, and datasets for supervised fine-tuning, LoRA, QLoRA, and more. It also provides tools for data preprocessing and offers models in the Hugging Face model hub for inference and finetuning. The project is licensed under Apache 2.0 and acknowledges contributions from various open-source contributors.

GenAI-Learning

GenAI-Learning is a repository dedicated to providing resources and courses for individuals interested in Generative AI. It covers a wide range of topics from prompt engineering to user-centered design, offering courses on LLM Bootcamp, DeepLearning AI, Microsoft Copilot Learning, Amazon Generative AI, Google Cloud Skills, NVIDIA Learn, Oracle Cloud, and IBM AI Learn. The repository includes detailed course descriptions, partners, and topics for each course, making it a valuable resource for AI enthusiasts and professionals.

Azure-AIGEN-demos

Microsoft Foundry is a unified Azure platform-as-a-service offering for enterprise AI operations, model builders, and application development. This foundation combines production-grade infrastructure with friendly interfaces, enabling developers to focus on building applications rather than managing infrastructure. Microsoft Foundry unifies agents, models, and tools under a single management grouping with built-in enterprise-readiness capabilities including tracing, monitoring, evaluations, and customizable enterprise setup configurations. The platform provides streamlined management through unified Role-based access control (RBAC), networking, and policies under one Azure resource provider namespace.

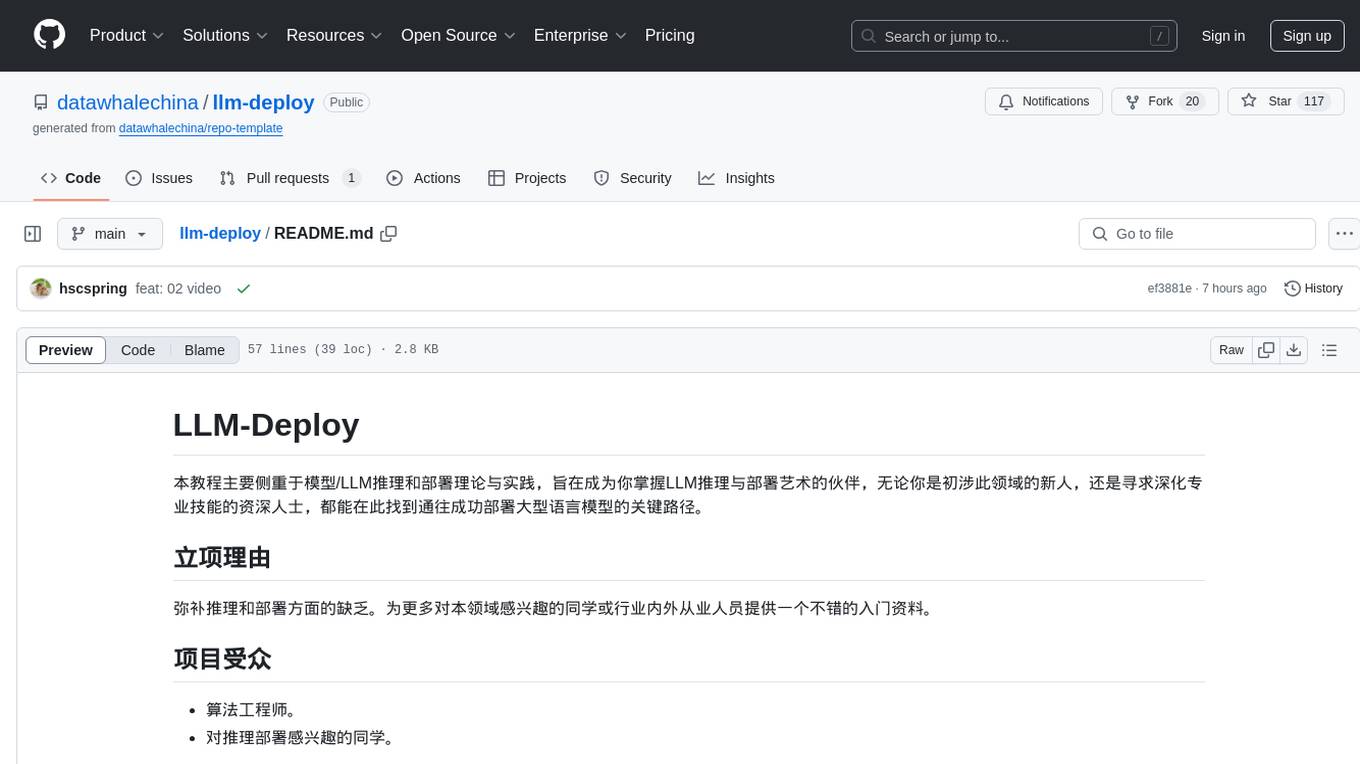

llm-deploy

LLM-Deploy focuses on the theory and practice of model/LLM inference and deployment, aiming to be your partner in mastering LLM inference and deployment. Whether you are a newcomer to this field or a senior professional seeking to deepen your skills, you can find the key path to successfully deploying large language models here. The project covers inference and deployment theory, model and service optimization practices, and contributions from experienced engineers. It serves as a valuable resource for algorithm engineers and anyone interested in inference deployment.

nntrainer

NNtrainer is a software framework for training neural network models on devices with limited resources. It enables on-device fine-tuning of neural networks using user data for personalization. NNtrainer supports various machine learning algorithms and provides examples for tasks such as few-shot learning, ResNet, VGG, and product rating. It is optimized for embedded devices and utilizes CBLAS and CUBLAS for accelerated calculations. NNtrainer is open source and released under the Apache License version 2.0.

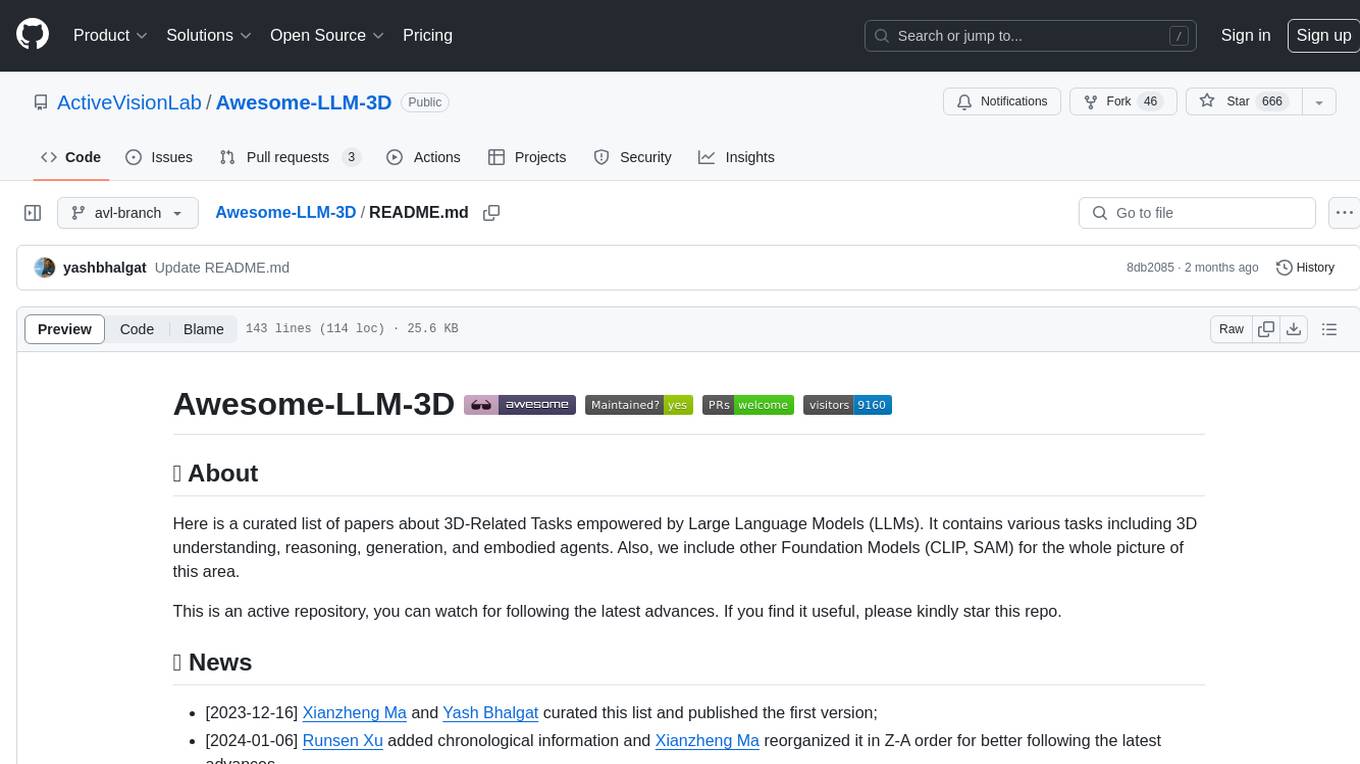

Awesome-LLM-3D

This repository is a curated list of papers related to 3D tasks empowered by Large Language Models (LLMs). It covers tasks such as 3D understanding, reasoning, generation, and embodied agents. The repository also includes other Foundation Models like CLIP and SAM to provide a comprehensive view of the area. It is actively maintained and updated to showcase the latest advances in the field. Users can find a variety of research papers and projects related to 3D tasks and LLMs in this repository.

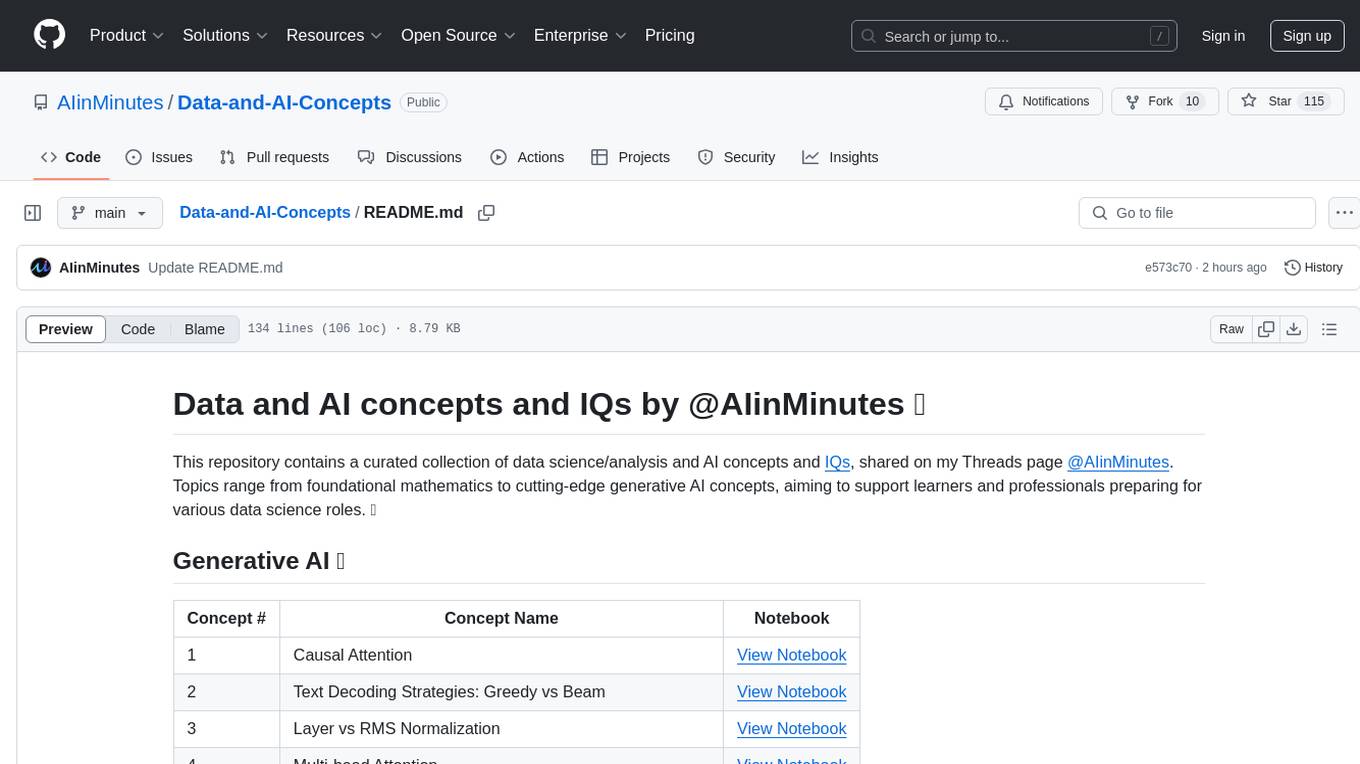

Data-and-AI-Concepts

This repository is a curated collection of data science and AI concepts and interview questions (IQs), covering topics from foundational mathematics to cutting-edge generative AI concepts. It aims to support learners and professionals preparing for various data science roles by providing detailed explanations and notebooks for each concept.

For similar tasks

Flowise

Flowise is a tool that allows users to build customized LLM flows with a drag-and-drop UI. It is open-source and self-hostable, and it supports various deployments, including AWS, Azure, DigitalOcean, GCP, Railway, Render, HuggingFace Spaces, Elestio, Sealos, and RepoCloud. Flowise has three modules in a single monorepo: server, ui, and components. The server module is a Node backend that serves the API logic, the ui module is a React frontend, and the components module contains third-party node integrations. Flowise supports various environment variables for configuring your instance, which you can specify in the .env file inside the packages/server folder.

nlux

nlux is an open-source JavaScript and React JS library that makes it simple to integrate powerful large language models (LLMs) like ChatGPT into your web app or website. With just a few lines of code, you can add conversational AI capabilities and interact with your favourite LLM.

generative-ai-go

The Google AI Go SDK enables developers to use Google's state-of-the-art generative AI models (like Gemini) to build AI-powered features and applications. It supports use cases like generating text from text-only input, generating text from text-and-images input (multimodal), building multi-turn conversations (chat), and embedding.

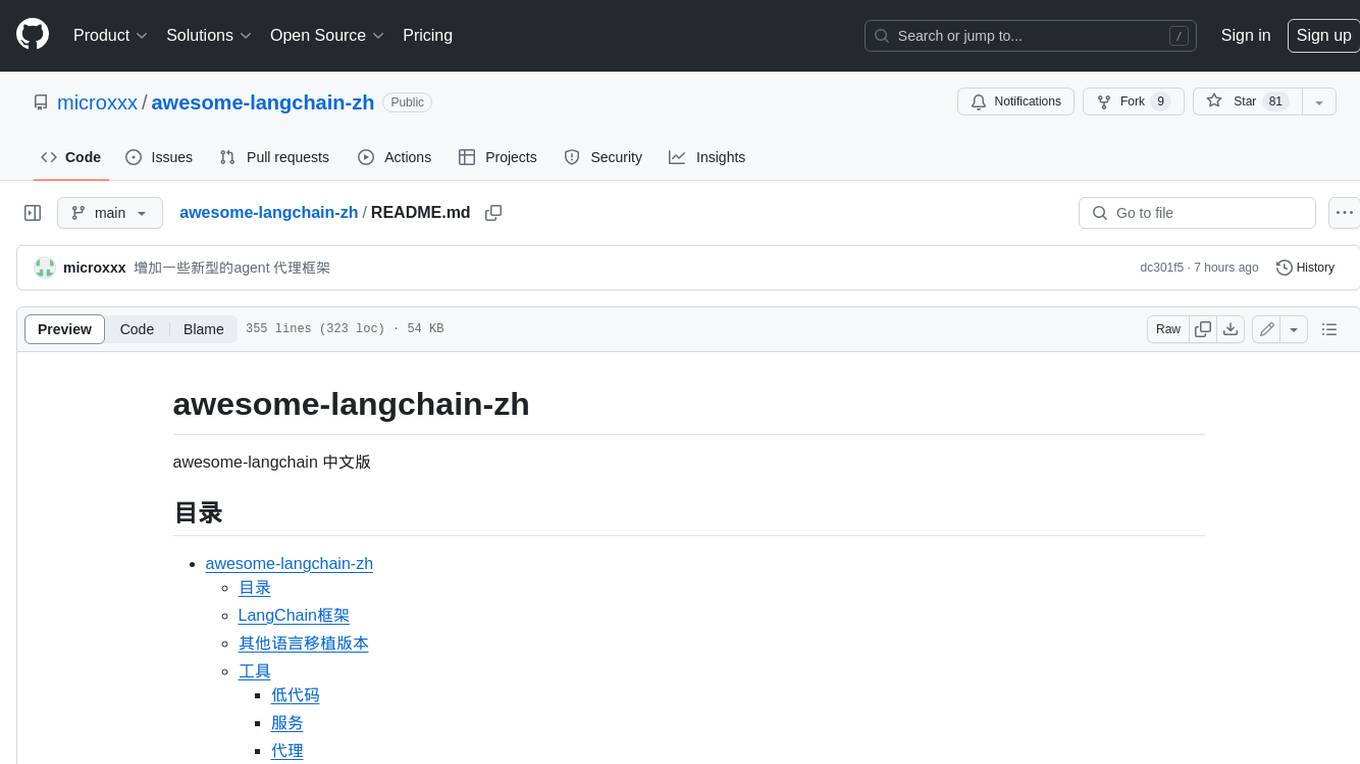

awesome-langchain-zh

The awesome-langchain-zh repository is a collection of resources related to LangChain, a framework for building AI applications using large language models (LLMs). The repository includes sections on the LangChain framework itself, other language ports of LangChain, tools for low-code development, services, agents, templates, platforms, open-source projects related to knowledge management and chatbots, as well as learning resources such as notebooks, videos, and articles. It also covers other LLM frameworks and provides additional resources for exploring and working with LLMs. The repository serves as a comprehensive guide for developers and AI enthusiasts interested in leveraging LangChain and LLMs for various applications.

Large-Language-Model-Notebooks-Course

This free, hands-on course focuses on large language models and their applications, using models from OpenAI and the Hugging Face library. The course is divided into three major sections: Techniques and Libraries, Projects, and Enterprise Solutions. It covers topics such as Chatbots, Code Generation, Vector databases, LangChain, Fine Tuning, PEFT Fine Tuning, Soft Prompt tuning, LoRA, QLoRA, Evaluate Models, Knowledge Distillation, and more. Each section contains chapters with lessons supported by notebooks and articles. The course aims to help users build projects and explore enterprise solutions using Large Language Models.

ai-chatbot

Next.js AI Chatbot is an open-source app template for building AI chatbots using Next.js, Vercel AI SDK, OpenAI, and Vercel KV. It includes features like Next.js App Router, React Server Components, Vercel AI SDK for streaming chat UI, support for various AI models, Tailwind CSS styling, Radix UI for headless components, chat history management, rate limiting, session storage with Vercel KV, and authentication with NextAuth.js. The template allows easy deployment to Vercel and customization of AI model providers.

Awesome-AI-Data-Guided-Projects

A curated list of data science & AI guided projects to start building your portfolio. The repository contains guided projects covering various topics such as large language models, time series analysis, computer vision, natural language processing (NLP), and data science. Each project provides detailed instructions on how to implement specific tasks using different tools and technologies.

For similar jobs

weave

Weave is a toolkit for developing Generative AI applications, built by Weights & Biases. With Weave, you can log and debug language model inputs, outputs, and traces; build rigorous, apples-to-apples evaluations for language model use cases; and organize all the information generated across the LLM workflow, from experimentation to evaluations to production. Weave aims to bring rigor, best-practices, and composability to the inherently experimental process of developing Generative AI software, without introducing cognitive overhead.

LLMStack

LLMStack is a no-code platform for building generative AI agents, workflows, and chatbots. It allows users to connect their own data, internal tools, and GPT-powered models without any coding experience. LLMStack can be deployed to the cloud or on-premise and can be accessed via HTTP API or triggered from Slack or Discord.

VisionCraft

The VisionCraft API is a free API for using over 100 different AI models, covering everything from images to sound.

kaito

Kaito is an operator that automates the AI/ML inference model deployment in a Kubernetes cluster. It manages large model files using container images, avoids tuning deployment parameters to fit GPU hardware by providing preset configurations, auto-provisions GPU nodes based on model requirements, and hosts large model images in the public Microsoft Container Registry (MCR) if the license allows. Using Kaito, the workflow of onboarding large AI inference models in Kubernetes is largely simplified.

PyRIT

PyRIT is an open access automation framework designed to empower security professionals and ML engineers to red team foundation models and their applications. It automates AI Red Teaming tasks to allow operators to focus on more complicated and time-consuming tasks and can also identify security harms such as misuse (e.g., malware generation, jailbreaking), and privacy harms (e.g., identity theft). The goal is to allow researchers to have a baseline of how well their model and entire inference pipeline is doing against different harm categories and to be able to compare that baseline to future iterations of their model. This allows them to have empirical data on how well their model is doing today, and detect any degradation of performance based on future improvements.

tabby

Tabby is a self-hosted AI coding assistant, offering an open-source and on-premises alternative to GitHub Copilot. It boasts several key features:

- Self-contained, with no need for a DBMS or cloud service.

- OpenAPI interface, easy to integrate with existing infrastructure (e.g., Cloud IDE).

- Supports consumer-grade GPUs.

spear

SPEAR (Simulator for Photorealistic Embodied AI Research) is a powerful tool for training embodied agents. It features 300 unique virtual indoor environments with 2,566 unique rooms and 17,234 unique objects that can be manipulated individually. Each environment is designed by a professional artist and features detailed geometry, photorealistic materials, and a unique floor plan and object layout. SPEAR is implemented as Unreal Engine assets and provides an OpenAI Gym interface for interacting with the environments via Python.

Magick

Magick is a groundbreaking visual AIDE (Artificial Intelligence Development Environment) for no-code data pipelines and multimodal agents. Magick can connect to other services and comes with nodes and templates well-suited for intelligent agents, chatbots, complex reasoning systems and realistic characters.