are-copilots-local-yet

Are Copilots Local Yet? The frontier of local LLM Copilots for code completion, project generation, shell assistance, and more. Find tools shaping tomorrow's developer experience, today!

Stars: 511


README:

Current trends and state of the art for using open & local LLM models as copilots to complete code, generate projects, act as shell assistants, automatically fix bugs, and more.

📝 Help keep this list relevant and up-to-date by making edits!

- Summary

- Background

- Editor Extensions

- Tools

- Chat Interfaces

- Models

- Datasets

- Misc Tools

- Suggested Setup

- History

- Stats

Local Copilots are now fully functional, although their output quality is still not on par with that of cloud-based services like GitHub Copilot.

This document is a curated list of local Copilots, shell assistants, and related projects. It is intended to be a resource for those interested in a survey of the existing tools, and to help developers discover the state of the art for projects like these.

In 2021, GitHub released Copilot, which quickly became popular among developers. Since then, with the flurry of AI developments around LLMs, local models that can run on consumer machines have become available, and it has seemed only a matter of time before Copilot would go local.

Many perceived limitations of GitHub's Copilot are related to its closed and cloud-hosted nature.

As an alternative, local Copilots enable:

- 🌐 Offline & private use

- ⚡ Improved responsiveness

- 📚 Better project/context awareness

- 🎯 The ability to run models specialized for a particular language/task

- 🔒 Constraining the LLM output to fit a particular format/syntax

Editor extensions used to complete code using LLMs:

| Name | Editor | ⭐ | Released | Notes |
|---|---|---|---|---|
| GitHub Copilot | VSCode, vim | 9125 | 2021-6-29 | The GitHub Original, not local or open-source. |
| Cursor | VSCode | 27112 | 2023-3-14 | Fork of VSCode, not open-source |
| Fauxpilot | VSCode | 14645 | 2022-9-3 | Early local PoC. Stale? |
| Tabby | VSCode, vim, IntelliJ | 29074 | 2023-9-30 | Completes the cursor selection |
| turbopilot | VSCode | 3818 | 2023-4-10 | Completions with FIM support, inspired by fauxpilot |
| HuggingFace-vscode | VSCode | 1255 | 2023-6-19 | Fork of Tabnine, supports Starcoder |
| localpilot | VSCode | 3369 | 2023-10-2 | Utility for easily hosting models locally, for use with official Copilot extension using custom API endpoint. |
| StarcoderEx | VSCode | 101 | 2023-5-5 | Completes the cursor selection |
| WizardCoder-VSC | VSCode | 145 | 2023-6-19 | PoC, article available |
| KoboldAIConnect | VSCode | | 2023-10-7 | Copilot clone using local KoboldAI backend |
| gen.nvim | vim | 1323 | 2023-10-1 | Edit selection using custom prompts |
| uniteai | VSCode, emacs, lsp | 309 | 2023-8-27 | |
| Privy | VSCode | 916 | 2024-1-8 | A privacy-first coding assistant. |
| twinny | VSCode | 3279 | 2024-1-24 | The most no-nonsense locally hosted AI code completion plugin for VS Code |
| continue | VSCode | 21966 | 2023-5-24 | VSCode extension with chat, autocomplete, and actions. |
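Several entries above mention fill-in-the-middle (FIM) completion. As a rough illustration of what an extension might send to a model, the sketch below splits a buffer at the cursor and builds a FIM prompt. It assumes the sentinel tokens used by StarCoder-family models (`<fim_prefix>`, `<fim_suffix>`, `<fim_middle>`); other models use different tokens, and `build_fim_prompt` is a hypothetical helper, not part of any extension listed here.

```python
# Sentinel tokens as used by StarCoder-family models (an assumption here;
# check the model card of whichever model you actually run).
FIM_PREFIX = "<fim_prefix>"
FIM_SUFFIX = "<fim_suffix>"
FIM_MIDDLE = "<fim_middle>"


def build_fim_prompt(buffer: str, cursor: int) -> str:
    """Build a fill-in-the-middle prompt for the text around `cursor`.

    The model is asked to generate the code that belongs between the
    text before the cursor (prefix) and the text after it (suffix).
    """
    if not 0 <= cursor <= len(buffer):
        raise ValueError("cursor must lie within the buffer")
    prefix, suffix = buffer[:cursor], buffer[cursor:]
    return f"{FIM_PREFIX}{prefix}{FIM_SUFFIX}{suffix}{FIM_MIDDLE}"


if __name__ == "__main__":
    source = "def add(a, b):\n    return \n"
    # Place the cursor right after "return ".
    cursor = source.index("return ") + len("return ")
    print(build_fim_prompt(source, cursor))
```

Whatever the model generates after the final sentinel is the text to insert at the cursor, which is why FIM-capable models can take the code below the cursor into account.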

Tools that try to generate projects/features from specification:

| Name | ⭐ | Released | Notes |
|---|---|---|---|
| gpt-engineer | 52940 | 2023-6-6 | Specify what you want it to build, the AI asks for clarification, and then builds it. |
| gpt-pilot | 32250 | 2023-7-18 | Very similar to gpt-engineer |
| aider | 25618 | 2023-6-8 | AI pair programming in your terminal, works well with pre-existing, larger codebases |
| rift | 3051 | 2023-6-20 | VSCode extension. Lets you write code by chatting, makes your IDE agentic, AI engineer that works alongside you. |
| mentat | 2583 | 2023-7-25 | Mentat coordinates edits across multiple locations and files. |
| clippinator | 364 | 2023-4-15 | Uses a team of agents to plan, write, debug, and test |
| Refact.AI | 1660 | 2023-10-06 | Full self-hostable code completion, chat and training service, complete with VSCode extension. |
| LocalCompletion | 27 | 2023-11-15 | Inline completion with support for any OpenAI compatible backend |

Chat interfaces with shell/REPL/notebook access. Similar to/inspired by ChatGPT's "Advanced Data Analysis" feature (previously "Code Interpreter").

| Name | ⭐ | Notes |
|---|---|---|
| open-interpreter | 57982 | open-source, locally running implementation of OpenAI's Code Interpreter |
| gptme | 3131 | Supporting open models. Developed by me, @ErikBjare |
| octogen | 256 | Local Code Interpreter executing in Docker environment. |
| terminal-x | 34 | Very early prototype that converts natural language into shell commands, unmaintained since Sept. 2021 |
| DODA | >50 | Electron based GUI for a local OpenAI Dev Assistant |

Models relevant for local Copilot use. Ordered by most recent first.

| Name | Size | Languages | ⭐ | Released | Notes |
|---|---|---|---|---|---|
| Deepseek R1 | 671B | Many | 3052 | 2025-01 | |
| Qwen 2.5 Coder | 32B | 92 | 3998 | 2024-11 | |
| Phind CodeLlama v2 | 34B | Many | 829 | 2023-8-27 | |
| WizardCoder-Python | 7/13/34B | Python | 765 | 2023-8 | |
| CodeLlama | 7/13/34B | Many | 16165 | 2023-8 | |
| replit-glaive | 3B | 1? | 88 | 2023-7 | Small model fine-tuned on high-quality data with impressive performance. |
| WizardCoder | 15B | 80+ | 750 | 2023-6 | Fine-tuning of Starcoder |
| Starcoder | 15B | 80+ | 7351 | 2023-5 | |
| replit-v1-3b | 3B | 20+ | 724 | 2023-5 | |
| SantaCoder | 1.1B | Python, Java, JavaScript | 331 | 2023-4 | Tiny model selectively trained on 3 languages from 'The Stack' |

Note: due to the pace of new model releases, this section is doomed to be out of date.
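The Size column largely decides whether a model fits on a consumer machine. As a back-of-the-envelope sketch, weight memory is roughly parameters × bits per weight / 8. The helper below is hypothetical and deliberately ignores the KV cache, activations, and runtime overhead; real quantization formats also store scaling data, so the effective bits per weight are somewhat higher than the nominal figure.

```python
def estimate_weight_memory_gb(params_billion: float, bits_per_weight: float) -> float:
    """Approximate memory for the model weights alone, in GB (10^9 bytes).

    params_billion:  parameter count in billions (e.g. 7 for a 7B model)
    bits_per_weight: 16 for half precision, about 4 for 4-bit quantization
    """
    if params_billion <= 0 or bits_per_weight <= 0:
        raise ValueError("both arguments must be positive")
    # (params_billion * 1e9 weights) * (bits / 8 bytes per weight) / 1e9 bytes per GB
    return params_billion * bits_per_weight / 8


if __name__ == "__main__":
    for size in (7, 13, 34):
        for bits in (16, 4):
            gb = estimate_weight_memory_gb(size, bits)
            print(f"{size}B at {bits} bits per weight: ~{gb:.1f} GB")
```

Treat the result as a lower bound: a model whose estimate is already close to your available RAM or VRAM will most likely not run at a usable speed.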

Datasets relevant for training models.

| Name | Size | Languages | ⭐ | Released | Notes |
|---|---|---|---|---|---|
| The Stack | 3TB/6TB | 358 | 760 | 2022-10 | Excludes weak-copyleft licenses (MPL, LGPL, EPL) since v1.1 |

Miscellaneous useful tools.

| Name | ⭐ | Released | Notes |
|---|---|---|---|
| ollama | 111009 | 2023-8-27 | Easily get up and running with large language models locally. |

As you can see above, there are many options for models and editor extensions. If you use VS Code or JetBrains and want to get started straight away, you can use the following setup:

- Install LM Studio.

- Install the Continue.dev extension.

- Download one or several models in LM Studio. As of January 2025, Qwen 2.5 Coder is a good choice for autocomplete and Deepseek R1 is a good choice for chat. Depending on your hardware, you'll have to experiment to find which model size and quantization level give you sufficient speed. For example, on a MacBook Pro M2 with 32GB RAM, `Qwen2.5-Coder-7B-Instruct-Q4_K_M` works well for autocomplete and `DeepSeek-R1-Distill-Qwen-14B-Q4_0` works well for chat.

- Go to the Developer tab in LM Studio and start the server.

- Configure the Continue.dev extension by adding your selected models. For example:

      {
        "models": [
          {
            "apiBase": "http://localhost:1234/v1/",
            "title": "Deepseek R1",
            "model": "bartowski/deepseek-r1-distill-qwen-14b",
            "provider": "lmstudio"
          }
        ],
        "tabAutocompleteModel": {
          "provider": "lmstudio",
          "apiBase": "http://localhost:1234/v1/",
          "title": "Qwen 2.5 Coder",
          "model": "qwen2.5-coder-7b-instruct"
        }
      }
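Before pointing an editor at the server, it can help to confirm that it responds. The following is a minimal sketch, assuming the LM Studio server from the steps above is listening on `http://localhost:1234/v1/` and serves the OpenAI-compatible `chat/completions` route. The model identifier is the one from the example configuration and may differ on your machine; `build_chat_request` and `ask` are hypothetical helper names.

```python
import json
import urllib.request

API_BASE = "http://localhost:1234/v1/"  # same apiBase as in the example config


def build_chat_request(prompt: str, model: str,
                       api_base: str = API_BASE) -> urllib.request.Request:
    """Build (but do not send) an OpenAI-style chat completion request."""
    payload = {
        "model": model,
        "messages": [{"role": "user", "content": prompt}],
        "temperature": 0.2,
    }
    return urllib.request.Request(
        url=api_base.rstrip("/") + "/chat/completions",
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def ask(prompt: str, model: str) -> str:
    """Send the request to the local server and return the reply text.

    Raises urllib.error.URLError if the server is not running.
    """
    request = build_chat_request(prompt, model)
    with urllib.request.urlopen(request, timeout=120) as response:
        body = json.load(response)
    return body["choices"][0]["message"]["content"]
```

With the server running and the model loaded, a call such as `ask("Reverse a string in Python.", model="qwen2.5-coder-7b-instruct")` should return the model's reply as plain text. If it raises a connection error, the server is most likely not started or is using a different port.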

Stargazers over time:


Similar Open Source Tools

are-copilots-local-yet

Current trends and state of the art for using open & local LLM models as copilots to complete code, generate projects, act as shell assistants, automatically fix bugs, and more. This document is a curated list of local Copilots, shell assistants, and related projects, intended to be a resource for those interested in a survey of the existing tools and to help developers discover the state of the art for projects like these.

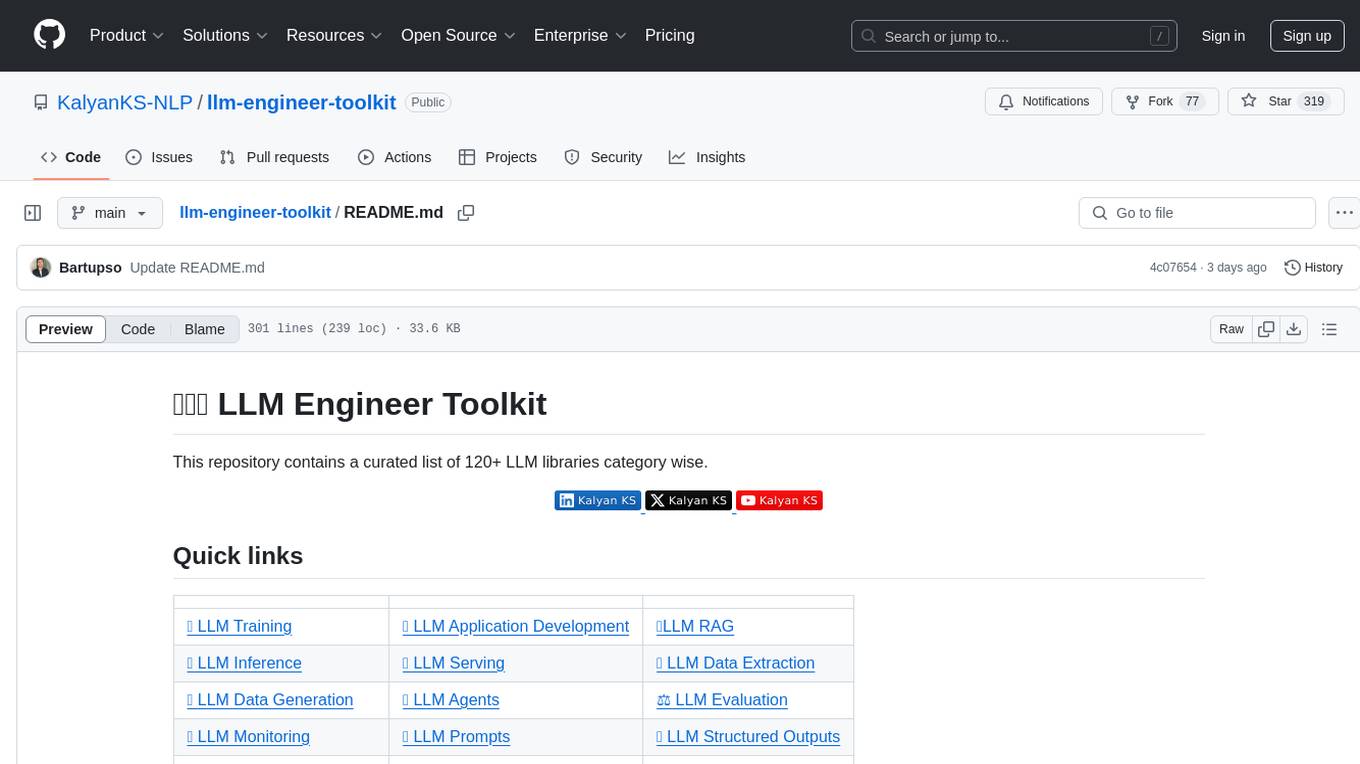

llm-engineer-toolkit

The LLM Engineer Toolkit is a curated repository containing over 120 LLM libraries categorized for various tasks such as training, application development, inference, serving, data extraction, data generation, agents, evaluation, monitoring, prompts, structured outputs, safety, security, embedding models, and other miscellaneous tools. It includes libraries for fine-tuning LLMs, building applications powered by LLMs, serving LLM models, extracting data, generating synthetic data, creating AI agents, evaluating LLM applications, monitoring LLM performance, optimizing prompts, handling structured outputs, ensuring safety and security, embedding models, and more. The toolkit covers a wide range of tools and frameworks to streamline the development, deployment, and optimization of large language models.

Awesome-LLM-Constrained-Decoding

Awesome-LLM-Constrained-Decoding is a curated list of papers, code, and resources related to constrained decoding of Large Language Models (LLMs). The repository aims to facilitate reliable, controllable, and efficient generation with LLMs by providing a comprehensive collection of materials in this domain.

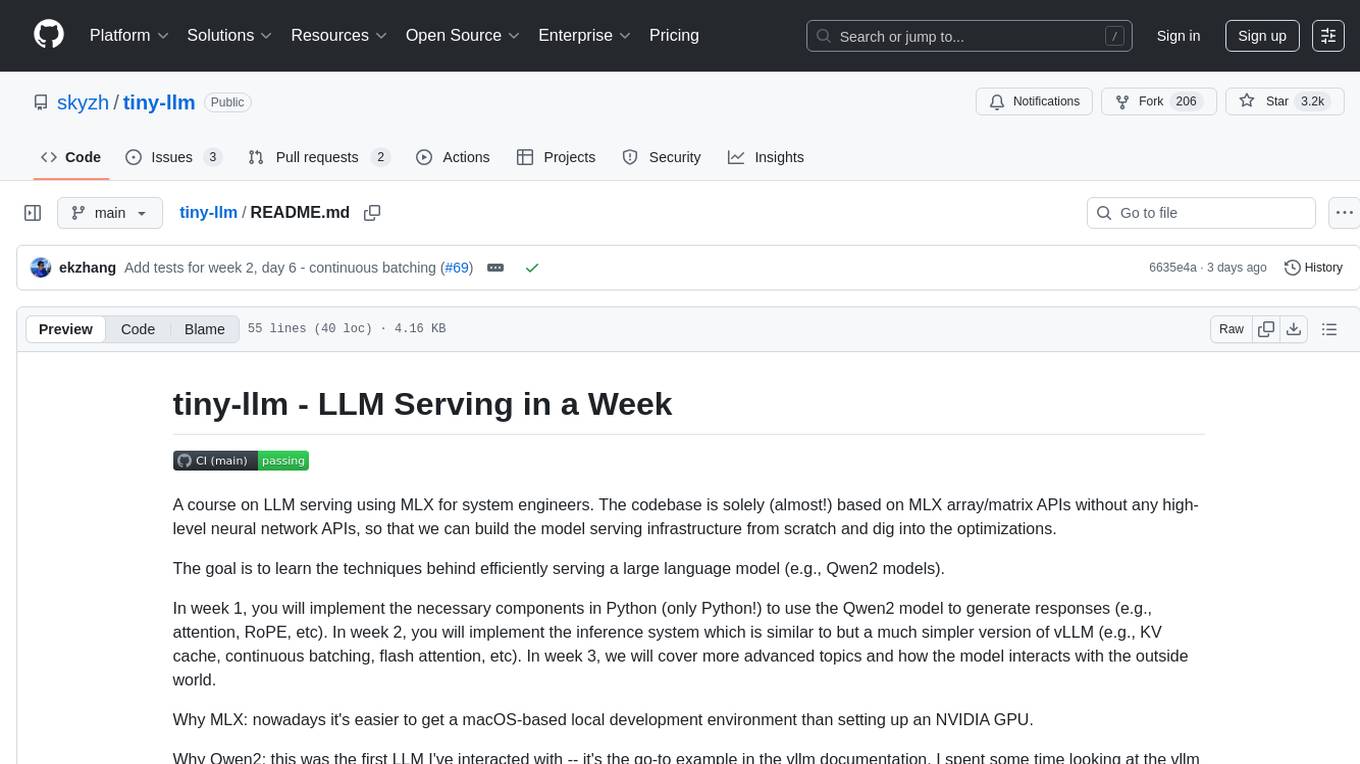

tiny-llm

tiny-llm is a course on LLM serving using MLX for system engineers. The codebase is focused on MLX array/matrix APIs to build model serving infrastructure from scratch and explore optimizations. The goal is to efficiently serve large language models like Qwen2 models. The course covers implementing components in Python, building an inference system similar to vLLM, and advanced topics on model interaction. The tool aims to provide hands-on experience in serving language models without high-level neural network APIs.

CogVLM2

CogVLM2 is a new generation of open source models that offer significant improvements in benchmarks such as TextVQA and DocVQA. It supports 8K context length, image resolution up to 1344 * 1344, and both Chinese and English languages. The project provides basic calling methods, fine-tuning examples, and OpenAI API format calling examples to help developers quickly get started with the model.

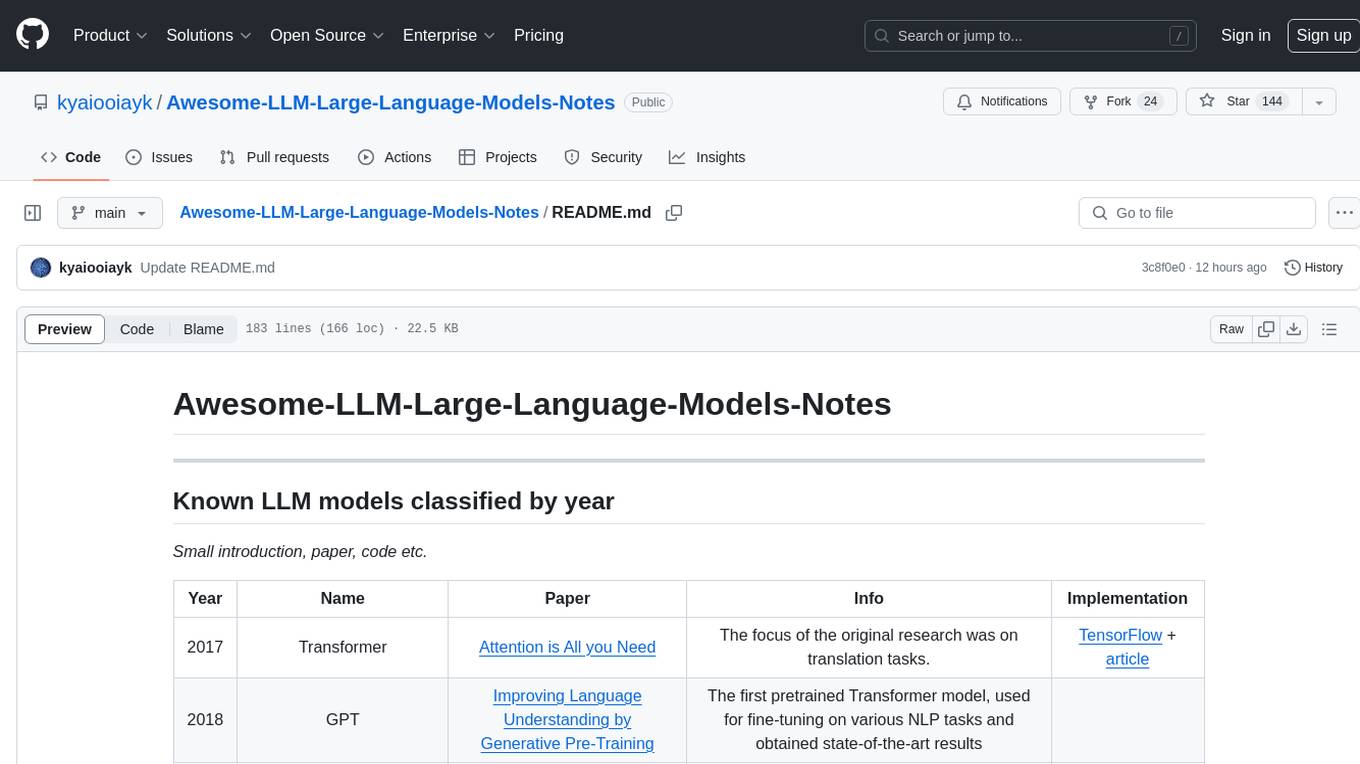

Awesome-LLM-Large-Language-Models-Notes

Awesome-LLM-Large-Language-Models-Notes is a repository that provides a comprehensive collection of information on various Large Language Models (LLMs) classified by year, size, and name. It includes details on known LLM models, their papers, implementations, and specific characteristics. The repository also covers LLM models classified by architecture, must-read papers, blog articles, tutorials, and implementations from scratch. It serves as a valuable resource for individuals interested in understanding and working with LLMs in the field of Natural Language Processing (NLP).

tamingLLMs

The 'Taming LLMs' repository provides a practical guide to the pitfalls and challenges associated with Large Language Models (LLMs) when building applications. It focuses on key limitations and implementation pitfalls, offering practical Python examples and open source solutions to help engineers and technical leaders navigate these challenges. The repository aims to equip readers with the knowledge to harness the power of LLMs while avoiding their inherent limitations.

watsonx-ai-samples

Sample notebooks for IBM Watsonx.ai for IBM Cloud and IBM Watsonx.ai software product. The notebooks demonstrate capabilities such as running experiments on model building using AutoAI or Deep Learning, deploying third-party models as web services or batch jobs, monitoring deployments with OpenScale, managing model lifecycles, inferencing Watsonx.ai foundation models, and integrating LangChain with Watsonx.ai. Notebooks with Python code and the Python SDK can be found in the `python_sdk` folder. The REST API examples are organized in the `rest_api` folder.

Github-Ranking-AI

This repository provides a list of the most starred and forked repositories on GitHub. It is updated automatically and includes information such as the project name, number of stars, number of forks, language, number of open issues, description, and last commit date. The repository is divided into two sections: LLM and ChatGPT. The LLM section includes repositories related to large language models, while the ChatGPT section includes repositories related to the ChatGPT chatbot.

Model-References

The 'Model-References' repository contains examples for training and inference using Intel Gaudi AI Accelerator. It includes models for computer vision, natural language processing, audio, generative models, MLPerf™ training, and MLPerf™ inference. The repository provides performance data and model validation information for various frameworks like PyTorch. Users can find examples of popular models like ResNet, BERT, and Stable Diffusion optimized for Intel Gaudi AI accelerator.

redis-ai-resources

A curated repository of code recipes, demos, and resources for basic and advanced Redis use cases in the AI ecosystem. It includes demos for ArxivChatGuru, Redis VSS, Vertex AI & Redis, Agentic RAG, ArXiv Search, and Product Search. Recipes cover topics like Getting started with RAG, Semantic Cache, Advanced RAG, and Recommendation systems. The repository also provides integrations/tools like RedisVL, AWS Bedrock, LangChain Python, LangChain JS, LlamaIndex, Semantic Kernel, RelevanceAI, and DocArray. Additional content includes blog posts, talks, reviews, and documentation related to Vector Similarity Search, AI-Powered Document Search, Vector Databases, Real-Time Product Recommendations, and more. Benchmarks compare Redis against other Vector Databases and ANN benchmarks. Documentation includes QuickStart guides, official literature for Vector Similarity Search, Redis-py client library docs, Redis Stack documentation, and Redis client list.

BizFinBench

BizFinBench is a benchmark tool designed for evaluating large language models (LLMs) in logic-heavy and precision-critical domains such as finance. It comprises over 100,000 bilingual financial questions rooted in real-world business scenarios. The tool covers five dimensions: numerical calculation, reasoning, information extraction, prediction recognition, and knowledge-based question answering, mapped to nine fine-grained categories. BizFinBench aims to assess the capacity of LLMs in real-world financial scenarios and provides insights into their strengths and limitations.

llm-compression-intelligence

This repository presents the findings of the paper "Compression Represents Intelligence Linearly". The study reveals a strong linear correlation between the intelligence of LLMs, as measured by benchmark scores, and their ability to compress external text corpora. Compression efficiency, derived from raw text corpora, serves as a reliable evaluation metric that is linearly associated with model capabilities. The repository includes the compression corpora used in the paper, code for computing compression efficiency, and data collection and processing pipelines.

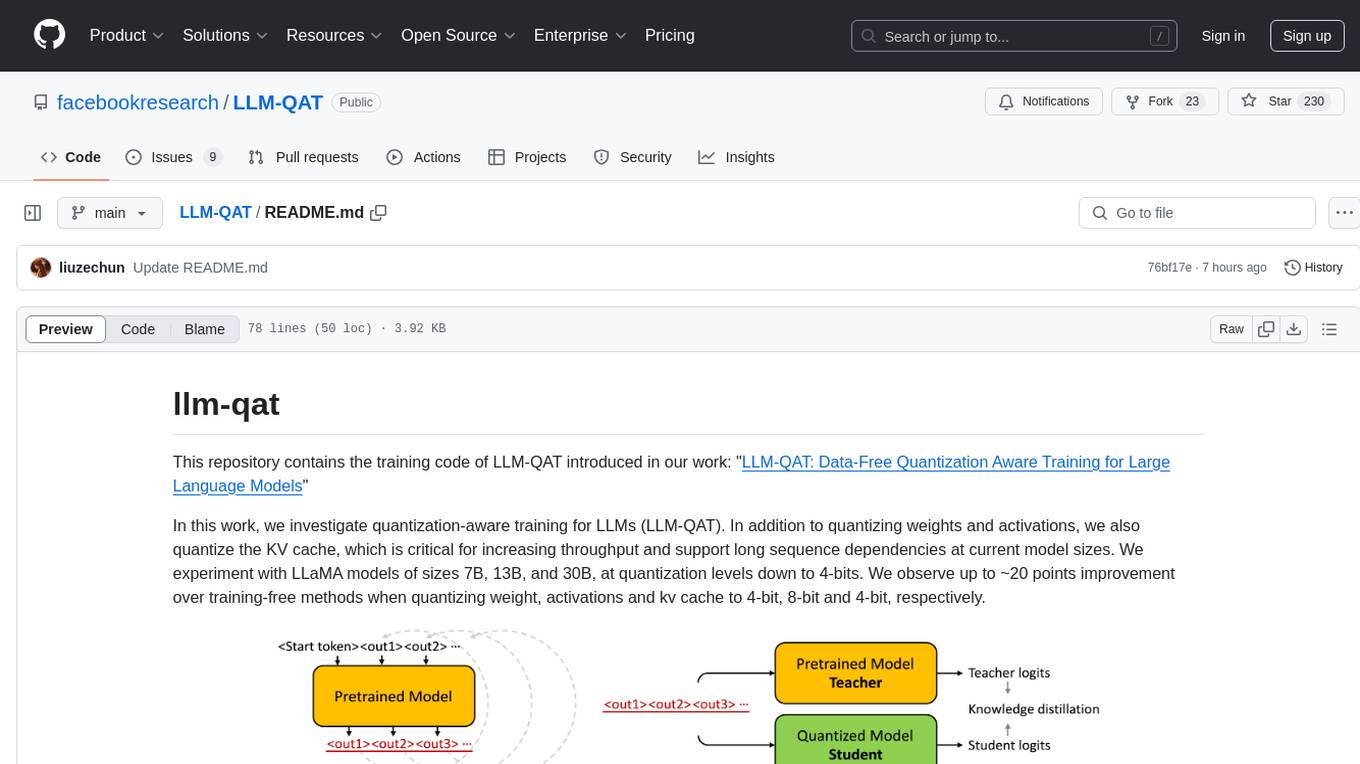

LLM-QAT

This repository contains the training code of LLM-QAT for large language models. The work investigates quantization-aware training for LLMs, including quantizing weights, activations, and the KV cache. Experiments were conducted on LLaMA models of sizes 7B, 13B, and 30B, at quantization levels down to 4 bits. Significant improvements were observed when quantizing weights, activations, and the KV cache to 4-bit, 8-bit, and 4-bit, respectively.

2024-AICS-EXP

This repository contains the complete archive of the 2024 version of the 'Intelligent Computing System' experiment at the University of Chinese Academy of Sciences. The experiment content for 2024 has undergone extensive adjustments to the knowledge system and experimental topics, including the transition from TensorFlow to PyTorch, significant modifications to previous code, and the addition of experiments with large models. The project is continuously updated in line with the course progress, currently up to the seventh experiment. Updates include the addition of experiments like YOLOv5 in Experiment 5-3, updates to theoretical teaching materials, and fixes for bugs in Experiment 6 code. The repository also includes experiment manuals, questions, and answers for various experiments, with some data sets hosted on Baidu Cloud due to size limitations on GitHub.

goodai-ltm-benchmark

This repository contains code and data for replicating experiments on Long-Term Memory (LTM) abilities of conversational agents. It includes a benchmark for testing agents' memory performance over long conversations, evaluating tasks requiring dynamic memory upkeep and information integration. The repository supports various models, datasets, and configurations for benchmarking and reporting results.

For similar tasks

auto-dev-vscode

AutoDev for VSCode is an AI-powered coding wizard with multilingual support, auto code generation, and a bug-slaying assistant. It offers customizable prompts and features like Auto Dev/Testing/Document/Agent. The tool aims to enhance coding productivity and efficiency by providing intelligent assistance and automation capabilities within the Visual Studio Code environment.


LLPhant

LLPhant is a comprehensive PHP Generative AI Framework designed to be simple yet powerful, compatible with Symfony and Laravel. It supports various LLMs like OpenAI, Anthropic, Mistral, Ollama, and services compatible with OpenAI API. The framework enables tasks such as semantic search, chatbots, personalized content creation, text summarization, personal shopper creation, autonomous AI agents, and coding tool assistance. It provides tools for generating text, images, speech-to-text transcription, and customizing system messages for question answering. LLPhant also offers features for embeddings, vector stores, document stores, and question answering with various query transformations and reranking techniques.

awesome-code-ai

A curated list of AI coding tools, including code completion, refactoring, and assistants. This list includes both open-source and commercial tools, as well as tools that are still in development. Some of the most popular AI coding tools include GitHub Copilot, CodiumAI, Codeium, Tabnine, and Replit Ghostwriter.

companion-vscode

Quack Companion is a VSCode extension that provides smart linting, code chat, and coding guideline curation for developers. It aims to enhance the coding experience by offering a new tab with features like curating software insights with the team, code chat similar to ChatGPT, smart linting, and upcoming code completion. The extension focuses on creating a smooth contribution experience for developers by turning contribution guidelines into a live pair coding experience, helping developers find starter contribution opportunities, and ensuring alignment between contribution goals and project priorities. Quack collects limited telemetry data to improve its services and products for developers, with options for anonymization and disabling telemetry available to users.

CodeGeeX4

CodeGeeX4-ALL-9B is an open-source multilingual code generation model based on GLM-4-9B, offering enhanced code generation capabilities. It supports functions like code completion, code interpreter, web search, function call, and repository-level code Q&A. The model has competitive performance on benchmarks like BigCodeBench and NaturalCodeBench, outperforming larger models in terms of speed and performance.

probsem

ProbSem is a repository that provides a framework to leverage large language models (LLMs) for assigning context-conditional probability distributions over queried strings. It supports OpenAI engines and HuggingFace CausalLM models, and is flexible for research applications in linguistics, cognitive science, program synthesis, and NLP. Users can define prompts, contexts, and queries to derive probability distributions over possible completions, enabling tasks like cloze completion, multiple-choice QA, semantic parsing, and code completion. The repository offers CLI and API interfaces for evaluation, with options to customize models, normalize scores, and adjust temperature for probability distributions.

chatgpt

The ChatGPT R package provides a set of features to assist in R coding. It includes addins like Ask ChatGPT, Comment selected code, Complete selected code, Create unit tests, Create variable name, Document code, Explain selected code, Find issues in the selected code, Optimize selected code, and Refactor selected code. Users can interact with ChatGPT to get code suggestions, explanations, and optimizations. The package helps in improving coding efficiency and quality by providing AI-powered assistance within the RStudio environment.

For similar jobs

sweep

Sweep is an AI junior developer that turns bugs and feature requests into code changes. It automatically handles developer experience improvements like adding type hints and improving test coverage.

teams-ai

The Teams AI Library is a software development kit (SDK) that helps developers create bots that can interact with Teams and Microsoft 365 applications. It is built on top of the Bot Framework SDK and simplifies the process of developing bots that interact with Teams' artificial intelligence capabilities. The SDK is available for JavaScript/TypeScript, .NET, and Python.

ai-guide

This guide is dedicated to Large Language Models (LLMs) that you can run on your home computer. It assumes your PC is a lower-end, non-gaming setup.

classifai

Supercharge WordPress Content Workflows and Engagement with Artificial Intelligence. Tap into leading cloud-based services like OpenAI, Microsoft Azure AI, Google Gemini and IBM Watson to augment your WordPress-powered websites. Publish content faster while improving SEO performance and increasing audience engagement. ClassifAI integrates Artificial Intelligence and Machine Learning technologies to lighten your workload and eliminate tedious tasks, giving you more time to create original content that matters.

chatbot-ui

Chatbot UI is an open-source AI chat app that allows users to create and deploy their own AI chatbots. It is easy to use and can be customized to fit any need. Chatbot UI is perfect for businesses, developers, and anyone who wants to create a chatbot.

BricksLLM

BricksLLM is a cloud native AI gateway written in Go. Currently, it provides native support for OpenAI, Anthropic, Azure OpenAI and vLLM. BricksLLM aims to provide enterprise level infrastructure that can power any LLM production use cases. Here are some use cases for BricksLLM:

- Set LLM usage limits for users on different pricing tiers

- Track LLM usage on a per user and per organization basis

- Block or redact requests containing PIIs

- Improve LLM reliability with failovers, retries and caching

- Distribute API keys with rate limits and cost limits for internal development/production use cases

- Distribute API keys with rate limits and cost limits for students

uAgents

uAgents is a Python library developed by Fetch.ai that allows for the creation of autonomous AI agents. These agents can perform various tasks on a schedule or take action on various events. uAgents are easy to create and manage, and they are connected to a fast-growing network of other uAgents. They are also secure, with cryptographically secured messages and wallets.

griptape

Griptape is a modular Python framework for building AI-powered applications that securely connect to your enterprise data and APIs. It offers developers the ability to maintain control and flexibility at every step. Griptape's core components include Structures (Agents, Pipelines, and Workflows), Tasks, Tools, Memory (Conversation Memory, Task Memory, and Meta Memory), Drivers (Prompt and Embedding Drivers, Vector Store Drivers, Image Generation Drivers, Image Query Drivers, SQL Drivers, Web Scraper Drivers, and Conversation Memory Drivers), Engines (Query Engines, Extraction Engines, Summary Engines, Image Generation Engines, and Image Query Engines), and additional components (Rulesets, Loaders, Artifacts, Chunkers, and Tokenizers). Griptape enables developers to create AI-powered applications with ease and efficiency.