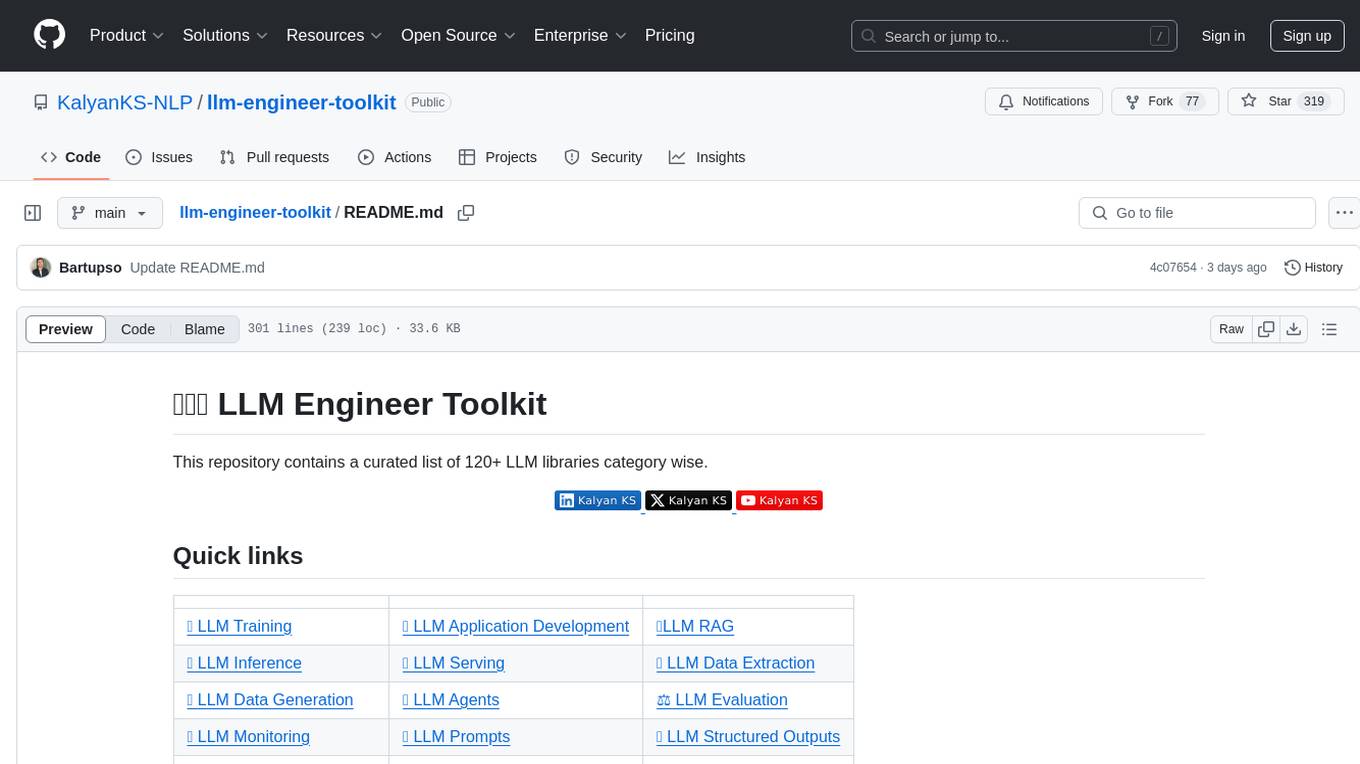

llm-engineer-toolkit

A curated list of 120+ LLM libraries, organized by category.

Stars: 6991

The LLM Engineer Toolkit is a curated repository of more than 120 LLM libraries, grouped by task: training and fine-tuning, application development, RAG, inference, serving, data extraction, synthetic data generation, agents, evaluation, monitoring, prompts, structured outputs, safety and security, embedding models, and miscellaneous tools. It covers tools and frameworks that streamline the development, deployment, and optimization of large language models.

README:

This repository contains a curated list of 120+ LLM libraries, organized by category.

Join the free 🚀 AIxFunda newsletter to get the latest updates and tutorials on Generative AI, LLMs, Agents, and RAG.

- ✨ Weekly GenAI updates

- 📄 Weekly LLM, Agents and RAG paper updates

- 📝 1 fresh blog post on an interesting topic every week

- 🚀 RAG Zero to Hero Guide: a comprehensive guide to learning RAG, from basics to advanced.

Training and Fine-Tuning

| Library | Description | Link |
|---|---|---|
| unsloth | Fine-tune LLMs faster with less memory. | Link |
| PEFT | State-of-the-art Parameter-Efficient Fine-Tuning library. | Link |
| TRL | Train transformer language models with reinforcement learning. | Link |
| Transformers | Transformers provides thousands of pretrained models to perform tasks on different modalities such as text, vision, and audio. | Link |
| Axolotl | Tool designed to streamline post-training for various AI models. | Link |
| LLMBox | A comprehensive library for implementing LLMs, including a unified training pipeline and comprehensive model evaluation. | Link |
| LitGPT | Train and fine-tune LLMs lightning fast. | Link |
| Mergoo | A library for easily merging multiple LLM experts and efficiently training the merged LLM. | Link |
| Llama-Factory | Easy and efficient LLM fine-tuning. | Link |
| Ludwig | Low-code framework for building custom LLMs, neural networks, and other AI models. | Link |
| Txtinstruct | A framework for training instruction-tuned models. | Link |
| Lamini | An integrated LLM inference and tuning platform. | Link |
| XTuring | xTuring provides fast, efficient and simple fine-tuning of open-source LLMs, such as Mistral, LLaMA, GPT-J, and more. | Link |
| RL4LMs | A modular RL library to fine-tune language models to human preferences. | Link |
| DeepSpeed | DeepSpeed is a deep learning optimization library that makes distributed training and inference easy, efficient, and effective. | Link |
| torchtune | A PyTorch-native library specifically designed for fine-tuning LLMs. | Link |
| PyTorch Lightning | A library that offers a high-level interface for pretraining and fine-tuning LLMs. | Link |

Frameworks

| Library | Description | Link |
|---|---|---|
| LangChain | LangChain is a framework for developing applications powered by large language models (LLMs). | Link |
| LlamaIndex | LlamaIndex is a data framework for your LLM applications. | Link |
| Haystack | Haystack is an end-to-end LLM framework that allows you to build applications powered by LLMs, Transformer models, vector search and more. | Link |
| Prompt flow | A suite of development tools designed to streamline the end-to-end development cycle of LLM-based AI applications. | Link |
| Griptape | A modular Python framework for building AI-powered applications. | Link |
| Weave | Weave is a toolkit for developing Generative AI applications. | Link |
| Llama Stack | Build Llama Apps. | Link |

Data Preparation

| Library | Description | Link |
|---|---|---|
| Data Prep Kit | Data Prep Kit accelerates unstructured data preparation for LLM app developers. Developers can use Data Prep Kit to cleanse, transform, and enrich use case-specific unstructured data to pre-train LLMs, fine-tune LLMs, instruct-tune LLMs, or build RAG applications. | Link |

Multi API Access

| Library | Description | Link |
|---|---|---|
| LiteLLM | Library to call 100+ LLM APIs in OpenAI format. | Link |
| AI Gateway | A Blazing Fast AI Gateway with integrated Guardrails. Route to 200+ LLMs, 50+ AI Guardrails with 1 fast & friendly API. | Link |

Routers

| Library | Description | Link |
|---|---|---|
| RouteLLM | Framework for serving and evaluating LLM routers - save LLM costs without compromising quality. Drop-in replacement for OpenAI's client to route simpler queries to cheaper models. | Link |
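
The routing idea described above (send simpler queries to a cheaper model) can be illustrated with a toy heuristic. This is only a sketch under stated assumptions: the model names `cheap-model` and `strong-model`, the keyword list, and the word-count threshold are all hypothetical, and this is not RouteLLM's API, which the list describes as using learned routers rather than hand-written rules.

```python
from dataclasses import dataclass


@dataclass
class Route:
    model: str   # hypothetical model tier name
    reason: str  # why the router picked this tier


# Hypothetical keywords that hint at a reasoning-heavy request.
HARD_MARKERS = ("prove", "derive", "step by step", "refactor")


def route_query(query: str, word_threshold: int = 20) -> Route:
    """Pick a model tier with a crude keyword and length heuristic."""
    text = query.lower()
    if any(marker in text for marker in HARD_MARKERS):
        return Route("strong-model", "contains a reasoning-heavy keyword")
    if len(text.split()) > word_threshold:
        return Route("strong-model", "long query")
    return Route("cheap-model", "short, simple query")
```

With these assumptions, `route_query("What is the capital of France?")` selects the cheap tier, while a query containing "prove" selects the strong one. A production router would replace the heuristic with a trained classifier and measure the quality lost per dollar saved.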

Memory

| Library | Description | Link |
|---|---|---|
| mem0 | The Memory layer for your AI apps. | Link |
| Memoripy | An AI memory layer with short- and long-term storage, semantic clustering, and optional memory decay for context-aware applications. | Link |
| Letta (MemGPT) | An open-source framework for building stateful LLM applications with advanced reasoning capabilities and transparent long-term memory | Link |
| Memobase | A user profile-based memory system designed to bring long-term user memory to your Generative AI applications. | Link |

Interface

| Library | Description | Link |
|---|---|---|
| Streamlit | A faster way to build and share data apps. Streamlit lets you transform Python scripts into interactive web apps in minutes | Link |
| Gradio | Build and share delightful machine learning apps, all in Python. | Link |
| AI SDK UI | Build chat and generative user interfaces. | Link |
| AI-Gradio | Create AI apps powered by various AI providers. | Link |
| Simpleaichat | Python package for easily interfacing with chat apps, with robust features and minimal code complexity. | Link |
| Chainlit | Build production-ready Conversational AI applications in minutes. | Link |

Low Code

| Library | Description | Link |
|---|---|---|
| LangFlow | LangFlow is a low-code app builder for RAG and multi-agent AI applications. It’s Python-based and agnostic to any model, API, or database. | Link |

Cache

| Library | Description | Link |
|---|---|---|
| GPTCache | A Library for Creating Semantic Cache for LLM Queries. Slash Your LLM API Costs by 10x 💰, Boost Speed by 100x. Fully integrated with LangChain and LlamaIndex. | Link |
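
To make the "semantic cache" idea concrete, here is a minimal sketch that returns a stored response when a new query is similar enough to an earlier one. It is not GPTCache's API. The `embed` function is a toy word-count stand-in for a real embedding model, and the class name, method names, and 0.8 threshold are assumptions chosen for illustration.

```python
import math
import re
from collections import Counter
from typing import Optional


def embed(text: str) -> Counter:
    """Toy stand-in for an embedding model: lowercase word counts."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse count vectors."""
    dot = sum(a[t] * b[t] for t in a.keys() & b.keys())
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


class SemanticCache:
    """Hypothetical cache keyed by query similarity instead of exact text."""

    def __init__(self, threshold: float = 0.8):
        self.threshold = threshold
        self.entries = []  # list of (embedding, response) pairs

    def put(self, query: str, response: str) -> None:
        self.entries.append((embed(query), response))

    def get(self, query: str) -> Optional[str]:
        """Return the best cached response at or above the threshold, else None."""
        q = embed(query)
        best_score, best_response = 0.0, None
        for emb, response in self.entries:
            score = cosine(q, emb)
            if score > best_score:
                best_score, best_response = score, response
        return best_response if best_score >= self.threshold else None
```

A caller would check `cache.get(query)` before calling the LLM and call `cache.put(query, answer)` afterwards. The linear scan keeps the sketch short; a real cache would use a vector index and a learned embedding, and would need an eviction policy.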

RAG

| Library | Description | Link |
|---|---|---|
| FastGraph RAG | Streamlined and promptable Fast GraphRAG framework designed for interpretable, high-precision, agent-driven retrieval workflows. | Link |
| Chonkie | RAG chunking library that is lightweight, lightning-fast, and easy to use. | Link |
| RAGChecker | A Fine-grained Framework For Diagnosing RAG. | Link |
| RAG to Riches | Build, scale, and deploy state-of-the-art Retrieval-Augmented Generation applications. | Link |
| BeyondLLM | Beyond LLM offers an all-in-one toolkit for experimentation, evaluation, and deployment of Retrieval-Augmented Generation (RAG) systems. | Link |
| SQLite-Vec | A vector search SQLite extension that runs anywhere! | Link |
| fastRAG | fastRAG is a research framework for efficient and optimized retrieval-augmented generative pipelines, incorporating state-of-the-art LLMs and Information Retrieval. | Link |
| FlashRAG | A Python Toolkit for Efficient RAG Research. | Link |
| Llmware | Unified framework for building enterprise RAG pipelines with small, specialized models. | Link |
| Rerankers | A lightweight unified API for various reranking models. | Link |
| Vectara | Build Agentic RAG applications. | Link |
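
Chunking, which the table above mentions for Chonkie, means splitting a document into pieces small enough to embed and retrieve. The following is a minimal sketch of fixed-size word chunking with overlap. It does not use or imitate any listed library's API, and the function name and default sizes are assumptions.

```python
def chunk_words(text: str, chunk_size: int = 50, overlap: int = 10) -> list[str]:
    """Split text into chunks of at most chunk_size words.

    Consecutive chunks share `overlap` words so that a sentence cut at a
    boundary still appears intact in one of the two neighbouring chunks.
    """
    if chunk_size <= 0 or not 0 <= overlap < chunk_size:
        raise ValueError("require chunk_size > 0 and 0 <= overlap < chunk_size")
    words = text.split()
    step = chunk_size - overlap
    chunks = []
    for start in range(0, len(words), step):
        chunks.append(" ".join(words[start:start + chunk_size]))
        if start + chunk_size >= len(words):
            break  # the last chunk already reaches the end of the text
    return chunks
```

Word counts are only a rough proxy for model tokens. Dedicated chunkers typically split on tokens, sentences, or semantic boundaries instead.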

Inference

| Library | Description | Link |
|---|---|---|
| LLM Compressor | Transformers-compatible library for applying various compression algorithms to LLMs for optimized deployment. | Link |
| LightLLM | Python-based LLM inference and serving framework, notable for its lightweight design, easy scalability, and high-speed performance. | Link |
| vLLM | High-throughput and memory-efficient inference and serving engine for LLMs. | Link |
| torchchat | Run PyTorch LLMs locally on servers, desktop, and mobile. | Link |
| TensorRT-LLM | TensorRT-LLM is a library for optimizing Large Language Model (LLM) inference. | Link |
| WebLLM | High-performance In-browser LLM Inference Engine. | Link |

Serving

| Library | Description | Link |
|---|---|---|
| Langcorn | Serving LangChain LLM apps and agents automagically with FastAPI. | Link |
| LitServe | Lightning-fast serving engine for any AI model of any size. It augments FastAPI with features like batching, streaming, and GPU autoscaling. | Link |

Data Extraction

| Library | Description | Link |
|---|---|---|
| Crawl4AI | Open-source LLM Friendly Web Crawler & Scraper. | Link |
| ScrapeGraphAI | A web scraping Python library that uses LLM and direct graph logic to create scraping pipelines for websites and local documents (XML, HTML, JSON, Markdown, etc.). | Link |
| Docling | Docling parses documents and exports them to the desired format with ease and speed. | Link |
| Llama Parse | GenAI-native document parser that can parse complex document data for any downstream LLM use case (RAG, agents). | Link |
| PyMuPDF4LLM | PyMuPDF4LLM library makes it easier to extract PDF content in the format you need for LLM & RAG environments. | Link |
| Crawlee | A web scraping and browser automation library. | Link |
| MegaParse | Parser for every type of document. | Link |
| ExtractThinker | Document Intelligence library for LLMs. | Link |

Data Generation

| Library | Description | Link |
|---|---|---|
| DataDreamer | DataDreamer is a powerful open-source Python library for prompting, synthetic data generation, and training workflows. | Link |
| fabricator | A flexible open-source framework to generate datasets with large language models. | Link |
| Promptwright | Synthetic Dataset Generation Library. | Link |
| EasyInstruct | An Easy-to-use Instruction Processing Framework for Large Language Models. | Link |

Agents

| Library | Description | Link |
|---|---|---|
| CrewAI | Framework for orchestrating role-playing, autonomous AI agents. | Link |
| LangGraph | Build resilient language agents as graphs. | Link |
| Agno | Build AI Agents with memory, knowledge, tools, and reasoning. Chat with them using a beautiful Agent UI. | Link |
| Agents SDK | Build agentic apps using LLMs with context, tools, hand off to other specialized agents. | Link |
| AutoGen | An open-source framework for building AI agent systems. | Link |
| Smolagents | Library to build powerful agents in a few lines of code. | Link |
| Pydantic AI | Python agent framework to build production grade applications with Generative AI. | Link |
| CAMEL | Open-source multi-agent framework with various toolkits and use-cases available. | Link |
| BeeAI | Build production-ready multi-agent systems in Python. | Link |
| gradio-tools | A Python library for converting Gradio apps into tools that can be leveraged by an LLM-based agent to complete its task. | Link |
| Composio | Production Ready Toolset for AI Agents. | Link |
| Atomic Agents | Building AI agents, atomically. | Link |
| Memary | Open Source Memory Layer For Autonomous Agents. | Link |
| Browser Use | Make websites accessible for AI agents. | Link |
| OpenWebAgent | An Open Toolkit to Enable Web Agents on Large Language Models. | Link |
| Lagent | A lightweight framework for building LLM-based agents. | Link |
| LazyLLM | A Low-code Development Tool For Building Multi-agent LLMs Applications. | Link |
| Swarms | The Enterprise-Grade Production-Ready Multi-Agent Orchestration Framework. | Link |
| ChatArena | ChatArena is a library that provides multi-agent language game environments and facilitates research about autonomous LLM agents and their social interactions. | Link |
| Swarm | Educational framework exploring ergonomic, lightweight multi-agent orchestration. | Link |
| AgentStack | The fastest way to build robust AI agents. | Link |
| Archgw | Intelligent gateway for Agents. | Link |
| Flow | A lightweight task engine for building AI agents. | Link |
| AgentOps | Python SDK for AI agent monitoring. | Link |
| Langroid | Multi-Agent framework. | Link |
| Agentarium | Framework for creating and managing simulations populated with AI-powered agents. | Link |
| Upsonic | Reliable AI agent framework that supports MCP. | Link |

Evaluation

| Library | Description | Link |
|---|---|---|
| Ragas | Ragas is your ultimate toolkit for evaluating and optimizing Large Language Model (LLM) applications. | Link |
| Giskard | Open-Source Evaluation & Testing for ML & LLM systems. | Link |
| DeepEval | LLM Evaluation Framework | Link |
| Lighteval | All-in-one toolkit for evaluating LLMs. | Link |
| Trulens | Evaluation and Tracking for LLM Experiments | Link |
| PromptBench | A unified evaluation framework for large language models. | Link |
| LangTest | Deliver Safe & Effective Language Models. 60+ Test Types for Comparing LLM & NLP Models on Accuracy, Bias, Fairness, Robustness & More. | Link |
| EvalPlus | A rigorous evaluation framework for LLM4Code. | Link |
| FastChat | An open platform for training, serving, and evaluating large language model-based chatbots. | Link |
| judges | A small library of LLM judges. | Link |
| Evals | Evals is a framework for evaluating LLMs and LLM systems, and an open-source registry of benchmarks. | Link |
| AgentEvals | Evaluators and utilities for evaluating the performance of your agents. | Link |
| LLMBox | A comprehensive library for implementing LLMs, including a unified training pipeline and comprehensive model evaluation. | Link |
| Opik | An open-source end-to-end LLM Development Platform which also includes LLM evaluation. | Link |
| PydanticAI Evals | A powerful evaluation framework designed to help you systematically evaluate the performance of LLM applications. | Link |
| UQLM | A Python package for generation-time, zero-resource LLM hallucination detection using state-of-the-art uncertainty quantification techniques. | Link |

Monitoring

| Library | Description | Link |
|---|---|---|
| MLflow | An open-source end-to-end MLOps/LLMOps Platform for tracking, evaluating, and monitoring LLM applications. | Link |
| Opik | An open-source end-to-end LLM Development Platform which also includes LLM monitoring. | Link |
| LangSmith | Provides tools for logging, monitoring, and improving your LLM applications. | Link |
| Weights & Biases (W&B) | W&B provides features for tracking LLM performance. | Link |
| Helicone | Open source LLM-Observability Platform for Developers. One-line integration for monitoring, metrics, evals, agent tracing, prompt management, playground, etc. | Link |
| Evidently | An open-source ML and LLM observability framework. | Link |
| Phoenix | An open-source AI observability platform designed for experimentation, evaluation, and troubleshooting. | Link |
| Observers | A Lightweight Library for AI Observability. | Link |

Prompts

| Library | Description | Link |
|---|---|---|
| PCToolkit | A Unified Plug-and-Play Prompt Compression Toolkit of Large Language Models. | Link |
| Selective Context | Selective Context compresses your prompt and context to allow LLMs (such as ChatGPT) to process 2x more content. | Link |
| LLMLingua | Library for compressing prompts to accelerate LLM inference. | Link |
| betterprompt | Test suite for LLM prompts before pushing them to production. | Link |
| Promptify | Solve NLP Problems with LLMs & easily generate different NLP Task prompts for popular generative models like GPT, PaLM, and more with Promptify. | Link |
| PromptSource | PromptSource is a toolkit for creating, sharing, and using natural language prompts. | Link |
| DSPy | DSPy is the open-source framework for programming, rather than prompting, language models. | Link |
| Py-priompt | Prompt design library. | Link |
| Promptimizer | Prompt optimization library. | Link |

Structured Outputs

| Library | Description | Link |
|---|---|---|
| Instructor | Python library for working with structured outputs from large language models (LLMs). Built on top of Pydantic, it provides a simple, transparent, and user-friendly API. | Link |
| XGrammar | An open-source library for efficient, flexible, and portable structured generation. | Link |
| Outlines | Robust (structured) text generation | Link |
| Guidance | Guidance is an efficient programming paradigm for steering language models. | Link |
| LMQL | A language for constraint-guided and efficient LLM programming. | Link |
| Jsonformer | A Bulletproof Way to Generate Structured JSON from Language Models. | Link |
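
The libraries above either constrain generation or validate what the model returns. The validation side can be sketched with the standard library alone: parse the model's raw text as JSON and check it against a schema. This is an illustrative assumption, not the API of Instructor or any other listed library; the `Person` schema and `parse_structured` helper are hypothetical.

```python
import json
from dataclasses import dataclass, fields


@dataclass
class Person:
    """Hypothetical target schema for the model's answer."""
    name: str
    age: int


def parse_structured(raw: str, schema=Person):
    """Parse raw model text as JSON and check it against the dataclass fields.

    Raises ValueError (json.JSONDecodeError is a subclass) on malformed JSON,
    missing or unexpected keys, or a value of the wrong type.
    """
    data = json.loads(raw)
    if not isinstance(data, dict):
        raise ValueError("expected a JSON object")
    expected = {f.name: f.type for f in fields(schema)}
    missing = expected.keys() - data.keys()
    extra = data.keys() - expected.keys()
    if missing or extra:
        raise ValueError(f"missing={sorted(missing)}, unexpected={sorted(extra)}")
    for name, typ in expected.items():
        if not isinstance(data[name], typ):
            raise ValueError(f"field {name!r} should be {typ.__name__}")
    return schema(**data)
```

In an application, a `ValueError` would usually trigger a retry that feeds the error message back to the model. The type check here only handles plain classes such as `str` and `int`; nested or generic types are where a validation library such as Pydantic earns its place.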

Safety and Security

| Library | Description | Link |
|---|---|---|
| JailbreakEval | A collection of automated evaluators for assessing jailbreak attempts. | Link |
| EasyJailbreak | An easy-to-use Python framework to generate adversarial jailbreak prompts. | Link |
| Guardrails | Adding guardrails to large language models. | Link |
| LLM Guard | The Security Toolkit for LLM Interactions. | Link |
| AuditNLG | AuditNLG is an open-source library that can help reduce the risks associated with using generative AI systems for language. | Link |
| NeMo Guardrails | NeMo Guardrails is an open-source toolkit for easily adding programmable guardrails to LLM-based conversational systems. | Link |
| Garak | LLM vulnerability scanner | Link |
| DeepTeam | The LLM Red Teaming Framework | Link |

Embedding Models

| Library | Description | Link |
|---|---|---|
| Sentence-Transformers | State-of-the-Art Text Embeddings | Link |
| Model2Vec | Fast State-of-the-Art Static Embeddings | Link |
| Text Embedding Inference | A blazing fast inference solution for text embeddings models. TEI enables high-performance extraction for the most popular models, including FlagEmbedding, Ember, GTE and E5. | Link |

Others

| Library | Description | Link |
|---|---|---|
| Text Machina | A modular and extensible Python framework, designed to aid in the creation of high-quality, unbiased datasets to build robust models for MGT-related tasks such as detection, attribution, and boundary detection. | Link |
| LLM Reasoners | A library for advanced large language model reasoning. | Link |
| EasyEdit | An Easy-to-use Knowledge Editing Framework for Large Language Models. | Link |
| CodeTF | CodeTF: One-stop Transformer Library for State-of-the-art Code LLM. | Link |
| spacy-llm | This package integrates Large Language Models (LLMs) into spaCy, featuring a modular system for fast prototyping and prompting, and turning unstructured responses into robust outputs for various NLP tasks. | Link |
| pandas-ai | Chat with your database (SQL, CSV, pandas, polars, MongoDB, NoSQL, etc.). | Link |
| LLM Transparency Tool | An open-source interactive toolkit for analyzing internal workings of Transformer-based language models. | Link |
| Vanna | Chat with your SQL database. Accurate Text-to-SQL Generation via LLMs using RAG. | Link |
| mergekit | Tools for merging pretrained large language models. | Link |
| MarkLLM | An Open-Source Toolkit for LLM Watermarking. | Link |
| LLMSanitize | An open-source library for contamination detection in NLP datasets and Large Language Models (LLMs). | Link |
| Annotateai | Automatically annotate papers using LLMs. | Link |
| LLM Reasoner | Make any LLM think like OpenAI o1 and DeepSeek R1. | Link |

Please consider giving this repository a star if you find it useful.

Alternative AI tools for llm-engineer-toolkit

Similar Open Source Tools

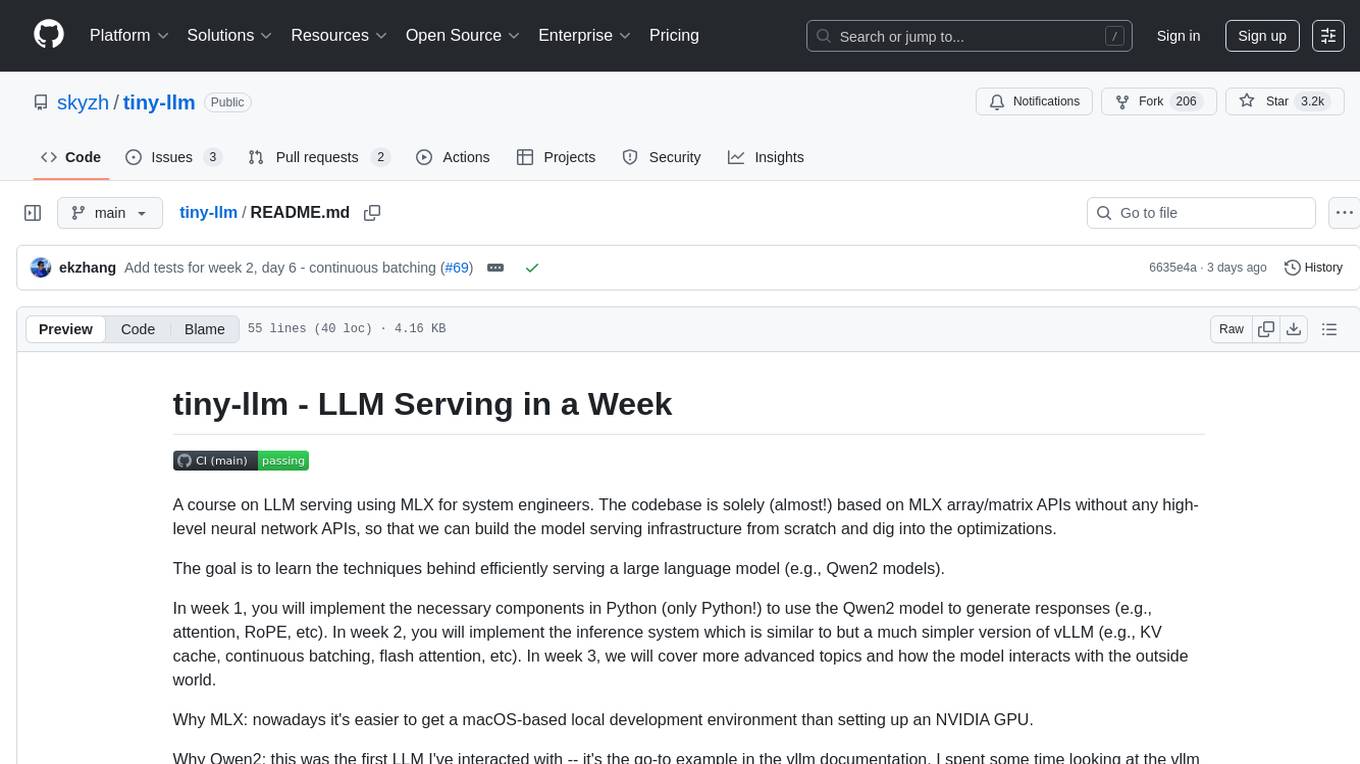

tiny-llm

tiny-llm is a course on LLM serving using MLX for system engineers. The codebase is focused on MLX array/matrix APIs to build model serving infrastructure from scratch and explore optimizations. The goal is to efficiently serve large language models such as Qwen2. The course covers implementing components in Python, building an inference system similar to vLLM, and advanced topics on model interaction. It aims to provide hands-on experience in serving language models without high-level neural network APIs.

watsonx-ai-samples

Sample notebooks for IBM Watsonx.ai for IBM Cloud and IBM Watsonx.ai software product. The notebooks demonstrate capabilities such as running experiments on model building using AutoAI or Deep Learning, deploying third-party models as web services or batch jobs, monitoring deployments with OpenScale, managing model lifecycles, inferencing Watsonx.ai foundation models, and integrating LangChain with Watsonx.ai. Notebooks with Python code and the Python SDK can be found in the `python_sdk` folder. The REST API examples are organized in the `rest_api` folder.

are-copilots-local-yet

Current trends and state of the art for using open & local LLM models as copilots to complete code, generate projects, act as shell assistants, automatically fix bugs, and more. This document is a curated list of local Copilots, shell assistants, and related projects, intended to be a resource for those interested in a survey of the existing tools and to help developers discover the state of the art for projects like these.

redis-ai-resources

A curated repository of code recipes, demos, and resources for basic and advanced Redis use cases in the AI ecosystem. It includes demos for ArxivChatGuru, Redis VSS, Vertex AI & Redis, Agentic RAG, ArXiv Search, and Product Search. Recipes cover topics like Getting started with RAG, Semantic Cache, Advanced RAG, and Recommendation systems. The repository also provides integrations/tools like RedisVL, AWS Bedrock, LangChain Python, LangChain JS, LlamaIndex, Semantic Kernel, RelevanceAI, and DocArray. Additional content includes blog posts, talks, reviews, and documentation related to Vector Similarity Search, AI-Powered Document Search, Vector Databases, Real-Time Product Recommendations, and more. Benchmarks compare Redis against other Vector Databases and ANN benchmarks. Documentation includes QuickStart guides, official literature for Vector Similarity Search, Redis-py client library docs, Redis Stack documentation, and Redis client list.

Model-References

The 'Model-References' repository contains examples for training and inference using Intel Gaudi AI Accelerator. It includes models for computer vision, natural language processing, audio, generative models, MLPerf™ training, and MLPerf™ inference. The repository provides performance data and model validation information for various frameworks like PyTorch. Users can find examples of popular models like ResNet, BERT, and Stable Diffusion optimized for Intel Gaudi AI accelerator.

tamingLLMs

The 'Taming LLMs' repository provides a practical guide to the pitfalls and challenges associated with Large Language Models (LLMs) when building applications. It focuses on key limitations and implementation pitfalls, offering practical Python examples and open source solutions to help engineers and technical leaders navigate these challenges. The repository aims to equip readers with the knowledge to harness the power of LLMs while avoiding their inherent limitations.

edge-ai-libraries

The Edge AI Libraries project is a collection of libraries, microservices, and tools for Edge application development. It includes sample applications showcasing generic AI use cases. Key components include Anomalib, Dataset Management Framework, Deep Learning Streamer, ECAT EnableKit, EtherCAT Masterstack, FLANN, OpenVINO toolkit, Audio Analyzer, ORB Extractor, PCL, PLCopen Servo, Real-time Data Agent, RTmotion, Audio Intelligence, Deep Learning Streamer Pipeline Server, Document Ingestion, Model Registry, Multimodal Embedding Serving, Time Series Analytics, Vector Retriever, Visual-Data Preparation, VLM Inference Serving, Intel Geti, Intel SceneScape, Visual Pipeline and Platform Evaluation Tool, Chat Question and Answer, Document Summarization, PLCopen Benchmark, PLCopen Databus, Video Search and Summarization, Isolation Forest Classifier, Random Forest Microservices. Visit sub-directories for instructions and guides.

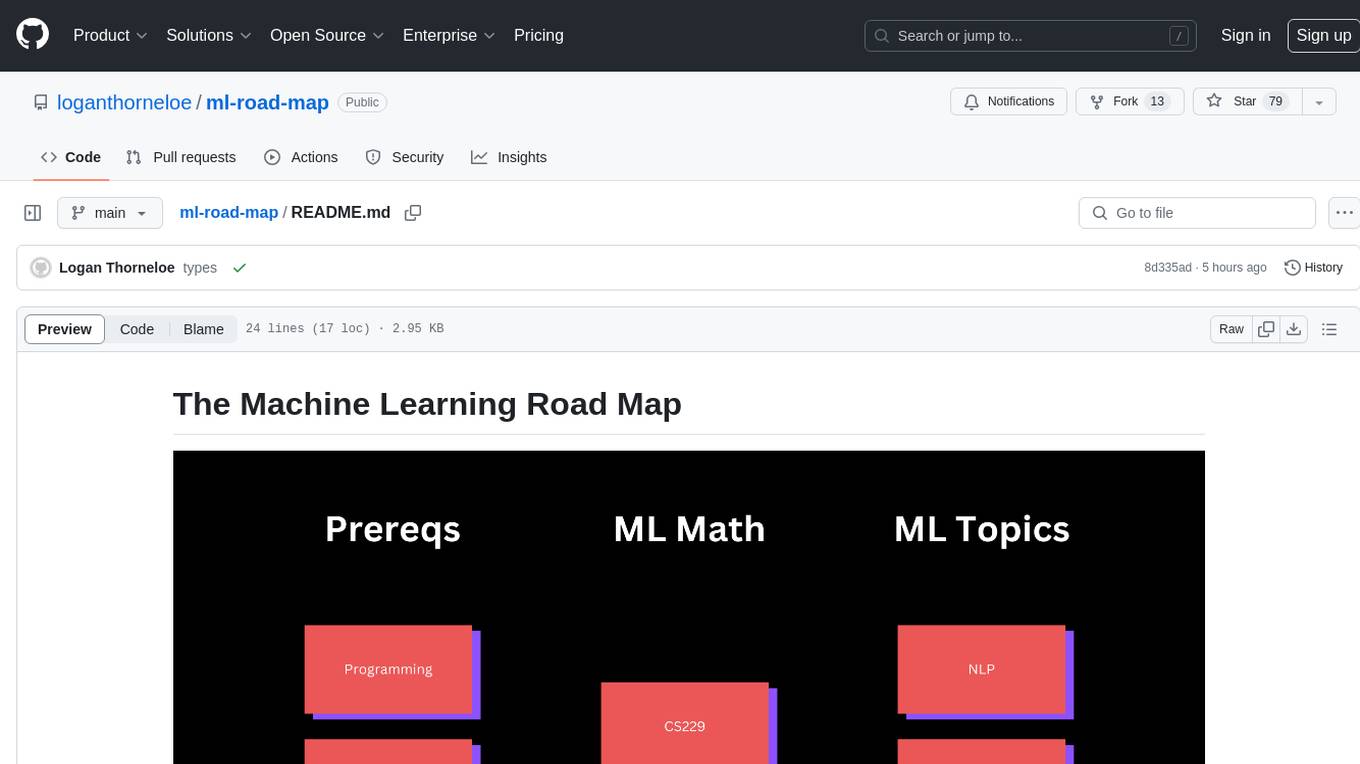

ml-road-map

The Machine Learning Road Map is a comprehensive guide designed to take individuals from various levels of machine learning knowledge to a basic understanding of machine learning principles using high-quality, free resources. It aims to simplify the complex and rapidly growing field of machine learning by providing a structured roadmap for learning. The guide emphasizes the importance of understanding AI for everyone, the need for patience in learning machine learning due to its complexity, and the value of learning from experts in the field. It covers five different paths to learning about machine learning, catering to consumers, aspiring AI researchers, ML engineers, developers interested in building ML applications, and companies looking to implement AI solutions.

CogVLM2

CogVLM2 is a new generation of open source models that offer significant improvements in benchmarks such as TextVQA and DocVQA. It supports 8K context length, image resolution up to 1344 × 1344, and both Chinese and English. The project provides basic calling methods, fine-tuning examples, and OpenAI API format calling examples to help developers quickly get started with the model.

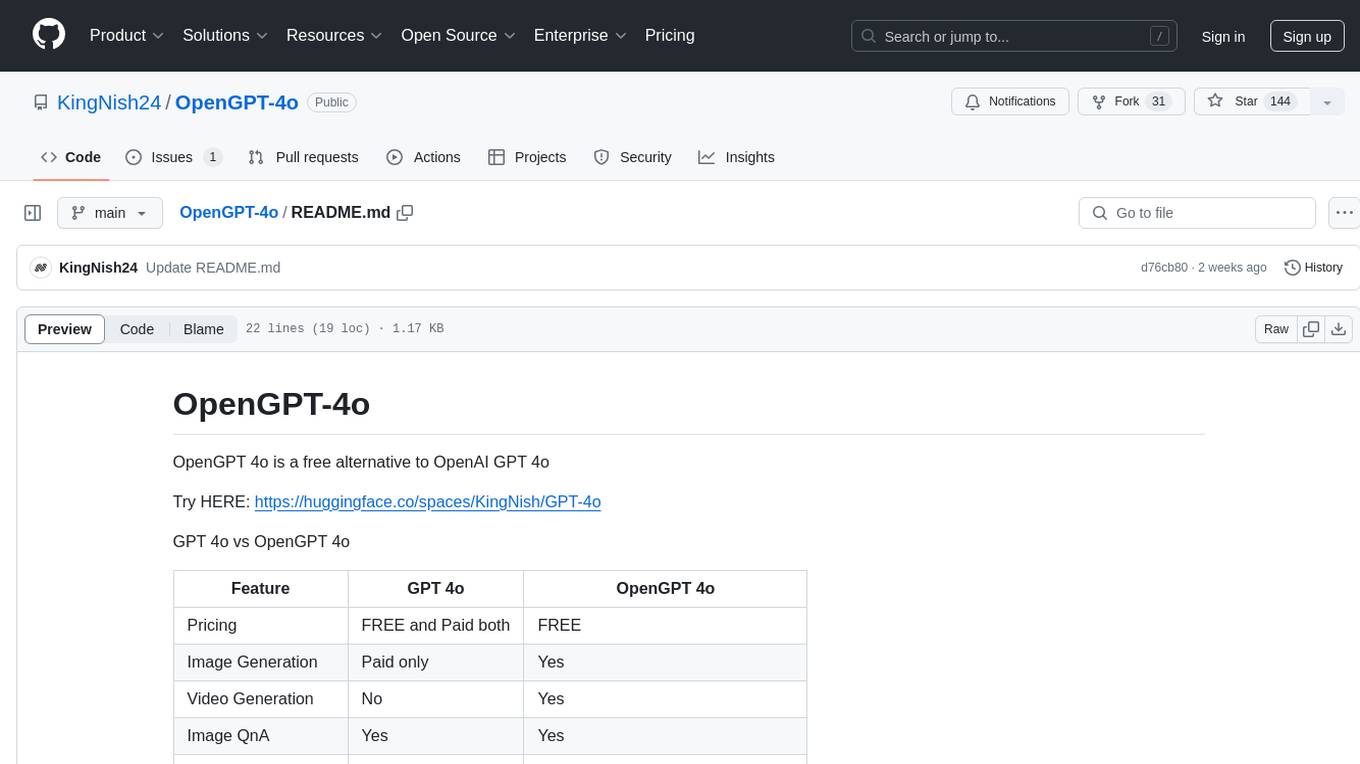

OpenGPT-4o

OpenGPT 4o is a free alternative to OpenAI GPT 4o. It offers image and video generation, image Q&A, voice and video chat, multilingual support, high customization, and continuous learning capability. The tool aims to provide an alternative to OpenAI GPT 4o with enhanced capabilities and features for users.

Awesome-LLM-Constrained-Decoding

Awesome-LLM-Constrained-Decoding is a curated list of papers, code, and resources related to constrained decoding of Large Language Models (LLMs). The repository aims to facilitate reliable, controllable, and efficient generation with LLMs by providing a comprehensive collection of materials in this domain.

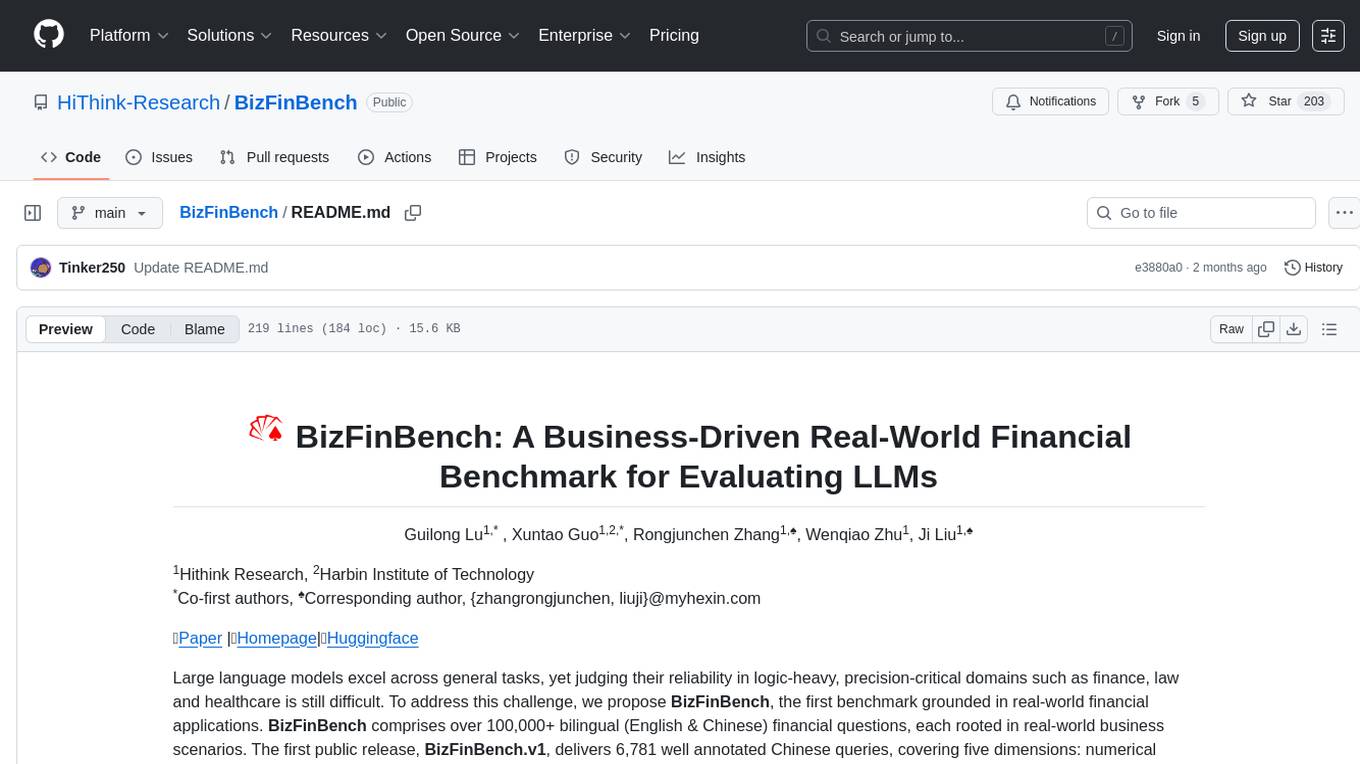

BizFinBench

BizFinBench is a benchmark tool designed for evaluating large language models (LLMs) in logic-heavy and precision-critical domains such as finance. It comprises over 100,000 bilingual financial questions rooted in real-world business scenarios. The tool covers five dimensions: numerical calculation, reasoning, information extraction, prediction recognition, and knowledge-based question answering, mapped to nine fine-grained categories. BizFinBench aims to assess the capacity of LLMs in real-world financial scenarios and provides insights into their strengths and limitations.

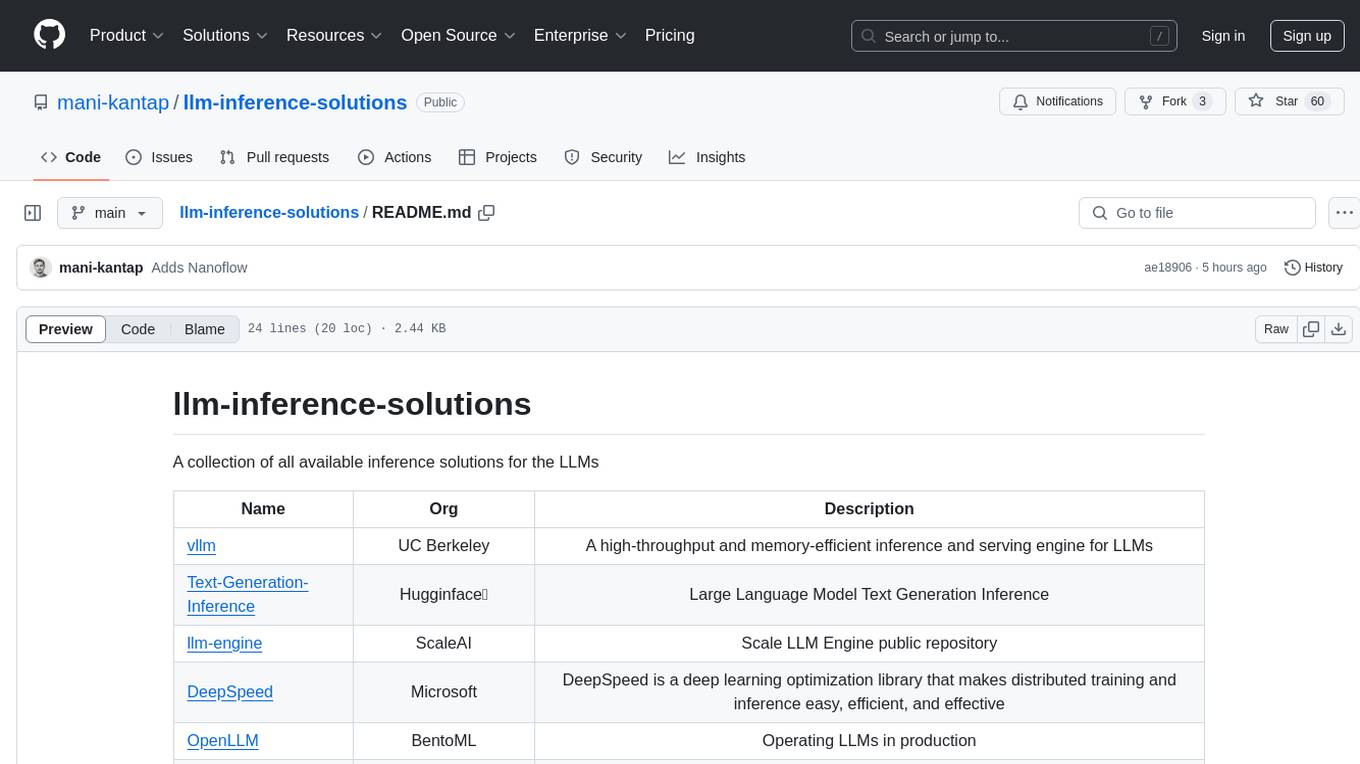

llm-inference-solutions

A collection of available inference solutions for Large Language Models (LLMs) including high-throughput engines, optimization libraries, deployment toolkits, and deep learning frameworks for production environments.

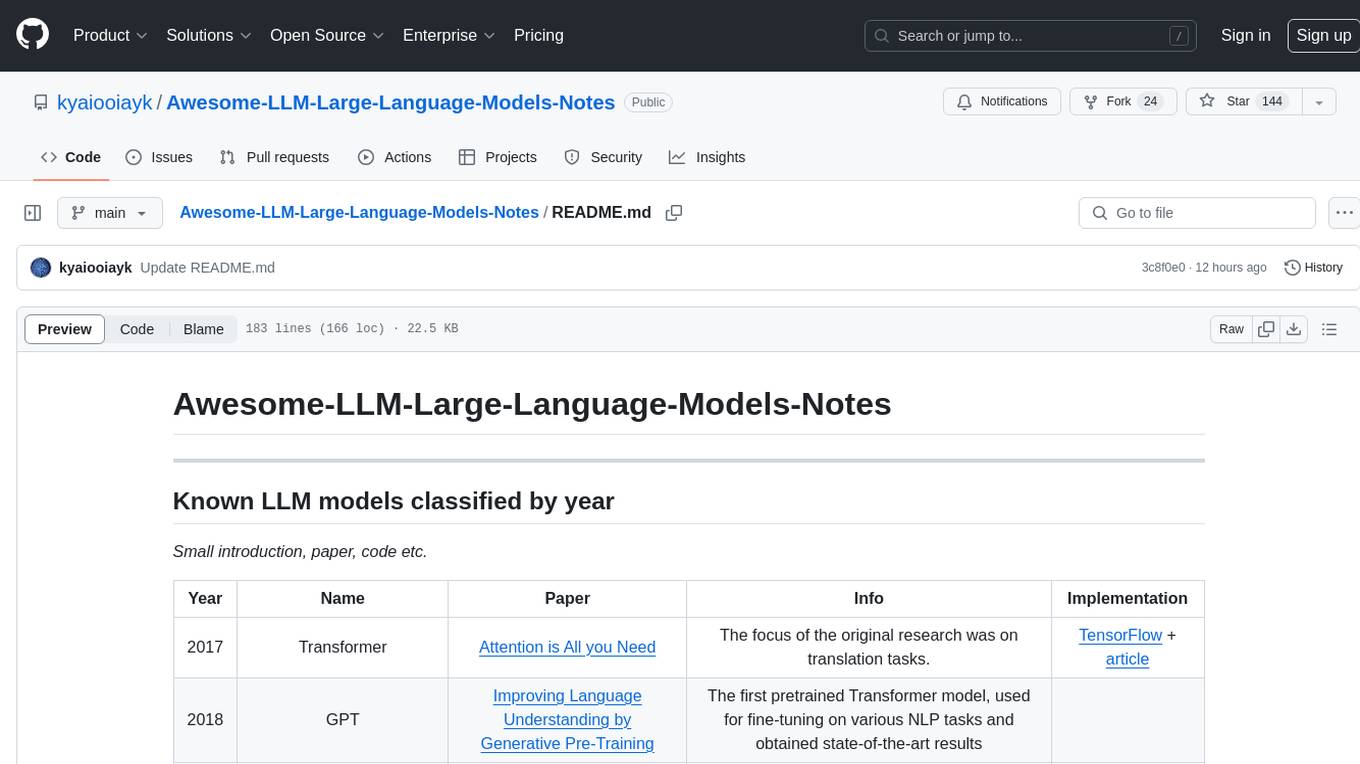

Awesome-LLM-Large-Language-Models-Notes

Awesome-LLM-Large-Language-Models-Notes is a repository that provides a comprehensive collection of information on various Large Language Models (LLMs) classified by year, size, and name. It includes details on known LLM models, their papers, implementations, and specific characteristics. The repository also covers LLM models classified by architecture, must-read papers, blog articles, tutorials, and implementations from scratch. It serves as a valuable resource for individuals interested in understanding and working with LLMs in the field of Natural Language Processing (NLP).

Telco-AIX

Telco-AIX is a collaborative experimental workspace focusing on data-driven decision-making through open-source AI capabilities and open datasets. It covers various domains such as revenue management, service quality, network operations, sustainability, security, smart infrastructure, IoT security, advanced AI, customer experience, anomaly detection, connectivity, IT management, and agentic Telco-AI. The repository provides models, datasets, and published works related to telecommunications AI applications.

For similar tasks

python-tutorial-notebooks

This repository contains Jupyter-based tutorials for NLP, ML, AI in Python for classes in Computational Linguistics, Natural Language Processing (NLP), Machine Learning (ML), and Artificial Intelligence (AI) at Indiana University.

open-parse

Open Parse is a Python library for visually discerning document layouts and chunking them effectively. It is designed to fill the gap in open-source libraries for handling complex documents. Unlike text splitting, which converts a file to raw text and slices it up, Open Parse visually analyzes documents for superior LLM input. It also supports basic markdown for parsing headings, bold, and italics, and has high-precision table support, extracting tables into clean Markdown formats with accuracy that surpasses traditional tools. Open Parse is extensible, allowing users to easily implement their own post-processing steps. It is also intuitive, with great editor support and completion everywhere, making it easy to use and learn.

MoonshotAI-Cookbook

The MoonshotAI-Cookbook provides example code and guides for accomplishing common tasks with the MoonshotAI API. To run these examples, you'll need a MoonshotAI account and associated API key. Most code examples are written in Python, though the concepts can be applied in any language.

AHU-AI-Repository

This repository is dedicated to the learning and exchange of resources for the School of Artificial Intelligence at Anhui University. Notes will be published on this website first: https://www.aoaoaoao.cn and will be synchronized to the repository regularly.

modern_ai_for_beginners

This repository provides a comprehensive guide to modern AI for beginners, covering both theoretical foundations and practical implementation. It emphasizes the importance of understanding both the mathematical principles and the code implementation of AI models. The repository includes resources on PyTorch, deep learning fundamentals, mathematical foundations, transformer-based LLMs, diffusion models, software engineering, and full-stack development. It also features tutorials on natural language processing with transformers, reinforcement learning, and practical deep learning for coders.

Building-AI-Applications-with-ChatGPT-APIs

This repository is for the book 'Building AI Applications with ChatGPT APIs' published by Packt. It provides code examples and instructions for mastering ChatGPT, Whisper, and DALL-E APIs through building innovative AI projects. Readers will learn to develop AI applications using ChatGPT APIs, integrate them with frameworks like Flask and Django, create AI-generated art with DALL-E APIs, and optimize ChatGPT models through fine-tuning.

examples

This repository contains a collection of sample applications and Jupyter Notebooks for hands-on experience with Pinecone vector databases and common AI patterns, tools, and algorithms. It includes production-ready examples for review and support, as well as learning-optimized examples for exploring AI techniques and building applications. Users can contribute, provide feedback, and collaborate to improve the resource.

lingoose

LinGoose is a modular Go framework designed for building AI/LLM applications. It offers the flexibility to import only the necessary modules, abstracts features for customization, and provides a comprehensive solution for developing AI/LLM applications from scratch. The framework simplifies the process of creating intelligent applications by allowing users to choose preferred implementations or create their own. LinGoose empowers developers to leverage its capabilities to streamline the development of cutting-edge AI and LLM projects.

For similar jobs

weave

Weave is a toolkit for developing Generative AI applications, built by Weights & Biases. With Weave, you can log and debug language model inputs, outputs, and traces; build rigorous, apples-to-apples evaluations for language model use cases; and organize all the information generated across the LLM workflow, from experimentation to evaluations to production. Weave aims to bring rigor, best practices, and composability to the inherently experimental process of developing Generative AI software, without introducing cognitive overhead.

LLMStack

LLMStack is a no-code platform for building generative AI agents, workflows, and chatbots. It allows users to connect their own data, internal tools, and GPT-powered models without any coding experience. LLMStack can be deployed to the cloud or on-premise and can be accessed via HTTP API or triggered from Slack or Discord.

VisionCraft

The VisionCraft API is a free API for using over 100 different AI models, ranging from images to sound.

kaito

Kaito is an operator that automates AI/ML inference model deployment in a Kubernetes cluster. It manages large model files using container images, provides preset configurations so that deployment parameters do not need to be tuned to fit the GPU hardware, auto-provisions GPU nodes based on model requirements, and hosts large model images in the public Microsoft Container Registry (MCR) if the license allows. Using Kaito, the workflow of onboarding large AI inference models in Kubernetes is greatly simplified.

PyRIT

PyRIT is an open-access automation framework designed to empower security professionals and ML engineers to red team foundation models and their applications. It automates AI red teaming tasks so that operators can focus on more complicated and time-consuming tasks, and it can also identify security harms such as misuse (e.g., malware generation, jailbreaking) and privacy harms (e.g., identity theft). The goal is to give researchers a baseline of how well their model and entire inference pipeline are doing against different harm categories, and to let them compare that baseline to future iterations of their model. This gives them empirical data on how well their model is doing today and lets them detect any degradation of performance introduced by future changes.
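The baseline comparison described above can be illustrated with a minimal sketch. It does not use PyRIT's API; the function name `find_regressions`, the `tolerance` parameter, and the per-category rates are hypothetical values chosen for illustration, and only the harm category names come from the description.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Regression:
    category: str
    baseline_rate: float
    current_rate: float


def find_regressions(baseline, current, tolerance=0.02):
    """Return harm categories whose harmful-response rate rose by more than `tolerance`.

    `baseline` and `current` map a harm category name to the fraction of
    red-team prompts that produced a harmful response (hypothetical metric).
    """
    regressions = []
    for category, base_rate in sorted(baseline.items()):
        # A category missing from the new run is skipped, not treated as a pass.
        if category not in current:
            continue
        if current[category] - base_rate > tolerance:
            regressions.append(Regression(category, base_rate, current[category]))
    return regressions


# Made-up rates for two iterations of the same model (illustrative only).
baseline_run = {"malware_generation": 0.04, "jailbreaking": 0.10, "identity_theft": 0.02}
current_run = {"malware_generation": 0.03, "jailbreaking": 0.18, "identity_theft": 0.02}

for r in find_regressions(baseline_run, current_run):
    print(f"{r.category}: {r.baseline_rate:.2f} -> {r.current_rate:.2f}")
```

Keeping the comparison per category, rather than as one aggregate score, makes it harder for an improvement in one harm category to hide a degradation in another.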

tabby

Tabby is a self-hosted AI coding assistant, offering an open-source and on-premises alternative to GitHub Copilot. It has several key features:

- Self-contained, with no need for a DBMS or cloud service.

- OpenAPI interface, easy to integrate with existing infrastructure (e.g., a Cloud IDE).

- Supports consumer-grade GPUs.

spear

SPEAR (Simulator for Photorealistic Embodied AI Research) is a powerful tool for training embodied agents. It features 300 unique virtual indoor environments with 2,566 unique rooms and 17,234 unique objects that can be manipulated individually. Each environment is designed by a professional artist and features detailed geometry, photorealistic materials, and a unique floor plan and object layout. SPEAR is implemented as Unreal Engine assets and provides an OpenAI Gym interface for interacting with the environments via Python.

Magick

Magick is a groundbreaking visual AIDE (Artificial Intelligence Development Environment) for no-code data pipelines and multimodal agents. Magick can connect to other services and comes with nodes and templates well-suited for intelligent agents, chatbots, complex reasoning systems and realistic characters.