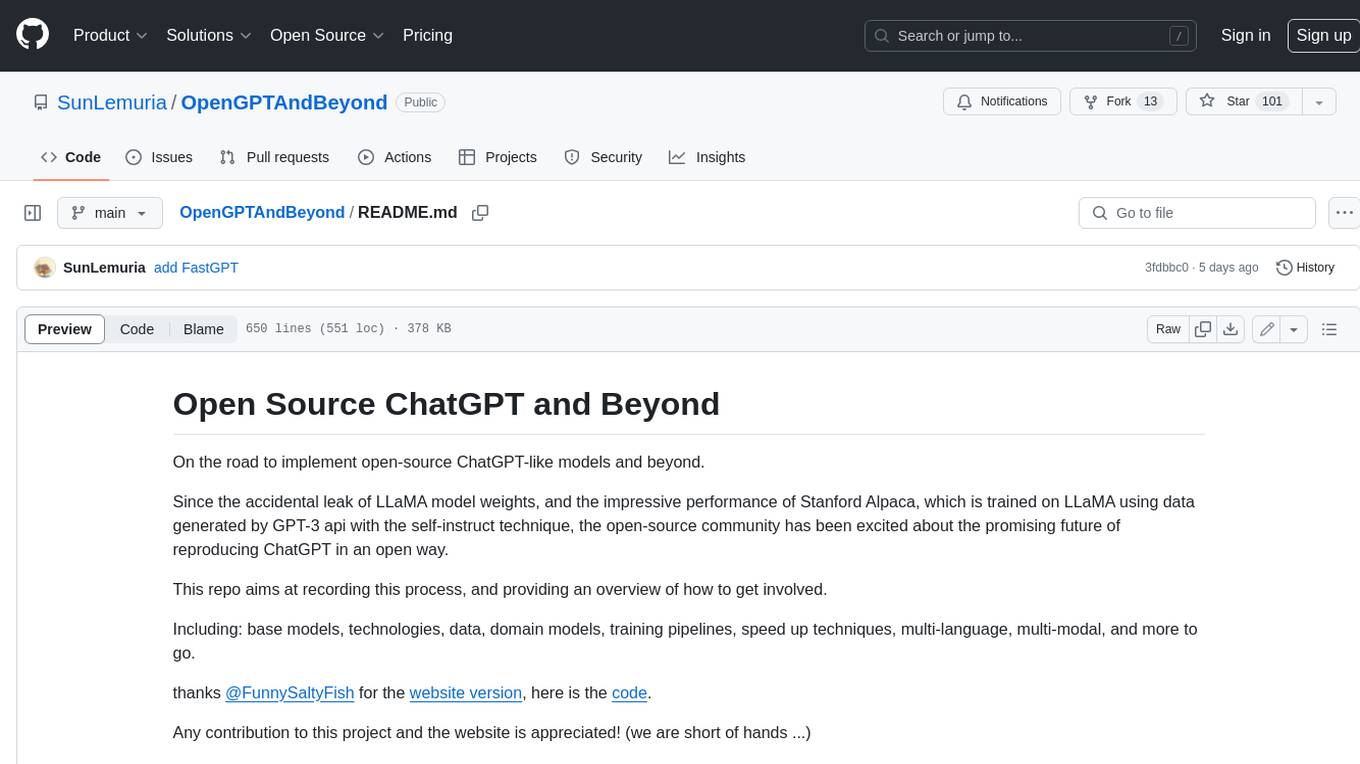

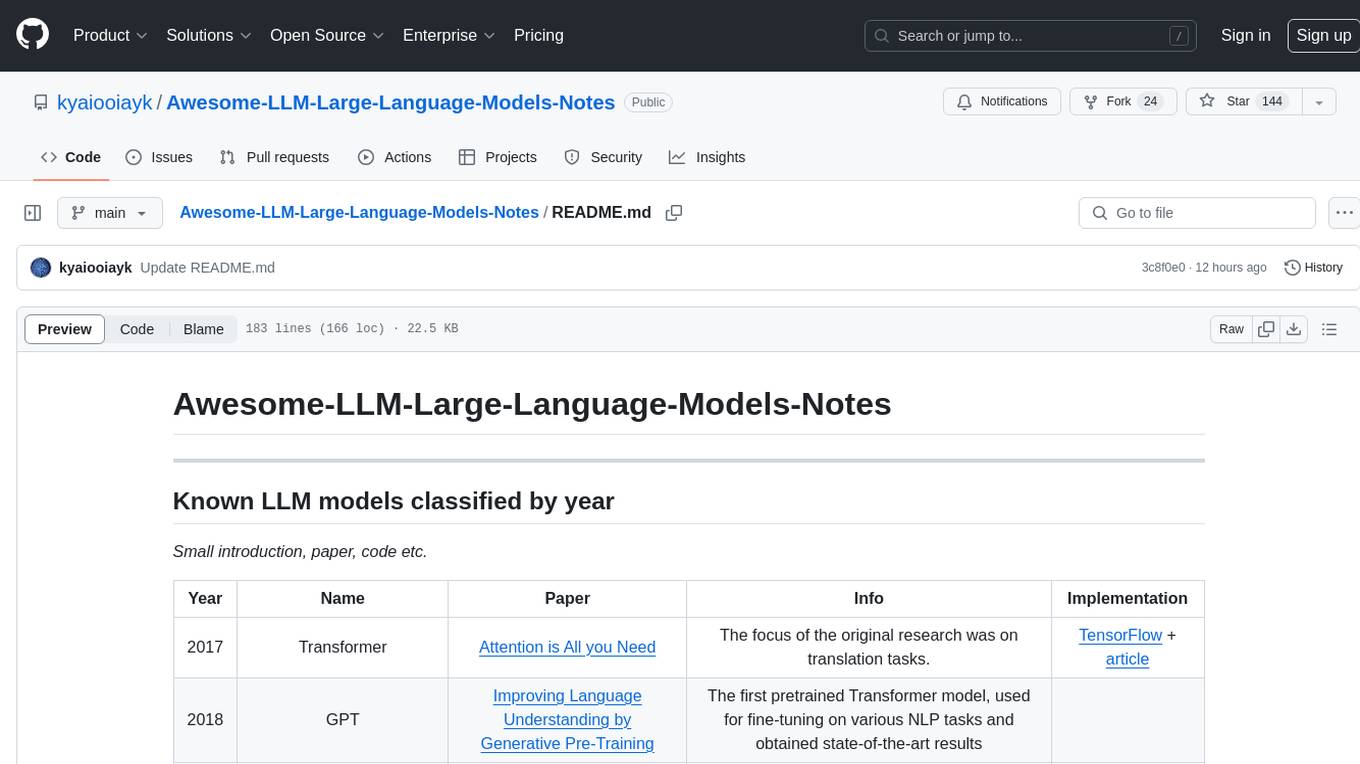

Awesome-LLM-Large-Language-Models-Notes

What can I do with an LLM?

Stars: 156

Awesome-LLM-Large-Language-Models-Notes is a repository that provides a comprehensive collection of information on various Large Language Models (LLMs) classified by year, size, and name. It includes details on known LLM models, their papers, implementations, and specific characteristics. The repository also covers LLM models classified by architecture, must-read papers, blog articles, tutorials, and implementations from scratch. It serves as a valuable resource for individuals interested in understanding and working with LLMs in the field of Natural Language Processing (NLP).

README:

Small introduction, paper, code etc.

| Year | Name | Paper | Info | Implementation |
|---|---|---|---|---|
| 2017 | Transformer | Attention is All you Need | The focus of the original research was on translation tasks. | TensorFlow + article |
| 2018 | GPT | Improving Language Understanding by Generative Pre-Training | The first pretrained Transformer model, used for fine-tuning on various NLP tasks, where it obtained state-of-the-art results. | |
| 2018 | BERT | BERT: Pre-training of Deep Bidirectional Transformers for Language Understanding | Another large pretrained model, this one designed to produce better summaries of sentences. | PyTorch |
| 2019 | GPT-2 | Language Models are Unsupervised Multitask Learners | An improved (and bigger) version of GPT that was not immediately publicly released due to ethical concerns. | |
| 2019 | DistilBERT - Distilled BERT | DistilBERT, a distilled version of BERT: smaller, faster, cheaper and lighter | A distilled version of BERT that is 60% faster, 40% lighter in memory, and still retains 97% of BERT's performance. | |
| 2019 | BART | BART: Denoising Sequence-to-Sequence Pre-training for Natural Language Generation, Translation, and Comprehension | Large pretrained model using the same architecture as the original Transformer model. | |
| 2019 | T5 | Exploring the Limits of Transfer Learning with a Unified Text-to-Text Transformer | Large pretrained model using the same architecture as the original Transformer model. | |
| 2019 | ALBERT | ALBERT: A Lite BERT for Self-supervised Learning of Language Representations | | |
| 2019 | RoBERTa - A Robustly Optimized BERT Pretraining Approach | RoBERTa: A Robustly Optimized BERT Pretraining Approach | | |
| 2019 | CTRL | CTRL: A Conditional Transformer Language Model for Controllable Generation | | |
| 2019 | Transformer XL | Transformer-XL: Attentive Language Models Beyond a Fixed-Length Context | Adopts a recurrence mechanism over past states, coupled with relative positional encoding, enabling longer-term dependencies. | |
| 2019 | DialoGPT | DialoGPT: Large-Scale Generative Pre-training for Conversational Response Generation | Trained on 147M conversation-like exchanges extracted from Reddit comment chains spanning 2005 through 2017. | PyTorch |
| 2019 | ERNIE | ERNIE: Enhanced Language Representation with Informative Entities | Uses both large-scale textual corpora and knowledge graphs (KGs) to train an enhanced language representation model that can take full advantage of lexical, syntactic, and knowledge information simultaneously. | |
| 2020 | GPT-3 | Language Models are Few-Shot Learners | An even bigger version of GPT-2 that performs well on a variety of tasks without fine-tuning, given only a task description and optionally a few examples in the prompt (zero-shot and few-shot learning). | |
| 2020 | ELECTRA | ELECTRA: Pre-training Text Encoders as Discriminators Rather Than Generators | | |
| 2020 | mBART | Multilingual Denoising Pre-training for Neural Machine Translation | | |
| 2021 | CLIP (Contrastive Language-Image Pre-Training) | Learning Transferable Visual Models From Natural Language Supervision | CLIP is a neural network trained on a variety of (image, text) pairs. It can be instructed in natural language to predict the most relevant text snippet, given an image, without directly optimizing for the task, similarly to the zero-shot capabilities of GPT-2 and GPT-3. | PyTorch |
| 2021 | DALL-E | Zero-Shot Text-to-Image Generation | | PyTorch |
| 2021 | Gopher | Scaling Language Models: Methods, Analysis & Insights from Training Gopher | | |
| 2021 | Decision Transformer | Decision Transformer: Reinforcement Learning via Sequence Modeling | An architecture that casts the problem of RL as conditional sequence modeling. | PyTorch |
| 2021 | GLaM (Generalist Language Model) | GLaM: Efficient Scaling of Language Models with Mixture-of-Experts | A family of language models that uses a sparsely activated mixture-of-experts architecture to scale model capacity while incurring substantially less training cost than dense variants. | |
| 2022 | ChatGPT/InstructGPT | Training language models to follow instructions with human feedback | Much better at following user intentions than GPT-3. The model is fine-tuned with Reinforcement Learning from Human Feedback (RLHF) to achieve conversational dialogue, using a variety of human-written data so that its responses sound human-like. | |
| 2022 | Chinchilla | Training Compute-Optimal Large Language Models | Uses the same compute budget as Gopher but with 70B parameters and 4x more data. | |
| 2022 | LaMDA | LaMDA: Language Models for Dialog Applications | A family of Transformer-based neural language models specialized for dialog. | |
| 2022 | DQ-BART | DQ-BART: Efficient Sequence-to-Sequence Model via Joint Distillation and Quantization | Proposes to jointly distill and quantize the model, transferring knowledge from the full-precision teacher model to the quantized and distilled low-precision student model. | |
| 2022 | Flamingo | Flamingo: a Visual Language Model for Few-Shot Learning | A family of Visual Language Models (VLM) that can be rapidly adapted to novel tasks using only a handful of annotated examples, an open challenge for multimodal machine learning research. | |
| 2022 | Gato | A Generalist Agent | Inspired by progress in large-scale language modeling, applies a similar approach to build a single generalist agent beyond the realm of text outputs. Gato works as a multi-modal, multi-task, multi-embodiment generalist policy. | |
| 2022 | GODEL | GODEL: Large-Scale Pre-Training for Goal-Directed Dialog | In contrast with earlier models such as DialoGPT, GODEL leverages a new phase of grounded pre-training designed to better support adapting it to a wide range of downstream dialog tasks that require information external to the current conversation (e.g., a database or document) to produce good responses. | PyTorch |
| 2023 | GPT-4 | GPT-4 Technical Report | The model accepts multimodal inputs: images and text. | |
| 2023 | BloombergGPT | BloombergGPT: A Large Language Model for Finance | LLM specialised in the financial domain, trained on Bloomberg's extensive data sources. | |
| 2023 | BLOOM | BLOOM: A 176B-Parameter Open-Access Multilingual Language Model | BLOOM (BigScience Large Open-science Open-access Multilingual Language Model) is a decoder-only Transformer language model trained on the ROOTS corpus, a dataset comprising hundreds of sources in 46 natural and 13 programming languages (59 in total). | |
| 2023 | Llama 2 | Llama 2: Open Foundation and Fine-Tuned Chat Models | | PyTorch #1, PyTorch #2 |
| 2023 | Claude | Claude | Claude can analyze about 75k words (100k tokens), whereas GPT-4 handles at most 32.7k tokens. | |
| 2023 | SelfCheckGPT | SelfCheckGPT: Zero-Resource Black-Box Hallucination Detection for Generative Large Language Models | A simple sampling-based approach that can be used to fact-check black-box models in a zero-resource fashion, i.e., without an external database. | |
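
The core operation of the Transformer in the first row is scaled dot-product attention, softmax(Q K^T / sqrt(d_k)) V. The following is a minimal pure-Python sketch, assuming matrices are plain lists of float rows and leaving out batching, masking, and multiple heads; it is an illustration, not code from the linked TensorFlow implementation.

```python
import math


def softmax(xs):
    # Subtract the max before exponentiating for numerical stability.
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


def scaled_dot_product_attention(Q, K, V):
    """Return softmax(Q K^T / sqrt(d_k)) V for lists of row vectors."""
    d_k = len(K[0])
    output = []
    for q in Q:
        # Similarity of this query with every key, scaled by sqrt(d_k).
        scores = [sum(qi * ki for qi, ki in zip(q, k)) / math.sqrt(d_k) for k in K]
        weights = softmax(scores)
        # Each output row is a weighted average of the value rows.
        output.append([sum(w * v[j] for w, v in zip(weights, V)) for j in range(len(V[0]))])
    return output


# When all keys score equally, attention returns the plain mean of the values.
print(scaled_dot_product_attention([[1.0, 2.0]], [[0.0, 0.0], [0.0, 0.0]], [[1.0, 2.0], [3.0, 4.0]]))
```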

| Name | Size (# Parameters) | Training Tokens | Training data |
|---|---|---|---|
| GLaM | 1.2T | | |
| Gopher | 280B | 300B | |
| BLOOM | 176B | | ROOTS corpus |
| GPT-3 | 175B | | |
| LaMDA | 137B | 168B | 1.56T words of public dialog data and web text |
| Chinchilla | 70B | 1.4T | |
| Llama 2 | 7B, 13B and 70B | | |
| BloombergGPT | 50B | 363B+345B | 363B tokens of financial data plus 345B tokens of general-purpose data |
| Falcon-40B | 40B | 1T | 1,000B tokens of RefinedWeb |

- M = million | B = billion | T = trillion
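
The size table invites a quick compute comparison between Gopher and Chinchilla. The back-of-the-envelope sketch below assumes the widely used rule of thumb C ≈ 6·N·D training FLOPs (N parameters, D training tokens); that approximation is an assumption and not a figure from this repository.

```python
# Parameter and token counts taken from the size table above.
MODELS = {
    "Gopher": (280e9, 300e9),
    "Chinchilla": (70e9, 1.4e12),
}


def training_flops(params, tokens):
    # Rule of thumb: about 6 FLOPs per parameter per training token
    # (forward plus backward pass). An approximation, not an exact count.
    return 6 * params * tokens


for name, (n, d) in MODELS.items():
    print(f"{name}: {d / n:.1f} tokens/param, ~{training_flops(n, d):.2e} training FLOPs")
```

Both models land in the same order of magnitude (roughly 5 to 6 x 10^23 FLOPs). Chinchilla spends that budget on about 20 tokens per parameter, while Gopher uses about 1, which is the trade-off the Chinchilla row describes.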

- ALBERT | Alpaca

- BART | BERT | Big Bird | BLOOM

- Chinchilla | CLIP | CTRL | ChatGPT | Claude

- DALL-E | DALL-E 2 | Decision Transformer | DialoGPT | DistilBERT | DQ-BART

- ELECTRA | ERNIE

- Flamingo | Falcon-40B

- Gato | Gopher | GLaM | GLIDE | GPT | GPT-2 | GPT-3 | GPT-4 | GPT-Neo | GODEL | GPT-J

- Imagen | InstructGPT

- Jurassic-1

- LaMDA | Llama 2

- mBART | Megatron | Minerva | MT-NLG

- OPT

- PaLM | Pegasus

- RoBERTa

- SeeKeR | Swin Transformer | Switch | SelfCheckGPT

- Transformer | T5 | Trajectory Transformers | Transformer XL | Turing-NLG

- ViT

- Wu Dao 2.0

- XLM-RoBERTa | XLNet

| Architecture | Models | Tasks |
|---|---|---|
| Encoder-only, also called auto-encoding Transformer models | ALBERT, BERT, DistilBERT, ELECTRA, RoBERTa | Sentence classification, named entity recognition, extractive question answering |
| Decoder-only, also called auto-regressive (or causal) Transformer models | CTRL, GPT, GPT-2, Transformer XL | Text generation given a prompt |
| Encoder-decoder, also called sequence-to-sequence Transformer models | BART, T5, Marian, mBART | Summarisation, translation, generative question answering |
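
The three families in this table differ mainly in which positions a token may attend to. Below is a simplified sketch of the corresponding attention masks (1 = may attend, 0 = blocked). It leaves out padding masks and any particular library's conventions, and the helper names are illustrative only.

```python
def bidirectional_mask(n):
    # Encoder-only (e.g. BERT): every token may attend to every other token.
    return [[1] * n for _ in range(n)]


def causal_mask(n):
    # Decoder-only (e.g. GPT): token i may attend only to positions 0..i.
    return [[1 if j <= i else 0 for j in range(n)] for i in range(n)]


def cross_attention_mask(n_target, n_source):
    # Encoder-decoder (e.g. T5, BART): each decoder position sees the whole
    # encoded source; the decoder's own self-attention still uses causal_mask.
    return [[1] * n_source for _ in range(n_target)]


for row in causal_mask(3):
    print(row)
```

A causal mask is what allows a decoder-only model to be trained on next-token prediction. A bidirectional mask suits classification and extraction, where the whole input is available up front.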

- HuggingFace is a popular NLP library that also offers an easy way to deploy models via its Inference API. When you build a model with the HuggingFace library, you can train it and then upload it to the Model Hub. Read more about this here.

- List of notebooks

- 2014 | Neural Machine Translation by Jointly Learning to Align and Translate

- 2022 | A SURVEY ON GPT-3

- 2022 | Efficiently Scaling Transformer Inference

- Must-Read Papers on Pre-trained Language Models (PLMs)

- Building a synth with ChatGPT

- PubMed GPT: a Domain-Specific Large Language Model for Biomedical Text

- ChatGPT - Where it lacks

- Awesome ChatGPT Prompts

- ChatGPT vs. GPT3: The Ultimate Comparison

- Prompt Engineering 101: Introduction and resources

- Transformer models: an introduction and catalog — 2022 Edition

- Can GPT-3 or BERT Ever Understand Language?—The Limits of Deep Learning Language Models

- 10 Things You Need to Know About BERT and the Transformer Architecture That Are Reshaping the AI Landscape

- Comprehensive Guide to Transformers

- Unmasking BERT: The Key to Transformer Model Performance

- Transformer NLP Models (Meena and LaMDA): Are They “Sentient” and What Does It Mean for Open-Domain Chatbots?

- Hugging Face Pre-trained Models: Find the Best One for Your Task

- Large Transformer Model Inference Optimization

- 4-part tutorial on how transformers work: Part 1 | Part 2 | Part 3 | Part 4

- What Makes a Dialog Agent Useful?

- Understanding Large Language Models -- A Transformative Reading List

- Prompt Engineering

- Building LLM applications for production

- Developer's Guide To LLMOps: Prompt Engineering, LLM Agents, and Observability

- Argument for using RL LLMs

- Why Google and OpenAI are losing against the open-source communities

- You probably don't know how to do Prompt Engineering!

- The Full Story of Large Language Models and RLHF

- Understanding OpenAI's Evals

- What We Know About LLMs (Primer)

- F**k You, Show Me The Prompt.

- How to avoid LLM Security risks (Fiddler AI)

- Developing Agentic Workflows With Safety And Accuracy (Fiddler AI)

- Building a search engine with a pre-trained BERT model

- Fine tuning pre-trained BERT model on Text Classification Task

- Fine tuning pre-trained BERT model on the Amazon product review dataset

- Sentiment analysis with Hugging Face transformer

- Fine tuning pre-trained BERT model on YELP review Classification Task

- HuggingFace API

- HuggingFace mask filling

- HuggingFace NER (named entity recognition)

- HuggingFace question answering within context

- HuggingFace text generation

- HuggingFace text summarisation

- HuggingFace zero-shot learning

- Two notebooks are available:

  - One with coloured boxes, outside the GitHub_MD_rendering folder
  - One in black-and-white, under the GitHub_MD_rendering folder

- The easiest option would be for you to clone this repository.

- Navigate to Google Colab and open the notebook directly from Colab.

- You can then also write it back to GitHub provided permission to Colab is granted. The whole procedure is automated.

- How to Code BERT Using PyTorch

- miniGPT in PyTorch

- nanoGPT in PyTorch

- TensorFlow implementation of Attention is all you need + article
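
miniGPT and nanoGPT both begin with a character-level language model and then add attention. A much smaller stepping stone is the sketch below: a count-based character bigram model with greedy decoding. It is not taken from either project and contains no neural network.

```python
from collections import Counter, defaultdict


def train_bigram(text):
    # Count how often each character follows each other character.
    counts = defaultdict(Counter)
    for prev, nxt in zip(text, text[1:]):
        counts[prev][nxt] += 1
    return counts


def generate_greedy(counts, start, length):
    # Repeatedly append the most frequent successor of the last character.
    out = start
    for _ in range(length):
        successors = counts.get(out[-1])
        if not successors:
            break  # no observed successor, so stop early
        out += successors.most_common(1)[0][0]
    return out


model = train_bigram("abab")
print(generate_greedy(model, "a", 3))
```

A neural model swaps the count table for learned parameters that condition on more than one previous character. That is the step the from-scratch GPT implementations above take.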


Alternative AI tools for Awesome-LLM-Large-Language-Models-Notes

Similar Open Source Tools


LLM-PowerHouse-A-Curated-Guide-for-Large-Language-Models-with-Custom-Training-and-Inferencing

LLM-PowerHouse is a comprehensive and curated guide designed to empower developers, researchers, and enthusiasts to harness the true capabilities of Large Language Models (LLMs) and build intelligent applications that push the boundaries of natural language understanding. This GitHub repository provides in-depth articles, codebase mastery, LLM PlayLab, and resources for cost analysis and network visualization. It covers various aspects of LLMs, including NLP, models, training, evaluation metrics, open LLMs, and more. The repository also includes a collection of code examples and tutorials to help users build and deploy LLM-based applications.

llm-datasets

LLM Datasets is a repository containing high-quality datasets, tools, and concepts for LLM fine-tuning. It provides datasets with characteristics like accuracy, diversity, and complexity to train large language models for various tasks. The repository includes datasets for general-purpose, math & logic, code, conversation & role-play, and agent & function calling domains. It also offers guidance on creating high-quality datasets through data deduplication, data quality assessment, data exploration, and data generation techniques.
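
Data deduplication is one of the techniques listed above. Many pipelines use near-duplicate detection such as MinHash, but the simplest form is exact deduplication after light normalisation. The sketch below shows only that simple case; it is not code from llm-datasets.

```python
import hashlib


def normalize(text):
    # Lowercase and collapse whitespace so trivial variants hash identically.
    return " ".join(text.lower().split())


def exact_dedup(samples):
    # Keep the first occurrence of each normalised sample, preserving order.
    seen = set()
    unique = []
    for sample in samples:
        digest = hashlib.sha256(normalize(sample).encode("utf-8")).hexdigest()
        if digest not in seen:
            seen.add(digest)
            unique.append(sample)
    return unique


print(exact_dedup(["Hello  world", "hello world", "Bye"]))
```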

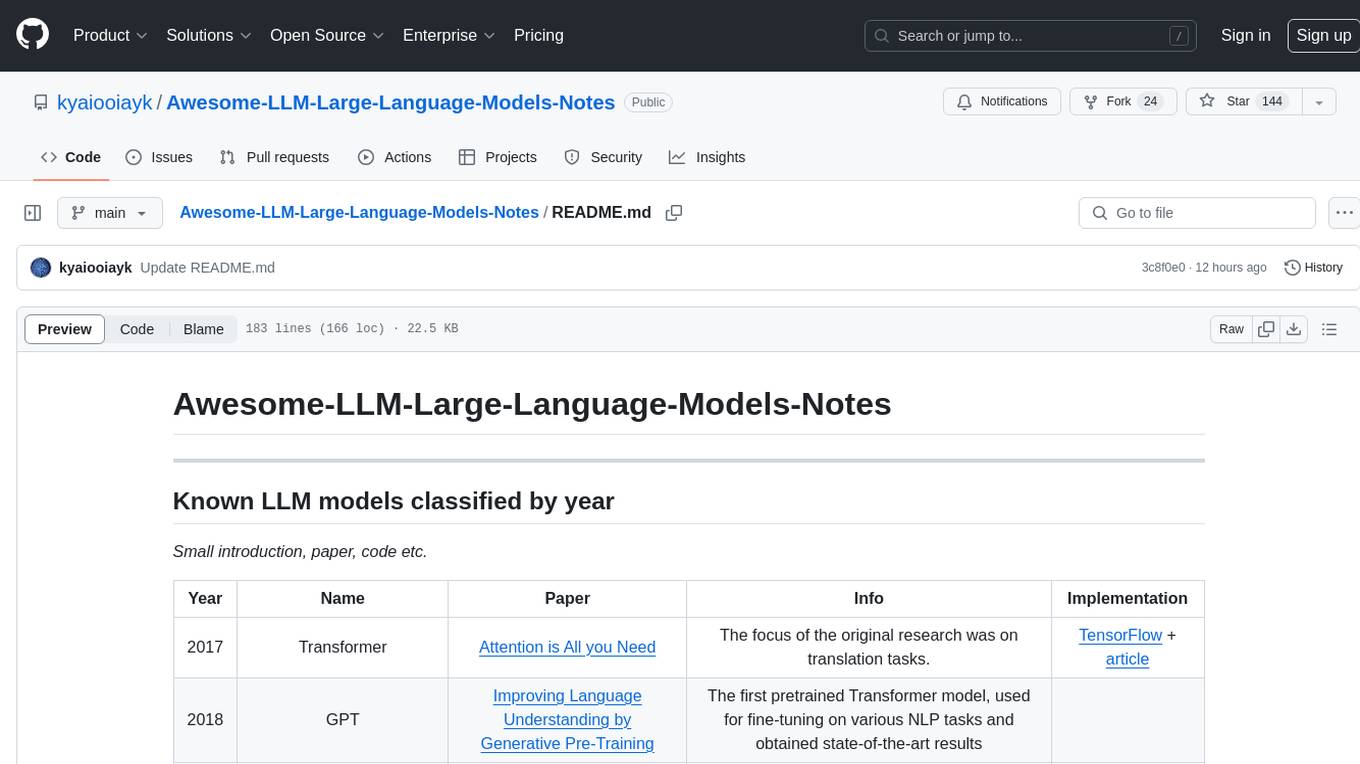

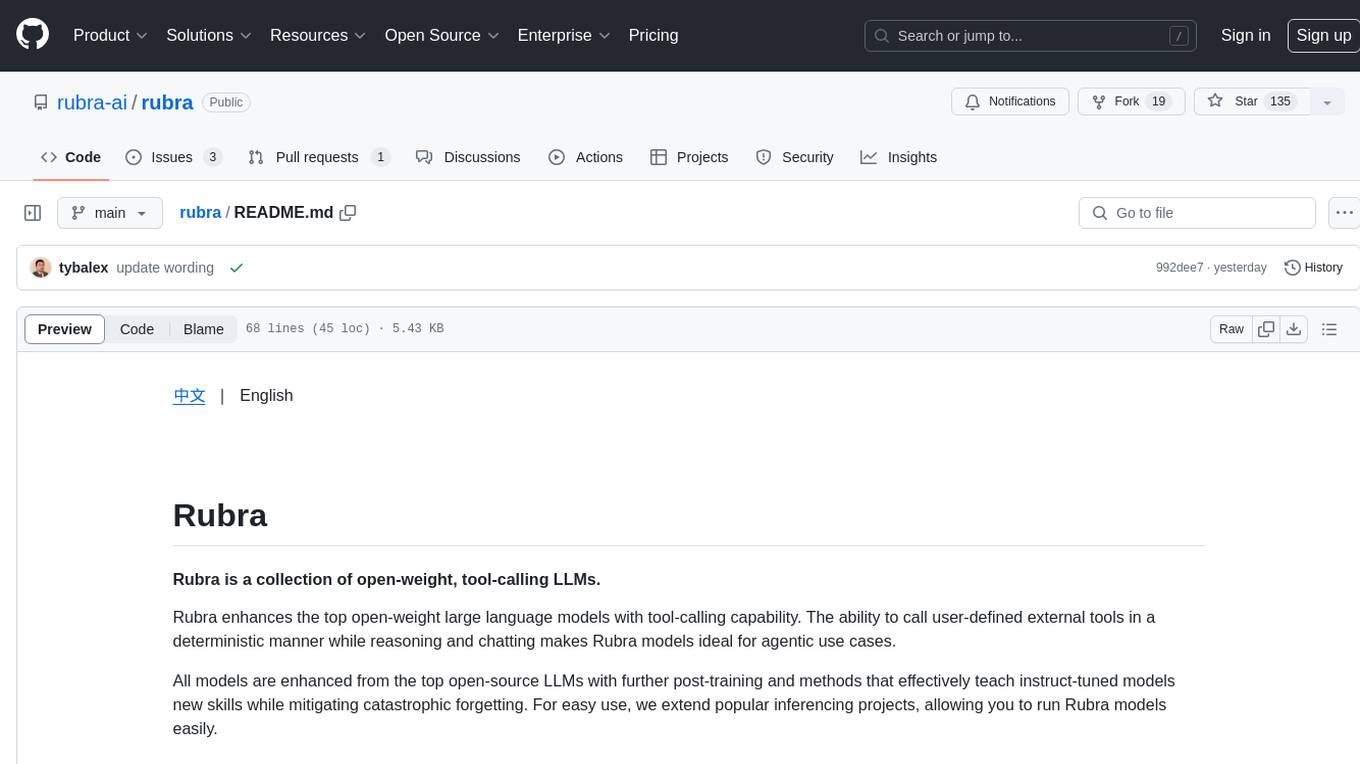

rubra

Rubra is a collection of open-weight large language models enhanced with tool-calling capability. It allows users to call user-defined external tools in a deterministic manner while reasoning and chatting, making it ideal for agentic use cases. The models are further post-trained to teach instruct-tuned models new skills and mitigate catastrophic forgetting. Rubra extends popular inferencing projects for easy use, enabling users to run the models easily.
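
On the application side, "calling user-defined tools" usually comes down to parsing the tool call the model emits and dispatching it to a local function. The sketch below assumes a hypothetical JSON shape `{"name": ..., "arguments": {...}}` and a hypothetical `get_weather` tool. Rubra's actual output format depends on the inferencing project you run it with.

```python
import json


def get_weather(city: str) -> str:
    # Hypothetical user-defined tool; a real one would call an external API.
    return f"Sunny in {city}"


# Registry of tools the model is allowed to call (hypothetical).
TOOLS = {"get_weather": get_weather}


def dispatch(tool_call_json: str) -> str:
    """Run a tool call of the assumed form {"name": ..., "arguments": {...}}."""
    call = json.loads(tool_call_json)
    func = TOOLS.get(call["name"])
    if func is None:
        raise ValueError(f"unknown tool: {call['name']}")
    return func(**call["arguments"])


print(dispatch('{"name": "get_weather", "arguments": {"city": "Paris"}}'))
```

The tool's return value is normally sent back to the model as a new message, so the model can continue reasoning with the result.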

SemanticFinder

SemanticFinder is a frontend-only live semantic search tool that calculates embeddings and cosine similarity client-side using transformers.js and SOTA embedding models from Huggingface. It allows users to search through large texts like books with pre-indexed examples, customize search parameters, and offers data privacy by keeping input text in the browser. The tool can be used for basic search tasks, analyzing texts for recurring themes, and has potential integrations with various applications like wikis, chat apps, and personal history search. It also provides options for building browser extensions and future ideas for further enhancements and integrations.
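
The search step described above ranks text chunks by cosine similarity between embeddings. SemanticFinder does this in JavaScript with transformers.js. The Python sketch below shows only the ranking, with hand-written toy vectors standing in for real embedding-model output (an assumption made for illustration).

```python
import math


def cosine_similarity(a, b):
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


def rank_chunks(query_vec, chunk_vecs, top_k=3):
    # Score every pre-computed chunk embedding against the query, best first.
    scored = [(cosine_similarity(query_vec, v), i) for i, v in enumerate(chunk_vecs)]
    scored.sort(reverse=True)
    return [i for _, i in scored[:top_k]]


# Toy 2-d "embeddings" for three chunks.
print(rank_chunks([1.0, 0.0], [[0.0, 1.0], [1.0, 0.0], [1.0, 1.0]], top_k=2))
```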

MathEval

MathEval is a benchmark designed for evaluating the mathematical capabilities of large models. It includes over 20 evaluation datasets covering various mathematical domains with more than 30,000 math problems. The goal is to assess the performance of large models across different difficulty levels and mathematical subfields. MathEval serves as a reliable reference for comparing mathematical abilities among large models and offers guidance on enhancing their mathematical capabilities in the future.

imodels

Python package for concise, transparent, and accurate predictive modeling. All sklearn-compatible and easy to use. _For interpretability in NLP, check out our new package: imodelsX_

together-cookbook

The Together Cookbook is a collection of code and guides designed to help developers build with open source models using Together AI. The recipes provide examples of how to:

- chain multiple LLM calls
- create agents that route tasks to specialized models
- run multiple LLMs in parallel
- break down tasks into parallel subtasks
- build agents that iteratively improve responses
- perform LoRA fine-tuning and inference
- fine-tune LLMs for repetition
- improve summarization capabilities
- fine-tune LLMs on multi-step conversations
- implement retrieval-augmented generation
- conduct multimodal search and conditional image generation
- visualize vector embeddings
- improve search results with rerankers
- implement vector search with embedding models
- extract structured text from images
- summarize and evaluate outputs with LLMs
- generate podcasts from PDF content
- get LLMs to generate knowledge graphs

llm-compression-intelligence

This repository presents the findings of the paper "Compression Represents Intelligence Linearly". The study reveals a strong linear correlation between the intelligence of LLMs, as measured by benchmark scores, and their ability to compress external text corpora. Compression efficiency, derived from raw text corpora, serves as a reliable evaluation metric that is linearly associated with model capabilities. The repository includes the compression corpora used in the paper, code for computing compression efficiency, and data collection and processing pipelines.
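
Compression efficiency is commonly expressed as bits per character (BPC). With arithmetic coding, a model that assigns probability p to the actual next token can encode that token in about -log2(p) bits. The minimal sketch below assumes you already have those per-token probabilities from some model. The paper's own code may normalise differently (for example per byte), so treat this as an illustration of the idea only.

```python
import math


def bits_per_character(token_probs, text):
    """Total code length in bits (sum of -log2 p over the actual tokens)
    divided by the number of characters in `text`. Lower is better."""
    total_bits = -sum(math.log2(p) for p in token_probs)
    return total_bits / len(text)


# A model that gives each of 4 single-character tokens probability 0.5.
print(bits_per_character([0.5, 0.5, 0.5, 0.5], "abcd"))
```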

aideml

AIDE is a machine learning code generation agent that can generate solutions for machine learning tasks from natural language descriptions. It has the following features:

1. **Instruct with Natural Language**: Describe your problem or additional requirements and expert insights, all in natural language.
2. **Deliver Solution in Source Code**: AIDE will generate Python scripts for the **tested** machine learning pipeline. Enjoy full transparency, reproducibility, and the freedom to further improve the source code!
3. **Iterative Optimization**: AIDE iteratively runs, debugs, evaluates, and improves the ML code, all by itself.
4. **Visualization**: We also provide tools to visualize the solution tree produced by AIDE for a better understanding of its experimentation process. This gives you insights not only about what works but also what doesn't.

AIDE has been benchmarked on over 60 Kaggle data science competitions and has demonstrated impressive performance, surpassing 50% of Kaggle participants on average. It is particularly well-suited for tasks that require complex data preprocessing, feature engineering, and model selection.

LLMs-Planning

This repository contains code for three papers related to evaluating large language models on planning and reasoning about change. It includes benchmarking tools and analysis for assessing the planning abilities of large language models. The latest addition evaluates and enhances the planning and scheduling capabilities of a specific language reasoning model. The repository provides a static test set leaderboard showcasing model performance on various tasks with natural language and planning domain prompts.

ai-hands-on

A complete, hands-on guide to becoming an AI Engineer. This repository is designed to help you learn AI from first principles, build real neural networks, and understand modern LLM systems end-to-end. Progress through math, PyTorch, deep learning, transformers, RAG, and OCR with clean, intuitive Jupyter notebooks guiding you at every step. Suitable for beginners and engineers leveling up, providing clarity, structure, and intuition to build real AI systems.

FFAIVideo

FFAIVideo is a lightweight Node.js project that uses popular LLMs to intelligently generate short videos. It supports multiple LLM providers such as OpenAI, Moonshot, Azure, g4f, and Google Gemini. Users can input text to automatically synthesize video content with subtitles, background music, and customizable settings. The project integrates Microsoft Edge's online text-to-speech service for voice options and uses the Pexels website for video resources. FFmpeg must be installed for the project to run smoothly. Inspired by MoneyPrinterTurbo, MoneyPrinter, and MsEdgeTTS, FFAIVideo is designed for front-end developers, with minimal dependencies and simple usage.

ai4chem_course

The AI4Chemistry course is a hands-on course focusing on Artificial Intelligence (AI) for Chemistry. It covers topics such as Python programming, machine learning, cheminformatics toolkits, data science, deep learning for chemistry, and advanced AI topics. The course includes exercises using Google Colab and covers supervised and unsupervised machine learning, property prediction models, Bayesian optimization, and more. The course is created by the LIAC team and references open-source community examples. It aims to be accessible to learners with varying levels of experience in Python and ML.

mcp-for-beginners

The Model Context Protocol (MCP) Curriculum for Beginners is an open-source framework designed to standardize interactions between AI models and client applications. It offers a structured learning path with practical coding examples and real-world use cases in popular programming languages like C#, Java, JavaScript, Rust, Python, and TypeScript. Whether you're an AI developer, system architect, or software engineer, this guide provides comprehensive resources for mastering MCP fundamentals and implementation strategies.
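
MCP messages are JSON-RPC 2.0 requests, and `tools/call` is the method a client uses to ask a server to run one of its tools. The sketch below only builds such a request. The tool name and arguments are hypothetical, transport and the initialization handshake are left out, and you should check the exact fields against the current MCP specification.

```python
import json


def make_tool_call_request(request_id, tool_name, arguments):
    # A JSON-RPC 2.0 request asking an MCP server to invoke one of its tools.
    return json.dumps({
        "jsonrpc": "2.0",
        "id": request_id,
        "method": "tools/call",
        "params": {"name": tool_name, "arguments": arguments},
    })


# "get_weather" is a hypothetical tool exposed by some MCP server.
print(make_tool_call_request(1, "get_weather", {"city": "Paris"}))
```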

For similar tasks

LLMStack

LLMStack is a no-code platform for building generative AI agents, workflows, and chatbots. It allows users to connect their own data, internal tools, and GPT-powered models without any coding experience. LLMStack can be deployed to the cloud or on-premise and can be accessed via HTTP API or triggered from Slack or Discord.

ai-guide

This guide is dedicated to Large Language Models (LLMs) that you can run on your home computer. It assumes your PC is a lower-end, non-gaming setup.

onnxruntime-genai

ONNX Runtime Generative AI is a library that provides the generative AI loop for ONNX models, including inference with ONNX Runtime, logits processing, search and sampling, and KV cache management. Users can call a high level `generate()` method, or run each iteration of the model in a loop. It supports greedy/beam search and TopP, TopK sampling to generate token sequences, has built in logits processing like repetition penalties, and allows for easy custom scoring.
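
To illustrate the sampling strategies mentioned above, the sketch below applies top-k and top-p (nucleus) filtering to a single logits vector and then samples one token. This is a generic pure-Python illustration and not the onnxruntime-genai API. With `top_k=1` it reduces to greedy decoding.

```python
import math
import random


def softmax(logits):
    m = max(logits)
    exps = [math.exp(x - m) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]


def top_k_top_p_filter(probs, top_k, top_p):
    """Keep the top_k most likely tokens, then the smallest prefix of those
    whose cumulative probability reaches top_p; renormalise what remains."""
    order = sorted(range(len(probs)), key=lambda i: probs[i], reverse=True)[:top_k]
    kept, cumulative = [], 0.0
    for i in order:
        kept.append(i)
        cumulative += probs[i]
        if cumulative >= top_p:
            break
    mass = sum(probs[i] for i in kept)
    return {i: probs[i] / mass for i in kept}


def sample(logits, top_k=50, top_p=0.9, rng=random):
    # Filter the distribution, then draw one token id from what is left.
    filtered = top_k_top_p_filter(softmax(logits), top_k, top_p)
    tokens, weights = zip(*filtered.items())
    return rng.choices(tokens, weights=weights, k=1)[0]


print(top_k_top_p_filter([0.5, 0.3, 0.2], top_k=3, top_p=0.7))
```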

jupyter-ai

Jupyter AI connects generative AI with Jupyter notebooks. It provides a user-friendly and powerful way to explore generative AI models in notebooks and improve your productivity in JupyterLab and the Jupyter Notebook. Specifically, Jupyter AI offers:

* An `%%ai` magic that turns the Jupyter notebook into a reproducible generative AI playground. This works anywhere the IPython kernel runs (JupyterLab, Jupyter Notebook, Google Colab, Kaggle, VSCode, etc.).
* A native chat UI in JupyterLab that enables you to work with generative AI as a conversational assistant.
* Support for a wide range of generative model providers, including AI21, Anthropic, AWS, Cohere, Gemini, Hugging Face, NVIDIA, and OpenAI.
* Local model support through GPT4All, enabling use of generative AI models on consumer grade machines with ease and privacy.

khoj

Khoj is an open-source, personal AI assistant that extends your capabilities by creating always-available AI agents. You can share your notes and documents to extend your digital brain, and your AI agents have access to the internet, allowing you to incorporate real-time information. Khoj is accessible on Desktop, Emacs, Obsidian, Web, and WhatsApp, and you can share PDF, Markdown, org-mode, and Notion files as well as GitHub repositories. You'll get fast, accurate semantic search on top of your docs, and your agents can create deeply personal images and understand your speech. Khoj is self-hostable and always will be.

langchain_dart

LangChain.dart is a Dart port of the popular LangChain Python framework created by Harrison Chase. LangChain provides a set of ready-to-use components for working with language models and a standard interface for chaining them together to formulate more advanced use cases (e.g. chatbots, Q&A with RAG, agents, summarization, extraction, etc.). The components can be grouped into a few core modules:

* **Model I/O:** LangChain offers a unified API for interacting with various LLM providers (e.g. OpenAI, Google, Mistral, Ollama, etc.), allowing developers to switch between them with ease. Additionally, it provides tools for managing model inputs (prompt templates and example selectors) and parsing the resulting model outputs (output parsers).
* **Retrieval:** assists in loading user data (via document loaders), transforming it (with text splitters), extracting its meaning (using embedding models), storing (in vector stores) and retrieving it (through retrievers) so that it can be used to ground the model's responses (i.e. Retrieval-Augmented Generation or RAG).
* **Agents:** "bots" that leverage LLMs to make informed decisions about which available tools (such as web search, calculators, database lookup, etc.) to use to accomplish the designated task.

The different components can be composed together using the LangChain Expression Language (LCEL).
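
The retrieval module above relies on text splitters to cut documents into chunks before embedding them. LangChain's own splitters are more elaborate (for example, they split recursively on separators). The sketch below is a simplified, hypothetical Python helper that produces fixed-size character chunks with overlap, and it is not the LangChain.dart API.

```python
def split_text(text, chunk_size, chunk_overlap):
    """Split text into fixed-size character windows that overlap by chunk_overlap."""
    if not 0 <= chunk_overlap < chunk_size:
        raise ValueError("need 0 <= chunk_overlap < chunk_size")
    step = chunk_size - chunk_overlap
    chunks = []
    for start in range(0, len(text), step):
        chunks.append(text[start:start + chunk_size])
        if start + chunk_size >= len(text):
            break  # the last window already reaches the end of the text
    return chunks


print(split_text("abcdefghij", chunk_size=4, chunk_overlap=1))
```

The overlap keeps a sentence that straddles a chunk boundary partly visible in both chunks. This tends to help retrieval at the cost of some duplicated storage.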

danswer

Danswer is an open-source Gen-AI Chat and Unified Search tool that connects to your company's docs, apps, and people. It provides a Chat interface and plugs into any LLM of your choice. Danswer can be deployed anywhere and for any scale - on a laptop, on-premise, or to cloud. Since you own the deployment, your user data and chats are fully in your own control. Danswer is MIT licensed and designed to be modular and easily extensible. The system also comes fully ready for production usage with user authentication, role management (admin/basic users), chat persistence, and a UI for configuring Personas (AI Assistants) and their Prompts. Danswer also serves as a Unified Search across all common workplace tools such as Slack, Google Drive, Confluence, etc. By combining LLMs and team specific knowledge, Danswer becomes a subject matter expert for the team. Imagine ChatGPT if it had access to your team's unique knowledge! It enables questions such as "A customer wants feature X, is this already supported?" or "Where's the pull request for feature Y?"

infinity

Infinity is an AI-native database designed for LLM applications, providing incredibly fast full-text and vector search capabilities. It supports a wide range of data types, including vectors, full-text, and structured data, and offers a fused search feature that combines multiple embeddings and full text. Infinity is easy to use, with an intuitive Python API and a single-binary architecture that simplifies deployment. It achieves high performance, with 0.1 milliseconds query latency on million-scale vector datasets and up to 15K QPS.
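
A fused search has to merge rankings from different retrievers, such as vector search and full-text search. Reciprocal rank fusion (RRF) is one common way to do this. Whether Infinity uses RRF, and with which parameters, is not stated here, so the sketch below is an illustrative assumption. It uses the frequently cited constant k = 60.

```python
from collections import defaultdict


def reciprocal_rank_fusion(rankings, k=60):
    """Fuse ranked lists of document ids: score(d) = sum over lists of 1 / (k + rank)."""
    scores = defaultdict(float)
    for ranking in rankings:
        for rank, doc_id in enumerate(ranking, start=1):
            scores[doc_id] += 1.0 / (k + rank)
    return sorted(scores, key=scores.get, reverse=True)


vector_hits = ["a", "b", "c"]    # hypothetical vector-search ranking
fulltext_hits = ["b", "c", "a"]  # hypothetical full-text ranking
print(reciprocal_rank_fusion([vector_hits, fulltext_hits]))
```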

For similar jobs

promptflow

**Prompt flow** is a suite of development tools designed to streamline the end-to-end development cycle of LLM-based AI applications, from ideation, prototyping, testing, evaluation to production deployment and monitoring. It makes prompt engineering much easier and enables you to build LLM apps with production quality.

deepeval

DeepEval is a simple-to-use, open-source LLM evaluation framework specialized for unit testing LLM outputs. It incorporates various metrics such as G-Eval, hallucination, answer relevancy, RAGAS, etc., and runs locally on your machine for evaluation. It provides a wide range of ready-to-use evaluation metrics, allows for creating custom metrics, integrates with any CI/CD environment, and enables benchmarking LLMs on popular benchmarks. DeepEval is designed for evaluating RAG and fine-tuning applications, helping users optimize hyperparameters, prevent prompt drifting, and transition from OpenAI to hosting their own Llama2 with confidence.

MegaDetector

MegaDetector is an AI model that identifies animals, people, and vehicles in camera trap images (which also makes it useful for eliminating blank images). This model is trained on several million images from a variety of ecosystems. MegaDetector is just one of many tools that aims to make conservation biologists more efficient with AI. If you want to learn about other ways to use AI to accelerate camera trap workflows, check out our overview of the field, affectionately titled "Everything I know about machine learning and camera traps".

leapfrogai

LeapfrogAI is a self-hosted AI platform designed to be deployed in air-gapped, resource-constrained environments. It brings sophisticated AI solutions to these environments by hosting all the necessary components of an AI stack, including vector databases, model backends, API, and UI. LeapfrogAI's API closely matches that of OpenAI, allowing tools built for OpenAI/ChatGPT to function seamlessly with a LeapfrogAI backend. It provides several backends for various use cases, including llama-cpp-python, whisper, text-embeddings, and vllm. LeapfrogAI leverages Chainguard's apko to harden base Python images, ensuring the latest supported Python versions are used by the other components of the stack. The LeapfrogAI SDK provides a standard set of protobufs and Python utilities for implementing backends and gRPC. LeapfrogAI offers UI options for common use cases like chat, summarization, and transcription. It can be deployed and run locally via UDS and Kubernetes, built out using Zarf packages. LeapfrogAI is supported by a community of users and contributors, including Defense Unicorns, Beast Code, Chainguard, Exovera, Hypergiant, Pulze, SOSi, United States Navy, United States Air Force, and United States Space Force.

llava-docker

This Docker image for LLaVA (Large Language and Vision Assistant) provides a convenient way to run LLaVA locally or on RunPod. LLaVA is a powerful AI tool that combines natural language processing and computer vision capabilities. With this Docker image, you can easily access LLaVA's functionalities for various tasks, including image captioning, visual question answering, text summarization, and more. The image comes pre-installed with LLaVA v1.2.0, Torch 2.1.2, xformers 0.0.23.post1, and other necessary dependencies. You can customize the model used by setting the MODEL environment variable. The image also includes a Jupyter Lab environment for interactive development and exploration. Overall, this Docker image offers a comprehensive and user-friendly platform for leveraging LLaVA's capabilities.

carrot

The 'carrot' repository on GitHub provides a list of free and user-friendly ChatGPT mirror sites for easy access. The repository includes sponsored sites offering various GPT models and services. Users can find and share sites, report errors, and access stable and recommended sites for ChatGPT usage. The repository also includes a detailed list of ChatGPT sites, their features, and accessibility options, making it a valuable resource for ChatGPT users seeking free and unlimited GPT services.

TrustLLM

TrustLLM is a comprehensive study of trustworthiness in LLMs, including principles for different dimensions of trustworthiness, established benchmark, evaluation, and analysis of trustworthiness for mainstream LLMs, and discussion of open challenges and future directions. Specifically, we first propose a set of principles for trustworthy LLMs that span eight different dimensions. Based on these principles, we further establish a benchmark across six dimensions including truthfulness, safety, fairness, robustness, privacy, and machine ethics. We then present a study evaluating 16 mainstream LLMs in TrustLLM, consisting of over 30 datasets. The document explains how to use the trustllm python package to help you assess the performance of your LLM in trustworthiness more quickly. For more details about TrustLLM, please refer to project website.

AI-YinMei

AI-YinMei is a development tool for AI virtual anchors (VTubers), in a version for NVIDIA GPUs. It supports FastGPT knowledge-base chat and a complete LLM solution built from [fastgpt] + [one-api] + [Xinference]. Features include:

- replying to bilibili live-stream bullet comments and greeting viewers who join the stream
- speech synthesis with Microsoft edge-tts, Bert-VITS2, and GPT-SoVITS
- expression control via VTube Studio
- painting with stable-diffusion-webui and outputting the result to the OBS live-stream room, with NSFW image detection via public-NSFW-y-distinguish
- search and image search via DuckDuckGo (requires a proxy to bypass network restrictions) and image search via Baidu (no proxy needed)
- an AI reply chat box [html plug-in] and a playlist [html plug-in]
- AI singing via Auto-Convert-Music, with automatic dancing while singing
- dancing, expression video playback, head-touching actions, and actions in response to viewer gifts
- automatic swaying animations during chat and singing
- multi-scene switching, background music switching, and automatic day/night scene switching
- open-ended singing and painting requests, with the AI automatically judging the content