LLM-PowerHouse-A-Curated-Guide-for-Large-Language-Models-with-Custom-Training-and-Inferencing

LLM-PowerHouse: Unleash LLMs' potential through curated tutorials, best practices, and ready-to-use code for custom training and inferencing.

Stars: 648

LLM-PowerHouse is a comprehensive and curated guide designed to empower developers, researchers, and enthusiasts to harness the true capabilities of Large Language Models (LLMs) and build intelligent applications that push the boundaries of natural language understanding. This GitHub repository provides in-depth articles, codebase mastery, LLM PlayLab, and resources for cost analysis and network visualization. It covers various aspects of LLMs, including NLP, models, training, evaluation metrics, open LLMs, and more. The repository also includes a collection of code examples and tutorials to help users build and deploy LLM-based applications.

README:

Unleash LLMs' potential through curated tutorials, best practices, and ready-to-use code for custom training and inferencing.

Welcome to LLM-PowerHouse, your ultimate resource for unleashing the full potential of Large Language Models (LLMs) with custom training and inferencing. This GitHub repository is a comprehensive and curated guide designed to empower developers, researchers, and enthusiasts to harness the true capabilities of LLMs and build intelligent applications that push the boundaries of natural language understanding.

- Foundations of LLMs

- Unlock the Art of LLM Science

- Building Production-Ready LLM Applications

- In-Depth Articles

- Codebase Mastery: Building with Perfection

- LLM PlayLab

- LLM Datasets

- What I am learning

- Contributing

- License

- About The Author

This section offers fundamental insights into mathematics, Python, and neural networks. It may not be the ideal starting point, but you can consult it whenever necessary.

⬇️ Ready to Embrace Foundations of LLMs? ⬇️

graph LR

Foundations["📚 Foundations of Large Language Models (LLMs)"] --> ML["1️⃣ Mathematics for Machine Learning"]

Foundations["📚 Foundations of Large Language Models (LLMs)"] --> Python["2️⃣ Python for Machine Learning"]

Foundations["📚 Foundations of Large Language Models (LLMs)"] --> NN["3️⃣ Neural Networks"]

Foundations["📚 Foundations of Large Language Models (LLMs)"] --> NLP["4️⃣ Natural Language Processing (NLP)"]

ML["1️⃣ Mathematics for Machine Learning"] --> LA["📐 Linear Algebra"]

ML["1️⃣ Mathematics for Machine Learning"] --> Calculus["📏 Calculus"]

ML["1️⃣ Mathematics for Machine Learning"] --> Probability["📊 Probability & Statistics"]

Python["2️⃣ Python for Machine Learning"] --> PB["🐍 Python Basics"]

Python["2️⃣ Python for Machine Learning"] --> DS["📊 Data Science Libraries"]

Python["2️⃣ Python for Machine Learning"] --> DP["🔄 Data Preprocessing"]

Python["2️⃣ Python for Machine Learning"] --> MLL["🤖 Machine Learning Libraries"]

NN["3️⃣ Neural Networks"] --> Fundamentals["🔧 Fundamentals"]

NN["3️⃣ Neural Networks"] --> TO["⚙️ Training & Optimization"]

NN["3️⃣ Neural Networks"] --> Overfitting["📉 Overfitting"]

NN["3️⃣ Neural Networks"] --> MLP["🧠 Implementation of MLP"]

NLP["4️⃣ Natural Language Processing (NLP)"] --> TP["📝 Text Preprocessing"]

NLP["4️⃣ Natural Language Processing (NLP)"] --> FET["🔍 Feature Extraction Techniques"]

NLP["4️⃣ Natural Language Processing (NLP)"] --> WE["🌐 Word Embedding"]

NLP["4️⃣ Natural Language Processing (NLP)"] --> RNN["🔄 Recurrent Neural Network"]

Before mastering machine learning, it's essential to grasp the fundamental mathematical concepts that underpin these algorithms.

| Concept | Description |

|---|---|

| Linear Algebra | Crucial for understanding many algorithms, especially in deep learning. Key concepts include vectors, matrices, determinants, eigenvalues, eigenvectors, vector spaces, and linear transformations. |

| Calculus | Important for optimizing continuous functions in many machine learning algorithms. Essential topics include derivatives, integrals, limits, series, multivariable calculus, and gradients. |

| Probability and Statistics | Vital for understanding how models learn from data and make predictions. Key concepts encompass probability theory, random variables, probability distributions, expectations, variance, covariance, correlation, hypothesis testing, confidence intervals, maximum likelihood estimation, and Bayesian inference. |

| Reference | Description | Link |

|---|---|---|

| 3Blue1Brown - The Essence of Linear Algebra | Offers a series of videos providing geometric intuition to fundamental linear algebra concepts. | 🔗 |

| StatQuest with Josh Starmer - Statistics Fundamentals | Provides clear and straightforward explanations for various statistical concepts through video tutorials. | 🔗 |

| AP Statistics Intuition by Ms Aerin | Curates a collection of Medium articles offering intuitive insights into different probability distributions. | 🔗 |

| Immersive Linear Algebra | Presents an alternative visual approach to understanding linear algebra concepts. | 🔗 |

| Khan Academy - Linear Algebra | Tailored for beginners, this resource provides intuitive explanations for fundamental linear algebra topics. | 🔗 |

| Khan Academy - Calculus | Delivers an interactive course covering the essentials of calculus comprehensively. | 🔗 |

| Khan Academy - Probability and Statistics | Offers easy-to-follow material for learning probability and statistics concepts. | 🔗 |

| Concept | Description |

|---|---|

| Python Basics | Mastery of Python programming entails understanding its basic syntax, data types, error handling, and object-oriented programming principles. |

| Data Science Libraries | Familiarity with essential libraries such as NumPy for numerical operations, Pandas for data manipulation, and Matplotlib and Seaborn for data visualization is crucial for effective data analysis. |

| Data Preprocessing | This phase involves crucial tasks such as feature scaling, handling missing data, outlier detection, categorical data encoding, and data partitioning into training, validation, and test sets to ensure data quality and model performance. |

| Machine Learning Libraries | Proficiency with Scikit-learn, a comprehensive library for machine learning, is indispensable. Understanding and implementing algorithms like linear regression, logistic regression, decision trees, random forests, k-nearest neighbors (K-NN), and K-means clustering are essential for building predictive models. Additionally, familiarity with dimensionality reduction techniques like PCA and t-SNE aids in visualizing complex data structures effectively. |

| Reference | Description | Link |

|---|---|---|

| Real Python | A comprehensive resource offering articles and tutorials for both beginner and advanced Python concepts. | 🔗 |

| freeCodeCamp - Learn Python | A lengthy video providing a thorough introduction to all core Python concepts. | 🔗 |

| Python Data Science Handbook | A free digital book that is an excellent resource for learning pandas, NumPy, Matplotlib, and Seaborn. | 🔗 |

| freeCodeCamp - Machine Learning for Everybody | A practical introduction to various machine learning algorithms for beginners. | 🔗 |

| Udacity - Intro to Machine Learning | An introductory course on machine learning for beginners, covering fundamental algorithms. | 🔗 |

| Concept | Description |

|---|---|

| Fundamentals | Understand the basic structure of a neural network, including layers, weights, biases, and activation functions like sigmoid, tanh, and ReLU. |

| Training and Optimization | Learn about backpropagation and various loss functions such as Mean Squared Error (MSE) and Cross-Entropy. Become familiar with optimization algorithms like Gradient Descent, Stochastic Gradient Descent, RMSprop, and Adam. |

| Overfitting | Grasp the concept of overfitting, where a model performs well on training data but poorly on unseen data, and explore regularization techniques like dropout, L1/L2 regularization, early stopping, and data augmentation to mitigate it. |

| Implement a Multilayer Perceptron (MLP) | Build a Multilayer Perceptron (MLP), also known as a fully connected network, using PyTorch. |

| Reference | Description | Link |

|---|---|---|

| 3Blue1Brown - But what is a Neural Network? | This video provides an intuitive explanation of neural networks and their inner workings. | 🔗 |

| freeCodeCamp - Deep Learning Crash Course | This video efficiently introduces the most important concepts in deep learning. | 🔗 |

| Fast.ai - Practical Deep Learning | A free course designed for those with coding experience who want to learn about deep learning. | 🔗 |

| Patrick Loeber - PyTorch Tutorials | A series of videos for complete beginners to learn about PyTorch. | 🔗 |

| Concept | Description |

|---|---|

| Text Preprocessing | Learn various text preprocessing steps such as tokenization (splitting text into words or sentences), stemming (reducing words to their root form), lemmatization (similar to stemming but considers the context), and stop word removal. |

| Feature Extraction Techniques | Become familiar with techniques to convert text data into a format understandable by machine learning algorithms. Key methods include Bag-of-Words (BoW), Term Frequency-Inverse Document Frequency (TF-IDF), and n-grams. |

| Word Embeddings | Understand word embeddings, a type of word representation that allows words with similar meanings to have similar representations. Key methods include Word2Vec, GloVe, and FastText. |

| Recurrent Neural Networks (RNNs) | Learn about RNNs, a type of neural network designed to work with sequence data, and explore LSTMs and GRUs, two RNN variants capable of learning long-term dependencies. |

| Reference | Description | Link |

|---|---|---|

| RealPython - NLP with spaCy in Python | An exhaustive guide on using the spaCy library for NLP tasks in Python. | 🔗 |

| Kaggle - NLP Guide | A collection of notebooks and resources offering a hands-on explanation of NLP in Python. | 🔗 |

| Jay Alammar - The Illustrated Word2Vec | A detailed reference for understanding the Word2Vec architecture. | 🔗 |

| Jake Tae - PyTorch RNN from Scratch | A practical and straightforward implementation of RNN, LSTM, and GRU models in PyTorch. | 🔗 |

| colah's blog - Understanding LSTM Networks | A theoretical article explaining LSTM networks. | 🔗 |

In this segment of the curriculum, participants delve into mastering the creation of top-notch LLMs through cutting-edge methodologies.

⬇️ Ready to Embrace LLM Science? ⬇️

graph LR

Scientist["Art of LLM Science 👩🔬"] --> Architecture["The LLM architecture 🏗️"]

Scientist["Art of LLM Science 👩🔬"] --> Instruction["Building an instruction dataset 📚"]

Scientist["Art of LLM Science 👩🔬"] --> Pretraining["Pretraining models 🛠️"]

Scientist["Art of LLM Science 👩🔬"] --> FineTuning["Supervised Fine-Tuning 🎯"]

Scientist["Art of LLM Science 👩🔬"] --> RLHF["RLHF 🔍"]

Scientist["Art of LLM Science 👩🔬"] --> Evaluation["Evaluation 📊"]

Scientist["Art of LLM Science 👩🔬"] --> Quantization["Quantization ⚖️"]

Scientist["Art of LLM Science 👩🔬"] --> Trends["New Trends 📈"]

Architecture["The LLM architecture 🏗️"] --> HLV["High Level View 🔍"]

Architecture["The LLM architecture 🏗️"] --> Tokenization["Tokenization 🔠"]

Architecture["The LLM architecture 🏗️"] --> Attention["Attention Mechanisms 🧠"]

Architecture["The LLM architecture 🏗️"] --> Generation["Text Generation ✍️"]

Instruction["Building an instruction dataset 📚"] --> Alpaca["Alpaca-like dataset 🦙"]

Instruction["Building an instruction dataset 📚"] --> Advanced["Advanced Techniques 📈"]

Instruction["Building an instruction dataset 📚"] --> Filtering["Filtering Data 🔍"]

Instruction["Building an instruction dataset 📚"] --> Prompt["Prompt Templates 📝"]

Pretraining["Pretraining models 🛠️"] --> Pipeline["Data Pipeline 🚀"]

Pretraining["Pretraining models 🛠️"] --> CLM["Casual Language Modeling 📝"]

Pretraining["Pretraining models 🛠️"] --> Scaling["Scaling Laws 📏"]

Pretraining["Pretraining models 🛠️"] --> HPC["High-Performance Computing 💻"]

FineTuning["Supervised Fine-Tuning 🎯"] --> Full["Full fine-tuning 🛠️"]

FineTuning["Supervised Fine-Tuning 🎯"] --> Lora["Lora and QLoRA 🌀"]

FineTuning["Supervised Fine-Tuning 🎯"] --> Axoloti["Axoloti 🦠"]

FineTuning["Supervised Fine-Tuning 🎯"] --> DeepSpeed["DeepSpeed ⚡"]

RLHF["RLHF 🔍"] --> Preference["Preference Datasets 📝"]

RLHF["RLHF 🔍"] --> Optimization["Proximal Policy Optimization 🎯"]

RLHF["RLHF 🔍"] --> DPO["Direct Preference Optimization 📈"]

Evaluation["Evaluation 📊"] --> Traditional["Traditional Metrics 📏"]

Evaluation["Evaluation 📊"] --> General["General Benchmarks 📈"]

Evaluation["Evaluation 📊"] --> Task["Task-specific Benchmarks 📋"]

Evaluation["Evaluation 📊"] --> HF["Human Evaluation 👩🔬"]

Quantization["Quantization ⚖️"] --> Base["Base Techniques 🛠️"]

Quantization["Quantization ⚖️"] --> GGUF["GGUF and llama.cpp 🐐"]

Quantization["Quantization ⚖️"] --> GPTQ["GPTQ and EXL2 🤖"]

Quantization["Quantization ⚖️"] --> AWQ["AWQ 🚀"]

Trends["New Trends 📈"] --> Positional["Positional Embeddings 🎯"]

Trends["New Trends 📈"] --> Merging["Model Merging 🔄"]

Trends["New Trends 📈"] --> MOE["Mixture of Experts 🎭"]

Trends["New Trends 📈"] --> Multimodal["Multimodal Models 📷"]An overview of the Transformer architecture, with emphasis on inputs (tokens) and outputs (logits), and the importance of understanding the vanilla attention mechanism and its improved versions.

| Concept | Description |

|---|---|

| Transformer Architecture (High-Level) | Review encoder-decoder Transformers, specifically the decoder-only GPT architecture used in modern LLMs. |

| Tokenization | Understand how raw text is converted into tokens (words or subwords) for the model to process. |

| Attention Mechanisms | Grasp the theory behind attention, including self-attention and scaled dot-product attention, which allows the model to focus on relevant parts of the input during output generation. |

| Text Generation | Learn different methods the model uses to generate output sequences. Common strategies include greedy decoding, beam search, top-k sampling, and nucleus sampling. |

| Reference | Description | Link |

|---|---|---|

| The Illustrated Transformer by Jay Alammar | A visual and intuitive explanation of the Transformer model | 🔗 |

| The Illustrated GPT-2 by Jay Alammar | Focuses on the GPT architecture, similar to Llama's. | 🔗 |

| Visual intro to Transformers by 3Blue1Brown | Simple visual intro to Transformers | 🔗 |

| LLM Visualization by Brendan Bycroft | 3D visualization of LLM internals | 🔗 |

| nanoGPT by Andrej Karpathy | Reimplementation of GPT from scratch (for programmers) | 🔗 |

| Decoding Strategies in LLMs | Provides code and visuals for decoding strategies | 🔗 |

While it's easy to find raw data from Wikipedia and other websites, it's difficult to collect pairs of instructions and answers in the wild. Like in traditional machine learning, the quality of the dataset will directly influence the quality of the model, which is why it might be the most important component in the fine-tuning process.

| Concept | Description |

|---|---|

| Alpaca-like dataset | This dataset generation method utilizes the OpenAI API (GPT) to synthesize data from scratch, allowing for the specification of seeds and system prompts to foster diversity within the dataset. |

| Advanced techniques | Delve into methods for enhancing existing datasets with Evol-Instruct, and explore approaches for generating top-tier synthetic data akin to those outlined in the Orca and phi-1 research papers. |

| Filtering data | Employ traditional techniques such as regex, near-duplicate removal, and prioritizing answers with substantial token counts to refine datasets. |

| Prompt templates | Recognize the absence of a definitive standard for structuring instructions and responses, underscoring the importance of familiarity with various chat templates like ChatML and Alpaca. |

| Reference | Description | Link |

|---|---|---|

| Preparing a Dataset for Instruction tuning by Thomas Capelle | Explores the Alpaca and Alpaca-GPT4 datasets and discusses formatting methods. | 🔗 |

| Generating a Clinical Instruction Dataset by Solano Todeschini | Provides a tutorial on creating a synthetic instruction dataset using GPT-4. | 🔗 |

| GPT 3.5 for news classification by Kshitiz Sahay | Demonstrates using GPT 3.5 to create an instruction dataset for fine-tuning Llama 2 in news classification. | 🔗 |

| Dataset creation for fine-tuning LLM | Notebook containing techniques to filter a dataset and upload the result. | 🔗 |

| Chat Template by Matthew Carrigan | Hugging Face's page about prompt templates | 🔗 |

Pre-training, being both lengthy and expensive, is not the primary focus of this course. While it's beneficial to grasp the fundamentals of pre-training, practical experience in this area is not mandatory.

| Concept | Description |

|---|---|

| Data pipeline | Pre-training involves handling vast datasets, such as the 2 trillion tokens used in Llama 2, which necessitates tasks like filtering, tokenization, and vocabulary preparation. |

| Causal language modeling | Understand the distinction between causal and masked language modeling, including insights into the corresponding loss functions. Explore efficient pre-training techniques through resources like Megatron-LM or gpt-neox. |

| Scaling laws | Delve into the scaling laws, which elucidate the anticipated model performance based on factors like model size, dataset size, and computational resources utilized during training. |

| High-Performance Computing | While beyond the scope of this discussion, a deeper understanding of HPC becomes essential for those considering building their own LLMs from scratch, encompassing aspects like hardware selection and distributed workload management. |

| Reference | Description | Link |

|---|---|---|

| LLMDataHub by Junhao Zhao | Offers a carefully curated collection of datasets tailored for pre-training, fine-tuning, and RLHF. | 🔗 |

| Training a causal language model from scratch by Hugging Face | Guides users through the process of pre-training a GPT-2 model from the ground up using the transformers library. | 🔗 |

| TinyLlama by Zhang et al. | Provides insights into the training process of a Llama model from scratch, offering a comprehensive understanding. | 🔗 |

| Causal language modeling by Hugging Face | Explores the distinctions between causal and masked language modeling, alongside a tutorial on efficiently fine-tuning a DistilGPT-2 model. | 🔗 |

| Chinchilla's wild implications by nostalgebraist | Delves into the scaling laws and their implications for LLMs, offering valuable insights into their broader significance. | 🔗 |

| BLOOM by BigScience | Provides a comprehensive overview of the BLOOM model's construction, offering valuable insights into its engineering aspects and encountered challenges. | 🔗 |

| OPT-175 Logbook by Meta | Offers research logs detailing the successes and failures encountered during the pre-training of a large language model with 175B parameters. | 🔗 |

| LLM 360 | Presents a comprehensive framework for open-source LLMs, encompassing training and data preparation code, datasets, evaluation metrics, and models. | 🔗 |

Pre-trained models are trained to predict the next word, so they're not great as assistants. But with SFT, you can adjust them to follow instructions. Plus, you can fine-tune them on different data, even private stuff GPT-4 hasn't seen, and use them without needing paid APIs like OpenAI's.

| Concept | Description |

|---|---|

| Full fine-tuning | Full fine-tuning involves training all parameters in the model, though it's not the most efficient approach, it can yield slightly improved results. |

| LoRA | LoRA, a parameter-efficient technique (PEFT) based on low-rank adapters, focuses on training only these adapters rather than all model parameters. |

| QLoRA | QLoRA, another PEFT stemming from LoRA, also quantizes model weights to 4 bits and introduces paged optimizers to manage memory spikes efficiently. |

| Axolotl | Axolotl stands as a user-friendly and potent fine-tuning tool, extensively utilized in numerous state-of-the-art open-source models. |

| DeepSpeed | DeepSpeed facilitates efficient pre-training and fine-tuning of large language models across multi-GPU and multi-node settings, often integrated within Axolotl for enhanced performance. |

| Reference | Description | Link |

|---|---|---|

| The Novice's LLM Training Guide by Alpin | Provides an overview of essential concepts and parameters for fine-tuning LLMs. | 🔗 |

| LoRA insights by Sebastian Raschka | Offers practical insights into LoRA and guidance on selecting optimal parameters. | 🔗 |

| Fine-Tune Your Own Llama 2 Model | Presents a hands-on tutorial on fine-tuning a Llama 2 model using Hugging Face libraries. | 🔗 |

| Padding Large Language Models by Benjamin Marie | Outlines best practices for padding training examples in causal LLMs. | 🔗 |

Following supervised fine-tuning, RLHF serves as a crucial step in harmonizing the LLM's responses with human expectations. This entails acquiring preferences from human or artificial feedback, thereby mitigating biases, implementing model censorship, or fostering more utilitarian behavior. RLHF is notably more intricate than SFT and is frequently regarded as discretionary.

| Concept | Description |

|---|---|

| Preference datasets | Typically containing several answers with some form of ranking, these datasets are more challenging to produce than instruction datasets. |

| Proximal Policy Optimization | This algorithm utilizes a reward model to predict whether a given text is highly ranked by humans. It then optimizes the SFT model using a penalty based on KL divergence. |

| Direct Preference Optimization | DPO simplifies the process by framing it as a classification problem. It employs a reference model instead of a reward model (requiring no training) and only necessitates one hyperparameter, rendering it more stable and efficient. |

| Reference | Description | Link |

|---|---|---|

| An Introduction to Training LLMs using RLHF by Ayush Thakur | Explain why RLHF is desirable to reduce bias and increase performance in LLMs. | 🔗 |

| Illustration RLHF by Hugging Face | Introduction to RLHF with reward model training and fine-tuning with reinforcement learning. | 🔗 |

| StackLLaMA by Hugging Face | Tutorial to efficiently align a LLaMA model with RLHF using the transformers library | 🔗 |

| LLM Training RLHF and Its Alternatives by Sebastian Rashcka | Overview of the RLHF process and alternatives like RLAIF. | 🔗 |

| Fine-tune Llama2 with DPO | Tutorial to fine-tune a Llama2 model with DPO | 🔗 |

Assessing LLMs is an often overlooked aspect of the pipeline, characterized by its time-consuming nature and moderate reliability. Your evaluation criteria should be tailored to your downstream task, while bearing in mind Goodhart's law: "When a measure becomes a target, it ceases to be a good measure."

| Concept | Description |

|---|---|

| Traditional metrics | Metrics like perplexity and BLEU score, while less favored now due to their contextual limitations, remain crucial for comprehension and determining their applicable contexts. |

| General benchmarks | The primary benchmark for general-purpose LLMs, such as ChatGPT, is the Open LLM Leaderboard, which is founded on the Language Model Evaluation Harness. Other notable benchmarks include BigBench and MT-Bench. |

| Task-specific benchmarks | Tasks like summarization, translation, and question answering boast dedicated benchmarks, metrics, and even subdomains (e.g., medical, financial), exemplified by PubMedQA for biomedical question answering. |

| Human evaluation | The most dependable evaluation method entails user acceptance rates or human-comparison metrics. Additionally, logging user feedback alongside chat traces, facilitated by tools like LangSmith, aids in pinpointing potential areas for enhancement. |

| Reference | Description | Link |

|---|---|---|

| Perplexity of fixed-length models by Hugging Face | Provides an overview of perplexity along with code to implement it using the transformers library. | 🔗 |

| BLEU at your own risk by Rachael Tatman | Offers insights into the BLEU score, highlighting its various issues through examples. | 🔗 |

| A Survey on Evaluation of LLMs by Chang et al. | Presents a comprehensive paper covering what to evaluate, where to evaluate, and how to evaluate language models. | 🔗 |

| Chatbot Arena Leaderboard by lmsys | Showcases an Elo rating system for general-purpose language models, based on comparisons made by humans. | 🔗 |

Quantization involves converting the weights (and activations) of a model to lower precision. For instance, weights initially stored using 16 bits may be transformed into a 4-bit representation. This technique has gained significance in mitigating the computational and memory expenses linked with LLMs

| Concept | Description |

|---|---|

| Base techniques | Explore various levels of precision (FP32, FP16, INT8, etc.) and learn how to conduct naïve quantization using techniques like absmax and zero-point. |

| GGUF and llama.cpp | Originally intended for CPU execution, llama.cpp and the GGUF format have emerged as popular tools for running LLMs on consumer-grade hardware. |

| GPTQ and EXL2 | GPTQ and its variant, the EXL2 format, offer remarkable speed but are limited to GPU execution. However, quantizing models using these formats can be time-consuming. |

| AWQ | This newer format boasts higher accuracy compared to GPTQ, as indicated by lower perplexity, but demands significantly more VRAM and may not necessarily exhibit faster performance. |

| Reference | Description | Link |

|---|---|---|

| Introduction to quantization | Offers an overview of quantization, including absmax and zero-point quantization, and demonstrates LLM.int8() with accompanying code. | 🔗 |

| Quantize Llama models with llama.cpp | Provides a tutorial on quantizing a Llama 2 model using llama.cpp and the GGUF format. | 🔗 |

| 4-bit LLM Quantization with GPTQ | Offers a tutorial on quantizing an LLM using the GPTQ algorithm with AutoGPTQ. | 🔗 |

| ExLlamaV2 | Presents a guide on quantizing a Mistral model using the EXL2 format and running it with the ExLlamaV2 library, touted as the fastest library for LLMs. | 🔗 |

| Understanding Activation-Aware Weight Quantization by FriendliAI | Provides an overview of the AWQ technique and its associated benefits. | 🔗 |

| Concept | Description |

|---|---|

| Positional embeddings | Explore how LLMs encode positions, focusing on relative positional encoding schemes like RoPE. Implement extensions to context length using techniques such as YaRN (which multiplies the attention matrix by a temperature factor) or ALiBi (applying attention penalty based on token distance). |

| Model merging | Model merging has gained popularity as a method for creating high-performance models without additional fine-tuning. The widely-used mergekit library incorporates various merging methods including SLERP, DARE, and TIES. |

| Mixture of Experts | The resurgence of the MoE architecture, exemplified by Mixtral, has led to the emergence of alternative approaches like frankenMoE, seen in community-developed models such as Phixtral, offering cost-effective and high-performance alternatives. |

| Multimodal models | These models, such as CLIP, Stable Diffusion, or LLaVA, process diverse inputs (text, images, audio, etc.) within a unified embedding space, enabling versatile applications like text-to-image generation. |

| glaive-function-calling-v2 | High-quality dataset with pairs of instructions and answers in different languages. See Locutusque/function-calling-chatml for a variant without conversation tags. |

| Agent-FLAN | Mix of AgentInstruct, ToolBench, and ShareGPT datasets. |

| Reference | Description | Link |

|---|---|---|

| Extending the RoPE by EleutherAI | Article summarizing various position-encoding techniques. | 🔗 |

| Understanding YaRN by Rajat Chawla | Introduction to YaRN. | 🔗 |

| Merge LLMs with mergekit | Tutorial on model merging using mergekit. | 🔗 |

| Mixture of Experts Explained by Hugging Face | Comprehensive guide on MoEs and their functioning. | 🔗 |

| Large Multimodal Models by Chip Huyen: | Overview of multimodal systems and recent developments in the field. | 🔗 |

Learn to create and deploy robust LLM-powered applications, focusing on model augmentation and practical deployment strategies for production environments.

⬇️ Ready to Build Production-Ready LLM Applications?⬇️

graph LR

Scientist["Production-Ready LLM Applications 👩🔬"] --> Architecture["Running LLMs 🏗️"]

Scientist --> Storage["Building a Vector Storage 📦"]

Scientist --> Retrieval["Retrieval Augmented Generation 🔍"]

Scientist --> AdvancedRAG["Advanced RAG ⚙️"]

Scientist --> Optimization["Inference Optimization ⚡"]

Scientist --> Deployment["Deploying LLMs 🚀"]

Scientist --> Secure["Securing LLMs 🔒"]

Architecture --> APIs["LLM APIs 🌐"]

Architecture --> OpenSource["Open Source LLMs 🌍"]

Architecture --> PromptEng["Prompt Engineering 💬"]

Architecture --> StructOutputs["Structure Outputs 🗂️"]

Storage --> Ingest["Ingesting Documents 📥"]

Storage --> Split["Splitting Documents ✂️"]

Storage --> Embed["Embedding Models 🧩"]

Storage --> VectorDB["Vector Databases 📊"]

Retrieval --> Orchestrators["Orchestrators 🎼"]

Retrieval --> Retrievers["Retrievers 🤖"]

Retrieval --> Memory["Memory 🧠"]

Retrieval --> Evaluation["Evaluation 📈"]

AdvancedRAG --> Query["Query Construction 🔧"]

AdvancedRAG --> Agents["Agents and Tools 🛠️"]

AdvancedRAG --> PostProcess["Post Processing 🔄"]

AdvancedRAG --> Program["Program LLMs 💻"]

Optimization --> FlashAttention["Flash Attention ⚡"]

Optimization --> KeyValue["Key-value Cache 🔑"]

Optimization --> SpecDecoding["Speculative Decoding 🚀"]

Deployment --> LocalDeploy["Local Deployment 🖥️"]

Deployment --> DemoDeploy["Demo Deployment 🎤"]

Deployment --> ServerDeploy["Server Deployment 🖧"]

Deployment --> EdgeDeploy["Edge Deployment 🌐"]

Secure --> PromptEngSecure["Prompt Engineering 🔐"]

Secure --> Backdoors["Backdoors 🚪"]

Secure --> Defensive["Defensive measures 🛡️"]Running LLMs can be demanding due to significant hardware requirements. Based on your use case, you might opt to use a model through an API (like GPT-4) or run it locally. In either scenario, employing additional prompting and guidance techniques can improve and constrain the output for your applications.

| Category | Details |

|---|---|

| LLM APIs | APIs offer a convenient way to deploy LLMs. This space is divided between private LLMs (OpenAI, Google, Anthropic, Cohere, etc.) and open-source LLMs (OpenRouter, Hugging Face, Together AI, etc.). |

| Open-source LLMs | The Hugging Face Hub is an excellent resource for finding LLMs. Some can be run directly in Hugging Face Spaces, or downloaded and run locally using apps like LM Studio or through the command line interface with llama.cpp or Ollama. |

| Prompt Engineering | Techniques such as zero-shot prompting, few-shot prompting, chain of thought, and ReAct are commonly used in prompt engineering. These methods are more effective with larger models but can also be adapted for smaller ones. |

| Structuring Outputs | Many tasks require outputs to be in a specific format, such as a strict template or JSON. Libraries like LMQL, Outlines, and Guidance can help guide the generation process to meet these structural requirements. |

| Reference | Description | Link |

|---|---|---|

| Run an LLM locally with LM Studio by Nisha Arya | A brief guide on how to use LM Studio for running a local LLM. | 🔗 |

| Prompt engineering guide by DAIR.AI | An extensive list of prompt techniques with examples. | 🔗 |

| Outlines - Quickstart | A quickstart guide detailing the guided generation techniques enabled by the Outlines library. | 🔗 |

| LMQL - Overview | An introduction to the LMQL language, explaining its features and usage. | 🔗 |

Creating a vector storage is the first step in building a Retrieval Augmented Generation (RAG) pipeline. This involves loading and splitting documents, and then using the relevant chunks to produce vector representations (embeddings) that are stored for future use during inference.

| Category | Details |

|---|---|

| Ingesting Documents | Document loaders are convenient wrappers that handle various formats such as PDF, JSON, HTML, Markdown, etc. They can also retrieve data directly from some databases and APIs (e.g., GitHub, Reddit, Google Drive). |

| Splitting Documents | Text splitters break down documents into smaller, semantically meaningful chunks. Instead of splitting text after a certain number of characters, it's often better to split by header or recursively, with some additional metadata. |

| Embedding Models | Embedding models convert text into vector representations, providing a deeper and more nuanced understanding of language, which is essential for performing semantic search. |

| Vector Databases | Vector databases (like Chroma, Pinecone, Milvus, FAISS, Annoy, etc.) store embedding vectors and enable efficient retrieval of data based on vector similarity. |

| Reference | Description | Link |

|---|---|---|

| LangChain - Text splitters | A list of different text splitters implemented in LangChain. | 🔗 |

| Sentence Transformers library | A popular library for embedding models. | 🔗 |

| MTEB Leaderboard | Leaderboard for evaluating embedding models. | 🔗 |

| The Top 5 Vector Databases by Moez Ali | A comparison of the best and most popular vector databases. | 🔗 |

Using RAG, LLMs access relevant documents from a database to enhance the precision of their responses. This method is widely used to expand the model's knowledge base without the need for fine-tuning.

| Category | Details |

|---|---|

| Orchestrators | Orchestrators (like LangChain, LlamaIndex, FastRAG, etc.) are popular frameworks to connect your LLMs with tools, databases, memories, etc. and augment their abilities. |

| Retrievers | User instructions are not optimized for retrieval. Different techniques (e.g., multi-query retriever, HyDE, etc.) can be applied to rephrase/expand them and improve performance. |

| Memory | To remember previous instructions and answers, LLMs and chatbots like ChatGPT add this history to their context window. This buffer can be improved with summarization (e.g., using a smaller LLM), a vector store + RAG, etc. |

| Evaluation | We need to evaluate both the document retrieval (context precision and recall) and generation stages (faithfulness and answer relevancy). It can be simplified with tools Ragas and DeepEval. |

| Reference | Description | Link |

|---|---|---|

| Llamaindex - High-level concepts | Main concepts to know when building RAG pipelines. | 🔗 |

| Pinecone - Retrieval Augmentation | Overview of the retrieval augmentation process. | 🔗 |

| LangChain - Q&A with RAG | Step-by-step tutorial to build a typical RAG pipeline. | 🔗 |

| LangChain - Memory types | List of different types of memories with relevant usage. | 🔗 |

| RAG pipeline - Metrics | Overview of the main metrics used to evaluate RAG pipelines. | 🔗 |

Real-world applications often demand intricate pipelines that utilize SQL or graph databases and dynamically choose the appropriate tools and APIs. These sophisticated methods can improve a basic solution and offer extra capabilities.

| Category | Details |

|---|---|

| Query construction | Structured data stored in traditional databases requires a specific query language like SQL, Cypher, metadata, etc. We can directly translate the user instruction into a query to access the data with query construction. |

| Agents and tools | Agents augment LLMs by automatically selecting the most relevant tools to provide an answer. These tools can be as simple as using Google or Wikipedia, or more complex like a Python interpreter or Jira. |

| Post-processing | The final step processes the inputs that are fed to the LLM. It enhances the relevance and diversity of documents retrieved with re-ranking, RAG-fusion, and classification. |

| Program LLMs | Frameworks like DSPy allow you to optimize prompts and weights based on automated evaluations in a programmatic way. |

| Reference | Description | Link |

|---|---|---|

| LangChain - Query Construction | Blog post about different types of query construction. | 🔗 |

| LangChain - SQL | Tutorial on how to interact with SQL databases with LLMs, involving Text-to-SQL and an optional SQL agent. | 🔗 |

| Pinecone - LLM agents | Introduction to agents and tools with different types. | 🔗 |

| LLM Powered Autonomous Agents by Lilian Weng | More theoretical article about LLM agents. | 🔗 |

| LangChain - OpenAI's RAG | Overview of the RAG strategies employed by OpenAI, including post-processing. | 🔗 |

| DSPy in 8 Steps | General-purpose guide to DSPy introducing modules, signatures, and optimizers. | 🔗 |

Text generation is an expensive process that requires powerful hardware. Besides quantization, various techniques have been proposed to increase throughput and lower inference costs.

| Category | Details |

|---|---|

| Flash Attention | Optimization of the attention mechanism to transform its complexity from quadratic to linear, speeding up both training and inference. |

| Key-value cache | Understanding the key-value cache and the improvements introduced in Multi-Query Attention (MQA) and Grouped-Query Attention (GQA). |

| Speculative decoding | Using a small model to produce drafts that are then reviewed by a larger model to speed up text generation. |

| Reference | Description | Link |

|---|---|---|

| GPU Inference by Hugging Face | Explain how to optimize inference on GPUs. | 🔗 |

| LLM Inference by Databricks | Best practices for how to optimize LLM inference in production. | 🔗 |

| Optimizing LLMs for Speed and Memory by Hugging Face | Explain three main techniques to optimize speed and memory, namely quantization, Flash Attention, and architectural innovations. | 🔗 |

| Assisted Generation by Hugging Face | HF's version of speculative decoding, it's an interesting blog post about how it works with code to implement it. | 🔗 |

Deploying LLMs at scale is a complex engineering task that may require multiple GPU clusters. However, demos and local applications can often be achieved with significantly less complexity.

| Category | Details |

|---|---|

| Local deployment | Privacy is an important advantage that open-source LLMs have over private ones. Local LLM servers (LM Studio, Ollama, oobabooga, kobold.cpp, etc.) capitalize on this advantage to power local apps. |

| Demo deployment | Frameworks like Gradio and Streamlit are helpful to prototype applications and share demos. You can also easily host them online, for example using Hugging Face Spaces. |

| Server deployment | Deploying LLMs at scale requires cloud infrastructure (see also SkyPilot) or on-prem infrastructure and often leverages optimized text generation frameworks like TGI, vLLM, etc. |

| Edge deployment | In constrained environments, high-performance frameworks like MLC LLM and mnn-llm can deploy LLMs in web browsers, Android, and iOS. |

| Reference | Description | Link |

|---|---|---|

| Streamlit - Build a basic LLM app | Tutorial to make a basic ChatGPT-like app using Streamlit. | 🔗 |

| HF LLM Inference Container | Deploy LLMs on Amazon SageMaker using Hugging Face's inference container. | 🔗 |

| Philschmid blog by Philipp Schmid | Collection of high-quality articles about LLM deployment using Amazon SageMaker. | 🔗 |

| Optimizing latency by Hamel Husain | Comparison of TGI, vLLM, CTranslate2, and mlc in terms of throughput and latency. | 🔗 |

Along with the usual security concerns of software, LLMs face distinct vulnerabilities arising from their training and prompting methods.

| Category | Details |

|---|---|

| Prompt hacking | Techniques related to prompt engineering, including prompt injection (adding instructions to alter the model’s responses), data/prompt leaking (accessing original data or prompts), and jailbreaking (crafting prompts to bypass safety features). |

| Backdoors | Attack vectors targeting the training data itself, such as poisoning the training data with false information or creating backdoors (hidden triggers to alter the model’s behavior during inference). |

| Defensive measures | Protecting LLM applications involves testing them for vulnerabilities (e.g., using red teaming and tools like garak) and monitoring them in production (using a framework like langfuse). |

| Reference | Description | Link |

|---|---|---|

| OWASP LLM Top 10 by HEGO Wiki | List of the 10 most critical vulnerabilities found in LLM applications. | 🔗 |

| Prompt Injection Primer by Joseph Thacker | Short guide dedicated to prompt injection techniques for engineers. | 🔗 |

| LLM Security by @llm_sec | Extensive list of resources related to LLM security. | 🔗 |

| Red teaming LLMs by Microsoft | Guide on how to perform red teaming assessments with LLMs. | 🔗 |

| Article | Resources |

|---|---|

| LLMs Overview | 🔗 |

| NLP Embeddings | 🔗 |

| Preprocessing | 🔗 |

| Sampling | 🔗 |

| Tokenization | 🔗 |

| Transformer | 🔗 |

| Interview Preparation | 🔗 |

| Article | Resources |

|---|---|

| Generative Pre-trained Transformer (GPT) | 🔗 |

| Article | Resources |

|---|---|

| Activation Function | 🔗 |

| Fine Tuning Models | 🔗 |

| Enhancing Model Compression: Inference and Training Optimization Strategies | 🔗 |

| Model Summary | 🔗 |

| Splitting Datasets | 🔗 |

| Train Loss > Val Loss | 🔗 |

| Parameter Efficient Fine-Tuning | 🔗 |

| Gradient Descent and Backprop | 🔗 |

| Overfitting And Underfitting | 🔗 |

| Gradient Accumulation and Checkpointing | 🔗 |

| Flash Attention | 🔗 |

| Article | Resources |

|---|---|

| Quantization | 🔗 |

| Intro to Quantization | 🔗 |

| Knowledge Distillation | 🔗 |

| Pruning | 🔗 |

| DeepSpeed | 🔗 |

| Sharding | 🔗 |

| Mixed Precision Training | 🔗 |

| Inference Optimization | 🔗 |

| Article | Resources |

|---|---|

| Classification | 🔗 |

| Regression | 🔗 |

| Generative Text Models | 🔗 |

| Article | Resources |

|---|---|

| Open Source LLM Space for Commercial Use | 🔗 |

| Open Source LLM Space for Research Use | 🔗 |

| LLM Training Frameworks | 🔗 |

| Effective Deployment Strategies for Language Models | 🔗 |

| Tutorials about LLM | 🔗 |

| Courses about LLM | 🔗 |

| Deployment | 🔗 |

| Article | Resources |

|---|---|

| Lambda Labs vs AWS Cost Analysis | 🔗 |

| Neural Network Visualization | 🔗 |

| Title | Repository |

|---|---|

| Instruction based data prepare using OpenAI | 🔗 |

| Optimal Fine-Tuning using the Trainer API: From Training to Model Inference | 🔗 |

| Efficient Fine-tuning and inference LLMs with PEFT and LoRA | 🔗 |

| Efficient Fine-tuning and inference LLMs Accelerate | 🔗 |

| Efficient Fine-tuning with T5 | 🔗 |

| Train Large Language Models with LoRA and Hugging Face | 🔗 |

| Fine-Tune Your Own Llama 2 Model in a Colab Notebook | 🔗 |

| Guanaco Chatbot Demo with LLaMA-7B Model | 🔗 |

| PEFT Finetune-Bloom-560m-tagger | 🔗 |

| Finetune_Meta_OPT-6-1b_Model_bnb_peft | 🔗 |

| Finetune Falcon-7b with BNB Self Supervised Training | 🔗 |

| FineTune LLaMa2 with QLoRa | 🔗 |

| Stable_Vicuna13B_8bit_in_Colab | 🔗 |

| GPT-Neo-X-20B-bnb2bit_training | 🔗 |

| MPT-Instruct-30B Model Training | 🔗 |

| RLHF_Training_for_CustomDataset_for_AnyModel | 🔗 |

| Fine_tuning_Microsoft_Phi_1_5b_on_custom_dataset(dialogstudio) | 🔗 |

| Finetuning OpenAI GPT3.5 Turbo | 🔗 |

| Finetuning Mistral-7b FineTuning Model using Autotrain-advanced | 🔗 |

| RAG LangChain Tutorial | 🔗 |

| Mistral DPO Trainer | 🔗 |

| LLM Sharding | 🔗 |

| Integrating Unstructured and Graph Knowledge with Neo4j and LangChain for Enhanced Question | 🔗 |

| vLLM Benchmarking | 🔗 |

| Milvus Vector Database | 🔗 |

| Decoding Strategies | 🔗 |

| Peft QLora SageMaker Training | 🔗 |

| Optimize Single Model SageMaker Endpoint | 🔗 |

| Multi Adapter Inference | 🔗 |

| Inf2 LLM SM Deployment | 🔗 |

Text Chunk Visualization In Progress

|

🔗 |

| Fine-tune Llama 3 with ORPO | 🔗 |

| 4 bit LLM Quantization with GPTQ | 🔗 |

| Model Family Tree | 🔗 |

| Create MoEs with MergeKit | 🔗 |

| LLM Projects | Respository |

|---|---|

| CSVQConnect | 🔗 |

| AI_VIRTUAL_ASSISTANT | 🔗 |

| DocuBotMultiPDFConversationalAssistant | 🔗 |

| autogpt | 🔗 |

| meta_llama_2finetuned_text_generation_summarization | 🔗 |

| text_generation_using_Llama | 🔗 |

| llm_using_petals | 🔗 |

| llm_using_petals | 🔗 |

| Salesforce-xgen | 🔗 |

| text_summarization_using_open_llama_7b | 🔗 |

| Text_summarization_using_GPT-J | 🔗 |

| codllama | 🔗 |

| Image_to_text_using_LLaVA | 🔗 |

| Tabular_data_using_llamaindex | 🔗 |

| nextword_sentence_prediction | 🔗 |

| Text-Generation-using-DeciLM-7B-instruct | 🔗 |

| Gemini-blog-creation | 🔗 |

| Prepare_holiday_cards_with_Gemini_and_Sheets | 🔗 |

| Code-Generattion_using_phi2_llm | 🔗 |

| RAG-USING-GEMINI | 🔗 |

| Resturant-Recommendation-Multi-Modal-RAG-using-Gemini | 🔗 |

| slim-sentiment-tool | 🔗 |

| Synthetic-Data-Generation-Using-LLM | 🔗 |

| Architecture-for-building-a-Chat-Assistant | 🔗 |

| LLM-CHAT-ASSISTANT-WITH-DYNAMIC-CONTEXT-BASED-ON-QUERY | 🔗 |

| Text Classifier using LLM | 🔗 |

| Multiclass sentiment Analysis | 🔗 |

| Text-Generation-Using-GROQ | 🔗 |

| DataAgents | 🔗 |

| PandasQuery_tabular_data | 🔗 |

| Exploratory_Data_Analysis_using_LLM | 🔗 |

| Dataset | # | Authors | Date | Notes | Category |

|---|---|---|---|---|---|

| Buzz | 31.2M | Alignment Lab AI | May 2024 | Huge collection of 435 datasets with data augmentation, deduplication, and other techniques. | General Purpose |

| WebInstructSub | 2.39M | Yue et al. | May 2024 | Instructions created by retrieving document from Common Crawl, extracting QA pairs, and refining them. See the MAmmoTH2 paper (this is a subset). | General Purpose |

| Bagel | >2M? | Jon Durbin | Jan 2024 | Collection of datasets decontaminated with cosine similarity. | General Purpose |

| Hercules v4.5 | 1.72M | Sebastian Gabarain | Apr 2024 | Large-scale general-purpose dataset with math, code, RP, etc. See v4 for the list of datasets. | General Purpose |

| Dolphin-2.9 | 1.39M | Cognitive Computations | Apr 2023 | Large-scale general-purpose dataset used by the Dolphin models. | General Purpose |

| WildChat-1M | 1.04M | Zhao et al. | May 2023 | Real conversations between human users and GPT-3.5/4, including metadata. See the WildChat paper. | General Purpose |

| OpenHermes-2.5 | 1M | Teknium | Nov 2023 | Another large-scale dataset used by the OpenHermes models. | General Purpose |

| SlimOrca | 518k | Lian et al. | Sep 2023 | Curated subset of OpenOrca using GPT-4-as-a-judge to remove wrong answers. | General Purpose |

| Tulu V2 Mix | 326k | Ivison et al. | Nov 2023 | Mix of high-quality datasets. See Tulu 2 paper. | General Purpose |

| UltraInteract SFT | 289k | Yuan et al. | Apr 2024 | Focus on math, coding, and logic tasks with step-by-step answers. See Eurus paper. | General Purpose |

| NeurIPS-LLM-data | 204k | Jindal et al. | Nov 2023 | Winner of NeurIPS LLM Efficiency Challenge, with an interesting data preparation strategy. | General Purpose |

| UltraChat 200k | 200k | Tunstall et al., Ding et al. | Oct 2023 | Heavily filtered version of the UItraChat dataset, consisting of 1.4M dialogues generated by ChatGPT. | General Purpose |

| WizardLM_evol_instruct_V2 | 143k | Xu et al. | Jun 2023 | Latest version of Evol-Instruct applied to Alpaca and ShareGPT data. See WizardLM paper. | General Purpose |

| sft_datablend_v1 | 128k | NVIDIA | Jan 2024 | Blend of publicly available datasets: OASST, CodeContests, FLAN, T0, Open_Platypus, and GSM8K and others (45 total). | General Purpose |

| Synthia-v1.3 | 119k | Migel Tissera | Nov 2023 | High-quality synthetic data generated using GPT-4. | General Purpose |

| FuseChat-Mixture | 95k | Wan et al. | Feb 2024 | Selection of samples from high-quality datasets. See FuseChat paper. | General Purpose |

| oasst1 | 84.4k | Köpf et al. | Mar 2023 | Human-generated assistant-style conversation corpus in 35 different languages. See OASST1 paper and oasst2. | General Purpose |

| WizardLM_evol_instruct_70k | 70k | Xu et al. | Apr 2023 | Evol-Instruct applied to Alpaca and ShareGPT data. See WizardLM paper. | General Purpose |

| airoboros-3.2 | 58.7k | Jon Durbin | Dec 2023 | High-quality uncensored dataset. | General Purpose |

| ShareGPT_Vicuna_unfiltered | 53k | anon823 1489123 | Mar 2023 | Filtered version of the ShareGPT dataset, consisting of real conversations between users and ChatGPT. | General Purpose |

| lmsys-chat-1m-smortmodelsonly | 45.8k | Nebulous, Zheng et al. | Sep 2023 | Filtered version of lmsys-chat-1m with responses from GPT-4, GPT-3.5-turbo, Claude-2, Claude-1, and Claude-instant-1. | General Purpose |

| Open-Platypus | 24.9k | Lee et al. | Sep 2023 | Collection of datasets that were deduplicated using Sentence Transformers (it contains an NC dataset). See Platypus paper. | General Purpose |

| databricks-dolly-15k | 15k | Conover et al. | May 2023 | Generated by Databricks employees, prompt/response pairs in eight different instruction categories, including the seven outlined in the InstructGPT paper. | General Purpose |

| OpenMathInstruct-1 | 5.75M | Toshniwal et al. | Feb 2024 | Problems from GSM8K and MATH, solutions generated by Mixtral-8x7B. | Math |

| MetaMathQA | 395k | Yu et al. | Dec 2023 | Bootstrap mathematical questions by rewriting them from multiple perspectives. See MetaMath paper. | Math |

| MathInstruct | 262k | Yue et al. | Sep 2023 | Compiled from 13 math rationale datasets, six of which are newly curated, and focuses on chain-of-thought and program-of-thought. | Math |

| Orca-Math | 200k | Mitra et al. | Feb 2024 | Grade school math world problems generated using GPT4-Turbo. See Orca-Math paper. | Math |

| CodeFeedback-Filtered-Instruction | 157k | Zheng et al. | Feb 2024 | Filtered version of Magicoder-OSS-Instruct, ShareGPT (Python), Magicoder-Evol-Instruct, and Evol-Instruct-Code. | Code |

| Tested-143k-Python-Alpaca | 143k | Vezora | Mar 2024 | Collection of generated Python code that passed automatic tests to ensure high quality. | Code |

| glaive-code-assistant | 136k | Glaive.ai | Sep 2023 | Synthetic data of problems and solutions with ~60% Python samples. Also see the v2 version. | Code |

| Magicoder-Evol-Instruct-110K | 110k | Wei et al. | Nov 2023 | A decontaminated version of evol-codealpaca-v1. Decontamination is done in the same way as StarCoder (bigcode decontamination process). See Magicoder paper. | Code |

| dolphin-coder | 109k | Eric Hartford | Nov 2023 | Dataset transformed from leetcode-rosetta. | Code |

| synthetic_tex_to_sql | 100k | Gretel.ai | Apr 2024 | Synthetic text-to-SQL samples (~23M tokens), covering diverse domains. | Code |

| sql-create-context | 78.6k | b-mc2 | Apr 2023 | Cleansed and augmented version of the WikiSQL and Spider datasets. | Code |

| Magicoder-OSS-Instruct-75K | 75k | Wei et al. | Nov 2023 | OSS-Instruct dataset generated by gpt-3.5-turbo-1106. See Magicoder paper. |

Code |

| Code-Feedback | 66.4k | Zheng et al. | Feb 2024 | Diverse Code Interpreter-like dataset with multi-turn dialogues and interleaved text and code responses. See OpenCodeInterpreter paper. | Code |

| Open-Critic-GPT | 55.1k | Vezora | Jul 2024 | Use a local model to create, introduce, and identify bugs in code across multiple programming languages. | Code |

| self-oss-instruct-sc2-exec-filter-50k | 50.7k | Lozhkov et al. | Apr 2024 | Created in three steps with seed functions from TheStack v1, self-instruction with StarCoder2, and self-validation. See the blog post. | Code |

| Bluemoon | 290k | Squish42 | Jun 2023 | Posts from the Blue Moon roleplaying forum cleaned and scraped by a third party. | Conversation & Role-Play |

| PIPPA | 16.8k | Gosling et al., kingbri | Aug 2023 | Deduped version of Pygmalion's PIPPA in ShareGPT format. | Conversation & Role-Play |

| Capybara | 16k | LDJnr | Dec 2023 | Strong focus on information diversity across a wide range of domains with multi-turn conversations. | Conversation & Role-Play |

| RPGPT_PublicDomain-alpaca | 4.26k | practical dreamer | May 2023 | Synthetic dataset of public domain character dialogue in roleplay format made with build-a-dataset. | Conversation & Role-Play |

| Pure-Dove | 3.86k | LDJnr | Sep 2023 | Highly filtered multi-turn conversations between GPT-4 and real humans. | Conversation & Role-Play |

| Opus Samantha | 1.85k | macadelicc | Apr 2024 | Multi-turn conversations with Claude 3 Opus. | Conversation & Role-Play |

| LimaRP-augmented | 804 | lemonilia, grimulkan | Jan 2024 | Augmented and cleansed version of LimaRP, consisting of human roleplaying conversations. | Conversation & Role-Play |

| glaive-function-calling-v2 | 113k | Sahil Chaudhary | Sep 2023 | High-quality dataset with pairs of instructions and answers in different languages. See Locutusque/function-calling-chatml for a variant without conversation tags. |

Agent & Function calling |

| xlam-function-calling-60k | 60k | Salesforce | Jun 2024 | Samples created using a data generation pipeline designed to produce verifiable data for function-calling applications. | Agent & Function calling |

| Agent-FLAN | 34.4k | internlm | Mar 2024 | Mix of AgentInstruct, ToolBench, and ShareGPT datasets. | Agent & Function calling |

Alignment is an emerging field of study where you ensure that an AI system performs exactly what you want it to perform. In the context of LLMs specifically, alignment is a process that trains an LLM to ensure that the generated outputs align with human values and goals.

What are the current methods for LLM alignment?

You will find many alignment methods in research literature, we will only stick to 3 alignment methods for the sake of discussion

- Step 1 & 2: Train an LLM (pre-training for the base model + supervised/instruction fine-tuning for chat model)

- Step 3: RLHF uses an ancillary language model (it could be much smaller than the main LLM) to learn human preferences. This can be done using a preference dataset - it contains a prompt, and a response/set of responses graded by expert human labelers. This is called a “reward model”.

- Step 4: Use a reinforcement learning algorithm (eg: PPO - proximal policy optimization), where the LLM is the agent, the reward model provides a positive or negative reward to the LLM based on how well it’s responses align with the “human preferred responses”. In theory, it is as simple as that. However, implementation isn’t that easy - requiring lot of human experts and compute resources. To overcome the “expense” of RLHF, researchers developed DPO.

- RLHF : RLHF: Reinforcement Learning from Human Feedback

- Step 1&2 remain the same

- Step 4: DPO eliminates the need for the training of a reward model (i.e step 3). How? DPO defines an additional preference loss as a function of it’s policy and uses the language model directly as the reward model. The idea is simple, If you are already training such a powerful LLM, why not train itself to distinguish between good and bad responses, instead of using another model?

- DPO is shown to be more computationally efficient (in case of RLHF you also need to constantly monitor the behavior of the reward model) and has better performance than RLHF in several settings.

- Blog on DPO : Aligning LLMs with Direct Preference Optimization (DPO)— background, overview, intuition and paper summary

- The newest method out of all 3, ORPO combines Step 2, 3 & 4 into a single step - so the dataset required for this method is a combination of a fine-tuning + preference dataset.

- The supervised fine-tuning and alignment/preference optimization is performed in a single step. This is because the fine-tuning step, while allowing the model to specialize to tasks and domains, can also increase the probability of undesired responses from the model.

- ORPO combines the steps using a single objective function by incorporating an odds ratio (OR) term - reward preferred responses & penalizing rejected responses.

- Blog on ORPO : ORPO Outperforms SFT+DPO | Train Phi-2 with ORPO

| Datasets | Descriptions | Link |

|---|---|---|

| Rule-based filtering | Remove samples based on a list of unwanted words, like refusals and "As an AI assistant" | 🔗 |

| SemHash | Fuzzy deduplication based on fast embedding generation with a distilled model. | 🔗 |

| Datasets | Descriptions | Link |

|---|---|---|

| Distilabel | General-purpose framework that can generate and augment data (SFT, DPO) with techniques like UltraFeedback and DEITA | 🔗 |

| Auto Data | Lightweight library to automatically generate fine-tuning datasets with API models. | 🔗 |

| Bonito | Library for generating synthetic instruction tuning datasets for your data without GPT (see also AutoBonito). | 🔗 |

| Augmentoolkit | Framework to convert raw text into datasets using open-source and closed-source models. | 🔗 |

| Magpie | Your efficient and high-quality synthetic data generation pipeline by prompting aligned LLMs with nothing. | 🔗 |

| Genstruct | An instruction generation model, which is designed to generate valid instructions from raw data | 🔗 |

| DataDreamer | A python library for prompting and synthetic data generation. | 🔗 |

| Datasets | Descriptions | Link |

|---|---|---|

| llm-swarm | Generate synthetic datasets for pretraining or fine-tuning using either local LLMs or Inference Endpoints on the Hugging Face Hub | 🔗 |

| Cosmopedia | Hugging Face's code for generating the Cosmopedia dataset. | 🔗 |

| textbook_quality | A repository for generating textbook-quality data, mimicking the approach of the Microsoft's Phi models. | 🔗 |

| Datasets | Descriptions | Link |

|---|---|---|

| sentence-transformers | A python module for working with popular language embedding models. | 🔗 |

| Lilac | Tool to curate better data for LLMs, used by NousResearch, databricks, cohere, Alignment Lab AI. It can also apply filters. | 🔗 |

| Nomic Atlas | Interact with instructed data to find insights and store embeddings. | 🔗 |

| text-clustering) | Easily embed, cluster and semantically label text datasets | 🔗 |

| Datasets | Descriptions | Link |

|---|---|---|

| Trafilatura | Python and command-line tool to gather text and metadata on the web. Used for the creation of RefinedWeb(https://arxiv.org/abs/2306.01116). | 🔗 |

| marker | Convert PDF to markdown + JSON quickly with high accuracy | 🔗 |

| Resources | Link |

|---|---|

| Brown, Tom B. "Language models are few-shot learners." arXiv preprint arXiv:2005.14165 (2020). | 🔗 |

| Kambhampati, Subbarao, et al. "LLMs can't plan, but can help planning in LLM-modulo frameworks." arXiv preprint arXiv:2402.01817 (2024). | 🔗 |

After immersing myself in the recent GenAI text-based language model hype for nearly a month, I have made several observations about its performance on my specific tasks.

Please note that these observations are subjective and specific to my own experiences, and your conclusions may differ.

- We need a minimum of 7B parameter models (<7B) for optimal natural language understanding performance. Models with fewer parameters result in a significant decrease in performance. However, using models with more than 7 billion parameters requires a GPU with greater than 24GB VRAM (>24GB).

- Benchmarks can be tricky as different LLMs perform better or worse depending on the task. It is crucial to find the model that works best for your specific use case. In my experience, MPT-7B is still the superior choice compared to Falcon-7B.

- Prompts change with each model iteration. Therefore, multiple reworks are necessary to adapt to these changes. While there are potential solutions, their effectiveness is still being evaluated.

- For fine-tuning, you need at least one GPU with greater than 24GB VRAM (>24GB). A GPU with 32GB or 40GB VRAM is recommended.

- Fine-tuning only the last few layers to speed up LLM training/finetuning may not yield satisfactory results. I have tried this approach, but it didn't work well.

- Loading 8-bit or 4-bit models can save VRAM. For a 7B model, instead of requiring 16GB, it takes approximately 10GB or less than 6GB, respectively. However, this reduction in VRAM usage comes at the cost of significantly decreased inference speed. It may also result in lower performance in text understanding tasks.

- Those who are exploring LLM applications for their companies should be aware of licensing considerations. Training a model with another model as a reference and requiring original weights is not advisable for commercial settings.

- There are three major types of LLMs: basic (like GPT-2/3), chat-enabled, and instruction-enabled. Most of the time, basic models are not usable as they are and require fine-tuning. Chat versions tend to be the best, but they are often not open-source.

- Not every problem needs to be solved with LLMs. Avoid forcing a solution around LLMs. Similar to the situation with deep reinforcement learning in the past, it is important to find the most appropriate approach.

- I have tried but didn't use langchains and vector-dbs. I never needed them. Simple Python, embeddings, and efficient dot product operations worked well for me.

- LLMs do not need to have complete world knowledge. Humans also don't possess comprehensive knowledge but can adapt. LLMs only need to know how to utilize the available knowledge. It might be possible to create smaller models by separating the knowledge component.

- The next wave of innovation might involve simulating "thoughts" before answering, rather than simply predicting one word after another. This approach could lead to significant advancements.

- The overparameterization of LLMs presents a significant challenge: they tend to memorize extensive amounts of training data. This becomes particularly problematic in RAG scenarios when the context conflicts with this "implicit" knowledge. However, the situation escalates further when the context itself contains contradictory information. A recent survey paper comprehensively analyzes these "knowledge conflicts" in LLMs, categorizing them into three distinct types:

-

Context-Memory Conflicts: Arise when external context contradicts the LLM's internal knowledge.

- Solution

- Fine-tune on counterfactual contexts to prioritize external information.

- Utilize specialized prompts to reinforce adherence to context

- Apply decoding techniques to amplify context probabilities.

- Pre-train on diverse contexts across documents.

- Solution

-

Inter-Context Conflicts: Contradictions between multiple external sources.

- Solution:

- Employ specialized models for contradiction detection.

- Utilize fact-checking frameworks integrated with external tools.

- Fine-tune discriminators to identify reliable sources.

- Aggregate high-confidence answers from augmented queries.

- Solution:

-

Intra-Memory Conflicts: The LLM gives inconsistent outputs for similar inputs due to conflicting internal knowledge.

- Solution:

- Fine-tune with consistency loss functions.

- Implement plug-in methods, retraining on word definitions.

- Ensemble one model's outputs with another's coherence scoring.

- Apply contrastive decoding, focusing on truthful layers/heads.

- Solution:

-

- The difference between PPO and DPOs: in DPO you don’t need to train a reward model anymore. Having good and bad data would be sufficient!

- ORPO: “A straightforward and innovative reference model-free monolithic odds ratio preference optimization algorithm, ORPO, eliminating the necessity for an additional preference alignment phase. “ Hong, Lee, Thorne (2024)

- KTO: “KTO does not need preferences -- only a binary signal of whether an output is desirable or undesirable for a given input. This makes it far easier to use in the real world, where preference data is scarce and expensive.” Ethayarajh et al (2024)

Contributions are welcome! If you'd like to contribute to this project, feel free to open an issue or submit a pull request.

This project is licensed under the MIT License.

Sunil Ghimire is a NLP Engineer passionate about literature. He believes that words and data are the two most powerful tools to change the world.

Created with ❤️ by Sunil Ghimire

For Tasks:

Click tags to check more tools for each tasksFor Jobs:

Alternative AI tools for LLM-PowerHouse-A-Curated-Guide-for-Large-Language-Models-with-Custom-Training-and-Inferencing

Similar Open Source Tools

LLM-PowerHouse-A-Curated-Guide-for-Large-Language-Models-with-Custom-Training-and-Inferencing

LLM-PowerHouse is a comprehensive and curated guide designed to empower developers, researchers, and enthusiasts to harness the true capabilities of Large Language Models (LLMs) and build intelligent applications that push the boundaries of natural language understanding. This GitHub repository provides in-depth articles, codebase mastery, LLM PlayLab, and resources for cost analysis and network visualization. It covers various aspects of LLMs, including NLP, models, training, evaluation metrics, open LLMs, and more. The repository also includes a collection of code examples and tutorials to help users build and deploy LLM-based applications.

llm-datasets

LLM Datasets is a repository containing high-quality datasets, tools, and concepts for LLM fine-tuning. It provides datasets with characteristics like accuracy, diversity, and complexity to train large language models for various tasks. The repository includes datasets for general-purpose, math & logic, code, conversation & role-play, and agent & function calling domains. It also offers guidance on creating high-quality datasets through data deduplication, data quality assessment, data exploration, and data generation techniques.

Awesome-LLM-Large-Language-Models-Notes

Awesome-LLM-Large-Language-Models-Notes is a repository that provides a comprehensive collection of information on various Large Language Models (LLMs) classified by year, size, and name. It includes details on known LLM models, their papers, implementations, and specific characteristics. The repository also covers LLM models classified by architecture, must-read papers, blog articles, tutorials, and implementations from scratch. It serves as a valuable resource for individuals interested in understanding and working with LLMs in the field of Natural Language Processing (NLP).

together-cookbook

The Together Cookbook is a collection of code and guides designed to help developers build with open source models using Together AI. The recipes provide examples on how to chain multiple LLM calls, create agents that route tasks to specialized models, run multiple LLMs in parallel, break down tasks into parallel subtasks, build agents that iteratively improve responses, perform LoRA fine-tuning and inference, fine-tune LLMs for repetition, improve summarization capabilities, fine-tune LLMs on multi-step conversations, implement retrieval-augmented generation, conduct multimodal search and conditional image generation, visualize vector embeddings, improve search results with rerankers, implement vector search with embedding models, extract structured text from images, summarize and evaluate outputs with LLMs, generate podcasts from PDF content, and get LLMs to generate knowledge graphs.

llm-compression-intelligence

This repository presents the findings of the paper "Compression Represents Intelligence Linearly". The study reveals a strong linear correlation between the intelligence of LLMs, as measured by benchmark scores, and their ability to compress external text corpora. Compression efficiency, derived from raw text corpora, serves as a reliable evaluation metric that is linearly associated with model capabilities. The repository includes the compression corpora used in the paper, code for computing compression efficiency, and data collection and processing pipelines.

imodels

Python package for concise, transparent, and accurate predictive modeling. All sklearn-compatible and easy to use. _For interpretability in NLP, check out our new package:imodelsX _

SemanticFinder

SemanticFinder is a frontend-only live semantic search tool that calculates embeddings and cosine similarity client-side using transformers.js and SOTA embedding models from Huggingface. It allows users to search through large texts like books with pre-indexed examples, customize search parameters, and offers data privacy by keeping input text in the browser. The tool can be used for basic search tasks, analyzing texts for recurring themes, and has potential integrations with various applications like wikis, chat apps, and personal history search. It also provides options for building browser extensions and future ideas for further enhancements and integrations.

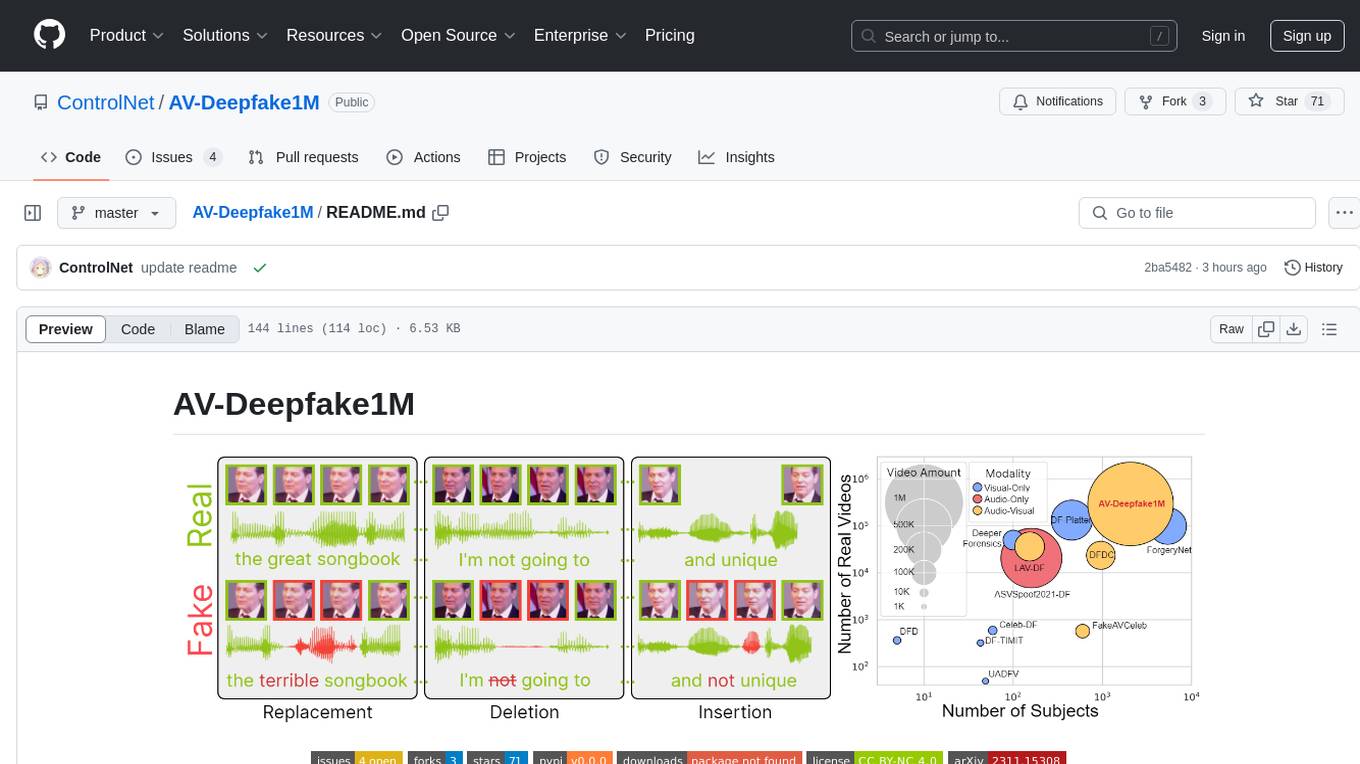

AV-Deepfake1M

The AV-Deepfake1M repository is the official repository for the paper AV-Deepfake1M: A Large-Scale LLM-Driven Audio-Visual Deepfake Dataset. It addresses the challenge of detecting and localizing deepfake audio-visual content by proposing a dataset containing video manipulations, audio manipulations, and audio-visual manipulations for over 2K subjects resulting in more than 1M videos. The dataset is crucial for developing next-generation deepfake localization methods.

rubra

Rubra is a collection of open-weight large language models enhanced with tool-calling capability. It allows users to call user-defined external tools in a deterministic manner while reasoning and chatting, making it ideal for agentic use cases. The models are further post-trained to teach instruct-tuned models new skills and mitigate catastrophic forgetting. Rubra extends popular inferencing projects for easy use, enabling users to run the models easily.

Groma

Groma is a grounded multimodal assistant that excels in region understanding and visual grounding. It can process user-defined region inputs and generate contextually grounded long-form responses. The tool presents a unique paradigm for multimodal large language models, focusing on visual tokenization for localization. Groma achieves state-of-the-art performance in referring expression comprehension benchmarks. The tool provides pretrained model weights and instructions for data preparation, training, inference, and evaluation. Users can customize training by starting from intermediate checkpoints. Groma is designed to handle tasks related to detection pretraining, alignment pretraining, instruction finetuning, instruction following, and more.

MathEval

MathEval is a benchmark designed for evaluating the mathematical capabilities of large models. It includes over 20 evaluation datasets covering various mathematical domains with more than 30,000 math problems. The goal is to assess the performance of large models across different difficulty levels and mathematical subfields. MathEval serves as a reliable reference for comparing mathematical abilities among large models and offers guidance on enhancing their mathematical capabilities in the future.

amber-train

Amber is the first model in the LLM360 family, an initiative for comprehensive and fully open-sourced LLMs. It is a 7B English language model with the LLaMA architecture. The model type is a language model with the same architecture as LLaMA-7B. It is licensed under Apache 2.0. The resources available include training code, data preparation, metrics, and fully processed Amber pretraining data. The model has been trained on various datasets like Arxiv, Book, C4, Refined-Web, StarCoder, StackExchange, and Wikipedia. The hyperparameters include a total of 6.7B parameters, hidden size of 4096, intermediate size of 11008, 32 attention heads, 32 hidden layers, RMSNorm ε of 1e^-6, max sequence length of 2048, and a vocabulary size of 32000.

recommenders

Recommenders is a project under the Linux Foundation of AI and Data that assists researchers, developers, and enthusiasts in prototyping, experimenting with, and bringing to production a range of classic and state-of-the-art recommendation systems. The repository contains examples and best practices for building recommendation systems, provided as Jupyter notebooks. It covers tasks such as preparing data, building models using various recommendation algorithms, evaluating algorithms, tuning hyperparameters, and operationalizing models in a production environment on Azure. The project provides utilities to support common tasks like loading datasets, evaluating model outputs, and splitting training/test data. It includes implementations of state-of-the-art algorithms for self-study and customization in applications.

AI-For-Beginners

AI-For-Beginners is a comprehensive 12-week, 24-lesson curriculum designed by experts at Microsoft to introduce beginners to the world of Artificial Intelligence (AI). The curriculum covers various topics such as Symbolic AI, Neural Networks, Computer Vision, Natural Language Processing, Genetic Algorithms, and Multi-Agent Systems. It includes hands-on lessons, quizzes, and labs using popular frameworks like TensorFlow and PyTorch. The focus is on providing a foundational understanding of AI concepts and principles, making it an ideal starting point for individuals interested in AI.

langchain_dart

LangChain.dart is a Dart port of the popular LangChain Python framework created by Harrison Chase. LangChain provides a set of ready-to-use components for working with language models and a standard interface for chaining them together to formulate more advanced use cases (e.g. chatbots, Q&A with RAG, agents, summarization, extraction, etc.). The components can be grouped into a few core modules: * **Model I/O:** LangChain offers a unified API for interacting with various LLM providers (e.g. OpenAI, Google, Mistral, Ollama, etc.), allowing developers to switch between them with ease. Additionally, it provides tools for managing model inputs (prompt templates and example selectors) and parsing the resulting model outputs (output parsers). * **Retrieval:** assists in loading user data (via document loaders), transforming it (with text splitters), extracting its meaning (using embedding models), storing (in vector stores) and retrieving it (through retrievers) so that it can be used to ground the model's responses (i.e. Retrieval-Augmented Generation or RAG). * **Agents:** "bots" that leverage LLMs to make informed decisions about which available tools (such as web search, calculators, database lookup, etc.) to use to accomplish the designated task. The different components can be composed together using the LangChain Expression Language (LCEL).

LLaVA-MORE

LLaVA-MORE is a new family of Multimodal Language Models (MLLMs) that integrates recent language models with diverse visual backbones. The repository provides a unified training protocol for fair comparisons across all architectures and releases training code and scripts for distributed training. It aims to enhance Multimodal LLM performance and offers various models for different tasks. Users can explore different visual backbones like SigLIP and methods for managing image resolutions (S2) to improve the connection between images and language. The repository is a starting point for expanding the study of Multimodal LLMs and enhancing new features in the field.

For similar tasks

llama-recipes

The llama-recipes repository provides a scalable library for fine-tuning Llama 2, along with example scripts and notebooks to quickly get started with using the Llama 2 models in a variety of use-cases, including fine-tuning for domain adaptation and building LLM-based applications with Llama 2 and other tools in the LLM ecosystem. The examples here showcase how to run Llama 2 locally, in the cloud, and on-prem.

llmware

LLMWare is a framework for quickly developing LLM-based applications including Retrieval Augmented Generation (RAG) and Multi-Step Orchestration of Agent Workflows. This project provides a comprehensive set of tools that anyone can use - from a beginner to the most sophisticated AI developer - to rapidly build industrial-grade, knowledge-based enterprise LLM applications. Our specific focus is on making it easy to integrate open source small specialized models and connecting enterprise knowledge safely and securely.