writing

This benchmark tests how well LLMs incorporate a set of 10 mandatory story elements (characters, objects, core concepts, attributes, motivations, etc.) in a short creative story.

Stars: 97

The LLM Creative Story-Writing Benchmark evaluates large language models based on their ability to incorporate a set of 10 mandatory story elements in a short narrative. It measures constraint satisfaction and literary quality by grading models on character development, plot structure, atmosphere, storytelling impact, authenticity, and execution. The benchmark aims to assess how well models can adapt to rigid requirements, remain original, and produce cohesive stories using all assigned elements.

README:

This benchmark tests how well large language models (LLMs) incorporate a set of 10 mandatory story elements (characters, objects, core concepts, attributes, motivations, etc.) in a short narrative. This is particularly relevant for creative LLM use cases. Because every story has the same required building blocks and similar length, their resulting cohesiveness and creativity become directly comparable across models. A wide variety of required random elements ensures that LLMs must create diverse stories and cannot resort to repetition. The benchmark captures both constraint satisfaction (did the LLM incorporate all elements properly?) and literary quality (how engaging or coherent is the final piece?). By applying a multi-question grading rubric and multiple "grader" LLMs, we can pinpoint differences in how well each model integrates the assigned elements, develops characters, maintains atmosphere, and sustains an overall coherent plot. It measures more than fluency or style: it probes whether each model can adapt to rigid requirements, remain original, and produce a cohesive story that meaningfully uses every single assigned element.

Each of the 26 LLMs produces 500 short stories, each targeted at 400–500 words, that must organically integrate all assigned random elements. In total, 26 * 500 = 13,000 unique stories are generated.

Six LLMs grade each of these stories on 16 questions regarding:

1. Character Development & Motivation

2. Plot Structure & Coherence

3. World & Atmosphere

4. Storytelling Impact & Craft

5. Authenticity & Originality

6. Execution & Cohesion

- 7A to 7J. Element fit for each of the 10 required elements: character, object, concept, attribute, action, method, setting, timeframe, motivation, tone

The grading LLMs are:

- GPT-4o

- Claude 3.5 Sonnet 2024-10-22

- Llama 3.1 405B

- DeepSeek-V3

- Grok 2 12-12

- Gemini 1.5 Pro (Sept)

In total, 26 * 500 * 6 * 16 = 1,248,000 grades are generated.
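The totals above follow directly from the study dimensions. A quick arithmetic check in Python (the variable names are illustrative and are not taken from the benchmark's code):

```python
# Study dimensions as stated in this README.
num_writers = 26           # LLMs that write stories
stories_per_writer = 500
num_graders = 6
questions_per_story = 16   # questions 1-6 plus 7A-7J

total_stories = num_writers * stories_per_writer
story_grader_pairs = total_stories * num_graders
total_grades = story_grader_pairs * questions_per_story

print(total_stories, story_grader_pairs, total_grades)
```

The intermediate count of story-grader pairs matches the N=78,000 quoted for the per-story correlation later in this README.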

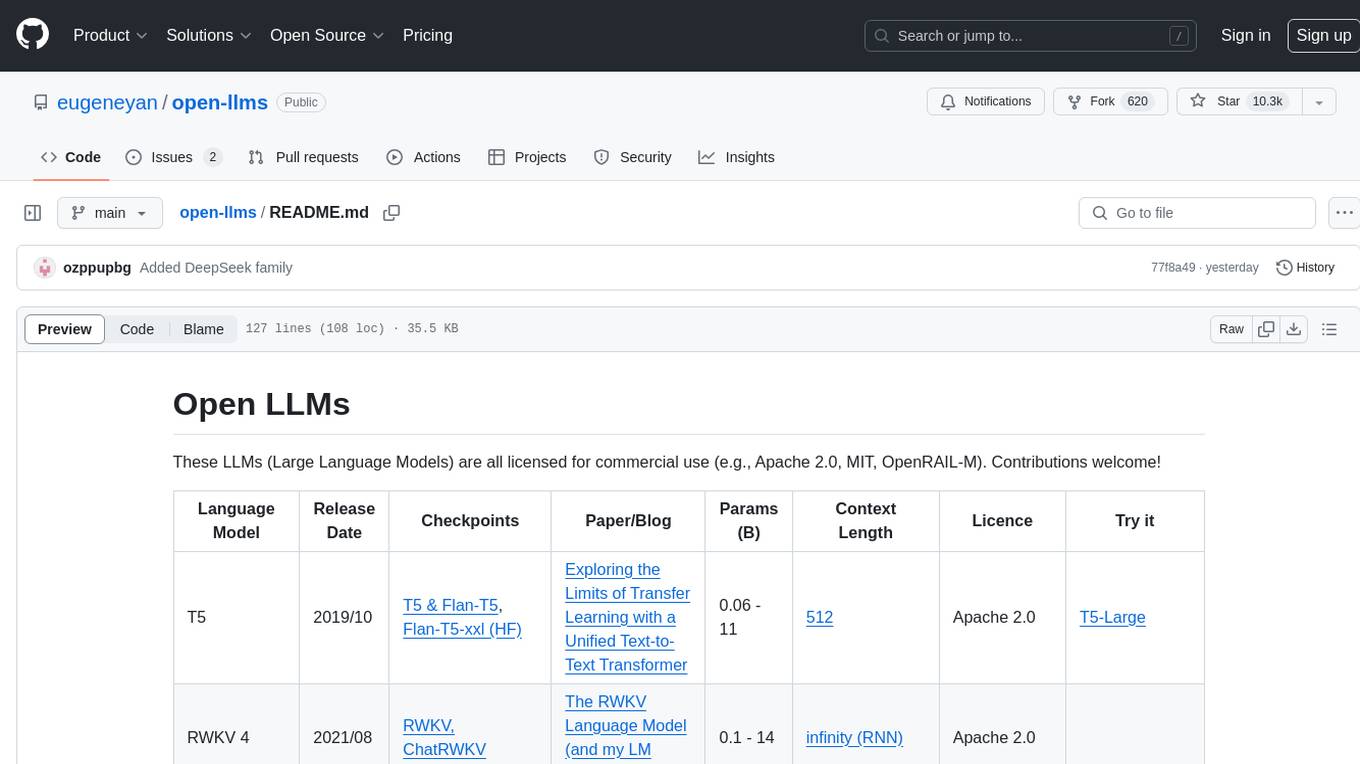

Leaderboard:

| Rank | LLM | Mean |
|---|---|---|
| 1 | DeepSeek R1 | 8.54 |
| 2 | Claude 3.5 Sonnet 2024-10-22 | 8.47 |
| 3 | Claude 3.5 Haiku | 8.07 |
| 4 | Gemini 1.5 Pro (Sept) | 7.97 |
| 5 | Gemini 2.0 Flash Thinking Exp Old | 7.87 |
| 6 | Gemini 2.0 Flash Thinking Exp 01-21 | 7.82 |
| 7 | o1-preview | 7.74 |
| 8 | Gemini 2.0 Flash Exp | 7.65 |
| 9 | Qwen 2.5 Max | 7.64 |
| 10 | DeepSeek-V3 | 7.62 |
| 11 | o1 | 7.57 |
| 12 | Mistral Large 2 | 7.54 |
| 13 | Gemma 2 27B | 7.49 |
| 14 | Qwen QwQ | 7.44 |
| 15 | GPT-4o mini | 7.37 |
| 16 | GPT-4o | 7.36 |
| 17 | o1-mini | 7.30 |
| 18 | Claude 3 Opus | 7.17 |
| 19 | Qwen 2.5 72B | 7.00 |
| 20 | Grok 2 12-12 | 6.98 |
| 21 | o3-mini | 6.90 |
| 22 | Microsoft Phi-4 | 6.89 |
| 23 | Amazon Nova Pro | 6.70 |
| 24 | Llama 3.1 405B | 6.60 |
| 25 | Llama 3.3 70B | 5.95 |
| 26 | Claude 3 Haiku | 5.83 |

DeepSeek R1 and Claude 3.5 Sonnet emerge as the clear overall winners. Notably, Claude 3.5 Haiku shows a large improvement over Claude 3 Haiku. Gemini models perform well, while Llama models lag behind. Interestingly, larger, more expensive models did not outperform smaller models by as much as one might expect. o3-mini performs worse than expected.

A strip plot illustrating distributions of scores (y-axis) by LLM (x-axis) across all stories, with Grader LLMs marked in different colors:

The plot reveals that Llama 3.1 405B occasionally, and DeepSeek-V3 sporadically, award a perfect 10 across the board, despite prompts explicitly asking them to be strict graders.

A heatmap showing each LLM's mean rating per question:

Before DeepSeek R1's release, Claude 3.5 Sonnet ranked #1 on every single question.

Which LLM ranked #1 the most times across all stories? This pie chart shows the distribution of #1 finishes:

Claude 3.5 Sonnet's and R1's dominance is undeniable when analyzing the best scores by story.
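Counting #1 finishes can be sketched as follows. This is a minimal illustration, not the benchmark's own code: the input layout (`story_scores`) and the choice to credit every LLM tied for the top score are assumptions.

```python
from collections import Counter

def count_first_places(story_scores):
    """story_scores: {story_id: {llm_name: mean_score}} -> Counter of #1 finishes."""
    wins = Counter()
    for scores in story_scores.values():
        best = max(scores.values())
        # Assumption: every LLM tied for the top score on a story gets credit.
        for llm, score in scores.items():
            if score == best:
                wins[llm] += 1
    return wins

# Toy data with two hypothetical writers, "A" and "B".
example = {
    "story_1": {"A": 8.5, "B": 8.1},
    "story_2": {"A": 7.9, "B": 8.3},
    "story_3": {"A": 9.0, "B": 8.0},
}
wins = count_first_places(example)
print(wins.most_common())
```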

A heatmap of Grader (row) vs. LLM (column) average scores:

The chart highlights that grading LLMs do not disproportionately overrate their own stories. Llama 3.1 405B is impressed by o3-mini, while the other grading LLMs dislike its stories.

A correlation matrix (−1 to 1 scale) measuring how strongly multiple LLMs correlate when cross-grading the same stories:

Llama 3.1 405B's grades show the least correlation with other LLMs.
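A grader-vs-grader correlation matrix of this kind can be sketched with a plain Pearson correlation. The README does not state which correlation measure was used, so Pearson is an assumption here, as are the function names and the toy grader labels.

```python
from itertools import combinations
from math import sqrt

def pearson(xs, ys):
    """Pearson correlation of two equal-length score lists."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    var_y = sum((y - mean_y) ** 2 for y in ys)
    return cov / sqrt(var_x * var_y)

def grader_correlations(grades):
    """grades: {grader: [score per story]}, all lists in the same story order."""
    return {
        (a, b): pearson(grades[a], grades[b])
        for a, b in combinations(sorted(grades), 2)
    }

# Toy data: grader_y agrees with grader_x on ordering, grader_z reverses it.
toy = {
    "grader_x": [6.0, 7.0, 8.0, 9.0],
    "grader_y": [6.5, 7.5, 8.5, 9.5],
    "grader_z": [9.0, 8.0, 7.0, 6.0],
}
corr = grader_correlations(toy)
for pair, value in corr.items():
    print(pair, round(value, 3))
```

A grader whose row of values is consistently lower than the others, as reported for Llama 3.1 405B, is ranking stories differently from the rest of the panel.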

A basic prompt asking LLMs to create a 400-500 word story resulted in an unacceptable range of story lengths. A revised prompt instructing each LLM to track the number of words after each sentence improved consistency somewhat but still fell short of the accuracy needed for fair grading. These stories are available in stories_first/. For example, Claude 3.5 Haiku consistently produced stories that were significantly too short:

Since the benchmark aims to evaluate how well LLMs write, not how well they count or follow prompts about the format, we adjusted the word counts in the prompt for different LLMs to approximately match the target story length - an approach similar to what someone dissatisfied with the initial story length might adopt. Qwen QwQ and Llama 3.x models required the most extensive prompt engineering to achieve the required word counts and to adhere to the proper output format across all 500 stories. Note that this did not require any evaluation of the story's content itself. These final stories were then graded and they are available in stories_wc/.

This chart shows the correlations between each LLM's scores and their story lengths:

o3-mini and o1 seem to force too many of their stories to be exactly within the specified limits, which may hurt their grades.

This chart shows the correlations between each Grader LLM's scores and the lengths of stories they graded:

Here, we list the top 3 and the bottom 3 individual stories (written by any LLM) out of the 13,000 generated, based on the average scores from our grader LLMs, and include the required elements for each. Feel free to evaluate their quality for yourself!

- Story: story_386.txt by DeepSeek R1
  - Overall Mean (All Graders): 9.27
  - Grader Score Range: 8.31 (lowest: Gemini 1.5 Pro (Sept)) .. 10.00 (highest: Llama 3.1 405B)
  - Required Elements:
    - Character: time refugee from a forgotten empire
    - Object: embroidered tablecloth
    - Core Concept: quietly defiant
    - Attribute: trustworthy strange
    - Action: catapult
    - Method: by the alignment of the stars
    - Setting: atom-powered lighthouse
    - Timeframe: in the hush of a line that never moves
    - Motivation: to bind old wounds with unstoppable will
    - Tone: borrowed dawn

- Story: story_185.txt by DeepSeek R1
  - Overall Mean (All Graders): 9.24
  - Grader Score Range: 7.56 (lowest: Gemini 1.5 Pro (Sept)) .. 10.00 (highest: Llama 3.1 405B)
  - Required Elements:
    - Character: peculiar collector
    - Object: old pencil stub
    - Core Concept: buried talents
    - Attribute: infuriatingly calm
    - Action: tweak
    - Method: by decoding the arrangement of keys left in a piano bench
    - Setting: probability mapping center
    - Timeframe: across millennia
    - Motivation: to make a final stand
    - Tone: fractured grace

- Story: story_166.txt by DeepSeek R1
  - Overall Mean (All Graders): 9.22
  - Grader Score Range: 8.19 (lowest: Claude 3.5 Sonnet 2024-10-22) .. 10.00 (highest: Llama 3.1 405B)
  - Required Elements:
    - Character: ghostly caretaker
    - Object: plastic straw
    - Core Concept: weaving through fate
    - Attribute: solemnly silly
    - Action: perforate
    - Method: through forbidden expedition logs
    - Setting: frozen orchard feeding off geothermal streams
    - Timeframe: after the last wish is granted
    - Motivation: to communicate with animals
    - Tone: gentle chaos

The bottom 3 stories, each followed by its overall mean score:

- Story: story_385.txt by Claude 3 Haiku. 3.77

- Story: story_450.txt by Llama 3.3 70B. 3.88

- Story: story_4.txt by Claude 3 Haiku. 3.86

A valid concern is whether LLM graders can accurately score questions 1 to 6 (Major Story Aspects), such as Character Development & Motivation. Questions 7A to 7J (Element Integration), however, are clearly much easier for LLM graders to evaluate correctly, and we observe a very high correlation between the grades for questions 1 to 6 and 7A to 7J across all grader-LLM combinations. We also observe a high correlation among the grader LLMs themselves. Overall, the per-story correlation of 1-6 vs. 7A-7J is 0.949 (N=78,000). While we cannot be certain that these ratings are correct without human validation, the consistency suggests that something real is being measured. Alternatively, we can simply ignore questions 1 to 6 and rank the models using only the ratings for 7A to 7J.
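To make the comparison concrete, each 16-question grade record can be split into a mean over questions 1 to 6 and a mean over 7A to 7J. The sketch below assumes a hypothetical record layout keyed by question ID; the benchmark's actual data format is not described here.

```python
# Question IDs as named in this README; the dict layout is an assumption.
MAJOR = ["1", "2", "3", "4", "5", "6"]
ELEMENTS = ["7A", "7B", "7C", "7D", "7E", "7F", "7G", "7H", "7I", "7J"]

def split_means(record):
    """record: {question_id: grade} for one (story, grader) pair.

    Returns (mean of questions 1-6, mean of questions 7A-7J).
    """
    major = sum(record[q] for q in MAJOR) / len(MAJOR)
    elements = sum(record[q] for q in ELEMENTS) / len(ELEMENTS)
    return major, elements

# Toy record: every major-aspect grade is 8, every element-fit grade is 9.
record = {**{q: 8.0 for q in MAJOR}, **{q: 9.0 for q in ELEMENTS}}
major_mean, element_mean = split_means(record)
print(major_mean, element_mean)
```

Collecting these pairs over all story-grader combinations gives the two series whose correlation is reported above, and averaging only the second value gives an element-only ranking.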

Excluding the worst 10% of stories per LLM does not significantly change the rankings:

| LLM | Old Rank | Old Mean | New Rank | New Mean |
|---|---|---|---|---|
| DeepSeek R1 | 1 | 8.54 | 1 | 8.60 |
| Claude 3.5 Sonnet 2024-10-22 | 2 | 8.47 | 2 | 8.54 |
| Claude 3.5 Haiku | 3 | 8.07 | 3 | 8.15 |
| Gemini 1.5 Pro (Sept) | 5 | 7.97 | 5 | 8.06 |
| Gemini 2.0 Flash Thinking Exp Old | 6 | 7.87 | 6 | 7.96 |
| Gemini 2.0 Flash Thinking Exp 01-21 | 7 | 7.82 | 7 | 7.93 |
| o1-preview | 8 | 7.74 | 8 | 7.85 |
| Gemini 2.0 Flash Exp | 9 | 7.65 | 9 | 7.76 |
| DeepSeek-V3 | 11 | 7.62 | 10 | 7.74 |
| Qwen 2.5 Max | 10 | 7.64 | 11 | 7.74 |
| o1 | 12 | 7.57 | 12 | 7.68 |
| Mistral Large 2 | 13 | 7.54 | 13 | 7.65 |
| Gemma 2 27B | 14 | 7.49 | 14 | 7.60 |
| Qwen QwQ | 15 | 7.44 | 15 | 7.55 |
| GPT-4o | 17 | 7.36 | 16 | 7.47 |
| GPT-4o mini | 16 | 7.37 | 17 | 7.46 |
| o1-mini | 18 | 7.30 | 18 | 7.44 |
| Claude 3 Opus | 19 | 7.17 | 19 | 7.30 |
| Grok 2 12-12 | 21 | 6.98 | 20 | 7.12 |
| Qwen 2.5 72B | 20 | 7.00 | 21 | 7.12 |
| o3-mini | 22 | 6.90 | 22 | 7.04 |
| Microsoft Phi-4 | 23 | 6.89 | 23 | 7.02 |
| Amazon Nova Pro | 24 | 6.70 | 24 | 6.84 |
| Llama 3.1 405B | 25 | 6.60 | 25 | 6.72 |
| Llama 3.3 70B | 26 | 5.95 | 26 | 6.08 |
| Claude 3 Haiku | 27 | 5.83 | 27 | 5.97 |
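The trimming step behind this table can be sketched as below. It is an illustration under stated assumptions: the function name, the integer-percent parameter, and the rounding-down of the number of dropped stories are all hypothetical choices, not details confirmed by the benchmark.

```python
def mean_without_worst(scores, drop_percent=10):
    """Mean of scores after discarding the lowest drop_percent percent of them."""
    ordered = sorted(scores)
    drop = len(ordered) * drop_percent // 100  # stories to discard, rounded down
    kept = ordered[drop:]
    return sum(kept) / len(kept)

# Toy per-story means for one hypothetical LLM, with a single very weak story.
scores = [2.0, 6.0, 7.0, 7.0, 7.0, 8.0, 8.0, 8.0, 8.0, 9.0]
full_mean = sum(scores) / len(scores)
trimmed_mean = mean_without_worst(scores)
print(round(full_mean, 2), round(trimmed_mean, 2))
```

Because every LLM loses its own weakest stories, all means rise, and the ranking changes only where two models were already nearly tied.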

Excluding any one LLM from grading also does not significantly change the rankings. For example, here is what happens when Llama 3.1 405B is excluded:

| LLM | Old Rank | Old Mean | New Rank | New Mean |
|---|---|---|---|---|
| DeepSeek R1 | 1 | 8.54 | 1 | 8.36 |
| Claude 3.5 Sonnet 2024-10-22 | 2 | 8.47 | 2 | 8.25 |
| Claude 3.5 Haiku | 3 | 8.07 | 3 | 7.75 |
| Gemini 1.5 Pro (Sept) | 5 | 7.97 | 5 | 7.73 |
| Gemini 2.0 Flash Thinking Exp Old | 6 | 7.87 | 6 | 7.64 |
| Gemini 2.0 Flash Thinking Exp 01-21 | 7 | 7.82 | 7 | 7.54 |
| o1-preview | 8 | 7.74 | 8 | 7.47 |
| Qwen 2.5 Max | 10 | 7.64 | 9 | 7.42 |
| DeepSeek-V3 | 11 | 7.62 | 10 | 7.36 |
| Gemini 2.0 Flash Exp | 9 | 7.65 | 11 | 7.36 |
| o1 | 12 | 7.57 | 12 | 7.29 |
| Gemma 2 27B | 14 | 7.49 | 13 | 7.29 |
| Mistral Large 2 | 13 | 7.54 | 14 | 7.24 |
| Qwen QwQ | 15 | 7.44 | 15 | 7.18 |
| GPT-4o mini | 16 | 7.37 | 16 | 7.09 |
| GPT-4o | 17 | 7.36 | 17 | 7.03 |
| o1-mini | 18 | 7.30 | 18 | 6.91 |
| Claude 3 Opus | 19 | 7.17 | 19 | 6.84 |
| Qwen 2.5 72B | 20 | 7.00 | 20 | 6.66 |
| Grok 2 12-12 | 21 | 6.98 | 21 | 6.63 |
| Microsoft Phi-4 | 23 | 6.89 | 22 | 6.49 |
| o3-mini | 22 | 6.90 | 23 | 6.38 |
| Amazon Nova Pro | 24 | 6.70 | 24 | 6.34 |
| Llama 3.1 405B | 25 | 6.60 | 25 | 6.18 |
| Llama 3.3 70B | 26 | 5.95 | 26 | 5.41 |
| Claude 3 Haiku | 27 | 5.83 | 27 | 5.32 |
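A leave-one-grader-out check like the one in this table can be sketched as follows. The data layout and the names (`mean_excluding_grader`, `ranking`, the toy grader labels) are hypothetical and used only for illustration.

```python
def mean_excluding_grader(grades, excluded):
    """grades: {llm: {grader: mean_score}} -> {llm: mean over the remaining graders}."""
    result = {}
    for llm, by_grader in grades.items():
        kept = [score for grader, score in by_grader.items() if grader != excluded]
        result[llm] = sum(kept) / len(kept)
    return result

def ranking(means):
    """LLM names ordered from highest to lowest mean."""
    return sorted(means, key=means.get, reverse=True)

# Toy data: the "lenient" grader gives both writers a perfect score.
grades = {
    "writer_a": {"g1": 8.0, "g2": 8.0, "lenient": 10.0},
    "writer_b": {"g1": 7.0, "g2": 7.0, "lenient": 10.0},
}
without_lenient = mean_excluding_grader(grades, "lenient")
print(without_lenient, ranking(without_lenient))
```

Dropping a lenient grader lowers every mean, which matches the pattern in the table: all New Mean values fall while the order stays largely the same.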

Normalizing each grader’s scores doesn’t significantly alter the rankings:

| Rank | LLM | Normalized Mean |
|---|---|---|
| 1 | DeepSeek R1 | 1.103 |
| 2 | Claude 3.5 Sonnet 2024-10-22 | 1.055 |
| 3 | Claude 3.5 Haiku | 0.653 |
| 5 | Gemini 1.5 Pro (Sept) | 0.556 |
| 6 | Gemini 2.0 Flash Thinking Exp Old | 0.463 |
| 7 | Gemini 2.0 Flash Thinking Exp 01-21 | 0.413 |
| 8 | o1-preview | 0.346 |
| 9 | Gemini 2.0 Flash Exp | 0.270 |
| 10 | DeepSeek-V3 | 0.215 |
| 11 | Qwen 2.5 Max | 0.208 |
| 12 | o1 | 0.172 |
| 13 | Mistral Large 2 | 0.157 |
| 14 | Gemma 2 27B | 0.041 |
| 15 | Qwen QwQ | -0.002 |
| 16 | GPT-4o | -0.024 |
| 17 | o1-mini | -0.031 |
| 18 | GPT-4o mini | -0.038 |
| 19 | Claude 3 Opus | -0.211 |
| 20 | Grok 2 12-12 | -0.370 |
| 21 | Qwen 2.5 72B | -0.381 |
| 22 | o3-mini | -0.423 |
| 23 | Microsoft Phi-4 | -0.477 |
| 24 | Amazon Nova Pro | -0.697 |
| 25 | Llama 3.1 405B | -0.727 |
| 26 | Llama 3.3 70B | -1.334 |
| 27 | Claude 3 Haiku | -1.513 |
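The README does not say how the scores were normalized. One common approach, assumed here purely for illustration, is to convert each grader's scores to z-scores (subtract that grader's mean, divide by that grader's standard deviation) and then average the z-scores per LLM. The function name and data layout below are hypothetical.

```python
from statistics import mean, pstdev

def normalize_per_grader(scores):
    """scores: {grader: {llm: mean_score}} -> {llm: mean z-score across graders}."""
    z_by_llm = {}
    for by_llm in scores.values():
        values = list(by_llm.values())
        mu = mean(values)        # this grader's average score
        sigma = pstdev(values)   # this grader's spread (population std dev)
        for llm, score in by_llm.items():
            z_by_llm.setdefault(llm, []).append((score - mu) / sigma)
    return {llm: mean(zs) for llm, zs in z_by_llm.items()}

# Toy data: a strict grader and a lenient grader who agree on the ordering.
scores = {
    "strict": {"writer_a": 6.0, "writer_b": 4.0},
    "lenient": {"writer_a": 9.0, "writer_b": 8.0},
}
normalized = normalize_per_grader(scores)
print(normalized)
```

Normalizing this way removes differences in how harshly or generously each grader scores, so a grader that hands out many perfect 10s no longer carries extra weight in the average.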

Full range of scores:

- Feb 1, 2025: o3-mini (medium reasoning effort) added.

- Jan 31, 2025: DeepSeek R1, o1, Gemini 2.0 Flash Thinking Exp 01-21, Microsoft Phi-4, Amazon Nova Pro added.

- Also check out Multi-Agent Elimination Game LLM Benchmark, LLM Public Goods Game, LLM Step Game, LLM Thematic Generalization Benchmark, LLM Confabulation/Hallucination Benchmark, NYT Connections Benchmark, LLM Deception Benchmark and LLM Divergent Thinking Creativity Benchmark.

- Follow @lechmazur on X (Twitter) for other upcoming benchmarks and more.

For Tasks:

Click tags to check more tools for each tasksFor Jobs:

Alternative AI tools for writing

Similar Open Source Tools

writing

The LLM Creative Story-Writing Benchmark evaluates large language models based on their ability to incorporate a set of 10 mandatory story elements in a short narrative. It measures constraint satisfaction and literary quality by grading models on character development, plot structure, atmosphere, storytelling impact, authenticity, and execution. The benchmark aims to assess how well models can adapt to rigid requirements, remain original, and produce cohesive stories using all assigned elements.

goodai-ltm-benchmark

This repository contains code and data for replicating experiments on Long-Term Memory (LTM) abilities of conversational agents. It includes a benchmark for testing agents' memory performance over long conversations, evaluating tasks requiring dynamic memory upkeep and information integration. The repository supports various models, datasets, and configurations for benchmarking and reporting results.

are-copilots-local-yet

Current trends and state of the art for using open & local LLM models as copilots to complete code, generate projects, act as shell assistants, automatically fix bugs, and more. This document is a curated list of local Copilots, shell assistants, and related projects, intended to be a resource for those interested in a survey of the existing tools and to help developers discover the state of the art for projects like these.

awesome-local-llms

The 'awesome-local-llms' repository is a curated list of open-source tools for local Large Language Model (LLM) inference, covering both proprietary and open weights LLMs. The repository categorizes these tools into LLM inference backend engines, LLM front end UIs, and all-in-one desktop applications. It collects GitHub repository metrics as proxies for popularity and active maintenance. Contributions are encouraged, and users can suggest additional open-source repositories through the Issues section or by running a provided script to update the README and make a pull request. The repository aims to provide a comprehensive resource for exploring and utilizing local LLM tools.

CogVLM2

CogVLM2 is a new generation of open source models that offer significant improvements in benchmarks such as TextVQA and DocVQA. It supports 8K content length, image resolution up to 1344 * 1344, and both Chinese and English languages. The project provides basic calling methods, fine-tuning examples, and OpenAI API format calling examples to help developers quickly get started with the model.

FlipAttack

FlipAttack is a jailbreak attack tool designed to exploit black-box Language Model Models (LLMs) by manipulating text inputs. It leverages insights into LLMs' autoregressive nature to construct noise on the left side of the input text, deceiving the model and enabling harmful behaviors. The tool offers four flipping modes to guide LLMs in denoising and executing malicious prompts effectively. FlipAttack is characterized by its universality, stealthiness, and simplicity, allowing users to compromise black-box LLMs with just one query. Experimental results demonstrate its high success rates against various LLMs, including GPT-4o and guardrail models.

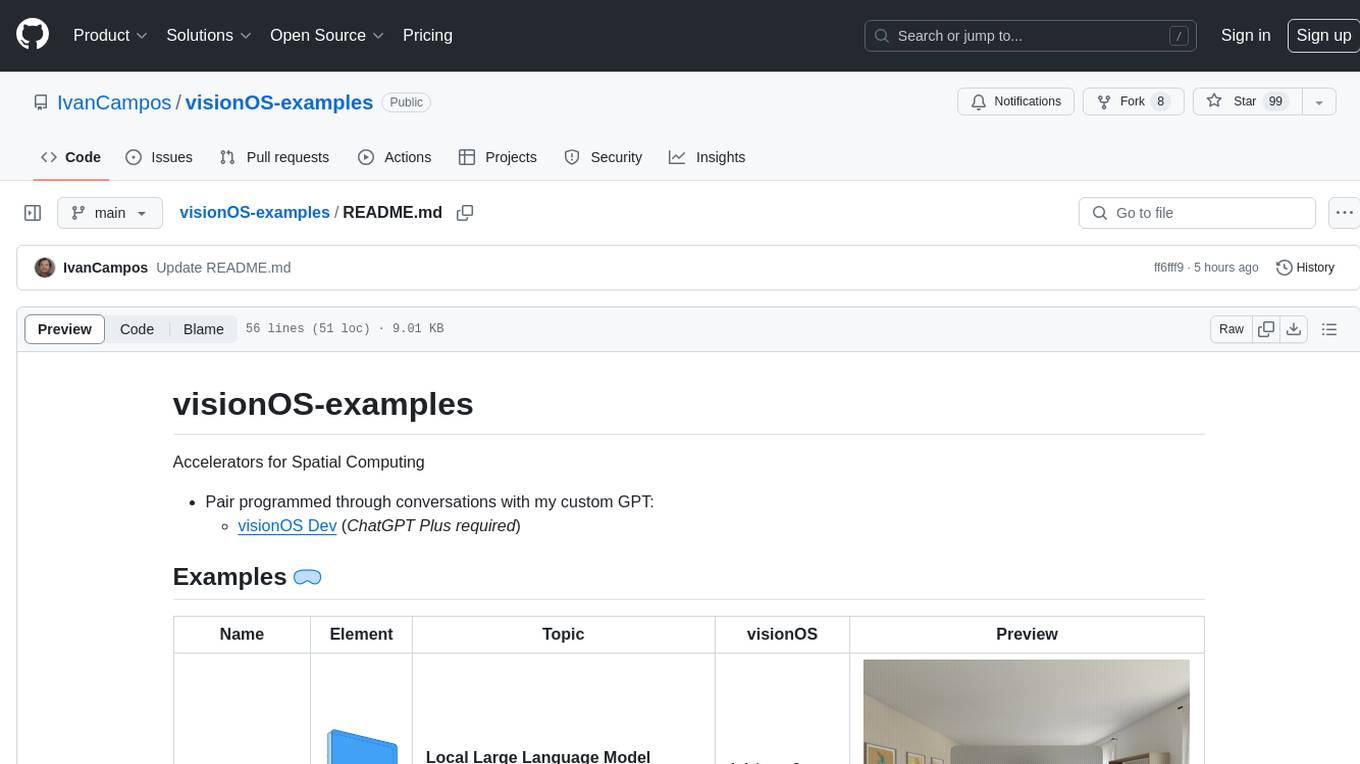

open-llms

Open LLMs is a repository containing various Large Language Models licensed for commercial use. It includes models like T5, GPT-NeoX, UL2, Bloom, Cerebras-GPT, Pythia, Dolly, and more. These models are designed for tasks such as transfer learning, language understanding, chatbot development, code generation, and more. The repository provides information on release dates, checkpoints, papers/blogs, parameters, context length, and licenses for each model. Contributions to the repository are welcome, and it serves as a resource for exploring the capabilities of different language models.

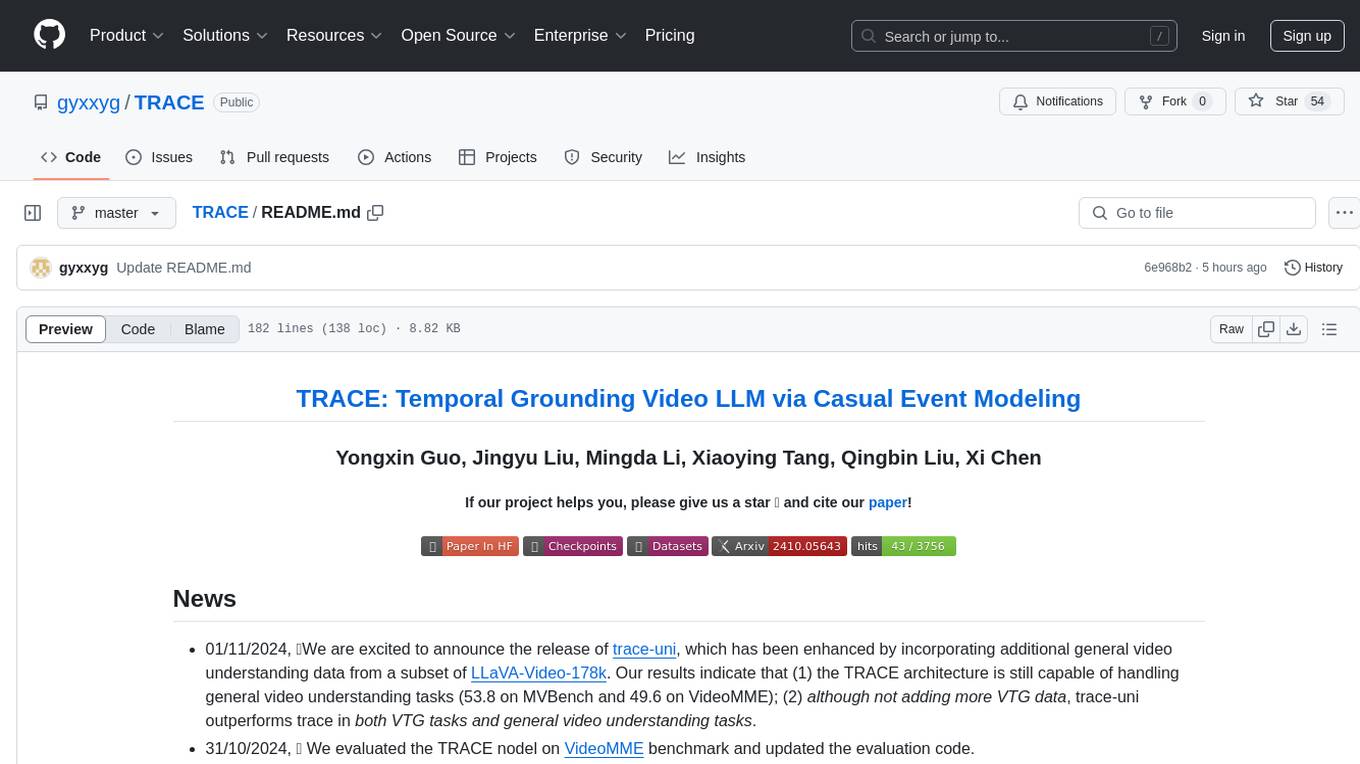

TRACE

TRACE is a temporal grounding video model that utilizes causal event modeling to capture videos' inherent structure. It presents a task-interleaved video LLM model tailored for sequential encoding/decoding of timestamps, salient scores, and textual captions. The project includes various model checkpoints for different stages and fine-tuning on specific datasets. It provides evaluation codes for different tasks like VTG, MVBench, and VideoMME. The repository also offers annotation files and links to raw videos preparation projects. Users can train the model on different tasks and evaluate the performance based on metrics like CIDER, METEOR, SODA_c, F1, mAP, Hit@1, etc. TRACE has been enhanced with trace-retrieval and trace-uni models, showing improved performance on dense video captioning and general video understanding tasks.

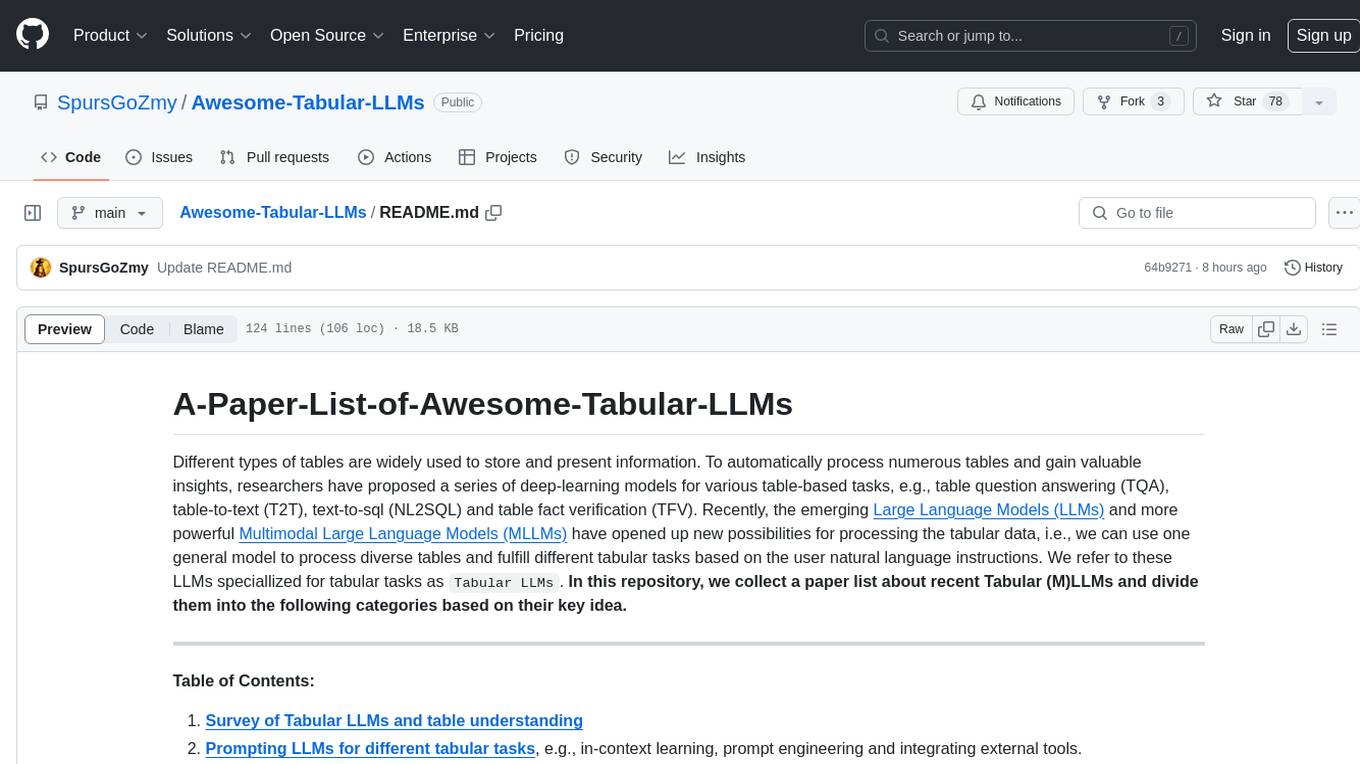

Awesome-Tabular-LLMs

This repository is a collection of papers on Tabular Large Language Models (LLMs) specialized for processing tabular data. It includes surveys, models, and applications related to table understanding tasks such as Table Question Answering, Table-to-Text, Text-to-SQL, and more. The repository categorizes the papers based on key ideas and provides insights into the advancements in using LLMs for processing diverse tables and fulfilling various tabular tasks based on natural language instructions.

visionOS-examples

visionOS-examples is a repository containing accelerators for Spatial Computing. It includes examples such as Local Large Language Model, Chat Apple Vision Pro, WebSockets, Anchor To Head, Hand Tracking, Battery Life, Countdown, Plane Detection, Timer Vision, and PencilKit for visionOS. The repository showcases various functionalities and features for Apple Vision Pro, offering tools for developers to enhance their visionOS apps with capabilities like hand tracking, plane detection, and real-time cryptocurrency prices.

Github-Ranking-AI

This repository provides a list of the most starred and forked repositories on GitHub. It is updated automatically and includes information such as the project name, number of stars, number of forks, language, number of open issues, description, and last commit date. The repository is divided into two sections: LLM and chatGPT. The LLM section includes repositories related to large language models, while the chatGPT section includes repositories related to the chatGPT chatbot.

llm4regression

This project explores the capability of Large Language Models (LLMs) to perform regression tasks using in-context examples. It compares the performance of LLMs like GPT-4 and Claude 3 Opus with traditional supervised methods such as Linear Regression and Gradient Boosting. The project provides preprints and results demonstrating the strong performance of LLMs in regression tasks. It includes datasets, models used, and experiments on adaptation and contamination. The code and data for the experiments are available for interaction and analysis.

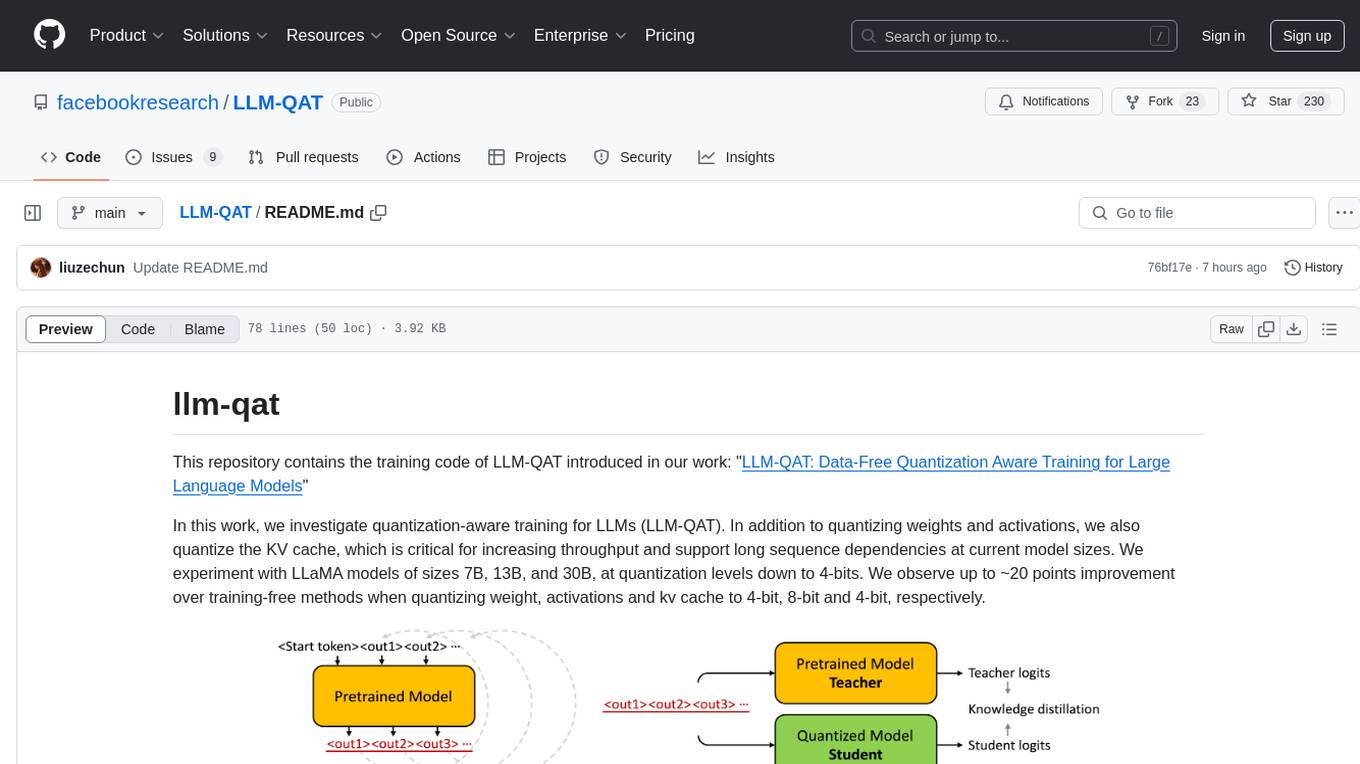

LLM-QAT

This repository contains the training code of LLM-QAT for large language models. The work investigates quantization-aware training for LLMs, including quantizing weights, activations, and the KV cache. Experiments were conducted on LLaMA models of sizes 7B, 13B, and 30B, at quantization levels down to 4-bits. Significant improvements were observed when quantizing weight, activations, and kv cache to 4-bit, 8-bit, and 4-bit, respectively.

Korean-SAT-LLM-Leaderboard

The Korean SAT LLM Leaderboard is a benchmarking project that allows users to test their fine-tuned Korean language models on a 10-year dataset of the Korean College Scholastic Ability Test (CSAT). The project provides a platform to compare human academic ability with the performance of large language models (LLMs) on various question types to assess reading comprehension, critical thinking, and sentence interpretation skills. It aims to share benchmark data, utilize a reliable evaluation dataset curated by the Korea Institute for Curriculum and Evaluation, provide annual updates to prevent data leakage, and promote open-source LLM advancement for achieving top-tier performance on the Korean CSAT.

nntrainer

NNtrainer is a software framework for training neural network models on devices with limited resources. It enables on-device fine-tuning of neural networks using user data for personalization. NNtrainer supports various machine learning algorithms and provides examples for tasks such as few-shot learning, ResNet, VGG, and product rating. It is optimized for embedded devices and utilizes CBLAS and CUBLAS for accelerated calculations. NNtrainer is open source and released under the Apache License version 2.0.

Data-and-AI-Concepts

This repository is a curated collection of data science and AI concepts and IQs, covering topics from foundational mathematics to cutting-edge generative AI concepts. It aims to support learners and professionals preparing for various data science roles by providing detailed explanations and notebooks for each concept.

For similar tasks

AlignBench

AlignBench is the first comprehensive evaluation benchmark for assessing the alignment level of Chinese large models across multiple dimensions. It includes introduction information, data, and code related to AlignBench. The benchmark aims to evaluate the alignment performance of Chinese large language models through a multi-dimensional and rule-calibrated evaluation method, enhancing reliability and interpretability.

LLMEvaluation

The LLMEvaluation repository is a comprehensive compendium of evaluation methods for Large Language Models (LLMs) and LLM-based systems. It aims to assist academics and industry professionals in creating effective evaluation suites tailored to their specific needs by reviewing industry practices for assessing LLMs and their applications. The repository covers a wide range of evaluation techniques, benchmarks, and studies related to LLMs, including areas such as embeddings, question answering, multi-turn dialogues, reasoning, multi-lingual tasks, ethical AI, biases, safe AI, code generation, summarization, software performance, agent LLM architectures, long text generation, graph understanding, and various unclassified tasks. It also includes evaluations for LLM systems in conversational systems, copilots, search and recommendation engines, task utility, and verticals like healthcare, law, science, financial, and others. The repository provides a wealth of resources for evaluating and understanding the capabilities of LLMs in different domains.

writing

The LLM Creative Story-Writing Benchmark evaluates large language models based on their ability to incorporate a set of 10 mandatory story elements in a short narrative. It measures constraint satisfaction and literary quality by grading models on character development, plot structure, atmosphere, storytelling impact, authenticity, and execution. The benchmark aims to assess how well models can adapt to rigid requirements, remain original, and produce cohesive stories using all assigned elements.

SillyTavern

SillyTavern is a user interface you can install on your computer (and Android phones) that allows you to interact with text generation AIs and chat/roleplay with characters you or the community create. SillyTavern is a fork of TavernAI 1.2.8 which is under more active development and has added many major features. At this point, they can be thought of as completely independent programs.

agnai

Agnaistic is an AI roleplay chat tool that allows users to interact with personalized characters using their favorite AI services. It supports multiple AI services, persona schema formats, and features such as group conversations, user authentication, and memory/lore books. Agnaistic can be self-hosted or run using Docker, and it provides a range of customization options through its settings.json file. The tool is designed to be user-friendly and accessible, making it suitable for both casual users and developers.

ComfyUI-IF_AI_tools

ComfyUI-IF_AI_tools is a set of custom nodes for ComfyUI that allows you to generate prompts using a local Large Language Model (LLM) via Ollama. This tool enables you to enhance your image generation workflow by leveraging the power of language models.

ai-game-development-tools

Here we will keep track of the AI Game Development Tools, including LLM, Agent, Code, Writer, Image, Texture, Shader, 3D Model, Animation, Video, Audio, Music, Singing Voice and Analytics. 🔥 * Tool (AI LLM) * Game (Agent) * Code * Framework * Writer * Image * Texture * Shader * 3D Model * Avatar * Animation * Video * Audio * Music * Singing Voice * Speech * Analytics * Video Tool

RisuAI

RisuAI, or Risu for short, is a cross-platform AI chatting software/web application with powerful features such as multiple API support, assets in the chat, regex functions, and much more.

For similar jobs

weave

Weave is a toolkit for developing Generative AI applications, built by Weights & Biases. With Weave, you can log and debug language model inputs, outputs, and traces; build rigorous, apples-to-apples evaluations for language model use cases; and organize all the information generated across the LLM workflow, from experimentation to evaluations to production. Weave aims to bring rigor, best-practices, and composability to the inherently experimental process of developing Generative AI software, without introducing cognitive overhead.

LLMStack

LLMStack is a no-code platform for building generative AI agents, workflows, and chatbots. It allows users to connect their own data, internal tools, and GPT-powered models without any coding experience. LLMStack can be deployed to the cloud or on-premise and can be accessed via HTTP API or triggered from Slack or Discord.

VisionCraft

The VisionCraft API is a free API for using over 100 different AI models. From images to sound.

kaito

Kaito is an operator that automates the AI/ML inference model deployment in a Kubernetes cluster. It manages large model files using container images, avoids tuning deployment parameters to fit GPU hardware by providing preset configurations, auto-provisions GPU nodes based on model requirements, and hosts large model images in the public Microsoft Container Registry (MCR) if the license allows. Using Kaito, the workflow of onboarding large AI inference models in Kubernetes is largely simplified.

PyRIT

PyRIT is an open access automation framework designed to empower security professionals and ML engineers to red team foundation models and their applications. It automates AI Red Teaming tasks to allow operators to focus on more complicated and time-consuming tasks and can also identify security harms such as misuse (e.g., malware generation, jailbreaking), and privacy harms (e.g., identity theft). The goal is to allow researchers to have a baseline of how well their model and entire inference pipeline is doing against different harm categories and to be able to compare that baseline to future iterations of their model. This allows them to have empirical data on how well their model is doing today, and detect any degradation of performance based on future improvements.

tabby

Tabby is a self-hosted AI coding assistant, offering an open-source and on-premises alternative to GitHub Copilot. It boasts several key features: * Self-contained, with no need for a DBMS or cloud service. * OpenAPI interface, easy to integrate with existing infrastructure (e.g Cloud IDE). * Supports consumer-grade GPUs.

spear

SPEAR (Simulator for Photorealistic Embodied AI Research) is a powerful tool for training embodied agents. It features 300 unique virtual indoor environments with 2,566 unique rooms and 17,234 unique objects that can be manipulated individually. Each environment is designed by a professional artist and features detailed geometry, photorealistic materials, and a unique floor plan and object layout. SPEAR is implemented as Unreal Engine assets and provides an OpenAI Gym interface for interacting with the environments via Python.

Magick

Magick is a groundbreaking visual AIDE (Artificial Intelligence Development Environment) for no-code data pipelines and multimodal agents. Magick can connect to other services and comes with nodes and templates well-suited for intelligent agents, chatbots, complex reasoning systems and realistic characters.