LLMEvaluation

A comprehensive guide to LLM evaluation methods designed to assist in identifying the most suitable evaluation techniques for various use cases, promote the adoption of best practices in LLM assessment, and critically assess the effectiveness of these evaluation methods.


The LLMEvaluation repository is a comprehensive compendium of evaluation methods for Large Language Models (LLMs) and LLM-based systems. It aims to assist academics and industry professionals in creating effective evaluation suites tailored to their specific needs by reviewing industry practices for assessing LLMs and their applications. The repository covers a wide range of evaluation techniques, benchmarks, and studies related to LLMs, including areas such as embeddings, question answering, multi-turn dialogues, reasoning, multilingual tasks, ethical AI, biases, safe AI, code generation, summarization, software performance, agent LLM architectures, long text generation, graph understanding, and various unclassified tasks. It also covers the evaluation of LLM systems (conversational systems, copilots, search and recommendation engines), task utility, and verticals such as healthcare, law, science, finance, and others. The repository provides a wealth of resources for evaluating and understanding the capabilities of LLMs in different domains.

README:

The aim of this compendium is to assist academics and industry professionals in creating effective evaluation suites tailored to their specific needs. It does so by reviewing the top industry practices for assessing large language models (LLMs) and their applications. This work goes beyond merely cataloging benchmarks and evaluation studies; it offers a comprehensive overview of effective and practical evaluation techniques, including those embedded within papers that primarily introduce new LLM methodologies and tasks. I plan to periodically update this survey with any noteworthy and shareable evaluation methods that I come across. My goal is a resource where anyone can easily find the relevant information for questions such as how to evaluate an LLM or an LLM application for specific tasks, which methods best assess LLM effectiveness, or how well an LLM performs in a particular domain. Additionally, I want to highlight methods for evaluating the evaluations themselves, to ensure that they align with business or academic objectives.

My view on LLM evaluation (by Andrei Lopatenko): Deck (2024), and video talks at SF Big Analytics and AICamp (2024), Analytics Vidhya, and Data Phoenix (Mar 5, 2024)

Adjacent compendium on LLM, Search and Recommender engines

- Reviews and Surveys

- Leaderboards and Arenas

- Evaluation Software

- LLM Evaluation articles in tech media and blog posts from companies

- Frontier models

- Large benchmarks

- Evaluation of evaluation, evaluation theory, evaluation methods, analysis of evaluation

- Long Comprehensive Studies

- HITL (Human in the Loop)

- LLM as Judge


LLM Evaluation

- Embeddings

- In Context Learning

- Hallucinations

- Question Answering

- Multi Turn

- Reasoning

- Multi-Lingual

- Multi-Modal

- Instruction Following

- Ethical AI

- Biases

- Safe AI

- Cybersecurity

- Code Generating LLMs

- Summarization

- LLM quality (generic methods: overfitting, redundant layers, etc.)

- Inference Performance

- Agent LLM architectures

- AGI Evaluation

- Long Text Generation

- Document Understanding

- Graph Understanding

- Reward Models

- Various unclassified tasks

- LLM Systems

- Other collections

- Citation

- Order in the Evaluation Court: A Critical Analysis of NLG Evaluation Trends, Jan 2026, arxiv

- Benchmark^2: Systematic Evaluation of LLM Benchmarks, Jan 2026, arxiv

- Toward an evaluation science for generative AI systems, Mar 2025, arxiv

- Benchmark Evaluations, Applications, and Challenges of Large Vision Language Models: A Survey, UMD, Jan 2025, arxiv

- AI Benchmarks and Datasets for LLM Evaluation, Dec 2024, arxiv, a survey of many LLM benchmarks

- LLMs-as-Judges: A Comprehensive Survey on LLM-based Evaluation Methods, Dec 2024, arxiv

- A Systematic Survey and Critical Review on Evaluating Large Language Models: Challenges, Limitations, and Recommendations, EMNLP 2024, ACLAnthology

- A Survey on Evaluation of Multimodal Large Language Models, Aug 2024, arxiv

- A Survey of Useful LLM Evaluation, Jun 2024, arxiv

- Evaluating Large Language Models: A Comprehensive Survey, Oct 2023, arxiv

- A Survey on Evaluation of Large Language Models, Jul 2023, arxiv

- Through the Lens of Core Competency: Survey on Evaluation of Large Language Models, Aug 2023, arxiv

- For industry-specific surveys of evaluation methods (e.g., medical), see the respective parts of this compendium

- New Hard Leaderboard by HuggingFace, leaderboard description, blog post

- MathArena: Evaluating LLMs on Uncontaminated Math Competitions, evaluation code

- ViDoRe Benchmark V2: Raising the Bar for Visual Retrieval, The Visual Document Retrieval Benchmark, Mar 2025, Hugging Face Space; see the leaderboard in the document

- The FACTS Grounding Leaderboard: Benchmarking LLMs' Ability to Ground Responses to Long-Form Input, DeepMind, Jan 2025, arxiv Leaderboard

- LMSys Arena (explanation)

- Aider Polyglot, code edit benchmark, Aider Polyglot

- Salesforce's Contextual Bench leaderboard on Hugging Face, an overview of how different LLMs perform across a variety of contextual tasks

- GAIA leaderboard; GAIA is a benchmark developed by Meta and HuggingFace to measure general AI assistants, see GAIA: a benchmark for General AI Assistants

- WebQA - Multimodal and Multihop QA, by WebQA WebQA leaderboard

- ArenaHard Leaderboard Paper: From Crowdsourced Data to High-Quality Benchmarks: Arena-Hard and BenchBuilder Pipeline, UC Berkeley, Jun 2024, arxiv github repo ArenaHard benchmark

- OpenGPT-X Multilingual European LLM Leaderboard, evaluation of LLMs for many European languages, on HuggingFace

- AllenAI's ZeroEval leaderboard; benchmark: ZeroEval from AllenAI, a unified framework for evaluating (large) language models on various reasoning tasks

- OpenLLM Leaderboard

- MTEB

- SWE Bench

- AlpacaEval leaderboard Length-Controlled AlpacaEval: A Simple Way to Debias Automatic Evaluators, Apr 2024, arxiv code

- Open Medical LLM Leaderboard from HF Explanation

- Gorilla, Berkeley function calling Leaderboard Explanation

- WildBench, WildBench: Benchmarking LLMs with Challenging Tasks from Real Users in the Wild

- Enterprise Scenarios, Patronus

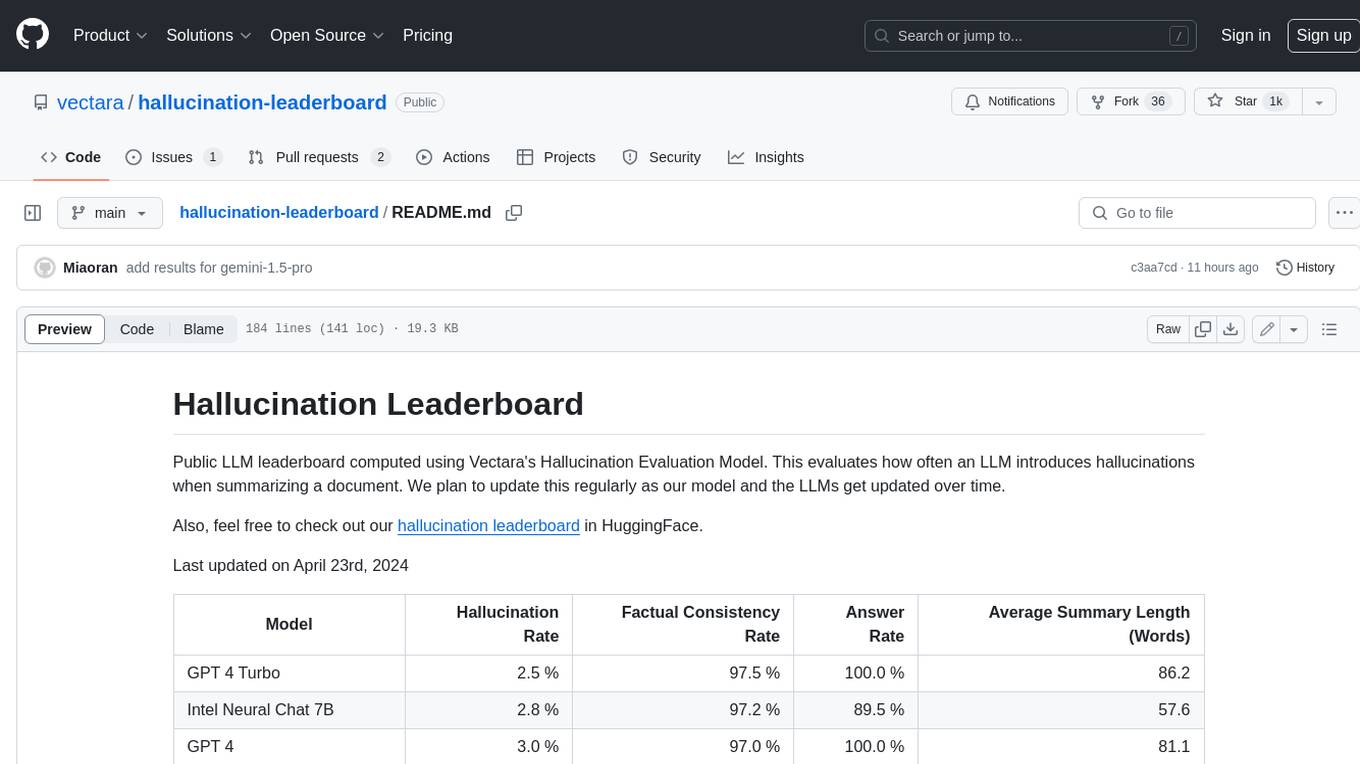

- Vectara Hallucination Leaderboard

- Ray/Anyscale's LLM Performance Leaderboard (explanation)

- Hugging Face LLM Performance hugging face leaderboard

- Multi-task Language Understanding on MMLU

- EleutherAI LLM Evaluation Harness

- Eureka, Microsoft, A framework for standardizing evaluations of large foundation models, beyond single-score reporting and rankings. github Sep 2024 arxiv

- OpenAI Evals

- Visualizations of embedding space, Atlas from Apple

- github: LLM Comparator from PAIR Google, a side-by-side evaluation tool, LLM Comparator: A tool for human-driven LLM evaluation

- OpenEvals from LangChain, introductory blog post from LangChain

- YourBench: A Dynamic Benchmark Generation Framework, github "YourBench is an open-source framework for generating domain-specific benchmarks in a zero-shot manner. It aims to keep your large language models on their toes—even as new data sources, domains, and knowledge demands evolve."

- SCORE from Nvidia; a link to the GitHub repository is inside the article (the code should be available soon)

- AutoGenBench, a tool for measuring and evaluating AutoGen agents, from Microsoft; see an example of how it is used in the evaluation of Magentic-One: A Generalist Multi-Agent System for Solving Complex Tasks

- Copilot Arena: github repo, article: Copilot Arena: A Platform for Code LLM Evaluation in the Wild, Feb 2025, arxiv

- Phoenix, Arize AI's LLM observability and evaluation platform

- MTEB

- OpenICL Framework

- RAGAS

- Confident-AI DeepEval, The LLM Evaluation Framework (unittest-like evaluation of LLM outputs)

- MLflow Evaluate

- MosaicML Composer

- Microsoft Prompty

- NVIDIA Garak, evaluation of LLM vulnerabilities (Generative AI Red-teaming & Assessment Kit)

- Toolkit from Mozilla AI for LLM-as-judge evaluation; tool: lm-buddy eval tool; model: Prometheus

- ZeroEval from AllenAI, a unified framework for evaluating (large) language models on various reasoning tasks, leaderboard

- TruLens

- Promptfoo

- BigCode Evaluation Harness

- LangFuse, an LLM engineering platform with observability and evaluation tools

- LLMeBench, see LLMeBench: A Flexible Framework for Accelerating LLMs Benchmarking

- ChainForge

- Ironclad Rivet

- LM-PUB-QUIZ: A Comprehensive Framework for Zero-Shot Evaluation of Relational Knowledge in Language Models, arxiv pdf github repository

---

LLM Evaluation articles in tech media and blog posts and podcasts from companies

- Demystifying evals for AI agents, Anthropic, Jan 2026, Anthropic

- Product Evals in Three Simple Steps, Eugene Yan, Nov 2025, blog post

- Measuring political bias in Claude, Nov 2025, Anthropic

- Understanding the 4 Main Approaches to LLM Evaluation (From Scratch), Oct 2025, Sebastian Raschka blog

- About Evals by Andrew Ng, Apr 2025, The Batch

- Mastering LLM Techniques: Evaluation, by Nvidia, Jan 2025, nvidia blog

- AI Search Has A Citation Problem, "A study of eight AI search engines found they provided incorrect citations of news articles in 60%+ of queries; Grok 3 answered 94% of the queries incorrectly", Mar 2025, Columbia Journalism Review

- On GPT-4.5 by Zvi Mowshowitz, a good writeup about several topics including evaluation, at Zvi Mowshowitz's Substack


- Andrej Karpathy on evaluation, X

- Apoorva Joshi on LLM Application Evaluation and Performance Improvements, InfoQ, Feb 2025, InfoQ

- From Meta on evaluation of Llama 3 models github

- A Framework for Building Micro Metrics for LLM System Evaluation, Jan 2025, InfoQ

- Evaluate LLMs using Evaluation Harness and Hugging Face TGI/vLLM, Sep 2024, blog

- The LLM Evaluation guidebook ⚖️ from HuggingFace, Oct 2024, Hugging Face Evaluation guidebook

- Let's talk about LLM Evaluation, HuggingFace, article

- Using LLMs for Evaluation: LLM-as-a-Judge and other scalable additions to human quality ratings, Aug 2024, Deep Learning Focus

- Examining the robustness of LLM evaluation to the distributional assumptions of benchmarks, Microsoft, Aug 2024, ACL 2024

- Introducing SimpleQA, OpenAI, Oct 2024 OpenAI

- Catch me if you can! How to beat GPT-4 with a 13B model, LMSYS Org

- Why it’s impossible to review AIs, and why TechCrunch is doing it anyway, TechCrunch, Mar 2024

- A.I. has a measurement problem, NY Times, Apr 2024

- Beyond Accuracy: The Changing Landscape Of AI Evaluation, Forbes, Mar 2024

- Mozilla AI, Exploring LLM Evaluation at Scale

- Evaluation part of How to Maximize LLM Performance

- The Mozilla AI blog has published multiple good articles on evaluation

- DeepMind AI safety evaluation, Jun 2024, DeepMind blog, Introducing the Frontier Safety Framework

- AI Snake Oil, June 2024, AI leaderboards are no longer useful. It's time to switch to Pareto curves.

- Hamel Husain's blog (hamel.dev), Mar 2024, Your AI Product Needs Evals: how to construct domain-specific LLM evaluation systems

- Global PIQA: Evaluating Physical Commonsense Reasoning Across 100+ Languages and Cultures, Oct 2025, arxiv

- SimpleQA Verified: A Reliable Factuality Benchmark to Measure Parametric Knowledge, Sep 2025, arxiv

- MCP Atlas, Sep 2025, leaderboard, blog post

- MathArena Apex, Aug 2025, blog post

- AIME 2025 Benchmark Leaderboard, leaderboard, AIME 2025 dataset on Hugging Face

- LiveCodeBench Pro: How Do Olympiad Medalists Judge LLMs in Competitive Programming?, Jun 2025, arxiv

- τ2-Bench: Evaluating Conversational Agents in a Dual-Control Environment, Jun 2025, arxiv

- ARC-AGI-2: A New Challenge for Frontier AI Reasoning Systems, May 2025, arxiv

- Terminal Bench 2.0 Leaderboard and data

- Vending-Bench: A Benchmark for Long-Term Coherence of Autonomous Agents, Feb 2025, arxiv

- Humanity's Last Exam, Jan 2025, arxiv

- Video-MMMU: Evaluating Knowledge Acquisition from Multi-Discipline Professional Videos, Jan 2025, arxiv

- OmniDocBench: Benchmarking Diverse PDF Document Parsing with Comprehensive Annotations, Dec 2024, arxiv

- FACTS Grounding: A new benchmark for evaluating the factuality of large language models, Dec 2024, Google Blog

- MMMU-Pro: A More Robust Multi-discipline Multimodal Understanding Benchmark, Sep 2024, arxiv

- MRCR v2 (Multi-Round Coreference Resolution version 2), leaderboard Michelangelo: Long Context Evaluations Beyond Haystacks via Latent Structure Queries, DeepMind

- CharXiv: Charting Gaps in Realistic Chart Understanding in Multimodal LLMs, Jun 2024, arxiv

- SWE Bench Verified blog and data at openai

- ScreenSpot-Pro: GUI Grounding for Professional High-Resolution Computer Use, Apr 2024, arxiv

- GPQA Diamond, dataset, original paper GPQA: A Graduate-Level Google-Proof Q&A Benchmark, Nov 2023, arxiv

- GPQA Diamond leaderboard at Epoch AI

- Measuring Massive Multitask Language Understanding, May 2020, arxiv

- MMLU-Pro+: Evaluating Higher-Order Reasoning and Shortcut Learning in LLMs, Sep 2024, Autodesk AI, arxiv

- MMLU Pro Massive Multitask Language Understanding - Pro version, Jun 2024, arxiv

- Super-NaturalInstructions: Generalization via Declarative Instructions on 1600+ NLP Tasks EMNLP 2022, pdf

- Measuring Massive Multitask Language Understanding, MMLU, ICLR, 2021, arxiv MMLU dataset

- BigBench: Beyond the Imitation Game: Quantifying and extrapolating the capabilities of language models, 2022, arxiv, datasets

- Challenging BIG-Bench Tasks and Whether Chain-of-Thought Can Solve Them, Oct 2022, arxiv

- LiveTradeBench: Seeking Real-World Alpha with Large Language Models, Nov 2025, Evaluation on Live data, arxiv

- Measuring what Matters: Construct Validity in Large Language Model Benchmarks, NeurIPS 2025

- The Leaderboard Illusion, Apr 2025, arxiv

- Rankers, Judges, and Assistants: Towards Understanding the Interplay of LLMs in Information Retrieval Evaluation, DeepMind, Mar 2025, arxiv

- Toward an evaluation science for generative AI systems, Mar 2025, arxiv

- The LLM Evaluation guidebook ⚖️ from HuggingFace, Oct 2024, Hugging Face Evaluation guidebook

- MixEval: Deriving Wisdom of the Crowd from LLM Benchmark Mixtures, NeurIPS 2024

- SCORE: Systematic COnsistency and Robustness Evaluation for Large Language Models, Feb 2025, Nvidia, arxiv

- Evaluating the Evaluations: A Perspective on Benchmarks, Opinion paper, Amazon, Jan 2025, SIGIR

- Inherent Trade-Offs between Diversity and Stability in Multi-Task Benchmarks, Max Planck Institute for Intelligent Systems, Tübingen, May 2024, ICML 2024, arxiv

- A Systematic Survey and Critical Review on Evaluating Large Language Models: Challenges, Limitations, and Recommendations, EMNLP 2024, ACLAnthology

- Re-evaluating Automatic LLM System Ranking for Alignment with Human Preference, Dec 2024, arxiv

- Adding Error Bars to Evals: A Statistical Approach to Language Model Evaluations, Nov 2024, Anthropic, arxiv

- Lessons from the Trenches on Reproducible Evaluation of Language Models, May 2024, arxiv

- Ranking Unraveled: Recipes for LLM Rankings in Head-to-Head AI Combat, Nov 2024, arxiv

- Towards Evaluation Guidelines for Empirical Studies involving LLMs, Nov 2024, arxiv

- Sabotage Evaluations for Frontier Models, Anthropic, Nov 2024, paper blog post

- AI Benchmarks and Datasets for LLM Evaluation, Dec 2024, arxiv, a survey of many LLM benchmarks


- Examining the robustness of LLM evaluation to the distributional assumptions of benchmarks, Aug 2024, ACL 2024

- Synthetic data in evaluation, see Chapter 3 in Best Practices and Lessons Learned on Synthetic Data for Language Models, Apr 2024, arxiv

- From Crowdsourced Data to High-Quality Benchmarks: Arena-Hard and BenchBuilder Pipeline, UC Berkeley, Jun 2024, arxiv github repo

- When Benchmarks are Targets: Revealing the Sensitivity of Large Language Model Leaderboards, National Center for AI (NCAI), Feb 2024, arxiv

- Lifelong Benchmarks: Efficient Model Evaluation in an Era of Rapid Progress, Feb 2024, arxiv

- Are We on the Right Way for Evaluating Large Vision-Language Models?, Apr 2024, arxiv

- What Are We Measuring When We Evaluate Large Vision-Language Models? An Analysis of Latent Factors and Biases, Apr 2024, arxiv

- Detecting Pretraining Data from Large Language Models, Oct 2023, arxiv

- Revisiting Text-to-Image Evaluation with Gecko: On Metrics, Prompts, and Human Ratings, Apr 2024, arxiv

- Faithful model evaluation for model-based metrics, EMNLP 2023, amazon science

- AI Snake Oil, June 2024, AI leaderboards are no longer useful. It's time to switch to Pareto curves.

- State of What Art? A Call for Multi-Prompt LLM Evaluation, Aug 2024, Transactions of the Association for Computational Linguistics (2024) 12

- Data Contamination Through the Lens of Time, Abacus AI etc, Oct 2023, arxiv

- Same Pre-training Loss, Better Downstream: Implicit Bias Matters for Language Models, ICML 2023, mlr press

- Are Emergent Abilities of Large Language Models a Mirage?, Apr 2023, arxiv

- Don't Make Your LLM an Evaluation Benchmark Cheater, Nov 2023, arxiv

- Holistic Evaluation of Text-to-Image Models, Stanford etc NeurIPS 2023, NeurIPS

- Model Spider: Learning to Rank Pre-Trained Models Efficiently, Nanjing University etc NeurIPS 2023

- Evaluating Open-QA Evaluation, 2023, arxiv

- (Re: statistical methods) Prediction-Powered Inference, Jan 2023, arxiv; PPI++: Efficient Prediction-Powered Inference, Nov 2023, arxiv

- Elo Uncovered: Robustness and Best Practices in Language Model Evaluation, Nov 2023, arxiv

- A Theory of Dynamic Benchmarks, ICLR 2023, University of California, Berkeley, arxiv

- Holistic Evaluation of Language Models, Center for Research on Foundation Models (CRFM), Stanford, Oct 2022, arxiv

- What Will it Take to Fix Benchmarking in Natural Language Understanding?, NYU, Google Brain, Oct 2022, arxiv

- Beyond the Imitation Game: Quantifying and extrapolating the capabilities of language models, Jun 2022, arxiv

- Evaluating Question Answering Evaluation, 2019, ACL

- Evaluation of OpenAI o1: Opportunities and Challenges of AGI, University of Alberta etc, Sep 2024, arxiv

- TrustLLM: Trustworthiness in Large Language Models, Jan 2024, arxiv

- Evaluating AI systems under uncertain ground truth: a case study in dermatology, Jul 2023, Google DeepMind etc, arxiv

- Developing a Framework for Auditing Large Language Models Using Human-in-the-Loop, Univ of Washington, Stanford, Amazon AI etc, Feb 2024, arxiv

- Which Prompts Make The Difference? Data Prioritization For Efficient Human LLM Evaluation, Cohere, Nov 2023, arxiv

- Evaluating Question Answering Evaluation, 2019, ACL

- Efficient Inference for Noisy LLM-as-a-Judge Evaluation, Jan 2026, arxiv

- MemAlign: Building Better LLM Judges From Human Feedback With Scalable Memory, Feb 2026, Databricks

- How to Correctly Report LLM-as-a-Judge Evaluations, Nov 2025, arxiv

- CLUE: Using Large Language Models for Judging Document Usefulness in Web Search Evaluation, Oct 2025, CIKM 2025

- Incentivizing Agentic Reasoning in LLM Judges via Tool-Integrated Reinforcement Learning, Google, Oct 2025, arxiv

- Analyzing Uncertainty of LLM-as-a-Judge: Interval Evaluations with Conformal Prediction, Sep 2025, arxiv

- An Empirical Study of LLM-as-a-Judge for LLM Evaluation: Fine-tuned Judge Model is not a General Substitute for GPT-4, Jul 2025, ACL 2025

- Can We Trust the Judges? Validation of Factuality Evaluation Methods via Answer Perturbation, TruthEval

- Rankers, Judges, and Assistants: Towards Understanding the Interplay of LLMs in Information Retrieval Evaluation, DeepMind, Mar 2025, arxiv

- Judge Anything: MLLM as a Judge Across Any Modality, Mar 2025, arxiv

- No Free Labels: Limitations of LLM-as-a-Judge Without Human Grounding, Mar 2025, arxiv

- Can LLMs Replace Human Evaluators? An Empirical Study of LLM-as-a-Judge in Software Engineering, Feb 2025, arxiv

- Learning to Plan & Reason for Evaluation with Thinking-LLM-as-a-Judge, Jan 2025, arxiv

- LLMs-as-Judges: A Comprehensive Survey on LLM-based Evaluation Methods, Tsinghua University, Dec 2024, arxiv

- Are LLM-Judges Robust to Expressions of Uncertainty? Investigating the effect of Epistemic Markers on LLM-based Evaluation, Seoul National University, Naver etc, Oct 2024, arxiv

- JudgeBench: A Benchmark for Evaluating LLM-based Judges, UC Berkeley, Oct 2024, arxiv

- From Crowdsourced Data to High-Quality Benchmarks: Arena-Hard and BenchBuilder Pipeline, Oct 2024, UC Berkeley, arxiv

- Using LLMs for Evaluation: LLM-as-a-Judge and other scalable additions to human quality ratings, Aug 2024, Deep Learning Focus

- Systematic Evaluation of LLM-as-a-Judge in LLM Alignment Tasks: Explainable Metrics and Diverse Prompt Templates, Aug 2024, arxiv

- Language Model Council: Democratically Benchmarking Foundation Models on Highly Subjective Tasks, Jun 2024, arxiv

- Judging the Judges: Evaluating Alignment and Vulnerabilities in LLMs-as-Judges, University of Massachusetts Amherst, Meta, Jun 2024, arxiv

- Length-Controlled AlpacaEval: A Simple Way to Debias Automatic Evaluators, Stanford University, Apr 2024, arxiv leaderboard code

- Large Language Models are Inconsistent and Biased Evaluators, Grammarly, Duke, Nvidia, May 2024, arxiv

- Report Cards: Qualitative Evaluation of Language Models Using Natural Language Summaries, University of Toronto and Vector Institute, Sep 2024, arxiv

- Evaluating LLMs at Detecting Errors in LLM Responses, Penn State University, Allen AI etc, Apr 2024, arxiv

- Replacing Judges with Juries: Evaluating LLM Generations with a Panel of Diverse Models, Cohere, Apr 2024, arxiv

- Aligning with Human Judgement: The Role of Pairwise Preference in Large Language Model Evaluators, Mar 2024, arxiv

- LLM Evaluators Recognize and Favor Their Own Generations, Apr 2024, pdf

- Who Validates the Validators? Aligning LLM-Assisted Evaluation of LLM Outputs with Human Preferences, Apr 2024, arxiv

- The Generative AI Paradox on Evaluation: What It Can Solve, It May Not Evaluate, Feb 2024, arxiv

- Judging LLM-as-a-Judge with MT-Bench and Chatbot Arena, Jun 2023, arxiv

- Discovering Language Model Behaviors with Model-Written Evaluations, Dec 2022, arxiv

- Benchmarking Foundation Models with Language-Model-as-an-Examiner, NeurIPS 2023

- Red Teaming Language Models with Language Models, Feb 2022, arxiv

- ChatEval: Towards Better LLM-based Evaluators through Multi-Agent Debate, Aug 2023, arxiv

- ALLURE: Auditing and Improving LLM-based Evaluation of Text using Iterative In-Context-Learning, Sep 2023, arxiv

- Style Over Substance: Evaluation Biases for Large Language Models, Jul 2023, arxiv

- Large Language Models Are State-of-the-Art Evaluators of Translation Quality, Feb 2023, arxiv

- Large Language Models Are State-of-the-Art Evaluators of Code Generation, Apr 2023, researchgate

- MIEB: Massive Image Embedding Benchmark, Apr 2025, arxiv

- MMTEB: Massive Multilingual Text Embedding Benchmark, Feb 2025, Hugging Face, leaderboard. Brief, from Tom Aarsen's LinkedIn: "1043 languages in total, primarily in bitext mining (text pairing), but also 255 in classification, 209 in clustering, and 142 in retrieval; 550 tasks, anything from sentiment analysis and question-answering reranking to long-document retrieval; 17 domains, like legal, religious, programming, web, social, medical, blog, academic, etc. Across this collection of tasks, we subdivide into a lot of separate benchmarks, like MTEB(eng, v2), MTEB(Multilingual, v1), MTEB(Law, v1). Our new MTEB(eng, v2) is much smaller and faster than the original English MTEB, making submissions much cheaper and simpler."

- ChemTEB: Chemical Text Embedding Benchmark, an Overview of Embedding Models Performance & Efficiency on a Specific Domain, Nov 2024, arxiv

- MTEB: Massive Text Embedding Benchmark, Oct 2022, [arxiv](https://arxiv.org/abs/2210.07316), Leaderboard

- Marqo embedding benchmark for eCommerce at Huggingface, text to image and category to image tasks

- LongEmbed: Extending Embedding Models for Long Context Retrieval, Apr 2024, arxiv

- The Scandinavian Embedding Benchmarks: Comprehensive Assessment of Multilingual and Monolingual Text Embedding, openreview pdf

- MMTEB: Community-driven extension to MTEB, repository

- Chinese MTEB C-MTEB repository

- French MTEB repository

- HellaSwag, HellaSwag: Can a Machine Really Finish Your Sentence? 2019, arxiv Paper + code + dataset https://rowanzellers.com/hellaswag/

- The LAMBADA dataset: Word prediction requiring a broad discourse context 2016, arxiv

- MIRAGE-Bench: LLM Agent is Hallucinating and Where to Find Them, Jul 2025, arxiv

- The MASK Benchmark: Disentangling Honesty From Accuracy in AI Systems, Center for AI Safety, Scale AI, arxiv, MASK Benchmark

- The FACTS Grounding Leaderboard: Benchmarking LLMs' Ability to Ground Responses to Long-Form Input, DeepMind, Jan 2025, arxiv Leaderboard

- Introducing SimpleQA, OpenAI, Oct 2024 OpenAI

- A Survey of Hallucination in Large Visual Language Models, Oct 2024, See Chapter IV, Evaluation of Hallucinations arxiv

- Long-form factuality in large language models, Google DeepMind etc, Mar 2024, arxiv

- TrustLLM: Trustworthiness in Large Language Models: A Principle and Benchmark, Lehigh University, University of Notre Dame, MS Research, etc, Jan 2024, arxiv

- INVITE: A testbed of automatically generated invalid questions to evaluate large language models for hallucinations, Amazon Science, EMNLP 2023, amazon science

- Generating Benchmarks for Factuality Evaluation of Language Models, Jul 2023, arxiv

- AlignScore: Evaluating Factual Consistency with a Unified Alignment Function, May 2023, arxiv

- ChatGPT as a Factual Inconsistency Evaluator for Text Summarization, Mar 2023, arxiv

- HaluEval: A Large-Scale Hallucination Evaluation Benchmark for Large Language Models, Dec 2023, ACL

- Siren's Song in the AI Ocean: A Survey on Hallucination in Large Language Models, Tencent AI lab etc, Sep 2023, arxiv

- Measuring Faithfulness in Chain-of-Thought Reasoning, Anthropic etc, Jul 2023, arxiv

- FActScore: Fine-grained Atomic Evaluation of Factual Precision in Long Form Text Generation, University of Washington etc, May 2023, arxiv repository

- TRUE: Re-evaluating Factual Consistency Evaluation, Apr 2022, arxiv

QA is used in many vertical domains; see the Verticals section below.

- NLP-QA: A Large-scale Benchmark for Informative Question Answering over Natural Language Processing Documents, Nov 2025, short paper, CIKM 2025

- SuperGPQA: Scaling LLM Evaluation across 285 Graduate Disciplines, Mar 2025, arxiv

- CoReQA: Uncovering Potentials of Language Models in Code Repository Question Answering, Jan 2025, arxiv

- Unveiling the power of language models in chemical research question answering, Jan 2025, Nature Communications Chemistry, ScholarChemQA dataset

- Search Engines in an AI Era: The False Promise of Factual and Verifiable Source-Cited Responses, Oct 2024, Salesforce, arxiv Answer Engine (RAG) Evaluation Repository

- HELMET: How to Evaluate Long-Context Language Models Effectively and Thoroughly, Oct 2024, arxiv

- Introducing SimpleQA, OpenAI, Oct 2024 OpenAI

- NovelQA: A Benchmark for Long-Range Novel Question Answering, Mar 2024, arxiv

- NovelQA: Benchmarking Question Answering on Documents Exceeding 200K Tokens, Mar 2024, arxiv

- Are Large Language Models Consistent over Value-laden Questions?, Jul 2024, arxiv

- LongBench: A Bilingual, Multitask Benchmark for Long Context Understanding, Aug 2023, arxiv

- L-Eval: Instituting Standardized Evaluation for Long Context Language Models, Jul 2023, arxiv

- A Dataset of Information-Seeking Questions and Answers Anchored in Research Papers, QASPER, May 2021, arxiv

- MultiDoc2Dial: Modeling Dialogues Grounded in Multiple Documents, EMNLP 2021, ACL

- CommonsenseQA: A Question Answering Challenge Targeting Commonsense Knowledge, Jun 2019, ACL

- Can a Suit of Armor Conduct Electricity? A New Dataset for Open Book Question Answering, Sep 2018, arxiv OpenBookQA dataset at AllenAI

- Jin, Di, et al., "What Disease does this Patient Have? A Large-scale Open Domain Question Answering Dataset from Medical Exams", 2020, arxiv, MedQA

- Think you have Solved Question Answering? Try ARC, the AI2 Reasoning Challenge, 2018, arxiv ARC Easy dataset ARC dataset

- BoolQ: Exploring the Surprising Difficulty of Natural Yes/No Questions, 2019, arxiv BoolQ dataset

- BookQA: Stories of Challenges and Opportunities, Oct 2019, arxiv

- HellaSwag, HellaSwag: Can a Machine Really Finish Your Sentence? 2019, arxiv Paper + code + dataset https://rowanzellers.com/hellaswag/

- PIQA: Reasoning about Physical Commonsense in Natural Language, Nov 2019, arxiv PIQA dataset

- Crowdsourcing Multiple Choice Science Questions arxiv SciQ dataset

- The NarrativeQA Reading Comprehension Challenge, Dec 2017, arxiv dataset at deepmind

- WinoGrande: An Adversarial Winograd Schema Challenge at Scale, 2019, arxiv, Winogrande dataset

- TruthfulQA: Measuring How Models Mimic Human Falsehoods, Sep 2021, arxiv

- TyDi QA: A Benchmark for Information-Seeking Question Answering in Typologically Diverse Languages, 2020, arxiv data

- Natural Questions: A Benchmark for Question Answering Research, Transactions ACL 2019

- MTRAG: A Multi-Turn Conversational Benchmark for Evaluating Retrieval-Augmented Generation Systems, Jan 2025, arxiv

- MT-Bench-101: A Fine-Grained Benchmark for Evaluating Large Language Models in Multi-Turn Dialogues, Feb 2024, arxiv

- How Well Can LLMs Negotiate? NEGOTIATIONARENA Platform and Analysis, Feb 2024, arxiv

- LMRL Gym: Benchmarks for Multi-Turn Reinforcement Learning with Language Models, Nov 2023, arxiv

- BotChat: Evaluating LLMs’ Capabilities of Having Multi-Turn Dialogues, Oct 2023, arxiv

- Parrot: Enhancing Multi-Turn Instruction Following for Large Language Models, Oct 2023, arxiv

- Judging LLM-as-a-Judge with MT-Bench and Chatbot Arena, NeurIPS 2023, NeurIPS

- MINT: Evaluating LLMs in Multi-turn Interaction with Tools and Language Feedback, Sep 2023, arxiv

- Are Language Models Efficient Reasoners? A Perspective from Logic Programming, Oct 2025, arxiv

- LogicGame: Benchmarking Rule-Based Reasoning Abilities of Large Language Models, ACL Findings 2025

- DeepSeek-Prover-V2: Advancing Formal Mathematical Reasoning via Reinforcement Learning for Subgoal Decomposition, Apr 2025, ProverBench, a collection of 325 formalized problems, arxiv

- Proof or Bluff? Evaluating LLMs on 2025 USA Math Olympiad, Mar 2025, arxiv

- EnigmaEval: A Benchmark of Long Multimodal Reasoning Challenges, ScaleAI, Feb 2025, arxiv

- Evaluating Generalization Capability of Language Models across Abductive, Deductive and Inductive Logical Reasoning, Feb 2025, Coling 2025

- Humanity's Last Exam, Jan 2025, arxiv

- JustLogic: A Comprehensive Benchmark for Evaluating Deductive Reasoning in Large Language Models, Jan 2025, arxiv

- See Section 5.3 (Evaluations) of the DeepSeek-V3 Technical Report, Dec 2024, on how new frontier models are evaluated, and Section 3.1 (DeepSeek-R1 Evaluation) of DeepSeek-R1: Incentivizing Reasoning Capability in LLMs via Reinforcement Learning, Jan 2025, arxiv


- FrontierMath at Epoch AI, project page; FrontierMath: A Benchmark for Evaluating Advanced Mathematical Reasoning in AI, Nov 2024, arxiv

- Easy Problems That LLMs Get Wrong, May 2024, arxiv; a comprehensive linguistic benchmark designed to evaluate the limitations of Large Language Models (LLMs) in domains such as logical reasoning, spatial intelligence, and linguistic understanding

- Visual CoT: Advancing Multi-Modal Language Models with a Comprehensive Dataset and Benchmark for Chain-of-Thought Reasoning, NeurIPS 2024 Datasets and Benchmarks Track (Spotlight), Sep 2024, OpenReview

- Comparing Humans, GPT-4, and GPT-4V On Abstraction and Reasoning Tasks, 2023, arxiv

- LLM Reasoners: New Evaluation, Library, and Analysis of Step-by-Step Reasoning with Large Language Models, arxiv

- Evaluating LLMs' Mathematical Reasoning in Financial Document Question Answering, Feb 2024, arxiv

- Competition-Level Problems are Effective LLM Evaluators, Dec 2023, arxiv

- Eyes Can Deceive: Benchmarking Counterfactual Reasoning Capabilities of Multimodal Large Language Models, Apr 2024, arxiv

- MuSR: Testing the Limits of Chain-of-thought with Multistep Soft Reasoning, Oct 2023, arxiv

- Evaluating Large Language Models for Cross-Lingual Retrieval, Sep 2025, arxiv

- A Comprehensive Evaluation of Embedding Models and LLMs for IR and QA Across English and Italian, May 2025, Advances in Natural Language Processing and Text Mining

- The Bitter Lesson Learned from 2,000+ Multilingual Benchmarks, Apr 2025, arxiv

- Mexa: Multilingual Evaluation of English-Centric LLMs via Cross-Lingual Alignment, ICLR 2025 submission, OpenReview

- MMTEB: Massive Multilingual Text Embedding Benchmark, Feb 2025, arxiv

- Evalita-LLM: Benchmarking Large Language Models on Italian, Feb 2025, arxiv

- Multilingual Large Language Models: A Systematic Survey, Nov 2024, arxiv; see the Evaluation chapter for details on how multilingual large language models are evaluated

- Chinese SimpleQA: A Chinese Factuality Evaluation for Large Language Models, Taobao & Tmall Group of Alibaba, Nov 2024, arxiv

- Cross-Lingual Auto Evaluation for Assessing Multilingual LLMs, Oct 2024, arxiv

- Towards Multilingual LLM Evaluation for European Languages, TU Dresden etc, Oct 2024, arxiv

- MM-Eval: A Multilingual Meta-Evaluation Benchmark for LLM-as-a-Judge and Reward Models, Oct 2024, arxiv

- LLMzSzŁ: a comprehensive LLM benchmark for Polish, Jan 2024, arxiv

- Are Large Language Model-based Evaluators the Solution to Scaling Up Multilingual Evaluation?, Microsoft/CMU, etc., Sep 2023, arxiv

- AlGhafa Evaluation Benchmark for Arabic Language Models, Dec 2023, ACL Anthology (pdf)

- CALAMITA: Challenge the Abilities of LAnguage Models in ITAlian, Dec 2024, Tenth Italian Conference on Computational Linguistics

- Evaluating and Advancing Multimodal Large Language Models in Ability Lens, Nov 2024, arxiv

- Introducing the Open Ko-LLM Leaderboard: Leading the Korean LLM Evaluation Ecosystem, HF blog

- Heron-Bench: A Benchmark for Evaluating Vision Language Models in Japanese, Apr 2024, arxiv

- BanglaQuAD: A Bengali Open-domain Question Answering Dataset, Oct 2024, arxiv

- MultiPragEval: Multilingual Pragmatic Evaluation of Large Language Models, Jun 2024, arxiv

- Are Large Language Model-based Evaluators the Solution to Scaling Up Multilingual Evaluation?, Mar 2024, Findings of the Association for Computational Linguistics: EACL 2024

- The Invalsi Benchmark: measuring Language Models Mathematical and Language understanding in Italian, Mar 2024, arxiv

- MEGA: Multilingual Evaluation of Generative AI, Mar 2023, arxiv

- Khayyam Challenge (PersianMMLU): Is Your LLM Truly Wise to The Persian Language?, Apr 2024, arxiv

- Aya Model: An Instruction Finetuned Open-Access Multilingual Language Model, Cohere, Feb 2024, arxiv; see the Evaluation chapter for details on how to evaluate multilingual model capabilities

- XTREME-UP: A User-Centric Scarce-Data Benchmark for Under-Represented Languages, May 2023, arxiv

- M3Exam: A Multilingual, Multimodal, Multilevel Benchmark for Examining Large Language Models, NeurIPS 2023, NeurIPS website

- LAraBench: Benchmarking Arabic AI with Large Language Models, May 2023, arxiv

- AlignBench: Benchmarking Chinese Alignment of Large Language Models, Nov 2023, arxiv

- XOR QA: Cross-lingual Open-Retrieval Question Answering, Oct 2020, arxiv

- CLUE: A Chinese Language Understanding Evaluation Benchmark, Apr 2020, arxiv; includes CLUEWSC (Winograd Schema Challenge)

- MMTEB: Massive Multilingual Text Embedding Benchmark, Feb 2025, arxiv

- The Scandinavian Embedding Benchmarks: Comprehensive Assessment of Multilingual and Monolingual Text Embedding, OpenReview (pdf)

- Chinese MTEB (C-MTEB), repository

- French MTEB, repository

- C-Eval: A Multi-Level Multi-Discipline Chinese Evaluation Suite for Foundation Models, May 2023, arxiv

- Roboflow100-VL: A Multi-Domain Object Detection Benchmark for Vision-Language Models, Roboflow, CMU, NeurIPS 2025

- MM-OPERA: Benchmarking Open-ended Association Reasoning for Large Vision-Language Models, Oct 2025, arxiv

- How Well Does GPT-4o Understand Vision? Evaluating Multimodal Foundation Models on Standard Computer Vision Tasks, Jul 2025, HF

- MIEB: Massive Image Embedding Benchmark, Apr 2025, arxiv

- Judge Anything: MLLM as a Judge Across Any Modality, Mar 2025, arxiv

- Can Large Vision Language Models Read Maps Like a Human?, Mar 2025, arxiv

- MM-Spatial: Exploring 3D Spatial Understanding in Multimodal LLMs, Mar 2025, arxiv; see Cubify Anything VQA (CA-VQA) in the paper

- ViDoRe Benchmark V2: Raising the Bar for Visual Retrieval (The Visual Document Retrieval Benchmark), Mar 2025, Hugging Face Space

- MMRC: A Large-Scale Benchmark for Understanding Multimodal Large Language Model in Real-World Conversation, Feb 2025, arxiv

- EnigmaEval: A Benchmark of Long Multimodal Reasoning Challenges, ScaleAI, Feb 2025, arxiv

- Benchmark Evaluations, Applications, and Challenges of Large Vision Language Models: A Survey, Jan 2025, arxiv

- LVLM-EHub: A Comprehensive Evaluation Benchmark for Large Vision-Language Models, Nov 2024, IEEE

- ScImage: How Good Are Multimodal Large Language Models at Scientific Text-to-Image Generation?, Dec 2024, arxiv

- RealWorldQA, Apr 2024, HuggingFace

- VoiceBench: Benchmarking LLM-Based Voice Assistants, Oct 2024, arxiv

- Image2Struct: Benchmarking Structure Extraction for Vision-Language Models, Oct 2024, arxiv

- MMBench: Is Your Multi-modal Model an All-Around Player?, Oct 2024, ECCV 2024, Springer

- MMIE: Massive Multimodal Interleaved Comprehension Benchmark for Large Vision-Language Models, Oct 2024, arxiv

- MMT-Bench: A Comprehensive Multimodal Benchmark for Evaluating Large Vision-Language Models Towards Multitask AGI, Apr 2024, arxiv

- MMMU: A Massive Multi-discipline Multimodal Understanding and Reasoning Benchmark for Expert AGI, CVPR 2024, CVPR

- ConvBench: A Multi-Turn Conversation Evaluation Benchmark with Hierarchical Ablation Capability for Large Vision-Language Models, Dec 2024, OpenReview; GitHub repository for the benchmark and evaluation framework

- Careless Whisper: Speech-to-Text Hallucination Harms, FAccT '24, ACM

- AutoBench-V: Can Large Vision-Language Models Benchmark Themselves?, Oct 2024, arxiv

- HaloQuest: A Visual Hallucination Dataset for Advancing Multimodal Reasoning, Oct 2024, Computer Vision – ECCV 2024

- VHELM: A Holistic Evaluation of Vision Language Models, Oct 2024, arxiv

- Vibe-Eval: A hard evaluation suite for measuring progress of multimodal language models, Reka AI, May 2024, arxiv, dataset, blog post

- Zero-Shot Visual Reasoning by Vision-Language Models: Benchmarking and Analysis, Aug 2024, arxiv

- CARES: A Comprehensive Benchmark of Trustworthiness in Medical Vision Language Models, Jun 2024, arxiv

- EmbSpatial-Bench: Benchmarking Spatial Understanding for Embodied Tasks with Large Vision-Language Models, Jun 2024, arxiv

- MFC-Bench: Benchmarking Multimodal Fact-Checking with Large Vision-Language Models, Jun 2024, arxiv

- Holistic Evaluation of Text-to-Image Models, Nov 2023, arxiv

- VBench: Comprehensive Benchmark Suite for Video Generative Models, Nov 2023, arxiv

- Evaluating Text-to-Visual Generation with Image-to-Text Generation, Apr 2024, arxiv

- What Are We Measuring When We Evaluate Large Vision-Language Models? An Analysis of Latent Factors and Biases, Apr 2024, arxiv

- Are We on the Right Way for Evaluating Large Vision-Language Models?, Apr 2024, arxiv

- MMC: Advancing Multimodal Chart Understanding with Large-scale Instruction Tuning, Nov 2023, arxiv

- BLINK: Multimodal Large Language Models Can See but Not Perceive, Apr 2024, arxiv github

- Eyes Can Deceive: Benchmarking Counterfactual Reasoning Capabilities of Multimodal Large Language Models, Apr 2024, arxiv

- Revisiting Text-to-Image Evaluation with Gecko: On Metrics, Prompts, and Human Ratings, Apr 2024, arxiv

- VALOR-EVAL: Holistic Coverage and Faithfulness Evaluation of Large Vision-Language Models, Apr 2024, arxiv

- MathVista: Evaluating Mathematical Reasoning of Foundation Models in Visual Contexts, Oct 2023, arxiv

- Evaluation part of https://arxiv.org/abs/2404.18930, Apr 2024, arxiv, repository

- VisIT-Bench: A Benchmark for Vision-Language Instruction Following Inspired by Real-World Use, Aug 2023, arxiv

- MM-Vet: Evaluating Large Multimodal Models for Integrated Capabilities, Aug 2023, arxiv

- SEED-Bench: Benchmarking Multimodal LLMs with Generative Comprehension, Jul 2023, arxiv

- LAMM: Language-Assisted Multi-Modal Instruction-Tuning Dataset, Framework, and Benchmark, NeurIPS 2023, NeurIPS

- Holistic Evaluation of Text-to-Image Models, Stanford, etc., NeurIPS 2023, NeurIPS

- mPLUG-Owl: Modularization Empowers Large Language Models with Multimodality, Apr 2023, arxiv

- MMAU: A Massive Multi-Task Audio Understanding and Reasoning Benchmark, MMAU Music, MMAU Sound, Oct 2024, arxiv

- MuChoMusic: Evaluating Music Understanding in Multimodal Audio-Language Models, Aug 2024, arxiv

- GAMA: A Large Audio-Language Model with Advanced Audio Understanding and Complex Reasoning Abilities, Jun 2024, arxiv

- Audio Entailment: Assessing Deductive Reasoning for Audio Understanding, May 2023, arxiv; datasets: Audio Entailment Clotho and Audio Entailment AudioCaps

- Listen, Think, and Understand, OpenAQA dataset, May 2023, arxiv

- Clotho-AQA: A Crowdsourced Dataset for Audio Question Answering, Apr 2022, arxiv

- AudioCaps: Generating Captions for Audios in The Wild, NAACL 2019

- Medley-solos-DB: a cross-collection dataset for musical instrument recognition, 2019, zenodo

- CREMA-D: Crowd-sourced Emotional Multimodal Actors Dataset, repository; paper: CREMA-D: Crowd-sourced Emotional Multimodal Actors Dataset, IEEE Transactions on Affective Computing, 2014

- AdvancedIF: Rubric-Based Benchmarking and Reinforcement Learning for Advancing LLM Instruction Following, Nov 2025, arxiv

- Find the INTENTION OF INSTRUCTION: Comprehensive Evaluation of Instruction Understanding for Large Language Models, Dec 2024, arxiv

- HREF: Human Response-Guided Evaluation of Instruction Following in Language Models, Dec 2024, arxiv

- CFBench: A Comprehensive Constraints-Following Benchmark for LLMs, Aug 2024, arxiv

- Instruction-Following Evaluation for Large Language Models, IFEval, Nov 2023, arxiv

- Evaluating Large Language Models at Evaluating Instruction Following, Oct 2023, arxiv

- FLASK: Fine-grained Language Model Evaluation based on Alignment Skill Sets, Jul 2023, arxiv, FLASK dataset

- DINGO: Towards Diverse and Fine-Grained Instruction-Following Evaluation, Mar 2024, AAAI, pdf

- LongForm: Effective Instruction Tuning with Reverse Instructions, Apr 2023, arxiv dataset

- Evaluating the Moral Beliefs Encoded in LLMs, Jul 2023, arxiv

- AI Deception: A Survey of Examples, Risks, and Potential Solutions, Aug 2023, arxiv

- Aligning AI With Shared Human Values, Aug 2020 (revised Feb 2023), arxiv; introduces the ETHICS benchmark

- What are human values, and how do we align AI to them?, Mar 2024, pdf

- TrustLLM: Trustworthiness in Large Language Models, Jan 2024, arxiv

- Helpfulness, Honesty, Harmlessness (HHH) framework from Anthropic, introduced in A General Language Assistant as a Laboratory for Alignment, 2021, arxiv; now included in BIG-bench (bigbench)

- WorldValuesBench: A Large-Scale Benchmark Dataset for Multi-Cultural Value Awareness of Language Models, Apr 2024, arxiv

- Chapter 19 in The Ethics of Advanced AI Assistants, Apr 2024, Google DeepMind, pdf at google

- BEHONEST: Benchmarking Honesty of Large Language Models, Jun 2024, arxiv

- FairPair: A Robust Evaluation of Biases in Language Models through Paired Perturbations, Apr 2024, arxiv

- BOLD: Dataset and Metrics for Measuring Biases in Open-Ended Language Generation, 2021, arxiv, dataset

- “I’m fully who I am”: Towards centering transgender and non-binary voices to measure biases in open language generation, ACM FAccT 2023, Amazon Science

- This Land is {Your, My} Land: Evaluating Geopolitical Biases in Language Models, May 2023, arxiv

- RAG LLMs are Not Safer: A Safety Analysis of Retrieval-Augmented Generation for Large Language Models, Bloomberg, Apr 2025, arxiv

- Understanding and Mitigating Risks of Generative AI in Financial Services, Bloomberg, Apr 2025, Bloomberg

- The MASK Benchmark: Disentangling Honesty From Accuracy in AI Systems, Center for AI Safety, Scale AI, arxiv, MASK Benchmark

- Lessons From Red Teaming 100 Generative AI Products, Microsoft, Jan 2025, arxiv

- Trading Inference-Time Compute for Adversarial Robustness, OpenAI, Jan 2025, arxiv

- Medical large language models are vulnerable to data-poisoning attacks, New York University, Jan 2025, Nature Medicine

- AILuminate, a benchmark for general-purpose AI chat models from MLCommons, Dec 2024, MLCommons website

- Fooling LLM graders into giving better grades through neural activity guided adversarial prompting, Stanford University, Dec 2024, arxiv

- SORRY-Bench: Systematically Evaluating Large Language Model Safety Refusal Behaviors, Jun 2024, arxiv

- The WMDP Benchmark: Measuring and Reducing Malicious Use With Unlearning, Weapons of Mass Destruction Proxy (WMDP) benchmark, Mar 2024, arxiv

- HarmBench: A Standardized Evaluation Framework for Automated Red Teaming and Robust Refusal, Feb 2024, arxiv HarmBench data and code

- MM-SafetyBench: A Benchmark for Safety Evaluation of Multimodal Large Language Models, Shanghai AI Laboratory, etc., ECCV 2024, Jan 2024, GitHub; arxiv, Nov 2023

- Introducing v0.5 of the AI Safety Benchmark from MLCommons, MLCommons, Google Research, etc., Apr 2024, arxiv

- SecCodePLT: A Unified Platform for Evaluating the Security of Code GenAI, Virtue AI, etc, Oct 2024, arxiv

- Beyond Prompt Brittleness: Evaluating the Reliability and Consistency of Political Worldviews in LLMs, University of Stuttgart, etc., Nov 2024, MIT Press

- Safetywashing: Do AI Safety Benchmarks Actually Measure Safety Progress?, Center for AI Safety, etc., Jul 2024, arxiv

- Risk Taxonomy, Mitigation, and Assessment Benchmarks of Large Language Model Systems, Zhongguancun Laboratory, etc, Jan 2024, arxiv

- LLMSecCode: Evaluating Large Language Models for Secure Coding, Chalmers University of Technology, Aug 2024, arxiv

- Attack Atlas: A Practitioner's Perspective on Challenges and Pitfalls in Red Teaming GenAI, IBM Research etc, Sep 2024, arxiv

- DetoxBench: Benchmarking Large Language Models for Multitask Fraud & Abuse Detection, Amazon.com, Sep 2024, arxiv

- Purple Llama, an umbrella project from Meta, Purple Llama repository

- How Many Unicorns Are in This Image? A Safety Evaluation Benchmark for Vision LLMs, ECCV 2024

- Explore, Establish, Exploit: Red Teaming Language Models from Scratch, MIT CSAIL etc, Jun 2023, arxiv

- Rethinking Backdoor Detection Evaluation for Language Models, University of Southern California, Aug 2024, arxiv pdf

- Gradient-Based Language Model Red Teaming, Google Research & Anthropic, Jan 2024, arxiv

- JailbreakBench: An Open Robustness Benchmark for Jailbreaking Large Language Models, University of Pennsylvania, ETH Zurich, etc., Mar 2024, arxiv

- Announcing a Benchmark to Improve AI Safety (MLCommons has made benchmarks for AI performance, now it's time to measure safety), Apr 2024, IEEE Spectrum

- Model evaluation for extreme risks, Google DeepMind, OpenAI, etc, May 2023, arxiv

- A StrongREJECT for Empty Jailbreaks, Center for Human-Compatible AI, UC Berkeley, Feb 2024, arxiv StrongREJECT Benchmark

- Sleeper Agents: Training Deceptive LLMs that Persist Through Safety Training, Anthropic, Redwood Research, etc., Jan 2024, arxiv

- How Many Unicorns Are in This Image? A Safety Evaluation Benchmark for Vision LLMs, Nov 2023, arxiv

- On Evaluating Adversarial Robustness of Large Vision-Language Models, NeurIPS 2023

- How Robust is Google's Bard to Adversarial Image Attacks?, Sep 2023, arxiv

- CYBERSECEVAL 3: Advancing the Evaluation of Cybersecurity Risks and Capabilities in Large Language Models, Jul 2024, Meta, arxiv

- CYBERSECEVAL 2: A Wide-Ranging Cybersecurity Evaluation Suite for Large Language Models, Apr 2024, Meta, arxiv

- Benchmarking OpenAI o1 in Cyber Security, Oct 2024, arxiv

- Cybench: A Framework for Evaluating Cybersecurity Capabilities and Risks of Language Models, Aug 2024, arxiv

and other software co-pilot tasks

- SWE-Bench Pro: Can AI Agents Solve Long-Horizon Software Engineering Tasks?, Sep 2025, arxiv

- The SWE-Bench Illusion: When State-of-the-Art LLMs Remember Instead of Reason, Jun 2025, arxiv

- SWE-bench Goes Live!, May 2025, arxiv

- SWE-PolyBench: A multi-language benchmark for repository level evaluation of coding agents, Apr 2025, arxiv

- Multi-SWE-bench: A Multilingual Benchmark for Issue Resolving, Apr 2025, arxiv

- Evaluating Large Language Models in Code Generation: INFINITE Methodology for Defining the Inference Index, Mar 2025, arxiv

- Copilot Arena: GitHub repo; article: Copilot Arena: A Platform for Code LLM Evaluation in the Wild, Feb 2025, arxiv

- Can LLMs Replace Human Evaluators? An Empirical Study of LLM-as-a-Judge in Software Engineering, Feb 2025, arxiv

- SWE-Lancer: Can Frontier LLMs Earn $1 Million from Real-World Freelance Software Engineering?, OpenAI, Feb 2025, arxiv

- Mutation-Guided LLM-based Test Generation at Meta, Jan 2025, arxiv (see Section 4, Engineers' Evaluation of ACH) and blog post at Meta

- SecCodePLT: A Unified Platform for Evaluating the Security of Code GenAI, Oct 2024, arxiv

- L2CEval: Evaluating Language-to-Code Generation Capabilities of Large Language Models, Oct 2024, arxiv

- Aider Polyglot, code editing benchmark, Aider Polyglot site

- A Survey on Evaluating Large Language Models in Code Generation Tasks, Peking University etc, Aug 2024, arxiv

- LLMSecCode: Evaluating Large Language Models for Secure Coding, Aug 2024, arxiv

- Copilot Evaluation Harness: Evaluating LLM-Guided Software Programming, Feb 2024, arxiv

- LiveCodeBench: Holistic and Contamination Free Evaluation of Large Language Models for Code, Berkeley, MIT, Cornell, Mar 2024, arxiv

- Introducing SWE-bench Verified, OpenAI, Aug 2024, OpenAI; SWE-bench Leaderboard

- SWE-bench: Can Language Models Resolve Real-World GitHub Issues?, Feb 2024, arxiv, Tech Report

- Gorilla Function Calling Leaderboard, Berkeley, Leaderboard

- DevBench: A Comprehensive Benchmark for Software Development, Mar 2024, arxiv

- Evaluating Large Language Models Trained on Code (HumanEval), Jul 2021, arxiv

- CodeXGLUE: A Machine Learning Benchmark Dataset for Code Understanding and Generation, Feb 2021, arxiv

- MBPP (Mostly Basic Python Programming) benchmark, introduced in Program Synthesis with Large Language Models, 2021, Papers with Code, data

- CodeMind: A Framework to Challenge Large Language Models for Code Reasoning, Feb 2024, arxiv

- CRUXEval: A Benchmark for Code Reasoning, Understanding and Execution, Jan 2024, arxiv

- CodeRL: Mastering Code Generation through Pretrained Models and Deep Reinforcement Learning, Jul 2022, arxiv code at salesforce github

- Evaluation & Hallucination Detection for Abstractive Summaries, online blog article

- A dataset and benchmark for hospital course summarization with adapted large language models, Dec 2024, Journal of the American Medical Informatics Association

- A Field Guide to Automatic Evaluation of LLM-Generated Summaries, SIGIR 2024

- Benchmarking Large Language Models for News Summarization, Jan 2024, Transactions of ACL

- SEAHORSE: A Multilingual, Multifaceted Dataset for Summarization Evaluation, May 2023, arxiv benchmark data

- Human-like Summarization Evaluation with ChatGPT, Apr 2023, arxiv

- Evaluating the Factual Consistency of Large Language Models Through News Summarization, Nov 2022, arxiv

- USB: A Unified Summarization Benchmark Across Tasks and Domains, May 2023, arxiv

- QAFactEval: Improved QA-Based Factual Consistency Evaluation for Summarization, Jan 2021, arxiv

- SummaC: Re-Visiting NLI-based Models for Inconsistency Detection in Summarization, Nov 2021, arxiv github data

- WikiAsp: A Dataset for Multi-domain Aspect-based Summarization, 2021, Transactions ACL dataset

- LLM-Inference-Bench: Inference Benchmarking of Large Language Models on AI Accelerators, Oct 2024, arxiv

- Ray/Anyscale's LLM Performance Leaderboard, with explanation

- MLCommons MLPerf benchmarks (inference), MLPerf announcement of the LLM track

Agentic Tasks

- Demystifying evals for AI agents, Anthropic, Jan 2026, Anthropic

- DeliveryBench: Can Agents Earn Profit in Real World?, Dec 2025, arxiv

- Crumbling Under Pressure: PropensityBench Reveals AI’s Weaknesses, Nov 2025, arxiv

- AdvancedIF: Rubric-Based Benchmarking and Reinforcement Learning for Advancing LLM Instruction Following, Nov 2025, arxiv

- MCPVerse: An Expansive, Real-World Benchmark for Agentic Tool Use, Oct 2025, arxiv

- CookBench: A Long-Horizon Embodied Planning Benchmark for Complex Cooking Scenarios, Aug 2025, arxiv

- Establishing Best Practices for Building Rigorous Agentic Benchmarks, Jul 2025, arxiv

- MCPEval: Automatic MCP-based Deep Evaluation for AI Agent Models, Jul 2025, arxiv

- TRAIL: Trace Reasoning and Agentic Issue Localization, May 2025, arxiv

- Survey on Evaluation of LLM-based Agents, Mar 2025, arxiv

- Embodied Agent Interface: Benchmarking LLMs for Embodied Decision Making, Oct 2024, arxiv

- AutoGenBench, a tool for measuring and evaluating AutoGen agents, from Microsoft; see an example of how it is used in the evaluation of Magentic-One: A Generalist Multi-Agent System for Solving Complex Tasks

- Overcoming the Machine Penalty with Imperfectly Fair AI Agents, Sep 2024, arxiv

- LLM4RL: Enhancing Reinforcement Learning with Large Language Models, Aug 2024, IEEE Xplore

- Put Your Money Where Your Mouth Is: Evaluating Strategic Planning and Execution of LLM Agents in an Auction Arena, Oct 2023, arxiv

- Chapter 4, LLM-based Autonomous Agent Evaluation, in A Survey on Large Language Model based Autonomous Agents, Front. Comput. Sci., 2024, at Springer

- LLM-Deliberation: Evaluating LLMs with Interactive Multi-Agent Negotiation Games, Sep 2023, arxiv

- AgentBench: Evaluating LLMs as Agents, Aug 2023, arxiv

- How Far Are We on the Decision-Making of LLMs? Evaluating LLMs' Gaming Ability in Multi-Agent Environments, Mar 2024, arxiv

- R-Judge: Benchmarking Safety Risk Awareness for LLM Agents, Jan 2024, arxiv

- ProAgent: Building Proactive Cooperative Agents with Large Language Models, Aug 2023, arxiv

- Towards A Unified Agent with Foundation Models, Jul 2023, arxiv

- RestGPT: Connecting Large Language Models with Real-World RESTful APIs, Jun 2023, arxiv

- Large Language Models Are Semi-Parametric Reinforcement Learning Agents, Jun 2023, arxiv

- ManiSkill2: A Unified Benchmark for Generalizable Manipulation Skills, Feb 2023, arxiv

AGI (Artificial General Intelligence) evaluation refers to the process of assessing whether an AI system possesses or approaches general intelligence—the ability to perform any intellectual task that a human can.

- Abstraction and Reasoning Corpus for Artificial General Intelligence (ARC-AGI-2), Mar 2025, GitHub; an explanation from François Chollet on X; more details in the ARC Prize announcement

- Evaluating Intelligence via Trial and Error, Feb 2025, arxiv

- Humanity's Last Exam, Center for AI Safety, Scale AI, Jan 2025, arxiv

- Evaluation of OpenAI o1: Opportunities and Challenges of AGI, Sep 2024, arxiv

- GAIA: a benchmark for General AI Assistants, Nov 2023, arxiv; a joint effort by Meta and Hugging Face to design a benchmark for measuring general AI assistants, GAIA benchmark

- Levels of AGI for Operationalizing Progress on the Path to AGI, 2023, levels of agi

- Suri: Multi-constraint Instruction Following for Long-form Text Generation, Jun 2024, arxiv

- LongWriter: Unleashing 10,000+ Word Generation from Long Context LLMs, Aug 2024, arxiv

- LongBench: A Bilingual, Multitask Benchmark for Long Context Understanding, Aug 2023, arxiv

- HelloBench: Evaluating Long Text Generation Capabilities of Large Language Models, Sep 2024, arxiv

- LongDA: Benchmarking LLM Agents for Long-Document Data Analysis, Jan 2026, arxiv

Document Understanding

- M-LongDoc: A Benchmark For Multimodal Super-Long Document Understanding And A Retrieval-Aware Tuning Framework, EMNLP 2025

- LongDocURL: a Comprehensive Multimodal Long Document Benchmark Integrating Understanding, Reasoning, and Locating, ACL 2025

- Benchmarking Retrieval-Augmented Multimodal Generation for Document Question Answering, May 2025, arxiv

- DocBench: A Benchmark for Evaluating LLM-based Document Reading Systems, ACL Workshop 2025, ACL 2025

- Document Haystack: A Long Context Multimodal Image/Document Understanding Vision LLM Benchmark, Amazon AGI team, ICCV Workshop 2025

- DesignQA: A Multimodal Benchmark for Evaluating Large Language Models’ Understanding of Engineering Documentation, Dec 2024, ASME 2024

- MMLONGBENCH-DOC: Benchmarking Long-context Document Understanding with Visualizations, NeurIPS 2024

- VRDU: A Benchmark for Visually-rich Document Understanding, KDD 2023

- GPT4Graph: Can Large Language Models Understand Graph Structured Data? An Empirical Evaluation and Benchmarking, May 2023, arxiv

- LLM4DyG: Can Large Language Models Solve Spatial-Temporal Problems on Dynamic Graphs? Oct 2023, arxiv

- Talk like a Graph: Encoding Graphs for Large Language Models, Oct 2023, arxiv

- Open Graph Benchmark: Datasets for Machine Learning on Graphs, NeurIPS 2020

- Can Language Models Solve Graph Problems in Natural Language?, NeurIPS 2023

- Evaluating Large Language Models on Graphs: Performance Insights and Comparative Analysis, Aug 2023, arxiv (https://arxiv.org/abs/2308.11224)

- RM-Bench: Benchmarking Reward Models of Language Models with Subtlety and Style, Oct 2024, arxiv

- HelpSteer2: Open-source dataset for training top-performing reward models, Aug 2024, arxiv

- RewardBench: Evaluating Reward Models for Language Modeling, Mar 2024, arxiv

- MT-Bench-101: A Fine-Grained Benchmark for Evaluating Large Language Models in Multi-Turn Dialogues, Feb 2024, arxiv

(TODO: as there are more than three papers per class, make each class a separate chapter in this compendium)

- REFINEBENCH: Evaluating Refinement Capability of Language Models with Checklists, KAIST, CMU, NVIDIA, NeurIPS 2025

- BabyBabelLM: A Multilingual Benchmark of Developmentally Plausible Training Data, Oct 2025, arxiv

- Butter-Bench: Evaluating LLM Controlled Robots for Practical Intelligence, Oct 2025, arxiv

- EvoBench: Towards Real-world LLM-Generated Text Detection Benchmarking for Evolving Large Language Models, ACL 2025

- LLM Evaluate: An Industry-Focused Evaluation Tool for Large Language Models, Coling 2025

- Better Benchmarking LLMs for Zero-Shot Dependency Parsing, Feb 2025, arxiv

- LongProc: Benchmarking Long-Context Language Models on Long Procedural Generation, Jan 2025, arxiv

- Fooling LLM graders into giving better grades through neural activity guided adversarial prompting, Dec 2024, arxiv

- OmniEvalKit: A Modular, Lightweight Toolbox for Evaluating Large Language Model and its Omni-Extensions, Dec 2024, arxiv

- Holmes ⌕ A Benchmark to Assess the Linguistic Competence of Language Models, Dec 2024, MIT Press, Transactions of ACL, 2024

- EscapeBench: Pushing Language Models to Think Outside the Box, Dec 2024, arxiv

- DesignQA: A Multimodal Benchmark for Evaluating Large Language Models’ Understanding of Engineering Documentation, Dec 2024, The American Society of Mechanical Engineers

- Tulu 3: Pushing Frontiers in Open Language Model Post-Training, Nov 2024, arxiv; see 7.1 Open Language Model Evaluation System (OLMES) and the AllenAI GitHub repository for OLMES

- CLAVE: An Adaptive Framework for Evaluating Values of LLM Generated Responses, NeurIPS 2024

- Embodied Agent Interface: Benchmarking LLMs for Embodied Decision Making, Oct 2024, arxiv

- Benchmarking Vision, Language, & Action Models on Robotic Learning Tasks, Nov 2024, arxiv

- BENCHAGENTS: Automated Benchmark Creation with Agent Interaction, Oct 2024, arxiv

- To the Globe (TTG): Towards Language-Driven Guaranteed Travel Planning, Meta AI, Oct 2024, arxiv evaluation for tasks of travel planning

- Assessing the Performance of Human-Capable LLMs -- Are LLMs Coming for Your Job?, Oct 2024, arxiv; SelfScore, a novel benchmark designed to assess the performance of automated Large Language Model (LLM) agents on help desk and professional consultation tasks

- Should We Really Edit Language Models? On the Evaluation of Edited Language Models, Oct 2024, arxiv

- DyKnow: Dynamically Verifying Time-Sensitive Factual Knowledge in LLMs, EMNLP 2024, Oct 2024, arxiv, Repository for DyKnow

- From Crowdsourced Data to High-Quality Benchmarks: Arena-Hard and BenchBuilder Pipeline, UC Berkeley, Jun 2024, arxiv github repo ArenaHard benchmark

- OLMES: A Standard for Language Model Evaluations, Jun 2024, arxiv

- Evaluating Superhuman Models with Consistency Checks, Apr 2024, IEEE

- Jeopardy dataset at Hugging Face

- A framework for few-shot language model evaluation, Jul 2024, Zenodo

- ORAN-Bench-13K: An Open Source Benchmark for Assessing LLMs in Open Radio Access Networks, Jul 2024, arxiv

- AGIEval: A Human-Centric Benchmark for Evaluating Foundation Models, Aug 2023, arxiv

- Evaluation of Response Generation Models: Shouldn’t It Be Shareable and Replicable?, Dec 2022, Proceedings of the 2nd Workshop on Natural Language Generation, Evaluation, and Metrics (GEM) Github repository for Human Evaluation Protocol

- From Babbling to Fluency: Evaluating the Evolution of Language Models in Terms of Human Language Acquisition, Oct 2024, arxiv

- DARG: Dynamic Evaluation of Large Language Models via Adaptive Reasoning Graph, Jun 2024, arxiv

- RM-Bench: Benchmarking Reward Models of Language Models with Subtlety and Style, Oct 2024, arxiv

- Table Meets LLM: Can Large Language Models Understand Structured Table Data? A Benchmark and Empirical Study, Mar 2024, WSDM 2024, Microsoft blog

- How Much are Large Language Models Contaminated? A Comprehensive Survey and the LLMSanitize Library, Nanyang Technological University, Mar 2024, arxiv

- LLM Comparative Assessment: Zero-shot NLG Evaluation through Pairwise Comparisons using Large Language Models, Jul 2023, arxiv

- OpenEQA: From word models to world models, Meta, Apr 2024, Understanding physical spaces by Models, Meta AI blog

- Is Your LLM Outdated? Benchmarking LLMs & Alignment Algorithms for Time-Sensitive Knowledge, Apr 2024, arxiv

- ELITR-Bench: A Meeting Assistant Benchmark for Long-Context Language Models, Apr 2024, arxiv

- LongEmbed: Extending Embedding Models for Long Context Retrieval, Apr 2024, arxiv, benchmark for long context tasks, repository for LongEmbed

- LoTa-Bench: Benchmarking Language-oriented Task Planners for Embodied Agents, Feb 2024, arxiv

- Benchmarking and Building Long-Context Retrieval Models with LoCo and M2-BERT, Feb 2024, arxiv, LoCoV1 benchmark for long context LLM

- A User-Centric Benchmark for Evaluating Large Language Models, Apr 2024, arxiv, data of user centric benchmark at github

- Evaluating Quantized Large Language Models, Tsinghua University et al., International Conference on Machine Learning, PMLR 2024, arxiv

- RACE: Large-scale ReAding Comprehension Dataset From Examinations, 2017, arxiv, RACE dataset at CMU

- CrowS-Pairs: A Challenge Dataset for Measuring Social Biases in Masked Language Models, 2020, arxiv, CrowS-Pairs dataset

- DROP: A Reading Comprehension Benchmark Requiring Discrete Reasoning Over Paragraphs, Jun 2019, ACL, data

- RewardBench: Evaluating Reward Models for Language Modeling, Mar 2024, arxiv

- Toward informal language processing: Knowledge of slang in large language models, EMNLP 2023, Amazon Science

- FOFO: A Benchmark to Evaluate LLMs' Format-Following Capability, Feb 2024, arxiv

- Can LLM Already Serve as A Database Interface? A BIg Bench for Large-Scale Database Grounded Text-to-SQLs, May 2023, arxiv, data and leaderboard. BIRD is a big benchmark for large-scale database-grounded text-to-SQL tasks, containing 12,751 text-to-SQL pairs and 95 databases with a total size of 33.4 GB, spanning 37 professional domains.

- MuSiQue: Multihop Questions via Single-hop Question Composition, Aug 2021, arxiv

- Evaluating Copyright Takedown Methods for Language Models, June 2024, arxiv

And knowledge assistant and information-seeking LLM-based systems:

- RAG-IGBench: Innovative Evaluation for RAG-based Interleaved Generation in Open-domain Question Answering, NeurIPS 2025

- Evaluating Large Language Models for Cross-Lingual Retrieval, Sep 2025, arxiv

- A Comprehensive Evaluation of Embedding Models and LLMs for IR and QA Across English and Italian, May 2025, Advances in Natural Language Processing and Text Mining

- RankArena: A Unified Platform for Evaluating Retrieval, Reranking and RAG with Human and LLM Feedback, Aug 2025, arxiv

- RAGtifier: Evaluating RAG Generation Approaches of State-of-the-Art RAG Systems for the SIGIR LiveRAG Competition, Jun 2025, arxiv

- Mind2Web 2: Evaluating Agentic Search with Agent-as-a-Judge, Jun 2025, arxiv

- MTRAG: A Multi-Turn Conversational Benchmark for Evaluating Retrieval-Augmented Generation Systems, Jan 2025, arxiv

- RAD-Bench: Evaluating Large Language Models Capabilities in Retrieval Augmented Dialogues, Sep 2024, arxiv

- TREC iKAT 2023: A Test Collection for Evaluating Conversational and Interactive Knowledge Assistants, SIGIR 2024

- Google Frames Dataset for evaluation of RAG systems, Sep 2024, [arxiv paper: Fact, Fetch, and Reason: A Unified Evaluation of Retrieval-Augmented Generation](https://arxiv.org/abs/2409.12941), Hugging Face dataset

- Search Engines in an AI Era: The False Promise of Factual and Verifiable Source-Cited Responses, Oct 2024, Salesforce, arxiv, Answer Engine (RAG) Evaluation Repository

- MultiHop-RAG: Benchmarking Retrieval-Augmented Generation for Multi-Hop Queries, Jan 2024, arxiv

- FaithDial: A Faithful Benchmark for Information-Seeking Dialogue, Dec 2022, MIT Press

- Open-Retrieval Conversational Question Answering, SIGIR 2020

- RAGAS: Automated Evaluation of Retrieval Augmented Generation, Sep 2023, arxiv

- ARES: An Automated Evaluation Framework for Retrieval-Augmented Generation Systems, Nov 2023, arxiv

- Evaluating Retrieval Quality in Retrieval-Augmented Generation, Apr 2024, arxiv

- IRSC: A Zero-shot Evaluation Benchmark for Information Retrieval through Semantic Comprehension in Retrieval-Augmented Generation Scenarios, Sep 2024, arxiv

- InnovatorBench: Evaluating Agents’ Ability to Conduct Innovative LLM Research, Oct 2025, arxiv

- DeepScholar-Bench: A Live Benchmark and Automated Evaluation for Generative Research Synthesis, Aug 2025, arxiv

- BrowseComp-Plus: A More Fair and Transparent Evaluation Benchmark of Deep-Research Agent, Aug 2025, arxiv

- DeepResearch Bench: A Comprehensive Benchmark for Deep Research Agents, Jun 2025, arxiv

- DeepResearchGym: A Free, Transparent, and Reproducible Evaluation Sandbox for Deep Research, May 2025, arxiv

- AstaBench (from AllenAI), benchmark at GitHub

- FieldWorkArena: Agentic AI Benchmark for Real Field Work Tasks, May 2025, arxiv

- GAIA: a benchmark for General AI Assistants, Nov 2023, arxiv

- WideSearch: Benchmarking Agentic Broad Info-Seeking, Aug 2025, arxiv

- Agent-X: Evaluating Deep Multimodal Reasoning in Vision-Centric Agentic Tasks, May 2025, arxiv

- InfoDeepSeek, emergentmind

- WebArena: A Realistic Web Environment for Building Autonomous Agents, Apr 2024, arxiv

- AgentBoard: An Analytical Evaluation Board of Multi-turn LLM Agents, Dec 2024, arxiv

- R2MED: A Benchmark for Reasoning-Driven Medical Retrieval, May 2025, arxiv

- GraphRAG-Bench: Challenging Domain-Specific Reasoning for Evaluating Graph Retrieval-Augmented Generation, Jun 2025, arxiv

- MR2-Bench: Going Beyond Matching to Reasoning in Multimodal Retrieval, Sep 2025, arxiv

- BRIGHT: A Realistic and Challenging Benchmark for Reasoning-Intensive Retrieval, Jul 2024, arxiv

And dialog systems and search:

- RefineBench: Evaluating Refinement Capability of Language Models with Checklists, KAIST, CMU, NVIDIA, NeurIPS 2025

- A survey on chatbots and large language models: Testing and evaluation techniques, Jan 2025, Natural Language Processing Journal, Mar 2025

- How Well Can Large Language Models Reflect? A Human Evaluation of LLM-generated Reflections for Motivational Interviewing Dialogues, Jan 2025, Proceedings of the 31st International Conference on Computational Linguistics COLING

- Benchmark for general-purpose AI chat models, Dec 2024, AILuminate from MLCommons, MLCommons website

- Comparative Analysis of Finetuning Strategies and Automated Evaluation Metrics for Large Language Models in Customer Service Chatbots, Aug 2024, preprint

- Introducing v0.5 of the AI Safety Benchmark from MLCommons, Apr 2024, arxiv

- Foundation metrics for evaluating effectiveness of healthcare conversations powered by generative AI, Feb 2024, Nature

- CausalScore: An Automatic Reference-Free Metric for Assessing Response Relevance in Open-Domain Dialogue Systems, Jun 2024, arxiv

- Simulated user feedback for the LLM production, TDS

- How Well Can LLMs Negotiate? NegotiationArena Platform and Analysis, Feb 2024, arxiv

- Rethinking the Evaluation of Dialogue Systems: Effects of User Feedback on Crowdworkers and LLMs, Apr 2024, arxiv

- A Two-dimensional Zero-shot Dialogue State Tracking Evaluation Method using GPT-4, Jun 2024, arxiv

- Tutor CoPilot: A Human-AI Approach for Scaling Real-Time Expertise, Stanford, Oct 2024, arxiv

- From Interaction to Impact: Towards Safer AI Agents Through Understanding and Evaluating UI Operation Impacts, University of Washington, Apple, Oct 2024, arxiv

- Copilot Evaluation Harness: Evaluating LLM-Guided Software Programming, Feb 2024, arxiv

- ELITR-Bench: A Meeting Assistant Benchmark for Long-Context Language Models, Apr 2024, arxiv

- ConsintBench: Evaluating Language Models on Real-World Consumer Intent Understanding, Oct 2025, arxiv

- Investigating Users' Search Behavior and Outcome with ChatGPT in Learning-oriented Search Tasks, SIGIR-AP 2024, ACM

- Is ChatGPT Fair for Recommendation? Evaluating Fairness in Large Language Model Recommendation, RecSys 2023

- Is ChatGPT a Good Recommender? A Preliminary Study, Apr 2023, arxiv

- IRSC: A Zero-shot Evaluation Benchmark for Information Retrieval through Semantic Comprehension in Retrieval-Augmented Generation Scenarios, Sep 2024, arxiv

- LLMRec: Benchmarking Large Language Models on Recommendation Task, Aug 2023, arxiv

- OpenP5: Benchmarking Foundation Models for Recommendation, Jun 2023, researchgate

- Marqo embedding benchmark for eCommerce at Hugging Face, text-to-image and category-to-image tasks

- LaMP: When Large Language Models Meet Personalization, Apr 2023, arxiv

- Search Engines in an AI Era: The False Promise of Factual and Verifiable Source-Cited Responses, Oct 2024, Salesforce, arxiv Answer Engine (RAG) Evaluation Repository

- BIRCO: A Benchmark of Information Retrieval Tasks with Complex Objectives, Feb 2024, arxiv

- Is ChatGPT Good at Search? Investigating Large Language Models as Re-Ranking Agents, Apr 2023, arxiv

- BEIR: A Heterogenous Benchmark for Zero-shot Evaluation of Information Retrieval Models, Oct 2021, arxiv

- Benchmark: LoTTE (Long-Tail Topic-stratified Evaluation) for IR, featuring 12 domain-specific search tests that span StackExchange communities and use queries from GooAQ; ColBERT repository with the benchmark data

- LongEmbed: Extending Embedding Models for Long Context Retrieval, Apr 2024, arxiv, benchmark for long context tasks, repository for LongEmbed

- Benchmarking and Building Long-Context Retrieval Models with LoCo and M2-BERT, Feb 2024, arxiv, LoCoV1 benchmark for long context LLM

- STARK: Benchmarking LLM Retrieval on Textual and Relational Knowledge Bases, Apr 2024, arxiv, code at GitHub

- Constitutional AI: Harmlessness from AI Feedback, Dec 2022, arxiv (see Appendix B, Identifying and Classifying Harmful Conversations, and other parts)

- Towards Effective GenAI Multi-Agent Collaboration: Design and Evaluation for Enterprise Applications, Dec 2024, arxiv

- Assessing and Verifying Task Utility in LLM-Powered Applications, May 2024, arxiv

- Towards better Human-Agent Alignment: Assessing Task Utility in LLM-Powered Applications, Feb 2024, arxiv

- MedHELM: Holistic Evaluation of Large Language Models for Medical Tasks, May 2025, arxiv, published in Nature

- A systematic review of large language model (LLM) evaluations in clinical medicine, Mar 2025, BMC Medical Informatics and Decision Making

- Medical Large Language Model Benchmarks Should Prioritize Construct Validity, Mar 2025, arxiv

- MedSafetyBench: Evaluating and Improving the Medical Safety of Large Language Models, Dec 2024, OpenReview, arxiv, benchmark code and data at GitHub

- Evaluation of LLMs accuracy and consistency in the registered dietitian exam through prompt engineering and knowledge retrieval, Jan 2025, Scientific Reports (Nature)

- Medical large language models are vulnerable to data-poisoning attacks, Jan 2025, Nature Medicine

- A dataset and benchmark for hospital course summarization with adapted large language models, Dec 2024, Journal of the American Medical Informatics Association

- MedQA-CS: Benchmarking Large Language Models Clinical Skills Using an AI-SCE Framework, Oct 2024, arxiv

- A framework for human evaluation of large language models in healthcare derived from literature review, September 2024, Nature Digital Medicine

- Evaluation and mitigation of cognitive biases in medical language models, Oct 2024, Nature

- A Preliminary Study of o1 in Medicine: Are We Closer to an AI Doctor?, Sep 2024, arxiv

- Foundation metrics for evaluating effectiveness of healthcare conversations powered by generative AI, Feb 2024, Nature

- Evaluation and mitigation of the limitations of large language models in clinical decision-making, July 2024, Nature Medicine

- Evaluating Generative AI Responses to Real-world Drug-Related Questions, June 2024, Psychiatry Research

- MedExQA: Medical Question Answering Benchmark with Multiple Explanations, Jun 2024, arxiv

- GPT versus Resident Physicians — A Benchmark Based on Official Board Scores, Apr 2024, source, benchmark dataset at GitHub for the NEJM Medical Board Residency Exams Question Answering Benchmark (NEJMQA)

- Clinical Insights: A Comprehensive Review of Language Models in Medicine, Aug 2024, arxiv (see Table 2 for evaluation)

- Health-LLM: Large Language Models for Health Prediction via Wearable Sensor Data, Jan 2024, arxiv