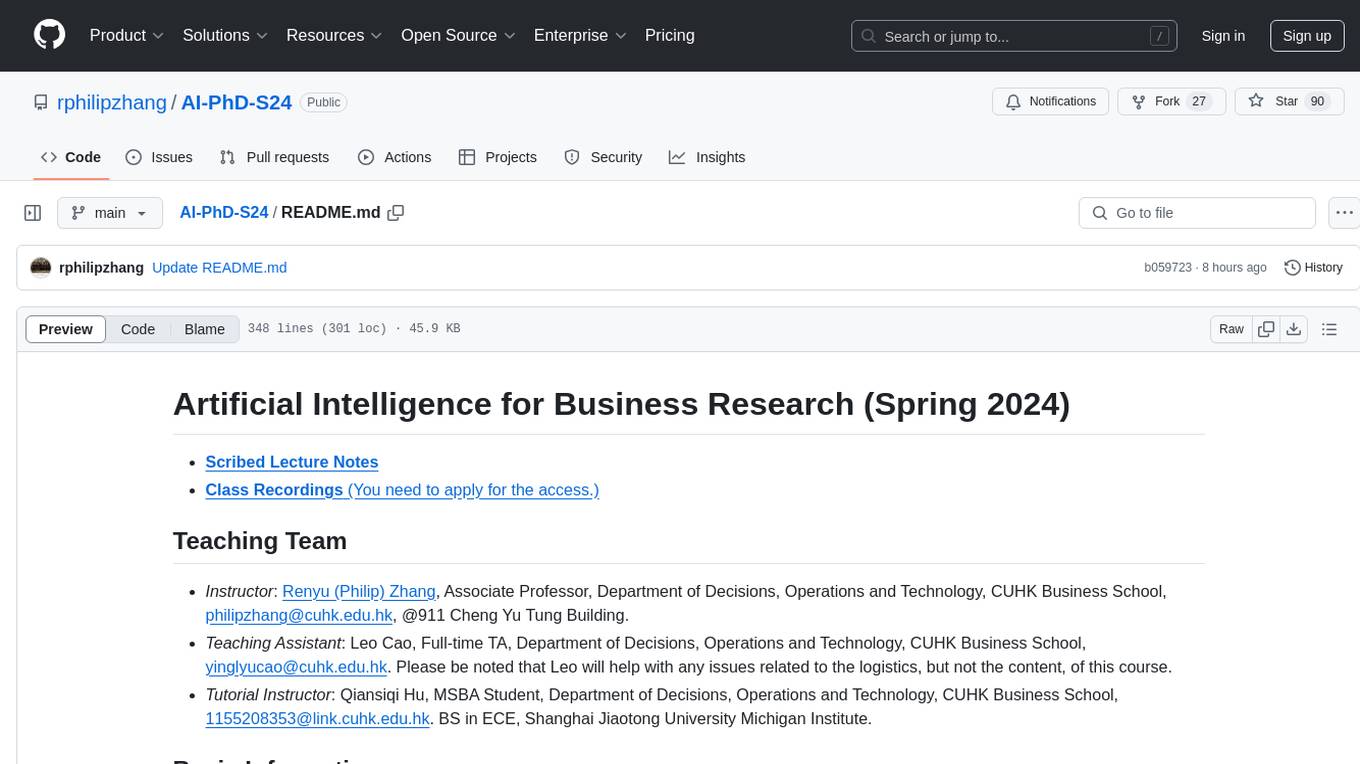

AI-PhD-S24

Mono repo for the PhD course AI for Business Research (DSME 6635, S24)

Stars: 90

AI-PhD-S24 is a mono-repo for the PhD course 'AI for Business Research' at CUHK Business School in Spring 2024. The course aims to provide a basic understanding of machine learning and artificial intelligence concepts/methods used in business research, showcase how ML/AI is utilized in business research, and introduce state-of-the-art AI/ML technologies. The course includes scribed lecture notes, class recordings, and covers topics like AI/ML fundamentals, DL, NLP, CV, unsupervised learning, and diffusion models.

README:

- Instructor: Renyu (Philip) Zhang, Associate Professor, Department of Decisions, Operations and Technology, CUHK Business School, [email protected], @911 Cheng Yu Tung Building.

- Teaching Assistant: Leo Cao, Full-time TA, Department of Decisions, Operations and Technology, CUHK Business School, [email protected]. Please be noted that Leo will help with any issues related to the logistics, but not the content, of this course.

- Tutorial Instructor: Qiansiqi Hu, MSBA Student, Department of Decisions, Operations and Technology, CUHK Business School, [email protected]. BS in ECE, Shanghai Jiaotong University Michigan Institute.

- Website: https://github.com/rphilipzhang/AI-PhD-S24

- Time: Tuesday, 12:30pm-3:15pm, from Jan 9, 2024 to Apr 16, 2024, except for Feb 13 (Chinese New Year) and Mar 5 (Final Project Discussion)

- Location: Cheng Yu Tung Building (CYT) LT5

Welcome to the mono-repo of the PhD course AI for Business Research (DSME 6635) at CUHK Business School in Spring 2024. You may download the Syllabus of this course first. The purpose of this course is to learn the following:

- Have a basic understanding of the fundamental concepts/methods in machine learning (ML) and artificial intelligence (AI) that are used (or potentially useful) in business research.

- Understand how business researchers have utilized ML/AI and what managerial questions have been addressed by ML/AI in the recent decade.

- Nurture a taste of what the state-of-the-art AI/ML technologies can do in the ML/AI community and, potentially, in your own research field.

We will meet each Tuesday at 12:30pm in Cheng Yu Tung Building (CYT) LT5 (please pay attention to this room change). Please ask for my approval if you need to join us via the following Zoom links:

- Zoom link, Meeting ID 996 4239 3764, Passcode 386119.

Most of the code in this course will be distributed through the Google CoLab cloud computing environment to avoid the incompatibility and version control issues on your local individual computer. On the other hand, you can always download the Jupyter Notebook from CoLab and run it your own computer.

- The CoLab files of this course can be found at this folder.

- The Google Sheet to sign up for groups and group tasks can be found here.

- The overleaf template for scribing the lecture notes of this course can be found here.

If you have any feedback on this course, please directly contact Philip at [email protected] and we will try our best to address it.

Subject to modifications. All classes start at 12:30pm and end at 3:15pm.

| Session | Date | Topic | Key Words |

|---|---|---|---|

| 1 | 1.09 | AI/ML in a Nutshell | Course Intro, ML Models, Model Evaluations |

| 2 | 1.16 | Intro to DL | DL Intro, Neural Nets, Computational Issues in DL |

| 3 | 1.23 | Prediction and Traditional NLP | Prediction in Biz Research, Pre-processing |

| 4 | 1.30 | NLP (II): Traditional NLP | $N$-gram, NLP Performance Evaluations, Naïve Bayes |

| 5 | 2.06 | NLP (III): Word2Vec | CBOW, Skip Gram |

| 6 | 2.20 | NLP (IV): RNN | Glove, Language Model Evaluation, RNN |

| 7 | 2.27 | NLP (V): Seq2Seq | LSTM, Seq2Seq, Attention Mechanism |

| 7.5 | 3.05 | NLP (V.V): Transformer | The Bitter Lesson, Attention is All You Need |

| 8 | 3.12 | NLP (VI): Pre-training | Computational Tricks in DL, BERT, GPT |

| 9 | 3.19 | NLP (VII): LLM | Emergent Abilities, Chain-of-Thought, In-context Learning, GenAI in Business Research |

| 10 | 3.26 | CV (I): Image Classification | CNN, AlexNet, ResNet, ViT |

| 11 | 4.02 | CV (II): Image Segmentation and Video Analysis | R-CNN, YOLO, 3D-CNN |

| 12 | 4.09 | Unsupervised Learning (I): Clustering & Topic Modeling | GMM, EM Algorithm, LDA |

| 13 | 4.16 | Unsupervised Learning (II): Diffusion Models | VAE, DDPM, LDM, DiT |

All problem sets are due at 12:30pm right before class.

| Date | Time | Event | Note |

|---|---|---|---|

| 1.10 | 11:59pm | Group Sign-Ups | Each group has at most two students. |

| 1.12 | 7:00pm-9:00pm | Python Tutorial | Given by Qiansiqi Hu, Python Tutorial CoLab |

| 1.19 | 7:00pm-9:00pm | PyTorch Tutorial | Given by Qiansiqi Hu, PyTorch Tutorial CoLab |

| 3.05 | 9:00am-6:00pm | Final Project Discussion | Please schedule a meeting with Philip. |

| 3.12 | 12:30pm | Final Project Proposal | 1-page maximum |

| 4.30 | 11:59pm | Scribed Lecture Notes | Overleaf link |

| 5.12 | 11:59pm | Project Paper, Slides, and Code | Paper page limit: 10 |

Find more on the Syllabus.

- Books: ESL, Deep Learning, Dive into Deep Learning, ML Fairness, Applied Causal Inference Powered by ML and AI

- Courses: ML Intro by Andrew Ng, DL Intro by Andrew Ng, NLP (CS224N) by Chris Manning, CV (CS231N) by Fei-Fei Li, Deep Unsupervised Learning by Pieter Abbeel, DLR by Sergey Levine, DL Theory by Matus Telgarsky, LLM by Danqi Chen, Generative AI by Andrew Ng, Machine Learning and Big Data by Melissa Dell and Matthew Harding, Digital Economics and the Economics of AI by Martin Beraja, Chiara Farronato, Avi Goldfarb, and Catherine Tucker

The following schedule is tentative and subject to changes.

- Keywords: Course Introduction, Machine Learning Basics, Bias-Variance Trade-off, Cross Validation, $k$-Nearest Neighbors, Decision Tree, Ensemble Methods

- Slides: Course Introduction, Machine Learning Basics

- CoLab Notebook Demos: k-Nearest Neighbors, Decision Tree

- Homework: Problem Set 1: Bias-Variance Trade-Off

- Online Python Tutorial: Python Tutorial CoLab, 7:00pm-9:00pm, Jan/12/2024 (Friday), given by Qiansiqi Hu, [email protected]. Zoom Link, Meeting ID: 923 4642 4433, Pass code: 178146

-

References:

- The Elements of Statistical Learning (2nd Edition), 2009, by Trevor Hastie, Robert Tibshirani, Jerome Friedman, https://hastie.su.domains/ElemStatLearn/.

- Probabilistic Machine Learning: An Introduction, 2022, by Kevin Murphy, https://probml.github.io/pml-book/book1.html.

- Mullainathan, Sendhil, and Jann Spiess. 2017. Machine learning: an applied econometric approach. Journal of Economic Perspectives 31(2): 87-106.

- Athey, Susan, and Guido W. Imbens. 2019. Machine learning methods that economists should know about. Annual Review of Economics 11: 685-725.

- Hofman, Jake M., et al. 2021. Integrating explanation and prediction in computational social science. Nature 595.7866: 181-188.

- Bastani, Hamsa, Dennis Zhang, and Heng Zhang. 2022. Applied machine learning in operations management. Innovative Technology at the Interface of Finance and Operations. Springer: 189-222.

- Kelly, Brian, and Dacheng Xiu. 2023. Financial machine learning, SSRN, https://ssrn.com/abstract=4501707.

- The Bitter Lesson, by Rich Sutton, which develops so far the most critical insight of AI: "The biggest lesson that can be read from 70 years of AI research is that general methods that leverage computation are ultimately the most effective, and by a large margin."

- Keywords: Random Forests, eXtreme Gradient Boosting Trees, Deep Learning Basics, Neural Nets Models, Computational Issues of Deep Learning

- Slides: Machine Learning Basics, Deep Learning Basics

- CoLab Notebook Demos: Random Forest, Extreme Gradient Boosting Tree, Gradient Descent, Chain Rule

-

Presentation: By Xinyu Li and Qingyu Xu.

- Gu, Shihao, Brian Kelly, and Dacheng Xiu. 2020. Empirical asset pricing via machine learning. Review of Financial Studies 33: 2223-2273. Link to the paper.

- Homework: Problem Set 2: Implementing Neural Nets

- Online PyTorch Tutorial: PyTorch Tutorial CoLab, 7:00pm-9:00pm, Jan/19/2024 (Friday), given by Qiansiqi Hu, [email protected]. Zoom Link, Meeting ID: 923 4642 4433, Pass code: 178146

-

References:

- Deep Learning, 2016, by Ian Goodfellow, Yoshua Bengio and Aaron Courville, https://www.deeplearningbook.org/.

- Dive into Deep Learning (2nd Edition), 2023, by Aston Zhang, Zack Lipton, Mu Li, and Alex J. Smola, https://d2l.ai/.

- Probabilistic Machine Learning: Advanced Topics, 2023, by Kevin Murphy, https://probml.github.io/pml-book/book2.html.

- Deep Learning with PyTorch, 2020, by Eli Stevens, Luca Antiga, and Thomas Viehmann.

- Gu, Shihao, Brian Kelly, and Dacheng Xiu. 2020. Empirical asset pricing with machine learning. Review of Financial Studies 33: 2223-2273.

- Keywords: Optimization and Computational Issues of Deep Learning, Prediction Problems in Business Research, Pre-processing and Word Representations in Traditional Natural Language Processing

- Slides: Deep Learning Basics, Prediction Problems in Business Research, NLP(I): Pre-processing and Word Representations

- CoLab Notebook Demos: He Initialization, Dropout, Micrograd, NLP Pre-processing

-

Presentation: By Letian Kong and Liheng Tan.

- Mullainathan, Sendhil, and Jann Spiess. 2017. Machine learning: an applied econometric approach. Journal of Economic Perspectives 31(2): 87-106. Link to the paper.

- Homework: Problem Set 2: Implementing Neural Nets, due at 12:30pm, Jan/30/2024 (Tuesday).

-

References:

- Kleinberg, Jon, Jens Ludwig, Sendhil Mullainathan, and Ziad Obermeyer. 2015. Prediction policy problems. American Economic Review 105(5): 491-495.

- Mullainathan, Sendhil, and Jann Spiess. 2017. Machine learning: an applied econometric approach. Journal of Economic Perspectives 31(2): 87-106.

- Kleinberg, Jon, Himabindu Lakkaraju, Jure Leskovec, Jens Ludwig, and Sendhil Mullainathan. 2018. Human decisions and machine predictions. Quarterly Journal of Economics 133(1): 237-293.

- Bajari, Patrick, Denis Nekipelov, Stephen P. Ryan, and Miaoyu Yang. 2015. Machine learning methods for demand estimation. American Economic Review, 105(5): 481-485.

- Farias, Vivek F., and Andrew A. Li. 2019. Learning preferences with side information. Management Science 65(7): 3131-3149.

- Cui, Ruomeng, Santiago Gallino, Antonio Moreno, and Dennis J. Zhang. 2018. The operational value of social media information. Production and Operations Management, 27(10): 1749-1769.

- Gentzkow, Matthew, Bryan Kelly, and Matt Taddy. 2019. Text as data. Journal of Economic Literature, 57(3): 535-574.

- Chapter 2, Introduction to Information Retrieval, 2008, Cambridge University Press, by Christopher D. Manning, Prabhakar Raghavan and Hinrich Schutze, https://nlp.stanford.edu/IR-book/information-retrieval-book.html.

- Chapter 2, Speech and Language Processing (3rd ed. draft), 2023, by Dan Jurafsky and James H. Martin, https://web.stanford.edu/~jurafsky/slp3/.

- Parameter Initialization and Batch Normalization (in Chinese)

- GPU Comparisons

- GitHub Repo for Micrograd, by Andrej Karpathy.

- Hand Written Notes

- Keywords: Pre-processing and Word Representations in NLP, N-Gram, Naïve Bayes, Language Model Evaluation, Traditional NLP Applied to Business/Econ Research

- Slides: NLP(I): Pre-processing and Word Representations, NLP(II): N-Gram, Naïve Bayes, and Language Model Evaluation

- CoLab Notebook Demos: NLP Pre-processing, N-Gram, Naïve Bayes

-

Presentation: By Zhi Li and Boya Peng.

- Hansen, Stephen, Michael McMahon, and Andrea Prat. 2018. Transparency and deliberation within the FOMC: A computational linguistics approach. Quarterly Journal of Economics, 133(2): 801-870. Link to the paper.

- Homework: Problem Set 3: Implementing Traditional NLP Techniques, due at 12:30pm, Feb/6/2024 (Tuesday).

-

References:

- Gentzkow, Matthew, Bryan Kelly, and Matt Taddy. 2019. Text as data. Journal of Economic Literature, 57(3): 535-574.

- Hansen, Stephen, Michael McMahon, and Andrea Prat. 2018. Transparency and deliberation within the FOMC: A computational linguistics approach. Quarterly Journal of Economics, 133(2): 801-870.

- Chapters 2, 12, & 13, Introduction to Information Retrieval, 2008, Cambridge University Press, by Christopher D. Manning, Prabhakar Raghavan and Hinrich Schutze, https://nlp.stanford.edu/IR-book/information-retrieval-book.html.

- Chapter 2, 3 & 4, Speech and Language Processing (3rd ed. draft), 2023, by Dan Jurafsky and James H. Martin, https://web.stanford.edu/~jurafsky/slp3/.

- Natural Language Tool Kit (NLTK) Documentation

- Hand Written Notes

- Keywords: Traditional NLP Applied to Business/Econ Research, Word2Vec: Continuous Bag of Words and Skip-Gram

- Slides: NLP(II): N-Gram, Naïve Bayes, and Language Model Evaluation, NLP(III): Word2Vec

- CoLab Notebook Demos: Word2Vec: CBOW, Word2Vec: Skip-Gram

-

Presentation: By Xinyu Xu and Shu Zhang.

- Timoshenko, Artem, and John R. Hauser. 2019. Identifying customer needs from user-generated content. Marketing Science, 38(1): 1-20. Link to the paper.

- Homework: No homework this week. Probably you should think about your final project when enjoying your Lunar New Year Holiday.

-

References:

- Gentzkow, Matthew, Bryan Kelly, and Matt Taddy. 2019. Text as data. Journal of Economic Literature, 57(3): 535-574.

- Tetlock, Paul. 2007. Giving content to investor sentiment: The role of media in the stock market. Journal of Finance, 62(3): 1139-1168.

- Baker, Scott, Nicholas Bloom, and Steven Davis, 2016. Measuring economic policy uncertainty. Quarterly Journal of Economics, 131(4): 1593-1636.

- Gentzkow, Matthew, and Jesse Shapiro. 2010. What drives media slant? Evidence from US daily newspapers. Econometrica, 78(1): 35-71.

- Timoshenko, Artem, and John R. Hauser. 2019. Identifying customer needs from user-generated content. Marketing Science, 38(1): 1-20.

- Mikolov, Tomas, Kai Chen, Greg Corrado, and Jeff Dean. 2013. Efficient estimation of word representations in vector space. ArXiv Preprint, arXiv:1301.3781.

- Mikolov, Tomas, Ilya Sutskever, Kai Chen, Greg Corrado, and Jeff Dean. 2013. Distributed representations of words and phrases and their compositionality. Advances in Neural Information Processing Systems (NeurIPS) 26.

- Parts I - II, Lecture Notes and Slides for CS224n: Natural Language Processing with Deep Learning, by Christopher D. Manning, Diyi Yang, and Tatsunori Hashimoto, https://web.stanford.edu/class/cs224n/.

- Word Embeddings Trained on Google News Corpus

- Hand Written Notes

- Keywords: Word2Vec: GloVe, Word Embedding and Language Model Evaluations, Word2Vec and RNN Applied to Business/Econ Research, RNN

- Slides: Guest Lecture Announcement, NLP(III): Word2Vec, NLP(IV): RNN & Seq2Seq

- CoLab Notebook Demos: Word2Vec: CBOW, Word2Vec: Skip-Gram

-

Presentation: By Qiyu Dai and Yifan Ren.

- Huang, Allen H., Hui Wang, and Yi Yang. 2023. FinBERT: A large language model for extracting information from financial text. Contemporary Accounting Research, 40(2): 806-841. Link to the paper. Link to GitHub Repo.

- Homework: Problem Set 4 - Word2Vec & LSTM for Sentiment Analysis

-

References:

- Ash, Elliot, and Stephen Hansen. 2023. Text algorithms in economics. Annual Review of Economics, 15: 659-688. Associated GitHub with Code Demonstrations.

- Li, Kai, Feng Mai, Rui Shen, and Xinyan Yan. 2021. Measuring corporate culture using machine learning. Review of Financial Studies, 34(7): 3265-3315.

- Chen, Fanglin, Xiao Liu, Davide Proserpio, and Isamar Troncoso. 2022. Product2Vec: Leveraging representation learning to model consumer product choice in large assortments. Available at SSRN 3519358.

- Pennington, Jeffrey, Richard Socher, and Christopher Manning. 2014. Glove: Global vectors for word representation. Proceedings of the 2014 conference on empirical methods in natural language processing (EMNLP) (pp. 1532-1543).

- Parts 2 and 5, Lecture Notes and Slides for CS224n: Natural Language Processing with Deep Learning, by Christopher D. Manning, Diyi Yang, and Tatsunori Hashimoto, https://web.stanford.edu/class/cs224n/.

- Chapters 9 and 10, Dive into Deep Learning (2nd Edition), 2023, by Aston Zhang, Zack Lipton, Mu Li, and Alex J. Smola, https://d2l.ai/.

- RNN and LSTM Visualizations

- Hand Written Notes

-

Keywords: RNN and its Applications to Business/Econ Research, LSTM, Seq2Seq, Attention Mechanism

-

Slides: Final Project, NLP(IV): RNN & Seq2Seq, NLP(V): Attention & Transformer

-

CoLab Notebook Demos: RNN & LSTM, Attention Mechanism

-

Presentation: By Qinghe Gui and Chaoyuan Jiang.

- Zhang, Mengxia and Lan Luo. 2023. Can consumer-posted photos serve as a leading indicator of restaurant survival? Evidence from Yelp. Management Science 69(1): 25-50. Link to the paper.

-

Homework: Problem Set 4 - Word2Vec & LSTM for Sentiment Analysis

-

References:

- Qi, Meng, Yuanyuan Shi, Yongzhi Qi, Chenxin Ma, Rong Yuan, Di Wu, Zuo-Jun (Max) Shen. 2023. A Practical End-to-End Inventory Management Model with Deep Learning. Management Science, 69(2): 759-773.

- Sarzynska-Wawer, Justyna, Aleksander Wawer, Aleksandra Pawlak, Julia Szymanowska, Izabela Stefaniak, Michal Jarkiewicz, and Lukasz Okruszek. 2021. Detecting formal thought disorder by deep contextualized word representations. Psychiatry Research, 304, 114135.

- Hansen, Stephen, Peter J. Lambert, Nicholas Bloom, Steven J. Davis, Raffaella Sadun, and Bledi Taska. 2023. Remote work across jobs, companies, and space (No. w31007). National Bureau of Economic Research.

- Sutskever, Ilya, Oriol Vinyals, and Quoc V. Le. 2014. Sequence to sequence learning with neural networks. Advances in neural information processing systems, 27.

- Bahdanau, Dzmitry, Kyunghyun Cho, and Yoshua Bengio. 2015. Neural machine translation by jointly learning to align and translate. ICLR

- Vaswani, A., Shazeer, N., Parmar, N., Uszkoreit, J., Jones, L., Gomez, A. N., ... and Polosukhin, I. (2017). Attention is all you need. Advances in neural information processing systems, 30.

- Parts 5, 6, and 8, Lecture Notes and Slides for CS224n: Natural Language Processing with Deep Learning, by Christopher D. Manning, Diyi Yang, and Tatsunori Hashimoto, https://web.stanford.edu/class/cs224n/.

- Chapters 9, 10, and 11, Dive into Deep Learning (2nd Edition), 2023, by Aston Zhang, Zack Lipton, Mu Li, and Alex J. Smola, https://d2l.ai/.

- RNN and LSTM Visualizations

- PyTorch's Tutorial of Seq2Seq for Machine Translation

- Illustrated Transformer

- Transformer from Scratch, with the Code on GitHub

- Hand Written Notes

- Keywords: Bitter Lesson: Power of Computation in AI, Attention Mechanism, Transformer

- Slides: The Bitter Lesson, NLP(V): Attention & Transformer

- CoLab Notebook Demos: Attention Mechanism, Transformer

- Homework: One-page Proposal for Your Final Project

-

References:

- The Bitter Lesson, by Rich Sutton

- Bahdanau, Dzmitry, Kyunghyun Cho, and Yoshua Bengio. 2015. Neural machine translation by jointly learning to align and translate. ICLR

- Vaswani, A., Shazeer, N., Parmar, N., Uszkoreit, J., Jones, L., Gomez, A. N., ... and Polosukhin, I. (2017). Attention is all you need. Advances in neural information processing systems, 30.

- Part 8, Lecture Notes and Slides for CS224n: Natural Language Processing with Deep Learning, by Christopher D. Manning, Diyi Yang, and Tatsunori Hashimoto, https://web.stanford.edu/class/cs224n/.

- Chapter 11, Dive into Deep Learning (2nd Edition), 2023, by Aston Zhang, Zack Lipton, Mu Li, and Alex J. Smola, https://d2l.ai/.

- Illustrated Transformer

- Transformer from Scratch, with the Code on GitHub

- Andrej Karpathy's Lecture to Build Transformers

- Hand Written Notes

- Keywords: Computations in AI, BERT (Bidirectional Encoder Representations from Transformers), GPT (Generative Pretrained Transformers)

- Slides: Guest Lecture by Dr. Liubo Li on Deep Learning Computation, Pretraining

- CoLab Notebook Demos: Crafting Intelligence: The Art of Deep Learning Modeling, BERT API @ Hugging Face

-

Presentation: By Zhankun Chen and Yiyi Zhao.

- Noy, Shakked and Whitney Zhang. 2023. Experimental evidence on the productivity effects of generative artificial intelligence. Science, 381: 187-192. Link to the Paper

- Homework: Problem Set 5 - Sentiment Analysis with Hugging Face, due at 12:30pm, March 26, Tuesday.

-

References:

- Devlin, Jacob, Ming-Wei Chang, Kenton Lee, Kristina Toutanova. 2018. BERT: Pre-training of deep bidirectional transformers for language understanding. ArXiv preprint arXiv:1810.04805. GitHub Repo

- Radford, Alec, Karthik Narasimhan, Tim Salimans, and Ilya Sutskever. 2018. Improving language understanding by generative pre-training, (GPT-1) PDF link, GitHub Repo

- Radford, Alec, Jeffrey Wu, Rewon Child, David Luan, Dario Amodei, Ilya Sutskever. 2019. Language models are unsupervised multitask learners. OpenAI blog, 1(8), 9. (GPT-2) PDF Link, GitHub Repo

- Brown, Tom, et al. 2020. Language models are few-shot learners. Advances in neural information processing systems, 33, 1877-1901. (GPT-3) GitHub Repo

- Huang, Allen H., Hui Wang, and Yi Yang. 2023. FinBERT: A large language model for extracting information from financial text. Contemporary Accounting Research, 40(2): 806-841. GitHub Repo

- Part 9, Lecture Notes and Slides for CS 224N: Natural Language Processing with Deep Learning, by Christopher D. Manning, Diyi Yang, and Tatsunori Hashimoto. Link to CS 224N

- Part 2 & 4, Slides for COS 597G: Understanding Large Language Models, by Danqi Chen. Link to COS 597G

- A Visual Guide to BERT, How GPT-3 Works

- Andrej Karpathy's Lecture to Build GPT-2 (124M) from Scratch

- Hand Written Notes

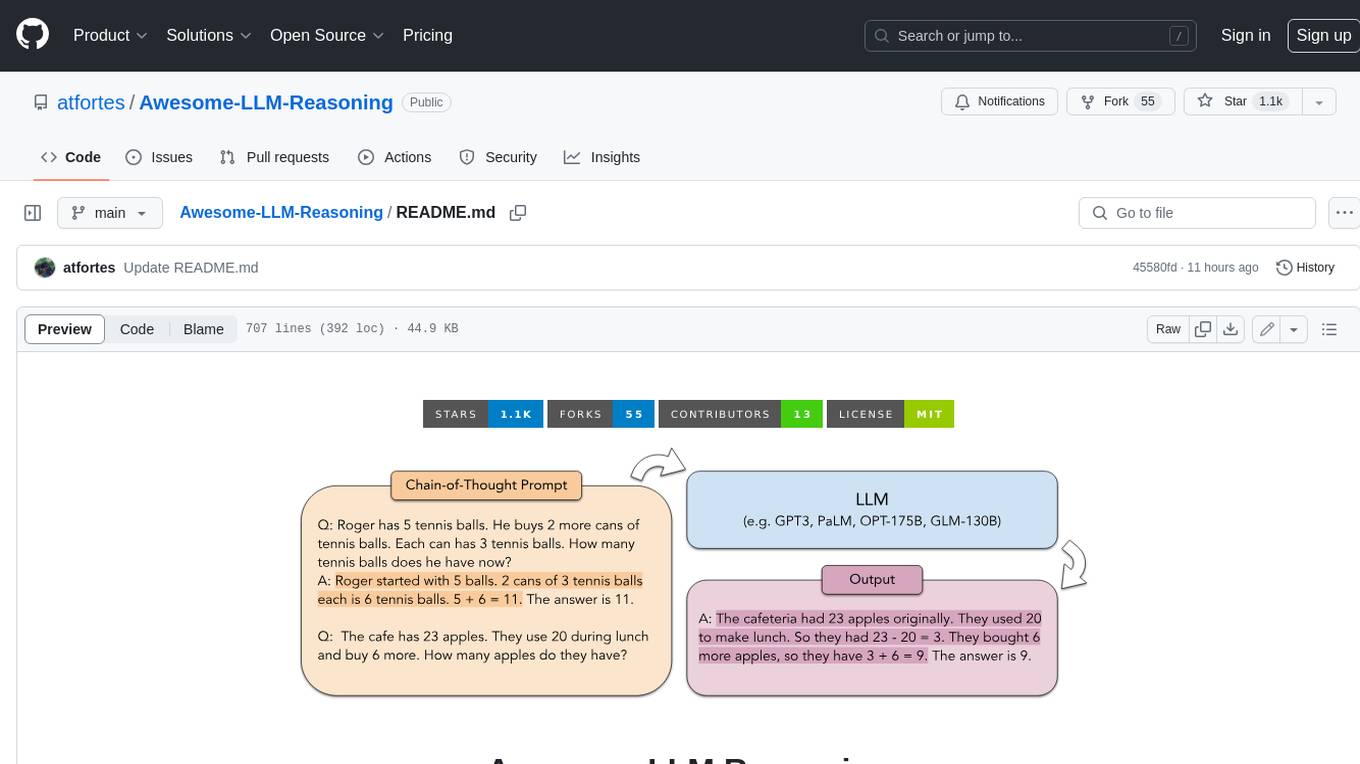

- Keywords: Large Language Models, Generative AI, Emergent Ababilities, Instruction Fine-Tuning (IFT), Reinforcement Learning with Human Feedback (RLHF), In-Context Learning, Chain-of-Thought (CoT)

- Slides: What's Next, Pretraining, Large Language Models

- CoLab Notebook Demos: BERT API @ Hugging Face

-

Presentation: By Jia Liu.

- Liu, Liu, Dzyabura, Daria, Mizik, Natalie. 2020. Visual listening in: Extracting brand image portrayed on social media. Marketing Science, 39(4): 669-686. Link to the Paper

- Homework: Problem Set 5 - Sentiment Analysis with Hugging Face, due at 12:30pm, March 26, Tuesday (soft-deadline).

-

References:

- Wei, Jason, et al. 2021. Finetuned language models are zero-shot learners. ArXiv preprint arXiv:2109.01652, link to the paper.

- Wei, Jason, et al. 2022. Emergent abilities of large language models. ArXiv preprint arXiv:2206.07682, link to the paper.

- Ouyang, Long, et al. 2022. Training language models to follow instructions with human feedback. Advances in Neural Information Processing Systems, 35, 27730-27744.

- Wei, Jason, et al. 2022. Chain-of-thought prompting elicits reasoning in large language models. Advances in Neural Information Processing Systems, 35, 24824-24837.

- Kaplan, Jared. 2020. Scaling laws for neural language models. ArXiv preprint arXiv:2001.08361, link to the paper.

- Hoffmann, Jordan, et al. 2022. Training compute-optimal large language models. ArXiv preprint arXiv:2203.15556, link to the paper.

- Shinn, Noah, et al. 2023. Reflexion: Language agents with verbal reinforcement learning. ArXiv preprint arXiv:2303.11366, link to the paper.

- Reisenbichler, Martin, Thomas Reutterer, David A. Schweidel, and Daniel Dan. 2022. Frontiers: Supporting content marketing with natural language generation. Marketing Science, 41(3): 441-452.

- Romera-Paredes, B., Barekatain, M., Novikov, A. et al. 2023. Mathematical discoveries from program search with large language models. Nature, link to the paper.

- Part 10, Lecture Notes and Slides for CS224N: Natural Language Processing with Deep Learning, by Christopher D. Manning, Diyi Yang, and Tatsunori Hashimoto. Link to CS 224N

- COS 597G: Understanding Large Language Models, by Danqi Chen. Link to COS 597G

- Andrej Karpathy's 1-hour Talk on LLM

- CS224n, Hugging Face Tutorial

- Keywords: Large Language Models Applications, Convolution Neural Nets (CNN), LeNet, AlexNet, VGG, ResNet, ViT

- Slides: What's Next, Large Language Models, Image Classification

- CoLab Notebook Demos: CNN, LeNet, & AlexNet, VGG, ResNet, ViT

-

Presentation: By Yingxin Lin and Zeshen Ye.

- Netzer, Oded, Alain Lemaire, and Michal Herzenstein. 2019. When words sweat: Identifying signals for loan default in the text of loan applications. Journal of Marketing Research, 56(6): 960-980. Link to the Paper

- Homework: Problem Set 6 - AlexNet and ResNet, due at 12:30pm, April 9, Tuesday.

-

References:

- Krizhevsky, Alex, Ilya Sutskever, and Geoffrey E. Hinton. 2012. Imagenet classification with deep convolutional neural networks. Advances in Neural Information Processing Systems, 25.

- He, Kaiming, Xiangyu Zhang, Shaoqing Ren and Jian Sun. 2016. Deep residual learning for image recognition. Proceedings of the IEEE conference on computer vision and pattern recognition, 770-778.

- Dosovitskiy, Alexey, et al. 2020. An image is worth 16x16 words: Transformers for image recognition at scale. ArXiv preprint, arXiv:2010.11929, link to the paper, link to the GitHub repo.

- Jean, Neal, Marshall Burke, Michael Xie, Matthew W. Davis, David B. Lobell, and Stefand Ermon. 2016. Combining satellite imagery and machine learning to predict poverty. Science, 353(6301), 790-794.

- Zhang, Mengxia and Lan Luo. 2023. Can consumer-posted photos serve as a leading indicator of restaurant survival? Evidence from Yelp. Management Science 69(1): 25-50.

- Course Notes (Lectures 5 & 6) for CS231n: Deep Learning for Computer Vision, by Fei-Fei Li, Ruohan Gao, & Yunzhu Li. Link to CS231n.

- Chapters 7 and 8, Dive into Deep Learning (2nd Edition), 2023, by Aston Zhang, Zack Lipton, Mu Li, and Alex J. Smola. Link to the book.

- Fine-Tune ViT for Image Classification with Hugging Face 🤗 Transformers

- Hugging Face 🤗 ViT CoLab Tutorial

- Keywords: Image Processing Applications, Localization, R-CNNs, YOLOs, Semantic Segmentation, 3D CNN, Video Analysis Applications

- Slides: What's Next, Image Classification, Object Detection and Video Analysis

- CoLab Notebook Demos: Data Augmentation, Faster R-CNN & YOLO v5

-

Presentation: By Qinlu Hu and Yilin Shi.

- Yang, Jeremy, Juanjuan Zhang, and Yuhan Zhang. 2023. Engagement that sells: Influencer video advertising on TikTok. Available at SSRN Link to the Paper

- Homework: Problem Set 6 - AlexNet and ResNet, due at 12:30pm, April 9, Tuesday.

-

References:

- Girshick, R., Donahue, J., Darrell, T. and Malik, J., 2014. Rich feature hierarchies for accurate object detection and semantic segmentation. Proceedings of the IEEE conference on computer vision and pattern recognition (pp. 580-587).

- Redmon, Joseph, Santosh Divvala, Ross Girshick, and Ali Farhadi. 2016. You only look once: Unified, real-time object detection. Proceedings of the IEEE conference on computer vision and pattern recognition (pp. 779-788).

- Karpathy, A., Toderici, G., Shetty, S., Leung, T., Sukthankar, R. and Fei-Fei, L., 2014. Large-scale video classification with convolutional neural networks. Proceedings of the IEEE conference on Computer Vision and Pattern Recognition (pp. 1725-1732).

- Glaeser, Edward L., Scott D. Kominers, Michael Luca, and Nikhil Naik. 2018. Big data and big cities: The promises and limitations of improved measures of urban life. Economic Inquiry, 56(1): 114-137.

- Zhang, S., Xu, K. and Srinivasan, K., 2023. Frontiers: Unmasking Social Compliance Behavior During the Pandemic. Marketing Science, 42(3), pp.440-450.

- Course Notes (Lectures 10 & 11) for CS231n: Deep Learning for Computer Vision, by Fei-Fei Li, Ruohan Gao, & Yunzhu Li. Link to CS231n.

- Chapter 14, Dive into Deep Learning (2nd Edition), 2023, by Aston Zhang, Zack Lipton, Mu Li, and Alex J. Smola. Link to the book.

- Hand Written Notes

- Keywords: K-Means, Gaussian Mixture Models, EM-Algorithm, Latent Dirichlet Allocation, Variational Auto-Encoder

- Slides: What's Next, Clustering, Topic Modeling & VAE

- CoLab Notebook Demos: K-Means, LDA, VAE

- Homework: Problem Set 7 - Unsupervised Learning (EM & LDA), due at 12:30pm, April 23, Tuesday.

-

References:

- Blei, David M., Ng, Andrew Y., and Jordan, Michael I. 2003. Latent Dirichlet allocation. Journal of Machine Learning Research, 3(Jan): 993-1022.

- Kingma, D.P. and Welling, M., 2013. Auto-encoding Variational Bayes. arXiv preprint arXiv:1312.6114.

- Kingma, D.P. and Welling, M., 2019. An introduction to variational autoencoders. Foundations and Trends® in Machine Learning, 12(4), pp.307-392.

- Bandiera, O., Prat, A., Hansen, S., & Sadun, R. 2020. CEO behavior and firm performance. Journal of Political Economy, 128(4), 1325-1369.

- Liu, Jia and Olivier Toubia. 2018. A semantic approach for estimating consumer content preferences from online search queries. Marketing Science, 37(6): 930-952.

- Mueller, Hannes, and Christopher Rauh. 2018. Reading between the lines: Prediction of political violence using newspaper text. American Political Science Review, 112(2): 358-375.

- Tian, Z., Dew, R. and Iyengar, R., 2023. Mega or Micro? Influencer Selection Using Follower Elasticity. Journal of Marketing Research.

- Chapters 8.5 and 14, The Elements of Statistical Learning (2nd Edition), 2009, by Trevor Hastie, Robert Tibshirani, Jerome Friedman, Link to Book.

- Course Notes (Lectures 1 & 4) for CS294-158-SP24: Deep Unsupervised Learning, taught by Pieter Abbeel, Wilson Yan, Kevin Frans, Philipp Wu. Link to CS294-158-SP24.

- Hand Written Notes

- Keywords: VAE, Denoised Diffusion Probabilistic Models, Latent Diffusion Models, CLIP, Imagen, Diffusion Transformers

- Slides: Clustering, Topic Modeling & VAE, Diffusion Models, Course Summary

- CoLab Notebook Demos: VAE, DDPM, DiT

- Homework: Problem Set 7 - Unsupervised Learning (EM & LDA), due at 12:30pm, April 23, Tuesday.

-

References:

- Kingma, D.P. and Welling, M., 2013. Auto-encoding Variational Bayes. arXiv preprint arXiv:1312.6114.

- Kingma, D.P. and Welling, M., 2019. An introduction to variational autoencoders. Foundations and Trends® in Machine Learning, 12(4), pp.307-392.

- Ho, J., Jain, A. and Abbeel, P., 2020. Denoising diffusion probabilistic models. Advances in neural information processing systems, 33, 6840-6851.

- Chan, S.H., 2024. Tutorial on Diffusion Models for Imaging and Vision. arXiv preprint arXiv:2403.18103.

- Peebles, W. and Xie, S., 2023. Scalable diffusion models with transformers. In Proceedings of the IEEE/CVF International Conference on Computer Vision, 4195-4205. Link to GitHub Repo.

- Tian, Z., Dew, R. and Iyengar, R., 2023. Mega or Micro? Influencer Selection Using Follower Elasticity. Journal of Marketing Research.

- Ludwig, J. and Mullainathan, S., 2024. Machine learning as a tool for hypothesis generation. Quarterly Journal of Economics, 139(2), 751-827.

- Burnap, A., Hauser, J.R. and Timoshenko, A., 2023. Product aesthetic design: A machine learning augmentation. Marketing Science, 42(6), 1029-1056.

- Course Notes (Lecture 6) for CS294-158-SP24: Deep Unsupervised Learning, taught by Pieter Abbeel, Wilson Yan, Kevin Frans, Philipp Wu. Link to CS294-158-SP24.

- CVPR 2022 Tutorial: Denoising Diffusion-based Generative Modeling: Foundations and Applications, by Karsten Kreis, Ruiqi Gao, and Arash Vahdat Link to the Tutorial

- Lilian Weng (OpenAI)'s Blog on Diffusion Models

- Lilian Weng (OpenAI)'s Blog on Diffusion Models for Video Generation

- Hugging Face Diffusers 🤗 Library

- Hand Written Notes

For Tasks:

Click tags to check more tools for each tasksFor Jobs:

Alternative AI tools for AI-PhD-S24

Similar Open Source Tools

AI-PhD-S24

AI-PhD-S24 is a mono-repo for the PhD course 'AI for Business Research' at CUHK Business School in Spring 2024. The course aims to provide a basic understanding of machine learning and artificial intelligence concepts/methods used in business research, showcase how ML/AI is utilized in business research, and introduce state-of-the-art AI/ML technologies. The course includes scribed lecture notes, class recordings, and covers topics like AI/ML fundamentals, DL, NLP, CV, unsupervised learning, and diffusion models.

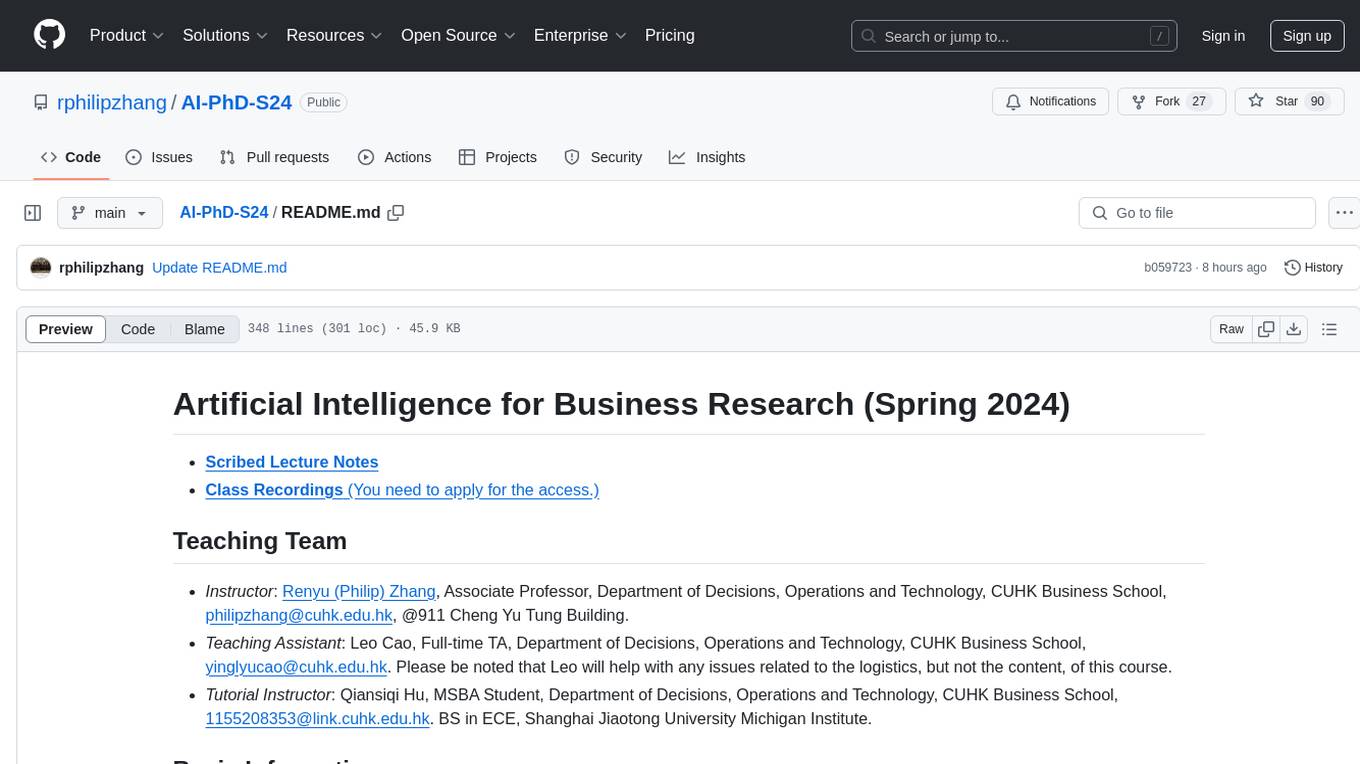

AI-PhD-S25

AI-PhD-S25 is a mono-repo for the DOTE 6635 course on AI for Business Research at CUHK Business School. The course aims to provide a fundamental understanding of ML/AI concepts and methods relevant to business research, explore applications of ML/AI in business research, and discover cutting-edge AI/ML technologies. The course resources include Google CoLab for code distribution, Jupyter Notebooks, Google Sheets for group tasks, Overleaf template for lecture notes, replication projects, and access to HPC Server compute resource. The course covers topics like AI/ML in business research, deep learning basics, attention mechanisms, transformer models, LLM pretraining, posttraining, causal inference fundamentals, and more.

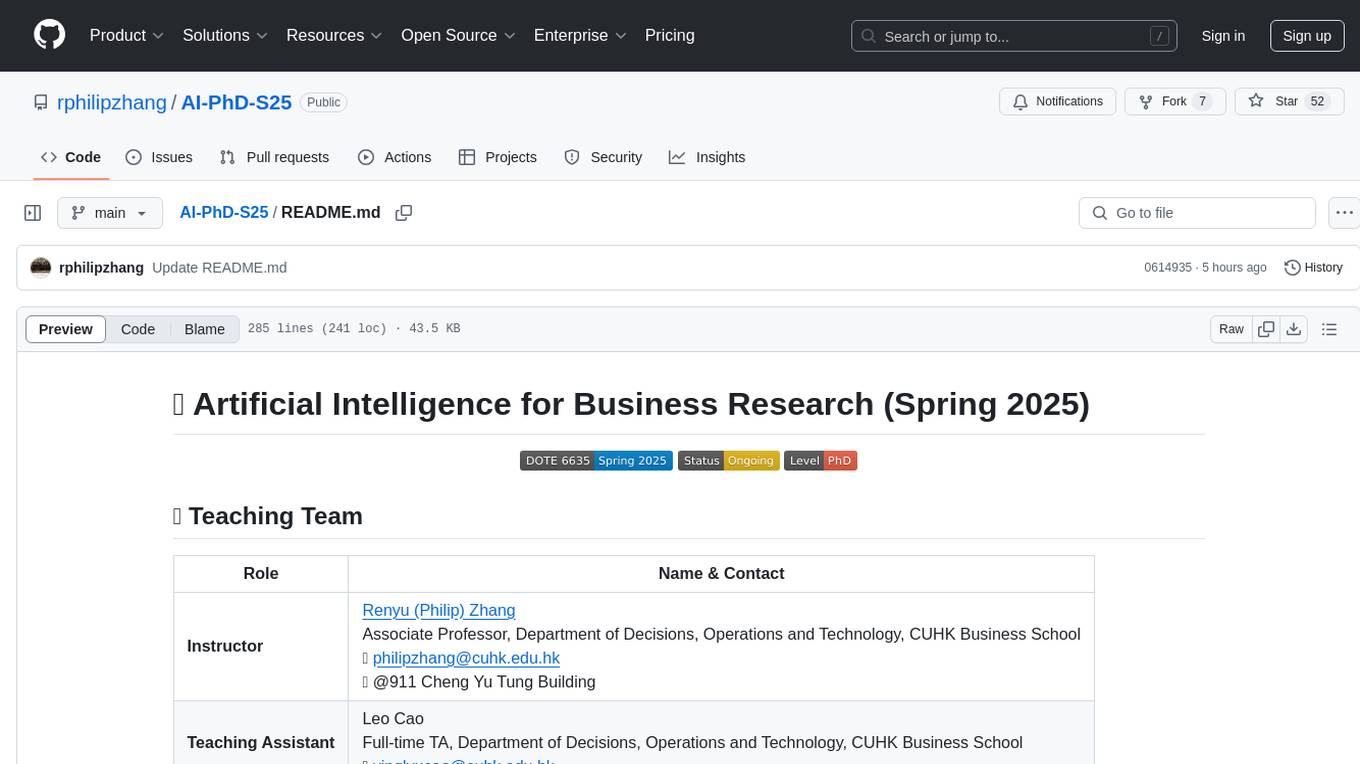

llm-self-correction-papers

This repository contains a curated list of papers focusing on the self-correction of large language models (LLMs) during inference. It covers various frameworks for self-correction, including intrinsic self-correction, self-correction with external tools, self-correction with information retrieval, and self-correction with training designed specifically for self-correction. The list includes survey papers, negative results, and frameworks utilizing reinforcement learning and OpenAI o1-like approaches. Contributions are welcome through pull requests following a specific format.

Time-LLM

Time-LLM is a reprogramming framework that repurposes large language models (LLMs) for time series forecasting. It allows users to treat time series analysis as a 'language task' and effectively leverage pre-trained LLMs for forecasting. The framework involves reprogramming time series data into text representations and providing declarative prompts to guide the LLM reasoning process. Time-LLM supports various backbone models such as Llama-7B, GPT-2, and BERT, offering flexibility in model selection. The tool provides a general framework for repurposing language models for time series forecasting tasks.

SLAM-LLM

SLAM-LLM is a deep learning toolkit for training custom multimodal large language models (MLLM) focusing on speech, language, audio, and music processing. It provides detailed recipes for training and high-performance checkpoints for inference. The toolkit supports various tasks such as automatic speech recognition (ASR), text-to-speech (TTS), visual speech recognition (VSR), automated audio captioning (AAC), spatial audio understanding, and music caption (MC). Users can easily extend to new models and tasks, utilize mixed precision training for faster training with less GPU memory, and perform multi-GPU training with data and model parallelism. Configuration is flexible based on Hydra and dataclass, allowing different configuration methods.

LLM101n

LLM101n is a course focused on building a Storyteller AI Large Language Model (LLM) from scratch in Python, C, and CUDA. The course covers various topics such as language modeling, machine learning, attention mechanisms, tokenization, optimization, device usage, precision training, distributed optimization, datasets, inference, finetuning, deployment, and multimodal applications. Participants will gain a deep understanding of AI, LLMs, and deep learning through hands-on projects and practical examples.

Knowledge-Conflicts-Survey

Knowledge Conflicts for LLMs: A Survey is a repository containing a survey paper that investigates three types of knowledge conflicts: context-memory conflict, inter-context conflict, and intra-memory conflict within Large Language Models (LLMs). The survey reviews the causes, behaviors, and possible solutions to these conflicts, providing a comprehensive analysis of the literature in this area. The repository includes detailed information on the types of conflicts, their causes, behavior analysis, and mitigating solutions, offering insights into how conflicting knowledge affects LLMs and how to address these conflicts.

Prompt4ReasoningPapers

Prompt4ReasoningPapers is a repository dedicated to reasoning with language model prompting. It provides a comprehensive survey of cutting-edge research on reasoning abilities with language models. The repository includes papers, methods, analysis, resources, and tools related to reasoning tasks. It aims to support various real-world applications such as medical diagnosis, negotiation, etc.

awesome-tool-llm

This repository focuses on exploring tools that enhance the performance of language models for various tasks. It provides a structured list of literature relevant to tool-augmented language models, covering topics such as tool basics, tool use paradigm, scenarios, advanced methods, and evaluation. The repository includes papers, preprints, and books that discuss the use of tools in conjunction with language models for tasks like reasoning, question answering, mathematical calculations, accessing knowledge, interacting with the world, and handling non-textual modalities.

AIRS

AIRS is a collection of open-source software tools, datasets, and benchmarks focused on Artificial Intelligence for Science in Quantum, Atomistic, and Continuum Systems. The goal is to develop and maintain an integrated, open, reproducible, and sustainable set of resources to advance the field of AI for Science. The current resources include tools for Quantum Mechanics, Density Functional Theory, Small Molecules, Protein Science, Materials Science, Molecular Interactions, and Partial Differential Equations.

SkyRL

SkyRL is a full-stack RL library that provides components such as 'skyagent' for training long-horizon, real-world agents, 'skyrl-train' for modular RL training, and 'skyrl-gym' for a variety of tool-use tasks. It offers a library of math, coding, search, and SQL environments implemented in the Gymnasium API, optimized for multi-turn tool use LLMs on long-horizon, real-environment tasks.

meridian

Meridian is a tool that provides personalized daily intelligence briefings by scraping news from hundreds of sources, analyzing stories with AI, and delivering concise briefs. It offers key global events, context, implications analysis, and open-source transparency. Built for the curious who seek in-depth news beyond headlines without spending too much time. The tool uses multi-stage LLM processing for article and cluster analysis, smart clustering techniques to group related articles, and a web interface powered by Nuxt 3. The workflow involves scraping RSS feeds, processing articles with Gemini for relevance, clustering articles, and generating a final brief. The project leverages AI models like Gemini, multilingual embeddings, UMAP, and HDBSCAN for analysis. The tech stack includes Turborepo, Cloudflare services, TypeScript, PostgreSQL, and Nuxt 3 with Vue 3 and Tailwind for the frontend.

Awesome-LLM-Reasoning

**Curated collection of papers and resources on how to unlock the reasoning ability of LLMs and MLLMs.** **Description in less than 400 words, no line breaks and quotation marks.** Large Language Models (LLMs) have revolutionized the NLP landscape, showing improved performance and sample efficiency over smaller models. However, increasing model size alone has not proved sufficient for high performance on challenging reasoning tasks, such as solving arithmetic or commonsense problems. This curated collection of papers and resources presents the latest advancements in unlocking the reasoning abilities of LLMs and Multimodal LLMs (MLLMs). It covers various techniques, benchmarks, and applications, providing a comprehensive overview of the field. **5 jobs suitable for this tool, in lowercase letters.** - content writer - researcher - data analyst - software engineer - product manager **Keywords of the tool, in lowercase letters.** - llm - reasoning - multimodal - chain-of-thought - prompt engineering **5 specific tasks user can use this tool to do, in less than 3 words, Verb + noun form, in daily spoken language.** - write a story - answer a question - translate a language - generate code - summarize a document

OmniGibson

OmniGibson is a platform for accelerating Embodied AI research built upon NVIDIA's Omniverse platform. It features photorealistic visuals, physical realism, fluid and soft body support, large-scale high-quality scenes and objects, dynamic kinematic and semantic object states, mobile manipulator robots with modular controllers, and an OpenAI Gym interface. The platform provides a comprehensive environment for researchers to conduct experiments and simulations in the field of Embodied AI.

kornia

Kornia is a differentiable computer vision library for PyTorch. It consists of a set of routines and differentiable modules to solve generic computer vision problems. At its core, the package uses PyTorch as its main backend both for efficiency and to take advantage of the reverse-mode auto-differentiation to define and compute the gradient of complex functions.

LLM4IR-Survey

LLM4IR-Survey is a collection of papers related to large language models for information retrieval, organized according to the survey paper 'Large Language Models for Information Retrieval: A Survey'. It covers various aspects such as query rewriting, retrievers, rerankers, readers, search agents, and more, providing insights into the integration of large language models with information retrieval systems.

For similar tasks

Azure-Analytics-and-AI-Engagement

The Azure-Analytics-and-AI-Engagement repository provides packaged Industry Scenario DREAM Demos with ARM templates (Containing a demo web application, Power BI reports, Synapse resources, AML Notebooks etc.) that can be deployed in a customer’s subscription using the CAPE tool within a matter of few hours. Partners can also deploy DREAM Demos in their own subscriptions using DPoC.

sorrentum

Sorrentum is an open-source project that aims to combine open-source development, startups, and brilliant students to build machine learning, AI, and Web3 / DeFi protocols geared towards finance and economics. The project provides opportunities for internships, research assistantships, and development grants, as well as the chance to work on cutting-edge problems, learn about startups, write academic papers, and get internships and full-time positions at companies working on Sorrentum applications.

tidb

TiDB is an open-source distributed SQL database that supports Hybrid Transactional and Analytical Processing (HTAP) workloads. It is MySQL compatible and features horizontal scalability, strong consistency, and high availability.

zep-python

Zep is an open-source platform for building and deploying large language model (LLM) applications. It provides a suite of tools and services that make it easy to integrate LLMs into your applications, including chat history memory, embedding, vector search, and data enrichment. Zep is designed to be scalable, reliable, and easy to use, making it a great choice for developers who want to build LLM-powered applications quickly and easily.

telemetry-airflow

This repository codifies the Airflow cluster that is deployed at workflow.telemetry.mozilla.org (behind SSO) and commonly referred to as "WTMO" or simply "Airflow". Some links relevant to users and developers of WTMO: * The `dags` directory in this repository contains some custom DAG definitions * Many of the DAGs registered with WTMO don't live in this repository, but are instead generated from ETL task definitions in bigquery-etl * The Data SRE team maintains a WTMO Developer Guide (behind SSO)

mojo

Mojo is a new programming language that bridges the gap between research and production by combining Python syntax and ecosystem with systems programming and metaprogramming features. Mojo is still young, but it is designed to become a superset of Python over time.

pandas-ai

PandasAI is a Python library that makes it easy to ask questions to your data in natural language. It helps you to explore, clean, and analyze your data using generative AI.

databend

Databend is an open-source cloud data warehouse that serves as a cost-effective alternative to Snowflake. With its focus on fast query execution and data ingestion, it's designed for complex analysis of the world's largest datasets.

For similar jobs

NanoLLM

NanoLLM is a tool designed for optimized local inference for Large Language Models (LLMs) using HuggingFace-like APIs. It supports quantization, vision/language models, multimodal agents, speech, vector DB, and RAG. The tool aims to provide efficient and effective processing for LLMs on local devices, enhancing performance and usability for various AI applications.

mslearn-ai-fundamentals

This repository contains materials for the Microsoft Learn AI Fundamentals module. It covers the basics of artificial intelligence, machine learning, and data science. The content includes hands-on labs, interactive learning modules, and assessments to help learners understand key concepts and techniques in AI. Whether you are new to AI or looking to expand your knowledge, this module provides a comprehensive introduction to the fundamentals of AI.

awesome-ai-tools

Awesome AI Tools is a curated list of popular tools and resources for artificial intelligence enthusiasts. It includes a wide range of tools such as machine learning libraries, deep learning frameworks, data visualization tools, and natural language processing resources. Whether you are a beginner or an experienced AI practitioner, this repository aims to provide you with a comprehensive collection of tools to enhance your AI projects and research. Explore the list to discover new tools, stay updated with the latest advancements in AI technology, and find the right resources to support your AI endeavors.

go2coding.github.io

The go2coding.github.io repository is a collection of resources for AI enthusiasts, providing information on AI products, open-source projects, AI learning websites, and AI learning frameworks. It aims to help users stay updated on industry trends, learn from community projects, access learning resources, and understand and choose AI frameworks. The repository also includes instructions for local and external deployment of the project as a static website, with details on domain registration, hosting services, uploading static web pages, configuring domain resolution, and a visual guide to the AI tool navigation website. Additionally, it offers a platform for AI knowledge exchange through a QQ group and promotes AI tools through a WeChat public account.

AI-Notes

AI-Notes is a repository dedicated to practical applications of artificial intelligence and deep learning. It covers concepts such as data mining, machine learning, natural language processing, and AI. The repository contains Jupyter Notebook examples for hands-on learning and experimentation. It explores the development stages of AI, from narrow artificial intelligence to general artificial intelligence and superintelligence. The content delves into machine learning algorithms, deep learning techniques, and the impact of AI on various industries like autonomous driving and healthcare. The repository aims to provide a comprehensive understanding of AI technologies and their real-world applications.

promptpanel

Prompt Panel is a tool designed to accelerate the adoption of AI agents by providing a platform where users can run large language models across any inference provider, create custom agent plugins, and use their own data safely. The tool allows users to break free from walled-gardens and have full control over their models, conversations, and logic. With Prompt Panel, users can pair their data with any language model, online or offline, and customize the system to meet their unique business needs without any restrictions.

ai-demos

The 'ai-demos' repository is a collection of example code from presentations focusing on building with AI and LLMs. It serves as a resource for developers looking to explore practical applications of artificial intelligence in their projects. The code snippets showcase various techniques and approaches to leverage AI technologies effectively. The repository aims to inspire and educate developers on integrating AI solutions into their applications.

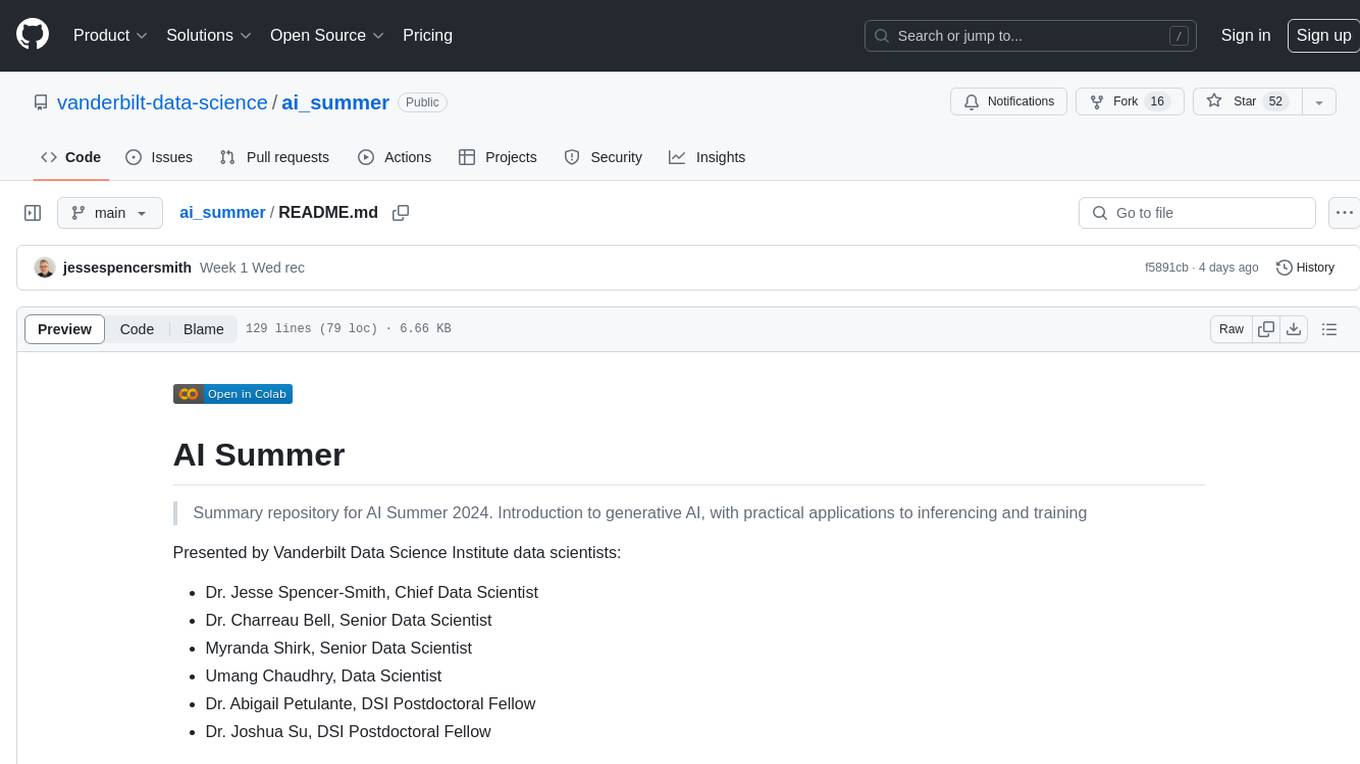

ai_summer

AI Summer is a repository focused on providing workshops and resources for developing foundational skills in generative AI models and transformer models. The repository offers practical applications for inferencing and training, with a specific emphasis on understanding and utilizing advanced AI chat models like BingGPT. Participants are encouraged to engage in interactive programming environments, decide on projects to work on, and actively participate in discussions and breakout rooms. The workshops cover topics such as generative AI models, retrieval-augmented generation, building AI solutions, and fine-tuning models. The goal is to equip individuals with the necessary skills to work with AI technologies effectively and securely, both locally and in the cloud.