AI-PhD-S25

Mono-repo for the PhD course AI for Business Research (DOTE 6635, S25)


AI-PhD-S25 is a mono-repo for the DOTE 6635 course on AI for Business Research at CUHK Business School. The course aims to provide a fundamental understanding of ML/AI concepts and methods relevant to business research, explore applications of ML/AI in business research, and discover cutting-edge AI/ML technologies. The course resources include Google CoLab for code distribution, Jupyter Notebooks, Google Sheets for group tasks, Overleaf template for lecture notes, replication projects, and access to HPC Server compute resource. The course covers topics like AI/ML in business research, deep learning basics, attention mechanisms, transformer models, LLM pretraining, posttraining, causal inference fundamentals, and more.

README:

| Role | Name & Contact |
|---|---|
| Instructor | Renyu (Philip) Zhang, Associate Professor, Department of Decisions, Operations and Technology, CUHK Business School 📧 [email protected] 📍 @911 Cheng Yu Tung Building |
| Teaching Assistant | Leo Cao, Full-time TA, Department of Decisions, Operations and Technology, CUHK Business School 📧 [email protected] |
| Tutorial Instructor | Xinyu Li, PhD Candidate (Management Information Systems), CUHK Business School 📧 [email protected] |

- 🌐 Website: https://github.com/rphilipzhang/AI-PhD-S25

- ⏰ Time: Tuesday, 12:30pm-3:15pm (Jan 14 - Apr 15, 2025)

- Excluding: Jan 28 (Chinese New Year)

- 📍 Location: Wu Ho Man Yuen Building (WMY) 504

Welcome to the mono-repo of DOTE 6635: AI for Business Research at CUHK Business School!

- 🧠 Gain fundamental understanding of ML/AI concepts and methods relevant to business research.

- 💡 Explore applications of ML/AI in business research over the past decade.

- 🚀 Discover and nurture a taste for cutting-edge AI/ML technologies and their potential in your research domain.

Need to join remotely? Use our Zoom link (please seek approval from Philip):

- 🎥 Join Meeting

- Meeting ID: 918 6344 5131

- Passcode: 459761

Most of the code in this course will be distributed through the Google CoLab cloud computing environment to avoid incompatibility and version issues on your own computer. That said, you can always download the Jupyter Notebook from CoLab and run it on your own computer.

- 📚 The Literature References discussed in the slides can be found on this document.

- 📓 The CoLab files of this course can be found at this folder.

- 📊 The Google Sheet to sign up for groups and group tasks can be found here.

- 📝 The overleaf template for scribing the lecture notes of this course can be found here.

- 🔬 The replication projects can be found here.

- 🖥️ The HPC Server compute resource of the CUHK DOT Department can be found here.

If you have any feedback on this course, please contact Philip directly at [email protected] and we will try our best to address it.

- 🗂️ GitHub Repos: Spring 2024@CUHK, Summer 2024@SJTU Antai

- 🎥 Video Recordings (You need to apply for access): Spring 2024@CUHK, Summer 2024@SJTU Antai

- 📝 Scribed Notes: Spring 2024@CUHK

Subject to modifications. All classes start at 12:30pm and end at 3:15pm.

| Session | Date | Topic | Key Words |
|---|---|---|---|
| 1 | 1.14 | AI/ML in a Nutshell | Course Intro, Prediction in Biz Research |
| 2 | 1.21 | Intro to DL | ML Model Evaluations, DL Intro, Neural Nets |
| 3 | 2.04 | LLM (I) | DL Computations, Attention Mechanism |
| 4 | 2.11 | LLM (II) | Transformer, ViT, DiT |
| 5 | 2.18 | LLM (III) | BERT, GPT |
| 6 | 2.25 | LLM (IV) | LLM Pre-training, DL Computations |
| 7 | 3.04 | LLM (V) | Post-training, Fine-tuning, RLHF, Test-Time Scaling, Inference, Quantization |
| 8 | 3.11 | LLM (VI) | Agentic AI, AI as Human Simulators, Applications in Business Research |
| 9 | 3.18 | Causal (I) | Causal Inference Intro, RCT |
| 10 | 3.25 | Causal (II) | IPW, AIPW, Double Machine Learning, Neyman Orthogonality |
| 11 | 4.01 | Causal (III) | Partial Linear Models, Double Machine Learning |
| 12 | 4.08 | Causal (IV) | Double Machine Learning, Neyman Orthogonality |
| 13 | 4.15 | Causal (V) | Causal Trees, Causal Forests, Course Wrap-up |
| 13+ | Summer | Course Remake | Synthetic Control, Matrix Completion, LLM x Causal Inference, Interference, etc. |

All problem sets are due at 12:30pm right before class.

| Date | Time | Event | Note |
|---|---|---|---|
| 1.15 | 11:59pm | Group Sign-Ups | Each group has at most two students. |
| 1.17 | 7:00pm-9:00pm | Python Tutorial | Given by Xinyu Li, Python Tutorial CoLab |
| 1.24 | 7:00pm-9:00pm | PyTorch and DOT HPC Server Tutorial | Given by Xinyu Li, PyTorch Tutorial CoLab |
| 3.04 | 9:00am-6:00pm | Final Project Discussion | Please schedule a meeting with Philip. |
| 3.11 | 12:30pm | Final Project Proposal | 1-page maximum |
| 4.30 | 11:59pm | Scribed Lecture Notes | Overleaf link |
| 5.11 | 11:59pm | Project Paper, Slides, and Code | Paper page limit: 10 |

Find more on the Syllabus and the literature references discussed in the slides.

- 📖 Books:

- 🎓 Courses:

- Foundations: ML Intro by Andrew Ng, DL Intro by Andrew Ng, Generative AI by Andrew Ng, Introduction to Causal Inference by Brady Neal

- Advanced Technologies: NLP (CS224N) by Chris Manning, CV (CS231N) by Fei-Fei Li, Deep Unsupervised Learning by Pieter Abbeel, DLR by Sergey Levine, DL Theory by Matus Telgarsky, LLM by Danqi Chen, LLM from Scratch (CS336) by Percy Liang, Efficient Deep Learning Computing by Song Han, Deep Generative Models by Kaiming He, LLM Agents by Dawn Song, Advanced LLM Agents by Dawn Song, Data, Learning, and Algorithms by Tengyuan Liang, Hands-on DRL by Weinan Zhang, Jian Shen, and Yu Yong (in Chinese), Understanding LLM: Foundations and Safety by Dawn Song

- Biz/Econ Applications of AI: Machine Learning and Big Data by Melissa Dell and Matthew Harding, Digital Economics and the Economics of AI by Martin Beraja, Chiara Farronato, Avi Goldfarb, and Catherine Tucker, Generative AI and Causal Inference with Texts, NLP for Computational Social Science by Diyi Yang

- 💡 Tutorials and Blogs:

- GitHub of Andrej Karpathy, Blog of Lilian Weng, Double Machine Learning Package Documentation, Causality and Deep Learning (ICML 2022 Tutorial), Causal Inference and Machine Learning (KDD 2021 Tutorial), Online Causal Inference Seminar, Training a Chinese LLM from Scratch (in Chinese), Physics of Language Models (ICML 2024 Tutorial), Counterfactual Learning and Evaluation for Recommender Systems: Foundations, Implementations, and Recent Advances (RecSys 2021 Tutorial), Language Agents: Foundations, Prospects, and Risks (EMNLP 2024 Tutorial), GitHub Repo: Upgrading Cursor to Devin

The following schedule is tentative and subject to changes.

- 🔑 Keywords: Course Introduction, Prediction in Biz Research, Basic ML Models

- 📊 Slides: Course Intro, Prediction, ML Intro

- 💻 CoLab Notebook Demos: Bootstrap, k-Nearest Neighbors, Decision Tree, Random Forest, Gradient Boosting Tree

- ✍️ Homework: Problem Set 1 - Housing Price Prediction, due at 12:30pm, Feb/4/2025

- 🎓 Online Python Tutorial: Python Tutorial CoLab, 7:00pm-9:00pm, Jan/17/2025 (Friday), given by Xinyu Li, [email protected]. Zoom Link, Meeting ID: 939 4486 4920, Passcode: 456911

- 📚 References:

- The Elements of Statistical Learning (2nd Edition), 2009, by Trevor Hastie, Robert Tibshirani, Jerome Friedman, link to ESL.

- Probabilistic Machine Learning: An Introduction, 2022, by Kevin Murphy, link to PML.

- Mullainathan, Sendhil, and Jann Spiess. 2017. Machine learning: an applied econometric approach. Journal of Economic Perspectives 31(2): 87-106.

- Athey, Susan, and Guido W. Imbens. 2019. Machine learning methods that economists should know about. Annual Review of Economics 11: 685-725.

- Kleinberg, Jon, Jens Ludwig, Sendhil Mullainathan, and Ziad Obermeyer. 2015. Prediction policy problems. American Economic Review 105(5): 491-495.

- Hofman, Jake M., et al. 2021. Integrating explanation and prediction in computational social science. Nature 595.7866: 181-188.

- Bastani, Hamsa, Dennis Zhang, and Heng Zhang. 2022. Applied machine learning in operations management. Innovative Technology at the Interface of Finance and Operations. Springer: 189-222.

- Kelly, Brian, and Dacheng Xiu. 2023. Financial machine learning, SSRN, link to the paper.

- The Bitter Lesson, by Rich Sutton, which lays out what is so far the most critical insight of AI: "The biggest lesson that can be read from 70 years of AI research is that general methods that leverage computation are ultimately the most effective, and by a large margin."

- Chapters 1 & 3.2, Scribed Notes of Spring 2024 Course Offering.
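
As a rough illustration of the bootstrap idea listed in this session's notebook demos, the sketch below resamples a synthetic sample with replacement and compares the bootstrap standard error of the mean with the textbook formula. It is not the course notebook: the function name `bootstrap_se`, the synthetic `prices` data, and the number of replicates are all assumptions made for illustration.

```python
import random
import statistics


def bootstrap_se(data, stat=statistics.mean, n_boot=2000, seed=0):
    """Estimate the standard error of `stat` by resampling `data` with replacement."""
    rng = random.Random(seed)
    n = len(data)
    replicates = []
    for _ in range(n_boot):
        # One bootstrap sample: n draws with replacement from the original data.
        sample = [data[rng.randrange(n)] for _ in range(n)]
        replicates.append(stat(sample))
    # The spread of the replicated statistic approximates its sampling variability.
    return statistics.stdev(replicates)


# Synthetic data (an assumption for illustration, not course data).
data_rng = random.Random(42)
prices = [data_rng.gauss(100.0, 15.0) for _ in range(200)]

se_boot = bootstrap_se(prices)
se_formula = statistics.stdev(prices) / len(prices) ** 0.5
print(f"bootstrap SE: {se_boot:.3f}, formula SE: {se_formula:.3f}")
```

For the sample mean the two numbers should be close; the bootstrap becomes more useful for statistics that have no simple closed-form standard error, such as a median.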

- 🔑 Keywords: Bias-Variance Trade-off, Cross Validation, Bootstrap, Neural Nets, Computational Issues of Deep Learning

- 📊 Slides: ML Intro, DL Intro

- 💻 CoLab Notebook Demos: Gradient Descent, Chain Rule, He Initialization

- ✍️ Homework: Problem Set 2: Implementing Neural Nets, due at 12:30pm, Feb/11/2025

- 🎓 Online PyTorch and DOT HPC Server Tutorial: PyTorch Tutorial CoLab, 7:00pm-9:00pm, Jan/24/2025 (Friday), given by Xinyu Li, [email protected]. Zoom Link, Meeting ID: 939 4486 4920, Passcode: 456911

- 📚 References:

- Deep Learning, 2016, by Ian Goodfellow, Yoshua Bengio and Aaron Courville, link to DL.

- Dive into Deep Learning (2nd Edition), 2023, by Aston Zhang, Zack Lipton, Mu Li, and Alex J. Smola, link to d2dl.

- Probabilistic Machine Learning: Advanced Topics, 2023, by Kevin Murphy, link to PML2.

- Deep Learning with PyTorch, 2020, by Eli Stevens, Luca Antiga, and Thomas Viehmann.

- Dell, Melissa. 2024. Deep learning for economists. Journal of Economic Literature, forthcoming, link to the paper.

- Davies, A., Veličković, P., Buesing, L., Blackwell, S., Zheng, D., Tomašev, N., Tanburn, R., Battaglia, P., Blundell, C., Juhász, A. and Lackenby, M., 2021. Advancing mathematics by guiding human intuition with AI. Nature, 600(7887), pp.70-74.

- Ye, Z., Zhang, Z., Zhang, D., Zhang, H. and Zhang, R.P., 2023. Deep-Learning-Based Causal Inference for Large-Scale Combinatorial Experiments: Theory and Empirical Evidence. Available at SSRN 4375327, link to the paper.

- Luyang Chen, Markus Pelger, Jason Zhu (2023) Deep Learning in Asset Pricing. Management Science 70(2):714-750.

- Wang, Z., Gao, R. and Li, S. 2024. Neural-Network Mixed Logit Choice Model: Statistical and Optimality Guarantees. Working paper.

- Why Does Adam Work So Well? (in Chinese), Overview of gradient descent algorithms

- Chapters 1 & 2, Scribed Notes of Spring 2024 Course Offering.

- 🔑 Keywords: Deep Learning Computations, Seq2Seq, Attention Mechanism, Transformer

- 📊 Slides: What's New, DL Intro, Transformer

- 💻 CoLab Notebook Demos: Dropout, Micrograd, Attention Mechanism

- ✍️ Homework: Problem Set 2: Implementing Neural Nets, due at 12:30pm, Feb/11/2025

- 📝 Presentation of Replication Project: By Jiaci Yi and Yachong Wang

- Gui, G. and Toubia, O., 2023. The challenge of using LLMs to simulate human behavior: A causal inference perspective. arXiv:2312.15524. Link to the paper. Replication Report, Code, and Slides.

- 📚 References:

- Deep Learning, 2016, by Ian Goodfellow, Yoshua Bengio and Aaron Courville, link to DL.

- Dive into Deep Learning (2nd Edition), 2023, by Aston Zhang, Zack Lipton, Mu Li, and Alex J. Smola, link to d2dl.

- Dell, Melissa. 2024. Deep learning for economists. Journal of Economic Literature, forthcoming, link to the paper.

- Sutskever, Ilya, Oriol Vinyals, and Quoc V. Le. 2014. Sequence to sequence learning with neural networks. Advances in neural information processing systems, 27.

- Bahdanau, Dzmitry, Kyunghyun Cho, and Yoshua Bengio. 2015. Neural machine translation by jointly learning to align and translate. ICLR

- Lecture Notes and Slides for CS224n: Natural Language Processing with Deep Learning, by Christopher D. Manning, Diyi Yang, and Tatsunori Hashimoto. Link to CS224n.

- Parameter Initialization and Batch Normalization (in Chinese), GPU Comparisons, GitHub Repo for Micrograd by Andrej Karpathy.

- RNN and LSTM Visualizations, PyTorch's Tutorial of Seq2Seq for Machine Translation.

- Chapters 2 & 6, Scribed Notes of Spring 2024 Course Offering.

- Handwritten Notes
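
For readers who want a concrete picture of the attention mechanism covered in this session, here is a minimal pure-Python sketch of scaled dot-product attention in the sense of Vaswani et al. (2017). It is an illustrative sketch rather than the course's CoLab demo: the helper names and the toy matrices `Q`, `K`, `V` are assumptions, and a real implementation would use batched tensor operations in PyTorch.

```python
import math


def softmax(scores):
    """Numerically stable softmax over a list of floats."""
    top = max(scores)
    exps = [math.exp(s - top) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def attention(queries, keys, values):
    """Scaled dot-product attention: softmax(Q K^T / sqrt(d_k)) V, row by row."""
    d_k = len(keys[0])
    outputs, weights = [], []
    for q in queries:
        # Similarity of this query with every key, scaled by sqrt(d_k).
        scores = [sum(qi * ki for qi, ki in zip(q, k)) / math.sqrt(d_k) for k in keys]
        w = softmax(scores)
        # Output is the attention-weighted average of the value vectors.
        out = [sum(wj * v[c] for wj, v in zip(w, values)) for c in range(len(values[0]))]
        weights.append(w)
        outputs.append(out)
    return outputs, weights


# Toy inputs (hypothetical): 2 queries, 3 key/value pairs, dimension 2.
Q = [[1.0, 0.0], [0.0, 1.0]]
K = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
V = [[1.0, 2.0], [3.0, 4.0], [5.0, 6.0]]

outputs, weights = attention(Q, K, V)
for w, o in zip(weights, outputs):
    print([round(x, 3) for x in w], [round(x, 3) for x in o])
```

Each row of `weights` sums to one, so every output is a convex combination of the value vectors; the first query attends equally to the first and third keys because both have the same dot product with it.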

- 🔑 Keywords: Transformer, ViT, DiT, Decision Transformer

- 📊 Slides: What's New, Transformer

- 💻 CoLab Notebook Demos: Attention Mechanism, Transformer

- ✍️ Homework: Problem Set 3: Sentiment Analysis with BERT, due at 12:30pm, Mar/4/2025

- 📝 Presentation of Replication Project: By Xiqing Qin and Yuxin Chen

- Manning, B.S., Zhu, K. and Horton, J.J., 2024. Automated social science: Language models as scientists and subjects (No. w32381). National Bureau of Economic Research. Link to the paper, link to GitHub Repo. Replication Report, Code, and Slides.

- 📚 References:

- Vaswani, A., Shazeer, N., Parmar, N., Uszkoreit, J., Jones, L., Gomez, A. N., ... and Polosukhin, I. (2017). Attention is all you need. Advances in neural information processing systems, 30.

- Qi, Meng, Yuanyuan Shi, Yongzhi Qi, Chenxin Ma, Rong Yuan, Di Wu, Zuo-Jun (Max) Shen. 2023. A Practical End-to-End Inventory Management Model with Deep Learning. Management Science, 69(2): 759-773.

- Sarzynska-Wawer, Justyna, Aleksander Wawer, Aleksandra Pawlak, Julia Szymanowska, Izabela Stefaniak, Michal Jarkiewicz, and Lukasz Okruszek. 2021. Detecting formal thought disorder by deep contextualized word representations. Psychiatry Research, 304, 114135.

- Hansen, Stephen, Peter J. Lambert, Nicholas Bloom, Steven J. Davis, Raffaella Sadun, and Bledi Taska. 2023. Remote work across jobs, companies, and space (No. w31007). National Bureau of Economic Research.

- Chapter 11, Dive into Deep Learning (2nd Edition), 2023, by Aston Zhang, Zack Lipton, Mu Li, and Alex J. Smola, link to d2dl.

- Lecture Notes and Slides for CS224n: Natural Language Processing with Deep Learning, by Christopher D. Manning, Diyi Yang, and Tatsunori Hashimoto. Link to CS224n.

- Part 2, Slides for COS 597G: Understanding Large Language Models, by Danqi Chen. Link to COS 597G

- Illustrated Transformer, Transformer from Scratch with the Code on GitHub.

- Andrej Karpathy's Lecture: Deep Dive into LLM

- Chapter 7, Scribed Notes of Spring 2024 Course Offering.

- Handwritten Notes

- 🔑 Keywords: Pretraining, Scaling Law, BERT, GPT, DeepSeek

- 📊 Slides: What's New, Pretraining

- 💻 CoLab Notebook Demos: Attention Mechanism, Transformer

- ✍️ Homework: Problem Set 3: Sentiment Analysis with BERT, due at 12:30pm, Mar/4/2025

- 📝 Presentation of Replication Project: By Guohao Li and Jin Wang

- Li, P., Castelo, N., Katona, Z. and Sarvary, M., 2024. Frontiers: Determining the validity of large language models for automated perceptual analysis. Marketing Science, 43(2), pp.254-266. Link to the paper. Link to the replication package. Replication Report, Code, and Slides.

- 📚 References:

- Devlin, Jacob, Ming-Wei Chang, Kenton Lee, Kristina Toutanova. 2018. BERT: Pre-training of deep bidirectional transformers for language understanding. ArXiv preprint arXiv:1810.04805. GitHub Repo

- Radford, Alec, Karthik Narasimhan, Tim Salimans, and Ilya Sutskever. 2018. Improving language understanding by generative pre-training, (GPT-1) PDF link, GitHub Repo

- Radford, Alec, Jeffrey Wu, Rewon Child, David Luan, Dario Amodei, Ilya Sutskever. 2019. Language models are unsupervised multitask learners. OpenAI blog, 1(8), 9. (GPT-2) PDF Link, GitHub Repo

- Brown, Tom, et al. 2020. Language models are few-shot learners. Advances in neural information processing systems, 33, 1877-1901. (GPT-3) GitHub Repo

- DeepSeek-AI, 2024. Deepseek-V3 Technical Report. arXiv:2412.19437. GitHub Repo

- Huang, Allen H., Hui Wang, and Yi Yang. 2023. FinBERT: A large language model for extracting information from financial text. Contemporary Accounting Research, 40(2): 806-841. (FinBERT) GitHub Repo

- Gorodnichenko, Y., Pham, T. and Talavera, O., 2023. The voice of monetary policy. American Economic Review, 113(2), pp.548-584.

- Reisenbichler, Martin, Thomas Reutterer, David A. Schweidel, and Daniel Dan. 2022. Frontiers: Supporting content marketing with natural language generation. Marketing Science, 41(3): 441-452.

- Books, Notes, and Courses: Chapter 11.9 of Dive into Deep Learning, Part 9 of CS224N: Natural Language Processing with Deep Learning, Part 2 & 4 of COS 597G: Understanding Large Language Models, Part 3 & 4 of CS336: Large Language Models from Scratch, Chapter 8 of Scribed Notes for Spring 2024 Course Offering, Handwritten Notes.

- Andrej Karpathy's Lectures: Build GPT-2 (124M) from Scratch GitHub Repo for NanoGPT, Deep Dive into LLM, Build the GPT Tokenizer

- Miscellaneous Resources: CS224n, Hugging Face 🤗 Tutorial, A Visual Guide to BERT, LLM Visualization, How GPT-3 Works, Video on DeepSeek MoE, TikTokenizer, Inference with Base LLM

- 🔑 Keywords: Posttraining, Instruct GPT, SFT, RLHF, DPO, Test-Time Scaling, Knowledge Distillation

- 📊 Slides: What's New, Pretraining, Posttraining

- 💻 CoLab Notebook Demos: BERT API @ Hugging Face 🤗, BERT Finetuning

- ✍️ Homework: Problem Set 4: Finetuning LLM, due at 12:30pm, Mar/18/2025

- 📝 Presentation of Replication Project: By Di Wu and Chuchu Sun

- Radford, Alec, Jeffrey Wu, Rewon Child, David Luan, Dario Amodei, Ilya Sutskever. 2019. Language models are unsupervised multitask learners. OpenAI blog, 1(8), 9. (GPT-2) PDF Link, GitHub Repo, Replication Report, Code, and Slides.

- 📚 References:

- Ouyang, Long, et al. 2022. Training language models to follow instructions with human feedback. Advances in Neural Information Processing Systems, 35, 27730-27744.

- Chu, T., Zhai, Y., Yang, J., Tong, S., Xie, S., Schuurmans, D., Le, Q.V., Levine, S. and Ma, Y., 2025. Sft memorizes, rl generalizes: A comparative study of foundation model post-training. arXiv preprint arXiv:2501.17161.

- Wei, Jason, et al. 2022. Chain-of-thought prompting elicits reasoning in large language models. Advances in Neural Information Processing Systems, 35, 24824-24837.

- Yao, S., Yu, D., Zhao, J., Shafran, I., Griffiths, T., Cao, Y. and Narasimhan, K., 2023. Tree of thoughts: Deliberate problem solving with large language models. Advances in neural information processing systems, 36, pp.11809-11822.

- Brynjolfsson, E., Li, D. and Raymond, L., 2025. Generative AI at work. The Quarterly Journal of Economics, p.qjae044.

- Hinton, G., Vinyals, O. and Dean, J., 2015. Distilling the knowledge in a neural network. arXiv preprint arXiv:1503.02531.

- Books, Notes, and Courses: Part 10 of CS224N: Natural Language Processing with Deep Learning, Talk @ Stanford by Barret Zoph and John Schulman, Part 3, 4, 9 & 10 of CS336: Large Language Models from Scratch, Part 9 & 14 of MIT 6.5940: TinyML and Efficient Deep Learning Computing, Chapter 9 of Scribed Notes for Spring 2024 Course Offering, Handwritten Notes.

- Andrej Karpathy's Lectures: Build GPT-2 (124M) from Scratch GitHub Repo for NanoGPT, Deep Dive into LLM, Build the GPT Tokenizer

- Miscellaneous Resources: CS224n, Hugging Face 🤗 Tutorial, LLM Visualization, How GPT-3 Works, Video on DeepSeek MoE, Video on DeepSeek Native Sparse Attention, TikTokenizer, Inference with Base LLM

- 🔑 Keywords: Posttraining, SFT, PEFT, RLHF, DPO

- 📊 Slides: What's New, What's Next, Posttraining

- 💻 CoLab Notebook Demos: LLM Finetuning, Quantization

- ✍️ Homework: Problem Set 4: Finetuning LLM, due at 12:30pm, Mar/18/2025

- 📝 No presentation of replication project this week.

- 📚 References:

- Chu, T., Zhai, Y., Yang, J., Tong, S., Xie, S., Schuurmans, D., Le, Q.V., Levine, S. and Ma, Y., 2025. Sft memorizes, rl generalizes: A comparative study of foundation model post-training. arXiv preprint arXiv:2501.17161.

- Wei, Jason, et al. 2022. Chain-of-thought prompting elicits reasoning in large language models. Advances in Neural Information Processing Systems, 35, 24824-24837.

- Yao, S., Yu, D., Zhao, J., Shafran, I., Griffiths, T., Cao, Y. and Narasimhan, K., 2023. Tree of thoughts: Deliberate problem solving with large language models. Advances in neural information processing systems, 36, pp.11809-11822.

- Brynjolfsson, E., Li, D. and Raymond, L., 2025. Generative AI at work. The Quarterly Journal of Economics, p.qjae044.

- Hinton, G., Vinyals, O. and Dean, J., 2015. Distilling the knowledge in a neural network. arXiv preprint arXiv:1503.02531.

- Muennighoff, N., Yang, Z., Shi, W., Li, X.L., Fei-Fei, L., Hajishirzi, H., Zettlemoyer, L., Liang, P., Candès, E. and Hashimoto, T., 2025. s1: Simple test-time scaling. arXiv preprint arXiv:2501.19393.

- Books, Notes, and Courses: Part 10 & 11 of CS224N: Natural Language Processing with Deep Learning, Talk @ Stanford by Barret Zoph and John Schulman, Part 15 & 16 of CS336: Large Language Models from Scratch, Part 5, 6, 9 & 14 of MIT 6.5940: TinyML and Efficient Deep Learning Computing, Finetuning LLMs, Quantization Fundamentals, Chapter 9 of Scribed Notes for Spring 2024 Course Offering, Handwritten Note.

- Andrej Karpathy's Lectures: Build GPT-2 (124M) from Scratch GitHub Repo for NanoGPT, Deep Dive into LLM, How to Use LLM, Build the GPT Tokenizer

- Miscellaneous Resources: Video on DeepSeek R1, Video on DeepSeek MLA, Approximating KL Divergence, DeepSeek Open-Infra Repo

- 🔑 Keywords: Test-Time Scaling, Knowledge Distillation, Inference, Quantization, LLM Evaluations, LLM Agents

- 📊 Slides: What's New, What's Next, Posttraining, Inference, Research Tools

- 💻 CoLab Notebook Demos: Quantization

- ✍️ Homework: Problem Set 4: Finetuning LLM, due at 12:30pm, Mar/18/2025

- 📝 Presentation of Replication Project: By Tao Wang and Zhe Liu

- Jens Ludwig, Sendhil Mullainathan, Machine Learning as a Tool for Hypothesis Generation, The Quarterly Journal of Economics, Volume 139, Issue 2, May 2024, Pages 751–827, link to the paper, Replication Report, Code, and Slides.

- 📚 References:

- Ouyang, Long, et al. 2022. Training language models to follow instructions with human feedback. Advances in Neural Information Processing Systems, 35, 27730-27744.

- Chu, T., Zhai, Y., Yang, J., Tong, S., Xie, S., Schuurmans, D., Le, Q.V., Levine, S. and Ma, Y., 2025. Sft memorizes, rl generalizes: A comparative study of foundation model post-training. arXiv preprint arXiv:2501.17161.

- Wei, Jason, et al. 2022. Chain-of-thought prompting elicits reasoning in large language models. Advances in Neural Information Processing Systems, 35, 24824-24837.

- Yao, S., Yu, D., Zhao, J., Shafran, I., Griffiths, T., Cao, Y. and Narasimhan, K., 2023. Tree of thoughts: Deliberate problem solving with large language models. Advances in neural information processing systems, 36, pp.11809-11822.

- Brynjolfsson, E., Li, D. and Raymond, L., 2025. Generative AI at work. The Quarterly Journal of Economics, p.qjae044.

- Hinton, G., Vinyals, O. and Dean, J., 2015. Distilling the knowledge in a neural network. arXiv preprint arXiv:1503.02531.

- Muennighoff, N., Yang, Z., Shi, W., Li, X.L., Fei-Fei, L., Hajishirzi, H., Zettlemoyer, L., Liang, P., Candès, E. and Hashimoto, T., 2025. s1: Simple test-time scaling. arXiv preprint arXiv:2501.19393.

- Park, J.S., O'Brien, J., Cai, C.J., Morris, M.R., Liang, P. and Bernstein, M.S., 2023, October. Generative agents: Interactive simulacra of human behavior. In Proceedings of the 36th annual acm symposium on user interface software and technology (pp. 1-22).

- Ties de Kok (2025) ChatGPT for Textual Analysis? How to Use Generative LLMs in Accounting Research. Management Science forthcoming. GitHub Link

- Books, Notes, and Courses: Part 11 & 12 of CS224N: Natural Language Processing with Deep Learning, Talk @ Stanford by Barret Zoph and John Schulman, Part 16 & 17 of CS336: Large Language Models from Scratch, Part 5, 6, 9 & 14 of MIT 6.5940: TinyML and Efficient Deep Learning Computing, Berkeley LLM Agents, Finetuning LLMs, Quantization Fundamentals, Building and Evaluating Advanced RAG, RLHF Short Course, Chapter 9 of Scribed Notes for Spring 2024 Course Offering, Handwritten Note.

- Andrej Karpathy's Lectures: Build GPT-2 (124M) from Scratch GitHub Repo for NanoGPT, Deep Dive into LLM, How to Use LLM, Build the GPT Tokenizer

- Miscellaneous Resources: Video on DeepSeek R1, Video on DeepSeek MLA, Approximating KL Divergence, DeepSeek Open-Infra Repo, Language Agents: Foundations, Prospects, and Risks (EMNLP 2024 Tutorial)

- 🔑 Keywords: Potential Outcomes Model, RCT, Unconfoundedness, IPW, AIPW

- 📊 Slides: What's New, What's Next, Causal Inference

- 💻 CoLab Notebook Demos: Causal Inference under Unconfoundedness

- ✍️ Homework: Problem Set 5: Bias and Variance with Mis-specified Linear Regression, due at 12:30pm, Apr/1/2025

- 📝 Presentation of Replication Project: By Keming Li and Qilin Huang

- Costello, T.H., Pennycook, G. and Rand, D.G., 2024. Durably reducing conspiracy beliefs through dialogues with AI. Science, 385(6714), p.eadq1814, link to the paper, Replication Report, Code, and Slides.

- 📚 References:

- Rubin, D.B., 1974. Estimating causal effects of treatments in randomized and nonrandomized studies. Journal of educational Psychology, 66(5), p.688.

- Rosenbaum, P.R. and Rubin, D.B., 1983. The central role of the propensity score in observational studies for causal effects. Biometrika, 70(1), pp.41-55.

- Li, F., Morgan, K.L. and Zaslavsky, A.M., 2018. Balancing covariates via propensity score weighting. Journal of the American Statistical Association, 113(521), pp.390-400.

- Robins, J.M., Rotnitzky, A. and Zhao, L.P., 1994. Estimation of regression coefficients when some regressors are not always observed. Journal of the American statistical Association, 89(427), pp.846-866.

- Books, Notes, and Courses: Chapters 1, 2, & 3 of Causal Inference: A Statistical Learning Approach, Chapters 2 & 5 of Applied Causal Inference Powered by ML and AI, Chapters 2 & 3 of Duke STA 640: Causal Inference, Handwritten Note

- Miscellaneous Resources: Videos of Stanford ECON 293 Machine Learning and Causal Inference, AEA Continuing Education on Machine Learning and Econometrics

- 🔑 Keywords: IPW, AIPW, root-n consistency, DML

- 📊 Slides: What's New, What's Next, Causal Inference, Double Machine Learning (Incomplete)

- 💻 CoLab Notebook Demos: Causal Inference under Unconfoundedness, IPW vs. AIPW

- ✍️ Homework: Problem Set 5: Bias and Variance with Mis-specified Linear Regression, due at 12:30pm, Apr/1/2025; Problem Set 6: Double Machine Learning, due at 12:30pm, Apr/8/2025

- 📝 Presentation of Replication Project: By Zizhou Zhang and Lin Ma

- Ye, Z., Zhang, Z., Zhang, D., Zhang, H. and Zhang, R.P., 2023. Deep-learning-based causal inference for large-scale combinatorial experiments: Theory and empirical evidence. Management Science, forthcoming. Link to the Paper, Link to the Code, Replication Report, Code, and Slides.

- 📚 References:

- Imbens, G. and Xu, Y., 2024. Lalonde (1986) after nearly four decades: Lessons learned. arXiv preprint arXiv:2406.00827. Link to GitHub

- Rosenbaum, P.R. and Rubin, D.B., 1983. The central role of the propensity score in observational studies for causal effects. Biometrika, 70(1), pp.41-55.

- Li, F., Morgan, K.L. and Zaslavsky, A.M., 2018. Balancing covariates via propensity score weighting. Journal of the American Statistical Association, 113(521), pp.390-400.

- Robins, J.M., Rotnitzky, A. and Zhao, L.P., 1994. Estimation of regression coefficients when some regressors are not always observed. Journal of the American statistical Association, 89(427), pp.846-866.

- Dudík, M., Erhan, D., Langford, J. and Li, L., 2014. Doubly robust policy evaluation and optimization. Statistical Science, 29(4): 485-511.

- Books, Notes, and Courses: Chapters 2, 3, 14 of Causal Inference: A Statistical Learning Approach, Chapters 2, 5 of Applied Causal Inference Powered by ML and AI, Chapters 2, 3 of Duke STA 640: Causal Inference, Handwritten Note

- Miscellaneous Resources: Videos of Stanford ECON 293 Machine Learning and Causal Inference, AEA Continuing Education on Machine Learning and Econometrics, DML Package, Slides on DML
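
To make the IPW and AIPW keywords of this session concrete, the following sketch compares a naive difference in means, an IPW estimate, and an AIPW estimate on simulated data where unconfoundedness holds given a binary covariate. Everything here is an assumption for illustration and not the course's "IPW vs. AIPW" notebook: the data-generating process, the true ATE of 1.0, the use of the known propensity score, and the cell-mean outcome model.

```python
import random


def simulate(n, seed=0):
    """Simulate (x, e, t, y): binary confounder x, known propensity e(x), true ATE = 1.0."""
    rng = random.Random(seed)
    rows = []
    for _ in range(n):
        x = 1 if rng.random() < 0.5 else 0
        e = 0.7 if x == 1 else 0.3           # treatment is more likely when x = 1
        t = 1 if rng.random() < e else 0
        y = 1.0 * t + 2.0 * x + rng.gauss(0.0, 1.0)
        rows.append((x, e, t, y))
    return rows


def mean(values):
    values = list(values)
    return sum(values) / len(values)


def naive_diff(rows):
    """Difference in means, which ignores confounding by x."""
    return mean(y for _, _, t, y in rows if t == 1) - mean(y for _, _, t, y in rows if t == 0)


def ipw(rows):
    """Inverse propensity weighting with the known propensity score."""
    return mean(t * y / e - (1 - t) * y / (1 - e) for _, e, t, y in rows)


def aipw(rows):
    """Augmented IPW: outcome-model prediction plus an IPW correction of its residuals."""
    # Outcome model: the sample mean of y within each (x, t) cell.
    mu = {(x, t): mean(y for xx, _, tt, y in rows if xx == x and tt == t)
          for x in (0, 1) for t in (0, 1)}
    return mean(
        mu[(x, 1)] - mu[(x, 0)]
        + t * (y - mu[(x, 1)]) / e
        - (1 - t) * (y - mu[(x, 0)]) / (1 - e)
        for x, e, t, y in rows
    )


rows = simulate(20000)
naive, est_ipw, est_aipw = naive_diff(rows), ipw(rows), aipw(rows)
print(f"naive: {naive:.3f}, IPW: {est_ipw:.3f}, AIPW: {est_aipw:.3f} (true ATE = 1.0)")
```

In this design the naive comparison is biased upward because treated units are more likely to have `x = 1`, while both weighting estimators should land near the true effect. In practice the propensity score and outcome models are estimated, which is where cross-fitting and DML enter.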

- 🔑 Keywords: Doubly robust policy evaluation, partial linear model, FWL Theorem, DML

- 📊 Slides: What's New, What's Next, Causal Inference, Double Machine Learning (Incomplete)

- 💻 CoLab Notebook Demos: FWL Theorem, DML with EconML-ATE, DML with EconML-CATE, EconML vs. DoubleML

- ✍️ Homework: Problem Set 6: Double Machine Learning, due at 12:30pm, Apr/8/2025

- 📝 Presentation of Replication Project: By Yiquan Chao and An Sheng

- Ali Goli, Anja Lambrecht, Hema Yoganarasimhan (2023) A Bias Correction Approach for Interference in Ranking Experiments. Marketing Science 43(3):590-614. Link to the Paper, Replication Report, Code, and Slides.

- 📚 References:

- Robins, J.M., Rotnitzky, A. and Zhao, L.P., 1994. Estimation of regression coefficients when some regressors are not always observed. Journal of the American statistical Association, 89(427), pp.846-866.

- Dudík, M., Erhan, D., Langford, J. and Li, L., 2014. Doubly robust policy evaluation and optimization. Statistical Science, 29(4): 485-511.

- Robinson P.M., 1988. Root-n-consistent semiparametric regression. Econometrica, Vol. 56, No. 4, pp. 931-954.

- Chernozhukov, V., Chetverikov, D., Demirer, M., Duflo, E., Hansen, C., Newey, W. and Robins, J., 2018. Double/debiased machine learning for treatment and structural parameters. The Econometrics Journal, Volume 21, Issue 1, Pages C1–C68.

- Books, Notes, and Courses: Chapters 2, 3, 14 of Causal Inference: A Statistical Learning Approach, Chapters 2, 5, 9 of Applied Causal Inference Powered by ML and AI, Chapters 2, 3 of Duke STA 640: Causal Inference, Chapters 12, 22 of Causal Inference for The Brave and True, Handwritten Note

- Miscellaneous Resources: Videos of Stanford ECON 293 Machine Learning and Causal Inference, AEA Continuing Education on Machine Learning and Econometrics, DML Package, Slides on DML
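
The FWL theorem from this session's keywords can be checked numerically in a few lines. The sketch below, written in pure Python under an assumed linear data-generating process, shows that the coefficient on a treatment `d` in the full regression of `y` on `(1, d, x)` equals the slope from regressing the residualized `y` on the residualized `d`. This is the partialling-out idea that the partial linear model and DML build on; the helper names and simulated data are hypothetical, not taken from the course notebooks.

```python
import random


def solve(a, b):
    """Solve the linear system a z = b by Gauss-Jordan elimination with partial pivoting."""
    n = len(b)
    m = [row[:] + [bi] for row, bi in zip(a, b)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(n):
            if r != col:
                f = m[r][col] / m[col][col]
                m[r] = [vr - f * vc for vr, vc in zip(m[r], m[col])]
    return [m[i][n] / m[i][i] for i in range(n)]


def ols(columns, y):
    """OLS coefficients via the normal equations (X'X) beta = X'y."""
    k = len(columns)
    xtx = [[sum(ci * cj for ci, cj in zip(columns[i], columns[j])) for j in range(k)]
           for i in range(k)]
    xty = [sum(ci * yi for ci, yi in zip(columns[i], y)) for i in range(k)]
    return solve(xtx, xty)


def residualize(target, x):
    """Residuals from regressing `target` on an intercept and x."""
    ones = [1.0] * len(x)
    a, b = ols([ones, x], target)
    return [ti - a - b * xi for ti, xi in zip(target, x)]


# Simulated data (an assumption for illustration): true coefficient on d is 1.5.
rng = random.Random(0)
n = 500
x = [rng.gauss(0.0, 1.0) for _ in range(n)]
d = [0.8 * xi + rng.gauss(0.0, 1.0) for xi in x]
y = [1.5 * di + 2.0 * xi + rng.gauss(0.0, 1.0) for di, xi in zip(d, x)]

# Full regression: y on (1, d, x); keep the coefficient on d.
theta_full = ols([[1.0] * n, d, x], y)[1]

# FWL: partial x out of both y and d, then regress residual on residual.
ry, rd = residualize(y, x), residualize(d, x)
theta_fwl = sum(a * b for a, b in zip(rd, ry)) / sum(b * b for b in rd)

print(f"full OLS: {theta_full:.6f}, FWL: {theta_fwl:.6f}")
```

The two estimates agree up to floating-point error. DML replaces the two linear residualizing regressions with flexible ML predictions fitted on held-out folds.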

- 🔑 Keywords: DML, Neyman Orthogonality, Score Function, DML-DL, DML-DiD

- 📊 Slides: What's New, What's Next, Double Machine Learning

- 💻 CoLab Notebook Demos: DML with EconML-ATE, DML with EconML-CATE, EconML vs. DoubleML, DeDL

- ✍️ Homework: No coding homework this week. Please work on your final projects.

- 📝 Presentation of Replication Project: By Mengyang Lin and Bingqi Zhang

- Calvano, Emilio, Giacomo Calzolari, Vincenzo Denicolò, and Sergio Pastorello. 2020. "Artificial Intelligence, Algorithmic Pricing, and Collusion." American Economic Review 110 (10): 3267–97. Link to the Paper, Replication Report, Code, and Slides.

- 📚 References:

- Chernozhukov, V., Chetverikov, D., Demirer, M., Duflo, E., Hansen, C., Newey, W. and Robins, J., 2018. Double/debiased machine learning for treatment and structural parameters. The Econometrics Journal, Volume 21, Issue 1, Pages C1–C68.

- Brett R. Gordon, Robert Moakler, Florian Zettelmeyer (2022) Close Enough? A Large-Scale Exploration of Non-Experimental Approaches to Advertising Measurement. Marketing Science 42(4):768-793.

- Farrell, M.H., Liang, T. and Misra, S., 2021. Deep neural networks for estimation and inference. Econometrica, 89(1), pp.181-213.

- Farrell, M.H., Liang, T. and Misra, S., 2020. Deep learning for individual heterogeneity: An automatic inference framework. arXiv preprint arXiv:2010.14694.

- Ye, Z., Zhang, Z., Zhang, D., Zhang, H. and Zhang, R.P., 2023. Deep-learning-based causal inference for large-scale combinatorial experiments: Theory and empirical evidence. Management Science, forthcoming.

- Books, Notes, and Courses: Chapter 3 of Causal Inference: A Statistical Learning Approach, Chapter 9 of Applied Causal Inference Powered by ML and AI, Chapter 22 of Causal Inference for The Brave and True

- Miscellaneous Resources: Videos of Stanford ECON 293 Machine Learning and Causal Inference, AEA Continuing Education on Machine Learning and Econometrics, DML Package, Slides on DML, Original Slides of Victor Chernozhukov

Alternative AI tools for AI-PhD-S25

Similar Open Source Tools

AI-PhD-S24

AI-PhD-S24 is a mono-repo for the PhD course 'AI for Business Research' at CUHK Business School in Spring 2024. The course aims to provide a basic understanding of machine learning and artificial intelligence concepts/methods used in business research, showcase how ML/AI is utilized in business research, and introduce state-of-the-art AI/ML technologies. The course includes scribed lecture notes, class recordings, and covers topics like AI/ML fundamentals, DL, NLP, CV, unsupervised learning, and diffusion models.

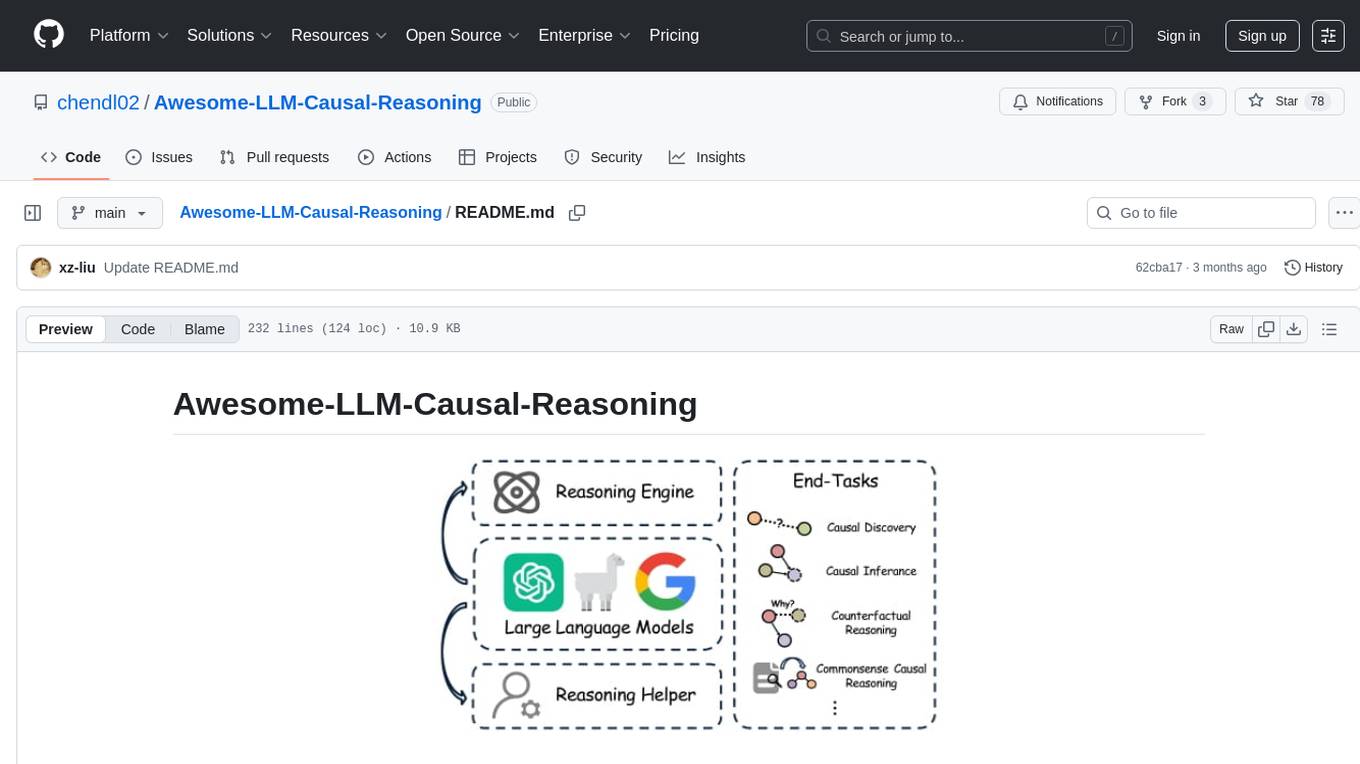

Awesome-LLM-Causal-Reasoning

The Awesome-LLM-Causal-Reasoning repository provides a comprehensive review of research focused on enhancing Large Language Models (LLMs) for causal reasoning (CR). It categorizes existing methods based on the role of LLMs as reasoning engines or helpers, evaluates LLMs' performance on various causal reasoning tasks, and discusses methodologies and insights for future research. The repository includes papers, datasets, and benchmarks related to causal reasoning in LLMs.

llm-self-correction-papers

This repository contains a curated list of papers focusing on the self-correction of large language models (LLMs) during inference. It covers various frameworks for self-correction, including intrinsic self-correction, self-correction with external tools, self-correction with information retrieval, and self-correction with training designed specifically for self-correction. The list includes survey papers, negative results, and frameworks utilizing reinforcement learning and OpenAI o1-like approaches. Contributions are welcome through pull requests following a specific format.

Time-LLM

Time-LLM is a reprogramming framework that repurposes large language models (LLMs) for time series forecasting. It allows users to treat time series analysis as a 'language task' and effectively leverage pre-trained LLMs for forecasting. The framework involves reprogramming time series data into text representations and providing declarative prompts to guide the LLM reasoning process. Time-LLM supports various backbone models such as Llama-7B, GPT-2, and BERT, offering flexibility in model selection. The tool provides a general framework for repurposing language models for time series forecasting tasks.

LLM101n

LLM101n is a course focused on building a Storyteller AI Large Language Model (LLM) from scratch in Python, C, and CUDA. The course covers various topics such as language modeling, machine learning, attention mechanisms, tokenization, optimization, device usage, precision training, distributed optimization, datasets, inference, finetuning, deployment, and multimodal applications. Participants will gain a deep understanding of AI, LLMs, and deep learning through hands-on projects and practical examples.

SLAM-LLM

SLAM-LLM is a deep learning toolkit for training custom multimodal large language models (MLLM) focusing on speech, language, audio, and music processing. It provides detailed recipes for training and high-performance checkpoints for inference. The toolkit supports various tasks such as automatic speech recognition (ASR), text-to-speech (TTS), visual speech recognition (VSR), automated audio captioning (AAC), spatial audio understanding, and music caption (MC). Users can easily extend to new models and tasks, utilize mixed precision training for faster training with less GPU memory, and perform multi-GPU training with data and model parallelism. Configuration is flexible based on Hydra and dataclass, allowing different configuration methods.

AIRS

AIRS is a collection of open-source software tools, datasets, and benchmarks focused on Artificial Intelligence for Science in Quantum, Atomistic, and Continuum Systems. The goal is to develop and maintain an integrated, open, reproducible, and sustainable set of resources to advance the field of AI for Science. The current resources include tools for Quantum Mechanics, Density Functional Theory, Small Molecules, Protein Science, Materials Science, Molecular Interactions, and Partial Differential Equations.

awesome-tool-llm

This repository focuses on exploring tools that enhance the performance of language models for various tasks. It provides a structured list of literature relevant to tool-augmented language models, covering topics such as tool basics, tool use paradigm, scenarios, advanced methods, and evaluation. The repository includes papers, preprints, and books that discuss the use of tools in conjunction with language models for tasks like reasoning, question answering, mathematical calculations, accessing knowledge, interacting with the world, and handling non-textual modalities.

FuseAI

FuseAI is a repository that focuses on knowledge fusion of large language models. It includes FuseChat, a state-of-the-art 7B LLM on MT-Bench, and FuseLLM, which surpasses Llama-2-7B by fusing three open-source foundation LLMs. The repository provides tech reports, releases, and datasets for FuseChat and FuseLLM, showcasing their performance and advancements in the field of chat models and large language models.

Slow_Thinking_with_LLMs

STILL is an open-source project exploring slow-thinking reasoning systems, focusing on o1-like reasoning systems. The project has released technical reports on enhancing LLM reasoning with reward-guided tree search algorithms and implementing slow-thinking reasoning systems using an imitate, explore, and self-improve framework. The project aims to replicate the capabilities of industry-level reasoning systems by fine-tuning reasoning models with long-form thought data and iteratively refining training datasets.

IvyGPT

IvyGPT is a medical large language model that aims to produce realistic doctor consultations. It has been fine-tuned on high-quality medical Q&A data and trained with reinforcement learning from human feedback. The project features full-pipeline training of a medical Q&A LLM, support for multiple fine-tuning methods, efficient dataset creation tools, and a dataset of over 300,000 high-quality doctor-patient dialogues for training.

interpret

InterpretML is an open-source package that incorporates state-of-the-art machine learning interpretability techniques under one roof. With this package, you can train interpretable glassbox models and explain blackbox systems. InterpretML helps you understand your model's global behavior, or understand the reasons behind individual predictions. Interpretability is essential for:

- Model debugging: Why did my model make this mistake?
- Feature engineering: How can I improve my model?
- Detecting fairness issues: Does my model discriminate?
- Human-AI cooperation: How can I understand and trust the model's decisions?
- Regulatory compliance: Does my model satisfy legal requirements?
- High-risk applications: Healthcare, finance, judicial, ...

llm_benchmark

The 'llm_benchmark' repository is a personal evaluation project that tracks and tests various large models in areas such as logic, mathematics, programming, and human intuition. The evaluation consists of a private question bank with around 30 questions and 240 test cases, updated monthly. The scoring method involves assigning points based on correct deductions and meeting specific requirements, with scores normalized to a scale of 10. The repository aims to observe the long-term evolution trends of different large models from a subjective perspective, providing insights and a testing approach for individuals to assess large models.

intro_pharma_ai

This repository serves as an educational resource for pharmaceutical and chemistry students to learn the basics of Deep Learning through a collection of Jupyter Notebooks. The content covers various topics such as Introduction to Jupyter, Python, Cheminformatics & RDKit, Linear Regression, Data Science, Linear Algebra, Neural Networks, PyTorch, Convolutional Neural Networks, Transfer Learning, Recurrent Neural Networks, Autoencoders, Graph Neural Networks, and Summary. The notebooks aim to provide theoretical concepts to understand neural networks through code completion, but instructors are encouraged to supplement with their own lectures. The work is licensed under a Creative Commons Attribution-NonCommercial-ShareAlike 4.0 International License.

For similar tasks

Azure-Analytics-and-AI-Engagement

The Azure-Analytics-and-AI-Engagement repository provides packaged Industry Scenario DREAM Demos with ARM templates (containing a demo web application, Power BI reports, Synapse resources, AML Notebooks, etc.) that can be deployed in a customer's subscription using the CAPE tool within a few hours. Partners can also deploy DREAM Demos in their own subscriptions using DPoC.

sorrentum

Sorrentum is an open-source project that aims to combine open-source development, startups, and brilliant students to build machine learning, AI, and Web3 / DeFi protocols geared towards finance and economics. The project provides opportunities for internships, research assistantships, and development grants, as well as the chance to work on cutting-edge problems, learn about startups, write academic papers, and get internships and full-time positions at companies working on Sorrentum applications.

tidb

TiDB is an open-source distributed SQL database that supports Hybrid Transactional and Analytical Processing (HTAP) workloads. It is MySQL compatible and features horizontal scalability, strong consistency, and high availability.

zep-python

Zep is an open-source platform for building and deploying large language model (LLM) applications. It provides a suite of tools and services that make it easy to integrate LLMs into your applications, including chat history memory, embedding, vector search, and data enrichment. Zep is designed to be scalable, reliable, and easy to use, making it a great choice for developers who want to build LLM-powered applications quickly and easily.

telemetry-airflow

This repository codifies the Airflow cluster that is deployed at workflow.telemetry.mozilla.org (behind SSO) and commonly referred to as "WTMO" or simply "Airflow". Some links relevant to users and developers of WTMO:

- The `dags` directory in this repository contains some custom DAG definitions
- Many of the DAGs registered with WTMO don't live in this repository, but are instead generated from ETL task definitions in bigquery-etl
- The Data SRE team maintains a WTMO Developer Guide (behind SSO)

mojo

Mojo is a new programming language that bridges the gap between research and production by combining Python syntax and ecosystem with systems programming and metaprogramming features. Mojo is still young, but it is designed to become a superset of Python over time.

pandas-ai

PandasAI is a Python library that makes it easy to ask questions to your data in natural language. It helps you to explore, clean, and analyze your data using generative AI.

databend

Databend is an open-source cloud data warehouse that serves as a cost-effective alternative to Snowflake. With its focus on fast query execution and data ingestion, it's designed for complex analysis of the world's largest datasets.

For similar jobs

NanoLLM

NanoLLM is a tool designed for optimized local inference for Large Language Models (LLMs) using HuggingFace-like APIs. It supports quantization, vision/language models, multimodal agents, speech, vector DB, and RAG. The tool aims to provide efficient and effective processing for LLMs on local devices, enhancing performance and usability for various AI applications.

mslearn-ai-fundamentals

This repository contains materials for the Microsoft Learn AI Fundamentals module. It covers the basics of artificial intelligence, machine learning, and data science. The content includes hands-on labs, interactive learning modules, and assessments to help learners understand key concepts and techniques in AI. Whether you are new to AI or looking to expand your knowledge, this module provides a comprehensive introduction to the fundamentals of AI.

awesome-ai-tools

Awesome AI Tools is a curated list of popular tools and resources for artificial intelligence enthusiasts. It includes a wide range of tools such as machine learning libraries, deep learning frameworks, data visualization tools, and natural language processing resources. Whether you are a beginner or an experienced AI practitioner, this repository aims to provide you with a comprehensive collection of tools to enhance your AI projects and research. Explore the list to discover new tools, stay updated with the latest advancements in AI technology, and find the right resources to support your AI endeavors.

go2coding.github.io

The go2coding.github.io repository is a collection of resources for AI enthusiasts, providing information on AI products, open-source projects, AI learning websites, and AI learning frameworks. It aims to help users stay updated on industry trends, learn from community projects, access learning resources, and understand and choose AI frameworks. The repository also includes instructions for local and external deployment of the project as a static website, with details on domain registration, hosting services, uploading static web pages, configuring domain resolution, and a visual guide to the AI tool navigation website. Additionally, it offers a platform for AI knowledge exchange through a QQ group and promotes AI tools through a WeChat public account.

AI-Notes

AI-Notes is a repository dedicated to practical applications of artificial intelligence and deep learning. It covers concepts such as data mining, machine learning, natural language processing, and AI. The repository contains Jupyter Notebook examples for hands-on learning and experimentation. It explores the development stages of AI, from narrow artificial intelligence to general artificial intelligence and superintelligence. The content delves into machine learning algorithms, deep learning techniques, and the impact of AI on various industries like autonomous driving and healthcare. The repository aims to provide a comprehensive understanding of AI technologies and their real-world applications.

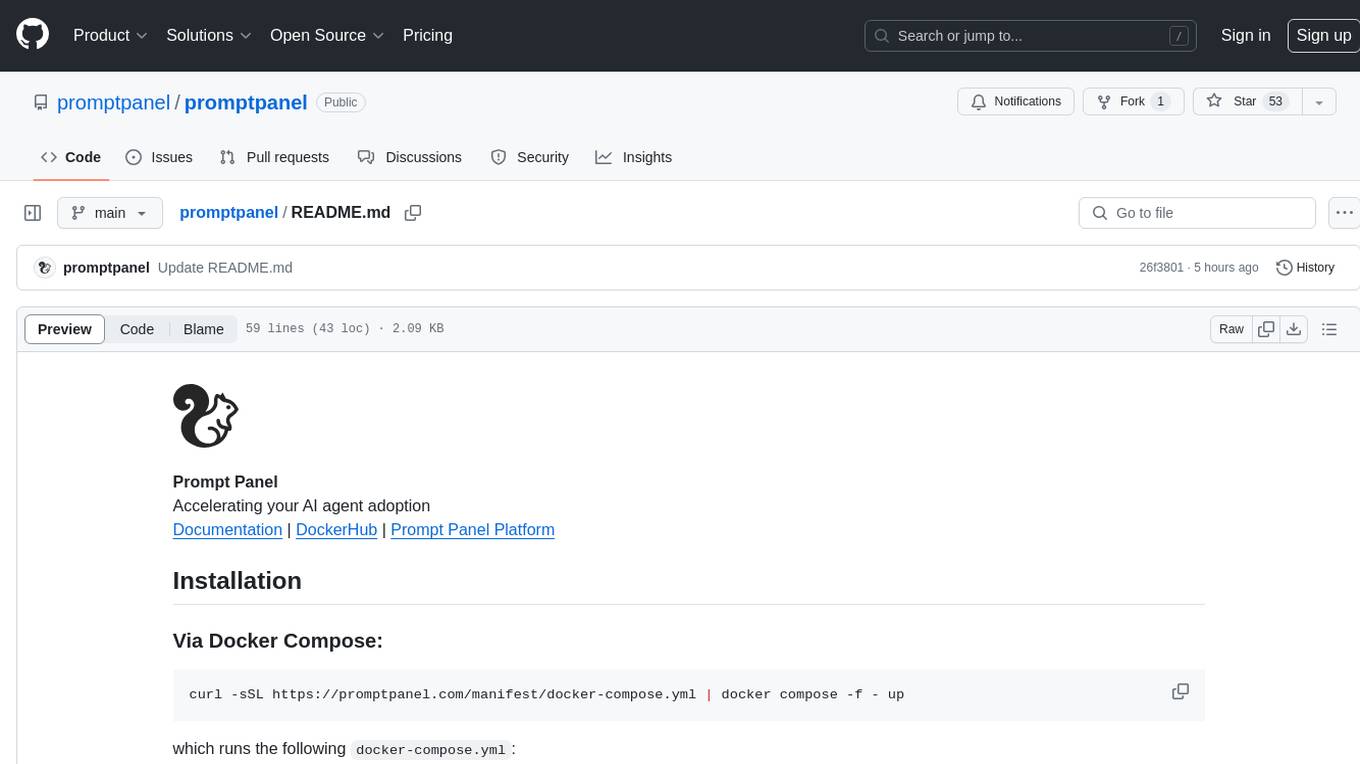

promptpanel

Prompt Panel is a tool designed to accelerate the adoption of AI agents by providing a platform where users can run large language models across any inference provider, create custom agent plugins, and use their own data safely. The tool allows users to break free from walled-gardens and have full control over their models, conversations, and logic. With Prompt Panel, users can pair their data with any language model, online or offline, and customize the system to meet their unique business needs without any restrictions.

ai-demos

The 'ai-demos' repository is a collection of example code from presentations focusing on building with AI and LLMs. It serves as a resource for developers looking to explore practical applications of artificial intelligence in their projects. The code snippets showcase various techniques and approaches to leverage AI technologies effectively. The repository aims to inspire and educate developers on integrating AI solutions into their applications.

ai_summer

AI Summer is a repository focused on providing workshops and resources for developing foundational skills in generative AI models and transformer models. The repository offers practical applications for inferencing and training, with a specific emphasis on understanding and utilizing advanced AI chat models like BingGPT. Participants are encouraged to engage in interactive programming environments, decide on projects to work on, and actively participate in discussions and breakout rooms. The workshops cover topics such as generative AI models, retrieval-augmented generation, building AI solutions, and fine-tuning models. The goal is to equip individuals with the necessary skills to work with AI technologies effectively and securely, both locally and in the cloud.