Prompt4ReasoningPapers

[ACL 2023] Reasoning with Language Model Prompting: A Survey

Stars: 908

Prompt4ReasoningPapers is a repository dedicated to reasoning with language model prompting. It provides a comprehensive survey of cutting-edge research on reasoning abilities with language models. The repository includes papers, methods, analysis, resources, and tools related to reasoning tasks. It aims to support various real-world applications such as medical diagnosis, negotiation, etc.

README:

- 2024-03-05 We release a new paper: "KnowAgent: Knowledge-Augmented Planning for LLM-Based Agents".

- 2024-02-06 We release a new paper: "EasyInstruct: An Easy-to-use Instruction Processing Framework for Large Language Models" with an HF demo EasyInstruct.

- 2024-01-03 We release a new paper: "A Comprehensive Study of Knowledge Editing for Large Language Models" with a new benchmark KnowEdit! We are looking forward to any comments or discussions on this topic :)

- 2023-7-12 We release EasyEdit, an easy-to-use knowledge editing framework for Large Language Models.

- 2023-6-19 We open-source KnowLM, a knowledgeable large language model framework with pre-training and instruction fine-tuning code (supports multi-machine multi-GPU setup) and various LLMs.

- 2023-3-27 We release EasyInstruct, a package for instructing Large Language Models (LLMs) like ChatGPT in your research experiments. It is designed to be easy to use and easy to extend!

- 2023-2-19 We upload a tutorial on our survey paper to help you learn more about reasoning with language model prompting (with a video of the tutorial, in Chinese).

- 2022-12-19 We release a new survey paper: "Reasoning with Language Model Prompting: A Survey" based on this repository! We are looking forward to any comments or discussions on this topic :)

- 2022-09-14 We create this repository to maintain a paper list on Reasoning with Language Model Prompting.

Reasoning, as an essential ability for complex problem-solving, can provide back-end support for various real-world applications, such as medical diagnosis, negotiation, etc. This paper provides a comprehensive survey of cutting-edge research on reasoning with language model prompting. We introduce research works with comparisons and summaries and provide systematic resources to help beginners. We also discuss the potential reasons for emerging such reasoning abilities and highlight future research directions.

-

Reasoning with Language Model Prompting: A Survey.

Shuofei Qiao, Yixin Ou, Ningyu Zhang, Xiang Chen, Yunzhi Yao, Shumin Deng, Chuanqi Tan, Fei Huang, Huajun Chen. [abs], 2022.12

-

Towards Reasoning in Large Language Models: A Survey.

Jie Huang, Kevin Chen-Chuan Chang. [abs], 2022.12

-

A Survey of Deep Learning for Mathematical Reasoning.

Pan Lu, Liang Qiu, Wenhao Yu, Sean Welleck, Kai-Wei Chang. [abs], 2022.12

-

A Survey for In-context Learning.

Qingxiu Dong, Lei Li, Damai Dai, Ce Zheng, Zhiyong Wu, Baobao Chang, Xu Sun, Jingjing Xu, Lei Li, Zhifang Sui. [abs], 2022.12

-

Knowledge-enhanced Neural Machine Reasoning: A Review.

Tanmoy Chowdhury, Chen Ling, Xuchao Zhang, Xujiang Zhao, Guangji Bai, Jian Pei, Haifeng Chen, Liang Zhao. [abs], 2023.2

-

Augmented Language Models: a Survey.

Grégoire Mialon, Roberto Dessì, Maria Lomeli, Christoforos Nalmpantis, Ram Pasunuru, Roberta Raileanu, Baptiste Rozière, Timo Schick, Jane Dwivedi-Yu, Asli Celikyilmaz, Edouard Grave, Yann LeCun, Thomas Scialom. [abs], 2023.2

-

The Life Cycle of Knowledge in Big Language Models: A Survey.

Boxi Cao, Hongyu Lin, Xianpei Han, Le Sun. [abs], 2023.3

-

Is Prompt All You Need? No. A Comprehensive and Broader View of Instruction Learning.

Renze Lou, Kai Zhang, Wenpeng Yin. [abs], 2023.3

-

Logical Reasoning over Natural Language as Knowledge Representation: A Survey.

Zonglin Yang, Xinya Du, Rui Mao, Jinjie Ni, Erik Cambria. [abs], 2023.3

-

Natural Language Reasoning, A Survey.

Fei Yu, Hongbo Zhang, Benyou Wang. [abs], 2023.3

-

A Survey of Large Language Models.

Wayne Xin Zhao, Kun Zhou, Junyi Li, Tianyi Tang, Xiaolei Wang, Yupeng Hou, Yingqian Min, Beichen Zhang, Junjie Zhang, Zican Dong, Yifan Du, Chen Yang, Yushuo Chen, Zhipeng Chen, Jinhao Jiang, Ruiyang Ren, Yifan Li, Xinyu Tang, Zikang Liu, Peiyu Liu, Jian-Yun Nie, Ji-Rong Wen. [abs], 2023.3

-

Tool Learning with Foundation Models.

Yujia Qin, Shengding Hu, Yankai Lin, Weize Chen, Ning Ding, Ganqu Cui, Zheni Zeng, Yufei Huang, Chaojun Xiao, Chi Han, Yi Ren Fung, Yusheng Su, Huadong Wang, Cheng Qian, Runchu Tian, Kunlun Zhu, Shihao Liang, Xingyu Shen, Bokai Xu, Zhen Zhang, Yining Ye, Bowen Li, Ziwei Tang, Jing Yi, Yuzhang Zhu, Zhenning Dai, Lan Yan, Xin Cong, Yaxi Lu, Weilin Zhao, Yuxiang Huang, Junxi Yan, Xu Han, Xian Sun, Dahai Li, Jason Phang, Cheng Yang, Tongshuang Wu, Heng Ji, Zhiyuan Liu, Maosong Sun. [abs], 2023.4

-

A Survey of Chain of Thought Reasoning: Advances, Frontiers and Future.

Zheng Chu, Jingchang Chen, Qianglong Chen, Weijiang Yu, Tao He, Haotian Wang, Weihua Peng, Ming Liu, Bing Qin, Ting Liu. [abs], 2023.9

-

A Survey of Reasoning with Foundation Models: Concepts, Methodologies, and Outlook.

Jiankai Sun, Chuanyang Zheng, Enze Xie, Zhengying Liu, Ruihang Chu, Jianing Qiu, Jiaqi Xu, Mingyu Ding, Hongyang Li, Mengzhe Geng, Yue Wu, Wenhai Wang, Junsong Chen, Zhangyue Yin, Xiaozhe Ren, Jie Fu, Junxian He, Wu Yuan, Qi Liu, Xihui Liu, Yu Li, Hao Dong, Yu Cheng, Ming Zhang, Pheng Ann Heng, Jifeng Dai, Ping Luo, Jingdong Wang, Ji-Rong Wen, Xipeng Qiu, Yike Guo, Hui Xiong, Qun Liu, Zhenguo Li. [abs], 2023.12

-

Prompting Contrastive Explanations for Commonsense Reasoning Tasks.

Bhargavi Paranjape, Julian Michael, Marjan Ghazvininejad, Luke Zettlemoyer, Hannaneh Hajishirzi. [abs], 2021.6

-

Template Filling for Controllable Commonsense Reasoning.

Dheeraj Rajagopal, Vivek Khetan, Bogdan Sacaleanu, Anatole Gershman, Andrew Fano, Eduard Hovy. [abs], 2021.11

-

Chain of Thought Prompting Elicits Reasoning in Large Language Models.

Jason Wei, Xuezhi Wang, Dale Schuurmans, Maarten Bosma, Brian Ichter, Fei Xia, Ed H. Chi, Quoc V. Le, Denny Zhou. [abs], 2022.1

-

Large Language Models are Zero-Shot Reasoners.

Takeshi Kojima, Shixiang Shane Gu, Machel Reid, Yutaka Matsuo, Yusuke Iwasawa. [abs], 2022.5

-

Psychologically-informed chain-of-thought prompts for metaphor understanding in large language models.

Ben Prystawski, Paul Thibodeau, Noah Goodman. [abs], 2022.9

-

Complexity-based Prompting for Multi-step Reasoning.

Yao Fu, Hao Peng, Ashish Sabharwal, Peter Clark, Tushar Khot. [abs], 2022.10

-

Language Models are Multilingual Chain-of-thought Reasoners.

Freda Shi, Mirac Suzgun, Markus Freitag, Xuezhi Wang, Suraj Srivats, Soroush Vosoughi, Hyung Won Chung, Yi Tay, Sebastian Ruder, Denny Zhou, Dipanjan Das, Jason Wei. [abs], 2022.10

-

Automatic Chain of Thought Prompting in Large Language Models.

Zhuosheng Zhang, Aston Zhang, Mu Li, Alex Smola. [abs], 2022.10

-

Large Language Models are few(1)-shot Table Reasoners.

Wenhu Chen. [abs], 2022.10

-

Teaching Algorithmic Reasoning via In-context Learning.

Hattie Zhou, Azade Nova, Hugo Larochelle, Aaron Courville, Behnam Neyshabur, Hanie Sedghi. [abs], 2022.11

-

Active Prompting with Chain-of-Thought for Large Language Models.

Shizhe Diao, Pengcheng Wang, Yong Lin, Tong Zhang. [abs], 2023.2

-

Automatic Prompt Augmentation and Selection with Chain-of-Thought from Labeled Data.

KaShun Shum, Shizhe Diao, Tong Zhang. [abs], 2023.2

-

A Prompt Pattern Catalog to Enhance Prompt Engineering with ChatGPT.

Jules White, Quchen Fu, Sam Hays, Michael Sandborn, Carlos Olea, Henry Gilbert, Ashraf Elnashar, Jesse Spencer-Smith, Douglas C Schmidt. [abs], 2023.2

-

ChatGPT Prompt Patterns for Improving Code Quality, Refactoring, Requirements Elicitation, and Software Design.

Jules White, Sam Hays, Quchen Fu, Jesse Spencer-Smith, Douglas C Schmidt. [abs], 2023.3

-

Learning to Reason and Memorize with Self-Notes.

Jack Lanchantin, Shubham Toshniwal, Jason Weston, Arthur Szlam, Sainbayar Sukhbaatar. [abs], 2023.5

-

Plan-and-Solve Prompting: Improving Zero-Shot Chain-of-Thought Reasoning by Large Language Models.

Lei Wang, Wanyu Xu, Yihuai Lan, Zhiqiang Hu, Yunshi Lan, Roy Ka-Wei Lee, Ee-Peng Lim. [abs], 2023.5

-

Beyond Chain-of-Thought, Effective Graph-of-Thought Reasoning in Large Language Models.

Yao Yao, Zuchao Li, Hai Zhao. [abs], 2023.5

-

Re-Reading Improves Reasoning in Language Models.

Xiaohan Xu, Chongyang Tao, Tao Shen, Can Xu, Hongbo Xu, Guodong Long, Jian-guang Lou. [abs], 2023.9

-

Query-Dependent Prompt Evaluation and Optimization with Offline Inverse RL.

Hao Sun, Alihan Huyuk, Mihaela van der Schaar. [abs], 2023.9

-

Iteratively Prompt Pre-trained Language Models for Chain of Thought.

Boshi Wang, Xiang Deng, Huan Sun. [abs], 2022.3

-

Selection-Inference: Exploiting Large Language Models for Interpretable Logical Reasoning.

Antonia Creswell, Murray Shanahan, Irina Higgins. [abs], 2022.5

-

Least-to-Most Prompting Enables Complex Reasoning in Large Language Models.

Denny Zhou, Nathanael Schärli, Le Hou, Jason Wei, Nathan Scales, Xuezhi Wang, Dale Schuurmans, Olivier Bousquet, Quoc Le, Ed Chi. [abs], 2022.5

-

Maieutic Prompting: Logically Consistent Reasoning with Recursive Explanations.

Jaehun Jung, Lianhui Qin, Sean Welleck, Faeze Brahman, Chandra Bhagavatula, Ronan Le Bras, Yejin Choi. [abs], 2022.5

-

Faithful Reasoning Using Large Language Models.

Antonia Creswell, Murray Shanahan. [abs], 2022.8

-

Compositional Semantic Parsing with Large Language Models.

Andrew Drozdov, Nathanael Schärli, Ekin Akyürek, Nathan Scales, Xinying Song, Xinyun Chen, Olivier Bousquet, Denny Zhou. [abs], 2022.9

-

Decomposed Prompting: A Modular Approach for Solving Complex Tasks.

Tushar Khot, Harsh Trivedi, Matthew Finlayson, Yao Fu, Kyle Richardson, Peter Clark, Ashish Sabharwal. [abs], 2022.10

-

Measuring and Narrowing the Compositionality Gap in Language Models.

Ofir Press, Muru Zhang, Sewon Min, Ludwig Schmidt, Noah A. Smith, Mike Lewis. [abs], 2022.10

-

Successive Prompting for Decomposing Complex Questions.

Dheeru Dua, Shivanshu Gupta, Sameer Singh, Matt Gardner. [abs], 2022.12

-

The Impact of Symbolic Representations on In-context Learning for Few-shot Reasoning.

Hanlin Zhang, Yi-Fan Zhang, Li Erran Li, Eric Xing. [abs], 2022.12

-

LAMBADA: Backward Chaining for Automated Reasoning in Natural Language.

Seyed Mehran Kazemi, Najoung Kim, Deepti Bhatia, Xin Xu, Deepak Ramachandran. [abs], 2022.12

-

Iterated Decomposition: Improving Science Q&A by Supervising Reasoning Processes.

Justin Reppert, Ben Rachbach, Charlie George, Luke Stebbing, Jungwon Byun, Maggie Appleton, Andreas Stuhlmüller. [abs], 2023.1

-

Self-Polish: Enhance Reasoning in Large Language Models via Problem Refinement.

Zhiheng Xi, Senjie Jin, Yuhao Zhou, Rui Zheng, Songyang Gao, Tao Gui, Qi Zhang, Xuanjing Huang. [abs], 2023.5

-

Reframing Human-AI Collaboration for Generating Free-Text Explanations.

Sarah Wiegreffe, Jack Hessel, Swabha Swayamdipta, Mark Riedl, Yejin Choi. [abs], 2021.12

-

The Unreliability of Explanations in Few-Shot In-Context Learning.

Xi Ye, Greg Durrett. [abs], 2022.5

-

Discriminator-Guided Multi-step Reasoning with Language Models.

Muhammad Khalifa, Lajanugen Logeswaran, Moontae Lee, Honglak Lee, Lu Wang. [abs], 2023.5

-

RCOT: Detecting and Rectifying Factual Inconsistency in Reasoning by Reversing Chain-of-Thought.

Tianci Xue, Ziqi Wang, Zhenhailong Wang, Chi Han, Pengfei Yu, Heng Ji. [abs], 2023.5

-

Self-Consistency Improves Chain of Thought Reasoning in Language Models.

Xuezhi Wang, Jason Wei, Dale Schuurmans, Quoc Le, Ed H. Chi, Sharan Narang, Aakanksha Chowdhery, Denny Zhou. [abs], 2022.3

-

On the Advance of Making Language Models Better Reasoners.

Yifei Li, Zeqi Lin, Shizhuo Zhang, Qiang Fu, Bei Chen, Jian-Guang Lou, Weizhu Chen. [abs], 2022.6

-

Complexity-based Prompting for Multi-step Reasoning.

Yao Fu, Hao Peng, Ashish Sabharwal, Peter Clark, Tushar Khot. [abs], 2022.10

-

Large Language Models are reasoners with Self-Verification.

Yixuan Weng, Minjun Zhu, Shizhu He, Kang Liu, Jun Zhao. [abs], 2022.12

-

Answering Questions by Meta-Reasoning over Multiple Chains of Thought.

Ori Yoran, Tomer Wolfson, Ben Bogin, Uri Katz, Daniel Deutch, Jonathan Berant. [abs], 2023.4

-

Tree of Thoughts: Deliberate Problem Solving with Large Language Models.

Shunyu Yao, Dian Yu, Jeffrey Zhao, Izhak Shafran, Thomas L. Griffiths, Yuan Cao, Karthik Narasimhan. [abs], 2023.5

-

Improving Factuality and Reasoning in Language Models through Multiagent Debate.

Yilun Du, Shuang Li, Antonio Torralba, Joshua B. Tenenbaum, Igor Mordatch. [abs], 2023.5

-

AutoMix: Automatically Mixing Language Models.

Aman Madaan, Pranjal Aggarwal, Ankit Anand, Srividya Pranavi Potharaju, Swaroop Mishra, Pei Zhou, Aditya Gupta, Dheeraj Rajagopal, Karthik Kappaganthu, Yiming Yang, Shyam Upadhyay, Mausam, Manaal Faruqui. [abs], 2023.9

-

Reversal of Thought: Enhancing Large Language Models with Preference-Guided Reverse Reasoning Warm-up.

Jiahao Yuan, Dehui Du, Hao Zhang, Zixiang Di, Usman Naseem. [abs], [code], 2024.10

-

STaR: Bootstrapping Reasoning With Reasoning.

Eric Zelikman, Yuhuai Wu, Noah D. Goodman. [abs], 2022.3

-

Large Language Models Can Self-Improve.

Jiaxin Huang, Shixiang Shane Gu, Le Hou, Yuexin Wu, Xuezhi Wang, Hongkun Yu, Jiawei Han. [abs], 2022.10

-

Reflexion: An Autonomous Agent with Dynamic Memory and Self-reflection.

Noah Shinn, Beck Labash, Ashwin Gopinath. [abs], 2023.3

-

Self-Refine: Iterative Refinement with Self-Feedback.

Aman Madaan, Niket Tandon, Prakhar Gupta, Skyler Hallinan, Luyu Gao, Sarah Wiegreffe, Uri Alon, Nouha Dziri, Shrimai Prabhumoye, Yiming Yang, Sean Welleck, Bodhisattwa Prasad Majumder, Shashank Gupta, Amir Yazdanbakhsh, Peter Clark. [abs], 2023.3

-

REFINER: Reasoning Feedback on Intermediate Representations.

Debjit Paul, Mete Ismayilzada, Maxime Peyrard, Beatriz Borges, Antoine Bosselut, Robert West, Boi Faltings. [abs], 2023.4

-

Reasoning with Language Model is Planning with World Model.

Shibo Hao*, Yi Gu*, Haodi Ma, Joshua Jiahua Hong, Zhen Wang, Daisy Zhe Wang, Zhiting Hu. [abs], 2023.5

-

Enhancing Zero-Shot Chain-of-Thought Reasoning in Large Language Models through Logic.

Xufeng Zhao, Mengdi Li, Wenhao Lu, Cornelius Weber, Jae Hee Lee, Kun Chu, Stefan Wermter. [abs] [code], 2024.2

-

Mind's Eye: Grounded Language Model Reasoning through Simulation.

Ruibo Liu, Jason Wei, Shixiang Shane Gu, Te-Yen Wu, Soroush Vosoughi, Claire Cui, Denny Zhou, Andrew M. Dai. [abs], 2022.10

-

Language Models of Code are Few-Shot Commonsense Learners.

Aman Madaan, Shuyan Zhou, Uri Alon, Yiming Yang, Graham Neubig. [abs], 2022.10

-

PAL: Program-aided Language Models.

Luyu Gao, Aman Madaan, Shuyan Zhou, Uri Alon, Pengfei Liu, Yiming Yang, Jamie Callan, Graham Neubig. [abs], 2022.11

-

Program of Thoughts Prompting: Disentangling Computation from Reasoning for Numerical Reasoning Tasks.

Wenhu Chen, Xueguang Ma, Xinyi Wang, William W. Cohen. [abs], 2022.11

-

Faithful Chain-of-Thought Reasoning.

Qing Lyu, Shreya Havaldar, Adam Stein, Li Zhang, Delip Rao, Eric Wong, Marianna Apidianaki, Chris Callison-Burch. [abs], 2023.1

-

Large Language Models are Versatile Decomposers: Decompose Evidence and Questions for Table-based Reasoning.

Yunhu Ye, Binyuan Hui, Min Yang, Binhua Li, Fei Huang, Yongbin Li. [abs], 2023.1

-

Synthetic Prompting: Generating Chain-of-Thought Demonstrations for Large Language Models.

Zhihong Shao, Yeyun Gong, Yelong Shen, Minlie Huang, Nan Duan, Weizhu Chen. [abs], 2023.2

-

MathPrompter: Mathematical Reasoning Using Large Language Models.

Shima Imani, Liang Du, Harsh Shrivastava. [abs], 2023.3

-

Automatic Model Selection with Large Language Models for Reasoning.

Xu Zhao, Yuxi Xie, Kenji Kawaguchi, Junxian He, Qizhe Xie. [abs], 2023.5

-

Code Prompting: a Neural Symbolic Method for Complex Reasoning in Large Language Models.

Yi Hu, Haotong Yang, Zhouchen Lin, Muhan Zhang. [abs], 2023.5

-

The Magic of IF: Investigating Causal Reasoning Abilities in Large Language Models of Code.

Xiao Liu, Da Yin, Chen Zhang, Yansong Feng, Dongyan Zhao. [abs], 2023.5

-

When Do Program-of-Thoughts Work for Reasoning?

Zhen Bi, Ningyu Zhang, Yinuo Jiang, Shumin Deng, Guozhou Zheng, Huajun Chen. [abs], 2023.12

-

Toolformer: Language Models Can Teach Themselves to Use Tools.

Timo Schick, Jane Dwivedi-Yu, Roberto Dessì, Roberta Raileanu, Maria Lomeli, Luke Zettlemoyer, Nicola Cancedda, Thomas Scialom. [abs], 2023.2

-

ART: Automatic multi-step reasoning and tool-use for large language models.

Bhargavi Paranjape, Scott Lundberg, Sameer Singh, Hannaneh Hajishirzi, Luke Zettlemoyer, Marco Tulio Ribeiro. [abs], 2023.3

-

Chameleon: Plug-and-Play Compositional Reasoning with Large Language Models.

Pan Lu, Baolin Peng, Hao Cheng, Michel Galley, Kai-Wei Chang, Ying Nian Wu, Song-Chun Zhu, Jianfeng Gao. [abs], 2023.4

-

CRITIC: Large Language Models Can Self-Correct with Tool-Interactive Critiquing.

Zhibin Gou, Zhihong Shao, Yeyun Gong, Yelong Shen, Yujiu Yang, Nan Duan, Weizhu Chen. [abs], 2023.5

-

Making Language Models Better Tool Learners with Execution Feedback.

Shuofei Qiao, Honghao Gui, Huajun Chen, Ningyu Zhang. [abs], 2023.5

-

CREATOR: Disentangling Abstract and Concrete Reasonings of Large Language Models through Tool Creation.

Cheng Qian, Chi Han, Yi R. Fung, Yujia Qin, Zhiyuan Liu, Heng Ji. [abs], 2023.5

-

ChatCoT: Tool-Augmented Chain-of-Thought Reasoning on Chat-based Large Language Models.

Zhipeng Chen, Kun Zhou, Beichen Zhang, Zheng Gong, Wayne Xin Zhao, Ji-Rong Wen. [abs], 2023.5

-

MultiTool-CoT: GPT-3 Can Use Multiple External Tools with Chain of Thought Prompting.

Tatsuro Inaba, Hirokazu Kiyomaru, Fei Cheng, Sadao Kurohashi. [abs], 2023.5

-

ToolkenGPT: Augmenting Frozen Language Models with Massive Tools via Tool Embeddings.

Shibo Hao, Tianyang Liu, Zhen Wang, Zhiting Hu. [abs], 2023.5

-

Generated Knowledge Prompting for Commonsense Reasoning.

Jiacheng Liu, Alisa Liu, Ximing Lu, Sean Welleck, Peter West, Ronan Le Bras, Yejin Choi, Hannaneh Hajishirzi. [abs], 2021.10

-

Rainier: Reinforced Knowledge Introspector for Commonsense Question Answering.

Jiacheng Liu, Skyler Hallinan, Ximing Lu, Pengfei He, Sean Welleck, Hannaneh Hajishirzi, Yejin Choi. [abs], 2022.10

-

Explanations from Large Language Models Make Small Reasoners Better.

Shiyang Li, Jianshu Chen, Yelong Shen, Zhiyu Chen, Xinlu Zhang, Zekun Li, Hong Wang, Jing Qian, Baolin Peng, Yi Mao, Wenhu Chen, Xifeng Yan. [abs], 2022.10

-

PINTO: Faithful Language Reasoning Using Prompt-Generated Rationales.

Peifeng Wang, Aaron Chan, Filip Ilievski, Muhao Chen, Xiang Ren. [abs], 2022.11

-

TSGP: Two-Stage Generative Prompting for Unsupervised Commonsense Question Answering.

Yueqing Sun, Yu Zhang, Le Qi, Qi Shi. [abs], 2022.11

-

Distilling Multi-Step Reasoning Capabilities of Large Language Models into Smaller Models via Semantic Decompositions.

Kumar Shridhar, Alessandro Stolfo, Mrinmaya Sachan. [abs], 2022.12

-

Teaching Small Language Models to Reason.

Lucie Charlotte Magister, Jonathan Mallinson, Jakub Adamek, Eric Malmi, Aliaksei Severyn. [abs], 2022.12

-

Large Language Models Are Reasoning Teachers.

Namgyu Ho, Laura Schmid, Se-Young Yun. [abs], 2022.12

-

Specializing Smaller Language Models towards Multi-Step Reasoning.

Yao Fu, Hao Peng, Litu Ou, Ashish Sabharwal, Tushar Khot. [abs], 2023.1

-

PaD: Program-aided Distillation Specializes Large Models in Reasoning.

Xuekai Zhu, Biqing Qi, Kaiyan Zhang, Xingwei Long, Bowen Zhou. [abs], 2023.5

-

MemPrompt: Memory-assisted prompt editing to improve GPT-3 after deployment.

Aman Madaan, Niket Tandon, Peter Clark, Yiming Yang. [abs], 2022.1

-

LogicSolver: Towards Interpretable Math Word Problem Solving with Logical Prompt-enhanced Learning.

Zhicheng Yang, Jinghui Qin, Jiaqi Chen, Liang Lin, Xiaodan Liang. [abs], 2022.5

-

Selective Annotation Makes Language Models Better Few-Shot Learners.

Hongjin Su, Jungo Kasai, Chen Henry Wu, Weijia Shi, Tianlu Wang, Jiayi Xin, Rui Zhang, Mari Ostendorf, Luke Zettlemoyer, Noah A. Smith, Tao Yu. [abs], 2022.9

-

Dynamic Prompt Learning via Policy Gradient for Semi-structured Mathematical Reasoning.

Pan Lu, Liang Qiu, Kai-Wei Chang, Ying Nian Wu, Song-Chun Zhu, Tanmay Rajpurohit, Peter Clark, Ashwin Kalyan. [abs], 2022.9

-

Interleaving Retrieval with Chain-of-Thought Reasoning for Knowledge-Intensive Multi-Step Questions.

Harsh Trivedi, Niranjan Balasubramanian, Tushar Khot, Ashish Sabharwal. [abs], 2022.12

-

Rethinking with Retrieval: Faithful Large Language Model Inference.

Hangfeng He, Hongming Zhang, Dan Roth. [abs], 2023.1

-

Verify-and-Edit: A Knowledge-Enhanced Chain-of-Thought Framework.

Ruochen Zhao, Xingxuan Li, Shafiq Joty, Chengwei Qin, Lidong Bing. [abs], 2023.5

-

Language Model Cascades.

David Dohan, Winnie Xu, Aitor Lewkowycz, Jacob Austin, David Bieber, Raphael Gontijo Lopes, Yuhuai Wu, Henryk Michalewski, Rif A. Saurous, Jascha Sohl-dickstein, Kevin Murphy, Charles Sutton. [abs], 2022.7

-

Learn to Explain: Multimodal Reasoning via Thought Chains for Science Question Answering.

Pan Lu, Swaroop Mishra, Tony Xia, Liang Qiu, Kai-Wei Chang, Song-Chun Zhu, Oyvind Tafjord, Peter Clark, Ashwin Kalyan. [abs], 2022.9

-

Multimodal Analogical Reasoning over Knowledge Graphs.

Ningyu Zhang, Lei Li, Xiang Chen, Xiaozhuan Liang, Shumin Deng, Huajun Chen. [abs], 2022.10

-

Scaling Instruction-Finetuned Language Models.

Hyung Won Chung, Le Hou, Shayne Longpre, Barret Zoph, Yi Tay, William Fedus, Yunxuan Li, Xuezhi Wang, Mostafa Dehghani, Siddhartha Brahma, Albert Webson, Shixiang Shane Gu, Zhuyun Dai, Mirac Suzgun, Xinyun Chen, Aakanksha Chowdhery, Alex Castro-Ros, Marie Pellat, Kevin Robinson, Dasha Valter, Sharan Narang, Gaurav Mishra, Adams Yu, Vincent Zhao, Yanping Huang, Andrew Dai, Hongkun Yu, Slav Petrov, Ed H. Chi, Jeff Dean, Jacob Devlin, Adam Roberts, Denny Zhou, Quoc V. Le, Jason Wei. [abs], 2022.10

-

See, Think, Confirm: Interactive Prompting Between Vision and Language Models for Knowledge-based Visual Reasoning.

Zhenfang Chen, Qinhong Zhou, Yikang Shen, Yining Hong, Hao Zhang, Chuang Gan. [abs], 2023.1

-

Multimodal Chain-of-Thought Reasoning in Language Models.

Zhuosheng Zhang, Aston Zhang, Mu Li, Hai Zhao, George Karypis, Alex Smola. [abs], 2023.2

-

Language Is not All You Need: Aligning Perception with Language Models.

Shaohan Huang, Li Dong, Wenhui Wang, Yaru Hao, Saksham Singhal, Shuming Ma, Tengchao Lv, Lei Cui, Owais Khan Mohammed, Qiang Liu, Kriti Aggarwal, Zewen Chi, Johan Bjorck, Vishrav Chaudhary, Subhojit Som, Xia Song, Furu Wei. [abs], 2023.2

-

Visual ChatGPT: Talking, Drawing and Editing with Visual Foundation Models.

Chenfei Wu, Shengming Yin, Weizhen Qi, Xiaodong Wang, Zecheng Tang, Nan Duan. [abs], 2023.3

-

ViperGPT: Visual Inference via Python Execution for Reasoning.

Dídac Surís, Sachit Menon, Carl Vondrick. [abs], 2023.3

-

MM-REACT: Prompting ChatGPT for Multimodal Reasoning and Action.

Zhengyuan Yang, Linjie Li, Jianfeng Wang, Kevin Lin, Ehsan Azarnasab, Faisal Ahmed, Zicheng Liu, Ce Liu, Michael Zeng, Lijuan Wang. [abs], 2023.3

-

Boosting Theory-of-Mind Performance in Large Language Models via Prompting.

Shima Rahimi Moghaddam, Christopher J. Honey. [abs], 2023.4

-

Enhancing Reasoning Capabilities of LLMs via Principled Synthetic Logic Corpus.

Terufumi Morishita, Gaku Morio, Atsuki Yamaguchi, Yasuhiro Sogawa. [abs], 2023.11

-

Can language models learn from explanations in context?

Andrew K. Lampinen, Ishita Dasgupta, Stephanie C. Y. Chan, Kory Matthewson, Michael Henry Tessler, Antonia Creswell, James L. McClelland, Jane X. Wang, Felix Hill. [abs], 2022.4

-

Emergent Abilities of Large Language Models.

Jason Wei, Yi Tay, Rishi Bommasani, Colin Raffel, Barret Zoph, Sebastian Borgeaud, Dani Yogatama, Maarten Bosma, Denny Zhou, Donald Metzler, Ed H. Chi, Tatsunori Hashimoto, Oriol Vinyals, Percy Liang, Jeff Dean, William Fedus. [abs], 2022.6

-

Language models show human-like content effects on reasoning.

Ishita Dasgupta, Andrew K. Lampinen, Stephanie C. Y. Chan, Antonia Creswell, Dharshan Kumaran, James L. McClelland, Felix Hill. [abs], 2022.7

-

Rationale-Augmented Ensembles in Language Models.

Xuezhi Wang, Jason Wei, Dale Schuurmans, Quoc Le, Ed Chi, Denny Zhou. [abs], 2022.7

-

Can Large Language Models Truly Understand Prompts? A Case Study with Negated Prompts.

Joel Jang, Seonghyeon Ye, Minjoon Seo. [abs], 2022.9

-

Text and Patterns: For Effective Chain of Thought, It Takes Two to Tango.

Aman Madaan, Amir Yazdanbakhsh. [abs], 2022.9

-

Challenging BIG-Bench Tasks and Whether Chain-of-Thought Can Solve Them.

Mirac Suzgun, Nathan Scales, Nathanael Schärli, Sebastian Gehrmann, Yi Tay, Hyung Won Chung, Aakanksha Chowdhery, Quoc V. Le, Ed H. Chi, Denny Zhou, Jason Wei. [abs], 2022.10

-

Language Models are Greedy Reasoners: A Systematic Formal Analysis of Chain-of-thought.

Abulhair Saparov, He He. [abs], 2022.10

-

Knowledge Unlearning for Mitigating Privacy Risks in Language Models.

Joel Jang, Dongkeun Yoon, Sohee Yang, Sungmin Cha, Moontae Lee, Lajanugen Logeswaran, Minjoon Seo. [abs], 2022.10

-

Emergent Analogical Reasoning in Large Language Models.

Taylor Webb, Keith J. Holyoak, Hongjing Lu. [abs], 2022.12

-

Towards Understanding Chain-of-Thought Prompting: An Empirical Study of What Matters.

Boshi Wang, Sewon Min, Xiang Deng, Jiaming Shen, You Wu, Luke Zettlemoyer, Huan Sun. [abs], 2022.12

-

On Second Thought, Let’s Not Think Step by Step! Bias and Toxicity in Zero-Shot Reasoning.

Omar Shaikh, Hongxin Zhang, William Held, Michael Bernstein, Diyi Yang. [abs], 2022.12

-

Can Retriever-Augmented Language Models Reason? The Blame Game Between the Retriever and the Language Model.

Parishad BehnamGhader, Santiago Miret, Siva Reddy. [abs], 2022.12

-

Why Can GPT Learn In-Context? Language Models Secretly Perform Gradient Descent as Meta-Optimizers.

Damai Dai, Yutao Sun, Li Dong, Yaru Hao, Zhifang Sui, Furu Wei. [abs], 2022.12

-

Dissociating language and thought in large language models: a cognitive perspective.

Kyle Mahowald, Anna A. Ivanova, Idan A. Blank, Nancy Kanwisher, Joshua B. Tenenbaum, Evelina Fedorenko. [abs], 2023.1

-

Large Language Models Can Be Easily Distracted by Irrelevant Context.

Freda Shi, Xinyun Chen, Kanishka Misra, Nathan Scales, David Dohan, Ed Chi, Nathanael Schärli, Denny Zhou. [abs], 2023.2

-

A Multitask, Multilingual, Multimodal Evaluation of ChatGPT on Reasoning, Hallucination, and Interactivity.

Yejin Bang, Samuel Cahyawijaya, Nayeon Lee, Wenliang Dai, Dan Su, Bryan Wilie, Holy Lovenia, Ziwei Ji, Tiezheng Yu, Willy Chung, Quyet V. Do, Yan Xu, Pascale Fung. [abs], 2023.2

-

ChatGPT is a Knowledgeable but Inexperienced Solver: An Investigation of Commonsense Problem in Large Language Models.

Ning Bian, Xianpei Han, Le Sun, Hongyu Lin, Yaojie Lu, Ben He. [abs], 2023.3

-

Why think step-by-step? Reasoning emerges from the locality of experience.

Ben Prystawski, Noah D. Goodman. [abs], 2023.4

-

Learning Deductive Reasoning from Synthetic Corpus based on Formal Logic.

Terufumi Morishita, Gaku Morio, Atsuki Yamaguchi, Yasuhiro Sogawa. [abs], 2023.8

| Reasoning Skills | Benchmarks |

|---|---|

| Arithmetic Reasoning | GSM8K, SVAMP, ASDiv, AQuA-RAT, MAWPS, AddSub, MultiArith, SingleEq, SingleOp |

| Commonsense Reasoning | CommonsenseQA, StrategyQA, ARC, SayCan, BoolQ, HotpotQA, OpenBookQA, PIQA, WikiWhy |

| Symbolic Reasoning | Last Letter Concatenation, Coin Flip, Reverse List |

| Logical Reasoning | ProofWriter, EntailmentBank, RuleTaker, CLUTRR, FLD, FLDx2 |

| Multimodal Reasoning | SCIENCEQA |

| Others | BIG-bench, SCAN, Chain-of-Thought Hub, MR-BEN, WorFBench |

- ThoughtSource: A central, open resource for data and tools related to chain-of-thought reasoning in LLMs.

- LangChain: A library designed to help developers build applications using LLMs combined with other sources of computation or knowledge.

- LogiTorch: A PyTorch-based library for logical reasoning on natural language.

- λprompt: A library for building full prompt machines based on large LMs, including ones that self-edit to correct their own execution code and even write it themselves.

- Promptify: A prompt engineering library for solving NLP problems with LLMs and easily generating prompts for different NLP tasks with popular generative models like GPT, PaLM, and more.

- MiniChain: A tiny library for coding with large language models that aims to implement the core prompt chaining functionality.

- LlamaIndex: A project that provides a central interface to connect your LLMs with external data.

- EasyInstruct: A package for instructing Large Language Models (LLMs) like GPT-3 in your research experiments. It is designed to be easy to use and easy to extend.

- Add a new paper or update an existing paper, thinking about which category the work should belong to.

- Use the same format as existing entries to describe the work.

- Add the abstract link of the paper (`/abs/` format if it is an arXiv publication).

- A very brief explanation of why you think a paper should be added or updated is recommended.

Don't worry if you get something wrong; it will be fixed for you. Just contribute and promote your awesome work here!
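The entry format described in the guidelines above can be sketched as a small helper. This is only an illustration, not part of the repository: the function names `to_abs_link` and `format_entry` are hypothetical, the arXiv identifier `0000.00000` is a placeholder, and the Markdown link syntax around `[abs]` is an assumption about how entries are linked.

```python
import re
from typing import Sequence

# Matches new-style arXiv identifiers in either /abs/ or /pdf/ links,
# ignoring an optional version suffix (v2) and an optional .pdf extension.
ARXIV_ID = re.compile(r"arxiv\.org/(?:abs|pdf)/(\d{4}\.\d{4,5})(?:v\d+)?(?:\.pdf)?")


def to_abs_link(url: str) -> str:
    """Return the /abs/ form of an arXiv link; leave any other URL untouched."""
    match = ARXIV_ID.search(url)
    if match is None:
        return url
    return f"https://arxiv.org/abs/{match.group(1)}"


def format_entry(title: str, authors: Sequence[str], url: str,
                 year: int, month: int) -> str:
    """Format one paper as 'Title.' followed by 'Authors. [abs], YYYY.M'."""
    if not title.endswith((".", "?", "!")):
        title += "."  # existing entries end the title with punctuation
    author_line = f"{', '.join(authors)}. [abs]({to_abs_link(url)}), {year}.{month}"
    return f"{title}\n\n{author_line}"


if __name__ == "__main__":
    # Placeholder paper, for illustration only.
    print(format_entry("An Example Paper", ["A. Author", "B. Author"],
                       "https://arxiv.org/pdf/0000.00000v2.pdf", 2023, 5))
```

Choosing the category for the new entry remains a manual decision; the helper only normalizes the link and the layout.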

If you find this survey useful for your research, please consider citing:

@inproceedings{qiao-etal-2023-reasoning,

title = "Reasoning with Language Model Prompting: A Survey",

author = "Qiao, Shuofei and

Ou, Yixin and

Zhang, Ningyu and

Chen, Xiang and

Yao, Yunzhi and

Deng, Shumin and

Tan, Chuanqi and

Huang, Fei and

Chen, Huajun",

booktitle = "Proceedings of the 61st Annual Meeting of the Association for Computational Linguistics (Volume 1: Long Papers)",

month = jul,

year = "2023",

address = "Toronto, Canada",

publisher = "Association for Computational Linguistics",

url = "https://aclanthology.org/2023.acl-long.294",

pages = "5368--5393",

abstract = "Reasoning, as an essential ability for complex problem-solving, can provide back-end support for various real-world applications, such as medical diagnosis, negotiation, etc. This paper provides a comprehensive survey of cutting-edge research on reasoning with language model prompting. We introduce research works with comparisons and summaries and provide systematic resources to help beginners. We also discuss the potential reasons for emerging such reasoning abilities and highlight future research directions. Resources are available at https://github.com/zjunlp/Prompt4ReasoningPapers (updated periodically).",

}

For Tasks:

Click tags to check more tools for each tasksFor Jobs:

Alternative AI tools for Prompt4ReasoningPapers

Similar Open Source Tools

Prompt4ReasoningPapers

Prompt4ReasoningPapers is a repository dedicated to reasoning with language model prompting. It provides a comprehensive survey of cutting-edge research on reasoning abilities with language models. The repository includes papers, methods, analysis, resources, and tools related to reasoning tasks. It aims to support various real-world applications such as medical diagnosis, negotiation, etc.

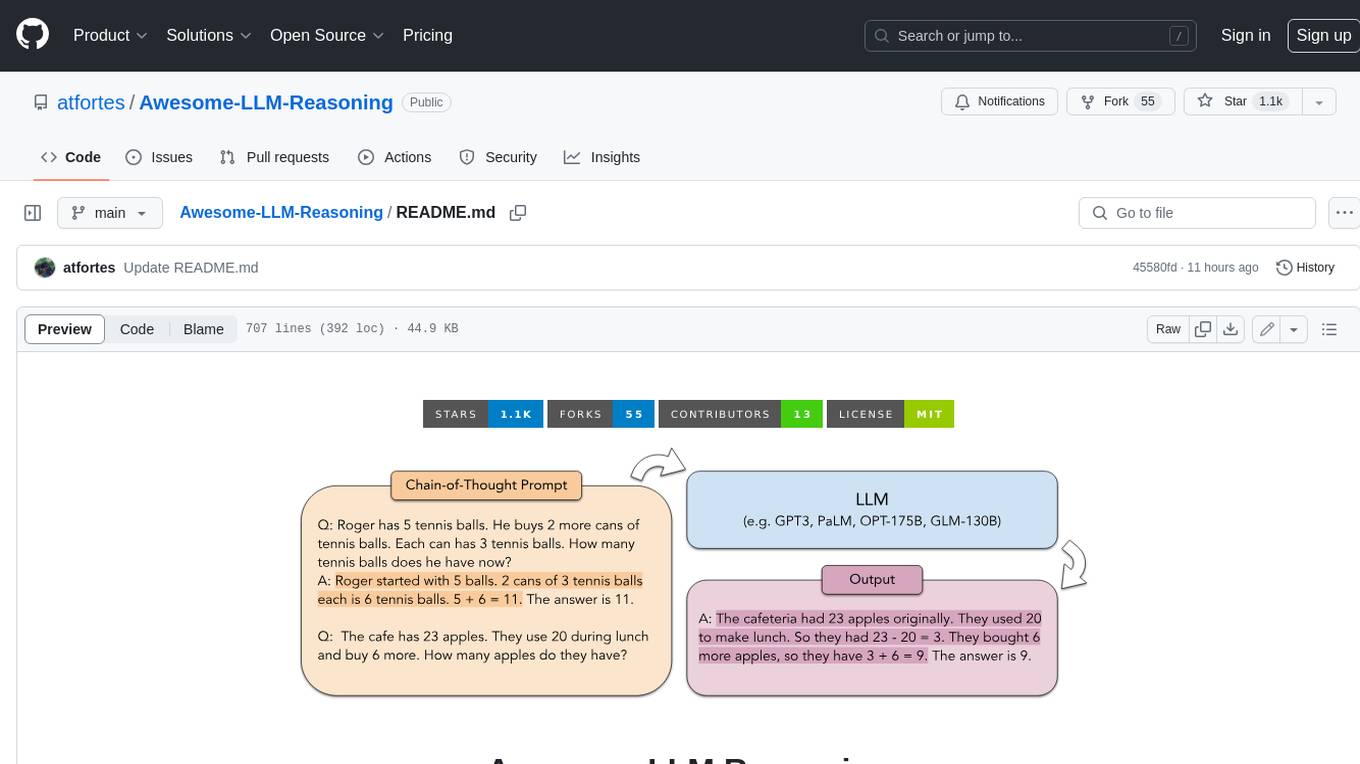

Awesome-LLM-Reasoning

**Curated collection of papers and resources on how to unlock the reasoning ability of LLMs and MLLMs.** **Description in less than 400 words, no line breaks and quotation marks.** Large Language Models (LLMs) have revolutionized the NLP landscape, showing improved performance and sample efficiency over smaller models. However, increasing model size alone has not proved sufficient for high performance on challenging reasoning tasks, such as solving arithmetic or commonsense problems. This curated collection of papers and resources presents the latest advancements in unlocking the reasoning abilities of LLMs and Multimodal LLMs (MLLMs). It covers various techniques, benchmarks, and applications, providing a comprehensive overview of the field. **5 jobs suitable for this tool, in lowercase letters.** - content writer - researcher - data analyst - software engineer - product manager **Keywords of the tool, in lowercase letters.** - llm - reasoning - multimodal - chain-of-thought - prompt engineering **5 specific tasks user can use this tool to do, in less than 3 words, Verb + noun form, in daily spoken language.** - write a story - answer a question - translate a language - generate code - summarize a document

awesome-llm-role-playing-with-persona

Awesome-llm-role-playing-with-persona is a curated list of resources for large language models for role-playing with assigned personas. It includes papers and resources related to persona-based dialogue systems, personalized response generation, psychology of LLMs, biases in LLMs, and more. The repository aims to provide a comprehensive collection of research papers and tools for exploring role-playing abilities of large language models in various contexts.

llm-self-correction-papers

This repository contains a curated list of papers focusing on the self-correction of large language models (LLMs) during inference. It covers various frameworks for self-correction, including intrinsic self-correction, self-correction with external tools, self-correction with information retrieval, and self-correction with training designed specifically for self-correction. The list includes survey papers, negative results, and frameworks utilizing reinforcement learning and OpenAI o1-like approaches. Contributions are welcome through pull requests following a specific format.

awesome-ai-llm4education

The 'awesome-ai-llm4education' repository is a curated list of papers related to artificial intelligence (AI) and large language models (LLMs) for education. It collects papers from top conferences, journals, and specialized domain-specific conferences, categorizing them by task for better organization. The repository covers a wide range of topics including tutoring, personalized learning, assessment, material preparation, specific scenarios like computer science, language, math, and medicine, aided teaching, as well as datasets and benchmarks for educational research.

Awesome-Multimodal-LLM-for-Code

This repository contains papers, methods, benchmarks, and evaluations for code generation under multimodal scenarios. It covers UI code generation, scientific code generation, slide code generation, visually rich programming, logo generation, program repair, UML code generation, and general benchmarks.

LLM-Synthetic-Data

LLM-Synthetic-Data is a repository focused on real-time, fine-grained LLM-Synthetic-Data generation. It includes methods, surveys, and application areas related to synthetic data for language models. The repository covers topics like pre-training, instruction tuning, model collapse, LLM benchmarking, evaluation, and distillation. It also explores application areas such as mathematical reasoning, code generation, text-to-SQL, alignment, reward modeling, long context, weak-to-strong generalization, agent and tool use, vision and language, factuality, federated learning, generative design, and safety.

AI-PhD-S24

AI-PhD-S24 is a mono-repo for the PhD course 'AI for Business Research' at CUHK Business School in Spring 2024. The course aims to provide a basic understanding of machine learning and artificial intelligence concepts/methods used in business research, showcase how ML/AI is utilized in business research, and introduce state-of-the-art AI/ML technologies. The course includes scribed lecture notes, class recordings, and covers topics like AI/ML fundamentals, DL, NLP, CV, unsupervised learning, and diffusion models.

Awesome-LLM-Strawberry

Awesome LLM Strawberry is a collection of research papers and blogs related to OpenAI Strawberry (o1) and reasoning. The repository is continuously updated to track the frontier of LLM reasoning.

awesome-deeplogic

Awesome deep logic is a curated list of papers and resources focusing on integrating symbolic logic into deep neural networks. It includes surveys, tutorials, and research papers that explore the intersection of logic and deep learning. The repository aims to provide valuable insights and knowledge on how logic can be used to enhance reasoning, knowledge regularization, weak supervision, and explainability in neural networks.

awesome-AI4MolConformation-MD

The 'awesome-AI4MolConformation-MD' repository focuses on protein conformations and molecular dynamics using generative artificial intelligence and deep learning. It provides resources, reviews, datasets, packages, and tools related to AI-driven molecular dynamics simulations. The repository covers a wide range of topics such as neural network potentials, force fields, AI engines/frameworks, trajectory analysis, visualization tools, and various AI-based models for protein conformational sampling. It serves as a comprehensive guide for researchers and practitioners interested in leveraging AI for studying molecular structures and dynamics.

Awesome-LLM-Watermark

This repository contains a collection of research papers related to watermarking techniques for text and images, specifically focusing on large language models (LLMs). The papers cover various aspects of watermarking LLM-generated content, including robustness, statistical understanding, topic-based watermarks, quality-detection trade-offs, dual watermarks, watermark collision, and more. Researchers have explored different methods and frameworks for watermarking LLMs to protect intellectual property, detect machine-generated text, improve generation quality, and evaluate watermarking techniques. The repository serves as a valuable resource for those interested in the field of watermarking for LLMs.

Knowledge-Conflicts-Survey

Knowledge Conflicts for LLMs: A Survey is a repository containing a survey paper that investigates three types of knowledge conflicts: context-memory conflict, inter-context conflict, and intra-memory conflict within Large Language Models (LLMs). The survey reviews the causes, behaviors, and possible solutions to these conflicts, providing a comprehensive analysis of the literature in this area. The repository includes detailed information on the types of conflicts, their causes, behavior analysis, and mitigating solutions, offering insights into how conflicting knowledge affects LLMs and how to address these conflicts.

awesome-tool-llm

This repository focuses on exploring tools that enhance the performance of language models for various tasks. It provides a structured list of literature relevant to tool-augmented language models, covering topics such as tool basics, tool use paradigm, scenarios, advanced methods, and evaluation. The repository includes papers, preprints, and books that discuss the use of tools in conjunction with language models for tasks like reasoning, question answering, mathematical calculations, accessing knowledge, interacting with the world, and handling non-textual modalities.

For similar tasks

Azure-Analytics-and-AI-Engagement

The Azure-Analytics-and-AI-Engagement repository provides packaged Industry Scenario DREAM Demos with ARM templates (containing a demo web application, Power BI reports, Synapse resources, AML Notebooks, etc.) that can be deployed in a customer’s subscription using the CAPE tool within a few hours. Partners can also deploy DREAM Demos in their own subscriptions using DPoC.

sorrentum

Sorrentum is an open-source project that aims to combine open-source development, startups, and brilliant students to build machine learning, AI, and Web3 / DeFi protocols geared towards finance and economics. The project provides opportunities for internships, research assistantships, and development grants, as well as the chance to work on cutting-edge problems, learn about startups, write academic papers, and get internships and full-time positions at companies working on Sorrentum applications.

tidb

TiDB is an open-source distributed SQL database that supports Hybrid Transactional and Analytical Processing (HTAP) workloads. It is MySQL compatible and features horizontal scalability, strong consistency, and high availability.

zep-python

Zep is an open-source platform for building and deploying large language model (LLM) applications. It provides a suite of tools and services that make it easy to integrate LLMs into your applications, including chat history memory, embedding, vector search, and data enrichment. Zep is designed to be scalable, reliable, and easy to use, making it a great choice for developers who want to build LLM-powered applications quickly and easily.

telemetry-airflow

This repository codifies the Airflow cluster that is deployed at workflow.telemetry.mozilla.org (behind SSO) and commonly referred to as "WTMO" or simply "Airflow". Some links relevant to users and developers of WTMO:

- The `dags` directory in this repository contains some custom DAG definitions
- Many of the DAGs registered with WTMO don't live in this repository, but are instead generated from ETL task definitions in bigquery-etl
- The Data SRE team maintains a WTMO Developer Guide (behind SSO)

mojo

Mojo is a new programming language that bridges the gap between research and production by combining Python syntax and ecosystem with systems programming and metaprogramming features. Mojo is still young, but it is designed to become a superset of Python over time.

pandas-ai

PandasAI is a Python library that makes it easy to ask questions to your data in natural language. It helps you to explore, clean, and analyze your data using generative AI.

databend

Databend is an open-source cloud data warehouse that serves as a cost-effective alternative to Snowflake. With its focus on fast query execution and data ingestion, it's designed for complex analysis of the world's largest datasets.

For similar jobs

weave

Weave is a toolkit for developing Generative AI applications, built by Weights & Biases. With Weave, you can log and debug language model inputs, outputs, and traces; build rigorous, apples-to-apples evaluations for language model use cases; and organize all the information generated across the LLM workflow, from experimentation to evaluations to production. Weave aims to bring rigor, best-practices, and composability to the inherently experimental process of developing Generative AI software, without introducing cognitive overhead.

LLMStack

LLMStack is a no-code platform for building generative AI agents, workflows, and chatbots. It allows users to connect their own data, internal tools, and GPT-powered models without any coding experience. LLMStack can be deployed to the cloud or on-premise and can be accessed via HTTP API or triggered from Slack or Discord.

VisionCraft

The VisionCraft API is a free API for using over 100 different AI models, from images to sound.

kaito

Kaito is an operator that automates the AI/ML inference model deployment in a Kubernetes cluster. It manages large model files using container images, avoids tuning deployment parameters to fit GPU hardware by providing preset configurations, auto-provisions GPU nodes based on model requirements, and hosts large model images in the public Microsoft Container Registry (MCR) if the license allows. Using Kaito, the workflow of onboarding large AI inference models in Kubernetes is largely simplified.

PyRIT

PyRIT is an open access automation framework designed to empower security professionals and ML engineers to red team foundation models and their applications. It automates AI Red Teaming tasks to allow operators to focus on more complicated and time-consuming tasks and can also identify security harms such as misuse (e.g., malware generation, jailbreaking), and privacy harms (e.g., identity theft). The goal is to give researchers a baseline of how well their model and entire inference pipeline are doing against different harm categories and to let them compare that baseline to future iterations of their model. This gives them empirical data on how well their model is doing today and lets them detect any degradation of performance in later iterations.

tabby

Tabby is a self-hosted AI coding assistant, offering an open-source and on-premises alternative to GitHub Copilot. It boasts several key features:

- Self-contained, with no need for a DBMS or cloud service.
- OpenAPI interface, easy to integrate with existing infrastructure (e.g., Cloud IDE).
- Supports consumer-grade GPUs.

spear

SPEAR (Simulator for Photorealistic Embodied AI Research) is a powerful tool for training embodied agents. It features 300 unique virtual indoor environments with 2,566 unique rooms and 17,234 unique objects that can be manipulated individually. Each environment is designed by a professional artist and features detailed geometry, photorealistic materials, and a unique floor plan and object layout. SPEAR is implemented as Unreal Engine assets and provides an OpenAI Gym interface for interacting with the environments via Python.

Magick

Magick is a groundbreaking visual AIDE (Artificial Intelligence Development Environment) for no-code data pipelines and multimodal agents. Magick can connect to other services and comes with nodes and templates well-suited for intelligent agents, chatbots, complex reasoning systems and realistic characters.