Awesome-LLM-Watermark

Up-to-date list of LLM watermark papers. 🔥🔥🔥

Stars: 212

This repository contains a collection of research papers related to watermarking techniques for text and images, specifically focusing on large language models (LLMs). The papers cover various aspects of watermarking LLM-generated content, including robustness, statistical understanding, topic-based watermarks, quality-detection trade-offs, dual watermarks, watermark collision, and more. Researchers have explored different methods and frameworks for watermarking LLMs to protect intellectual property, detect machine-generated text, improve generation quality, and evaluate watermarking techniques. The repository serves as a valuable resource for those interested in the field of watermarking for LLMs.
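Several of the listed papers build on or attack the green-list scheme from "A Watermark for Large Language Models" (Kirchenbauer et al., ICML 2023). The Python sketch below is a simplified illustration of that idea, not the authors' code. The previous token and a secret key seed a pseudo-random split of the vocabulary, generation adds a bias δ to the logits of "green" tokens, and detection counts green tokens and applies a one-proportion z-test, z = (|s|_G − γT) / √(Tγ(1 − γ)). The toy vocabulary size, the key, γ = 0.25, δ = 2.0, SHA-256 seeding, and the uniform toy "model" are all assumptions chosen for illustration.

```python
import hashlib
import math
import random

VOCAB_SIZE = 1000     # toy vocabulary size (assumption)
GAMMA = 0.25          # fraction of the vocabulary on each green list
DELTA = 2.0           # logit bonus added to green tokens
KEY = "secret-key"    # hypothetical watermark key


def green_list(prev_token: int) -> set[int]:
    """Keyed pseudo-random green list, seeded by the previous token."""
    digest = hashlib.sha256(f"{KEY}:{prev_token}".encode()).digest()
    rng = random.Random(int.from_bytes(digest[:8], "big"))
    ids = list(range(VOCAB_SIZE))
    rng.shuffle(ids)
    return set(ids[: int(GAMMA * VOCAB_SIZE)])


def watermarked_sample(logits: list[float], prev_token: int, rng: random.Random) -> int:
    """Add DELTA to green-token logits, then sample from the resulting softmax."""
    green = green_list(prev_token)
    biased = [x + DELTA if i in green else x for i, x in enumerate(logits)]
    top = max(biased)
    weights = [math.exp(b - top) for b in biased]  # subtract max for numerical stability
    return rng.choices(range(VOCAB_SIZE), weights=weights, k=1)[0]


def z_score(tokens: list[int]) -> float:
    """One-proportion z-test on the green-token count; needs the key, not the model."""
    t = len(tokens) - 1  # the first token has no predecessor to seed a green list
    hits = sum(tok in green_list(prev) for prev, tok in zip(tokens, tokens[1:]))
    return (hits - GAMMA * t) / math.sqrt(t * GAMMA * (1 - GAMMA))


rng = random.Random(0)
flat_logits = [0.0] * VOCAB_SIZE  # uniform toy "model"
tokens = [0]                      # arbitrary prompt token
for _ in range(200):
    tokens.append(watermarked_sample(flat_logits, tokens[-1], rng))

unmarked = [rng.randrange(VOCAB_SIZE) for _ in range(201)]  # text produced without the key
print(round(z_score(tokens), 1), round(z_score(unmarked), 1))
```

With these toy settings the watermarked sequence should land well above the z = 4 threshold used in the paper, while the random sequence stays near zero. Larger δ makes detection easier but distorts the model's distribution more, which is the quality-detection trade-off several entries below study.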

README:

This repo collects papers on watermarking for text and images.

-

Is Watermarking LLM-Generated Code Robust? ICLR 2024 (Tiny Papers).

- Tarun Suresh, Shubham Ugare, Gagandeep Singh, Sasa Misailovic

-

Towards Better Statistical Understanding of Watermarking LLMs. Preprint.

- Zhongze Cai, Shang Liu, Hanzhao Wang, Huaiyang Zhong, Xiaocheng Li

-

Topic-based Watermarks for LLM-Generated Text. Preprint.

- Alexander Nemecek, Yuzhou Jiang, Erman Ayday

-

A Statistical Framework of Watermarks for Large Language Models: Pivot, Detection Efficiency and Optimal Rules. Preprint.

- Xiang Li, Feng Ruan, Huiyuan Wang, Qi Long, Weijie J. Su

-

WaterJudge: Quality-Detection Trade-off when Watermarking Large Language Models. Preprint.

- Piotr Molenda, Adian Liusie, Mark J. F. Gales

-

Duwak: Dual Watermarks in Large Language Models. Preprint.

- Chaoyi Zhu, Jeroen Galjaard, Pin-Yu Chen, Lydia Y. Chen

-

Lost in Overlap: Exploring Watermark Collision in LLMs. Preprint.

- Yiyang Luo, Ke Lin, Chao Gu

-

WaterMax: breaking the LLM watermark detectability-robustness-quality trade-off. Preprint.

- Eva Giboulot, Teddy Furon

-

WARDEN: Multi-Directional Backdoor Watermarks for Embedding-as-a-Service Copyright Protection. Preprint.

- Anudeex Shetty, Yue Teng, Ke He, Qiongkai Xu

-

EmMark: Robust Watermarks for IP Protection of Embedded Quantized Large Language Models. Preprint.

- Ruisi Zhang, Farinaz Koushanfar

-

Token-Specific Watermarking with Enhanced Detectability and Semantic Coherence for Large Language Models. Preprint.

- Mingjia Huo, Sai Ashish Somayajula, Youwei Liang, Ruisi Zhang, Farinaz Koushanfar, Pengtao Xie

-

Attacking LLM Watermarks by Exploiting Their Strengths. Preprint.

- Qi Pang, Shengyuan Hu, Wenting Zheng, Virginia Smith

-

Multi-Bit Distortion-Free Watermarking for Large Language Models. Preprint.

- Massieh Kordi Boroujeny, Ya Jiang, Kai Zeng, Brian Mark

- https://arxiv.org/abs/2402.16578

-

Watermarking Makes Language Models Radioactive. Preprint.

- Tom Sander, Pierre Fernandez, Alain Durmus, Matthijs Douze, Teddy Furon

-

Can Watermarks Survive Translation? On the Cross-lingual Consistency of Text Watermark for Large Language Models. Preprint.

- Zhiwei He, Binglin Zhou, Hongkun Hao, Aiwei Liu, Xing Wang, Zhaopeng Tu, Zhuosheng Zhang, Rui Wang

-

GumbelSoft: Diversified Language Model Watermarking via the GumbelMax-trick. Preprint.

- Jiayi Fu, Xuandong Zhao, Ruihan Yang, Yuansen Zhang, Jiangjie Chen, Yanghua Xiao

-

k-SemStamp: A Clustering-Based Semantic Watermark for Detection of Machine-Generated Text. Preprint.

- Abe Bohan Hou, Jingyu Zhang, Yichen Wang, Daniel Khashabi, Tianxing He

-

Proving membership in LLM pretraining data via data watermarks. Preprint.

- Johnny Tian-Zheng Wei, Ryan Yixiang Wang, Robin Jia

-

Permute-and-Flip: An optimally robust and watermarkable decoder for LLMs. Preprint.

- Xuandong Zhao, Lei Li, Yu-Xiang Wang

- https://arxiv.org/abs/2402.05864

-

Provably Robust Multi-bit Watermarking for AI-generated Text via Error Correction Code. Preprint.

- Wenjie Qu, Dong Yin, Zixin He, Wei Zou, Tianyang Tao, Jinyuan Jia, Jiaheng Zhang

- https://arxiv.org/abs/2401.16820

-

Instructional Fingerprinting of Large Language Models. Preprint.

- Jiashu Xu, Fei Wang, Mingyu Derek Ma, Pang Wei Koh, Chaowei Xiao, Muhao Chen

- https://arxiv.org/abs/2401.12255

-

Adaptive Text Watermark for Large Language Models. Preprint.

- Yepeng Liu, Yuheng Bu

- https://arxiv.org/abs/2401.13927

-

Excuse me, sir? Your language model is leaking (information). Preprint.

- Or Zamir

-

Cross-Attention Watermarking of Large Language Models. ICASSP 2024.

- Folco Bertini Baldassini, Huy H. Nguyen, Ching-Chung Chang, Isao Echizen

-

Optimizing watermarks for large language models. Preprint.

- Bram Wouters

-

Towards Optimal Statistical Watermarking. Preprint.

- Baihe Huang, Banghua Zhu, Hanlin Zhu, Jason D. Lee, Jiantao Jiao, Michael I. Jordan

-

A Survey of Text Watermarking in the Era of Large Language Models. Preprint. Survey paper.

- Aiwei Liu, Leyi Pan, Yijian Lu, Jingjing Li, Xuming Hu, Lijie Wen, Irwin King, Philip S. Yu

-

On the Learnability of Watermarks for Language Models. Preprint.

- Chenchen Gu, Xiang Lisa Li, Percy Liang, Tatsunori Hashimoto

-

New Evaluation Metrics Capture Quality Degradation due to LLM Watermarking. Preprint.

- Karanpartap Singh, James Zou

-

Mark My Words: Analyzing and Evaluating Language Model Watermarks. Preprint.

- Julien Piet, Chawin Sitawarin, Vivian Fang, Norman Mu, David Wagner

-

I Know You Did Not Write That! A Sampling Based Watermarking Method for Identifying Machine Generated Text. Preprint.

- Kaan Efe Keleş, Ömer Kaan Gürbüz, Mucahid Kutlu

-

Improving the Generation Quality of Watermarked Large Language Models via Word Importance Scoring. Preprint.

- Yuhang Li, Yihan Wang, Zhouxing Shi, Cho-Jui Hsieh

- https://arxiv.org/abs/2311.09668

-

Performance Trade-offs of Watermarking Large Language Models. Preprint.

- Anirudh Ajith, Sameer Singh, Danish Pruthi

- https://arxiv.org/abs/2311.09816

-

X-Mark: Towards Lossless Watermarking Through Lexical Redundancy. Preprint.

- Liang Chen, Yatao Bian, Yang Deng, Shuaiyi Li, Bingzhe Wu, Peilin Zhao, Kam-fai Wong

- https://arxiv.org/abs/2311.09832

-

WaterBench: Towards Holistic Evaluation of Watermarks for Large Language Models. ACL 2024.

- Shangqing Tu, Yuliang Sun, Yushi Bai, Jifan Yu, Lei Hou, Juanzi Li

- https://arxiv.org/abs/2311.07138

- Benchmark dataset

-

Watermarks in the Sand: Impossibility of Strong Watermarking for Generative Models. Preprint.

- Hanlin Zhang, Benjamin L. Edelman, Danilo Francati, Daniele Venturi, Giuseppe Ateniese, Boaz Barak

-

REMARK-LLM: A Robust and Efficient Watermarking Framework for Generative Large Language Models. Preprint.

- Ruisi Zhang, Shehzeen Samarah Hussain, Paarth Neekhara, Farinaz Koushanfar

- https://arxiv.org/abs/2310.12362

-

Embarrassingly Simple Text Watermarks. Preprint.

- Ryoma Sato, Yuki Takezawa, Han Bao, Kenta Niwa, Makoto Yamada

- https://arxiv.org/abs/2310.08920

-

Necessary and Sufficient Watermark for Large Language Models. Preprint.

- Yuki Takezawa, Ryoma Sato, Han Bao, Kenta Niwa, Makoto Yamada

- https://arxiv.org/abs/2310.00833

-

Functional Invariants to Watermark Large Transformers. Preprint.

- Pierre Fernandez, Guillaume Couairon, Teddy Furon, Matthijs Douze

- https://arxiv.org/abs/2310.11446

-

Watermarking LLMs with Weight Quantization. Findings of EMNLP 2023.

- Linyang Li, Botian Jiang, Pengyu Wang, Ke Ren, Hang Yan, Xipeng Qiu

- https://arxiv.org/abs/2310.11237

-

DiPmark: A Stealthy, Efficient and Resilient Watermark for Large Language Models. Preprint.

- Yihan Wu, Zhengmian Hu, Hongyang Zhang, Heng Huang

- https://arxiv.org/abs/2310.07710

-

A Semantic Invariant Robust Watermark for Large Language Models. Preprint.

- Aiwei Liu, Leyi Pan, Xuming Hu, Shiao Meng, Lijie Wen

- https://arxiv.org/abs/2310.06356

-

SemStamp: A Semantic Watermark with Paraphrastic Robustness for Text Generation. Preprint.

- Abe Bohan Hou, Jingyu Zhang, Tianxing He, Yichen Wang, Yung-Sung Chuang, Hongwei Wang, Lingfeng Shen, Benjamin Van Durme, Daniel Khashabi, Yulia Tsvetkov

- https://arxiv.org/abs/2310.03991

-

Advancing Beyond Identification: Multi-bit Watermark for Language Models. Preprint.

- KiYoon Yoo, Wonhyuk Ahn, Nojun Kwak.

- https://arxiv.org/abs/2308.00221

-

Three Bricks to Consolidate Watermarks for Large Language Models. Preprint.

- Pierre Fernandez, Antoine Chaffin, Karim Tit, Vivien Chappelier, Teddy Furon.

- https://arxiv.org/abs/2308.00113

-

Towards Codable Text Watermarking for Large Language Models. Preprint.

- Lean Wang, Wenkai Yang, Deli Chen, Hao Zhou, Yankai Lin, Fandong Meng, Jie Zhou, Xu Sun.

- https://arxiv.org/abs/2307.15992

-

A Private Watermark for Large Language Models. Preprint.

- Aiwei Liu, Leyi Pan, Xuming Hu, Shu'ang Li, Lijie Wen, Irwin King, Philip S. Yu.

- https://arxiv.org/abs/2307.16230

-

Robust Distortion-free Watermarks for Language Models. Preprint.

- Rohith Kuditipudi, John Thickstun, Tatsunori Hashimoto, Percy Liang.

- https://arxiv.org/abs/2307.15593

-

Watermarking Conditional Text Generation for AI Detection: Unveiling Challenges and a Semantic-Aware Watermark Remedy. Preprint.

- Yu Fu, Deyi Xiong, Yue Dong.

- https://arxiv.org/abs/2307.13808

-

Provable Robust Watermarking for AI-Generated Text. Preprint.

- Xuandong Zhao, Prabhanjan Ananth, Lei Li, Yu-Xiang Wang.

- https://arxiv.org/abs/2306.17439

-

On the Reliability of Watermarks for Large Language Models. Preprint.

- John Kirchenbauer, Jonas Geiping, Yuxin Wen, Manli Shu, Khalid Saifullah, Kezhi Kong, Kasun Fernando, Aniruddha Saha, Micah Goldblum, Tom Goldstein.

- https://arxiv.org/abs/2306.04634

-

Undetectable Watermarks for Language Models. Preprint.

- Miranda Christ, Sam Gunn, Or Zamir.

- https://arxiv.org/abs/2306.09194

-

Watermarking Text Data on Large Language Models for Dataset Copyright Protection. Preprint.

- Yixin Liu, Hongsheng Hu, Xuyun Zhang, Lichao Sun.

- https://arxiv.org/abs/2305.13257

-

Baselines for Identifying Watermarked Large Language Models. Preprint.

- Leonard Tang, Gavin Uberti, Tom Shlomi.

- https://arxiv.org/abs/2305.18456

-

Who Wrote this Code? Watermarking for Code Generation. Preprint.

- Taehyun Lee, Seokhee Hong, Jaewoo Ahn, Ilgee Hong, Hwaran Lee, Sangdoo Yun, Jamin Shin, Gunhee Kim.

- https://arxiv.org/abs/2305.15060

-

Robust Multi-bit Natural Language Watermarking through Invariant Features. ACL 2023.

- KiYoon Yoo, Wonhyuk Ahn, Jiho Jang, Nojun Kwak.

- https://arxiv.org/abs/2305.01904

-

Are You Copying My Model? Protecting the Copyright of Large Language Models for EaaS via Backdoor Watermark. ACL 2023.

- Wenjun Peng, Jingwei Yi, Fangzhao Wu, Shangxi Wu, Bin Zhu, Lingjuan Lyu, Binxing Jiao, Tong Xu, Guangzhong Sun, Xing Xie.

- https://arxiv.org/abs/2305.10036

-

Watermarking Text Generated by Black-Box Language Models. Preprint.

- Xi Yang, Kejiang Chen, Weiming Zhang, Chang Liu, Yuang Qi, Jie Zhang, Han Fang, Nenghai Yu.

- https://arxiv.org/abs/2305.08883

-

Protecting Language Generation Models via Invisible Watermarking. ICML 2023.

- Xuandong Zhao, Yu-Xiang Wang, Lei Li.

- https://arxiv.org/abs/2302.03162

-

A Watermark for Large Language Models. ICML 2023. Outstanding Paper Award.

- John Kirchenbauer, Jonas Geiping, Yuxin Wen, Jonathan Katz, Ian Miers, Tom Goldstein.

- https://arxiv.org/abs/2301.10226

-

Distillation-Resistant Watermarking for Model Protection in NLP. EMNLP 2022.

- Xuandong Zhao, Lei Li, Yu-Xiang Wang.

- https://arxiv.org/abs/2210.03312

-

CATER: Intellectual Property Protection on Text Generation APIs via Conditional Watermarks. NeurIPS 2022.

- Xuanli He, Qiongkai Xu, Yi Zeng, Lingjuan Lyu, Fangzhao Wu, Jiwei Li, Ruoxi Jia.

- https://arxiv.org/abs/2209.08773

-

Adversarial Watermarking Transformer: Towards Tracing Text Provenance with Data Hiding. IEEE S&P 2021.

- Sahar Abdelnabi, Mario Fritz.

- https://arxiv.org/abs/2009.03015

-

Watermarking GPT Outputs. Slides, 2023.

- Scott Aaronson, Hendrik Kirchner

- https://www.scottaaronson.com/talks/watermark.ppt

-

Watermarking the Outputs of Structured Prediction with an Application in Statistical Machine Translation. EMNLP 2011.

- Ashish Venugopal, Jakob Uszkoreit, David Talbot, Franz Och, Juri Ganitkevitch.

- https://aclanthology.org/D11-1126/

-

Flexible and Secure Watermarking for Latent Diffusion Model. ACM MM 2023.

- Cheng Xiong, Chuan Qin, Guorui Feng, Xinpeng Zhang

- https://dl.acm.org/doi/abs/10.1145/3581783.3612448

-

Leveraging Optimization for Adaptive Attacks on Image Watermarks. Preprint.

- Nils Lukas, Abdulrahman Diaa, Lucas Fenaux, Florian Kerschbaum

- https://arxiv.org/abs/2309.16952

-

Catch You Everything Everywhere: Guarding Textual Inversion via Concept Watermarking. Preprint.

- Weitao Feng, Jiyan He, Jie Zhang, Tianwei Zhang, Wenbo Zhou, Weiming Zhang, Nenghai Yu

- https://arxiv.org/abs/2309.05940

-

Hey That's Mine Imperceptible Watermarks are Preserved in Diffusion Generated Outputs. Preprint.

- Luke Ditria, Tom Drummond

- https://arxiv.org/abs/2308.11123

-

Generative Watermarking Against Unauthorized Subject-Driven Image Synthesis. Preprint.

- Yihan Ma, Zhengyu Zhao, Xinlei He, Zheng Li, Michael Backes, Yang Zhang

- https://arxiv.org/abs/2306.07754

-

Invisible Image Watermarks Are Provably Removable Using Generative AI. Preprint.

- Xuandong Zhao, Kexun Zhang, Zihao Su, Saastha Vasan, Ilya Grishchenko, Christopher Kruegel, Giovanni Vigna, Yu-Xiang Wang, Lei Li.

- https://arxiv.org/abs/2306.01953

-

Tree-Ring Watermarks: Fingerprints for Diffusion Images that are Invisible and Robust. Preprint.

- Yuxin Wen, John Kirchenbauer, Jonas Geiping, Tom Goldstein.

- https://arxiv.org/abs/2305.20030

-

Evading Watermark based Detection of AI-Generated Content. CCS 2023.

- Zhengyuan Jiang, Jinghuai Zhang, Neil Zhenqiang Gong.

- https://arxiv.org/abs/2305.03807

-

The Stable Signature: Rooting Watermarks in Latent Diffusion Models. ICCV 2023.

- Pierre Fernandez, Guillaume Couairon, Hervé Jégou, Matthijs Douze, Teddy Furon.

- https://arxiv.org/abs/2303.15435

-

Watermarking Images in Self-Supervised Latent Spaces. ICASSP 2022.

- Pierre Fernandez, Alexandre Sablayrolles, Teddy Furon, Hervé Jégou, Matthijs Douze.

- https://arxiv.org/abs/2112.09581
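The Gumbel-max watermark from Aaronson and Kirchner's "Watermarking GPT Outputs" slides (revisited by GumbelSoft in the list above) takes a different route from logit biasing. It derives keyed pseudo-random numbers r_i from the recent context and emits argmax_i r_i^(1/p_i), which, over a random key, is distributed exactly like sampling from p. A detector that holds the key averages −ln(1 − r_{x_t}), whose terms have mean 1 on text generated without the key. The Python sketch below is an illustration under assumptions, not either paper's implementation: the key, the 2-token context window, SHA-256 seeding, and the uniform toy distribution are all hypothetical.

```python
import hashlib
import math
import random

KEY = "secret-key"  # hypothetical watermark key
WINDOW = 2          # number of previous tokens that seed the pseudo-random numbers
V = 50              # toy vocabulary size


def pseudo_uniforms(context: tuple[int, ...]) -> list[float]:
    """Keyed pseudo-random r_i in [0, 1), one per vocabulary item, seeded by the context."""
    digest = hashlib.sha256(f"{KEY}:{context}".encode()).digest()
    rng = random.Random(int.from_bytes(digest[:8], "big"))
    return [rng.random() for _ in range(V)]


def gumbel_sample(probs: list[float], context: tuple[int, ...]) -> int:
    """Return argmax_i r_i ** (1 / p_i), computed as the argmax of log(r_i) / p_i."""
    r = pseudo_uniforms(context)
    best, best_score = 0, -math.inf
    for i, (ri, pi) in enumerate(zip(r, probs)):
        if pi > 0 and ri > 0:  # zero-probability tokens can never be chosen
            score = math.log(ri) / pi
            if score > best_score:
                best, best_score = i, score
    return best


def detection_score(tokens: list[int]) -> float:
    """Mean of -ln(1 - r_{x_t}); about 1 for text produced without the key."""
    terms = [
        -math.log(1 - pseudo_uniforms(tuple(tokens[t - WINDOW:t]))[tokens[t]])
        for t in range(WINDOW, len(tokens))
    ]
    return sum(terms) / len(terms)


probs = [1 / V] * V   # uniform toy "model" (maximum entropy)
tokens = [0, 1]       # arbitrary prompt tokens
for _ in range(100):
    tokens.append(gumbel_sample(probs, tuple(tokens[-WINDOW:])))

rng = random.Random(1)
unmarked = [rng.randrange(V) for _ in range(102)]  # text produced without the key
print(round(detection_score(tokens), 2), round(detection_score(unmarked), 2))
```

Because the sampler is deterministic given the context window, repeated contexts produce repeated continuations. The lack of diversity this causes is the issue the GumbelSoft entry targets.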

To add a paper, first decide which category the work belongs to.

Second, describe it in the same format as the other entries.


Alternative AI tools for Awesome-LLM-Watermark

Similar Open Source Tools


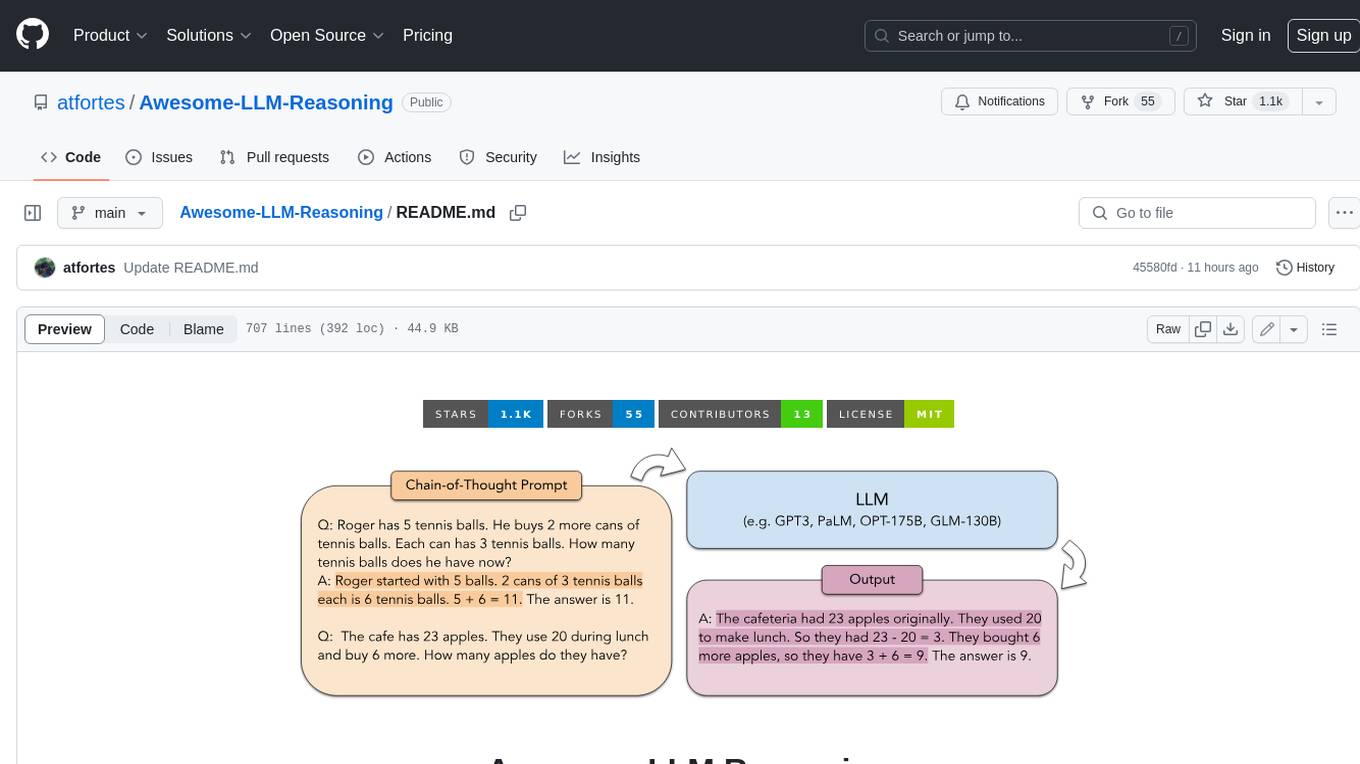

Awesome-LLM-Reasoning

Curated collection of papers and resources on how to unlock the reasoning ability of LLMs and MLLMs. Large Language Models (LLMs) have revolutionized the NLP landscape, showing improved performance and sample efficiency over smaller models. However, increasing model size alone has not proved sufficient for high performance on challenging reasoning tasks, such as solving arithmetic or commonsense problems. This collection presents the latest advances in unlocking the reasoning abilities of LLMs and Multimodal LLMs (MLLMs), covering techniques, benchmarks, and applications. Keywords: llm, reasoning, multimodal, chain-of-thought, prompt engineering.

awesome-llm-role-playing-with-persona

Awesome-llm-role-playing-with-persona is a curated list of resources for large language models for role-playing with assigned personas. It includes papers and resources related to persona-based dialogue systems, personalized response generation, psychology of LLMs, biases in LLMs, and more. The repository aims to provide a comprehensive collection of research papers and tools for exploring role-playing abilities of large language models in various contexts.

Prompt4ReasoningPapers

Prompt4ReasoningPapers is a repository dedicated to reasoning with language model prompting. It provides a comprehensive survey of cutting-edge research on reasoning abilities with language models. The repository includes papers, methods, analysis, resources, and tools related to reasoning tasks. It aims to support various real-world applications such as medical diagnosis, negotiation, etc.

Awesome-LLM-Reasoning-Openai-o1-Survey

The repository 'Awesome LLM Reasoning Openai-o1 Survey' provides a collection of survey papers and related works on OpenAI o1, focusing on topics such as LLM reasoning, self-play reinforcement learning, complex logic reasoning, and scaling law. It includes papers from various institutions and researchers, showcasing advancements in reasoning bootstrapping, reasoning scaling law, self-play learning, step-wise and process-based optimization, and applications beyond math. The repository serves as a valuable resource for researchers interested in exploring the intersection of language models and reasoning techniques.

LLMAgentPapers

LLM Agents Papers is a repository containing must-read papers on Large Language Model Agents. It covers a wide range of topics related to language model agents, including interactive natural language processing, large language model-based autonomous agents, personality traits in large language models, memory enhancements, planning capabilities, tool use, multi-agent communication, and more. The repository also provides resources such as benchmarks, types of tools, and a tool list for building and evaluating language model agents. Contributors are encouraged to add important works to the repository.

LLM-as-a-Judge

LLM-as-a-Judge is a repository that includes papers discussed in a survey paper titled 'A Survey on LLM-as-a-Judge'. The repository covers various aspects of using Large Language Models (LLMs) as judges for tasks such as evaluation, reasoning, and decision-making. It provides insights into evaluation pipelines, improvement strategies, and specific tasks related to LLMs. The papers included in the repository explore different methodologies, applications, and future research directions for leveraging LLMs as evaluators in various domains.

LLM4DB

LLM4DB is a repository focused on the intersection of Large Language Models (LLMs) and Database technologies. It covers various aspects such as data processing, data analysis, database optimization, and data management for LLMs. The repository includes research papers, tools, and techniques related to leveraging LLMs for tasks like data cleaning, entity matching, schema matching, data discovery, NL2SQL, data exploration, data visualization, knob tuning, query optimization, and database diagnosis.

LLM4DB

LLM4DB is a repository focused on the intersection of Large Language Models (LLM) and Database technologies. It covers various aspects such as data processing, data analysis, database optimization, and data management for LLM. The repository includes works on data cleaning, entity matching, schema matching, data discovery, NL2SQL, data exploration, data visualization, configuration tuning, query optimization, and anomaly diagnosis using LLMs. It aims to provide insights and advancements in leveraging LLMs for improving data processing, analysis, and database management tasks.

awesome-ai-llm4education

The 'awesome-ai-llm4education' repository is a curated list of papers related to artificial intelligence (AI) and large language models (LLMs) for education. It collects papers from top conferences, journals, and domain-specific venues, organized by task. The repository covers tutoring, personalized learning, assessment, material preparation, specific subjects such as computer science, language, math, and medicine, aided teaching, and datasets and benchmarks for educational research.

llm-self-correction-papers

This repository contains a curated list of papers focusing on the self-correction of large language models (LLMs) during inference. It covers various frameworks for self-correction, including intrinsic self-correction, self-correction with external tools, self-correction with information retrieval, and self-correction with training designed specifically for self-correction. The list includes survey papers, negative results, and frameworks utilizing reinforcement learning and OpenAI o1-like approaches. Contributions are welcome through pull requests following a specific format.

Awesome-Multimodal-LLM-for-Code

This repository contains papers, methods, benchmarks, and evaluations for code generation under multimodal scenarios. It covers UI code generation, scientific code generation, slide code generation, visually rich programming, logo generation, program repair, UML code generation, and general benchmarks.

Awesome-LLM-Preference-Learning

The repository 'Awesome-LLM-Preference-Learning' is the official repository of a survey paper titled 'Towards a Unified View of Preference Learning for Large Language Models: A Survey'. It contains a curated list of papers related to preference learning for Large Language Models (LLMs). The repository covers various aspects of preference learning, including on-policy and off-policy methods, feedback mechanisms, reward models, algorithms, evaluation techniques, and more. The papers included in the repository explore different approaches to aligning LLMs with human preferences, improving mathematical reasoning in LLMs, enhancing code generation, and optimizing language model performance.

AI-PhD-S24

AI-PhD-S24 is a mono-repo for the PhD course 'AI for Business Research' at CUHK Business School in Spring 2024. The course aims to provide a basic understanding of machine learning and artificial intelligence concepts/methods used in business research, showcase how ML/AI is utilized in business research, and introduce state-of-the-art AI/ML technologies. The course includes scribed lecture notes, class recordings, and covers topics like AI/ML fundamentals, DL, NLP, CV, unsupervised learning, and diffusion models.

For similar tasks


non-ai-licenses

This repository provides templates for software and digital work licenses that restrict usage in AI training datasets or AI technologies. It includes various license styles such as Apache, BSD, MIT, UPL, ISC, CC0, and MPL-2.0.

For similar jobs

LLM-and-Law

This repository is dedicated to summarizing papers related to large language models with the field of law. It includes applications of large language models in legal tasks, legal agents, legal problems of large language models, data resources for large language models in law, law LLMs, and evaluation of large language models in the legal domain.

start-llms

This repository is a comprehensive guide for individuals looking to start and improve their skills in Large Language Models (LLMs) without an advanced background in the field. It provides free resources, online courses, books, articles, and practical tips to become an expert in machine learning. The guide covers topics such as terminology, transformers, prompting, retrieval augmented generation (RAG), and more. It also includes recommendations for podcasts, YouTube videos, and communities to stay updated with the latest news in AI and LLMs.

aiverify

AI Verify is an AI governance testing framework and software toolkit that validates the performance of AI systems against internationally recognised principles through standardised tests. It offers a new API Connector feature to bypass size limitations, test various AI frameworks, and configure connection settings for batch requests. The toolkit operates within an enterprise environment, conducting technical tests on common supervised learning models for tabular and image datasets. It does not define AI ethical standards or guarantee complete safety from risks or biases.


LLM-LieDetector

This repository contains code for reproducing experiments on lie detection in black-box LLMs by asking unrelated questions. It includes Q/A datasets, prompts, and fine-tuning datasets for generating lies with language models. The lie detectors rely on asking binary 'elicitation questions' to diagnose whether the model has lied. The code covers generating lies from language models, training and testing lie detectors, and generalization experiments. It requires access to GPUs and OpenAI API calls for running experiments with open-source models. Results are stored in the repository for reproducibility.

graphrag

The GraphRAG project is a data pipeline and transformation suite designed to extract meaningful, structured data from unstructured text using LLMs. It enhances LLMs' ability to reason about private data. The repository provides guidance on using knowledge graph memory structures to enhance LLM outputs, with a warning about the potential costs of GraphRAG indexing. It offers contribution guidelines, development resources, and encourages prompt tuning for optimal results. The Responsible AI FAQ addresses GraphRAG's capabilities, intended uses, evaluation metrics, limitations, and operational factors for effective and responsible use.

langtest

LangTest is a comprehensive evaluation library for custom LLM and NLP models. It aims to deliver safe and effective language models by providing tools to test model quality, augment training data, and support popular NLP frameworks. LangTest comes with benchmark datasets to challenge and enhance language models across various linguistic tasks. The tool offers more than 60 distinct types of tests with just one line of code, covering aspects like robustness, bias, representation, fairness, and accuracy. It supports testing LLMs for question answering, toxicity, clinical tests, legal support, factuality, sycophancy, and summarization.

Awesome-Jailbreak-on-LLMs

Awesome-Jailbreak-on-LLMs is a collection of state-of-the-art jailbreak methods for Large Language Models (LLMs). The repository contains papers, code, datasets, evaluations, and analyses related to jailbreak attacks on LLMs. It serves as a resource for researchers and practitioners interested in jailbreak techniques and defenses for LLMs. Contributions such as additional jailbreak-related content, pull requests, and issue reports are welcome, and contributors are acknowledged. For inquiries or issues, contact the maintainers by email. If you find this repository useful for your research or work, consider starring it.