aiverify

AI Verify

Stars: 140

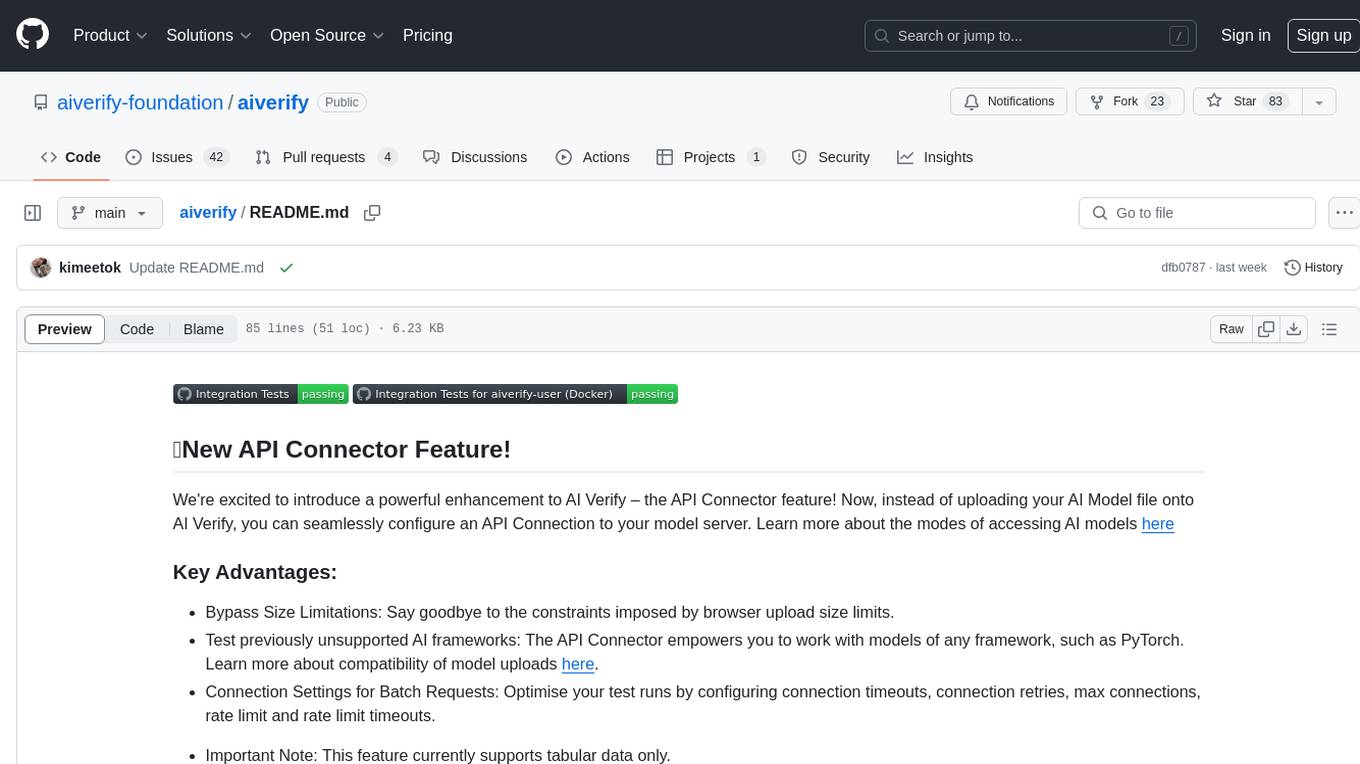

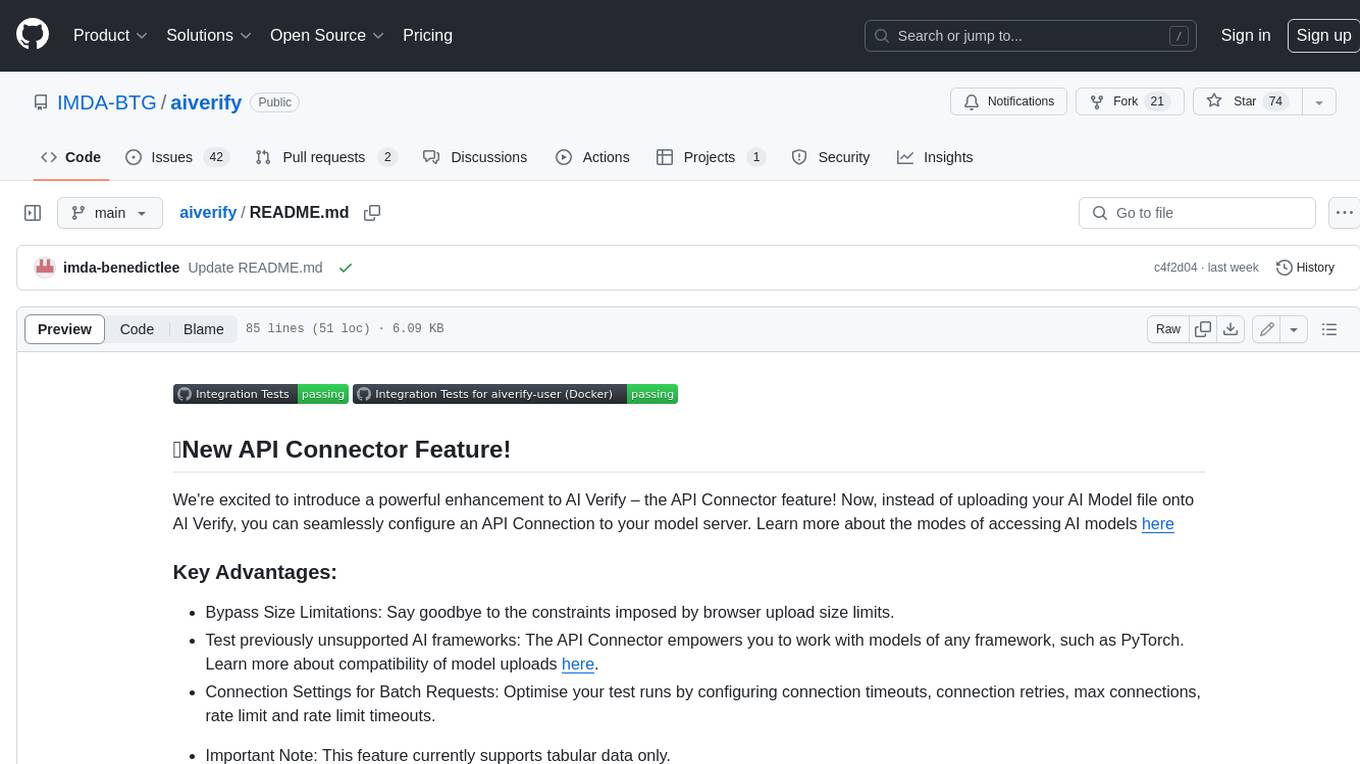

AI Verify is an AI governance testing framework and software toolkit that validates the performance of AI systems against internationally recognised principles through standardised tests. It offers a new API Connector feature to bypass size limitations, test various AI frameworks, and configure connection settings for batch requests. The toolkit operates within an enterprise environment, conducting technical tests on common supervised learning models for tabular and image datasets. It does not define AI ethical standards or guarantee complete safety from risks or biases.

README:

We're excited to introduce a powerful enhancement to AI Verify – the API Connector feature! Now, instead of uploading your AI Model file onto AI Verify, you can seamlessly configure an API Connection to your model server. Learn more about the modes of accessing AI models here

- Bypass Size Limitations: Say goodbye to the constraints imposed by browser upload size limits.

- Test previously unsupported AI frameworks: The API Connector empowers you to work with models of any framework, such as PyTorch. Learn more about compatibility of model uploads here.

- Connection Settings for Batch Requests: Optimise your test runs by configuring connection timeouts, connection retries, max connections, rate limit and rate limit timeouts.

- Important Note: This feature currently supports tabular data only.

To take advantage of this feature, refer to our How-To Guide. This guide provides step-by-step instructions on setting up the API Connector for seamless integration with your model server.

We are excited to have you try it out and hear what you think about this feature! Discussion Board

Welcome to AI Verify! AI Verify is launched under the AI Verify Foundation, a subsidiary under the IMDA.

AI Verify is an AI governance testing framework and software toolkit that validates the performance of AI systems against a set of internationally recognised principles through standardised tests. AI Verify is consistent with international AI governance frameworks such as those from European Union, OECD and Singapore.

It is a single integrated toolkit that operates within an enterprise environment. It can perform technical tests on common supervised learning classification and regression models for most tabular and image datasets. It however does not define AI ethical standards and does not guarantee that any AI system tested will be free from risks or biases or is completely safe.

This document provides important notices and information about the project that contributors and users should be aware of. Please take the time to read through this document thoroughly.

New to AI Verify? Generally, you should install AI Verify by building and running the Dockerfile, which contains all the dependencies you need. This should get you ready to use AI Verify! See the installation guide for building and running from source code if you wish to deal with dependencies on your own.

Learn how you may utilise AI Verify effectively here.

Learn how you can utilise the developer tools effectively to contribute to the project here.

We encourage contributions from the community to help improve this project. Before contributing, please read our Contributing Guidelines to understand the process and expectations.

Found a bug or have a feature request? Please report it on our issue tracker. We appreciate your feedback and contributions to making this project better. Make sure to adhere to the designated format as provided in the contributing guidelines.

Comprehensive documentation is available for your reference. It includes installation and setup instructions, user guides, and other relevant information. Please refer to the documentation before opening an issue or asking for support.

This project is released under the Apache 2.0 license, which can be found under the project's license file. By contributing to this project, you agree to release your contributions under the same license. Please ensure that you are familiar with the license terms. You may find a list of the licenses used by dependencies and any other material used by this project there too.

If you have any questions or need assistance, please check the project discussions or issue tracker for existing threads. If you cannot find a resolution, feel free to create a new discussion or issue, or head over to our contact page if you require assistance.

Thank you for your interest in AI Verify, and we look forward to your contributions!

AI Verify

Copyright 2024 AI Verify Foundation

This product includes software developed under the AI Verify Foundation.

Licensed under the Apache License, Version 2.0 (the "License");

you may not use this file except in compliance with the License.

You may obtain a copy of the License at

http://www.apache.org/licenses/LICENSE-2.0

Unless required by applicable law or agreed to in writing, software

distributed under the License is distributed on an "AS IS" BASIS,

WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

See the License for the specific language governing permissions and

limitations under the License.

For Tasks:

Click tags to check more tools for each tasksFor Jobs:

Alternative AI tools for aiverify

Similar Open Source Tools

aiverify

AI Verify is an AI governance testing framework and software toolkit that validates the performance of AI systems against internationally recognised principles through standardised tests. It offers a new API Connector feature to bypass size limitations, test various AI frameworks, and configure connection settings for batch requests. The toolkit operates within an enterprise environment, conducting technical tests on common supervised learning models for tabular and image datasets. It does not define AI ethical standards or guarantee complete safety from risks or biases.

aiverify

AI Verify is an AI governance testing framework and software toolkit that validates the performance of AI systems against a set of internationally recognised principles through standardised tests. AI Verify is consistent with international AI governance frameworks such as those from European Union, OECD and Singapore. It is a single integrated toolkit that operates within an enterprise environment. It can perform technical tests on common supervised learning classification and regression models for most tabular and image datasets. It however does not define AI ethical standards and does not guarantee that any AI system tested will be free from risks or biases or is completely safe.

graphrag

The GraphRAG project is a data pipeline and transformation suite designed to extract meaningful, structured data from unstructured text using LLMs. It enhances LLMs' ability to reason about private data. The repository provides guidance on using knowledge graph memory structures to enhance LLM outputs, with a warning about the potential costs of GraphRAG indexing. It offers contribution guidelines, development resources, and encourages prompt tuning for optimal results. The Responsible AI FAQ addresses GraphRAG's capabilities, intended uses, evaluation metrics, limitations, and operational factors for effective and responsible use.

azure-openai-dev-skills-orchestrator

An opinionated .NET framework, that is built on top of Semantic Kernel and Orleans, which helps creating and hosting event-driven AI Agents.

gpdb

Greenplum Database (GPDB) is an advanced, fully featured, open source data warehouse, based on PostgreSQL. It provides powerful and rapid analytics on petabyte scale data volumes. Uniquely geared toward big data analytics, Greenplum Database is powered by the world’s most advanced cost-based query optimizer delivering high analytical query performance on large data volumes.

xef

xef.ai is a one-stop library designed to bring the power of modern AI to applications and services. It offers integration with Large Language Models (LLM), image generation, and other AI services. The library is packaged in two layers: core libraries for basic AI services integration and integrations with other libraries. xef.ai aims to simplify the transition to modern AI for developers by providing an idiomatic interface, currently supporting Kotlin. Inspired by LangChain and Hugging Face, xef.ai may transmit source code and user input data to third-party services, so users should review privacy policies and take precautions. Libraries are available in Maven Central under the `com.xebia` group, with `xef-core` as the core library. Developers can add these libraries to their projects and explore examples to understand usage.

lumigator

Lumigator is an open-source platform developed by Mozilla.ai to help users select the most suitable language model for their specific needs. It supports the evaluation of summarization tasks using sequence-to-sequence models such as BART and BERT, as well as causal models like GPT and Mistral. The platform aims to make model selection transparent, efficient, and empowering by providing a framework for comparing LLMs using task-specific metrics to evaluate how well a model fits a project's needs. Lumigator is in the early stages of development and plans to expand support to additional machine learning tasks and use cases in the future.

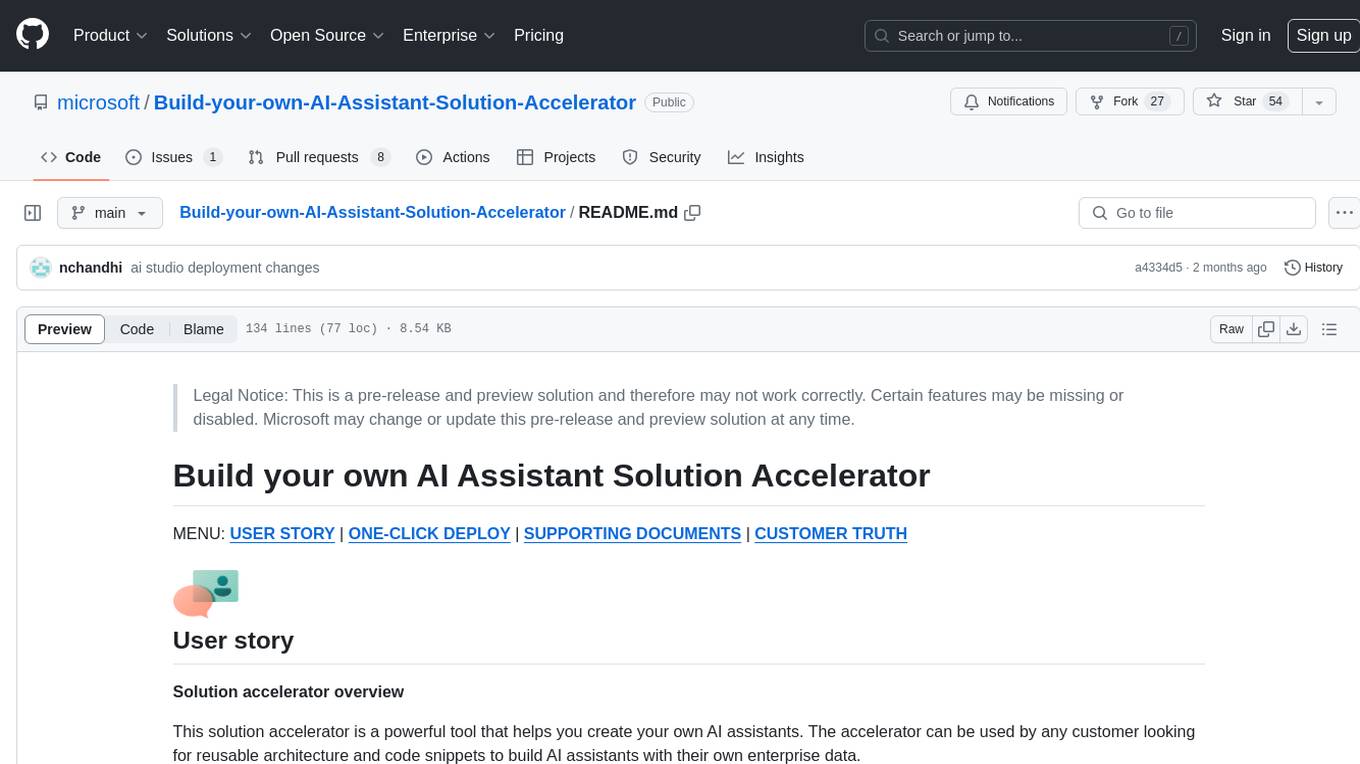

Build-your-own-AI-Assistant-Solution-Accelerator

Build-your-own-AI-Assistant-Solution-Accelerator is a pre-release and preview solution that helps users create their own AI assistants. It leverages Azure Open AI Service, Azure AI Search, and Microsoft Fabric to identify, summarize, and categorize unstructured information. Users can easily find relevant articles and grants, generate grant applications, and export them as PDF or Word documents. The solution accelerator provides reusable architecture and code snippets for building AI assistants with enterprise data. It is designed for researchers looking to explore flu vaccine studies and grants to accelerate grant proposal submissions.

magic

Magic Cloud is a software development automation platform based on AI, Low-Code, and No-Code. It allows dynamic code creation and orchestration using Hyperlambda, generative AI, and meta programming. The platform includes features like CRUD generation, No-Code AI, Hyperlambda programming language, AI agents creation, and various components for software development. Magic is suitable for backend development, AI-related tasks, and creating AI chatbots. It offers high-level programming capabilities, productivity gains, and reduced technical debt.

chat-with-your-data-solution-accelerator

Chat with your data using OpenAI and AI Search. This solution accelerator uses an Azure OpenAI GPT model and an Azure AI Search index generated from your data, which is integrated into a web application to provide a natural language interface, including speech-to-text functionality, for search queries. Users can drag and drop files, point to storage, and take care of technical setup to transform documents. There is a web app that users can create in their own subscription with security and authentication.

autoMate

autoMate is an AI-powered local automation tool designed to help users automate repetitive tasks and reclaim their time. It leverages AI and RPA technology to operate computer interfaces, understand screen content, make autonomous decisions, and support local deployment for data security. With natural language task descriptions, users can easily automate complex workflows without the need for programming knowledge. The tool aims to transform work by freeing users from mundane activities and allowing them to focus on tasks that truly create value, enhancing efficiency and liberating creativity.

max

The Modular Accelerated Xecution (MAX) platform is an integrated suite of AI libraries, tools, and technologies that unifies commonly fragmented AI deployment workflows. MAX accelerates time to market for the latest innovations by giving AI developers a single toolchain that unlocks full programmability, unparalleled performance, and seamless hardware portability.

atomic_agents

Atomic Agents is a modular and extensible framework designed for creating powerful applications. It follows the principles of Atomic Design, emphasizing small and single-purpose components. Leveraging Pydantic for data validation and serialization, the framework offers a set of tools and agents that can be combined to build AI applications. It depends on the Instructor package and supports various APIs like OpenAI, Cohere, Anthropic, and Gemini. Atomic Agents is suitable for developers looking to create AI agents with a focus on modularity and flexibility.

modelbench

ModelBench is a tool for running safety benchmarks against AI models and generating detailed reports. It is part of the MLCommons project and is designed as a proof of concept to aggregate measures, relate them to specific harms, create benchmarks, and produce reports. The tool requires LlamaGuard for evaluating responses and a TogetherAI account for running benchmarks. Users can install ModelBench from GitHub or PyPI, run tests using Poetry, and create benchmarks by providing necessary API keys. The tool generates static HTML pages displaying benchmark scores and allows users to dump raw scores and manage cache for faster runs. ModelBench is aimed at enabling users to test their own models and create tests and benchmarks.

max

The Modular Accelerated Xecution (MAX) platform is an integrated suite of AI libraries, tools, and technologies that unifies commonly fragmented AI deployment workflows. MAX accelerates time to market for the latest innovations by giving AI developers a single toolchain that unlocks full programmability, unparalleled performance, and seamless hardware portability.

eureka-ml-insights

The Eureka ML Insights Framework is a repository containing code designed to help researchers and practitioners run reproducible evaluations of generative models efficiently. Users can define custom pipelines for data processing, inference, and evaluation, as well as utilize pre-defined evaluation pipelines for key benchmarks. The framework provides a structured approach to conducting experiments and analyzing model performance across various tasks and modalities.

For similar tasks

aiverify

AI Verify is an AI governance testing framework and software toolkit that validates the performance of AI systems against internationally recognised principles through standardised tests. It offers a new API Connector feature to bypass size limitations, test various AI frameworks, and configure connection settings for batch requests. The toolkit operates within an enterprise environment, conducting technical tests on common supervised learning models for tabular and image datasets. It does not define AI ethical standards or guarantee complete safety from risks or biases.

aiverify

AI Verify is an AI governance testing framework and software toolkit that validates the performance of AI systems against a set of internationally recognised principles through standardised tests. AI Verify is consistent with international AI governance frameworks such as those from European Union, OECD and Singapore. It is a single integrated toolkit that operates within an enterprise environment. It can perform technical tests on common supervised learning classification and regression models for most tabular and image datasets. It however does not define AI ethical standards and does not guarantee that any AI system tested will be free from risks or biases or is completely safe.

llm-app-stack

LLM App Stack, also known as Emerging Architectures for LLM Applications, is a comprehensive list of available tools, projects, and vendors at each layer of the LLM app stack. It covers various categories such as Data Pipelines, Embedding Models, Vector Databases, Playgrounds, Orchestrators, APIs/Plugins, LLM Caches, Logging/Monitoring/Eval, Validators, LLM APIs (proprietary and open source), App Hosting Platforms, Cloud Providers, and Opinionated Clouds. The repository aims to provide a detailed overview of tools and projects for building, deploying, and maintaining enterprise data solutions, AI models, and applications.

parea-sdk-py

Parea AI provides a SDK to evaluate & monitor AI applications. It allows users to test, evaluate, and monitor their AI models by defining and running experiments. The SDK also enables logging and observability for AI applications, as well as deploying prompts to facilitate collaboration between engineers and subject-matter experts. Users can automatically log calls to OpenAI and Anthropic, create hierarchical traces of their applications, and deploy prompts for integration into their applications.

goose

Codename Goose is an open-source, extensible AI agent designed to provide functionalities beyond code suggestions. Users can install, execute, edit, and test with any LLM. The tool aims to enhance the coding experience by offering advanced features and capabilities. Stay updated for the upcoming 1.0 release scheduled by the end of January 2025. Explore the v0.X documentation available on the project's GitHub pages.

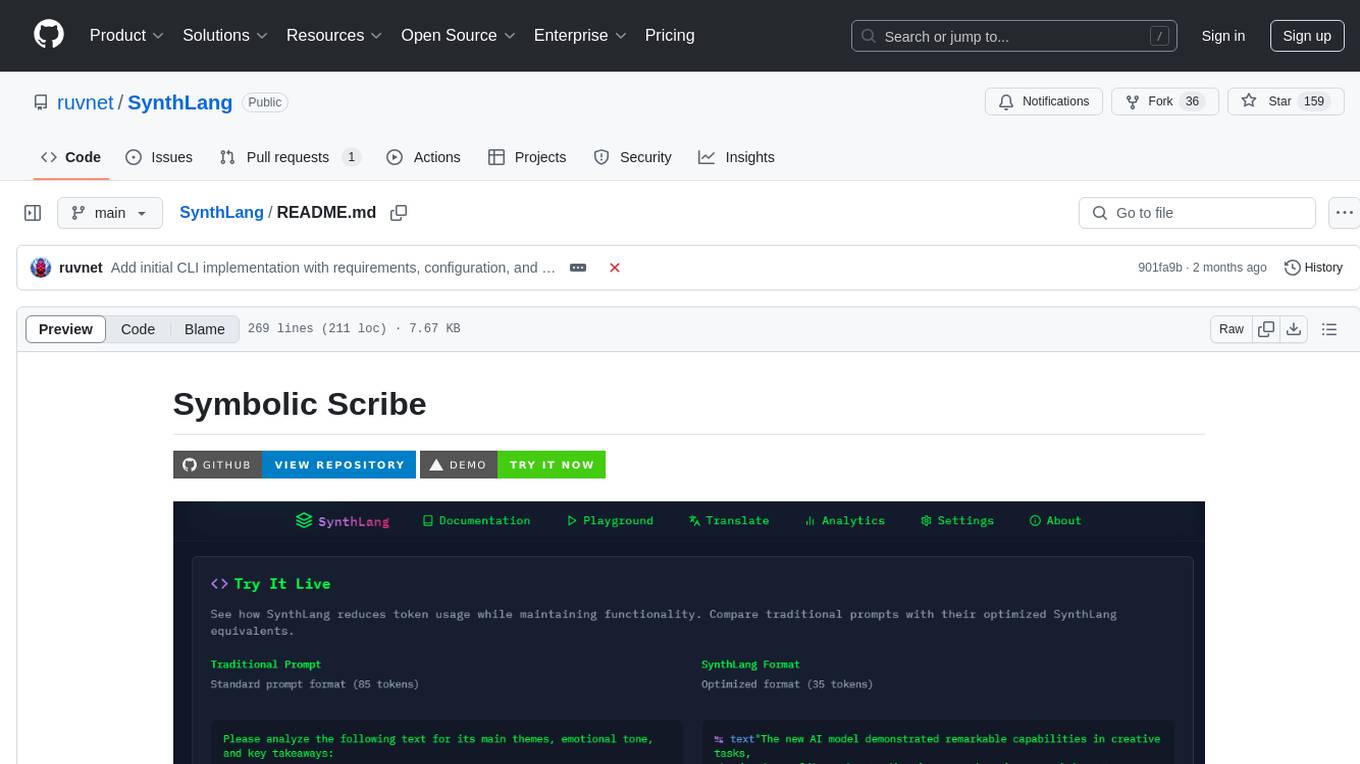

SynthLang

SynthLang is a tool designed to optimize AI prompts by reducing costs and improving processing speed. It brings academic rigor to prompt engineering, creating precise and powerful AI interactions. The tool includes core components like a Translator Engine, Performance Optimization, Testing Framework, and Technical Architecture. It offers mathematical precision, academic rigor, enhanced security, a modern interface, and instant testing. Users can integrate mathematical frameworks, model complex relationships, and apply structured prompts to various domains. Security features include API key management and data privacy. The tool also provides a CLI for prompt engineering and optimization capabilities.

For similar jobs

LLM-and-Law

This repository is dedicated to summarizing papers related to large language models with the field of law. It includes applications of large language models in legal tasks, legal agents, legal problems of large language models, data resources for large language models in law, law LLMs, and evaluation of large language models in the legal domain.

start-llms

This repository is a comprehensive guide for individuals looking to start and improve their skills in Large Language Models (LLMs) without an advanced background in the field. It provides free resources, online courses, books, articles, and practical tips to become an expert in machine learning. The guide covers topics such as terminology, transformers, prompting, retrieval augmented generation (RAG), and more. It also includes recommendations for podcasts, YouTube videos, and communities to stay updated with the latest news in AI and LLMs.

aiverify

AI Verify is an AI governance testing framework and software toolkit that validates the performance of AI systems against internationally recognised principles through standardised tests. It offers a new API Connector feature to bypass size limitations, test various AI frameworks, and configure connection settings for batch requests. The toolkit operates within an enterprise environment, conducting technical tests on common supervised learning models for tabular and image datasets. It does not define AI ethical standards or guarantee complete safety from risks or biases.

Awesome-LLM-Watermark

This repository contains a collection of research papers related to watermarking techniques for text and images, specifically focusing on large language models (LLMs). The papers cover various aspects of watermarking LLM-generated content, including robustness, statistical understanding, topic-based watermarks, quality-detection trade-offs, dual watermarks, watermark collision, and more. Researchers have explored different methods and frameworks for watermarking LLMs to protect intellectual property, detect machine-generated text, improve generation quality, and evaluate watermarking techniques. The repository serves as a valuable resource for those interested in the field of watermarking for LLMs.

LLM-LieDetector

This repository contains code for reproducing experiments on lie detection in black-box LLMs by asking unrelated questions. It includes Q/A datasets, prompts, and fine-tuning datasets for generating lies with language models. The lie detectors rely on asking binary 'elicitation questions' to diagnose whether the model has lied. The code covers generating lies from language models, training and testing lie detectors, and generalization experiments. It requires access to GPUs and OpenAI API calls for running experiments with open-source models. Results are stored in the repository for reproducibility.

graphrag

The GraphRAG project is a data pipeline and transformation suite designed to extract meaningful, structured data from unstructured text using LLMs. It enhances LLMs' ability to reason about private data. The repository provides guidance on using knowledge graph memory structures to enhance LLM outputs, with a warning about the potential costs of GraphRAG indexing. It offers contribution guidelines, development resources, and encourages prompt tuning for optimal results. The Responsible AI FAQ addresses GraphRAG's capabilities, intended uses, evaluation metrics, limitations, and operational factors for effective and responsible use.

langtest

LangTest is a comprehensive evaluation library for custom LLM and NLP models. It aims to deliver safe and effective language models by providing tools to test model quality, augment training data, and support popular NLP frameworks. LangTest comes with benchmark datasets to challenge and enhance language models, ensuring peak performance in various linguistic tasks. The tool offers more than 60 distinct types of tests with just one line of code, covering aspects like robustness, bias, representation, fairness, and accuracy. It supports testing LLMS for question answering, toxicity, clinical tests, legal support, factuality, sycophancy, and summarization.

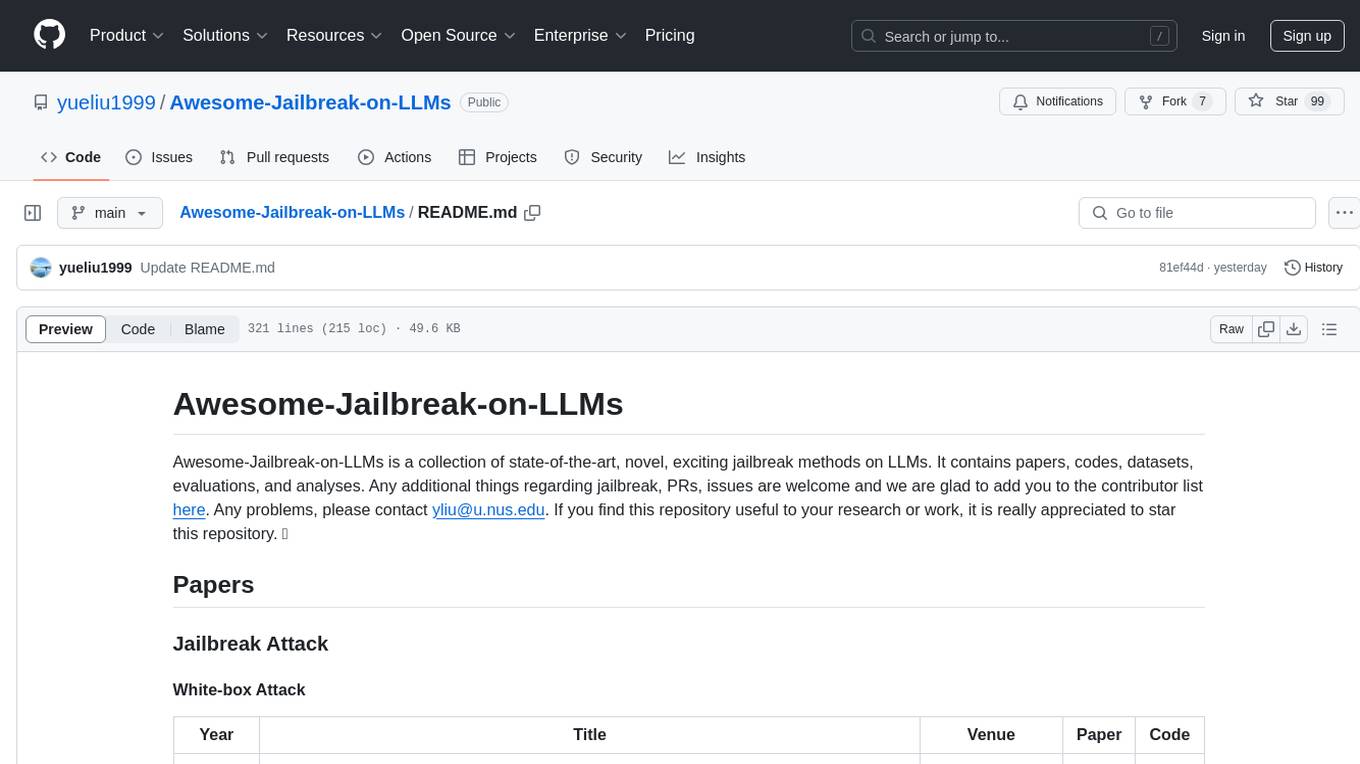

Awesome-Jailbreak-on-LLMs

Awesome-Jailbreak-on-LLMs is a collection of state-of-the-art, novel, and exciting jailbreak methods on Large Language Models (LLMs). The repository contains papers, codes, datasets, evaluations, and analyses related to jailbreak attacks on LLMs. It serves as a comprehensive resource for researchers and practitioners interested in exploring various jailbreak techniques and defenses in the context of LLMs. Contributions such as additional jailbreak-related content, pull requests, and issue reports are welcome, and contributors are acknowledged. For any inquiries or issues, contact [email protected]. If you find this repository useful for your research or work, consider starring it to show appreciation.