helix

♾️ Helix is a private GenAI stack for building AI agents with declarative pipelines, knowledge (RAG), API bindings, and first-class testing.

Stars: 715

HelixML is a private GenAI platform that allows users to deploy the best of open AI in their own data center or VPC while retaining complete data security and control. It includes support for fine-tuning models with drag-and-drop functionality. HelixML brings the best of open source AI to businesses in an ergonomic and scalable way, optimizing the tradeoff between GPU memory and latency.

README:

Deploy AI agents in your own data center or VPC and retain complete data security & control.

HelixML is an enterprise-grade platform for building and deploying AI agents with support for RAG (Retrieval-Augmented Generation), API calling, vision, and multi-provider LLM support. Build and deploy LLM applications by writing a simple helix.yaml configuration file.

Our intelligent GPU scheduler packs models efficiently into available GPU memory and dynamically loads and unloads models based on demand, optimizing resource utilization.
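To make the packing idea concrete, here is a minimal sketch of one way models could be fitted into GPU memory: a first-fit-decreasing heuristic with explicit unloading. This is an illustration only, not Helix's actual scheduler; the `GPU` class, the `pack_models` and `unload` helpers, and the model names and sizes are all hypothetical.

```python
from dataclasses import dataclass, field


@dataclass
class GPU:
    name: str
    total_gb: float
    models: dict = field(default_factory=dict)  # model name -> memory in GB

    @property
    def free_gb(self) -> float:
        return self.total_gb - sum(self.models.values())


def pack_models(gpus, requests):
    """Place models largest-first on the first GPU with enough free memory.

    Returns the names of the models that did not fit anywhere.
    """
    unplaced = []
    for name, size_gb in sorted(requests.items(), key=lambda kv: kv[1], reverse=True):
        target = next((g for g in gpus if g.free_gb >= size_gb), None)
        if target is None:
            unplaced.append(name)
        else:
            target.models[name] = size_gb
    return unplaced


def unload(gpus, name):
    """Free the memory held by an idle model so another model can be loaded."""
    for g in gpus:
        if g.models.pop(name, None) is not None:
            return True
    return False


# Hypothetical hardware and model sizes, for illustration only.
gpus = [GPU("gpu0", 24.0), GPU("gpu1", 16.0)]
requests = {"llm-large": 20.0, "llm-small": 8.0, "embedder": 4.0, "vision": 10.0}
unplaced = pack_models(gpus, requests)
```

With these numbers, `llm-small` is left unplaced until `unload(gpus, "vision")` frees room on `gpu1`, which mirrors the load and unload behavior described above in a very simplified form.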

- Easy-to-use Web UI for agent interaction and management

- Session-based architecture with pause/resume capabilities

- Multi-step reasoning with tool orchestration

- Memory management for context-aware interactions

- Support for multiple LLM providers (OpenAI, Anthropic, and local models)

- REST API integration with OpenAPI schema support

- MCP (Model Context Protocol) server compatibility

- GPTScript integration for advanced scripting

- OAuth token management for secure third-party access

- Custom tool development with flexible SDK

- Built-in document ingestion (PDFs, Word, text files)

- Web scraper for automatic content extraction

- Multiple RAG backends: Typesense, Haystack, PGVector, LlamaIndex

- Vector embeddings with PGVector for semantic search

- Vision RAG support for multimodal content
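The semantic search mentioned in the list above boils down to ranking stored text chunks by how close their embedding vectors are to the query's embedding. The sketch below shows that ranking step in plain Python with cosine similarity. The three-dimensional vectors are made-up stand-ins for real embeddings, and `top_k` is a hypothetical helper; in a real deployment a vector store such as PGVector would do this ranking inside the database.

```python
import math


def cosine_similarity(a, b):
    """Cosine of the angle between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


def top_k(query_vec, chunks, k=2):
    """Return the k chunk texts whose vectors are most similar to the query."""
    scored = [(cosine_similarity(query_vec, vec), text) for text, vec in chunks]
    scored.sort(key=lambda pair: pair[0], reverse=True)
    return [text for _, text in scored[:k]]


# Toy "embeddings": real ones come from an embedding model and have many more dimensions.
chunks = [
    ("Refund policy: 30 days", [0.9, 0.1, 0.0]),
    ("Office opening hours", [0.0, 0.2, 0.9]),
    ("How to request a refund", [0.8, 0.3, 0.1]),
]
query = [1.0, 0.2, 0.0]  # stand-in for the embedding of "can I get my money back?"
results = top_k(query, chunks, k=2)
```

Here `results` contains the two refund-related chunks, which are the ones an agent would then receive as context.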

Main use cases:

- Upload and analyze corporate documents

- Add website documentation URLs to create instant customer support agents

- Build knowledge bases from multiple sources

Context is everything. Agents can process tens of thousands of tokens per step—Helix provides complete visibility under the hood:

Tracing features:

- View all agent execution steps

- Inspect requests and responses to LLM providers, third-party APIs, and MCP servers

- Real-time token usage tracking

- Pricing and cost analysis

- Performance metrics and debugging

- Multi-tenancy with organization, team, and role-based access control

- Scheduled tasks and cron jobs

- Webhook triggers for event-driven workflows

- Evaluation framework for testing and quality assurance

- Payment integration with Stripe support

- Notifications via Slack, Discord, and email

- Keycloak authentication with OAuth and OIDC support

HelixML uses a microservices architecture with the following components:

┌─────────────────────────────────────────────────────────┐
│                    Frontend (React)                     │
│                    vite + TypeScript                    │
└────────────────────┬────────────────────────────────────┘
                     │
┌────────────────────▼────────────────────────────────────┐
│                API / Control Plane (Go)                 │
│  ┌──────────────┬──────────────┬──────────────────────┐ │
│  │   Agents     │  Knowledge   │   Auth & Sessions    │ │
│  │   Skills     │  RAG Pipeline│   Organizations      │ │
│  │   Tools      │  Vector DB   │   Usage Tracking     │ │
│  └──────────────┴──────────────┴──────────────────────┘ │
└─────────┬──────────────────────────────────┬────────────┘
          │                                  │
┌─────────▼──────────┐             ┌─────────▼──────────┐
│   PostgreSQL       │             │   GPU Runners      │
│   + PGVector       │             │   Model Scheduler  │
└────────────────────┘             └────────────────────┘
          │
┌─────────▼───────────────────────────────────────────────┐
│  Supporting Services: Keycloak, Typesense, Haystack,    │
│  GPTScript Runner, Chrome/Rod, Tika, SearXNG            │
└─────────────────────────────────────────────────────────┘

Agent hierarchy, from session down to individual tools:

- Session: Manages agent lifecycle and state

- Agent: Coordinates skills and handles LLM interactions

- Skills: Group related tools for specific capabilities

- Tools: Individual actions (API calls, functions, scripts)
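The hierarchy above can be pictured as nested containers: a session holds an agent, the agent holds skills, and each skill holds tools. The following sketch models that nesting with dataclasses. All class and method names are hypothetical and chosen for illustration; they are not Helix's types. In a real agent the LLM chooses which tool to call, whereas here the caller names the tool directly.

```python
from dataclasses import dataclass, field
from typing import Callable


@dataclass
class Tool:
    """An individual action, such as an API call or a function."""
    name: str
    run: Callable[[str], str]


@dataclass
class Skill:
    """A named group of related tools."""
    name: str
    tools: list = field(default_factory=list)


@dataclass
class Agent:
    """Coordinates skills and looks up tools across them."""
    name: str
    skills: list = field(default_factory=list)

    def find_tool(self, tool_name):
        for skill in self.skills:
            for tool in skill.tools:
                if tool.name == tool_name:
                    return tool
        return None


@dataclass
class Session:
    """Holds lifecycle state (paused or running) and the call history."""
    agent: Agent
    history: list = field(default_factory=list)
    paused: bool = False

    def call(self, tool_name, arg):
        if self.paused:
            raise RuntimeError("session is paused")
        tool = self.agent.find_tool(tool_name)
        if tool is None:
            raise KeyError(tool_name)
        result = tool.run(arg)
        self.history.append((tool_name, arg, result))
        return result


# A toy tool that upper-cases its input, wrapped in one skill, one agent, one session.
echo = Tool("echo", lambda text: text.upper())
session = Session(Agent("support", [Skill("text", [echo])]))
```

Calling `session.call("echo", "hi")` returns `"HI"` and records the step in `session.history`; setting `session.paused = True` blocks further calls until the flag is cleared.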

- Go 1.24.0 - Main backend language

- PostgreSQL + PGVector - Data storage and vector embeddings

- GORM - ORM for database operations

- Gorilla Mux - HTTP routing

- Keycloak - Identity and access management

- NATS - Message queue

- Zerolog - Structured logging

- React 18.3.1 - UI framework

- TypeScript - Type-safe JavaScript

- Material-UI (MUI) - Component library

- MobX - State management

- Vite - Build tool

- Monaco Editor - Code editing

- OpenAI SDK - GPT models integration

- Anthropic SDK - Claude models integration

- LangChain Go - LLM orchestration

- GPTScript - Scripting capabilities

- Typesense / Haystack / LlamaIndex - RAG backends

- Docker & Docker Compose - Containerization

- Kubernetes + Helm - Orchestration

- Flux - GitOps operator

Use our quickstart installer:

curl -sL -O https://get.helixml.tech/install.sh

chmod +x install.sh

sudo ./install.sh

The installer will prompt you before making changes to your system. By default, the dashboard will be available on http://localhost:8080.

For setting up a deployment with a DNS name, see ./install.sh --help or read the detailed docs. We've documented easy TLS termination for you.

Next steps:

- Attach your own GPU runners per runners docs

- Use any external OpenAI-compatible LLM

Use our Helm charts for production deployments:

All server configuration is done via environment variables. You can find the complete list of configuration options in api/pkg/config/config.go.

Key environment variables:

- OPENAI_API_KEY - OpenAI API credentials

- ANTHROPIC_API_KEY - Anthropic API credentials

- POSTGRES_* - Database connection settings

- KEYCLOAK_* - Authentication settings

- SERVER_URL - Public URL for the deployment

- RUNNER_* - GPU runner configuration
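As a rough illustration of how prefix-grouped environment variables like these can be read, the sketch below collects them into a dictionary. It is not Helix's loader (that lives in api/pkg/config/config.go). The `load_config` helper is hypothetical, and the `POSTGRES_HOST` and `POSTGRES_PORT` names are assumed examples of the `POSTGRES_*` family, so check the configuration file for the real variable names.

```python
import os


def load_config(env=None):
    """Group environment variables by the prefixes listed in the README."""
    env = os.environ if env is None else env

    def group(prefix):
        # Strip the prefix and lower-case the remainder, e.g. POSTGRES_HOST -> host.
        return {k[len(prefix):].lower(): v for k, v in env.items() if k.startswith(prefix)}

    return {
        "openai_api_key": env.get("OPENAI_API_KEY"),
        "anthropic_api_key": env.get("ANTHROPIC_API_KEY"),
        "server_url": env.get("SERVER_URL"),
        "postgres": group("POSTGRES_"),
        "keycloak": group("KEYCLOAK_"),
        "runner": group("RUNNER_"),
    }


# Placeholder values; the POSTGRES_* suffixes below are assumptions for illustration.
example_env = {
    "SERVER_URL": "https://helix.example.com",
    "POSTGRES_HOST": "localhost",
    "POSTGRES_PORT": "5432",
    "OPENAI_API_KEY": "sk-placeholder",
}
config = load_config(example_env)
```

Passing a plain dictionary instead of `os.environ` keeps the example testable without touching the real environment.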

See the configuration documentation for detailed setup instructions.

For local development, refer to the Helix local development guide.

Prerequisites:

- Docker Desktop (or Docker + Docker Compose)

- Go 1.24.0+

- Node.js 18+

- Make

Quick development setup:

# Clone the repository

git clone https://github.com/helixml/helix.git

cd helix

# Start supporting services

docker-compose up -d postgres keycloak

# Run the backend

cd api

go run . serve

# Run the frontend (in a new terminal)

cd frontend

npm install

npm run dev

See local-development.md for comprehensive setup instructions.

- Overview - Platform introduction

- Getting Started - Build your first agent

- Control Plane Deployment - Production deployment guide

- Runner Deployment - GPU runner setup

- Agent Architecture - Technical specification

- API Reference - REST API documentation

- Contributing Guide - How to contribute

- Upgrading Guide - Migration instructions

We welcome contributions! Please see our Contributing Guide for details.

By contributing, you confirm that:

- Your changes will fall under the same license

- Your changes will be owned by HelixML, Inc.

Helix is licensed under a similar license to Docker Desktop. You can run the source code (in this repo) for free for:

- Personal Use: Individuals or people personally experimenting

- Educational Use: Schools and universities

- Small Business Use: Companies with under $10M annual revenue and fewer than 250 employees

If you fall outside of these terms, please use the Launchpad to purchase a license for large commercial use. Trial licenses are available for experimentation.

You are not allowed to use our code to build a product that competes with us.

- We generate revenue to support the development of Helix. We are an independent software company.

- We don't want cloud providers to take our open source code and build a rebranded service on top of it.

If you would like to use some part of this code under a more permissive license, please get in touch.

- Discord Community - Join our community for help and discussions

- GitHub Issues - Report bugs or request features

- Documentation - Comprehensive guides and references

- Email - Contact us for commercial inquiries

If you find Helix useful, please consider giving us a star on GitHub!

Built with ❤️ by HelixML, Inc.

Alternative AI tools for helix

Similar Open Source Tools

Shannon

Shannon is a battle-tested infrastructure for AI agents that solves problems at scale, such as runaway costs, non-deterministic failures, and security concerns. It offers features like intelligent caching, deterministic replay of workflows, time-travel debugging, WASI sandboxing, and hot-swapping between LLM providers. Shannon allows users to ship faster with zero configuration multi-agent setup, multiple AI patterns, time-travel debugging, and hot configuration changes. It is production-ready with features like WASI sandbox, token budget control, policy engine (OPA), and multi-tenancy. Shannon helps scale without breaking by reducing costs, being provider agnostic, observable by default, and designed for horizontal scaling with Temporal workflow orchestration.

mesh

MCP Mesh is an open-source control plane for MCP traffic that provides a unified layer for authentication, routing, and observability. It replaces multiple integrations with a single production endpoint, simplifying configuration management. Built for multi-tenant organizations, it offers workspace/project scoping for policies, credentials, and logs. With core capabilities like MeshContext, AccessControl, and OpenTelemetry, it ensures fine-grained RBAC, full tracing, and metrics for tools and workflows. Users can define tools with input/output validation, access control checks, audit logging, and OpenTelemetry traces. The project structure includes apps for full-stack MCP Mesh, encryption, observability, and more, with deployment options ranging from Docker to Kubernetes. The tech stack includes Bun/Node runtime, TypeScript, Hono API, React, Kysely ORM, and Better Auth for OAuth and API keys.

claudex

Claudex is an open-source, self-hosted Claude Code UI that runs entirely on your machine. It provides multiple sandboxes, allows users to use their own plans, offers a full IDE experience with VS Code in the browser, and is extensible with skills, agents, slash commands, and MCP servers. Users can run AI agents in isolated environments, view and interact with a browser via VNC, switch between multiple AI providers, automate tasks with Celery workers, and enjoy various chat features and preview capabilities. Claudex also supports marketplace plugins, secrets management, integrations like Gmail, and custom instructions. The tool is configured through providers and supports various providers like Anthropic, OpenAI, OpenRouter, and Custom. It has a tech stack consisting of React, FastAPI, Python, PostgreSQL, Celery, Redis, and more.

gpt-all-star

GPT-All-Star is an AI-powered code generation tool designed for scratch development of web applications with team collaboration of autonomous AI agents. The primary focus of this research project is to explore the potential of autonomous AI agents in software development. Users can organize their team, choose leaders for each step, create action plans, and work together to complete tasks. The tool supports various endpoints like OpenAI, Azure, and Anthropic, and provides functionalities for project management, code generation, and team collaboration.

openakita

OpenAkita is a self-evolving AI Agent framework that autonomously learns new skills, performs daily self-checks and repairs, accumulates experience from task execution, and persists until the task is done. It auto-generates skills, installs dependencies, learns from mistakes, and remembers preferences. The framework is standards-based, multi-platform, and provides a Setup Center GUI for intuitive installation and configuration. It features self-learning and evolution mechanisms, a Ralph Wiggum Mode for persistent execution, multi-LLM endpoints, multi-platform IM support, desktop automation, multi-agent architecture, scheduled tasks, identity and memory management, a tool system, and a guided wizard for setup.

solo-server

Solo Server is a lightweight server designed for managing hardware-aware inference. It provides seamless setup through a simple CLI and HTTP servers, an open model registry for pulling models from platforms like Ollama and Hugging Face, cross-platform compatibility for effortless deployment of AI models on hardware, and a configurable framework that auto-detects hardware components (CPU, GPU, RAM) and sets optimal configurations.

AgentX

AgentX is a next-generation open-source AI agent development framework and runtime platform. It provides an event-driven runtime with a simple framework and minimal UI. The platform is ready-to-use and offers features like multi-user support, session persistence, real-time streaming, and Docker readiness. Users can build AI Agent applications with event-driven architecture using TypeScript for server-side (Node.js) and client-side (Browser/React) development. AgentX also includes comprehensive documentation, core concepts, guides, API references, and various packages for different functionalities. The architecture follows an event-driven design with layered components for server-side and client-side interactions.

mimiclaw

MimiClaw is a pocket AI assistant that runs on a $5 chip, specifically designed for the ESP32-S3 board. It operates without Linux or Node.js, using pure C language. Users can interact with MimiClaw through Telegram, enabling it to handle various tasks and learn from local memory. The tool is energy-efficient, running on USB power 24/7. With MimiClaw, users can have a personal AI assistant on a chip the size of a thumb, making it convenient and accessible for everyday use.

FinMem-LLM-StockTrading

This repository contains the Python source code for FINMEM, a Performance-Enhanced Large Language Model Trading Agent with Layered Memory and Character Design. It introduces FinMem, a novel LLM-based agent framework devised for financial decision-making, encompassing three core modules: Profiling, Memory with layered processing, and Decision-making. FinMem's memory module aligns closely with the cognitive structure of human traders, offering robust interpretability and real-time tuning. The framework enables the agent to self-evolve its professional knowledge, react agilely to new investment cues, and continuously refine trading decisions in the volatile financial environment. It presents a cutting-edge LLM agent framework for automated trading, boosting cumulative investment returns.

vibium

Vibium is a browser automation infrastructure designed for AI agents, providing a single binary that manages browser lifecycle, WebDriver BiDi protocol, and an MCP server. It offers zero configuration, AI-native capabilities, and is lightweight with no runtime dependencies. It is suitable for AI agents, test automation, and any tasks requiring browser interaction.

kweaver

KWeaver is an open-source ecosystem for building, deploying, and running decision intelligence AI applications. It adopts ontology as the core methodology for business knowledge networks, with DIP as the core platform, aiming to provide elastic, agile, and reliable enterprise-grade decision intelligence to further unleash productivity. The DIP platform includes key subsystems such as ADP, Decision Agent, DIP Studio, and AI Store.

aichildedu

AICHILDEDU is a microservice-based AI education platform for children that integrates LLMs, image generation, and speech synthesis to provide personalized storybook creation, intelligent conversational learning, and multimedia content generation. It offers features like personalized story generation, educational quiz creation, multimedia integration, age-appropriate content, multi-language support, user management, parental controls, and asynchronous processing. The platform follows a microservice architecture with components like API Gateway, User Service, Content Service, Learning Service, and AI Services. Technologies used include Python, FastAPI, PostgreSQL, MongoDB, Redis, LangChain, OpenAI GPT models, TensorFlow, PyTorch, Transformers, MinIO, Elasticsearch, Docker, Docker Compose, and JWT-based authentication.

boxlite

BoxLite is an embedded, lightweight micro-VM runtime designed for AI agents running OCI containers with hardware-level isolation. It is built for high concurrency with no daemon required, offering features like lightweight VMs, high concurrency, hardware isolation, embeddability, and OCI compatibility. Users can spin up 'Boxes' to run containers for AI agent sandboxes and multi-tenant code execution scenarios where Docker alone is insufficient and full VM infrastructure is too heavy. BoxLite supports Python, Node.js, and Rust with quick start guides for each, along with features like CPU/memory limits, storage options, networking capabilities, security layers, and image registry configuration. The tool provides SDKs for Python and Node.js, with Go support coming soon. It offers detailed documentation, examples, and architecture insights for users to understand how BoxLite works under the hood.

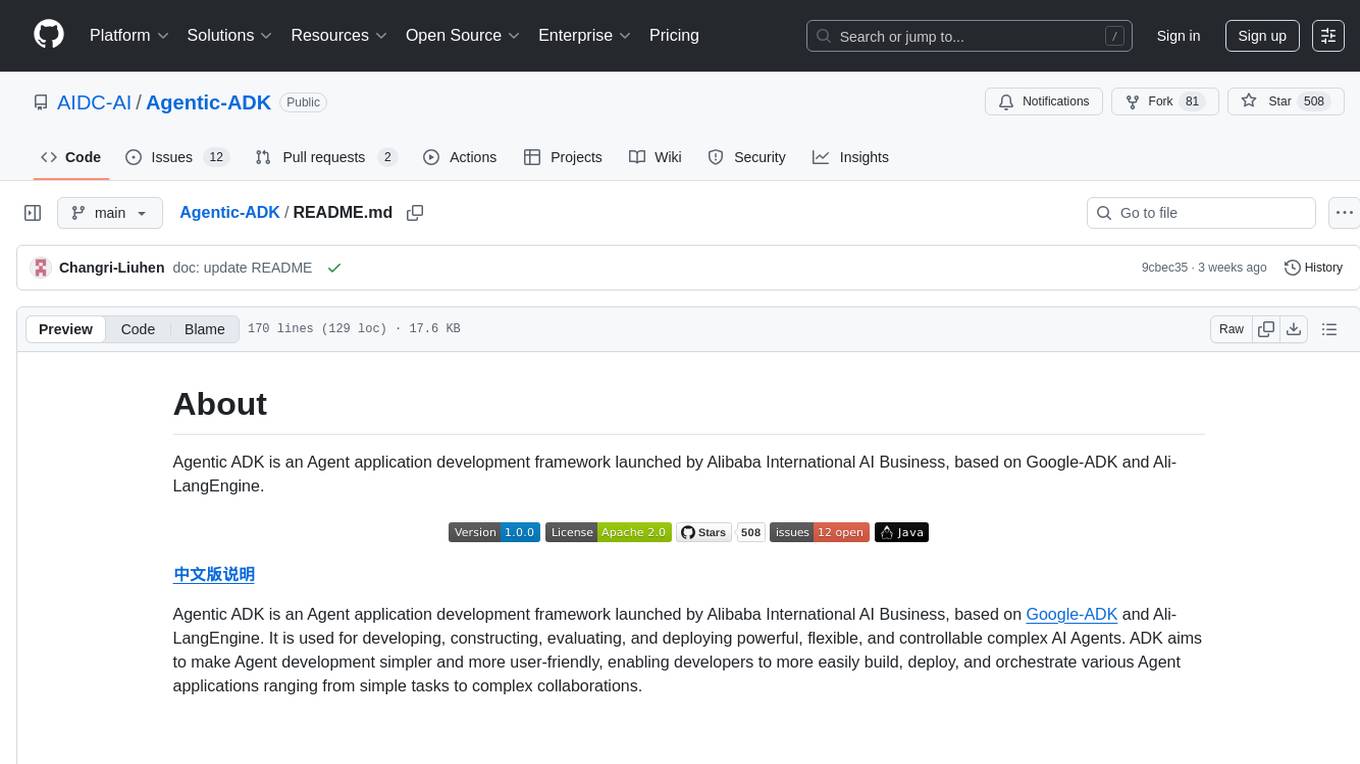

Agentic-ADK

Agentic ADK is an Agent application development framework launched by Alibaba International AI Business, based on Google-ADK and Ali-LangEngine. It is used for developing, constructing, evaluating, and deploying powerful, flexible, and controllable complex AI Agents. ADK aims to make Agent development simpler and more user-friendly, enabling developers to more easily build, deploy, and orchestrate various Agent applications ranging from simple tasks to complex collaborations.

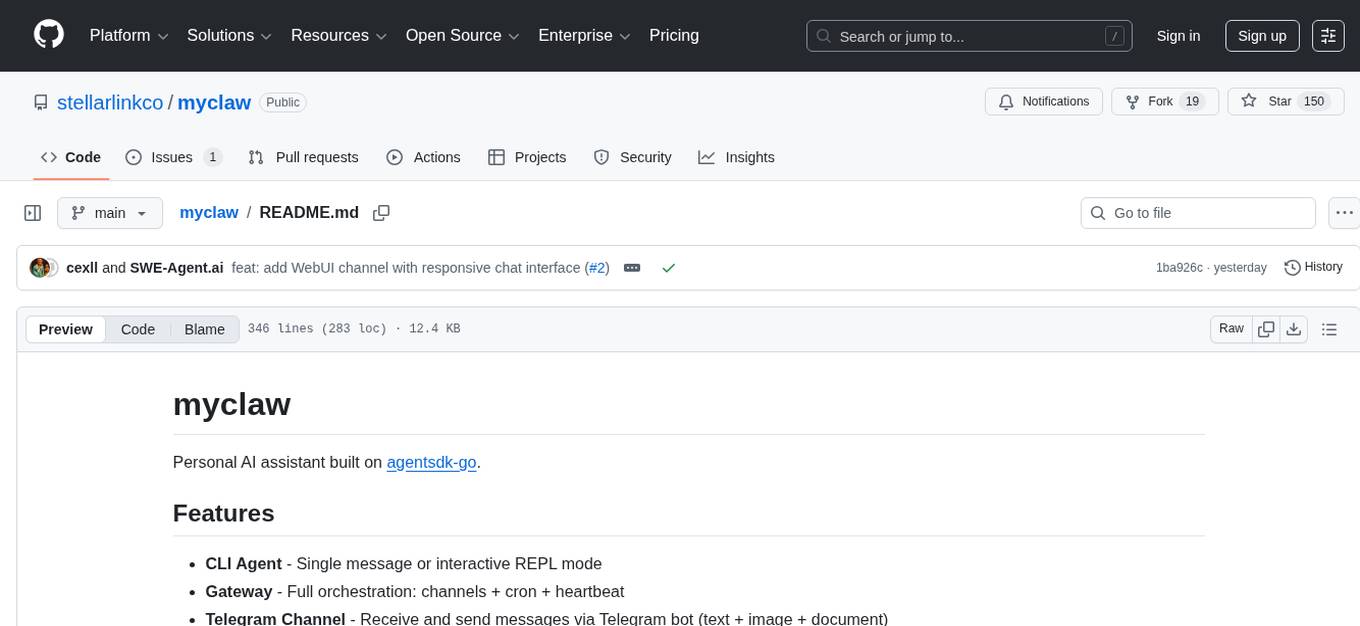

myclaw

myclaw is a personal AI assistant built on agentsdk-go that offers a CLI agent for single message or interactive REPL mode, full orchestration with channels, cron, and heartbeat, support for various messaging channels like Telegram, Feishu, WeCom, WhatsApp, and a web UI, multi-provider support for Anthropic and OpenAI models, image recognition and document processing, scheduled tasks with JSON persistence, long-term and daily memory storage, custom skill loading, and more. It provides a comprehensive solution for interacting with AI models and managing tasks efficiently.

For similar tasks

ai-on-gke

This repository contains assets related to AI/ML workloads on Google Kubernetes Engine (GKE). Run optimized AI/ML workloads with Google Kubernetes Engine (GKE) platform orchestration capabilities. A robust AI/ML platform considers the following layers: infrastructure orchestration that supports GPUs and TPUs for training and serving workloads at scale; flexible integration with distributed computing and data processing frameworks; and support for multiple teams on the same infrastructure to maximize utilization of resources.

ray

Ray is a unified framework for scaling AI and Python applications. It consists of a core distributed runtime and a set of AI libraries for simplifying ML compute, including Data, Train, Tune, RLlib, and Serve. Ray runs on any machine, cluster, cloud provider, and Kubernetes, and features a growing ecosystem of community integrations. With Ray, you can seamlessly scale the same code from a laptop to a cluster, making it easy to meet the compute-intensive demands of modern ML workloads.

labelbox-python

Labelbox is a data-centric AI platform for enterprises to develop, optimize, and use AI to solve problems and power new products and services. Enterprises use Labelbox to curate data, generate high-quality human feedback data for computer vision and LLMs, evaluate model performance, and automate tasks by combining AI and human-centric workflows. The academic & research community uses Labelbox for cutting-edge AI research.

djl

Deep Java Library (DJL) is an open-source, high-level, engine-agnostic Java framework for deep learning. It is designed to be easy to get started with and simple to use for Java developers. DJL provides a native Java development experience and allows users to integrate machine learning and deep learning models with their Java applications. The framework is deep learning engine agnostic, enabling users to switch engines at any point for optimal performance. DJL's ergonomic API interface guides users with best practices to accomplish deep learning tasks, such as running inference and training neural networks.

mlflow

MLflow is a platform to streamline machine learning development, including tracking experiments, packaging code into reproducible runs, and sharing and deploying models. MLflow offers a set of lightweight APIs that can be used with any existing machine learning application or library (TensorFlow, PyTorch, XGBoost, etc), wherever you currently run ML code (e.g. in notebooks, standalone applications or the cloud). MLflow's current components are:

* `MLflow Tracking

tt-metal

TT-NN is a python & C++ Neural Network OP library. It provides a low-level programming model, TT-Metalium, enabling kernel development for Tenstorrent hardware.

burn

Burn is a new comprehensive dynamic Deep Learning Framework built using Rust with extreme flexibility, compute efficiency and portability as its primary goals.

awsome-distributed-training

This repository contains reference architectures and test cases for distributed model training with Amazon SageMaker Hyperpod, AWS ParallelCluster, AWS Batch, and Amazon EKS. The test cases cover different types and sizes of models as well as different frameworks and parallel optimizations (Pytorch DDP/FSDP, MegatronLM, NemoMegatron...).

For similar jobs

sweep

Sweep is an AI junior developer that turns bugs and feature requests into code changes. It automatically handles developer experience improvements like adding type hints and improving test coverage.

teams-ai

The Teams AI Library is a software development kit (SDK) that helps developers create bots that can interact with Teams and Microsoft 365 applications. It is built on top of the Bot Framework SDK and simplifies the process of developing bots that interact with Teams' artificial intelligence capabilities. The SDK is available for JavaScript/TypeScript, .NET, and Python.

ai-guide

This guide is dedicated to Large Language Models (LLMs) that you can run on your home computer. It assumes your PC is a lower-end, non-gaming setup.

classifai

Supercharge WordPress Content Workflows and Engagement with Artificial Intelligence. Tap into leading cloud-based services like OpenAI, Microsoft Azure AI, Google Gemini and IBM Watson to augment your WordPress-powered websites. Publish content faster while improving SEO performance and increasing audience engagement. ClassifAI integrates Artificial Intelligence and Machine Learning technologies to lighten your workload and eliminate tedious tasks, giving you more time to create original content that matters.

chatbot-ui

Chatbot UI is an open-source AI chat app that allows users to create and deploy their own AI chatbots. It is easy to use and can be customized to fit any need. Chatbot UI is perfect for businesses, developers, and anyone who wants to create a chatbot.

BricksLLM

BricksLLM is a cloud native AI gateway written in Go. Currently, it provides native support for OpenAI, Anthropic, Azure OpenAI and vLLM. BricksLLM aims to provide enterprise-level infrastructure that can power any LLM production use case. Here are some use cases for BricksLLM:

- Set LLM usage limits for users on different pricing tiers

- Track LLM usage on a per-user and per-organization basis

- Block or redact requests containing PII

- Improve LLM reliability with failovers, retries and caching

- Distribute API keys with rate limits and cost limits for internal development/production use cases

- Distribute API keys with rate limits and cost limits for students

uAgents

uAgents is a Python library developed by Fetch.ai that allows for the creation of autonomous AI agents. These agents can perform various tasks on a schedule or take action on various events. uAgents are easy to create and manage, and they are connected to a fast-growing network of other uAgents. They are also secure, with cryptographically secured messages and wallets.

griptape

Griptape is a modular Python framework for building AI-powered applications that securely connect to your enterprise data and APIs. It offers developers the ability to maintain control and flexibility at every step. Griptape's core components include Structures (Agents, Pipelines, and Workflows), Tasks, Tools, Memory (Conversation Memory, Task Memory, and Meta Memory), Drivers (Prompt and Embedding Drivers, Vector Store Drivers, Image Generation Drivers, Image Query Drivers, SQL Drivers, Web Scraper Drivers, and Conversation Memory Drivers), Engines (Query Engines, Extraction Engines, Summary Engines, Image Generation Engines, and Image Query Engines), and additional components (Rulesets, Loaders, Artifacts, Chunkers, and Tokenizers). Griptape enables developers to create AI-powered applications with ease and efficiency.