LLM4DB

Stars: 126

LLM4DB is a repository focused on the intersection of Large Language Models (LLM) and Database technologies. It covers various aspects such as data processing, data analysis, database optimization, and data management for LLM. The repository includes works on data cleaning, entity matching, schema matching, data discovery, NL2SQL, data exploration, data visualization, configuration tuning, query optimization, and anomaly diagnosis using LLMs. It aims to provide insights and advancements in leveraging LLMs for improving data processing, analysis, and database management tasks.

README:

We continuously update the works on (1) LLM for Data Processing, (2) LLM for Data Analysis, (3) LLM for Data System Optimization, and (4) Data Management for LLM, based on our past tutorial [slides].

Kindly let us know if we have missed any great papers. Thank you! :)

- 0. System & Review

- 1. LLM for Data Processing

- 2. LLM for Data Analysis

- 3. LLM for Database Optimization

- 4. Data Management for LLM

NeurDB: On the Design and Implementation of an AI-powered Autonomous Database

Zhanhao Zhao, Shaofeng Cai, Haotian Gao, Hexiang Pan, Siqi Xiang, Naili Xing, Gang Chen, Beng Chin Ooi, Yanyan Shen, Yuncheng Wu, Meihui Zhang. CIDR 2025. [pdf]

How Large Language Models Will Disrupt Data Management

Raul Castro Fernandez, Aaron J. Elmore, Michael J. Franklin, Sanjay Krishnan, Chenhao Tan. VLDB 2023. [pdf]

From Large Language Models to Databases and Back: A Discussion on Research and Education

Sihem Amer-Yahia, Angela Bonifati, Lei Chen, Guoliang Li, Kyuseok Shim, Jianliang Xu, Xiaochun Yang. SIGMOD Record. [pdf]

Applications and Challenges for Large Language Models: From Data Management Perspective

Meihui Zhang, Zhaoxuan Ji, Zhaojing Luo, Yuncheng Wu, Chengliang Chai. ICDE 2024. [pdf]

Demystifying Data Management for Large Language Models

Xupeng Miao, Zhihao Jia, and Bin Cui. SIGMOD 2024. [pdf]

DB-GPT: Large Language Model Meets Database

Xuanhe Zhou, Zhaoyan Sun, Guoliang Li. Data Science and Engineering 2023. [pdf]

LLM-Enhanced Data Management

Xuanhe Zhou, Xinyang Zhao, Guoliang Li. arxiv 2024. [pdf]

Trustworthy and Efficient LLMs Meet Databases

Kyoungmin Kim, Anastasia Ailamaki. arxiv 2024. [pdf]

Can Foundation Models Wrangle Your Data?

Avanika Narayan, Ines Chami, Laurel J. Orr, Christopher Ré. VLDB 2022. [pdf]

Data Management For Training Large Language Models: A Survey

Zige Wang, Wanjun Zhong, Yufei Wang, Qi Zhu, Fei Mi, Baojun Wang, Lifeng Shang, Xin Jiang, Qun Liu. arxiv 2024. [pdf]

When Large Language Models Meet Vector Databases: A Survey

Zhi Jing, Yongye Su, Yikun Han, Bo Yuan, Haiyun Xu, Chunjiang Liu, Kehai Chen, Min Zhang. arxiv 2024. [pdf]

Survey of Vector Database Management Systems

James Jie Pan, Jianguo Wang, Guoliang Li. arxiv 2023. [pdf]

There are many related works; this list prioritizes papers from the database field.

GIDCL: A Graph-Enhanced Interpretable Data Cleaning Framework with Large Language Models

Mengyi Yan, Yaoshu Wang, Yue Wang, Xiaoye Miao, Jianxin Li. SIGMOD 2025. [pdf]

Mind the Data Gap: Bridging LLMs to Enterprise Data Integration

Moe Kayali, Fabian Wenz, Nesime Tatbul, Çağatay Demiralp. CIDR 2025. [pdf]

AutoDCWorkflow: LLM-based Data Cleaning Workflow Auto-Generation and Benchmark

Lan Li, Liri Fang, Vetle I. Torvik. arxiv 2024. [pdf]

Jellyfish: A Large Language Model for Data Preprocessing

Haochen Zhang, Yuyang Dong, Chuan Xiao, Masafumi Oyamada. arxiv 2024. [pdf]

CleanAgent: Automating Data Standardization with LLM-based Agents

Danrui Qi, Jiannan Wang. arxiv 2024. [pdf]

LLMClean: Context-Aware Tabular Data Cleaning via LLM-Generated OFDs

Fabian Biester, Mohamed Abdelaal, Daniel Del Gaudio. arxiv 2024. [pdf]

LLMs with User-defined Prompts as Generic Data Operators for Reliable Data Processing

Luyi Ma, Nikhil Thakurdesai, Jiao Chen, Jianpeng Xu, Evren Körpeoglu, Sushant Kumar, Kannan Achan. IEEE Big Data 2023. [pdf]

SEED: Domain-Specific Data Curation With Large Language Models

Zui Chen, Lei Cao, Sam Madden, Tim Kraska, Zeyuan Shang, Ju Fan, Nan Tang, Zihui Gu, Chunwei Liu, Michael Cafarella. arxiv 2023. [pdf]

Large Language Models as Data Preprocessors

Haochen Zhang, Yuyang Dong, Chuan Xiao, Masafumi Oyamada. arxiv 2023. [pdf]

Data Cleaning Using Large Language Models

Shuo Zhang, Zezhou Huang, Eugene Wu. arxiv 2024. [pdf]

Match, Compare, or Select? An Investigation of Large Language Models for Entity Matching

Tianshu Wang, Hongyu Lin, Xiaoyang Chen, Xianpei Han, Hao Wang, Zhenyu Zeng, Le Sun. COLING 2025. [pdf]

Cost-Effective In-Context Learning for Entity Resolution: A Design Space Exploration

Meihao Fan, Xiaoyue Han, Ju Fan, Chengliang Chai, Nan Tang, Guoliang Li, Xiaoyong Du. ICDE 2024. [pdf]

In Situ Neural Relational Schema Matcher

Xingyu Du, Gongsheng Yuan, Sai Wu, Gang Chen, and Peng Lu. ICDE 2024. [pdf]

KcMF: A Knowledge-compliant Framework for Schema and Entity Matching with Fine-tuning-free LLMs

Yongqin Xu, Huan Li, Ke Chen, Lidan Shou. arxiv 2024. [pdf]

Unicorn: A Unified Multi-tasking Model for Supporting Matching Tasks in Data Integration

Jianhong Tu, Ju Fan, Nan Tang, Peng Wang, Guoliang Li, Xiaoyong Du. SIGMOD 2023. [pdf]

Entity matching using large language models

Ralph Peeters, Christian Bizer. arxiv 2023. [pdf]

Deep Entity Matching with Pre-Trained Language Models

Yuliang Li, Jinfeng Li, Yoshihiko Suhara, AnHai Doan, Wang-Chiew Tan. VLDB 2021. [pdf]

Dual-Objective Fine-Tuning of BERT for Entity Matching

Ralph Peeters, Christian Bizer. VLDB 2021. [pdf]

Knowledge Graph-based Retrieval-Augmented Generation for Schema Matching

Chuangtao Ma, Sriom Chakrabarti, Arijit Khan, Bálint Molnár. arxiv 2024. [pdf]

Magneto: Combining Small and Large Language Models for Schema Matching

Yurong Liu, Eduardo Pena, Aécio Santos, Eden Wu, Juliana Freire. arxiv 2024. [pdf]

Schema Matching with Large Language Models: an Experimental Study

Marcel Parciak, Brecht Vandevoort, Frank Neven, Liesbet M. Peeters, Stijn Vansummeren. arxiv 2024. [pdf]

Schema Matching using Pre-Trained Language Models

Yunjia Zhang, Avrilia Floratou, Joyce Cahoon, Subru Krishnan, Andreas C. Müller, Dalitso Banda, Fotis Psallidas, Jignesh M. Patel. ICDE 2023. [pdf]

CHORUS: Foundation Models for Unified Data Discovery and Exploration

Moe Kayali, Anton Lykov, Ilias Fountalis, Nikolaos Vasiloglou, Dan Olteanu, Dan Suciu. VLDB 2024. [pdf]

Language Models Enable Simple Systems for Generating Structured Views of Heterogeneous Data Lakes

Simran Arora, Brandon Yang, Sabri Eyuboglu, Avanika Narayan, Andrew Hojel, Immanuel Trummer, Christopher Ré. VLDB 2024. [pdf]

DeepJoin: Joinable Table Discovery with Pre-trained Language Models

Yuyang Dong, Chuan Xiao, Takuma Nozawa, Masafumi Enomoto, Masafumi Oyamada. VLDB 2023. [pdf]

There are many related works; this list prioritizes NL2SQL papers from the database field.

Text2SQL is Not Enough: Unifying AI and Databases with TAG

Asim Biswal, Siddharth Jha, Carlos Guestrin, Matei Zaharia, Joseph E Gonzalez, Amog Kamsetty, Shu Liu, Liana Patel. CIDR 2025. [pdf]

Are Your LLM-based Text-to-SQL Models Secure? Exploring SQL Injection via Backdoor Attacks

Meiyu Lin, Haichuan Zhang, Jiale Lao, Renyuan Li, Yuanchun Zhou, Carl Yang, Yang Cao, Mingjie Tang. arxiv 2025. [pdf]

CoddLLM: Empowering Large Language Models for Data Analytics

Jiani Zhang, Hengrui Zhang, Rishav Chakravarti, Yiqun Hu, Patrick Ng, Asterios Katsifodimos, Huzefa Rangwala, George Karypis, Alon Halevy. arxiv 2025. [pdf]

The Dawn of Natural Language to SQL: Are We Fully Ready?

Boyan Li, Yuyu Luo, Chengliang Chai, Guoliang Li, Nan Tang. VLDB 2024. [pdf]

PURPLE: Making a Large Language Model a Better SQL Writer

Tonghui Ren, Yuankai Fan, Zhenying He, Ren Huang, Jiaqi Dai, Can Huang, Yinan Jing, Kai Zhang, Yifan Yang, X. Sean Wang. ICDE 2024. [pdf]

SM3-Text-to-Query: Synthetic Multi-Model Medical Text-to-Query Benchmark

Sithursan Sivasubramaniam, Cedric Osei-Akoto, Yi Zhang, Kurt Stockinger, Jonathan Fuerst. NeurIPS 2024. [pdf]

Towards Automated Cross-domain Exploratory Data Analysis through Large Language Models

Jun-Peng Zhu, Boyan Niu, Peng Cai, Zheming Ni, Jianwei Wan, Kai Xu, Jiajun Huang, Shengbo Ma, Bing Wang, Xuan Zhou, Guanglei Bao, Donghui Zhang, Liu Tang, and Qi Liu. arxiv 2024. [pdf]

Spider 2.0: Evaluating Language Models on Real-World Enterprise Text-to-SQL Workflows

Fangyu Lei, Jixuan Chen, Yuxiao Ye, Ruisheng Cao, Dongchan Shin, Hongjin Su, Zhaoqing Suo, Hongcheng Gao, Wenjing Hu, Pengcheng Yin, Victor Zhong, Caiming Xiong, Ruoxi Sun, Qian Liu, Sida Wang, Tao Yu. arxiv 2024. [pdf]

SiriusBI: Building End-to-End Business Intelligence Enhanced by Large Language Models

Jie Jiang, Haining Xie, Yu Shen, Zihan Zhang, Meng Lei, Yifeng Zheng, Yide Fang, Chunyou Li, Danqing Huang, Wentao Zhang, Yang Li, Xiaofeng Yang, Bin Cui, Peng Chen. arxiv 2024. [pdf]

Grounding Natural Language to SQL Translation with Data-Based Self-Explanations

Yuankai Fan, Tonghui Ren, Can Huang, Zhenying He, X. Sean Wang. arxiv 2024. [pdf]

LR-SQL: A Supervised Fine-Tuning Method for Text2SQL Tasks under Low-Resource Scenarios

Wen Wuzhenghong, Zhang Yongpan, Pan Su, Sun Yuwei, Lu Pengwei, Ding Cheng. arxiv 2024. [pdf]

CHASE-SQL: Multi-Path Reasoning and Preference Optimized Candidate Selection in Text-to-SQL

Mohammadreza Pourreza, Hailong Li, Ruoxi Sun, Yeounoh Chung, Shayan Talaei, Gaurav Tarlok Kakkar, Yu Gan, Amin Saberi, Fatma Ozcan, Sercan O. Arik. arxiv 2024. [pdf]

MoMQ: Mixture-of-Experts Enhances Multi-Dialect Query Generation across Relational and Non-Relational Databases

Zhisheng Lin, Yifu Liu, Zhiling Luo, Jinyang Gao, Yu Li. arxiv 2024. [pdf]

Generating highly customizable python code for data processing with large language models

Immanuel Trummer. VLDB Journal 2025. [pdf]

From BERT to GPT-3 Codex: Harnessing the Potential of Very Large Language Models for Data Management

Immanuel Trummer. VLDB 2022. [pdf]

Few-shot Text-to-SQL Translation using Structure and Content Prompt Learning

Zihui Gu, Ju Fan, Nan Tang, et al. SIGMOD 2023. [pdf]

AutoDDG: Automated Dataset Description Generation using Large Language Models

Haoxiang Zhang, Yurong Liu, Wei-Lun (Allen) Hung, Aécio Santos, Juliana Freire. arxiv 2025. [pdf]

DB-GPT: Empowering Database Interactions with Private Large Language Models

Siqiao Xue, Caigao Jiang, Wenhui Shi, Fangyin Cheng, Keting Chen, Hongjun Yang, Zhiping Zhang, Jianshan He, Hongyang Zhang, Ganglin Wei, Wang Zhao, Fan Zhou, Danrui Qi, Hong Yi, Shaodong Liu, Faqiang Chen. arxiv 2023. [pdf]

LLM4Vis: Explainable Visualization Recommendation using ChatGPT

Lei Wang, Songheng Zhang, Yun Wang, Ee-Peng Lim, Yong Wang. EMNLP 2023. [pdf]

λ-Tune: Harnessing Large Language Models for Automated Database System Tuning

Victor Giannakouris, Immanuel Trummer. SIGMOD 2025. [pdf]

Automatic Database Configuration Debugging using Retrieval-Augmented Language Models

Sibei Chen, Ju Fan, Bin Wu, Nan Tang, Chao Deng, Pengyi PYW Wang, Ye Li, Jian Tan, Feifei Li, Jingren Zhou, Xiaoyong Du. SIGMOD 2025. [pdf]

LLMIdxAdvis: Resource-Efficient Index Advisor Utilizing Large Language Model

Xinxin Zhao, Haoyang Li, Jing Zhang, Xinmei Huang, Tieying Zhang, Jianjun Chen, Rui Shi, Cuiping Li, Hong Chen. arxiv 2025. [pdf]

LATuner: An LLM-Enhanced Database Tuning System Based on Adaptive Surrogate Model

Fan C, Pan Z, Sun W, et al. Joint European Conference on Machine Learning and Knowledge Discovery in Databases 2024. [pdf]

LLMTune: Accelerate Database Knob Tuning with Large Language Models

Huang X, Li H, Zhang J, et al. arXiv 2024. [pdf]

Is Large Language Model Good at Database Knob Tuning? A Comprehensive Experimental Evaluation

Yiyan Li, Haoyang Li, Zhao Pu, Jing Zhang, Xinyi Zhang, Tao Ji, Luming Sun, Cuiping Li, Hong Chen. arXiv 2024. [pdf]

GPTuner: A Manual-Reading Database Tuning System via GPT-Guided Bayesian Optimization

Jiale Lao, Yibo Wang, Yufei Li, Jianping Wang, Yunjia Zhang, Zhiyuan Cheng, Wanghu Chen, Mingjie Tang, Jianguo Wang. VLDB 2024. [pdf]

DB-BERT: a Database Tuning Tool that “Reads the Manual”

Immanuel Trummer. SIGMOD 2022. [pdf]

Query Rewriting via LLMs

Sriram Dharwada, Himanshu Devrani, Jayant Haritsa, Harish Doraiswamy. arxiv 2025. [pdf]

Can Large Language Models Be Query Optimizer for Relational Databases?

Jie Tan, Kangfei Zhao, Rui Li, Jeffrey Xu Yu, Chengzhi Piao, Hong Cheng, Helen Meng, Deli Zhao, and Yu Rong. arxiv 2025. [pdf]

A Query Optimization Method Utilizing Large Language Models

Zhiming Yao, Haoyang Li, Jing Zhang, Cuiping Li, Hong Chen. arxiv 2025. [pdf]

LLM-R2: A Large Language Model Enhanced Rule-based Rewrite System for Boosting Query Efficiency

Zhaodonghui Li, Haitao Yuan, Huiming Wang, Gao Cong, Lidong Bing. VLDB 2024. [pdf]

The Unreasonable Effectiveness of LLMs for Query Optimization

Peter Akioyamen, Zixuan Yi, Ryan Marcus. NeurIPS 2024 (Workshop). [pdf]

R-Bot: An LLM-based Query Rewrite System

Zhaoyan Sun, Xuanhe Zhou, Guoliang Li. arxiv 2024. [pdf]

Query Rewriting via Large Language Models

Jie Liu, Barzan Mozafari. arxiv 2024. [pdf]

RCRank: Multimodal Ranking of Root Causes of Slow Queries in Cloud Database Systems

Biao Ouyang, Yingying Zhang, Hanyin Cheng, Yang Shu, Chenjuan Guo, Bin Yang, Qingsong Wen, Lunting Fan, Christian S. Jensen. VLDB 2025. [pdf]

D-Bot: Database Diagnosis System using Large Language Models

Xuanhe Zhou, Guoliang Li, Zhaoyan Sun, Zhiyuan Liu, Weize Chen, et al. VLDB 2024. [pdf] [code]

Panda: Performance debugging for databases using LLM agents

Vikramank Singh, Kapil Eknath Vaidya, ..., Tim Kraska. CIDR 2024. [pdf]

DBG-PT: A Large Language Model Assisted Query Performance Regression Debugger

Victor Giannakouris, Immanuel Trummer. VLDB 2024 (Demo). [pdf]

Query Performance Explanation through Large Language Model for HTAP Systems

Haibo Xiu, Li Zhang, Tieying Zhang, Jun Yang, Jianjun Chen. arxiv 2024. [pdf]

LLM As DBA

Xuanhe Zhou, Guoliang Li, Zhiyuan Liu. arXiv 2023. [pdf]

Data-Juicer: A One-Stop Data Processing System for Large Language Models

Daoyuan Chen, Yilun Huang, Zhijian Ma, Hesen Chen, Xuchen Pan, Ce Ge, Dawei Gao, Yuexiang Xie, Zhaoyang Liu, Jinyang Gao, Yaliang Li, Bolin Ding, Jingren Zhou. SIGMOD 2024. [pdf]

CoachLM: Automatic Instruction Revisions Improve the Data Quality in LLM Instruction Tuning

Yilun Liu, Shimin Tao, Xiaofeng Zhao, Ming Zhu, Wenbing Ma, Junhao Zhu, Chang Su, et al. ICDE 2024. [pdf]

Relational Database Augmented Large Language Model

Zongyue Qin, Chen Luo, Zhengyang Wang, Haoming Jiang, Yizhou Sun. arxiv 2024. [pdf]

SAGE: A Framework of Precise Retrieval for RAG

Jintao Zhang, Guoliang Li, Jinyang Su. ICDE 2025. [pdf]

Alternative AI tools for LLM4DB

Similar Open Source Tools

LLM4DB

LLM4DB is a repository focused on the intersection of Large Language Models (LLMs) and Database technologies. It covers various aspects such as data processing, data analysis, database optimization, and data management for LLMs. The repository includes research papers, tools, and techniques related to leveraging LLMs for tasks like data cleaning, entity matching, schema matching, data discovery, NL2SQL, data exploration, data visualization, knob tuning, query optimization, and database diagnosis.

Awesome-LLM-RAG

This repository, Awesome-LLM-RAG, aims to record advanced papers on Retrieval Augmented Generation (RAG) in Large Language Models (LLMs). It serves as a resource hub for researchers interested in promoting their work related to LLM RAG by updating paper information through pull requests. The repository covers various topics such as workshops, tutorials, papers, surveys, benchmarks, retrieval-enhanced LLMs, RAG instruction tuning, RAG in-context learning, RAG embeddings, RAG simulators, RAG search, RAG long-text and memory, RAG evaluation, RAG optimization, and RAG applications.

Prompt4ReasoningPapers

Prompt4ReasoningPapers is a repository dedicated to reasoning with language model prompting. It provides a comprehensive survey of cutting-edge research on reasoning abilities with language models. The repository includes papers, methods, analysis, resources, and tools related to reasoning tasks. It aims to support various real-world applications such as medical diagnosis, negotiation, etc.

Awesome-LLM-Preference-Learning

The repository 'Awesome-LLM-Preference-Learning' is the official repository of a survey paper titled 'Towards a Unified View of Preference Learning for Large Language Models: A Survey'. It contains a curated list of papers related to preference learning for Large Language Models (LLMs). The repository covers various aspects of preference learning, including on-policy and off-policy methods, feedback mechanisms, reward models, algorithms, evaluation techniques, and more. The papers included in the repository explore different approaches to aligning LLMs with human preferences, improving mathematical reasoning in LLMs, enhancing code generation, and optimizing language model performance.

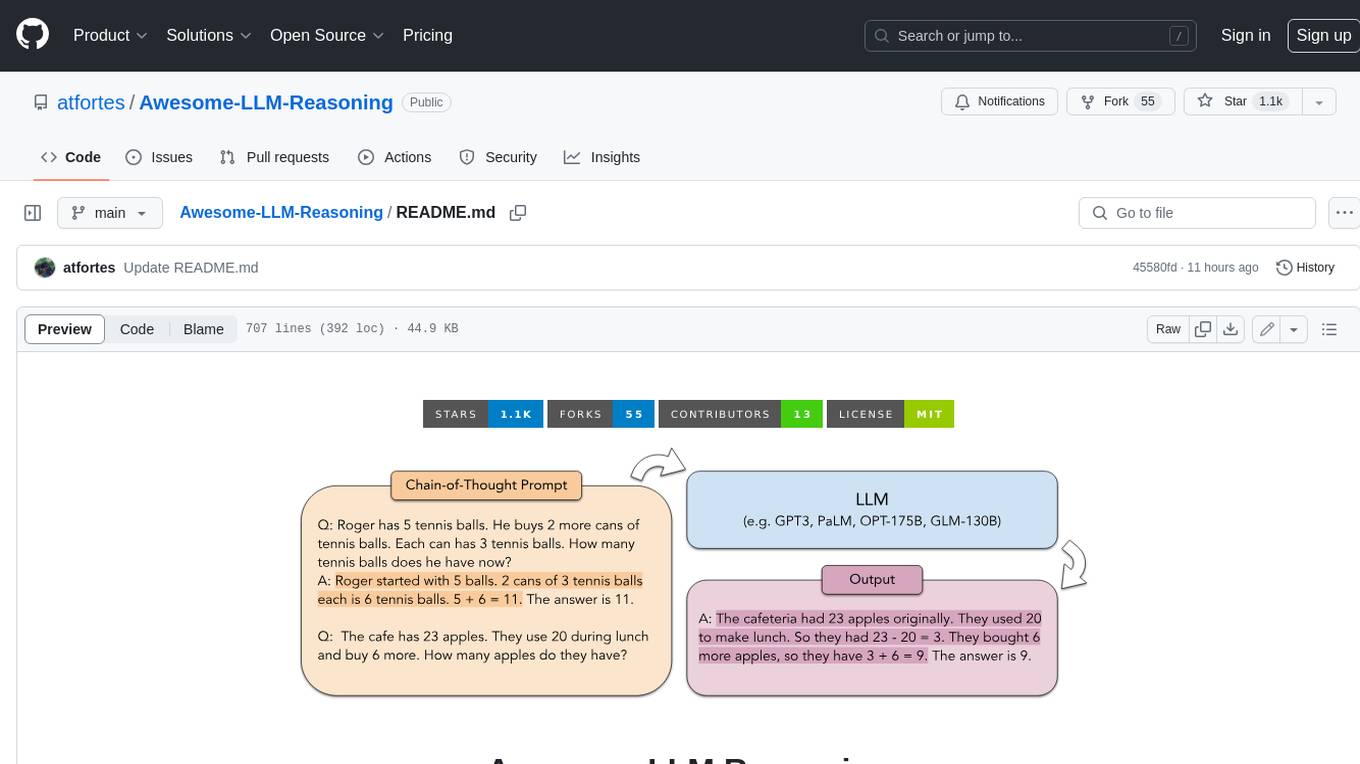

Awesome-LLM-Reasoning

Curated collection of papers and resources on how to unlock the reasoning ability of LLMs and MLLMs. Large Language Models (LLMs) have revolutionized the NLP landscape, showing improved performance and sample efficiency over smaller models. However, increasing model size alone has not proved sufficient for high performance on challenging reasoning tasks, such as solving arithmetic or commonsense problems. This curated collection of papers and resources presents the latest advancements in unlocking the reasoning abilities of LLMs and Multimodal LLMs (MLLMs). It covers various techniques, benchmarks, and applications, providing a comprehensive overview of the field.

awesome-generative-information-retrieval

This repository contains a curated list of resources on generative information retrieval, including research papers, datasets, tools, and applications. Generative information retrieval is a subfield of information retrieval that uses generative models to generate new documents or passages of text that are relevant to a given query. This can be useful for a variety of tasks, such as question answering, summarization, and document generation. The resources in this repository are intended to help researchers and practitioners stay up-to-date on the latest advances in generative information retrieval.

LLMAgentPapers

LLM Agents Papers is a repository containing must-read papers on Large Language Model Agents. It covers a wide range of topics related to language model agents, including interactive natural language processing, large language model-based autonomous agents, personality traits in large language models, memory enhancements, planning capabilities, tool use, multi-agent communication, and more. The repository also provides resources such as benchmarks, types of tools, and a tool list for building and evaluating language model agents. Contributors are encouraged to add important works to the repository.

ai4math-papers

The 'ai4math-papers' repository contains a collection of research papers related to AI applications in mathematics, including automated theorem proving, synthetic theorem generation, autoformalization, proof refactoring, premise selection, benchmarks, human-in-the-loop interactions, and constructing examples/counterexamples. The papers cover various topics such as neural theorem proving, reinforcement learning for theorem proving, generative language modeling, formal mathematics statement curriculum learning, and more. The repository serves as a valuable resource for researchers and practitioners interested in the intersection of AI and mathematics.

awesome-llm-role-playing-with-persona

Awesome-llm-role-playing-with-persona is a curated list of resources for large language models for role-playing with assigned personas. It includes papers and resources related to persona-based dialogue systems, personalized response generation, psychology of LLMs, biases in LLMs, and more. The repository aims to provide a comprehensive collection of research papers and tools for exploring role-playing abilities of large language models in various contexts.

SLMs-Survey

SLMs-Survey is a comprehensive repository that includes papers and surveys on small language models. It covers topics such as technology, on-device applications, efficiency, enhancements for LLMs, and trustworthiness. The repository provides a detailed overview of existing SLMs, their architecture, enhancements, and specific applications in various domains. It also includes information on SLM deployment optimization techniques and the synergy between SLMs and LLMs.

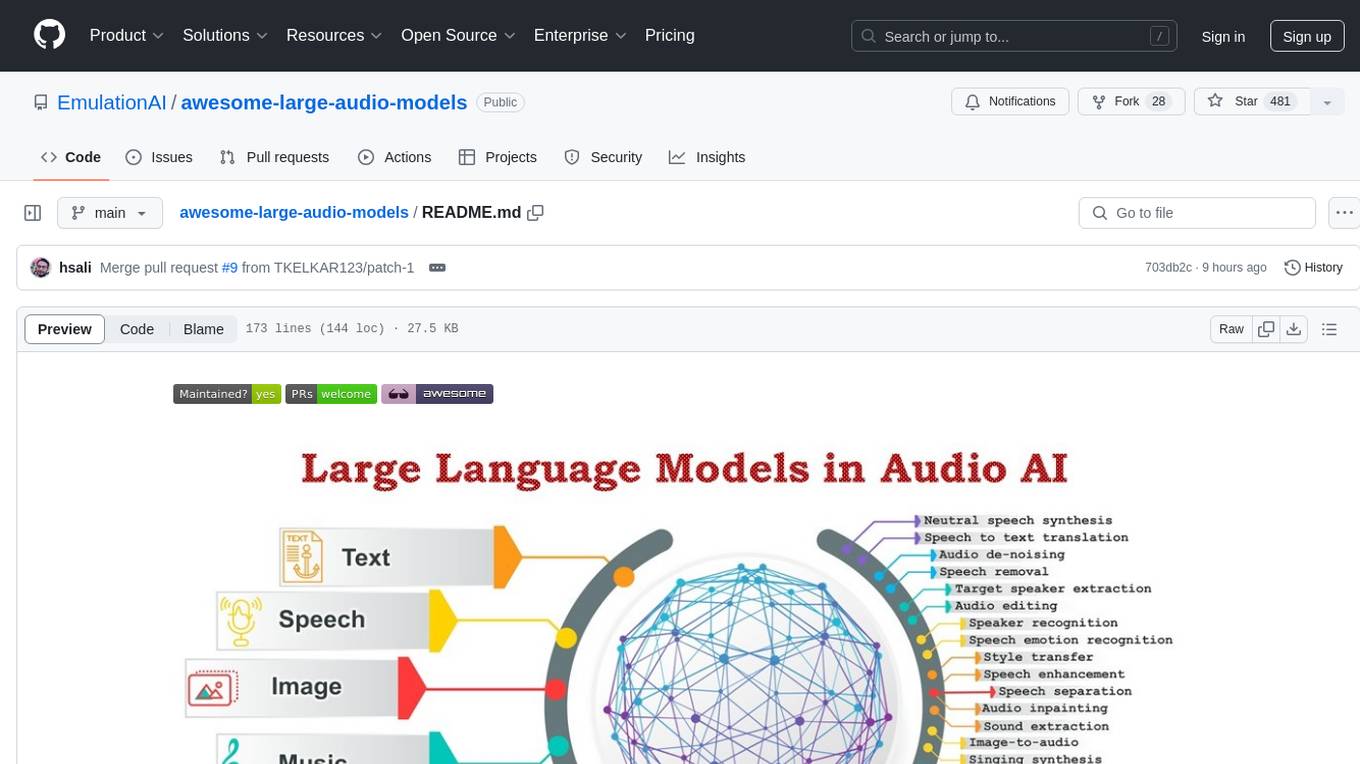

awesome-large-audio-models

This repository is a curated list of awesome large AI models in audio signal processing, focusing on the application of large language models to audio tasks. It includes survey papers, popular large audio models, automatic speech recognition, neural speech synthesis, speech translation, other speech applications, large audio models in music, and audio datasets. The repository aims to provide a comprehensive overview of recent advancements and challenges in applying large language models to audio signal processing, showcasing the efficacy of transformer-based architectures in various audio tasks.

Awesome-Multimodal-LLM-for-Code

This repository contains papers, methods, benchmarks, and evaluations for code generation under multimodal scenarios. It covers UI code generation, scientific code generation, slide code generation, visually rich programming, logo generation, program repair, UML code generation, and general benchmarks.

For similar jobs

weave

Weave is a toolkit for developing Generative AI applications, built by Weights & Biases. With Weave, you can log and debug language model inputs, outputs, and traces; build rigorous, apples-to-apples evaluations for language model use cases; and organize all the information generated across the LLM workflow, from experimentation to evaluations to production. Weave aims to bring rigor, best-practices, and composability to the inherently experimental process of developing Generative AI software, without introducing cognitive overhead.

LLMStack

LLMStack is a no-code platform for building generative AI agents, workflows, and chatbots. It allows users to connect their own data, internal tools, and GPT-powered models without any coding experience. LLMStack can be deployed to the cloud or on-premise and can be accessed via HTTP API or triggered from Slack or Discord.

VisionCraft

The VisionCraft API is a free API for using over 100 different AI models. From images to sound.

kaito

Kaito is an operator that automates the AI/ML inference model deployment in a Kubernetes cluster. It manages large model files using container images, avoids tuning deployment parameters to fit GPU hardware by providing preset configurations, auto-provisions GPU nodes based on model requirements, and hosts large model images in the public Microsoft Container Registry (MCR) if the license allows. Using Kaito, the workflow of onboarding large AI inference models in Kubernetes is largely simplified.

PyRIT

PyRIT is an open access automation framework designed to empower security professionals and ML engineers to red team foundation models and their applications. It automates AI Red Teaming tasks to allow operators to focus on more complicated and time-consuming tasks and can also identify security harms such as misuse (e.g., malware generation, jailbreaking), and privacy harms (e.g., identity theft). The goal is to allow researchers to have a baseline of how well their model and entire inference pipeline is doing against different harm categories and to be able to compare that baseline to future iterations of their model. This allows them to have empirical data on how well their model is doing today, and detect any degradation of performance based on future improvements.

tabby

Tabby is a self-hosted AI coding assistant, offering an open-source and on-premises alternative to GitHub Copilot. It boasts several key features:

- Self-contained, with no need for a DBMS or cloud service.
- OpenAPI interface, easy to integrate with existing infrastructure (e.g., Cloud IDE).
- Supports consumer-grade GPUs.

spear

SPEAR (Simulator for Photorealistic Embodied AI Research) is a powerful tool for training embodied agents. It features 300 unique virtual indoor environments with 2,566 unique rooms and 17,234 unique objects that can be manipulated individually. Each environment is designed by a professional artist and features detailed geometry, photorealistic materials, and a unique floor plan and object layout. SPEAR is implemented as Unreal Engine assets and provides an OpenAI Gym interface for interacting with the environments via Python.

Magick

Magick is a groundbreaking visual AIDE (Artificial Intelligence Development Environment) for no-code data pipelines and multimodal agents. Magick can connect to other services and comes with nodes and templates well-suited for intelligent agents, chatbots, complex reasoning systems and realistic characters.