awesome-llm-role-playing-with-persona

Awesome-llm-role-playing-with-persona: a curated list of resources for large language models for role-playing with assigned personas

Stars: 514

Awesome-llm-role-playing-with-persona is a curated list of resources for large language models for role-playing with assigned personas. It includes papers and resources related to persona-based dialogue systems, personalized response generation, psychology of LLMs, biases in LLMs, and more. The repository aims to provide a comprehensive collection of research papers and tools for exploring role-playing abilities of large language models in various contexts.

README:

Awesome-llm-role-playing-agents: a curated list of resources for large language models, personas, and role-playing agents.

This repo primarily focuses on character role-playing, such as fictional characters, celebrities, and historical figures. While role-playing language agents are related to many research topics, such as multi-agent systems and long-context models, we cannot guarantee the inclusion of papers in these areas.

- 2024-10-09 🎉 Our survey paper "From Persona to Personalization: A Survey on Role-Playing Language Agents" [arxiv] has been accepted to TMLR!

- 2024-08-19 FYI: Boson AI has released their benchmark [RPBench Leaderboard] and open-source model [Higgs-Llama-3 70B V2], which ranks first on it.

- 2024-06-28 We reorganized this repo, putting more focus on role-playing agents.

- 2024-04-30 We are super excited to announce our survey paper: "From Persona to Personalization: A Survey on Role-Playing Language Agents" [arxiv]

- 2024-04-17 We are looking for collaborators interested in research on this topic. Contact us via email: [email protected]

- 2023-10-30 We created this repository to maintain a paper list on LLM Role-Playing with Assigned Personas.

Established Characters

-

From Persona to Personalization: A Survey on Role-Playing Language Agents.

Jiangjie Chen, Xintao Wang, Rui Xu, Siyu Yuan, Yikai Zhang, Wei Shi, Jian Xie, Shuang Li, Ruihan Yang, Tinghui Zhu, Aili Chen, Nianqi Li, Lida Chen, Caiyu Hu, Siye Wu, Scott Ren, Ziquan Fu, Yanghua Xiao. [abs], TMLR 2024.04

-

InCharacter: Evaluating Personality Fidelity in Role-Playing Agents through Psychological Interviews. (Previously: Do Role-Playing Chatbots Capture the Character Personalities? Assessing Personality Traits for Role-Playing Chatbots)

Xintao Wang, Yunze Xiao, Jen-tse Huang, Siyu Yuan, Rui Xu, Haoran Guo, Quan Tu, Yaying Fei, Ziang Leng, Wei Wang, Jiangjie Chen, Cheng Li, Yanghua Xiao. [abs], ACL 2024

-

RoleLLM: Benchmarking, Eliciting, and Enhancing Role-Playing Abilities of Large Language Models.

Zekun Moore Wang, Zhongyuan Peng, Haoran Que, Jiaheng Liu, Wangchunshu Zhou, Yuhan Wu, Hongcheng Guo, Ruitong Gan, Zehao Ni, Man Zhang, Zhaoxiang Zhang, Wanli Ouyang, Ke Xu, Wenhu Chen, Jie Fu, Junran Peng. [abs], Findings of ACL 2024

-

ChatHaruhi: Reviving Anime Character in Reality via Large Language Model.

Cheng Li, Ziang Leng, Chenxi Yan, Junyi Shen, Hao Wang, Weishi MI, Yaying Fei, Xiaoyang Feng, Song Yan, HaoSheng Wang, Linkang Zhan, Yaokai Jia, Pingyu Wu, Haozhen Sun. [abs], 2023.8

-

Character-LLM: A Trainable Agent for Role-Playing.

Yunfan Shao, Linyang Li, Junqi Dai, Xipeng Qiu. [abs], EMNLP 2023

-

Mitigating Hallucination in Fictional Character Role-Play

Nafis Sadeq, Zhouhang Xie, Byungkyu Kang, Prarit Lamba, Xiang Gao, Julian McAuley. [abs] [dataset], Findings of EMNLP 2024

-

CharacterGLM: Customizing Chinese Conversational AI Characters with Large Language Models. Jinfeng Zhou, Zhuang Chen, Dazhen Wan, Bosi Wen, Yi Song, Jifan Yu, Yongkang Huang, Libiao Peng, Jiaming Yang, Xiyao Xiao, Sahand Sabour, Xiaohan Zhang, Wenjing Hou, Yijia Zhang, Yuxiao Dong, Jie Tang, Minlie Huang. [abs], 2023.11

-

PIPPA: A Partially Synthetic Conversational Dataset Tear Gosling, Alpin Dale, Yinhe Zheng. [abs], 2023.08

-

Character is Destiny: Can Large Language Models Simulate Persona-Driven Decisions in Role-Playing?

Rui Xu, Xintao Wang, Jiangjie Chen, Siyu Yuan, Xinfeng Yuan, Jiaqing Liang, Zulong Chen, Xiaoqing Dong, Yanghua Xiao. [abs], 2024.04

-

Large Language Models are Superpositions of All Characters: Attaining Arbitrary Role-play via Self-Alignment. Keming Lu, Bowen Yu, Chang Zhou, Jingren Zhou. [abs], 2024.01

-

Large Language Models Meet Harry Potter: A Bilingual Dataset for Aligning Dialogue Agents with Characters. Nuo Chen, Yan Wang, Haiyun Jiang, Deng Cai, Yuhan Li, Ziyang Chen, Longyue Wang, Jia Li. [abs], 2022.11

-

Evaluating Character Understanding of Large Language Models via Character Profiling from Fictional Works Xinfeng Yuan, Siyu Yuan, Yuhan Cui, Tianhe Lin, Xintao Wang, Rui Xu, Jiangjie Chen, Deqing Yang. [abs], 2024.04

-

From Role-Play to Drama-Interaction: An LLM Solution Weiqi Wu, Hongqiu Wu, Lai Jiang, Xingyuan Liu, Jiale Hong, Hai Zhao, Min Zhang. [abs], Findings of ACL 2024

-

TimeChara: Evaluating Point-in-Time Character Hallucination of Role-Playing Large Language Models Jaewoo Ahn, Taehyun Lee, Junyoung Lim, Jin-Hwa Kim, Sangdoo Yun, Hwaran Lee, Gunhee Kim. [abs], Findings of ACL 2024

-

“Let Your Characters Tell Their Story”: A Dataset for Character-Centric Narrative Understanding. Faeze Brahman, Meng Huang, Oyvind Tafjord, Chao Zhao, Mrinmaya Sachan, Snigdha Chaturvedi. [abs], Findings of EMNLP 2021

-

CharacterEval: A Chinese Benchmark for Role-Playing Conversational Agent Evaluation. Quan Tu, Shilong Fan, Zihang Tian, Rui Yan. [abs], ACL 2024

-

Neeko: Leveraging Dynamic LoRA for Efficient Multi-Character Role-Playing Agent. Xiaoyan Yu, Tongxu Luo, Yifan Wei, Fangyu Lei, Yiming Huang, Hao Peng, Liehuang Zhu. [abs], 2024.02

-

RoleEval: A Bilingual Role Evaluation Benchmark for Large Language Models Tianhao Shen, Sun Li, Quan Tu, Deyi Xiong. [abs], 2023.12

-

Enhancing Role-playing Systems through Aggressive Queries: Evaluation and Improvement Yihong Tang, Jiao Ou, Che Liu, Fuzheng Zhang, Di Zhang, Kun Gai. [abs], 2024.02

-

SocialBench: Sociality Evaluation of Role-Playing Conversational Agents. Hongzhan Chen, Hehong Chen, Ming Yan, Wenshen Xu, Xing Gao, Weizhou Shen, Xiaojun Quan, Chenliang Li, Ji Zhang, Fei Huang, Jingren Zhou. [abs], 2024.03

-

Capturing Minds, Not Just Words: Enhancing Role-Playing Language Models with Personality-Indicative Data Yiting Ran, Xintao Wang, Rui Xu, Xinfeng Yuan, Jiaqing Liang, Yanghua Xiao, Deqing Yang. [abs], Findings of EMNLP 2024

-

MMRole: A Comprehensive Framework for Developing and Evaluating Multimodal Role-Playing Agents Yanqi Dai, Huanran Hu, Lei Wang, Shengjie Jin, Xu Chen, Zhiwu Lu. [abs], 2024.08

-

Revealing the Challenge of Detecting Character Knowledge Errors in LLM Role-Playing Wenyuan Zhang, Jiawei Sheng, Shuaiyi Nie, Zefeng Zhang, Xinghua Zhang, Yongquan He, Tingwen Liu. [abs], 2024.09

Original Characters

-

Scaling Synthetic Data Creation with 1,000,000,000 Personas Xin Chan, Xiaoyang Wang, Dian Yu, Haitao Mi, Dong Yu. [abs], 2024.06

-

Stark: Social Long-Term Multi-Modal Conversation with Persona Commonsense Knowledge Young-Jun Lee, Dokyong Lee, Junyoung Youn, Kyeongjin Oh, Byungsoo Ko, Jonghwan Hyeon, Ho-Jin Choi. [abs], EMNLP 2024

-

ExpertPrompting: Instructing Large Language Models to be Distinguished Experts

Benfeng Xu, An Yang, Junyang Lin, Quan Wang, Chang Zhou, Yongdong Zhang, and Zhendong Mao. [abs], 2023.5

-

Toxicity in ChatGPT: Analyzing Persona-assigned Language Models

Ameet Deshpande, Vishvak Murahari, Tanmay Rajpurohit, Ashwin Kalyan, Karthik Narasimhan. [abs], 2023.4

-

Better Zero-Shot Reasoning with Role-Play Prompting Aobo Kong, Shiwan Zhao, Hao Chen, Qicheng Li, Yong Qin, Ruiqi Sun, Xin Zhou. [abs], 2023.8

-

Bias Runs Deep: Implicit Reasoning Biases in Persona-Assigned LLMs Shashank Gupta, Vaishnavi Shrivastava, Ameet Deshpande, Ashwin Kalyan, Peter Clark, Ashish Sabharwal, Tushar Khot [abs], 2023.11

-

Is "A Helpful Assistant" the Best Role for Large Language Models? A Systematic Evaluation of Social Roles in System Prompts. Mingqian Zheng, Jiaxin Pei, David Jurgens [abs], 2023.11

-

CoMPosT: Characterizing and Evaluating Caricature in LLM Simulations Myra Cheng, Tiziano Piccardi, Diyi Yang. [abs], 2023.10

-

Deciphering Stereotypes in Pre-Trained Language Models. Weicheng Ma, Henry Scheible, Brian Wang, Goutham Veeramachaneni, Pratim Chowdhary, Alan Sun, Andrew Koulogeorge, Lili Wang, Diyi Yang, Soroush Vosoughi. [abs], Findings of EMNLP 2023

-

CultureLLM: Incorporating Cultural Differences into Large Language Models Cheng Li, Mengzhou Chen, Jindong Wang, Sunayana Sitaram, Xing Xie. [abs], 2024.02

-

Beyond Demographics: Aligning Role-playing LLM-based Agents Using Human Belief Networks Yun-Shiuan Chuang, Zach Studdiford, Krirk Nirunwiroj, Agam Goyal, Vincent V. Frigo, Sijia Yang, Dhavan Shah, Junjie Hu, Timothy T. Rogers. [abs], 2024.06

-

LaMP: When Large Language Models Meet Personalization Alireza Salemi, Sheshera Mysore, Michael Bendersky, Hamed Zamani [abs], 2023.04.

-

How Well Can LLMs Echo Us? Evaluating AI Chatbots' Role-Play Ability with ECHO Man Tik Ng, Hui Tung Tse, Jen-tse Huang, Jingjing Li, Wenxuan Wang, Michael R. Lyu. [abs], 2024.04

-

On the steerability of large language models toward data-driven personas Junyi Li, Ninareh Mehrabi, Charith Peris, Palash Goyal, Kai-Wei Chang, Aram Galstyan, Richard Zemel, Rahul Gupta [abs], NAACL 2024.

-

Show, Don’t Tell: Aligning Language Models with Demonstrated Feedback Omar Shaikh, Michelle Lam, Joey Hejna, Yijia Shao, Michael Bernstein, Diyi Yang [abs], 2024.06

-

PersLLM: A Personified Training Approach for Large Language Models Zheni Zeng, Jiayi Chen, Huimin Chen, Yukun Yan, Yuxuan Chen, Zhenghao Liu, Zhiyuan Liu, Maosong Sun [abs], 2024.07

-

LiveChat: A Large-Scale Personalized Dialogue Dataset Automatically Constructed from Live Streaming

Jingsheng Gao, Yixin Lian, Ziyi Zhou, Yuzhuo Fu, Baoyuan Wang. [abs], 2023.6. Tags: streamer persona.

-

COSPLAY: Concept Set Guided Personalized Dialogue Generation Across Both Party Personas. Chen Xu, Piji Li, Wei Wang, Haoran Yang, Siyun Wang, Chuangbai Xiao. [abs], SIGIR 2022.

-

Personalizing Dialogue Agents: I have a dog, do you have pets too? Saizheng Zhang, Emily Dinan, Jack Urbanek, Arthur Szlam, Douwe Kiela, Jason Weston. [abs], ACL 2018.

-

MPCHAT: Towards Multimodal Persona-Grounded Conversation Jaewoo Ahn, Yeda Song, Sangdoo Yun, Gunhee Kim. [abs], ACL 2023. Tags: multimodal persona.

-

Personalized Reward Learning with Interaction-Grounded Learning (IGL) Jessica Maghakian, Paul Mineiro, Kishan Panaganti, Mark Rucker, Akanksha Saran, Cheng Tan [abs], 2022.11.

-

When Large Language Models Meet Personalization: Perspectives of Challenges and Opportunities Jin Chen, Zheng Liu, Xu Huang, Chenwang Wu, Qi Liu, Gangwei Jiang, Yuanhao Pu, Yuxuan Lei, Xiaolong Chen, Xingmei Wang, Defu Lian, Enhong Chen [abs], 2023.07, Survey.

-

Beyond ChatBots: ExploreLLM for Structured Thoughts and Personalized Model Responses Xiao Ma, Swaroop Mishra, Ariel Liu, Sophie Su, Jilin Chen, Chinmay Kulkarni, Heng-Tze Cheng, Quoc Le, Ed Chi [abs], 2023.12.

-

PersonalityChat: Conversation Distillation for Personalized Dialog Modeling with Facts and Traits Ehsan Lotfi, Maxime De Bruyn, Jeska Buhmann, Walter Daelemans. [abs], 2024.01.

-

Generative Agents: Interactive Simulacra of Human Behavior Joon Sung Park, Joseph C. O'Brien, Carrie J. Cai, Meredith Ringel Morris, Percy Liang, Michael S. Bernstein. [abs], 2023.04.

-

Communicative Agents for Software Development Chen Qian, Xin Cong, Wei Liu, Cheng Yang, Weize Chen, Yusheng Su, Yufan Dang, Jiahao Li, Juyuan Xu, Dahai Li, Zhiyuan Liu, Maosong Sun. [abs], 2023.07.

-

Corex: Pushing the Boundaries of Complex Reasoning through Multi-Model Collaboration. Qiushi Sun, Zhangyue Yin, Xiang Li, Zhiyong Wu, Xipeng Qiu, Lingpeng Kong. [abs], 2023.09.

-

AVALONBENCH: Evaluating LLMs Playing the Game of Avalon. Jonathan Light, Min Cai, Sheng Shen, Ziniu Hu. [abs], 2023.10.

-

War and Peace (WarAgent): Large Language Model-based Multi-Agent Simulation of World Wars. Wenyue Hua, Lizhou Fan, Lingyao Li, Kai Mei, Jianchao Ji, Yingqiang Ge, Libby Hemphill, Yongfeng Zhang. [abs], 2023.11.

-

Leveraging Word Guessing Games to Assess the Intelligence of Large Language Models. Tian Liang, Zhiwei He, Jen-tse Huang, Wenxuan Wang, Wenxiang Jiao, Rui Wang, Yujiu Yang, Zhaopeng Tu, Shuming Shi, Xing Wang. [abs], 2023.10.

-

AntEval: Quantitatively Evaluating Informativeness and Expressiveness of Agent Social Interactions. Yuanzhi Liang, Linchao Zhu, Yi Yang. [abs], 2024.01.

-

Exchange-of-Thought: Enhancing Large Language Model Capabilities through Cross-Model Communication Zhangyue Yin, Qiushi Sun, Cheng Chang, Qipeng Guo, Junqi Dai, Xuanjing Huang, Xipeng Qiu. [abs], 2024.01.

-

Exploring Collaboration Mechanisms for LLM Agents: A Social Psychology View Jintian Zhang, Xin Xu, Ningyu Zhang, Ruibo Liu, Bryan Hooi, Shumin Deng. [abs], 2023.10

-

Training socially aligned language models on simulated social interactions Ruibo Liu, Ruixin Yang, Chenyan Jia, Ge Zhang, Diyi Yang, Soroush Vosoughi. [abs], ICLR 2024

-

How Far Are We on the Decision-Making of LLMs? Evaluating LLMs' Gaming Ability in Multi-Agent Environments Jen-tse Huang, Eric John Li, Man Ho Lam, Tian Liang, Wenxuan Wang, Youliang Yuan, Wenxiang Jiao, Xing Wang, Zhaopeng Tu, Michael R. Lyu. [abs], 2024.03

-

AgentGroupChat: An Interactive Group Chat Simulacra For Better Eliciting Collective Emergent Behavior Zhouhong Gu, Xiaoxuan Zhu, Haoran Guo, Lin Zhang, Yin Cai, Hao Shen, Jiangjie Chen, Zheyu Ye, Yifei Dai, Yan Gao, Yao Hu, Hongwei Feng, Yanghua Xiao [abs], 2024.03

-

Is this the real life? Is this just fantasy? The Misleading Success of Simulating Social Interactions With LLMs Xuhui Zhou, Zhe Su, Tiwalayo Eisape, Hyunwoo Kim, Maarten Sap. [abs], 2024.03

-

EvoAgent: Towards Automatic Multi-Agent Generation via Evolutionary Algorithms Siyu Yuan, Kaitao Song, Jiangjie Chen, Xu Tan, Dongsheng Li, Deqing Yang. [abs], 2024.06

-

HoLLMwood: Unleashing the Creativity of Large Language Models in Screenwriting via Role Playing Jing Chen, Xinyu Zhu, Cheng Yang, Chufan Shi, Yadong Xi, Yuxiang Zhang, Junjie Wang, Jiashu Pu, Rongsheng Zhang, Yujiu Yang, Tian Feng [abs], 2024.06

-

Artificial Leviathan: Exploring Social Evolution of LLM Agents Through the Lens of Hobbesian Social Contract Theory. Gordon Dai, Weijia Zhang, Jinhan Li, Siqi Yang, Chidera Onochie Ibe, Srihas Rao, Arthur Caetano, Misha Sra. [abs], 2024.06

-

Dialogue Action Tokens: Steering Language Models in Goal-Directed Dialogue with a Multi-Turn Planner Kenneth Li, Yiming Wang, Fernanda Viégas, Martin Wattenberg. [abs], 2024.06

-

LLM Discussion: Enhancing the Creativity of Large Language Models via Discussion Framework and Role-Play Li-Chun Lu, Shou-Jen Chen, Tsung-Min Pai, Chan-Hung Yu, Hung-yi Lee, Shao-Hua Sun. [abs], COLM 2024

-

Persona Inconstancy in Multi-Agent LLM Collaboration: Conformity, Confabulation, and Impersonation Razan Baltaji, Babak Hemmatian, Lav R. Varshney. [abs], 2024.05

-

Personality Traits in Large Language Models

Mustafa Safdari, Greg Serapio-García, Clément Crepy, Stephen Fitz, Peter Romero, Luning Sun, Marwa Abdulhai, Aleksandra Faust, Maja Matarić. [abs], 2023.7

-

Estimating the Personality of White-Box Language Models.

Saketh Reddy Karra, Son The Nguyen, Theja Tulabandhula. [abs], 2022.4

-

PersonaLLM: Investigating the Ability of GPT-3.5 to Express Personality Traits and Gender Differences.

Hang Jiang, Xiajie Zhang, Xubo Cao, Jad Kabbara. [abs], 2023.5

-

Does GPT-3 Demonstrate Psychopathy? Evaluating Large Language Models from a Psychological Perspective.

Xingxuan Li, Yutong Li, Shafiq Joty, Linlin Liu, Fei Huang, Lin Qiu, Lidong Bing. [abs], 2022.12

-

Evaluating and Inducing Personality in Pre-trained Language Models. Guangyuan Jiang, Manjie Xu, Song-Chun Zhu, Wenjuan Han, Chi Zhang, Yixin Zhu. [abs], 2022.6

-

Revisiting the Reliability of Psychological Scales on Large Language Models (Previously: ChatGPT an ENFJ, Bard an ISTJ:Empirical Study on Personalities of Large Language Models.) Jen-tse Huang, Wenxuan Wang, Man Ho Lam, Eric John Li, Wenxiang Jiao, Michael R. Lyu. [abs], 2023.5

-

Do LLMs Possess a Personality? Making the MBTI Test an Amazing Evaluation for Large Language Models.

Keyu Pan, Yawen Zeng. [abs], 2023.7

-

Can ChatGPT Assess Human Personalities? A General Evaluation Framework.

Haocong Rao, Cyril Leung, Chunyan Miao. [abs], 2023.3

-

Who is GPT-3? An Exploration of Personality, Values and Demographics.

Marilù Miotto, Nicola Rossberg, Bennett Kleinberg. [abs], 2022.9

-

Editing Personality for LLMs

Shengyu Mao, Ningyu Zhang, Xiaohan Wang, Mengru Wang, Yunzhi Yao, Yong Jiang, Pengjun Xie, Fei Huang, Huajun Chen. [abs], 2023.10

-

MBTI Personality Prediction for Fictional Characters Using Movie Scripts

Yisi Sang, Xiangyang Mou, Mo Yu, Dakuo Wang, Jing Li, Jeffrey Stanton. [abs], EMNLP 2022

-

Unleashing Cognitive Synergy in Large Language Models: A Task-Solving Agent through Multi-Persona Self-Collaboration

Zhenhailong Wang, Shaoguang Mao, Wenshan Wu, Tao Ge, Furu Wei, Heng Ji. [abs], 2023.7

-

Open Models, Closed Minds? On Agents Capabilities in Mimicking Human Personalities through Open Large Language Models

Lucio La Cava, Davide Costa, Andrea Tagarelli. [abs], 2024.01

-

Theory of Mind May Have Spontaneously Emerged in Large Language Models

Michal Kosinski. [abs], 2023.2

-

SOTOPIA: Interactive Evaluation for Social Intelligence in Language Agents

Xuhui Zhou, Hao Zhu, Leena Mathur, Ruohong Zhang, Haofei Yu, Zhengyang Qi, Louis-Philippe Morency, Yonatan Bisk, Daniel Fried, Graham Neubig, Maarten Sap. [abs], 2023.10

-

SOTOPIA-π: Interactive Learning of Socially Intelligent Language Agents

Ruiyi Wang, Haofei Yu, Wenxin Zhang, Zhengyang Qi, Maarten Sap, Graham Neubig, Yonatan Bisk, Hao Zhu. [abs], 2024.03

-

Towards Objectively Benchmarking Social Intelligence for Language Agents at Action Level Chenxu Wang, Bin Dai, Huaping Liu, Baoyuan Wang. [abs], 2024.04

-

OpenToM: A Comprehensive Benchmark for Evaluating Theory-of-Mind Reasoning Capabilities of Large Language Models. Hainiu Xu, Runcong Zhao, Lixing Zhu, Jinhua Du, Yulan He. [abs], 2024.02.

-

Role-Play with Large Language Models. Murray Shanahan, Kyle McDonell, and Laria Reynolds. [abs], 2023.5

-

Consciousness in artificial intelligence: Insights from the science of consciousness. Patrick Butlin, Robert Long, Eric Elmoznino, Yoshua Bengio, Jonathan Birch, Axel Constant, George Deane, Stephen M. Fleming, Chris Frith, Xu Ji, Ryota Kanai, Colin Klein, Grace Lindsay, Matthias Michel, Liad Mudrik, Megan A. K. Peters, Eric Schwitzgebel, Jonathan Simon, Rufin VanRullen. [abs], 2023.8

-

The Self-Perception and Political Biases of ChatGPT.

Jérôme Rutinowski, Sven Franke, Jan Endendyk, Ina Dormuth, Markus Pauly. 2023.4. Tags: personality traits, political biases, dark personality traits.

-

The political ideology of conversational AI: Converging evidence on ChatGPT's pro-environmental, left-libertarian orientation.

Jochen Hartmann, Jasper Schwenzow, Maximilian Witte. 2023.1. Tags: political biases.

-

Inducing anxiety in large language models increases exploration and bias.

Julian Coda-Forno, Kristin Witte, Akshay K. Jagadish, Marcel Binz, Zeynep Akata, Eric Schulz. 2023.4. Tags: anxiety.

-

Exploring Collaboration Mechanisms for LLM Agents: A Social Psychology View.

Jintian Zhang, Xin Xu, Shumin Deng. 2023.10. Tags: social psychology.

-

RPBench Leaderboard Boson AI. [leaderboard]

-

Higgs-Llama-3-70B Boson AI. [huggingface]

-

[Character.ai 赛道的真相 (The Truth About the Character.ai Track)], 2024.08

-

[李沐:创业一年,人间三年!(Mu Li (Boson AI): One Year of Entrepreneurship, Three Years in the Human World!)], 2024.08

-

[盘点ACL 2024 角色扮演方向都发了啥 (A Review of Publications in Role-Playing Direction at ACL 2024)], 2024.07

-

[角色扮演大模型的碎碎念 (Ramblings on Large Language Models for Role-Playing)], 2024.03

-

One Thousand and One Pairs: A “novel” challenge for long-context language models Marzena Karpinska, Katherine Thai, Kyle Lo, Tanya Goyal, Mohit Iyyer. [abs], 2024.06

-

NovelQA: Benchmarking Question Answering on Documents Exceeding 200K Tokens Cunxiang Wang, Ruoxi Ning, Boqi Pan, Tonghui Wu, Qipeng Guo, Cheng Deng, Guangsheng Bao, Xiangkun Hu, Zheng Zhang, Qian Wang, Yue Zhang. [abs], 2024.03

-

Visual-RolePlay: Universal Jailbreak Attack on MultiModal Large Language Models via Role-playing Image Character Siyuan Ma, Weidi Luo, Yu Wang, Xiaogeng Liu. [abs], 2024.06

-

AI Cat Narrator: Designing an AI Tool for Exploring the Shared World and Social Connection with a Cat Zhenchi Lai, Janet Yi-Ching Huang, Rung-Huei Liang. [abs], 2024.06

-

THEANINE: Revisiting Memory Management in Long-term Conversations with Timeline-augmented Response Generation Seo Hyun Kim, Kai Tzu-iunn Ong, Taeyoon Kwon, Namyoung Kim, Keummin Ka, SeongHyeon Bae, Yohan Jo, Seung-won Hwang, Dongha Lee, Jinyoung Yeo. [abs], 2024.06

-

ESC-Eval: Evaluating Emotion Support Conversations in Large Language Models Haiquan Zhao, Lingyu Li, Shisong Chen, Shuqi Kong, Jiaan Wang, Kexin Huang, Tianle Gu, Yixu Wang, Dandan Liang, Zhixu Li, Yan Teng, Yanghua Xiao, Yingchun Wang. [abs], 2024.06

-

PATIENT-Ψ: Using Large Language Models to Simulate Patients for Training Mental Health Professionals Ruiyi Wang, Stephanie Milani, Jamie C. Chiu, Jiayin Zhi, Shaun M. Eack, Travis Labrum, Samuel M. Murphy, Nev Jones, Kate Hardy, Hong Shen, Fei Fang, Zhiyu Zoey Chen. [abs], 2024.05

-

CharacterMeet: Supporting Creative Writers' Entire Story Character Construction Processes Through Conversation with LLM-Powered Chatbot Avatars. Hua Xuan Qin, Shan Jin, Ze Gao, Mingming Fan, Pan Hui. [abs], CHI 2024.

-

Stephanie: Step-by-Step Dialogues for Mimicking Human Interactions in Social Conversations Hao Yang, Hongyuan Lu, Xinhua Zeng, Yang Liu, Xiang Zhang, Haoran Yang, Yumeng Zhang, Yiran Wei, Wai Lam. [abs], 2024.07

🤲 Join us in improving this repository! Spotted any notable works we might have missed? We welcome your additions. Every contribution counts!

Alternative AI tools for awesome-llm-role-playing-with-persona

Similar Open Source Tools

Prompt4ReasoningPapers

Prompt4ReasoningPapers is a repository dedicated to reasoning with language model prompting. It provides a comprehensive survey of cutting-edge research on reasoning abilities with language models. The repository includes papers, methods, analysis, resources, and tools related to reasoning tasks. It aims to support various real-world applications such as medical diagnosis, negotiation, etc.

LLMAgentPapers

LLM Agents Papers is a repository containing must-read papers on Large Language Model Agents. It covers a wide range of topics related to language model agents, including interactive natural language processing, large language model-based autonomous agents, personality traits in large language models, memory enhancements, planning capabilities, tool use, multi-agent communication, and more. The repository also provides resources such as benchmarks, types of tools, and a tool list for building and evaluating language model agents. Contributors are encouraged to add important works to the repository.

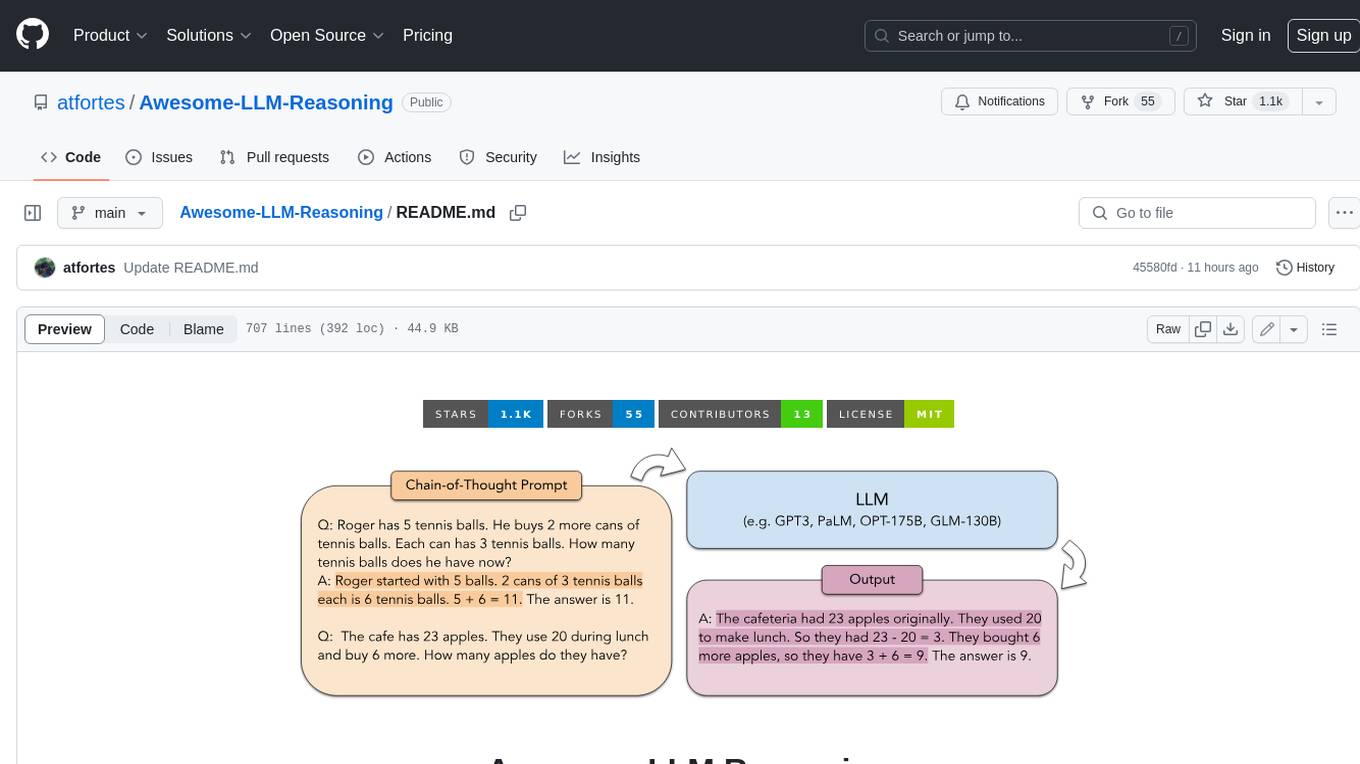

Awesome-LLM-Reasoning

Curated collection of papers and resources on how to unlock the reasoning ability of LLMs and MLLMs. Large Language Models (LLMs) have revolutionized the NLP landscape, showing improved performance and sample efficiency over smaller models. However, increasing model size alone has not proved sufficient for high performance on challenging reasoning tasks, such as solving arithmetic or commonsense problems. This curated collection of papers and resources presents the latest advancements in unlocking the reasoning abilities of LLMs and Multimodal LLMs (MLLMs). It covers various techniques, benchmarks, and applications, providing a comprehensive overview of the field.

Awesome-LLM-Preference-Learning

The repository 'Awesome-LLM-Preference-Learning' is the official repository of a survey paper titled 'Towards a Unified View of Preference Learning for Large Language Models: A Survey'. It contains a curated list of papers related to preference learning for Large Language Models (LLMs). The repository covers various aspects of preference learning, including on-policy and off-policy methods, feedback mechanisms, reward models, algorithms, evaluation techniques, and more. The papers included in the repository explore different approaches to aligning LLMs with human preferences, improving mathematical reasoning in LLMs, enhancing code generation, and optimizing language model performance.

llm-self-correction-papers

This repository contains a curated list of papers focusing on the self-correction of large language models (LLMs) during inference. It covers various frameworks for self-correction, including intrinsic self-correction, self-correction with external tools, self-correction with information retrieval, and self-correction with training designed specifically for self-correction. The list includes survey papers, negative results, and frameworks utilizing reinforcement learning and OpenAI o1-like approaches. Contributions are welcome through pull requests following a specific format.

Awesome-LLM-Reasoning-Openai-o1-Survey

The repository 'Awesome LLM Reasoning Openai-o1 Survey' provides a collection of survey papers and related works on OpenAI o1, focusing on topics such as LLM reasoning, self-play reinforcement learning, complex logic reasoning, and scaling law. It includes papers from various institutions and researchers, showcasing advancements in reasoning bootstrapping, reasoning scaling law, self-play learning, step-wise and process-based optimization, and applications beyond math. The repository serves as a valuable resource for researchers interested in exploring the intersection of language models and reasoning techniques.

LLM-as-a-Judge

LLM-as-a-Judge is a repository that includes papers discussed in a survey paper titled 'A Survey on LLM-as-a-Judge'. The repository covers various aspects of using Large Language Models (LLMs) as judges for tasks such as evaluation, reasoning, and decision-making. It provides insights into evaluation pipelines, improvement strategies, and specific tasks related to LLMs. The papers included in the repository explore different methodologies, applications, and future research directions for leveraging LLMs as evaluators in various domains.

awesome-ai-llm4education

The 'awesome-ai-llm4education' repository is a curated list of papers related to artificial intelligence (AI) and large language models (LLM) for education. It collects papers from top conferences, journals, and specialized domain-specific conferences, categorizing them based on specific tasks for better organization. The repository covers a wide range of topics including tutoring, personalized learning, assessment, material preparation, specific scenarios like computer science, language, math, and medicine, aided teaching, as well as datasets and benchmarks for educational research.

awesome-open-ended

A curated list of open-ended learning AI resources focusing on algorithms that invent new and complex tasks endlessly, inspired by human advancements. The repository includes papers, safety considerations, surveys, perspectives, and blog posts related to open-ended AI research.

LLM4DB

LLM4DB is a repository focused on the intersection of Large Language Models (LLMs) and Database technologies. It covers various aspects such as data processing, data analysis, database optimization, and data management for LLMs. The repository includes research papers, tools, and techniques related to leveraging LLMs for tasks like data cleaning, entity matching, schema matching, data discovery, NL2SQL, data exploration, data visualization, knob tuning, query optimization, and database diagnosis.

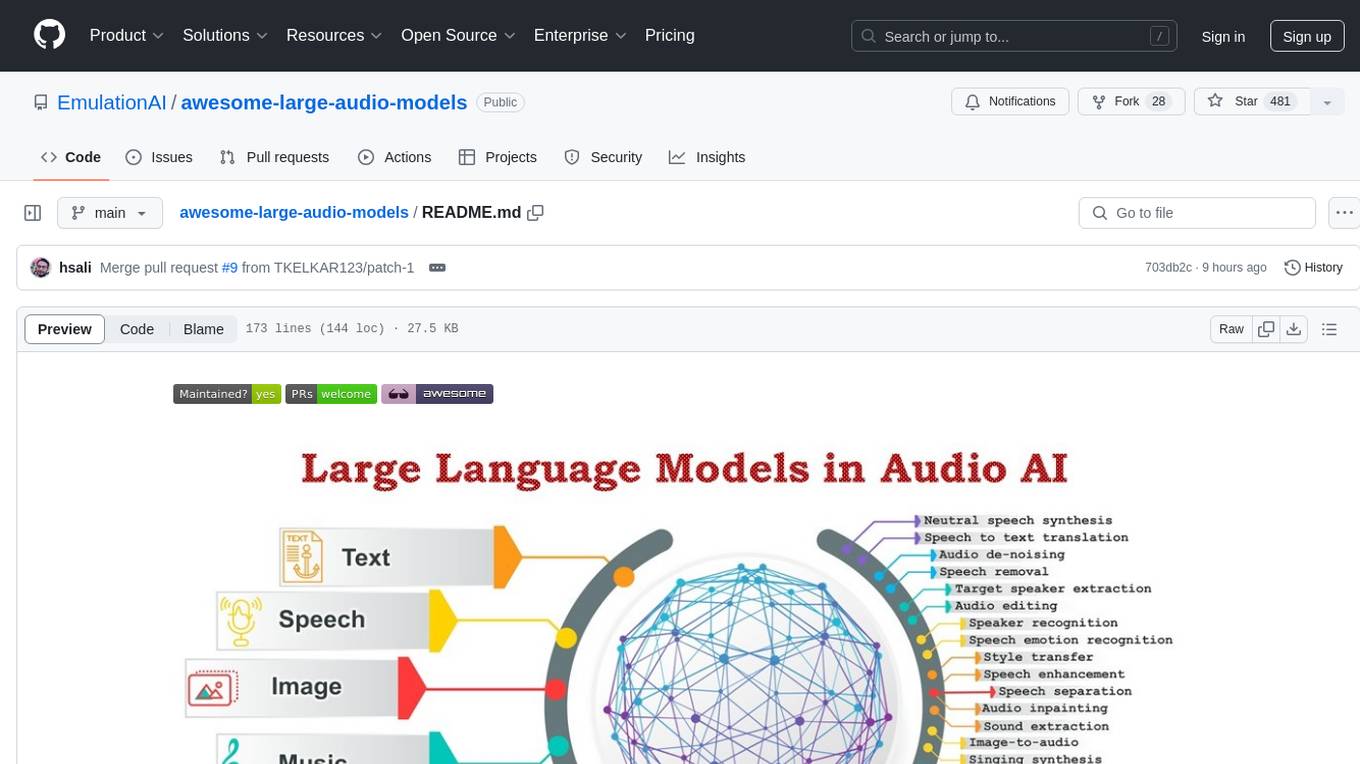

awesome-large-audio-models

This repository is a curated list of awesome large AI models in audio signal processing, focusing on the application of large language models to audio tasks. It includes survey papers, popular large audio models, automatic speech recognition, neural speech synthesis, speech translation, other speech applications, large audio models in music, and audio datasets. The repository aims to provide a comprehensive overview of recent advancements and challenges in applying large language models to audio signal processing, showcasing the efficacy of transformer-based architectures in various audio tasks.

For similar jobs

sweep

Sweep is an AI junior developer that turns bugs and feature requests into code changes. It automatically handles developer experience improvements like adding type hints and improving test coverage.

teams-ai

The Teams AI Library is a software development kit (SDK) that helps developers create bots that can interact with Teams and Microsoft 365 applications. It is built on top of the Bot Framework SDK and simplifies the process of developing bots that interact with Teams' artificial intelligence capabilities. The SDK is available for JavaScript/TypeScript, .NET, and Python.

ai-guide

This guide is dedicated to Large Language Models (LLMs) that you can run on your home computer. It assumes your PC is a lower-end, non-gaming setup.

classifai

Supercharge WordPress Content Workflows and Engagement with Artificial Intelligence. Tap into leading cloud-based services like OpenAI, Microsoft Azure AI, Google Gemini and IBM Watson to augment your WordPress-powered websites. Publish content faster while improving SEO performance and increasing audience engagement. ClassifAI integrates Artificial Intelligence and Machine Learning technologies to lighten your workload and eliminate tedious tasks, giving you more time to create original content that matters.

chatbot-ui

Chatbot UI is an open-source AI chat app that allows users to create and deploy their own AI chatbots. It is easy to use and can be customized to fit any need. Chatbot UI is perfect for businesses, developers, and anyone who wants to create a chatbot.

BricksLLM

BricksLLM is a cloud native AI gateway written in Go. Currently, it provides native support for OpenAI, Anthropic, Azure OpenAI and vLLM. BricksLLM aims to provide enterprise-level infrastructure that can power any LLM production use case. Here are some use cases for BricksLLM:

- Set LLM usage limits for users on different pricing tiers
- Track LLM usage on a per-user and per-organization basis
- Block or redact requests containing PII
- Improve LLM reliability with failovers, retries and caching
- Distribute API keys with rate limits and cost limits for internal development/production use cases
- Distribute API keys with rate limits and cost limits for students

uAgents

uAgents is a Python library developed by Fetch.ai that allows for the creation of autonomous AI agents. These agents can perform various tasks on a schedule or take action on various events. uAgents are easy to create and manage, and they are connected to a fast-growing network of other uAgents. They are also secure, with cryptographically secured messages and wallets.

griptape

Griptape is a modular Python framework for building AI-powered applications that securely connect to your enterprise data and APIs. It offers developers the ability to maintain control and flexibility at every step. Griptape's core components include Structures (Agents, Pipelines, and Workflows), Tasks, Tools, Memory (Conversation Memory, Task Memory, and Meta Memory), Drivers (Prompt and Embedding Drivers, Vector Store Drivers, Image Generation Drivers, Image Query Drivers, SQL Drivers, Web Scraper Drivers, and Conversation Memory Drivers), Engines (Query Engines, Extraction Engines, Summary Engines, Image Generation Engines, and Image Query Engines), and additional components (Rulesets, Loaders, Artifacts, Chunkers, and Tokenizers). Griptape enables developers to create AI-powered applications with ease and efficiency.