awesome-sound_event_detection

Reading list for research topics in Sound AI

Stars: 147

The 'awesome-sound_event_detection' repository is a curated reading list focusing on sound event detection and Sound AI. It includes research papers covering various sub-areas such as learning formulation, network architecture, pooling functions, missing or noisy audio, data augmentation, representation learning, multi-task learning, few-shot learning, zero-shot learning, knowledge transfer, polyphonic sound event detection, loss functions, audio and visual tasks, audio captioning, audio retrieval, audio generation, and more. The repository provides a comprehensive collection of papers, datasets, and resources related to sound event detection and Sound AI, making it a valuable reference for researchers and practitioners in the field.

README:

Sound event detection aims to process a continuous acoustic signal and convert it into symbolic descriptions of the sound events present in the auditory scene. It can be used in a variety of applications, including context-based indexing and retrieval in multimedia databases, unobtrusive monitoring in health care, and surveillance. Since 2017, learning acoustic information from weak annotations has been formulated as a way to make use of the large amount of multimedia data available. This reading list consists of papers on sound event detection and Sound AI.
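As a rough illustration of what "symbolic descriptions" and "weak annotations" mean in practice, the sketch below turns per-frame class probabilities into `(onset, offset, label)` events and derives a clip-level (weak) tag by max pooling over time. It is not taken from any paper in this list: the function names, the 0.5 threshold, and the hop size are all assumptions chosen for the example.

```python
from typing import List, Sequence, Tuple

Event = Tuple[float, float, str]  # (onset in seconds, offset in seconds, label)


def decode_events(
    frame_probs: Sequence[float],
    label: str,
    threshold: float = 0.5,      # assumed decision threshold
    hop_seconds: float = 0.02,   # assumed time step between frames
) -> List[Event]:
    """Convert per-frame probabilities for one class into event segments."""
    events: List[Event] = []
    onset = None  # index of the first frame of the currently open event
    for i, p in enumerate(frame_probs):
        active = p >= threshold
        if active and onset is None:
            onset = i  # event starts at this frame
        elif not active and onset is not None:
            events.append((onset * hop_seconds, i * hop_seconds, label))
            onset = None  # event ended at the previous frame
    if onset is not None:
        # The event is still active when the clip ends.
        events.append((onset * hop_seconds, len(frame_probs) * hop_seconds, label))
    return events


def clip_tag(frame_probs: Sequence[float], threshold: float = 0.5) -> bool:
    """Weak (clip-level) label via max pooling: tagged if any frame is active."""
    return max(frame_probs, default=0.0) >= threshold


if __name__ == "__main__":
    probs = [0.1, 0.8, 0.9, 0.2, 0.7]  # made-up frame probabilities
    print(decode_events(probs, "dog_bark", hop_seconds=0.5))
    print(clip_tag(probs))
```

A strongly labelled dataset provides the event tuples directly, while a weakly labelled one provides only the clip-level tag. Max pooling is just one of several ways to relate the two.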

Papers covering multiple sub-areas are listed in each relevant section. If there are any areas, papers, or datasets I missed, please let me know or feel free to make a pull request.

The reading list is no longer actively maintained. However, PRs for relevant papers are welcome.

INTERSPEECH 2022 papers added

ICASSP 2022 papers added

The reading list is expanded to include topics in Sound AI

WASPAA 2021 papers added

INTERSPEECH 2021 papers added

ICASSP 2021 papers added

- Survey Papers

- Areas

- Learning formulation

- Network architecture

- Pooling functions

- Missing or noisy audio

- Data Augmentation

- Audio Generation

- Representation Learning

- Multi-Task Learning

- Adversarial Attacks

- Few-Shot

- Zero-Shot

- Knowledge-transfer

- Polyphonic SED

- Loss function

- Audio and Visual

- Audio Captioning

- Audio Retrieval

- Healthcare

- Robotics

- Dataset

- Workshops/Conferences/Journals

- More

Sound event detection and time–frequency segmentation from weakly labelled data, TASLP 2019

Sound Event Detection: A Tutorial, IEEE Signal Processing Magazine, Volume 38, Issue 5

Automated Audio Captioning: an Overview of Recent Progress and New Challenges, EURASIP Journal on Audio Speech and Music Processing 2022

Weakly supervised scalable audio content analysis, ICME 2016

Audio Event Detection using Weakly Labeled Data, 24th ACM Multimedia Conference 2016

An approach for self-training audio event detectors using web data, 25th EUSIPCO 2017

A joint detection-classification model for audio tagging of weakly labelled data, ICASSP 2017

Connectionist Temporal Localization for Sound Event Detection with Sequential Labeling, ICASSP 2019

Multi-Task Learning for Interpretable Weakly Labelled Sound Event Detection, ArXiv 2020

A Sequential Self Teaching Approach for Improving Generalization in Sound Event Recognition, ICML 2020

Non-Negative Matrix Factorization-Convolutional Neural Network (NMF-CNN) For Sound Event Detection, ArXiv 2020

Duration robust weakly supervised sound event detection, ICASSP 2020

SeCoST: Sequential Co-Supervision for Large Scale Weakly Labeled Audio Event Detection, ICASSP 2020

Guided Learning for Weakly-Labeled Semi-Supervised Sound Event Detection, ICASSP 2020

Unsupervised Contrastive Learning of Sound Event Representations, ICASSP 2021

Sound Event Detection Based on Curriculum Learning Considering Learning Difficulty of Events, ICASSP 2021

Comparison of Deep Co-Training and Mean-Teacher Approaches for Semi-Supervised Audio Tagging, ICASSP 2021

Enhancing Audio Augmentation Methods with Consistency Learning, ICASSP 2021

Weakly-supervised audio event detection using event-specific Gaussian filters and fully convolutional networks, ICASSP 2017

Deep CNN Framework for Audio Event Recognition using Weakly Labeled Web Data, NIPS Workshop on Machine Learning for Audio 2017

Large-Scale Weakly Supervised Audio Classification Using Gated Convolutional Neural Network, ICASSP 2018

Orthogonality-Regularized Masked NMF for Learning on Weakly Labeled Audio Data, ICASSP 2018

Sound event detection and time–frequency segmentation from weakly labelled data, TASLP 2019

Attention-based Atrous Convolutional Neural Networks: Visualisation and Understanding Perspectives of Acoustic Scenes, ICASSP 2019

Sound Event Detection of Weakly Labelled Data With CNN-Transformer and Automatic Threshold Optimization, TASLP 2020

DD-CNN: Depthwise Disout Convolutional Neural Network for Low-complexity Acoustic Scene Classification, ArXiv 2020

Effective Perturbation based Semi-Supervised Learning Method for Sound Event Detection, INTERSPEECH 2020

Weakly-Supervised Sound Event Detection with Self-Attention, ICASSP 2020

Improving Deep Learning Sound Events Classifiers using Gram Matrix Feature-wise Correlations, ICASSP 2021

An Improved Event-Independent Network for Polyphonic Sound Event Localization and Detection, ICASSP 2021

AST: Audio Spectrogram Transformer, INTERSPEECH 2021

Event Specific Attention for Polyphonic Sound Event Detection, INTERSPEECH 2021

Sound Event Detection with Adaptive Frequency Selection, WASPAA 2021

SSAST: Self-Supervised Audio Spectrogram Transformer, AAAI 2022

HTS-AT: A Hierarchical Token-Semantic Audio Transformer for Sound Classification and Detection, ICASSP 2022

MAE-AST: Masked Autoencoding Audio Spectrogram Transformer, INTERSPEECH 2022

Efficient Training of Audio Transformers with Patchout, INTERSPEECH 2022

BEATs: Audio Pre-Training with Acoustic Tokenizers, ArXiv 2022

Adaptive Pooling Operators for Weakly Labeled Sound Event Detection, TASLP 2018

Comparing the Max and Noisy-Or Pooling Functions in Multiple Instance Learning for Weakly Supervised Sequence Learning Tasks, INTERSPEECH 2018

A Comparison of Five Multiple Instance Learning Pooling Functions for Sound Event Detection with Weak Labeling, ICASSP 2019

Hierarchical Pooling Structure for Weakly Labeled Sound Event Detection, INTERSPEECH 2019

Weakly labelled audioset tagging with attention neural networks, TASLP 2019

Sound event detection and time–frequency segmentation from weakly labelled data, TASLP 2019

Multi-Task Learning for Interpretable Weakly Labelled Sound Event Detection, ArXiv 2020

A Global-Local Attention Framework for Weakly Labelled Audio Tagging, ICASSP 2021

Sound event detection and time–frequency segmentation from weakly labelled data, TASLP 2019

Multi-Task Learning for Interpretable Weakly Labelled Sound Event Detection, ArXiv 2020

Improving weakly supervised sound event detection with self-supervised auxiliary tasks, INTERSPEECH 2021

SpecAugment++: A Hidden Space Data Augmentation Method for Acoustic Scene Classification, INTERSPEECH 2021

Contrastive Predictive Coding of Audio with an Adversary, INTERSPEECH 2020

Towards Learning a Universal Non-Semantic Representation of Speech, INTERSPEECH 2021

ACCDOA: Activity-Coupled Cartesian Direction of Arrival Representation for Sound Event Localization and Detection, ICASSP 2021

FRILL: A Non-Semantic Speech Embedding for Mobile Devices, INTERSPEECH 2021

HEAR 2021: Holistic Evaluation of Audio Representations, PMLR: NeurIPS 2021 Competition Track

Conformer-Based Self-Supervised Learning for Non-Speech Audio Tasks, ICASSP 2022

Towards Learning Universal Audio Representations, ICASSP 2022

SSAST: Self-Supervised Audio Spectrogram Transformer, AAAI 2022

A Joint Separation-Classification Model for Sound Event Detection of Weakly Labelled Data, ICASSP 2018

Multi-Task Learning for Interpretable Weakly Labelled Sound Event Detection, ArXiv 2020

Multi-Task Learning and post processing optimisation for sound event detection, DCASE 2019

Label-efficient audio classification through multitask learning and self-supervision, ICLR 2019

A Joint Framework for Audio Tagging and Weakly Supervised Acoustic Event Detection Using DenseNet with Global Average Pooling, INTERSPEECH 2020

Improving weakly supervised sound event detection with self-supervised auxiliary tasks, INTERSPEECH 2021

Identifying Actions for Sound Event Classification, WASPAA 2021

Impact of Acoustic Event Tagging on Scene Classification in a Multi-Task Learning Framework, INTERSPEECH 2022

Few-Shot Audio Classification with Attentional Graph Neural Networks, INTERSPEECH 2019

Continual Learning of New Sound Classes Using Generative Replay, WASPAA 2019

Few-Shot Sound Event Detection, ICASSP 2020

Few-Shot Continual Learning for Audio Classification, ICASSP 2021

Unsupervised and Semi-Supervised Few-Shot Acoustic Event Classification, ICASSP 2021

Who Calls the Shots? Rethinking Few-Shot Learning for Audio, WASPAA 2021

A Mutual Learning Framework For Few-Shot Sound Event Detection, ICASSP 2022

Active Few-Shot Learning for Sound Event Detection, INTERSPEECH 2022

Adapting Language-Audio Models as Few-Shot Audio Learners, INTERSPEECH 2023

AudioCLIP: Extending CLIP to Image, Text and Audio, ICASSP 2022

Wav2CLIP: Learning Robust Audio Representations From CLIP, ICASSP 2022

CLAP 👏: Learning Audio Concepts From Natural Language Supervision, ICASSP 2023

Large-scale Contrastive Language-Audio Pretraining with Feature Fusion and Keyword-to-Caption Augmentation, ICASSP 2023

Listen, Think, and Understand, ArXiv 2023

Pengi 🐧: An Audio Language Model for Audio Tasks, ArXiv 2023

Unified-IO 2: Scaling Autoregressive Multimodal Models with Vision, Language, Audio, and Action, ArXiv 2023

ONE-PEACE: Exploring One General Representation Model Toward Unlimited Modalities, ArXiv 2023

Qwen-Audio: Advancing Universal Audio Understanding via Unified Large-Scale Audio-Language Models, ArXiv 2023

Audio Flamingo: A Novel Audio Language Model with Few-Shot Learning and Dialogue Abilities, ArXiv 2024

Transfer learning of weakly labelled audio, WASPAA 2017

Knowledge Transfer from Weakly Labeled Audio Using Convolutional Neural Network for Sound Events and Scenes, ICASSP 2018

PANNs: Large-Scale Pretrained Audio Neural Networks for Audio Pattern Recognition, TASLP 2020

Do sound event representations generalize to other audio tasks? A case study in audio transfer learning, INTERSPEECH 2021

A first attempt at polyphonic sound event detection using connectionist temporal classification, ICASSP 2017

Polyphonic Sound Event Detection with Weak Labeling, Thesis 2018

Polyphonic Sound Event Detection and Localization using a Two-Stage Strategy, DCASE 2019

Evaluation of Post-Processing Algorithms for Polyphonic Sound Event Detection, WASPAA 2019

Specialized Decision Surface and Disentangled Feature for Weakly-Supervised Polyphonic Sound Event Detection, TASLP 2020

Spatial Data Augmentation with Simulated Room Impulse Responses for Sound Event Localization and Detection, ICASSP 2022

Impact of Sound Duration and Inactive Frames on Sound Event Detection Performance, ICASSP 2021

A Light-Weight Multimodal Framework for Improved Environmental Audio Tagging, ICASSP 2018

Large Scale Audiovisual Learning of Sounds with Weakly Labeled Data, IJCAI 2020

Labelling unlabelled videos from scratch with multi-modal self-supervision, NeurIPS 2020

Audio-Visual Event Recognition Through the Lens of Adversary, ICASSP 2021

Taming Visually Guided Sound Generation, BMVC 2021

Learning Audio-Video Modalities from Image Captions, ECCV 2022

UAVM: Towards Unifying Audio and Visual Models, IEEE Signal Processing Letters

Contrastive Audio-Visual Masked Autoencoder, ICLR 2023

Automated audio captioning with recurrent neural networks, WASPAA 2017

Audio caption: Listen and tell, ICASSP 2018

AudioCaps: Generating captions for audios in the wild, NAACL 2019

Audio Captioning Based on Combined Audio and Semantic Embeddings, ISM 2020

Clotho: An Audio Captioning Dataset, ICASSP 2020

A Transformer-based Audio Captioning Model with Keyword Estimation, INTERSPEECH 2020

Text-to-Audio Grounding: Building Correspondence Between Captions and Sound Events, ICASSP 2021

Learning Contextual Tag Embeddings for Cross-Modal Alignment of Audio and Tags, ICASSP 2021

Automated Audio Captioning using Transfer Learning and Reconstruction Latent Space Similarity Regularization, ICASSP 2022

Sound Event Detection Guided by Semantic Contexts of Scenes, ICASSP 2022

Interactive Audio-text Representation for Automated Audio Captioning with Contrastive Learning, INTERSPEECH 2022

Audio Retrieval with Natural Language Queries: A Benchmark Study, IEEE Transactions on Multimedia 2022

On Metric Learning for Audio-Text Cross-Modal Retrieval, INTERSPEECH 2022

Introducing Auxiliary Text Query-modifier to Content-based Audio Retrieval, INTERSPEECH 2022

Audio Retrieval with WavText5K and CLAP Training, ArXiv 2022

Acoustic Scene Generation with Conditional Samplernn, ICASSP 2019

Conditional Sound Generation Using Neural Discrete Time-Frequency Representation Learning, MLSP 2021

Taming Visually Guided Sound Generation, BMVC 2021

Diffsound: Discrete Diffusion Model for Text-to-sound Generation, ArXiv 2022

AudioGen: Textually Guided Audio Generation, ICML 2023

Make-An-Audio: Text-To-Audio Generation with Prompt-Enhanced Diffusion Models, ArXiv 2023

AudioLDM: Text-to-Audio Generation with Latent Diffusion Models, ICML 2023

AUDIT: Audio Editing by Following Instructions with Latent Diffusion Models, ArXiv 2023

Diverse and Vivid Sound Generation from Text Descriptions, ICASSP 2023

Make-An-Audio 2: Temporal-Enhanced Text-to-Audio Generation, ArXiv 2023

Simple and Controllable Music Generation, ArXiv 2023

Audiobox: Unified Audio Generation with Natural Language Prompts, ArXiv 2023

Masked Audio Generation using a Single Non-Autoregressive Transformer, ArXiv 2024

Audio event and scene recognition: A unified approach using strongly and weakly labeled data, IJCNN 2017

Sound Event Detection Using Point-Labeled Data, WASPAA 2019

An Investigation of the Effectiveness of Phase for Audio Classification, ICASSP 2022

| Task | Dataset | Source | Num. Files |
|---|---|---|---|
| Sound Event Classification | ESC-50 | freesound.org | 2k files |
| Sound Event Classification | DCASE17 Task 4 | YT videos | 2k files |
| Sound Event Classification | US8K | freesound.org | 8k files |
| Sound Event Classification | FSD50K | freesound.org | 50k files |
| Sound Event Classification | AudioSet | YT videos | 2M files |
| COVID-19 Detection using Coughs | DiCOVA | Volunteers recording audio via a website | 1k files |
| Few-shot Bioacoustic Event Detection | DCASE21 Task 5 | audio | 4k+ files |
| Acoustic Scene Classification | DCASE18 Task 1 | Recorded by TUT | 1.5k files |
| Various | VGG-Sound | Web videos | 200k files |
| Audio Captioning | Clotho | freesound.org | 5k files |
| Audio Captioning | AudioCaps | YT videos | 51k files |
| Audio-text | SoundDescs | BBC Sound Effects | 32k files |
| Audio-text | WavText5K | Varied | 5k files |
| Audio-text | LAION 630k | Varied | 630k files |
| Audio-text | WavCaps | Varied | 400k files |
| Action Recognition | UCF101 | Web videos | 13k files |
| Unlabeled | YFCC100M | Yahoo videos | 1M files |

Other audio-based datasets to consider

DCASE dataset list

List of old workshops (archived) and on-going workshops/conferences/journals:

| Venue | Link |
|---|---|
| Machine Learning for Audio Signal Processing, NIPS 2017 workshop | https://nips.cc/Conferences/2017/Schedule?showEvent=8790 |
| MLSP: Machine Learning for Signal Processing | https://ieeemlsp.cc/ |
| WASPAA: IEEE Workshop on Applications of Signal Processing to Audio and Acoustics | https://www.waspaa.com |
| ICASSP: IEEE International Conference on Acoustics Speech and Signal Processing | https://2021.ieeeicassp.org/ |
| INTERSPEECH | https://www.interspeech2021.org/ |
| IEEE/ACM Transactions on Audio, Speech and Language Processing | https://dl.acm.org/journal/taslp |
| DCASE | http://dcase.community/ |

Computational Analysis of Sound Scenes and Events

- If you are interested in audio captioning, K. Drossos maintains a detailed reading list here

- A bibliography tracking the state of the art and recent results on Sound AI topics and audio tasks is available here

For Tasks:

Click tags to check more tools for each tasksFor Jobs:

Alternative AI tools for awesome-sound_event_detection

Similar Open Source Tools

awesome-sound_event_detection

The 'awesome-sound_event_detection' repository is a curated reading list focusing on sound event detection and Sound AI. It includes research papers covering various sub-areas such as learning formulation, network architecture, pooling functions, missing or noisy audio, data augmentation, representation learning, multi-task learning, few-shot learning, zero-shot learning, knowledge transfer, polyphonic sound event detection, loss functions, audio and visual tasks, audio captioning, audio retrieval, audio generation, and more. The repository provides a comprehensive collection of papers, datasets, and resources related to sound event detection and Sound AI, making it a valuable reference for researchers and practitioners in the field.

llms-tools

The 'llms-tools' repository is a comprehensive collection of AI tools, open-source projects, and research related to Large Language Models (LLMs) and Chatbots. It covers a wide range of topics such as AI in various domains, open-source models, chats & assistants, visual language models, evaluation tools, libraries, devices, income models, text-to-image, computer vision, audio & speech, code & math, games, robotics, typography, bio & med, military, climate, finance, and presentation. The repository provides valuable resources for researchers, developers, and enthusiasts interested in exploring the capabilities of LLMs and related technologies.

awesome-generative-ai-guide

This repository serves as a comprehensive hub for updates on generative AI research, interview materials, notebooks, and more. It includes monthly best GenAI papers list, interview resources, free courses, and code repositories/notebooks for developing generative AI applications. The repository is regularly updated with the latest additions to keep users informed and engaged in the field of generative AI.

ianvs

Ianvs is a distributed synergy AI benchmarking project incubated in KubeEdge SIG AI. It aims to test the performance of distributed synergy AI solutions following recognized standards, providing end-to-end benchmark toolkits, test environment management tools, test case control tools, and benchmark presentation tools. It also collaborates with other organizations to establish comprehensive benchmarks and related applications. The architecture includes critical components like Test Environment Manager, Test Case Controller, Generation Assistant, Simulation Controller, and Story Manager. Ianvs documentation covers quick start, guides, dataset descriptions, algorithms, user interfaces, stories, and roadmap.

AI.Labs

AI.Labs is an open-source project that integrates advanced artificial intelligence technologies to create a powerful AI platform. It focuses on integrating AI services like large language models, speech recognition, and speech synthesis for functionalities such as dialogue, voice interaction, and meeting transcription. The project also includes features like a large language model dialogue system, speech recognition for meeting transcription, speech-to-text voice synthesis, integration of translation and chat, and uses technologies like C#, .Net, SQLite database, XAF, OpenAI API, TTS, and STT.

awesome-openvino

Awesome OpenVINO is a curated list of AI projects based on the OpenVINO toolkit, offering a rich assortment of projects, libraries, and tutorials covering various topics like model optimization, deployment, and real-world applications across industries. It serves as a valuable resource continuously updated to maximize the potential of OpenVINO in projects, featuring projects like Stable Diffusion web UI, Visioncom, FastSD CPU, OpenVINO AI Plugins for GIMP, and more.

LLM-PLSE-paper

LLM-PLSE-paper is a repository focused on the applications of Large Language Models (LLMs) in Programming Language and Software Engineering (PL/SE) domains. It covers a wide range of topics including bug detection, specification inference and verification, code generation, fuzzing and testing, code model and reasoning, code understanding, IDE technologies, prompting for reasoning tasks, and agent/tool usage and planning. The repository provides a comprehensive collection of research papers, benchmarks, empirical studies, and frameworks related to the capabilities of LLMs in various PL/SE tasks.

ManipVQA

ManipVQA is a framework that enhances Multimodal Large Language Models (MLLMs) with manipulation-centric knowledge through a Visual Question-Answering (VQA) format. It addresses the deficiency of conventional MLLMs in understanding affordances and physical concepts crucial for manipulation tasks. By infusing robotics-specific knowledge, including tool detection, affordance recognition, and physical concept comprehension, ManipVQA improves the performance of robots in manipulation tasks. The framework involves fine-tuning MLLMs with a curated dataset of interactive objects, enabling robots to understand and execute natural language instructions more effectively.

Main

This repository contains material related to the new book _Synthetic Data and Generative AI_ by the author, including code for NoGAN, DeepResampling, and NoGAN_Hellinger. NoGAN is a tabular data synthesizer that outperforms GenAI methods in terms of speed and results, utilizing state-of-the-art quality metrics. DeepResampling is a fast NoGAN based on resampling and Bayesian Models with hyperparameter auto-tuning. NoGAN_Hellinger combines NoGAN and DeepResampling with the Hellinger model evaluation metric.

geoai

geoai is a Python package designed for utilizing Artificial Intelligence (AI) in the context of geospatial data. It allows users to visualize various types of geospatial data such as vector, raster, and LiDAR data. Additionally, the package offers functionalities for segmenting remote sensing imagery using the Segment Anything Model and classifying remote sensing imagery with deep learning models. With a focus on geospatial AI applications, geoai provides a versatile tool for processing and analyzing spatial data with the power of AI.

LLMEvaluation

The LLMEvaluation repository is a comprehensive compendium of evaluation methods for Large Language Models (LLMs) and LLM-based systems. It aims to assist academics and industry professionals in creating effective evaluation suites tailored to their specific needs by reviewing industry practices for assessing LLMs and their applications. The repository covers a wide range of evaluation techniques, benchmarks, and studies related to LLMs, including areas such as embeddings, question answering, multi-turn dialogues, reasoning, multi-lingual tasks, ethical AI, biases, safe AI, code generation, summarization, software performance, agent LLM architectures, long text generation, graph understanding, and various unclassified tasks. It also includes evaluations for LLM systems in conversational systems, copilots, search and recommendation engines, task utility, and verticals like healthcare, law, science, financial, and others. The repository provides a wealth of resources for evaluating and understanding the capabilities of LLMs in different domains.

FastFlowLM

FastFlowLM is a Python library for efficient and scalable language model inference. It provides a high-performance implementation of language model scoring using n-gram language models. The library is designed to handle large-scale text data and can be easily integrated into natural language processing pipelines for tasks such as text generation, speech recognition, and machine translation. FastFlowLM is optimized for speed and memory efficiency, making it suitable for both research and production environments.

interaqt

Interaqt is a project that aims to separate application business logic from its specific implementation by providing a structured data model and tools to automatically decide and implement software architecture. It liberates individuals and teams from implementation specifics, performance requirements, and cost demands, allowing them to focus on articulating business logic. The approach is considered optimal in the era of large language models (LLMs) as it eliminates uncertainty in generated systems and enables independence from engineering involvement unless specific capabilities are required.

amazon-sagemaker-generativeai

Repository for training and deploying Generative AI models, including text-text, text-to-image generation, prompt engineering playground and chain of thought examples using SageMaker Studio. The tool provides a platform for users to experiment with generative AI techniques, enabling them to create text and image outputs based on input data. It offers a range of functionalities for training and deploying models, as well as exploring different generative AI applications.

xlstm-jax

The xLSTM-jax repository contains code for training and evaluating the xLSTM model on language modeling using JAX. xLSTM is a Recurrent Neural Network architecture that improves upon the original LSTM through Exponential Gating, normalization, stabilization techniques, and a Matrix Memory. It is optimized for large-scale distributed systems with performant triton kernels for faster training and inference.

For similar tasks

awesome-sound_event_detection

The 'awesome-sound_event_detection' repository is a curated reading list focusing on sound event detection and Sound AI. It includes research papers covering various sub-areas such as learning formulation, network architecture, pooling functions, missing or noisy audio, data augmentation, representation learning, multi-task learning, few-shot learning, zero-shot learning, knowledge transfer, polyphonic sound event detection, loss functions, audio and visual tasks, audio captioning, audio retrieval, audio generation, and more. The repository provides a comprehensive collection of papers, datasets, and resources related to sound event detection and Sound AI, making it a valuable reference for researchers and practitioners in the field.

NanoLLM

NanoLLM is a tool designed for optimized local inference for Large Language Models (LLMs) using HuggingFace-like APIs. It supports quantization, vision/language models, multimodal agents, speech, vector DB, and RAG. The tool aims to provide efficient and effective processing for LLMs on local devices, enhancing performance and usability for various AI applications.

HPT

Hyper-Pretrained Transformers (HPT) is a novel multimodal LLM framework from HyperGAI, trained for vision-language models capable of understanding both textual and visual inputs. The repository contains the open-source implementation of inference code to reproduce the evaluation results of HPT Air on different benchmarks. HPT has achieved competitive results with state-of-the-art models on various multimodal LLM benchmarks. It offers models like HPT 1.5 Air and HPT 1.0 Air, providing efficient solutions for vision-and-language tasks.

mlx-vlm

MLX-VLM is a package designed for running Vision LLMs on Mac systems using MLX. It provides a convenient way to install and utilize the package for processing large language models related to vision tasks. The tool simplifies the process of running LLMs on Mac computers, offering a seamless experience for users interested in leveraging MLX for vision-related projects.

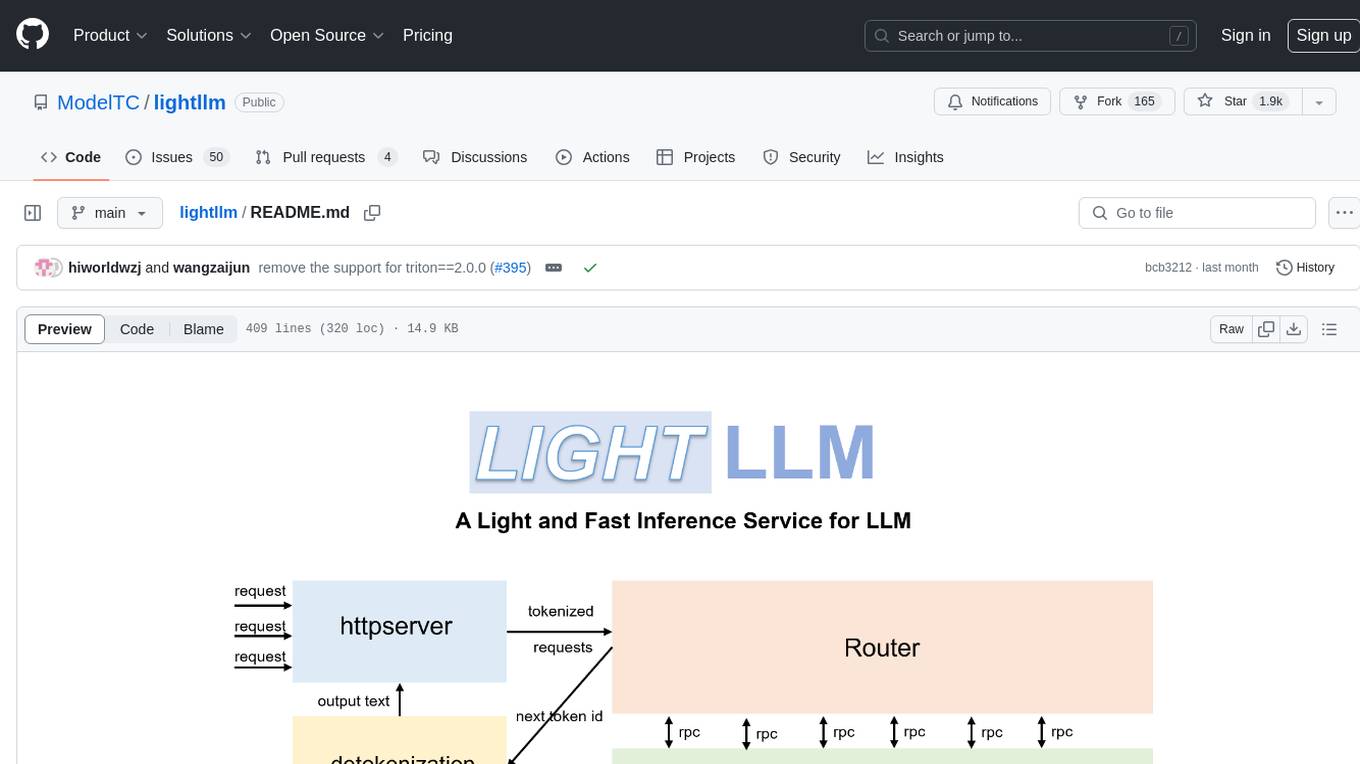

lightllm

LightLLM is a Python-based LLM (Large Language Model) inference and serving framework known for its lightweight design, scalability, and high-speed performance. It offers features like tri-process asynchronous collaboration, Nopad for efficient attention operations, dynamic batch scheduling, FlashAttention integration, tensor parallelism, Token Attention for zero memory waste, and Int8KV Cache. The tool supports various models like BLOOM, LLaMA, StarCoder, Qwen-7b, ChatGLM2-6b, Baichuan-7b, Baichuan2-7b, Baichuan2-13b, InternLM-7b, Yi-34b, Qwen-VL, Llava-7b, Mixtral, Stablelm, and MiniCPM. Users can deploy and query models using the provided server launch commands and interact with multimodal models like QWen-VL and Llava using specific queries and images.

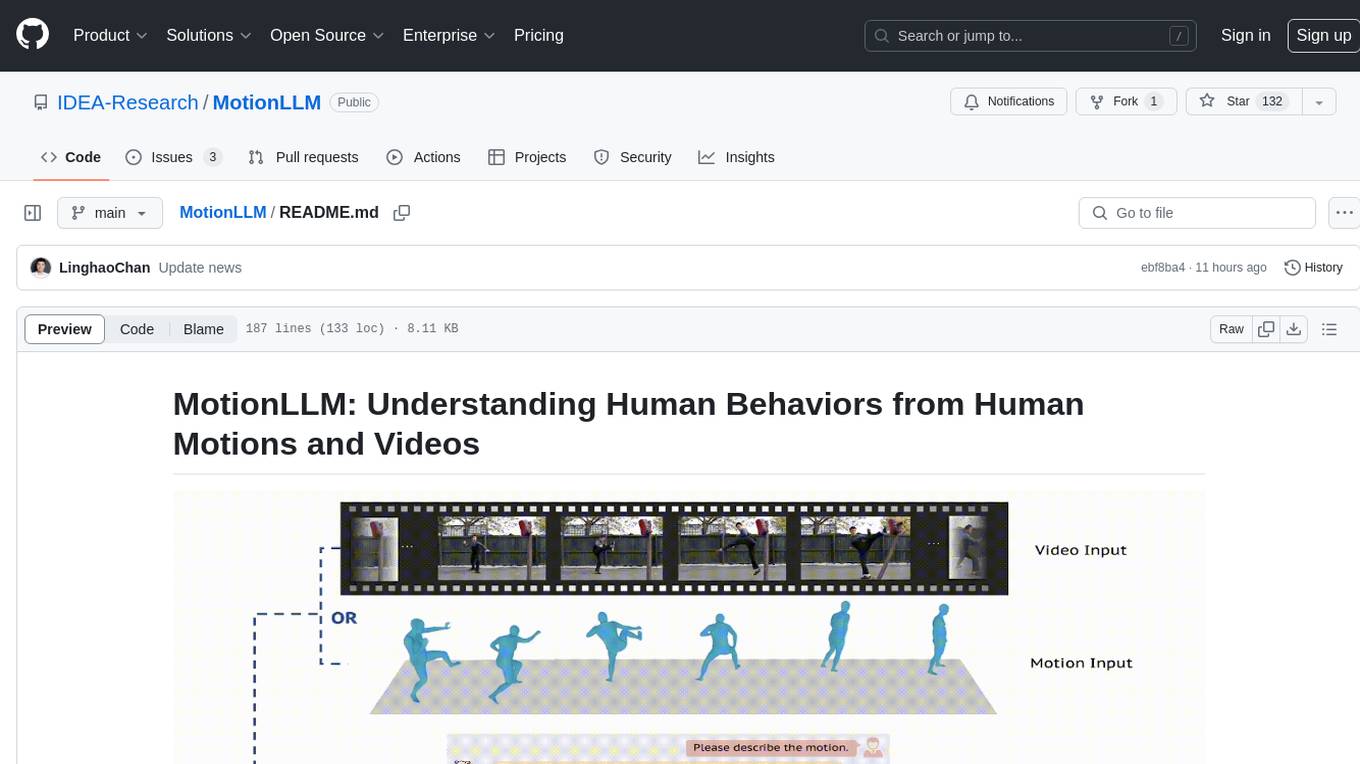

MotionLLM

MotionLLM is a framework for human behavior understanding that leverages Large Language Models (LLMs) to jointly model videos and motion sequences. It provides a unified training strategy, dataset MoVid, and MoVid-Bench for evaluating human behavior comprehension. The framework excels in captioning, spatial-temporal comprehension, and reasoning abilities.

Vitron

Vitron is a unified pixel-level vision LLM designed for comprehensive understanding, generating, segmenting, and editing static images and dynamic videos. It addresses challenges in existing vision LLMs such as superficial instance-level understanding, lack of unified support for images and videos, and insufficient coverage across various vision tasks. The tool requires Python >= 3.8, Pytorch == 2.1.0, and CUDA Version >= 11.8 for installation. Users can deploy Gradio demo locally and fine-tune their models for specific tasks.

AI-Competition-Collections

AI-Competition-Collections is a repository that collects and curates various experiences and tips from AI competitions. It includes posts on competition experiences in computer vision, NLP, speech, and other AI-related fields. The repository aims to provide valuable insights and techniques for individuals participating in AI competitions, covering topics such as image classification, object detection, OCR, adversarial attacks, and more.

For similar jobs

metavoice-src

MetaVoice-1B is a 1.2B parameter base model trained on 100K hours of speech for TTS (text-to-speech). It has been built with the following priorities: * Emotional speech rhythm and tone in English. * Zero-shot cloning for American & British voices, with 30s reference audio. * Support for (cross-lingual) voice cloning with finetuning. * We have had success with as little as 1 minute training data for Indian speakers. * Synthesis of arbitrary length text

suno-api

Suno AI API is an open-source project that allows developers to integrate the music generation capabilities of Suno.ai into their own applications. The API provides a simple and convenient way to generate music, lyrics, and other audio content using Suno.ai's powerful AI models. With Suno AI API, developers can easily add music generation functionality to their apps, websites, and other projects.

bark.cpp

Bark.cpp is a C/C++ implementation of the Bark model, a real-time, multilingual text-to-speech generation model. It supports AVX, AVX2, and AVX512 for x86 architectures, and is compatible with both CPU and GPU backends. Bark.cpp also supports mixed F16/F32 precision and 4-bit, 5-bit, and 8-bit integer quantization. It can be used to generate realistic-sounding audio from text prompts.

NSMusicS

NSMusicS is a local music software that is expected to support multiple platforms with AI capabilities and multimodal features. The goal of NSMusicS is to integrate various functions (such as artificial intelligence, streaming, music library management, cross platform, etc.), which can be understood as similar to Navidrome but with more features than Navidrome. It wants to become a plugin integrated application that can almost have all music functions.

ai-voice-cloning

This repository provides a tool for AI voice cloning, allowing users to generate synthetic speech that closely resembles a target speaker's voice. The tool is designed to be user-friendly and accessible, with a graphical user interface that guides users through the process of training a voice model and generating synthetic speech. The tool also includes a variety of features that allow users to customize the generated speech, such as the pitch, volume, and speaking rate. Overall, this tool is a valuable resource for anyone interested in creating realistic and engaging synthetic speech.

RVC_CLI

**RVC_CLI: Retrieval-based Voice Conversion Command Line Interface** This command-line interface (CLI) provides a comprehensive set of tools for voice conversion, enabling you to modify the pitch, timbre, and other characteristics of audio recordings. It leverages advanced machine learning models to achieve realistic and high-quality voice conversions. **Key Features:** * **Inference:** Convert the pitch and timbre of audio in real-time or process audio files in batch mode. * **TTS Inference:** Synthesize speech from text using a variety of voices and apply voice conversion techniques. * **Training:** Train custom voice conversion models to meet specific requirements. * **Model Management:** Extract, blend, and analyze models to fine-tune and optimize performance. * **Audio Analysis:** Inspect audio files to gain insights into their characteristics. * **API:** Integrate the CLI's functionality into your own applications or workflows. **Applications:** The RVC_CLI finds applications in various domains, including: * **Music Production:** Create unique vocal effects, harmonies, and backing vocals. * **Voiceovers:** Generate voiceovers with different accents, emotions, and styles. * **Audio Editing:** Enhance or modify audio recordings for podcasts, audiobooks, and other content. * **Research and Development:** Explore and advance the field of voice conversion technology. **For Jobs:** * Audio Engineer * Music Producer * Voiceover Artist * Audio Editor * Machine Learning Engineer **AI Keywords:** * Voice Conversion * Pitch Shifting * Timbre Modification * Machine Learning * Audio Processing **For Tasks:** * Convert Pitch * Change Timbre * Synthesize Speech * Train Model * Analyze Audio

openvino-plugins-ai-audacity

OpenVINO™ AI Plugins for Audacity* are a set of AI-enabled effects, generators, and analyzers for Audacity®. These AI features run 100% locally on your PC -- no internet connection necessary! OpenVINO™ is used to run AI models on supported accelerators found on the user's system such as CPU, GPU, and NPU. * **Music Separation**: Separate a mono or stereo track into individual stems -- Drums, Bass, Vocals, & Other Instruments. * **Noise Suppression**: Removes background noise from an audio sample. * **Music Generation & Continuation**: Uses MusicGen LLM to generate snippets of music, or to generate a continuation of an existing snippet of music. * **Whisper Transcription**: Uses whisper.cpp to generate a label track containing the transcription or translation for a given selection of spoken audio or vocals.

WavCraft

WavCraft is an LLM-driven agent for audio content creation and editing. It applies LLM to connect various audio expert models and DSP function together. With WavCraft, users can edit the content of given audio clip(s) conditioned on text input, create an audio clip given text input, get more inspiration from WavCraft by prompting a script setting and let the model do the scriptwriting and create the sound, and check if your audio file is synthesized by WavCraft.