awesome-lifelong-llm-agent

This repository collects awesome surveys, resources, and papers on lifelong learning of LLM agents.

Stars: 55

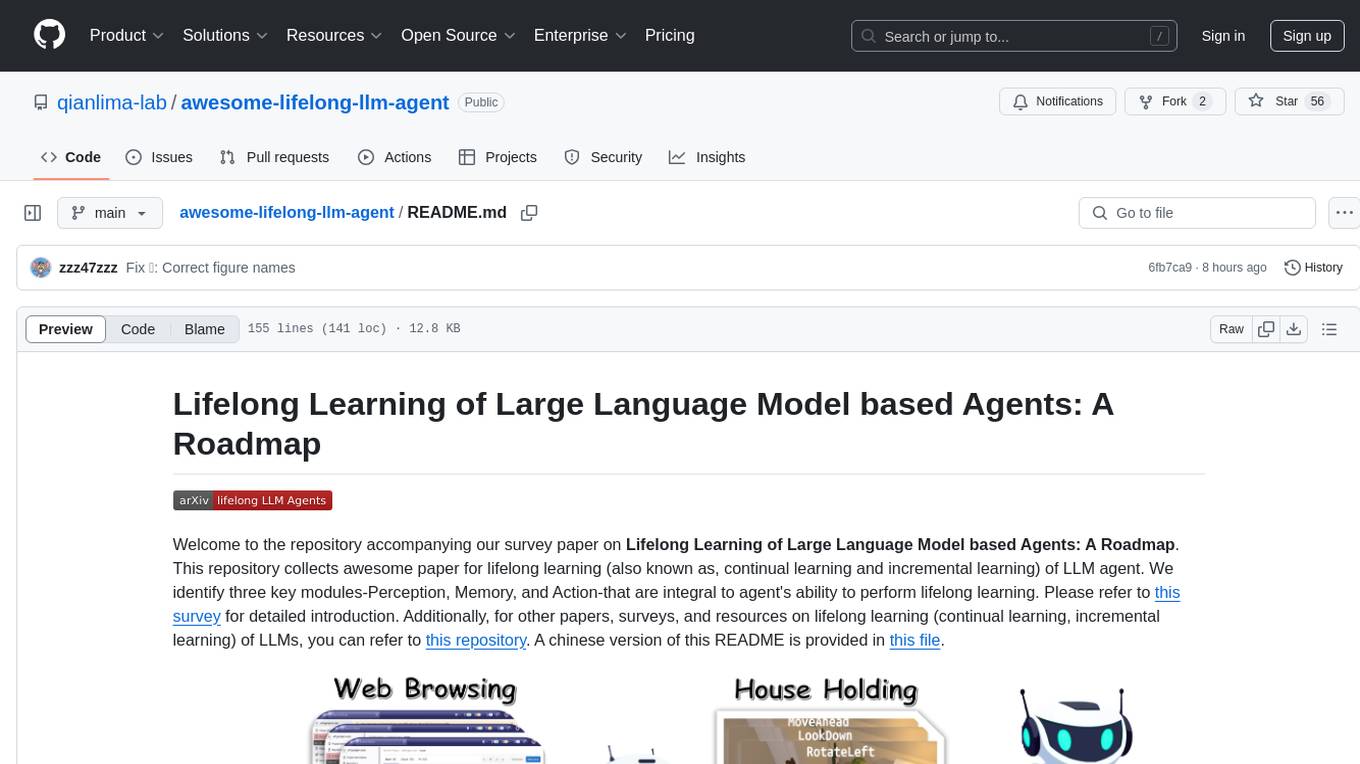

This repository is a collection of papers and resources related to Lifelong Learning of Large Language Model (LLM) based Agents. It focuses on continual learning and incremental learning of LLM agents, identifying key modules such as Perception, Memory, and Action. The repository serves as a roadmap for understanding lifelong learning in LLM agents and provides a comprehensive overview of related research and surveys.

README:

Welcome to the repository accompanying our survey paper "Lifelong Learning of Large Language Model based Agents: A Roadmap". This repository collects awesome papers on lifelong learning (also known as continual learning or incremental learning) of LLM agents. We identify three key modules, Perception, Memory, and Action, that are integral to an agent's ability to perform lifelong learning. Please refer to the survey for a detailed introduction. Additionally, for other papers, surveys, and resources on lifelong learning (continual learning, incremental learning) of LLMs, you can refer to this repository. A Chinese version of this README is provided in this file.

- 2025-01-14: We released our survey paper "Lifelong Learning of Large Language Model based Agents: A Roadmap". Feel free to cite it or open pull requests.

| Title | Venue | Date |
|---|---|---|
| AgentOccam: A Simple Yet Strong Baseline for LLM-Based Web Agent | arXiv | 2024-10 |
| GPT-4V(ision) is a Generalist Web Agent, if Grounded | ICLR | 2024-01 |
| WebArena: A Realistic Web Environment for Building Autonomous Agents | ICLR | 2023-07 |
| Synapse: Trajectory-as-Exemplar Prompting with Memory for Computer Control | ICLR | 2023-06 |
| Multimodal Web Navigation with Instruction-Finetuned Foundation Models | ICLR | 2023-05 |

| Title | Venue | Date |
|---|---|---|
| LLMs Can Evolve Continually on Modality for X-Modal Reasoning | NeurIPS | 2024-10 |
| ModaVerse: Efficiently Transforming Modalities with LLMs | CVPR | 2024-01 |
| Omnivore: A Single Model for Many Visual Modalities | CVPR | 2022-01 |
| Perceiver: General Perception with Iterative Attention | ICML | 2021-07 |
| VATT: Transformers for Multimodal Self-Supervised Learning from Raw Video, Audio and Text | NeurIPS | 2021-04 |

| Title | Venue | Date |
|---|---|---|
| Character-LLM: A Trainable Agent for Role-Playing | EMNLP | 2023-10 |
| Connecting Large Language Models with Evolutionary Algorithms Yields Powerful Prompt Optimizers | ICLR | 2023-09 |
| Adapting Language Models to Compress Contexts | ACL | 2023-05 |
| CRITIC: Large Language Models Can Self-Correct with Tool-Interactive Critiquing | NeurIPS Workshop | 2023-05 |
| CogLTX: Applying BERT to Long Texts | NeurIPS | 2020-12 |

| Title | Venue | Date |
|---|---|---|
| ELDER: Enhancing Lifelong Model Editing with Mixture-of-LoRA | arXiv | 2024-08 |
| WISE: Rethinking the Knowledge Memory for Lifelong Model Editing of Large Language Models | NeurIPS | 2024-05 |
| WilKE: Wise-Layer Knowledge Editor for Lifelong Knowledge Editing | ACL | 2024-02 |
| Aging with GRACE: Lifelong Model Editing with Key-Value Adaptors | ICLR | 2022-11 |
| Plug-and-Play Adaptation for Continuously-updated QA | ACL | 2022-04 |

| Title | Venue | Date |
|---|---|---|
| AgentOccam: A Simple Yet Strong Baseline for LLM-Based Web Agent | arXiv | 2024-10 |
| WebPilot: A Versatile and Autonomous Multi-Agent System for Web Task Execution with Strategic Exploration | arXiv | 2024-08 |
| SteP: Stacked LLM Policies for Web Actions | COLM | 2024-07 |
| LASER: LLM Agent with State-Space Exploration for Web Navigation | NeurIPS Workshop | 2023-09 |
| Large Language Models Are Semi-Parametric Reinforcement Learning Agents | NeurIPS | 2023-06 |

| Title | Venue | Date |
|---|---|---|
| VLM Agents Generate Their Own Memories: Distilling Experience into Embodied Programs | arXiv | 2024-06 |
| Large Language Models as Tool Makers | ICLR | 2023-05 |
| On the Tool Manipulation Capability of Open-source Large Language Models | arXiv | 2023-05 |
| Voyager: An Open-Ended Embodied Agent with Large Language Models | arXiv | 2023-05 |
| ART: Automatic multi-step reasoning and tool-use for large language models | arXiv | 2023-03 |

| Title | Venue | Date |
|---|---|---|
| Reasoning with Language Model is Planning with World Model | EMNLP | 2023-05 |
| Large Language Models as Commonsense Knowledge for Large-Scale Task Planning | NeurIPS | 2023-05 |
| Tree of Thoughts: Deliberate Problem Solving with Large Language Models | NeurIPS | 2023-05 |
| SwiftSage: A Generative Agent with Fast and Slow Thinking for Complex Interactive Tasks | NeurIPS | 2023-05 |
| Reflexion: Language Agents with Verbal Reinforcement Learning | NeurIPS | 2023-03 |
| ReAct: Synergizing Reasoning and Acting in Language Models | ICLR | 2022-10 |

@article{zheng2025lifelong,

title={Lifelong Learning of Large Language Model based Agents: A Roadmap},

author={Zheng, Junhao and Shi, Chengming and Cai, Xidi and Li, Qiuke and Zhang, Duzhen and Li, Chenxing and Yu, Dong and Ma, Qianli},

journal={arXiv preprint arXiv:2501.07278},

year={2025},

}


Alternative AI tools for awesome-lifelong-llm-agent

Similar Open Source Tools


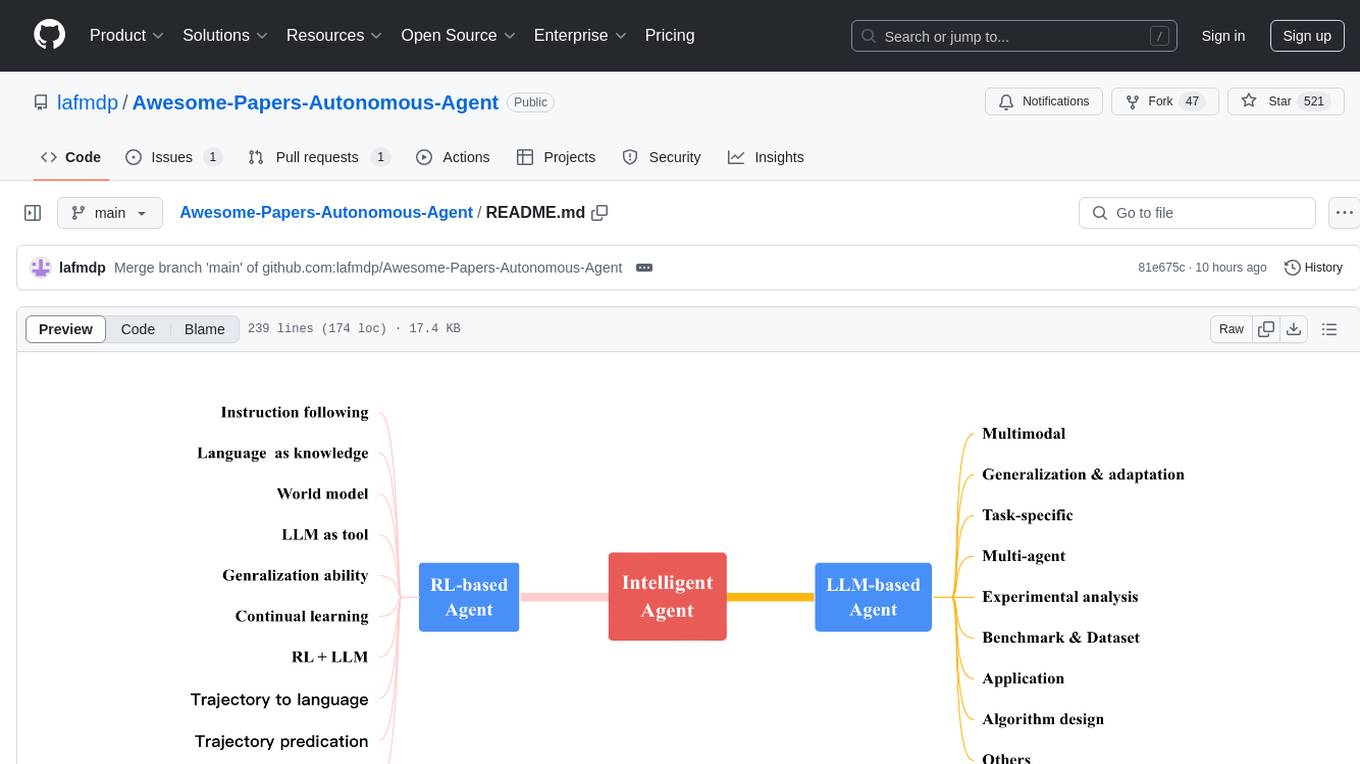

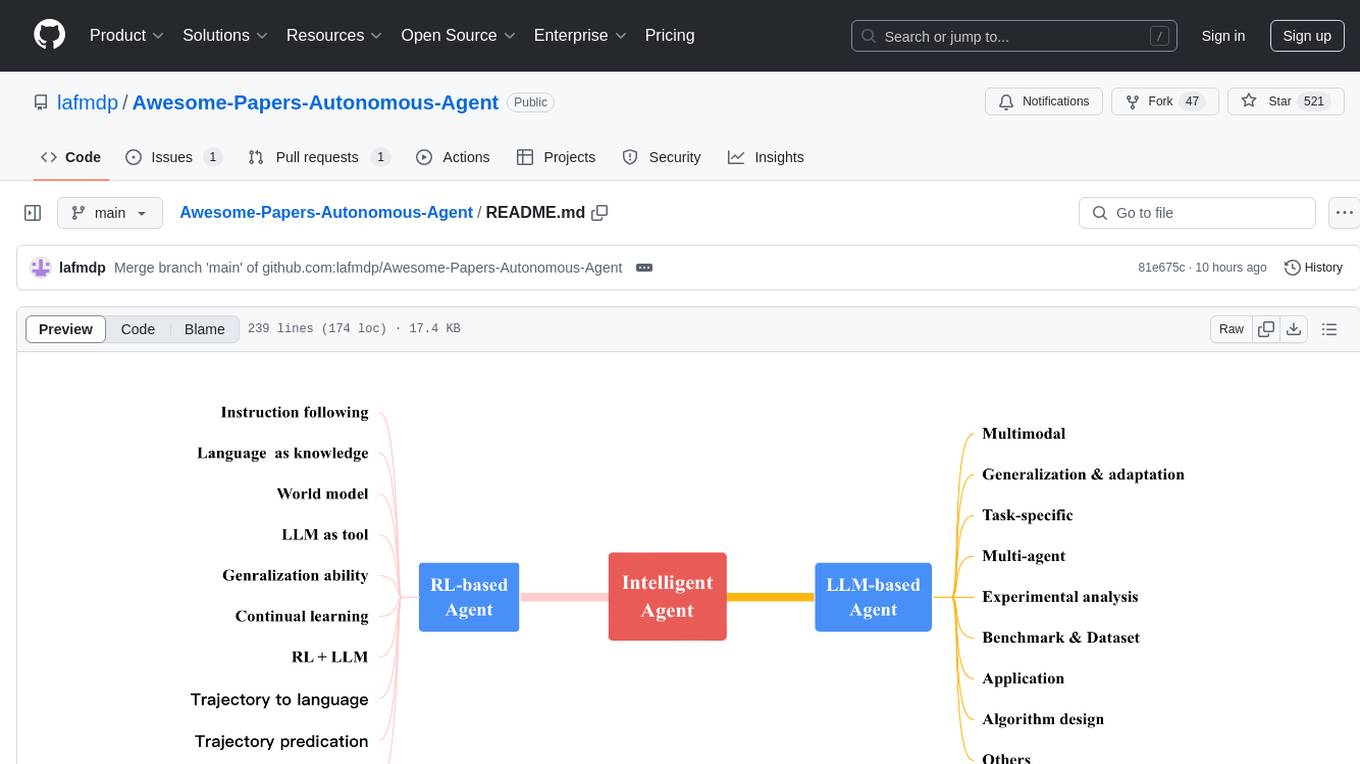

Awesome-Papers-Autonomous-Agent

Awesome-Papers-Autonomous-Agent is a curated collection of recent papers focusing on autonomous agents, specifically interested in RL-based agents and LLM-based agents. The repository aims to provide a comprehensive resource for researchers and practitioners interested in intelligent agents that can achieve goals, acquire knowledge, and continually improve. The collection includes papers on various topics such as instruction following, building agents based on world models, using language as knowledge, leveraging LLMs as a tool, generalization across tasks, continual learning, combining RL and LLM, transformer-based policies, trajectory to language, trajectory prediction, multimodal agents, training LLMs for generalization and adaptation, task-specific designing, multi-agent systems, experimental analysis, benchmarking, applications, algorithm design, and combining with RL.

Awesome-Embodied-AI

Awesome-Embodied-AI is a curated list of papers on Embodied AI and related resources, tracking and summarizing research and industrial progress in the field. It includes surveys, workshops, tutorials, talks, blogs, and papers covering various aspects of Embodied AI, such as vision-language navigation, large language model-based agents, robotics, and more. The repository welcomes contributions and aims to provide a comprehensive overview of the advancements in Embodied AI.

agentUniverse

agentUniverse is a multi-agent framework based on large language models, providing flexible capabilities for building individual agents. It focuses on collaborative pattern components to solve problems in various fields and integrates domain experience. The framework supports LLM model integration and offers various pattern components like PEER and DOE. Users can easily configure models and set up agents for tasks. agentUniverse aims to assist developers and enterprises in constructing domain-expert-level intelligent agents for seamless collaboration.

rlhf_thinking_model

This repository is a collection of research notes and resources focusing on training large language models (LLMs) and Reinforcement Learning from Human Feedback (RLHF). It includes methodologies, techniques, and state-of-the-art approaches for optimizing preferences and model alignment in LLM training. The purpose is to serve as a reference for researchers and engineers interested in reinforcement learning, large language models, model alignment, and alternative RL-based methods.

agentUniverse

agentUniverse is a multi-agent framework based on large language models, providing flexible capabilities for building individual agents. It focuses on multi-agent collaborative patterns, integrating domain experience to help agents solve problems in various fields. The framework includes pattern components like PEER and DOE for event interpretation, industry analysis, and financial report generation. It offers features for agent construction, multi-agent collaboration, and domain expertise integration, aiming to create intelligent applications with professional know-how.

LMOps

LMOps is a research initiative focusing on fundamental research and technology for building AI products with foundation models, particularly enabling AI capabilities with Large Language Models (LLMs) and Generative AI models. The project explores various aspects such as prompt optimization, longer context handling, LLM alignment, acceleration of LLMs, LLM customization, and understanding in-context learning. It also includes tools like Promptist for automatic prompt optimization, Structured Prompting for efficient consumption of long-sequence prompts, and X-Prompt for extensible prompts beyond natural language. Additionally, the LLMA accelerator was developed to speed up LLM inference by referencing and copying text spans from documents. The project aims to advance technologies that facilitate prompting language models and enhance the performance of LLMs in various scenarios.

Next-Generation-LLM-based-Recommender-Systems-Survey

The Next-Generation LLM-based Recommender Systems Survey is a comprehensive overview of the latest advancements in recommender systems leveraging Large Language Models (LLMs). The survey covers various paradigms, approaches, and applications of LLMs in recommendation tasks, including generative and non-generative models, multimodal recommendations, personalized explanations, and industrial deployment. It discusses the comparison with existing surveys, different paradigms, and specific works in the field. The survey also addresses challenges and future directions in the domain of LLM-based recommender systems.

awesome-llms-fine-tuning

This repository is a curated collection of resources for fine-tuning Large Language Models (LLMs) like GPT, BERT, RoBERTa, and their variants. It includes tutorials, papers, tools, frameworks, and best practices to aid researchers, data scientists, and machine learning practitioners in adapting pre-trained models to specific tasks and domains. The resources cover a wide range of topics related to fine-tuning LLMs, providing valuable insights and guidelines to streamline the process and enhance model performance.

Academic_LLM_Sec_Papers

Academic_LLM_Sec_Papers is a curated collection of academic papers related to LLM Security Application. The repository includes papers sorted by conference name and published year, covering topics such as large language models for blockchain security, software engineering, machine learning, and more. Developers and researchers are welcome to contribute additional published papers to the list. The repository also provides information on listed conferences and journals related to security, networking, software engineering, and cryptography. The papers cover a wide range of topics including privacy risks, ethical concerns, vulnerabilities, threat modeling, code analysis, fuzzing, and more.

agentUniverse

agentUniverse is a framework for developing multi-agent applications powered by large language models. It provides the essential components for building single agents, along with a multi-agent collaboration mechanism for customizing collaboration patterns. Developers can easily construct multi-agent applications and share pattern practices from different fields. The framework includes pre-installed collaboration patterns like PEER and DOE for complex task breakdown and data-intensive tasks.

MemoryBear

MemoryBear is a next-generation AI memory system developed by RedBear AI, focusing on overcoming limitations in knowledge storage and multi-agent collaboration. It empowers AI with human-like memory capabilities, enabling deep knowledge understanding and cognitive collaboration. The system addresses challenges such as knowledge forgetting, memory gaps in multi-agent collaboration, and semantic ambiguity during reasoning. MemoryBear's core features include memory extraction engine, graph storage, hybrid search, memory forgetting engine, self-reflection engine, and FastAPI services. It offers a standardized service architecture for efficient integration and invocation across applications.

oreilly-llm-rl-alignment

This repository contains Jupyter notebooks for the courses 'Aligning Large Language Models' and 'Reinforcement Learning with Large Language Models' by Sinan Ozdemir. It covers effective best practices and industry case studies in using Large Language Models (LLMs). The courses provide in-depth exploration of alignment techniques, evaluation methods, ethical considerations, and reinforcement learning concepts with practical applications. Participants will gain theoretical insights and hands-on experience in working with LLMs, including fine-tuning models and understanding advanced concepts like RLHF, RLAIF, and Constitutional AI.

langtest

LangTest is a comprehensive evaluation library for custom LLM and NLP models. It aims to deliver safe and effective language models by providing tools to test model quality, augment training data, and support popular NLP frameworks. LangTest comes with benchmark datasets to challenge and enhance language models, ensuring peak performance in various linguistic tasks. The tool offers more than 60 distinct types of tests with just one line of code, covering aspects like robustness, bias, representation, fairness, and accuracy. It supports testing LLMs for question answering, toxicity, clinical tests, legal support, factuality, sycophancy, and summarization.

CodeFuse-muAgent

CodeFuse-muAgent is a Multi-Agent framework designed to streamline Standard Operating Procedure (SOP) orchestration for agents. It integrates toolkits, code libraries, knowledge bases, and sandbox environments for rapid construction of complex Multi-Agent interactive applications. The framework enables efficient execution and handling of multi-layered and multi-dimensional tasks.

For similar tasks

Awesome-LLM-RAG

This repository, Awesome-LLM-RAG, aims to record advanced papers on Retrieval Augmented Generation (RAG) in Large Language Models (LLMs). It serves as a resource hub for researchers interested in promoting their work related to LLM RAG by updating paper information through pull requests. The repository covers various topics such as workshops, tutorials, papers, surveys, benchmarks, retrieval-enhanced LLMs, RAG instruction tuning, RAG in-context learning, RAG embeddings, RAG simulators, RAG search, RAG long-text and memory, RAG evaluation, RAG optimization, and RAG applications.

Awesome_LLM_System-PaperList

Since the emergence of ChatGPT in 2022, accelerating Large Language Models has become increasingly important. Here is a list of papers on LLM inference and serving.

LLM-Tool-Survey

This repository contains a collection of papers related to tool learning with large language models (LLMs). The papers are organized according to the survey paper 'Tool Learning with Large Language Models: A Survey'. The survey focuses on the benefits and implementation of tool learning with LLMs, covering aspects such as task planning, tool selection, tool calling, response generation, benchmarks, evaluation, challenges, and future directions in the field. It aims to provide a comprehensive understanding of tool learning with LLMs and inspire further exploration in this emerging area.

Awesome-CVPR2024-ECCV2024-AIGC

A collection of papers and code for CVPR 2024 and ECCV 2024 AIGC. This repository compiles and organizes research papers and code related to AIGC (AI-Generated Content) at CVPR 2024 and ECCV 2024. It serves as a valuable resource for individuals interested in the latest advancements in the field of computer vision and artificial intelligence. Users can find a curated list of papers and accompanying code repositories for further exploration and research. The repository encourages collaboration and contributions from the community through stars, forks, and pull requests.

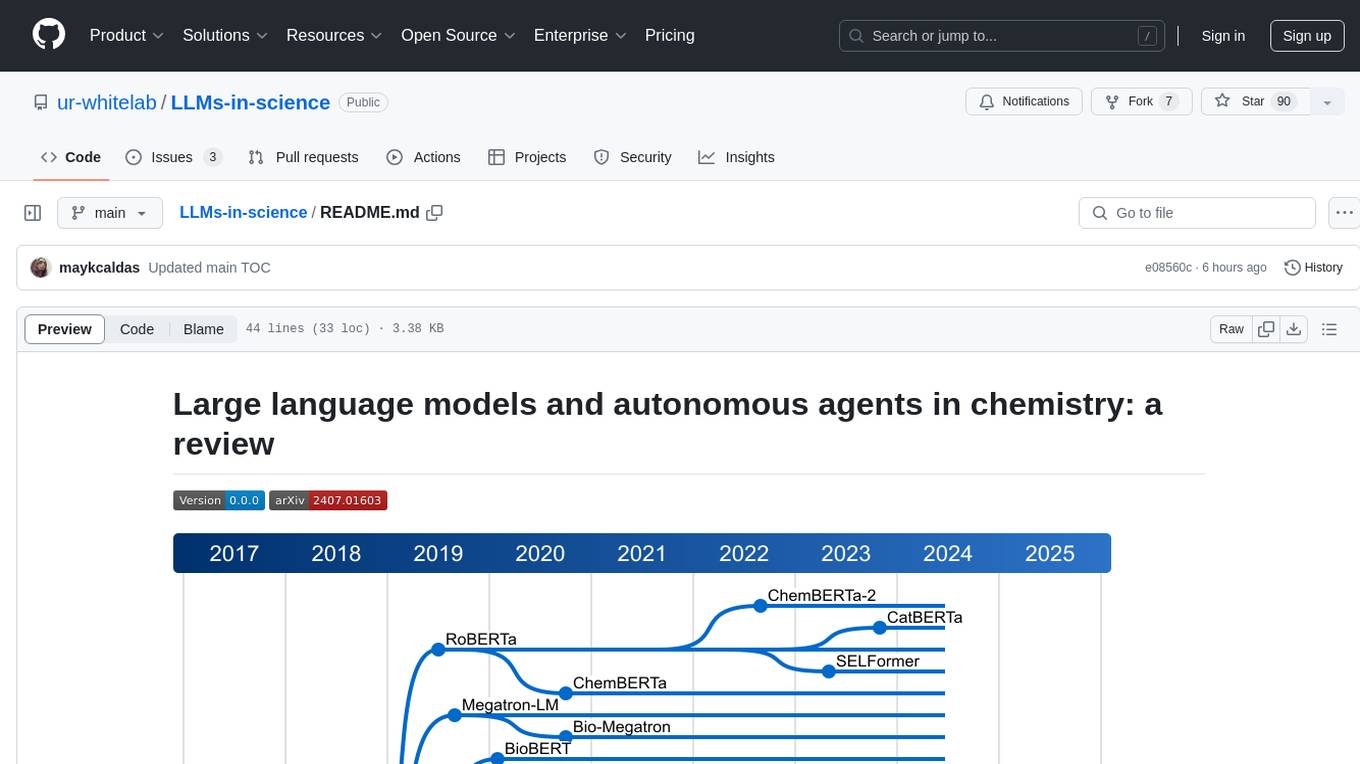

LLMs-in-science

The 'LLMs-in-science' repository is a collaborative environment for organizing papers related to large language models (LLMs) and autonomous agents in the field of chemistry. The goal is to discuss trending topics, challenges, and the potential for supporting scientific discovery in the context of artificial intelligence. The repository aims to maintain a systematic structure of the field and welcomes contributions from the community to keep the content up-to-date and relevant.



LLM-Agent-Survey

LLM-Agent-Survey is a comprehensive repository that provides a curated list of papers related to Large Language Model (LLM) agents. The repository categorizes papers based on LLM-Profiled Roles and includes high-quality publications from prestigious conferences and journals. It aims to offer a systematic understanding of LLM-based agents, covering topics such as tool use, planning, and feedback learning. The repository also includes unpublished papers with insightful analysis and novelty, marked for future updates. Users can explore a wide range of surveys, tool use cases, planning workflows, and benchmarks related to LLM agents.

For similar jobs

weave

Weave is a toolkit for developing Generative AI applications, built by Weights & Biases. With Weave, you can log and debug language model inputs, outputs, and traces; build rigorous, apples-to-apples evaluations for language model use cases; and organize all the information generated across the LLM workflow, from experimentation to evaluations to production. Weave aims to bring rigor, best-practices, and composability to the inherently experimental process of developing Generative AI software, without introducing cognitive overhead.

LLMStack

LLMStack is a no-code platform for building generative AI agents, workflows, and chatbots. It allows users to connect their own data, internal tools, and GPT-powered models without any coding experience. LLMStack can be deployed to the cloud or on-premise and can be accessed via HTTP API or triggered from Slack or Discord.

VisionCraft

The VisionCraft API is a free API for using over 100 different AI models, from images to sound.

kaito

Kaito is an operator that automates the AI/ML inference model deployment in a Kubernetes cluster. It manages large model files using container images, avoids tuning deployment parameters to fit GPU hardware by providing preset configurations, auto-provisions GPU nodes based on model requirements, and hosts large model images in the public Microsoft Container Registry (MCR) if the license allows. Using Kaito, the workflow of onboarding large AI inference models in Kubernetes is largely simplified.

PyRIT

PyRIT is an open access automation framework designed to empower security professionals and ML engineers to red team foundation models and their applications. It automates AI Red Teaming tasks to allow operators to focus on more complicated and time-consuming tasks and can also identify security harms such as misuse (e.g., malware generation, jailbreaking), and privacy harms (e.g., identity theft). The goal is to allow researchers to have a baseline of how well their model and entire inference pipeline is doing against different harm categories and to be able to compare that baseline to future iterations of their model. This allows them to have empirical data on how well their model is doing today, and detect any degradation of performance based on future improvements.

tabby

Tabby is a self-hosted AI coding assistant, offering an open-source and on-premises alternative to GitHub Copilot. It boasts several key features:

- Self-contained, with no need for a DBMS or cloud service.
- OpenAPI interface, easy to integrate with existing infrastructure (e.g., Cloud IDE).
- Supports consumer-grade GPUs.

spear

SPEAR (Simulator for Photorealistic Embodied AI Research) is a powerful tool for training embodied agents. It features 300 unique virtual indoor environments with 2,566 unique rooms and 17,234 unique objects that can be manipulated individually. Each environment is designed by a professional artist and features detailed geometry, photorealistic materials, and a unique floor plan and object layout. SPEAR is implemented as Unreal Engine assets and provides an OpenAI Gym interface for interacting with the environments via Python.

Magick

Magick is a groundbreaking visual AIDE (Artificial Intelligence Development Environment) for no-code data pipelines and multimodal agents. Magick can connect to other services and comes with nodes and templates well-suited for intelligent agents, chatbots, complex reasoning systems and realistic characters.