LLM-Tool-Survey

This is the repository for the Tool Learning survey.


This repository contains a collection of papers related to tool learning with large language models (LLMs). The papers are organized according to the survey paper 'Tool Learning with Large Language Models: A Survey'. The survey focuses on the benefits and implementation of tool learning with LLMs, covering aspects such as task planning, tool selection, tool calling, response generation, benchmarks, evaluation, challenges, and future directions in the field. It aims to provide a comprehensive understanding of tool learning with LLMs and inspire further exploration in this emerging area.


Recently, tool learning with large language models (LLMs) has emerged as a promising paradigm for augmenting the capabilities of LLMs to tackle highly complex problems.

This is the collection of papers related to tool learning with LLMs. These papers are organized according to our survey paper "Tool Learning with Large Language Models: A Survey".

Chinese: We have noticed that PaperAgent and 旺知识 have provided a brief and a comprehensive introduction in Chinese, respectively. We greatly appreciate their assistance.

Please feel free to contact us if you have any questions or suggestions!

🎉👍 Please feel free to open an issue or make a pull request! 🎉👍

If you find our work helpful for your research, please cite our paper:

@article{qu2024toolsurvey,
  author={Qu, Changle and Dai, Sunhao and Wei, Xiaochi and Cai, Hengyi and Wang, Shuaiqiang and Yin, Dawei and Xu, Jun and Wen, Ji-Rong},
  title={Tool Learning with Large Language Models: A Survey},
  journal={arXiv preprint arXiv:2405.17935},
  year={2024}
}

Survey: Tool Learning with Large Language Models

Recently, tool learning with large language models (LLMs) has emerged as a promising paradigm for augmenting the capabilities of LLMs to tackle highly complex problems. Despite growing attention and rapid advancements in this field, the existing literature remains fragmented and lacks systematic organization, posing barriers to entry for newcomers. This gap motivates us to conduct a comprehensive survey of existing works on tool learning with LLMs. In this survey, we focus on reviewing existing literature from two primary aspects: (1) why tool learning is beneficial and (2) how tool learning is implemented, enabling a comprehensive understanding of tool learning with LLMs. We first explore the “why” by reviewing both the benefits of tool integration and the inherent benefits of the tool learning paradigm from six specific aspects. In terms of “how”, we systematically review the literature according to a taxonomy of four key stages in the tool learning workflow: task planning, tool selection, tool calling, and response generation. Additionally, we provide a detailed summary of existing benchmarks and evaluation methods, categorizing them according to their relevance to different stages. Finally, we discuss current challenges and outline potential future directions, aiming to inspire both researchers and industrial developers to further explore this emerging and promising area.
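The four stages of the tool learning workflow (task planning, tool selection, tool calling, response generation) can be pictured as a toy pipeline. This is a minimal sketch under heavy assumptions: the rule-based `plan`, `select_tool`, and `call_tool` functions and both tools are hypothetical stand-ins for what an LLM and real APIs would do, not a method from the survey.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass
class Tool:
    name: str
    description: str
    func: Callable[[str], str]


def plan(query: str) -> list[str]:
    # Task planning (toy rule): split a compound request into sub-tasks on " and ".
    return [part.strip() for part in query.split(" and ") if part.strip()]


def select_tool(subtask: str, tools: list[Tool]) -> Tool:
    # Tool selection (toy rule): pick the tool whose description shares the most words with the sub-task.
    words = set(subtask.lower().split())
    return max(tools, key=lambda tool: len(words & set(tool.description.lower().split())))


def call_tool(tool: Tool, subtask: str) -> str:
    # Tool calling (toy rule): pass the last word of the sub-task as the only argument.
    return tool.func(subtask.split()[-1])


def generate_response(results: list[str]) -> str:
    # Response generation: join the tool outputs into a single answer.
    return " ".join(results)


def run_pipeline(query: str, tools: list[Tool]) -> str:
    results = []
    for subtask in plan(query):
        tool = select_tool(subtask, tools)
        results.append(call_tool(tool, subtask))
    return generate_response(results)


# Hypothetical tools used only for this illustration.
TOOLS = [
    Tool("square", "compute the square of a number", lambda x: str(int(x) ** 2)),
    Tool("upper", "convert a word to uppercase letters", str.upper),
]

print(run_pipeline("compute the square of 12 and convert to uppercase the word hello", TOOLS))
# → 144 HELLO
```

In the systems surveyed here, each of these four functions is typically handled by an LLM (possibly with a retriever for selection), which is exactly where the papers below differ.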

Knowledge Acquisition.

Search Engine

Internet-Augmented Dialogue Generation, ACL 2022. [Paper]

WebGPT: Browser-assisted question-answering with human feedback, Preprint 2021. [Paper]

Internet-augmented language models through few-shot prompting for open-domain question answering, Preprint 2022. [Paper]

REPLUG: Retrieval-Augmented Black-Box Language Models, Preprint 2023. [Paper]

Toolformer: Language Models Can Teach Themselves to Use Tools, NeurIPS 2023. [Paper]

ART: Automatic multi-step reasoning and tool-use for large language models, Preprint 2023. [Paper]

ToolCoder: Teach Code Generation Models to use API search tools, Preprint 2023. [Paper]

CRITIC: Large Language Models Can Self-Correct with Tool-Interactive Critiquing, ICLR 2024. [Paper]

Database & Knowledge Graph

LaMDA: Language Models for Dialog Applications, Preprint 2022. [Paper]

Gorilla: Large Language Model Connected with Massive APIs, NeurIPS 2024. [Paper]

ToolkenGPT: Augmenting Frozen Language Models with Massive Tools via Tool Embeddings, NeurIPS 2023. [Paper]

ToolQA: A Dataset for LLM Question Answering with External Tools, NeurIPS 2023. [Paper]

Syntax Error-Free and Generalizable Tool Use for LLMs via Finite-State Decoding, NeurIPS 2023. [Paper]

Middleware for LLMs: Tools are Instrumental for Language Agents in Complex Environments, EMNLP 2024. [Paper]

Weather or Map

On the Tool Manipulation Capability of Open-source Large Language Models, NeurIPS 2023. [Paper]

ToolAlpaca: Generalized Tool Learning for Language Models with 3000 Simulated Cases, Preprint 2023. [Paper]

Tool Learning with Foundation Models, Preprint 2023. [Paper]

Expertise Enhancement.

Mathematical Tools

Training verifiers to solve math word problems, Preprint 2021. [Paper]

MRKL Systems: A modular, neuro-symbolic architecture that combines large language models, external knowledge sources and discrete reasoning, Preprint 2021. [Paper]

Chaining Simultaneous Thoughts for Numerical Reasoning, EMNLP 2022. [Paper]

Calc-X and Calcformers: Empowering Arithmetical Chain-of-Thought through Interaction with Symbolic Systems, EMNLP 2023. [Paper]

Solving math word problems by combining language models with symbolic solvers, NeurIPS 2023. [Paper]

Evaluating and improving tool-augmented computation-intensive math reasoning, NeurIPS 2023. [Paper]

ToRA: A Tool-Integrated Reasoning Agent for Mathematical Problem Solving, ICLR 2024. [Paper]

MATHSENSEI: A Tool-Augmented Large Language Model for Mathematical Reasoning, Preprint 2024. [Paper]

Calc-CMU at SemEval-2024 Task 7: Pre-Calc -- Learning to Use the Calculator Improves Numeracy in Language Models, NAACL 2024. [Paper]

MathViz-E: A Case-study in Domain-Specialized Tool-Using Agents, Preprint 2024. [Paper]

Python Interpreter

PAL: Program-aided Language Models, ICML 2023. [Paper]

Program of Thoughts Prompting: Disentangling Computation from Reasoning for Numerical Reasoning Tasks, TMLR 2023. [Paper]

Fact-Checking Complex Claims with Program-Guided Reasoning, ACL 2023. [Paper]

Chameleon: Plug-and-Play Compositional Reasoning with Large Language Models, NeurIPS 2023. [Paper]

LeTI: Learning to Generate from Textual Interactions, NAACL 2024. [Paper]

MINT: Evaluating LLMs in Multi-turn Interaction with Tools and Language Feedback, ICLR 2024. [Paper]

Executable Code Actions Elicit Better LLM Agents, ICML 2024. [Paper]

CodeNav: Beyond tool-use to using real-world codebases with LLM agents, Preprint 2024. [Paper]

APPL: A Prompt Programming Language for Harmonious Integration of Programs and Large Language Model Prompts, Preprint 2024. [Paper]

BigCodeBench: Benchmarking Code Generation with Diverse Function Calls and Complex Instructions, Preprint 2024. [Paper]

CodeAgent: Enhancing Code Generation with Tool-Integrated Agent Systems for Real-World Repo-level Coding Challenges, ACL 2024. [Paper]

MuMath-Code: Combining Tool-Use Large Language Models with Multi-perspective Data Augmentation for Mathematical Reasoning, EMNLP 2024. [Paper]
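Several of the Python-interpreter papers above (e.g., PAL and Program of Thoughts) share one pattern: the model writes a program, and an interpreter rather than the model computes the answer. A minimal sketch, assuming the model's output is already available as a string and that the program stores its result in a variable named `answer` (a convention chosen here for illustration):

```python
def run_generated_program(program: str) -> object:
    # Run model-written code and read back the variable named `answer`.
    # Emptying __builtins__ limits what the code can reach, but exec is NOT a security sandbox.
    namespace: dict = {"__builtins__": {}}
    exec(program, namespace)
    return namespace["answer"]


# Hypothetical model output for: "Roger has 5 tennis balls. He buys 2 more cans of
# tennis balls. Each can has 3 tennis balls. How many tennis balls does he have now?"
generated = """
tennis_balls = 5
bought_balls = 2 * 3
answer = tennis_balls + bought_balls
"""

print(run_generated_program(generated))  # → 11
```

Offloading the arithmetic to the interpreter is the point of this design: the model only has to get the program right, not the computation.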

Others

Chemical: MultiTool-CoT: GPT-3 Can Use Multiple External Tools with Chain of Thought Prompting, ACL 2023. [Paper]

ChemCrow: Augmenting large-language models with chemistry tools, Nature Machine Intelligence 2024. [Paper]

A Review of Large Language Models and Autonomous Agents in Chemistry, Preprint 2024. [Paper]

Biomedical: GeneGPT: Augmenting Large Language Models with Domain Tools for Improved Access to Biomedical Information, ISMB 2024. [Paper]

Financial: Equipping Language Models with Tool Use Capability for Tabular Data Analysis in Finance, EACL 2024. [Paper]

Financial: Simulating Financial Market via Large Language Model based Agents, Preprint 2024. [Paper]

Financial: A Multimodal Foundation Agent for Financial Trading: Tool-Augmented, Diversified, and Generalist, KDD 2024. [Paper]

Medical: AgentMD: Empowering Language Agents for Risk Prediction with Large-Scale Clinical Tool Learning, Preprint 2024. [Paper]

MMedAgent: Learning to Use Medical Tools with Multi-modal Agent, Preprint 2024. [Paper]

Recommendation: Let Me Do It For You: Towards LLM Empowered Recommendation via Tool Learning, SIGIR 2024. [Paper]

Gas Turbines: Domain-Specific ReAct for Physics-Integrated Iterative Modeling: A Case Study of LLM Agents for Gas Path Analysis of Gas Turbines, Preprint 2024. [Paper]

WORLDAPIS: The World Is Worth How Many APIs? A Thought Experiment, ACL 2024 Workshop. [Paper]

Tool-Assisted Agent on SQL Inspection and Refinement in Real-World Scenarios, Preprint 2024. [Paper]

HoneyComb: A Flexible LLM-Based Agent System for Materials Science, Preprint 2024. [Paper]

Automation and Efficiency.

Schedule Tools

ToolQA: A Dataset for LLM Question Answering with External Tools, NeurIPS 2023. [Paper]

Set Reminders

ToolLLM: Facilitating Large Language Models to Master 16000+ Real-world APIs, ICLR 2024. [Paper]

Filter Emails

ToolLLM: Facilitating Large Language Models to Master 16000+ Real-world APIs, ICLR 2024. [Paper]

Project Management

ToolLLM: Facilitating Large Language Models to Master 16000+ Real-world APIs, ICLR 2024. [Paper]

Online Shopping Assistants

WebShop: Towards Scalable Real-World Web Interaction with Grounded Language Agents, NeurIPS 2022. [Paper]

Interaction Enhancement.

Multi-modal Tools

ViperGPT: Visual Inference via Python Execution for Reasoning, ICCV 2023. [Paper]

MM-REACT: Prompting ChatGPT for Multimodal Reasoning and Action, Preprint 2023. [Paper]

InternGPT: Solving Vision-Centric Tasks by Interacting with ChatGPT Beyond Language, Preprint 2023. [Paper]

AssistGPT: A General Multi-modal Assistant that can Plan, Execute, Inspect, and Learn, Preprint 2023. [Paper]

CLOVA: A closed-loop visual assistant with tool usage and update, CVPR 2024. [Paper]

DiffAgent: Fast and Accurate Text-to-Image API Selection with Large Language Model, CVPR 2024. [Paper]

MLLM-Tool: A Multimodal Large Language Model For Tool Agent Learning, Preprint 2024. [Paper]

m&m's: A Benchmark to Evaluate Tool-Use for multi-step multi-modal Tasks, Preprint 2024. [Paper]

From the Least to the Most: Building a Plug-and-Play Visual Reasoner via Data Synthesis, Preprint 2024. [Paper]

Machine Translator

Toolformer: Language Models Can Teach Themselves to Use Tools, NeurIPS 2023. [Paper]

Tool Learning with Foundation Models, Preprint 2023. [Paper]

Natural Language Processing Tools

HuggingGPT: Solving AI Tasks with ChatGPT and its Friends in Hugging Face, NeurIPS 2023. [Paper]

GitAgent: Facilitating Autonomous Agent with GitHub by Tool Extension, Preprint 2023. [Paper]

- Enhanced Interpretability and User Trust.

- Improved Robustness and Adaptability.

Task Planning

Tuning-free Methods

Chain-of-Thought Prompting Elicits Reasoning in Large Language Models, NeurIPS 2022. [Paper]

ReAct: Synergizing Reasoning and Acting in Language Models, ICLR 2023. [Paper]

ART: Automatic multi-step reasoning and tool-use for large language models, Preprint 2023. [Paper]

HuggingGPT: Solving AI Tasks with ChatGPT and its Friends in Hugging Face, NeurIPS 2023. [Paper]

Graph-ToolFormer: To Empower LLMs with Graph Reasoning Ability via Prompt Augmented by ChatGPT, Preprint 2023. [Paper]

Large Language Models as Tool Makers, ICLR 2024. [Paper]

CREATOR: Tool Creation for Disentangling Abstract and Concrete Reasoning of Large Language Models, EMNLP 2023. [Paper]

ChatCoT: Tool-Augmented Chain-of-Thought Reasoning on Chat-based Large Language Models, EMNLP 2023. [Paper]

FacTool: Factuality Detection in Generative AI -- A Tool Augmented Framework for Multi-Task and Multi-Domain Scenarios, Preprint 2023. [Paper]

TPTU: Large Language Model-based AI Agents for Task Planning and Tool Usage, Preprint 2023. [Paper]

ToolChain*: Efficient Action Space Navigation in Large Language Models with A* Search, ICLR 2024. [Paper]

Fortify the Shortest Stave in Attention: Enhancing Context Awareness of Large Language Models for Effective Tool Use, ACL 2024. [Paper]

TroVE: Inducing Verifiable and Efficient Toolboxes for Solving Programmatic Tasks, Preprint 2024. [Paper]

SwissNYF: Tool Grounded LLM Agents for Black Box Setting, Preprint 2024. [Paper]

From Summary to Action: Enhancing Large Language Models for Complex Tasks with Open World APIs, Preprint 2024. [Paper]

Budget-Constrained Tool Learning with Planning, ACL 2024 Findings. [Paper]

Planning and Editing What You Retrieve for Enhanced Tool Learning, NAACL 2024. [Paper]

Large Language Models Can Plan Your Travels Rigorously with Formal Verification Tools, Preprint 2024. [Paper]

Smurfs: Leveraging Multiple Proficiency Agents with Context-Efficiency for Tool Planning, Preprint 2024. [Paper]

STRIDE: A Tool-Assisted LLM Agent Framework for Strategic and Interactive Decision-Making, Preprint 2024. [Paper]

Chain of Tools: Large Language Model is an Automatic Multi-tool Learner, Preprint 2024. [Paper]

Can Graph Learning Improve Planning in LLM-based Agents?, NeurIPS 2024. [Paper]

Tool-Planner: Dynamic Solution Tree Planning for Large Language Model with Tool Clustering, Preprint 2024. [Paper]

Tools Fail: Detecting Silent Errors in Faulty Tools, Preprint 2024. [Paper]

What Affects the Stability of Tool Learning? An Empirical Study on the Robustness of Tool Learning Frameworks, Preprint 2024. [Paper]

Tulip Agent -- Enabling LLM-Based Agents to Solve Tasks Using Large Tool Libraries, Preprint 2024. [Paper]

Tuning-based Methods

TaskMatrix.AI: Completing Tasks by Connecting Foundation Models with Millions of APIs, Intelligent Computing 2024. [Paper]

OpenAGI: When LLM Meets Domain Experts, NeurIPS 2023. [Paper]

ToolLLM: Facilitating Large Language Models to Master 16000+ Real-world APIs, ICLR 2024. [Paper]

Toolink: Linking Toolkit Creation and Using through Chain-of-Solving on Open-Source Model, Preprint 2023. [Paper]

TPTU-v2: Boosting Task Planning and Tool Usage of Large Language Model-based Agents in Real-world Systems, ICLR 2024. [Paper]

Navigating Uncertainty: Optimizing API Dependency for Hallucination Reduction in Closed-Book Question Answering, ECIR 2024. [Paper]

Small LLMs Are Weak Tool Learners: A Multi-LLM Agent, Preprint 2024. [Paper]

Efficient Tool Use with Chain-of-Abstraction Reasoning, Preprint 2024. [Paper]

Look Before You Leap: Towards Decision-Aware and Generalizable Tool-Usage for Large Language Models, Preprint 2024. [Paper]

A Solution-based LLM API-using Methodology for Academic Information Seeking, Preprint 2024. [Paper]

Advancing Tool-Augmented Large Language Models: Integrating Insights from Errors in Inference Trees, NeurIPS 2024. [Paper]

APIGen: Automated Pipeline for Generating Verifiable and Diverse Function-Calling Datasets, Preprint 2024. [Paper]

MetaTool: Facilitating Large Language Models to Master Tools with Meta-task Augmentation, Preprint 2024. [Paper]

Tool Selection

Retriever-based Tool Selection

A statistical interpretation of term specificity and its application in retrieval, Journal of Documentation 1972. [Paper]

The probabilistic relevance framework: BM25 and beyond, Foundations and Trends in Information Retrieval 2009. [Paper]

Sentence-BERT: Sentence Embeddings using Siamese BERT-Networks, EMNLP 2019. [Paper]

Approximate nearest neighbor negative contrastive learning for dense text retrieval, ICLR 2021. [Paper]

Efficiently Teaching an Effective Dense Retriever with Balanced Topic Aware Sampling, SIGIR 2021. [Paper]

Unsupervised Corpus Aware Language Model Pre-training for Dense Passage Retrieval, ACL 2022. [Paper]

Unsupervised dense information retrieval with contrastive learning, Preprint 2021. [Paper]

CRAFT: Customizing LLMs by Creating and Retrieving from Specialized Toolsets, ICLR 2024. [Paper]

ProTIP: Progressive Tool Retrieval Improves Planning, Preprint 2023. [Paper]

ToolRerank: Adaptive and Hierarchy-Aware Reranking for Tool Retrieval, COLING 2024. [Paper]

Enhancing Tool Retrieval with Iterative Feedback from Large Language Models, Preprint 2024. [Paper]

Re-Invoke: Tool Invocation Rewriting for Zero-Shot Tool Retrieval, Preprint 2024. [Paper]

Efficient and Scalable Estimation of Tool Representations in Vector Space, Preprint 2024. [Paper]

COLT: Towards Completeness-Oriented Tool Retrieval for Large Language Models, CIKM 2024. [Paper]
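The sparse retrievers listed above (TF-IDF, BM25) can be applied to tool selection by treating each tool description as a document and the user query as the search query. A minimal sketch, assuming whitespace tokenization, the common defaults `k1=1.5` and `b=0.75`, and the "+1" IDF variant that keeps scores non-negative; the tool names and descriptions are hypothetical.

```python
import math
from collections import Counter


def tokenize(text: str) -> list[str]:
    return text.lower().split()


def bm25_rank(query: str, tools: dict[str, str], k1: float = 1.5, b: float = 0.75) -> list[tuple[str, float]]:
    """Rank tools by the Okapi BM25 score of their descriptions against the query."""
    docs = {name: tokenize(desc) for name, desc in tools.items()}
    n_docs = len(docs)
    avgdl = sum(len(tokens) for tokens in docs.values()) / n_docs
    # Document frequency: how many tool descriptions contain each term.
    df = Counter(term for tokens in docs.values() for term in set(tokens))
    scores = {}
    for name, tokens in docs.items():
        tf = Counter(tokens)
        score = 0.0
        for term in tokenize(query):
            if tf[term] == 0:
                continue
            # "+1" inside the log keeps IDF non-negative (a common BM25 variant).
            idf = math.log((n_docs - df[term] + 0.5) / (df[term] + 0.5) + 1.0)
            # Term-frequency saturation (k1) and length normalization (b).
            norm = tf[term] + k1 * (1 - b + b * len(tokens) / avgdl)
            score += idf * tf[term] * (k1 + 1) / norm
        scores[name] = score
    return sorted(scores.items(), key=lambda item: item[1], reverse=True)


TOOLS = {
    "get_weather": "return the current weather forecast for a city",
    "convert_currency": "convert an amount of money between two currencies",
    "search_web": "search the web for pages matching a query",
}

for name, score in bm25_rank("weather forecast for paris", TOOLS):
    print(f"{name}: {score:.3f}")
```

The dense retrievers in the same list replace this lexical score with the similarity of learned embeddings, which helps when a query and a tool description share meaning but few words.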

LLM-based Tool Selection

On the Tool Manipulation Capability of Open-source Large Language Models, Preprint 2023. [Paper]

Making Language Models Better Tool Learners with Execution Feedback, NAACL 2024. [Paper]

ToolLLM: Facilitating Large Language Models to Master 16000+ Real-world APIs, ICLR 2024. [Paper]

Confucius: Iterative Tool Learning from Introspection Feedback by Easy-to-Difficult Curriculum, AAAI 2024. [Paper]

AnyTool: Self-Reflective, Hierarchical Agents for Large-Scale API Calls, Preprint 2024. [Paper]

TOOLVERIFIER: Generalization to New Tools via Self-Verification, Preprint 2024. [Paper]

ToolNet: Connecting Large Language Models with Massive Tools via Tool Graph, Preprint 2024. [Paper]

GeckOpt: LLM System Efficiency via Intent-Based Tool Selection, GLSVLSI 2024. [Paper]

AvaTaR: Optimizing LLM Agents for Tool-Assisted Knowledge Retrieval, NeurIPS 2024. [Paper]

Small Agent Can Also Rock! Empowering Small Language Models as Hallucination Detector, Preprint 2024. [Paper]

Adaptive Selection for Homogeneous Tools: An Instantiation in the RAG Scenario, Preprint 2024. [Paper]

Tool Calling

Tuning-free Methods

RestGPT: Connecting Large Language Models with Real-World RESTful APIs, Preprint 2023. [Paper]

Reverse Chain: A Generic-Rule for LLMs to Master Multi-API Planning, Preprint 2023. [Paper]

GEAR: Augmenting Language Models with Generalizable and Efficient Tool Resolution, EACL 2023. [Paper]

Tool Documentation Enables Zero-Shot Tool-Usage with Large Language Models, Preprint 2023. [Paper]

ControlLLM: Augment Language Models with Tools by Searching on Graphs, Preprint 2023. [Paper]

EASYTOOL: Enhancing LLM-based Agents with Concise Tool Instruction, Preprint 2024. [Paper]

Large Language Models as Zero-shot Dialogue State Tracker through Function Calling, ACL 2024. [Paper]

Concise and Precise Context Compression for Tool-Using Language Models, ACL 2024 Findings. [Paper]

Tuning-based Methods

Gorilla: Large Language Model Connected with Massive APIs, NeurIPS 2024. [Paper]

GPT4Tools: Teaching Large Language Model to Use Tools via Self-instruction, NeurIPS 2023. [Paper]

ToolkenGPT: Augmenting Frozen Language Models with Massive Tools via Tool Embeddings, NeurIPS 2023. [Paper]

Tool-Augmented Reward Modeling, ICLR 2024. [Paper]

LLMs in the Imaginarium: Tool Learning through Simulated Trial and Error, ACL 2024. [Paper]

ToolACE: Winning the Points of LLM Function Calling, Preprint 2024. [Paper]

CITI: Enhancing Tool Utilizing Ability in Large Language Models without Sacrificing General Performance, Preprint 2024. [Paper]

Quality Matters: Evaluating Synthetic Data for Tool-Using LLMs, EMNLP 2024. [Paper]

Response Generation

Direct Insertion Methods

TALM: Tool Augmented Language Models, Preprint 2022. [Paper]

Toolformer: Language Models Can Teach Themselves to Use Tools, NeurIPS 2023. [Paper]

A Comprehensive Evaluation of Tool-Assisted Generation Strategies, EMNLP 2023. [Paper]
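Direct insertion, as in Toolformer, places a tool's output straight into the generated text. A minimal sketch, assuming the model emits inline markers of the form `[Calculator(expression)]`; this loosely follows Toolformer's notation, while the regex, the two-decimal rounding, and the small calculator are assumptions for illustration rather than Toolformer's implementation.

```python
import ast
import operator
import re

# Arithmetic operators the toy calculator accepts.
_OPS = {ast.Add: operator.add, ast.Sub: operator.sub, ast.Mult: operator.mul, ast.Div: operator.truediv}

# Matches inline calls such as "[Calculator(400/1400)]"; nested parentheses are not supported.
CALL_PATTERN = re.compile(r"\[Calculator\(([^)]*)\)\]")


def calculate(expr: str) -> float:
    """Evaluate + - * / over numeric literals without calling eval()."""
    def _eval(node: ast.AST) -> float:
        if isinstance(node, ast.Constant) and isinstance(node.value, (int, float)):
            return node.value
        if isinstance(node, ast.BinOp) and type(node.op) in _OPS:
            return _OPS[type(node.op)](_eval(node.left), _eval(node.right))
        raise ValueError(f"unsupported expression: {expr!r}")
    return _eval(ast.parse(expr, mode="eval").body)


def insert_tool_results(text: str) -> str:
    # Direct insertion: replace each call marker with the tool's output, rounded to 2 decimals.
    return CALL_PATTERN.sub(lambda m: str(round(calculate(m.group(1)), 2)), text)


draft = "Out of 1400 participants, 400 (or [Calculator(400/1400)]) passed the test."
print(insert_tool_results(draft))
# → Out of 1400 participants, 400 (or 0.29) passed the test.
```

The information-integration methods listed next instead feed tool output back to the model so it can rewrite or summarize it, which matters when raw outputs are long or noisy.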

Information Integration Methods

TPE: Towards Better Compositional Reasoning over Conceptual Tools with Multi-persona Collaboration, Preprint 2023. [Paper]

RECOMP: Improving Retrieval-Augmented LMs with Compression and Selective Augmentation, ICLR 2024. [Paper]

Learning to Use Tools via Cooperative and Interactive Agents, Preprint 2024. [Paper]

Benchmarks

| Benchmark | Reference | Description | #Tools | #Instances | Link | Release Time |
|---|---|---|---|---|---|---|
| API-Bank | [Paper] | Assessing the existing LLMs’ capabilities in planning, retrieving, and calling APIs. | 73 | 314 | [Repo] | 2023-04 |
| APIBench | [Paper] | A comprehensive benchmark constructed from TorchHub, TensorHub, and HuggingFace API Model Cards. | 1,645 | 16,450 | [Repo] | 2023-05 |
| ToolBench1 | [Paper] | A tool manipulation benchmark consisting of diverse software tools for real-world tasks. | 232 | 2,746 | [Repo] | 2023-05 |
| ToolAlpaca | [Paper] | Evaluating the ability of LLMs to utilize previously unseen tools without specific training. | 426 | 3,938 | [Repo] | 2023-06 |
| RestBench | [Paper] | A high-quality benchmark consisting of two real-world scenarios and human-annotated instructions with gold solution paths. | 94 | 157 | [Repo] | 2023-06 |
| ToolBench2 | [Paper] | An instruction-tuning dataset for tool use, constructed automatically using ChatGPT. | 16,464 | 126,486 | [Repo] | 2023-07 |
| MetaTool | [Paper] | A benchmark designed to evaluate whether LLMs have tool usage awareness and can correctly choose tools. | 199 | 21,127 | [Repo] | 2023-10 |
| TaskBench | [Paper] | A benchmark designed to evaluate the capability of LLMs from different aspects, including task decomposition, tool invocation, and parameter prediction. | 103 | 28,271 | [Repo] | 2023-11 |
| T-Eval | [Paper] | Evaluating the tool-utilization capability step by step. | 15 | 533 | [Repo] | 2023-12 |
| ToolEyes | [Paper] | A fine-grained system tailored for the evaluation of LLMs’ tool learning capabilities in authentic scenarios. | 568 | 382 | [Repo] | 2024-01 |
| UltraTool | [Paper] | A novel benchmark designed to improve and evaluate LLMs’ ability in tool utilization within real-world scenarios. | 2,032 | 5,824 | [Repo] | 2024-01 |
| API-BLEND | [Paper] | A large corpus for training and systematic testing of tool-augmented LLMs. | - | 189,040 | [Repo] | 2024-02 |
| Seal-Tools | [Paper] | Contains hard instances that call multiple tools to complete the job, some of which involve nested tool calls. | 4,076 | 14,076 | [Repo] | 2024-05 |
| ToolQA | [Paper] | Designed to faithfully evaluate LLMs’ ability to use external tools for question answering. (QA) | 13 | 1,530 | [Repo] | 2023-06 |
| ToolEmu | [Paper] | A framework that uses an LM to emulate tool execution and enables scalable testing of LM agents against a diverse range of tools and scenarios. (Safety) | 311 | 144 | [Repo] | 2023-09 |
| ToolTalk | [Paper] | A benchmark consisting of complex user intents requiring multi-step tool usage specified through dialogue. (Conversation) | 28 | 78 | [Repo] | 2023-11 |
| VIoT | [Paper] | A benchmark that includes a training dataset and established performance metrics for 11 representative vision models, categorized into three groups using semi-automated annotations. (VIoT) | 11 | 1,841 | [Repo] | 2023-12 |
| RoTBench | [Paper] | A multi-level benchmark for evaluating the robustness of LLMs in tool learning. (Robustness) | 568 | 105 | [Repo] | 2024-01 |
| MLLM-Tool | [Paper] | A system incorporating open-source LLMs and multimodal encoders so that the learnt LLMs can be conscious of multi-modal input instructions and then select the function-matched tool correctly. (Multi-modal) | 932 | 11,642 | [Repo] | 2024-01 |
| ToolSword | [Paper] | A comprehensive framework dedicated to meticulously investigating safety issues linked to LLMs in tool learning. (Safety) | 100 | 440 | [Repo] | 2024-02 |
| SciToolBench | [Paper] | Spanning five scientific domains to evaluate LLMs’ abilities with tool assistance. (Sci-Reasoning) | 2,446 | 856 | [Repo] | 2024-02 |
| InjecAgent | [Paper] | A benchmark designed to assess the vulnerability of tool-integrated LLM agents to indirect prompt injection (IPI) attacks. (Safety) | 17 | 1,054 | [Repo] | 2024-02 |
| StableToolBench | [Paper] | A benchmark evolving from ToolBench, proposing a virtual API server and stable evaluation system. (Stable) | 16,464 | 126,486 | [Repo] | 2024-03 |
| m&m's | [Paper] | A benchmark containing 4K+ multi-step multi-modal tasks involving 33 tools that include multi-modal models, public APIs, and image processing modules. (Multi-modal) | 33 | 4,427 | [Repo] | 2024-03 |
| GeoLLM-QA | [Paper] | A novel benchmark of 1,000 diverse tasks, designed to capture complex remote sensing (RS) workflows where LLMs handle complex data structures, nuanced reasoning, and interactions with dynamic user interfaces. (Remote Sensing) | 117 | 1,000 | [Repo] | 2024-04 |
| ToolLens | [Paper] | Includes concise yet intentionally multifaceted queries that better mimic real-world user interactions. (Tool Retrieval) | 464 | 18,770 | [Repo] | 2024-05 |
| SoAyBench | [Paper] | A Solution-based LLM API-using Methodology for Academic Information Seeking. | 7 | 792 | [Repo], [HF] | 2024-05 |
| ShortcutsBench | [Paper] | A Large-Scale Real-world Benchmark for API-based Agents. | 1,414 | 7,627 | [Repo] | 2024-07 |
| GTA | [Paper] | A Benchmark for General Tool Agents. | 14 | 229 | [Repo] | 2024-07 |
| WTU-Eval | [Paper] | A Whether-or-Not Tool Usage Evaluation Benchmark for Large Language Models. | 4 | 916 | [Repo] | 2024-07 |
| AppWorld | [Paper] | A collection of complex everyday tasks requiring interactive coding with API calls. | 457 | 750 | [Repo] | 2024-07 |
| ToolSandbox | [Paper] | A stateful, conversational, and interactive tool-use benchmark. | 34 | 1,032 | [Repo] | 2024-08 |
| CToolEval | [Paper] | A benchmark designed to evaluate LLMs in the context of Chinese societal applications. | 27 | 398 | [Repo] | 2024-08 |
| NoisyToolBench | [Paper] | Includes a collection of provided APIs, ambiguous queries, anticipated questions for clarification, and the corresponding responses. | - | 200 | [Repo] | 2024-09 |

Evaluation

Task Planning

Tool Usage Awareness

MetaTool Benchmark: Deciding Whether to Use Tools and Which to Use, ICLR 2024. [Paper]

Can Tool-augmented Large Language Models be Aware of Incomplete Conditions?, Preprint 2024. [Paper]

Pass Rate & Win Rate

ToolLLM: Facilitating Large Language Models to Master 16000+ Real-world APIs, ICLR 2024. [Paper]

Accuracy

T-Eval: Evaluating the Tool Utilization Capability of Large Language Models Step by Step, ICLR 2024. [Paper]

RestGPT: Connecting Large Language Models with Real-World RESTful APIs, Preprint 2023. [Paper]

A Solution-based LLM API-using Methodology for Academic Information Seeking, Preprint 2024. [Paper]

Tool Selection

Precision

ShortcutsBench: A Large-Scale Real-world Benchmark for API-based Agents, Preprint 2024. [Paper]

Recall

Recall, precision and average precision, Department of Statistics and Actuarial Science 2004. [Paper]

NDCG

Cumulated gain-based evaluation of IR techniques, TOIS 2002. [Paper]

COMP

COLT: Towards Completeness-Oriented Tool Retrieval for Large Language Models, CIKM 2024. [Paper]
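The tool-selection metrics above can be computed per query from a ranked list of retrieved tools and the set of tools the query actually needs. A minimal sketch, assuming binary relevance (a tool is either required or not); `comp_at_k` follows our reading of COLT's completeness metric (it scores 1 only when every required tool is in the top k), and the tool names are hypothetical. Benchmark-level scores are the mean over queries.

```python
import math


def precision_at_k(ranked: list[str], relevant: set[str], k: int) -> float:
    # Fraction of the top-k retrieved tools that are required.
    return sum(tool in relevant for tool in ranked[:k]) / k


def recall_at_k(ranked: list[str], relevant: set[str], k: int) -> float:
    # Fraction of the required tools that appear in the top k.
    return sum(tool in relevant for tool in ranked[:k]) / len(relevant)


def ndcg_at_k(ranked: list[str], relevant: set[str], k: int) -> float:
    # Binary relevance: a required tool at 0-based position i contributes 1 / log2(i + 2).
    dcg = sum(1.0 / math.log2(i + 2) for i, tool in enumerate(ranked[:k]) if tool in relevant)
    # Ideal DCG: all required tools (up to k of them) ranked first.
    idcg = sum(1.0 / math.log2(i + 2) for i in range(min(len(relevant), k)))
    return dcg / idcg


def comp_at_k(ranked: list[str], relevant: set[str], k: int) -> float:
    # Completeness: 1 only if every required tool appears in the top k.
    return 1.0 if relevant.issubset(ranked[:k]) else 0.0


ranked = ["search_web", "get_weather", "calendar", "send_email"]
relevant = {"get_weather", "send_email"}
for metric in (precision_at_k, recall_at_k, ndcg_at_k, comp_at_k):
    print(metric.__name__, round(metric(ranked, relevant, k=3), 3))
```

With `k=3`, recall is 0.5 but COMP is 0, because `send_email` sits at rank 4. This gap is what a completeness-oriented metric is meant to expose for queries that need several tools.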

Tool Calling

Consistent with stipulations

T-Eval: Evaluating the Tool Utilization Capability of Large Language Models Step by Step, Preprint 2024. [Paper]

Planning and Editing What You Retrieve for Enhanced Tool Learning, NAACL 2024. [Paper]

ToolEyes: Fine-Grained Evaluation for Tool Learning Capabilities of Large Language Models in Real-world Scenarios, Preprint 2024. [Paper]

ShortcutsBench: A Large-Scale Real-world Benchmark for API-based Agents, Preprint 2024. [Paper]
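A check that a tool call is "consistent with stipulations" can be pictured as a validator comparing the generated call against the tool's documented parameters. A minimal sketch, assuming a hypothetical JSON call format (`{"name": ..., "arguments": {...}}`) and a hypothetical `get_weather` specification; the benchmarks cited above each define their own formats and criteria.

```python
import json

# Hypothetical spec: tool name -> {parameter name: (expected type, required?)}
TOOL_SPECS = {
    "get_weather": {"city": (str, True), "days": (int, False)},
}


def check_call(raw_call: str, specs: dict = TOOL_SPECS) -> list[str]:
    """Return the list of violations; an empty list means the call follows the spec."""
    try:
        call = json.loads(raw_call)
    except json.JSONDecodeError as exc:
        return [f"invalid JSON: {exc.msg}"]
    if not isinstance(call, dict):
        return ["call must be a JSON object"]
    name = call.get("name")
    if not isinstance(name, str) or name not in specs:
        return [f"unknown tool: {name!r}"]
    args = call.get("arguments", {})
    if not isinstance(args, dict):
        return ["arguments must be a JSON object"]
    spec = specs[name]
    errors = []
    for param, (expected_type, required) in spec.items():
        if param not in args:
            if required:
                errors.append(f"missing required parameter: {param}")
            continue
        value = args[param]
        # bool is a subclass of int in Python, so reject it explicitly for non-bool parameters.
        if not isinstance(value, expected_type) or (isinstance(value, bool) and expected_type is not bool):
            errors.append(f"wrong type for {param}: expected {expected_type.__name__}")
    errors.extend(f"unexpected parameter: {param}" for param in args if param not in spec)
    return errors


print(check_call('{"name": "get_weather", "arguments": {"city": "Paris", "days": 3}}'))
print(check_call('{"name": "get_weather", "arguments": {"days": "3", "unit": "C"}}'))
```

Returning every violation rather than stopping at the first one makes the checker usable as a fine-grained score (e.g., the fraction of calls with no violations) instead of only a pass/fail flag.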

Response Generation

Parameter Filling

Precision

ShortcutsBench: A Large-Scale Real-world Benchmark for API-based Agents, Preprint 2024. [Paper]

Challenges and Future Directions

- High Latency in Tool Learning

- Rigorous and Comprehensive Evaluation

- Comprehensive and Accessible Tools

- Safe and Robust Tool Learning

- Unified Tool Learning Framework

- Real-World Benchmark for Tool Learning

- Multi-Modal Tool Learning

For Tasks:

Click tags to check more tools for each tasksFor Jobs:

Alternative AI tools for LLM-Tool-Survey

Similar Open Source Tools

LLM-Tool-Survey

This repository contains a collection of papers related to tool learning with large language models (LLMs). The papers are organized according to the survey paper 'Tool Learning with Large Language Models: A Survey'. The survey focuses on the benefits and implementation of tool learning with LLMs, covering aspects such as task planning, tool selection, tool calling, response generation, benchmarks, evaluation, challenges, and future directions in the field. It aims to provide a comprehensive understanding of tool learning with LLMs and inspire further exploration in this emerging area.

LLM-Agent-Survey

LLM-Agent-Survey is a comprehensive repository that provides a curated list of papers related to Large Language Model (LLM) agents. The repository categorizes papers based on LLM-Profiled Roles and includes high-quality publications from prestigious conferences and journals. It aims to offer a systematic understanding of LLM-based agents, covering topics such as tool use, planning, and feedback learning. The repository also includes unpublished papers with insightful analysis and novelty, marked for future updates. Users can explore a wide range of surveys, tool use cases, planning workflows, and benchmarks related to LLM agents.

LLM-on-Tabular-Data-Prediction-Table-Understanding-Data-Generation

This repository serves as a comprehensive survey on the application of Large Language Models (LLMs) on tabular data, focusing on tasks such as prediction, data generation, and table understanding. It aims to consolidate recent progress in this field by summarizing key techniques, metrics, datasets, models, and optimization approaches. The survey identifies strengths, limitations, unexplored territories, and gaps in the existing literature, providing insights for future research directions. It also offers code and dataset references to empower readers with the necessary tools and knowledge to address challenges in this rapidly evolving domain.

ABigSurveyOfLLMs

ABigSurveyOfLLMs is a repository that compiles surveys on Large Language Models (LLMs) to provide a comprehensive overview of the field. It includes surveys on various aspects of LLMs such as transformers, alignment, prompt learning, data management, evaluation, societal issues, safety, misinformation, attributes of LLMs, efficient LLMs, learning methods for LLMs, multimodal LLMs, knowledge-based LLMs, extension of LLMs, LLMs applications, and more. The repository aims to help individuals quickly understand the advancements and challenges in the field of LLMs through a collection of recent surveys and research papers.

Foundations-of-LLMs

Foundations-of-LLMs is a comprehensive book aimed at readers interested in large language models, providing systematic explanations of foundational knowledge and introducing cutting-edge technologies. The book covers traditional language models, evolution of large language model architectures, prompt engineering, parameter-efficient fine-tuning, model editing, and retrieval-enhanced generation. Each chapter uses an animal as a theme to explain specific technologies, enhancing readability. The content is based on the author team's exploration and understanding of the field, with continuous monthly updates planned. The book includes a 'Paper List' for each chapter to track the latest advancements in related technologies.

Video-ChatGPT

Video-ChatGPT is a video conversation model that aims to generate meaningful conversations about videos by combining large language models with a pretrained visual encoder adapted for spatiotemporal video representation. It introduces high-quality video-instruction pairs, a quantitative evaluation framework for video conversation models, and a unique multimodal capability for video understanding and language generation. The tool is designed to excel in tasks related to video reasoning, creativity, spatial and temporal understanding, and action recognition.

MedLLMsPracticalGuide

This repository serves as a practical guide for Medical Large Language Models (Medical LLMs) and provides resources, surveys, and tools for building, fine-tuning, and utilizing LLMs in the medical domain. It covers a wide range of topics including pre-training, fine-tuning, downstream biomedical tasks, clinical applications, challenges, future directions, and more. The repository aims to provide insights into the opportunities and challenges of LLMs in medicine and serve as a practical resource for constructing effective medical LLMs.

OpenRedTeaming

OpenRedTeaming is a repository focused on red teaming generative models, specifically large language models (LLMs). It provides a comprehensive survey of potential attacks on GenAI and of robust safeguards, covering attack strategies, evaluation metrics, benchmarks, defensive approaches, and taxonomies of LLM risks. The repository also implements over 30 automated red-teaming methods. The goal is to understand the vulnerabilities of large language models and to develop defenses against adversarial attacks on them.

Awesome-LLM-in-Social-Science

Awesome-LLM-in-Social-Science is a repository that compiles papers examining Large Language Models (LLMs) from a social science perspective. It includes papers on evaluating and aligning LLMs, on LLM-based simulation, and on using LLMs as tools to enhance social science research. Papers are categorized by their focus, such as attitudes, opinions, values, personality, and morality. The repository aims to contribute to discussions of the potential and challenges of using LLMs in social science research.

Awesome_papers_on_LLMs_detection

This repository is a curated list of papers on detecting content generated by Large Language Models (LLMs). It includes the latest research papers on detection methods, datasets, attacks, and more, and is regularly updated with the most recent work in the field.

FlowDown-App

FlowDown is a blazing-fast, smooth client app for using AI/LLMs. It is lightweight and efficient, with markdown support, universal compatibility, fast text rendering, automated chat titles, and privacy by design. Two editions are available, FlowDown and FlowDown Community, offering features such as chatting with AI, fast markdown rendering, privacy by design, bring-your-own LLM, offline LLMs with MLX, visual LLMs, web search, attachments, and language localization. FlowDown Community is now open source, enabling developers to build interactive and responsive AI client apps.

AI-LLM-ML-CS-Quant-Readings

AI-LLM-ML-CS-Quant-Readings is a repository of notes on Artificial Intelligence, Large Language Models, Machine Learning, Computer Science, and Quantitative Finance. It contains a wide range of resources, including theory, applications, conferences, essentials, foundations, system design, computer systems, finance, and job interview questions. Topics include AI systems, multi-agent systems, deep learning theory and applications, system design interviews, C++ design patterns, high-frequency finance, algorithmic trading, stochastic volatility modeling, and quantitative investing. It is a comprehensive collection of materials for anyone interested in these fields.

AIGC-Interview-Book

AIGC-Interview-Book is the ultimate guide to AIGC algorithm and development job interviews, covering a wide range of topics such as AIGC, traditional deep learning, autonomous driving, AI agents, machine learning, computer vision, natural language processing, reinforcement learning, embodied intelligence, the metaverse, AGI, Python, Java, C/C++, Go, embedded systems, front-end, back-end, testing, and operations. The repository consolidates industry experience and insights from frontline AIGC algorithm experts, providing resources on the AIGC knowledge framework, internal referrals at major AIGC companies, interview experiences, company guides, AI campus recruitment schedules, interview preparation, salary insights, a coding guide, and job-seeking Q&A. It serves as a valuable resource for AIGC professionals, students, and job seekers, offering guidance for career advancement and job interviews in the AIGC field.

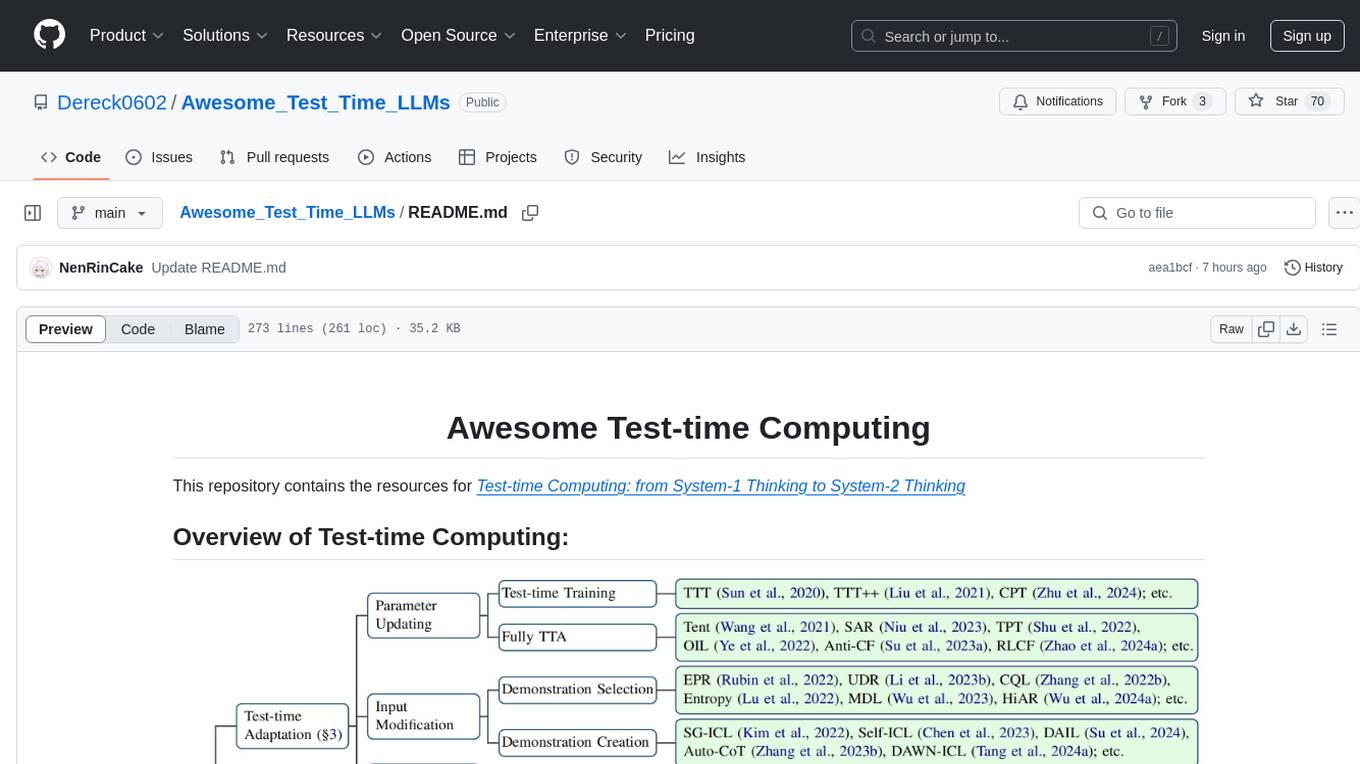

Awesome_Test_Time_LLMs

This repository focuses on test-time computing, exploring strategies such as test-time adaptation, input modification, representation editing, output calibration, test-time reasoning, and search. It covers topics like self-supervised test-time training, in-context learning, activation steering, nearest neighbor models, reward modeling, and multimodal reasoning. The repository provides papers and code for researchers and practitioners interested in enhancing the reasoning capabilities of large language models.

llm-misinformation-survey

The 'llm-misinformation-survey' repository accompanies the survey on combating misinformation in the age of Large Language Models (LLMs). It explores the opportunities and challenges of using LLMs to combat misinformation, covering the history of combating misinformation, current efforts, and the future outlook. The repository serves as a resource hub for the initiative 'LLMs Meet Misinformation' and welcomes contributions of relevant research papers and resources. The goal is to facilitate interdisciplinary efforts to combat LLM-generated misinformation and to promote the responsible use of LLMs in fighting misinformation.

ClashRoyaleBuildABot

Clash Royale Build-A-Bot is a project that lets users build their own bot to play Clash Royale. It provides an advanced state generator that accurately returns detailed information about the game state. The project includes tutorials on setting up the environment, building a basic bot, and understanding state generation. Recent updates include replacing the YOLOv5 unit model with YOLOv8 and improving features such as placement and elixir management. The roadmap includes labeling more images of diverse cards, adding a tracking layer for unit predictions, publishing tutorials on Q-learning and imitation learning, releasing the YOLOv5 training notebook, implementing chest opening and card upgrading, and creating a leaderboard for the best bots built with this repository.

For similar tasks

Awesome-LLM-RAG

This repository, Awesome-LLM-RAG, records advanced papers on Retrieval Augmented Generation (RAG) in Large Language Models (LLMs). It serves as a resource hub where researchers can promote their RAG-related work by submitting paper information through pull requests. The repository covers topics such as workshops, tutorials, papers, surveys, benchmarks, retrieval-enhanced LLMs, RAG instruction tuning, RAG in-context learning, RAG embeddings, RAG simulators, RAG search, RAG long-text and memory, RAG evaluation, RAG optimization, and RAG applications.

Awesome_LLM_System-PaperList

Since the emergence of ChatGPT in 2022, accelerating Large Language Models has become increasingly important. This is a list of papers on LLM inference and serving.

Awesome-CVPR2024-ECCV2024-AIGC

A collection of papers and code for CVPR 2024 AIGC. This repository compiles and organizes research papers and code related to AIGC (AI-Generated Content) at CVPR 2024 and ECCV 2024. It serves as a valuable resource for anyone interested in the latest advances in computer vision and artificial intelligence. Users can find a curated list of papers with accompanying code repositories for further exploration and research. The repository encourages community collaboration and contributions through stars, forks, and pull requests.

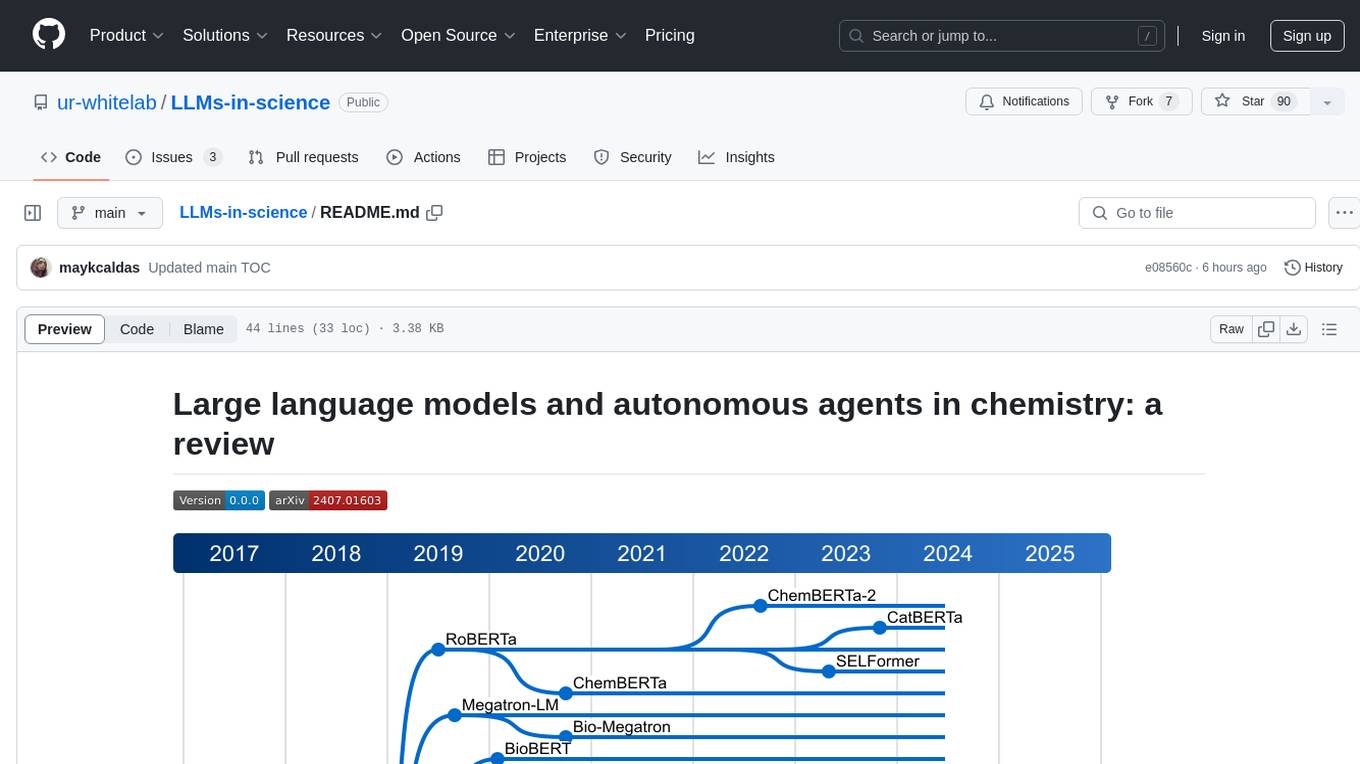

LLMs-in-science

The 'LLMs-in-science' repository is a collaborative environment for organizing papers on large language models (LLMs) and autonomous agents in chemistry. The goal is to discuss trending topics, challenges, and the potential of artificial intelligence to support scientific discovery. The repository aims to maintain a systematic structure of the field and welcomes community contributions to keep the content up to date and relevant.

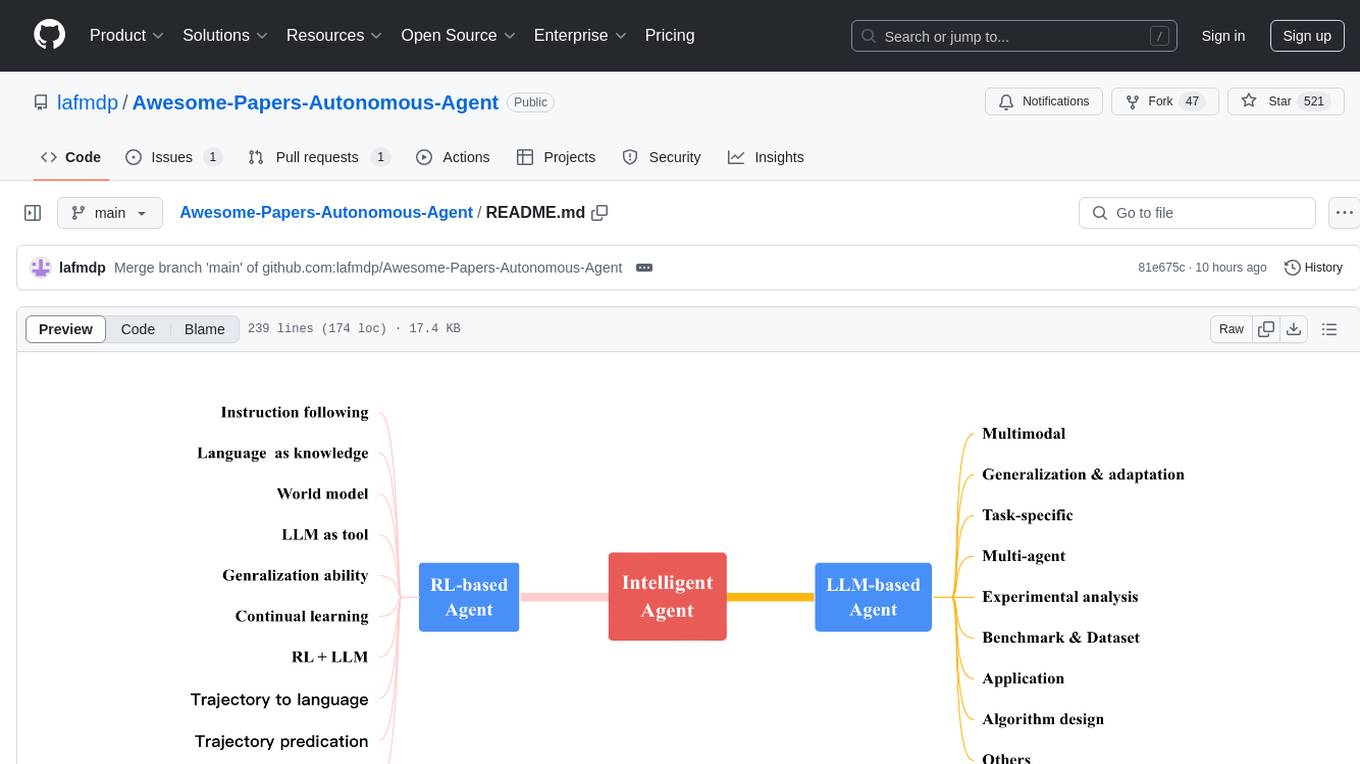

Awesome-Papers-Autonomous-Agent

Awesome-Papers-Autonomous-Agent is a curated collection of recent papers on autonomous agents, with a particular interest in RL-based and LLM-based agents. The repository aims to be a comprehensive resource for researchers and practitioners interested in intelligent agents that can achieve goals, acquire knowledge, and continually improve. The collection includes papers on topics such as instruction following, building agents based on world models, using language as knowledge, leveraging LLMs as a tool, generalization across tasks, continual learning, combining RL and LLMs, transformer-based policies, trajectory to language, trajectory prediction, multimodal agents, training LLMs for generalization and adaptation, task-specific design, multi-agent systems, experimental analysis, benchmarking, applications, and algorithm design.

awesome-lifelong-llm-agent

This repository is a collection of papers and resources related to Lifelong Learning of Large Language Model (LLM) based Agents. It focuses on continual learning and incremental learning of LLM agents, identifying key modules such as Perception, Memory, and Action. The repository serves as a roadmap for understanding lifelong learning in LLM agents and provides a comprehensive overview of related research and surveys.

LLM-Agent-Survey

LLM-Agent-Survey is a comprehensive repository that provides a curated list of papers related to Large Language Model (LLM) agents. The repository categorizes papers based on LLM-Profiled Roles and includes high-quality publications from prestigious conferences and journals. It aims to offer a systematic understanding of LLM-based agents, covering topics such as tool use, planning, and feedback learning. The repository also includes unpublished papers with insightful analysis and novelty, marked for future updates. Users can explore a wide range of surveys, tool use cases, planning workflows, and benchmarks related to LLM agents.

For similar jobs

weave

Weave is a toolkit for developing Generative AI applications, built by Weights & Biases. With Weave, you can log and debug language model inputs, outputs, and traces; build rigorous, apples-to-apples evaluations for language model use cases; and organize all the information generated across the LLM workflow, from experimentation to evaluations to production. Weave aims to bring rigor, best practices, and composability to the inherently experimental process of developing Generative AI software, without introducing cognitive overhead.

LLMStack

LLMStack is a no-code platform for building generative AI agents, workflows, and chatbots. It lets users connect their own data, internal tools, and GPT-powered models without writing any code. LLMStack can be deployed to the cloud or on-premises and can be accessed via an HTTP API or triggered from Slack or Discord.

VisionCraft

The VisionCraft API is a free API for using over 100 different AI models, covering everything from images to sound.

kaito

Kaito is an operator that automates AI/ML inference model deployment in a Kubernetes cluster. It manages large model files using container images, removes the need to tune deployment parameters for GPU hardware by providing preset configurations, auto-provisions GPU nodes based on model requirements, and hosts large model images in the public Microsoft Container Registry (MCR) if the license allows. Kaito greatly simplifies the workflow of onboarding large AI inference models in Kubernetes.

PyRIT

PyRIT is an open-access automation framework designed to help security professionals and ML engineers red team foundation models and their applications. It automates AI red teaming tasks so that operators can focus on more complicated and time-consuming work, and it can identify security harms such as misuse (e.g., malware generation, jailbreaking) and privacy harms (e.g., identity theft). The goal is to give researchers a baseline of how well their model and entire inference pipeline perform against different harm categories, and to let them compare that baseline with future iterations of their model. This gives them empirical data on how well their model performs today and lets them detect any performance degradation introduced by future changes.

tabby

Tabby is a self-hosted AI coding assistant, offering an open-source and on-premises alternative to GitHub Copilot. Its key features include:

* Self-contained, with no need for a DBMS or cloud service.
* OpenAPI interface, easy to integrate with existing infrastructure (e.g., a Cloud IDE).
* Support for consumer-grade GPUs.

spear

SPEAR (Simulator for Photorealistic Embodied AI Research) is a powerful tool for training embodied agents. It features 300 unique virtual indoor environments with 2,566 unique rooms and 17,234 unique objects that can be manipulated individually. Each environment is designed by a professional artist and features detailed geometry, photorealistic materials, and a unique floor plan and object layout. SPEAR is implemented as Unreal Engine assets and provides an OpenAI Gym interface for interacting with the environments via Python.

Magick

Magick is a groundbreaking visual AIDE (Artificial Intelligence Development Environment) for no-code data pipelines and multimodal agents. Magick can connect to other services and comes with nodes and templates well-suited for intelligent agents, chatbots, complex reasoning systems and realistic characters.