Awesome_papers_on_LLMs_detection

The latest papers on the detection of LLM-generated text and code

Stars: 147

This repository is a curated list of papers on detecting content generated by Large Language Models (LLMs). It includes the latest research papers covering detection methods, datasets, attacks, and more. The repository is regularly updated to include the most recent papers in the field.

README:

This repo is a curated list of papers on the detection of LLM-generated content. It includes the latest papers on detection methods, datasets, attacks, and more. We will update this repo regularly to include the most recent papers.

- Awesome_papers_on_LLMs_detection

- Contents

- Training-based Methods

- Zero-shot Methods

- Watermarking

- Attack

- Datasets

- Misc

- EAGLE: A Domain Generalization Framework for AI-generated Text Detection [pdf] 03/25/2024

- DETECTING MACHINE-GENERATED TEXTS BY MULTI-POPULATION AWARE OPTIMIZATION FOR MAXIMUM MEAN DISCREPANCY [pdf] 02/27/2024

- Threads of Subtlety: Detecting Machine-Generated Texts Through Discourse Motifs [pdf] 02/19/2024

- LLM-Detector: Improving AI-Generated Chinese Text Detection with Open-Source LLM Instruction Tuning [pdf] 02/04/2024

- FEW-SHOT DETECTION OF MACHINE-GENERATED TEXT USING STYLE REPRESENTATIONS [pdf] 01/12/2024

- Token Prediction as Implicit Classification to Identify LLM-Generated Text [pdf] Nov. 15, 2023

- AuthentiGPT: Detecting Machine-Generated Text via Black-Box Language Models Denoising [pdf] Nov. 14, 2023

- G3Detector: General GPT-Generated Text Detector [pdf]

- GPT-Sentinel: Distinguishing Human and ChatGPT Generated Content [pdf]

- GPT Paternity Test: GPT Generated Text Detection with GPT Genetic Inheritance [pdf]

- OpenAI Text Classifier [link]

- GPTZero [link]

- CoCo: Coherence-Enhanced Machine-Generated Text Detection Under Data Limitation With Contrastive Learning [pdf]

- LLMDet: A Large Language Models Detection Tool [pdf]

- Multiscale Positive-Unlabeled Detection of AI-Generated Texts [pdf]

- RADAR: Robust AI-Text Detection via Adversarial Learning [pdf]

- On the Zero-Shot Generalization of Machine-Generated Text Detectors [pdf]

- ConDA: Contrastive Domain Adaptation for AI-generated Text Detection [pdf]

- From Text to Source: Results in Detecting Large Language Model-Generated Content [pdf]

- Ghostbuster: Detecting Text Ghostwritten by Large Language Models [pdf]

- Deepfake Text Detection in the Wild [pdf]

- Automatic Detection of Generated Text is Easiest when Humans are Fooled [pdf]

- SeqXGPT: Sentence-Level AI-Generated Text Detection [pdf]

- Origin Tracing and Detecting of LLMs [pdf]

- GLTR: Statistical Detection and Visualization of Generated Text [pdf]

- Release strategies and the social impacts of language models [pdf]

- Ten Words Only Still Help: Improving Black-Box AI-Generated Text Detection via Proxy-Guided Efficient Re-Sampling [link] 15/02/2024

- Raidar: geneRative AI Detection viA Rewriting [link] 23/01/2024

- SPOTTING LLMS WITH BINOCULARS: ZERO-SHOT DETECTION OF MACHINE-GENERATED TEXT [link]

- DetectGPT: Zero-Shot Machine-Generated Text Detection using Probability Curvature [pdf]

- DNA-GPT: Divergent N-Gram Analysis for Training-Free Detection of GPT-Generated Text [pdf]

- Paraphrasing evades detectors of AI-generated text, but retrieval is an effective defense [pdf]

- Smaller Language Models are Better Black-box Machine-Generated Text Detectors [pdf]

- Intrinsic Dimension Estimation for Robust Detection of AI-Generated Texts [pdf]

- Does DETECTGPT Fully Utilize Perturbation? Selective Perturbation on Model-Based Contrastive Learning Detector would be Better [pdf] 02/03/2024

- DetectLLM: Leveraging Log Rank Information for Zero-Shot Detection of Machine-Generated Text [pdf]

- Fast-DetectGPT: Efficient Zero-Shot Detection of Machine-Generated Text via Conditional Probability Curvature [pdf]

- GPT-who: An Information Density-based Machine-Generated Text Detector [pdf]

- Efficient Detection of LLM-generated Texts with a Bayesian Surrogate Model [pdf]

- Detecting Fake Content with Relative Entropy Scoring [pdf]

- Computer-generated text detection using machine learning: A systematic review [pdf]

- GLTR: Statistical Detection and Visualization of Generated Text [pdf]

- Watermarking Text Generated by Black-Box Language Models [pdf]

- Tracing text provenance via context-aware lexical substitution [pdf]

- Natural language watermarking and tamperproofing [pdf]

- Natural language watermarking [pdf]

- Natural language watermarking via morphosyntactic alterations [pdf]

- The hiding virtues of ambiguity: quantifiably resilient watermarking of natural language text through synonym substitutions [pdf]

- CODEIP: A Grammar-Guided Multi-Bit Watermark for Large Language Models of Code [pdf] 04/25/2024

- Watermark-based Detection and Attribution of AI-Generated Content [pdf] 04/07/2024

- A Statistical Framework of Watermarks for Large Language Models: Pivot, Detection Efficiency and Optimal Rules [pdf] 04/01/2024

- WaterJudge: Quality-Detection Trade-off when Watermarking Large Language Models [pdf] 03/29/2024

- Duwak: Dual Watermarks in Large Language Models [pdf] 03/20/2024

- WaterMax: breaking the LLM watermark detectability-robustness-quality trade-off [pdf] 03/11/2024

- Token-Specific Watermarking with Enhanced Detectability and Semantic Coherence for Large Language Models [link] 02/28/2024

- EmMark: Robust Watermarks for IP Protection of Embedded Quantized Large Language Models [link] 02/28/2024

- Multi-Bit Distortion-Free Watermarking for Large Language Models [link] 02/27/2024

- GumbelSoft: Diversified Language Model Watermarking via the GumbelMax-trick [link] 20/02/2024

- k-SEMSTAMP: A Clustering-Based Semantic Watermark for Detection of Machine-Generated Text [link] 19/02/2024

- Permute-and-Flip: An optimally robust and watermarkable decoder for LLMs [link] 08/02/2024

- Provably Robust Multi-bit Watermarking for AI-generated Text via Error Correction Code [link] 30/01/2024

- Adaptive Text Watermark for Large Language Models [pdf] 26/01/2024

- Optimizing watermarks for large language models [pdf] 31/12/2023

- Towards Optimal Statistical Watermarking [pdf] 13/12/2023

- ON THE LEARNABILITY OF WATERMARKS FOR LANGUAGE MODELS [pdf] 7/12/2023

- Mark My Words: Analyzing and Evaluating Language Model Watermarks [pdf] 3/12/2023

- I Know You Did Not Write That! A Sampling-Based Watermarking Method for Identifying Machine Generated Text [pdf] 30/11/2023

- TOWARDS CODABLE WATERMARKING FOR INJECTING MULTI-BIT INFORMATION TO LLM [pdf] 27/11/2023

- Improving the Generation Quality of Watermarked Large Language Models via Word Importance Scoring [pdf] 16/11/2023

- Performance Trade-offs of Watermarking Large Language Models [pdf] 16/11/2023

- X-Mark: Towards Lossless Watermarking Through Lexical Redundancy [pdf] 16/11/2023

- WaterBench: Towards Holistic Evaluation of Watermarks for Large Language Models [pdf] 13/11/2023

- Publicly Detectable Watermarking for Language Models [pdf] 25/10/2023

- Unbiased Watermark for Large Language Models [pdf] 18/10/2023

- A watermark for large language models [pdf]

- Undetectable Watermarks for Language Models [pdf]

- Provable Robust Watermarking for AI-Generated Text [pdf]

- Robust Distortion-free Watermarks for Language Models [pdf]

- SemStamp: A Semantic Watermark with Paraphrastic Robustness for Text Generation [pdf]

- DiPmark: A Stealthy, Efficient and Resilient Watermark for Large Language Models [pdf]

- Watermarking Conditional Text Generation for AI Detection: Unveiling Challenges and a Semantic-Aware Watermark Remedy [pdf]

- A Semantic Invariant Robust Watermark for Large Language Models [pdf]

- REMARK-LLM: A Robust and Efficient Watermarking Framework for Generative Large Language Models [pdf]

- Robust Multi-bit Natural Language Watermarking through Invariant Features [pdf]

- Advancing Beyond Identification: Multi-bit Watermark for Language Models [pdf]

- Three Bricks to Consolidate Watermarks for Large Language Models [pdf]

- My AI safety lecture for UT Effective Altruism [link]

- Zero-Shot Detection of Machine-Generated Codes [pdf]

- Who Wrote this Code? Watermarking for Code Generation [pdf]

- Humanizing Machine-Generated Content: Evading AI-Text Detection through Adversarial Attack [pdf] 04/01/2024

- Bypassing LLM Watermarks with Color-Aware Substitutions [pdf] 03/19/2024

- Watermark Stealing in Large Language Models [pdf] 02/29/2024

- Attacking LLM Watermarks by Exploiting Their Strengths [pdf] 02/27/2024

- Can Watermarks Survive Translation? On the Cross-lingual Consistency of Text Watermark for Large Language Models [pdf] 02/22/2024

- Machine-generated Text Localization [pdf] 02/19/2024

- Stumbling Blocks: Stress Testing the Robustness of Machine-Generated Text Detectors Under Attacks [pdf] 02/19/2024

- Authorship Obfuscation in Multilingual Machine-Generated Text Detection [pdf] 01/17/2024

- LANGUAGE MODEL DETECTORS ARE EASILY OPTIMIZED AGAINST [pdf] 11/28/2023

- A Ship of Theseus: Curious Cases of Paraphrasing in LLM-Generated Texts [pdf] 11/14/2023

- Watermarks in the Sand: Impossibility of Strong Watermarking for Generative Models [pdf] 11/8/2023

- Does Human Collaboration Enhance the Accuracy of Identifying LLM-Generated Deepfake Texts? [pdf]

- Paraphrasing evades detectors of AI-generated text, but retrieval is an effective defense [pdf]

- Red Teaming Language Model Detectors with Language Models [pdf]

- Paraphrase Detection: Human vs. Machine Content [pdf]

- Large Language Models can be Guided to Evade AI-Generated Text Detection [pdf]

- Counter Turing Test CT^2: AI-Generated Text Detection is Not as Easy as You May Think -- Introducing AI Detectability Index [pdf]

- How Reliable Are AI-Generated-Text Detectors? An Assessment Framework Using Evasive Soft Prompts [pdf]

- On the Reliability of Watermarks for Large Language Models [pdf]

- Spotting AI’s Touch: Identifying LLM-Paraphrased Spans in Text [pdf] 05/22/2024

- RAID: A Shared Benchmark for Robust Evaluation of Machine-Generated Text Detectors [pdf] 05/16/2024

- M4GT-Bench: Evaluation Benchmark for Black-Box Machine-Generated Text Detection [pdf] 02/19/2024

- How Close is ChatGPT to Human Experts? Comparison Corpus, Evaluation, and Detection [pdf]

- CHEAT: A Large-scale Dataset for Detecting ChatGPT-writtEn AbsTracts [pdf]

- Ghostbuster: Detecting Text Ghostwritten by Large Language Models [pdf]

- M4: Multi-generator, Multi-domain, and Multi-lingual Black-Box Machine-Generated Text Detection [pdf]

- GPT-Sentinel: Distinguishing Human and ChatGPT Generated Content [pdf]

- MGTBench: Benchmarking Machine-Generated Text Detection [pdf]

- HC3 Plus: A Semantic-Invariant Human ChatGPT Comparison Corpus [pdf]

- MULTITuDE: Large-Scale Multilingual Machine-Generated Text Detection Benchmark [pdf]

- TURINGBENCH: A Benchmark Environment for Turing Test in the Age of Neural Text Generation [pdf]

- Monitoring AI-Modified Content at Scale: A Case Study on the Impact of ChatGPT on AI Conference Peer Reviews [pdf] 03/13/2024

- A Survey of AI-generated Text Forensic Systems: Detection, Attribution, and Characterization [pdf] 03/05/2024

- Hidding the Ghostwriters: An Adversarial Evaluation of AI-Generated Student Essay Detection [pdf] 02/01/2024

- LLM - Detect AI Generated Text. Kaggle. [link]

- Can AI-Generated Text be Reliably Detected? [pdf]

- On the Possibilities of AI-Generated Text Detection [pdf]

- GPT detectors are biased against non-native English writers [pdf]

- ChatLog: Recording and Analyzing ChatGPT Across Time [pdf]

- On the Zero-Shot Generalization of Machine-Generated Text Detectors [pdf]

If you find this repo useful, please cite our work.

@article{yang2023survey,
  title={A Survey on Detection of LLMs-Generated Content},
  author={Yang, Xianjun and Pan, Liangming and Zhao, Xuandong and Chen, Haifeng and Petzold, Linda and Wang, William Yang and Cheng, Wei},
  journal={arXiv preprint arXiv:2310.15654},
  year={2023}
}

Click tags to check more tools for each tasksFor Jobs:

Alternative AI tools for Awesome_papers_on_LLMs_detection

Similar Open Source Tools

Awesome_papers_on_LLMs_detection

This repository is a curated list of papers focused on the detection of Large Language Models (LLMs)-generated content. It includes the latest research papers covering detection methods, datasets, attacks, and more. The repository is regularly updated to include the most recent papers in the field.

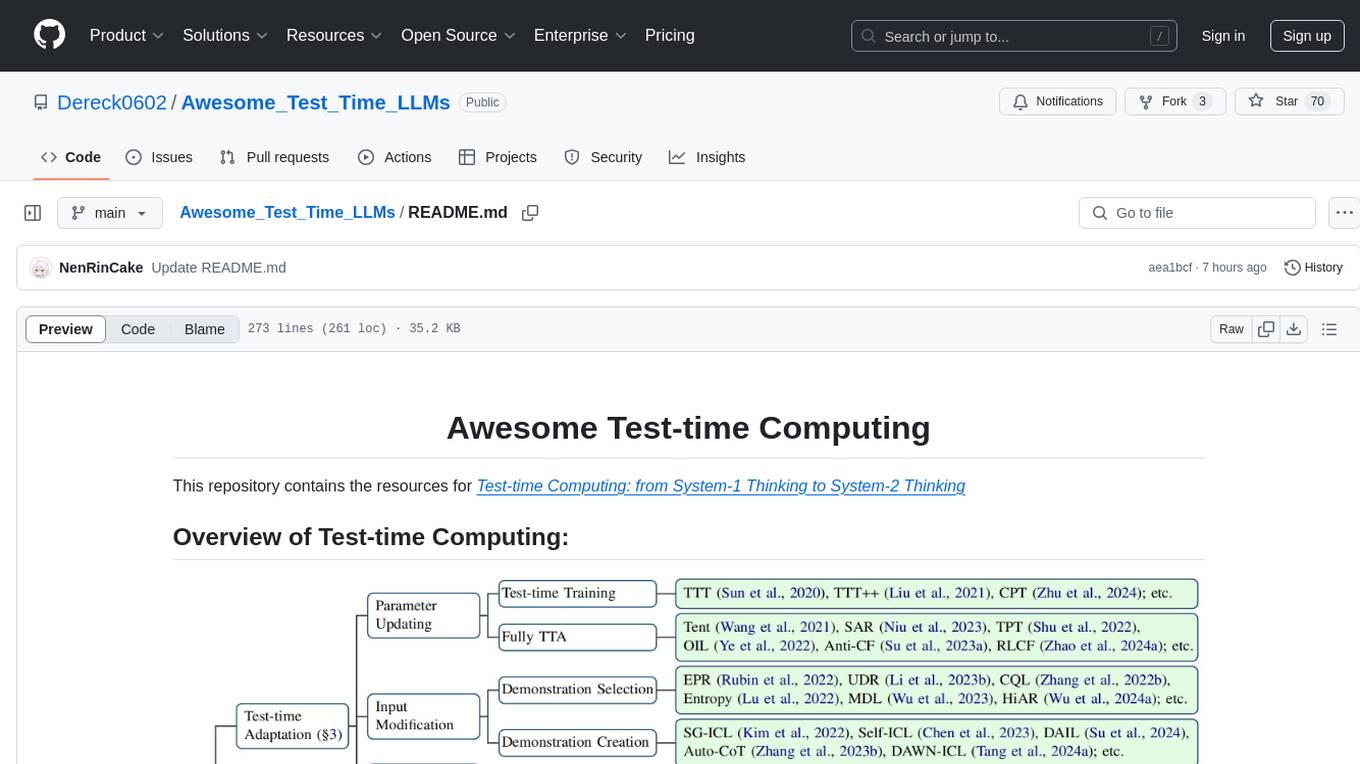

Awesome_Test_Time_LLMs

This repository focuses on test-time computing, exploring various strategies such as test-time adaptation, modifying the input, editing the representation, calibrating the output, test-time reasoning, and search strategies. It covers topics like self-supervised test-time training, in-context learning, activation steering, nearest neighbor models, reward modeling, and multimodal reasoning. The repository provides resources including papers and code for researchers and practitioners interested in enhancing the reasoning capabilities of large language models.

Awesome-LLM-Interpretability

Awesome-LLM-Interpretability is a curated list of materials related to LLM (Large Language Models) interpretability, covering tutorials, code libraries, surveys, videos, papers, and blogs. It includes resources on transformer mechanistic interpretability, visualization, interventions, probing, fine-tuning, feature representation, learning dynamics, knowledge editing, hallucination detection, and redundancy analysis. The repository aims to provide a comprehensive overview of tools, techniques, and methods for understanding and interpreting the inner workings of large language models.

awesome-and-novel-works-in-slam

This repository contains a curated list of cutting-edge works in Simultaneous Localization and Mapping (SLAM). It includes research papers, projects, and tools related to various aspects of SLAM, such as 3D reconstruction, semantic mapping, novel algorithms, large-scale mapping, and more. The repository aims to showcase the latest advancements in SLAM technology and provide resources for researchers and practitioners in the field.

MedLLMsPracticalGuide

This repository serves as a practical guide for Medical Large Language Models (Medical LLMs) and provides resources, surveys, and tools for building, fine-tuning, and utilizing LLMs in the medical domain. It covers a wide range of topics including pre-training, fine-tuning, downstream biomedical tasks, clinical applications, challenges, future directions, and more. The repository aims to provide insights into the opportunities and challenges of LLMs in medicine and serve as a practical resource for constructing effective medical LLMs.

oneclick-subtitles-generator

A comprehensive web application for auto-subtitling videos and audio, translating SRT files, generating AI narration with voice cloning, creating background images, and rendering professional subtitled videos. Designed for content creators, educators, and general users who need high-quality subtitle generation and video production capabilities.

OpenRedTeaming

OpenRedTeaming is a repository focused on red teaming for generative models, specifically large language models (LLMs). The repository provides a comprehensive survey on potential attacks on GenAI and robust safeguards. It covers attack strategies, evaluation metrics, benchmarks, and defensive approaches. The repository also implements over 30 auto red teaming methods. It includes surveys, taxonomies, attack strategies, and risks related to LLMs. The goal is to understand vulnerabilities and develop defenses against adversarial attacks on large language models.

awesome-LLM-game-agent-papers

This repository provides a comprehensive survey of research papers on large language model (LLM)-based game agents. LLMs are powerful AI models that can understand and generate human language, and they have shown great promise for developing intelligent game agents. This survey covers a wide range of topics, including adventure games, crafting and exploration games, simulation games, competition games, cooperation games, communication games, and action games. For each topic, the survey provides an overview of the state-of-the-art research, as well as a discussion of the challenges and opportunities for future work.

awesome_LLM-harmful-fine-tuning-papers

This repository is a comprehensive survey of harmful fine-tuning attacks and defenses for large language models (LLMs). It provides a curated list of must-read papers on the topic, covering various aspects such as alignment stage defenses, fine-tuning stage defenses, post-fine-tuning stage defenses, mechanical studies, benchmarks, and attacks/defenses for federated fine-tuning. The repository aims to keep researchers updated on the latest developments in the field and offers insights into the vulnerabilities and safeguards related to fine-tuning LLMs.

Paper-Reading-ConvAI

Paper-Reading-ConvAI is a repository that contains a list of papers, datasets, and resources related to Conversational AI, mainly encompassing dialogue systems and natural language generation. This repository is constantly updating.

ABigSurveyOfLLMs

ABigSurveyOfLLMs is a repository that compiles surveys on Large Language Models (LLMs) to provide a comprehensive overview of the field. It includes surveys on various aspects of LLMs such as transformers, alignment, prompt learning, data management, evaluation, societal issues, safety, misinformation, attributes of LLMs, efficient LLMs, learning methods for LLMs, multimodal LLMs, knowledge-based LLMs, extension of LLMs, LLMs applications, and more. The repository aims to help individuals quickly understand the advancements and challenges in the field of LLMs through a collection of recent surveys and research papers.

unilm

The 'unilm' repository is a collection of tools, models, and architectures for Foundation Models and General AI, focusing on tasks such as NLP, MT, Speech, Document AI, and Multimodal AI. It includes various pre-trained models, such as UniLM, InfoXLM, DeltaLM, MiniLM, AdaLM, BEiT, LayoutLM, WavLM, VALL-E, and more, designed for tasks like language understanding, generation, translation, vision, speech, and multimodal processing. The repository also features toolkits like s2s-ft for sequence-to-sequence fine-tuning and Aggressive Decoding for efficient sequence-to-sequence decoding. Additionally, it offers applications like TrOCR for OCR, LayoutReader for reading order detection, and XLM-T for multilingual NMT.

LLM-on-Tabular-Data-Prediction-Table-Understanding-Data-Generation

This repository serves as a comprehensive survey on the application of Large Language Models (LLMs) on tabular data, focusing on tasks such as prediction, data generation, and table understanding. It aims to consolidate recent progress in this field by summarizing key techniques, metrics, datasets, models, and optimization approaches. The survey identifies strengths, limitations, unexplored territories, and gaps in the existing literature, providing insights for future research directions. It also offers code and dataset references to empower readers with the necessary tools and knowledge to address challenges in this rapidly evolving domain.

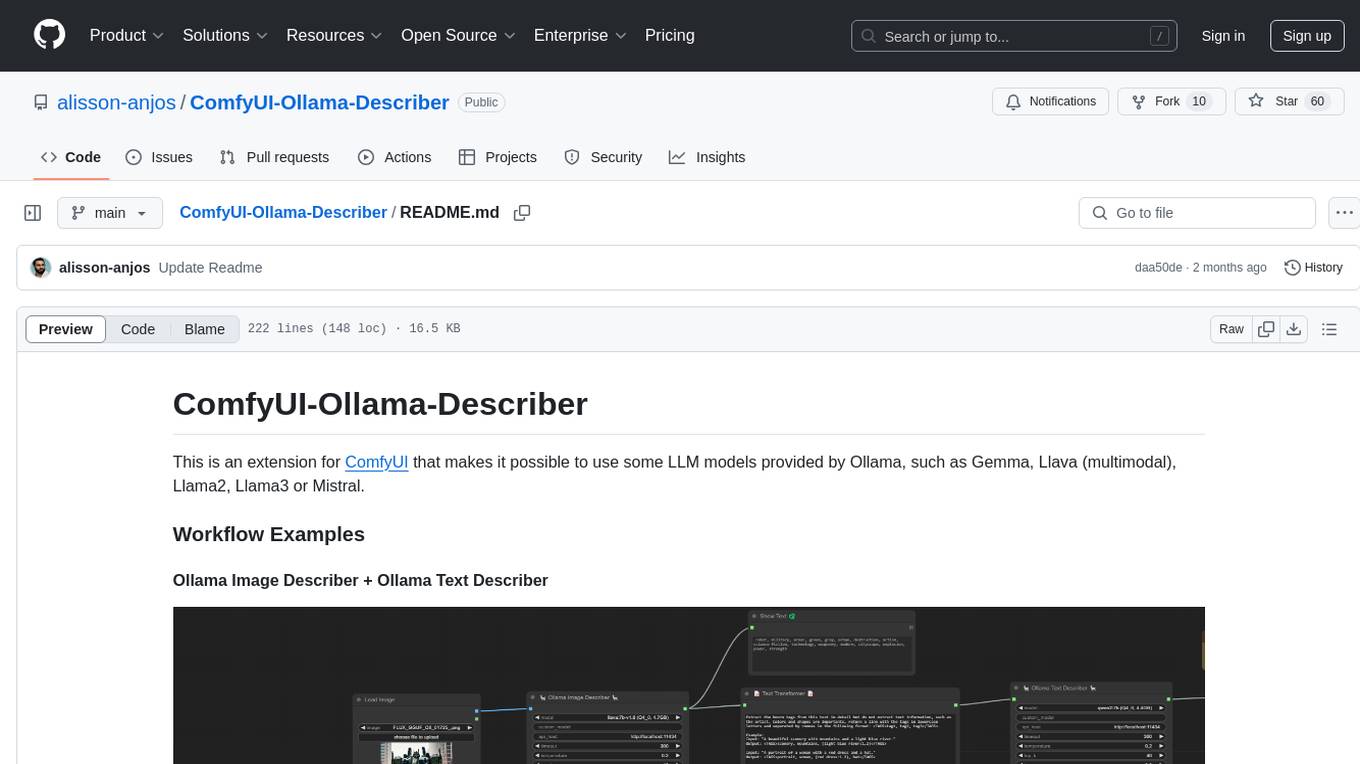

ComfyUI-Ollama-Describer

ComfyUI-Ollama-Describer is an extension for ComfyUI that enables the use of LLM models provided by Ollama, such as Gemma, Llava (multimodal), Llama2, Llama3, or Mistral. It requires the Ollama library for interacting with large-scale language models, supporting GPUs using CUDA and AMD GPUs on Windows, Linux, and Mac. The extension allows users to run Ollama through Docker and utilize NVIDIA GPUs for faster processing. It provides nodes for image description, text description, image captioning, and text transformation, with various customizable parameters for model selection, API communication, response generation, and model memory management.

LLM-Agent-Survey

LLM-Agent-Survey is a comprehensive repository that provides a curated list of papers related to Large Language Model (LLM) agents. The repository categorizes papers based on LLM-Profiled Roles and includes high-quality publications from prestigious conferences and journals. It aims to offer a systematic understanding of LLM-based agents, covering topics such as tool use, planning, and feedback learning. The repository also includes unpublished papers with insightful analysis and novelty, marked for future updates. Users can explore a wide range of surveys, tool use cases, planning workflows, and benchmarks related to LLM agents.

For similar tasks

Awesome_papers_on_LLMs_detection

This repository is a curated list of papers focused on the detection of Large Language Models (LLMs)-generated content. It includes the latest research papers covering detection methods, datasets, attacks, and more. The repository is regularly updated to include the most recent papers in the field.

For similar jobs

weave

Weave is a toolkit for developing Generative AI applications, built by Weights & Biases. With Weave, you can log and debug language model inputs, outputs, and traces; build rigorous, apples-to-apples evaluations for language model use cases; and organize all the information generated across the LLM workflow, from experimentation to evaluations to production. Weave aims to bring rigor, best-practices, and composability to the inherently experimental process of developing Generative AI software, without introducing cognitive overhead.

LLMStack

LLMStack is a no-code platform for building generative AI agents, workflows, and chatbots. It allows users to connect their own data, internal tools, and GPT-powered models without any coding experience. LLMStack can be deployed to the cloud or on-premise and can be accessed via HTTP API or triggered from Slack or Discord.

VisionCraft

The VisionCraft API is a free API for using over 100 different AI models. From images to sound.

kaito

Kaito is an operator that automates the AI/ML inference model deployment in a Kubernetes cluster. It manages large model files using container images, avoids tuning deployment parameters to fit GPU hardware by providing preset configurations, auto-provisions GPU nodes based on model requirements, and hosts large model images in the public Microsoft Container Registry (MCR) if the license allows. Using Kaito, the workflow of onboarding large AI inference models in Kubernetes is largely simplified.

PyRIT

PyRIT is an open access automation framework designed to empower security professionals and ML engineers to red team foundation models and their applications. It automates AI Red Teaming tasks to allow operators to focus on more complicated and time-consuming tasks and can also identify security harms such as misuse (e.g., malware generation, jailbreaking), and privacy harms (e.g., identity theft). The goal is to allow researchers to have a baseline of how well their model and entire inference pipeline is doing against different harm categories and to be able to compare that baseline to future iterations of their model. This allows them to have empirical data on how well their model is doing today, and detect any degradation of performance based on future improvements.

tabby

Tabby is a self-hosted AI coding assistant, offering an open-source and on-premises alternative to GitHub Copilot. It boasts several key features: * Self-contained, with no need for a DBMS or cloud service. * OpenAPI interface, easy to integrate with existing infrastructure (e.g Cloud IDE). * Supports consumer-grade GPUs.

spear

SPEAR (Simulator for Photorealistic Embodied AI Research) is a powerful tool for training embodied agents. It features 300 unique virtual indoor environments with 2,566 unique rooms and 17,234 unique objects that can be manipulated individually. Each environment is designed by a professional artist and features detailed geometry, photorealistic materials, and a unique floor plan and object layout. SPEAR is implemented as Unreal Engine assets and provides an OpenAI Gym interface for interacting with the environments via Python.

Magick

Magick is a groundbreaking visual AIDE (Artificial Intelligence Development Environment) for no-code data pipelines and multimodal agents. Magick can connect to other services and comes with nodes and templates well-suited for intelligent agents, chatbots, complex reasoning systems and realistic characters.