Awesome-Embodied-Agent-with-LLMs

This is a curated list of "Embodied AI or robot with Large Language Models" research. Watch this repository for the latest updates! 🔥

This repository, named Awesome-Embodied-Agent-with-LLMs, is a curated list of research related to Embodied AI or agents with Large Language Models. It includes various papers, surveys, and projects focusing on topics such as self-evolving agents, advanced agent applications, LLMs with RL or world models, planning and manipulation, multi-agent learning and coordination, vision and language navigation, detection, 3D grounding, interactive embodied learning, rearrangement, benchmarks, simulators, and more. The repository provides a comprehensive collection of resources for individuals interested in exploring the intersection of embodied agents and large language models.

README:

This is a curated list of "Embodied AI or agent with Large Language Models" research which is maintained by haonan.

Watch this repository for the latest updates and feel free to raise pull requests if you find some interesting papers!

[2024/08/01] Created a new board about social agent and role-playing. 🧑🧑🧒🧒

[2024/06/28] Created a new board about agent self-evolutionary research. 🤖

[2024/06/07] Add Mobile-Agent-v2, a mobile device operation assistant with effective navigation via multi-agent collaboration. 🚀

[2024/05/13] Add "Learning Interactive Real-World Simulators", which received an Outstanding Paper Award at ICLR 2024 🥇.

[2024/04/24] Add "A Survey on Self-Evolution of Large Language Models", a systematic survey on self-evolution in LLMs! 💥

[2024/04/16] Add some CVPR 2024 papers.

[2024/04/15] Add MetaGPT, accepted for oral presentation (top 1.2%) at ICLR 2024, ranking #1 in the LLM-based Agent category. 🚀

[2024/03/13] Add CRADLE, an interesting paper exploring LLM-based agents in Red Dead Redemption II! 🎮

- Survey

- Social Agent

- Self-Evolving Agents

- Advanced Agent Applications

- LLMs with RL or World Model

- Planning and Manipulation or Pretraining

- Multi-Agent Learning and Coordination

- Vision and Language Navigation

- Detection

- 3D Grounding

- Interactive Embodied Learning

- Rearrangement

- Benchmark

- Simulator

- Others

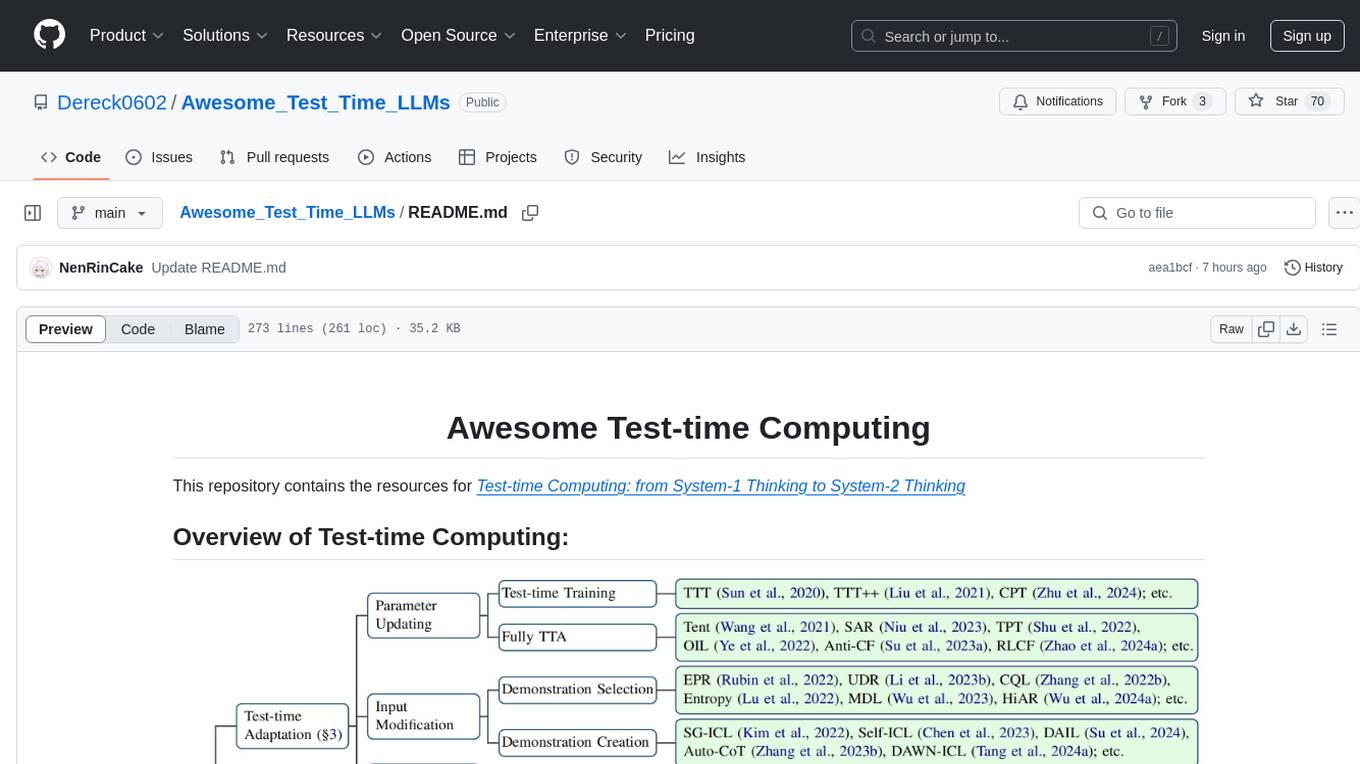

Figure 1. Trend of Embodied Agent with LLMs.[1]

Figure 2. An envisioned Agent society.[2]

-

A Survey on Vision-Language-Action Models for Embodied AI [arXiv 2024.03]

The Chinese University of Hong Kong, Huawei Noah’s Ark Lab -

Large Multimodal Agents: A Survey [arXiv 2024.02] [Github]

Junlin Xie♣♡ Zhihong Chen♣♡ Ruifei Zhang♣♡ Xiang Wan♣ Guanbin Li♠

♡The Chinese University of Hong Kong, Shenzhen ♣Shenzhen Research Institute of Big Data, ♠Sun Yat-sen University -

A Survey on Self-Evolution of Large Language Models [arXiv 2024.01]

Key Lab of HCST (PKU), MOE; School of Computer Science, Peking University, Alibaba Group, Nanyang Technological University -

Agent AI: Surveying the Horizons of Multimodal Interaction [arXiv 2024.01]

Stanford University, Microsoft Research, Redmond, University of California, Los Angeles, University of Washington, Microsoft Gaming -

Igniting Language Intelligence: The Hitchhiker’s Guide From Chain-of-Thought Reasoning to Language Agents [arXiv 2023.11]

Shanghai Jiao Tong University, Amazon Web Services, Yale University -

The Rise and Potential of Large Language Model Based Agents: A Survey [arXiv 2023.09]

Fudan NLP Group, miHoYo Inc -

A Survey on LLM-based Autonomous Agents [arXiv 2023.08]

Gaoling School of Artificial Intelligence, Renmin University of China

-

AGENTGYM: Evolving Large Language Model-based Agents across Diverse Environments [arXiv 2024.06] [Github] [Project page]

Fudan NLP Lab & Fudan Vision and Learning Lab -

Interactive Evolution: A Neural-Symbolic Self-Training Framework For Large Language Models [arXiv 2024.06] [Github]

Fangzhi Xu♢♡, Qiushi Sun2,♡, Kanzhi Cheng1, Jun Liu♢, Yu Qiao♡, Zhiyong Wu♡

♢Xi’an Jiaotong University, ♡Shanghai Artificial Intelligence Laboratory, 1The University of Hong Kong, 2Nanjing University -

Symbolic Learning Enables Self-Evolving Agents [arXiv 2024.06] [Github]

Wangchunshu Zhou, Yixin Ou, Shengwei Ding, Long Li, Jialong Wu, Tiannan Wang, Jiamin Chen, Shuai Wang, Xiaohua Xu, Ningyu Zhang, Huajun Chen, Yuchen Eleanor Jiang

AIWaves Inc.

-

[Embodied-agents] [Github]

Seamlessly integrate state-of-the-art transformer models into robotics stacks. -

Mobile-Agent-v2: Mobile Device Operation Assistant with Effective Navigation via Multi-Agent Collaboration [arXiv 2024] [Github]

Junyang Wang1, Haiyang Xu2, Haitao Jia1, Xi Zhang2, Ming Yan2, Weizhou Shen2, Ji Zhang2, Fei Huang2, Jitao Sang1

1Beijing Jiaotong University 2Alibaba Group -

Mobile-Agent: The Powerful Mobile Device Operation Assistant Family [ICLR 2024 Workshop LLM Agents] [Github]

Junyang Wang1, Haiyang Xu2, Jiabo Ye2, Ming Yan2, Weizhou Shen2, Ji Zhang2, Fei Huang2, Jitao Sang1

1Beijing Jiaotong University 2Alibaba Group -

[Machinascript-for-robots] [Github]

Build LLM-powered robots in your garage with MachinaScript For Robots! -

DiffAgent: Fast and Accurate Text-to-Image API Selection with Large Language Model [CVPR 2024] [Github]

Lirui Zhao1,2, Yue Yang2,4, Kaipeng Zhang2, Wenqi Shao2, Yuxin Zhang1, Yu Qiao2, Ping Luo2,3, Rongrong Ji1

1Xiamen University, 2OpenGVLab, Shanghai AI Laboratory, 3The University of Hong Kong, 4Shanghai Jiao Tong University -

MetaGPT: Meta Programming for A Multi-Agent Collaborative Framework [ICLR 2024 (oral)]

DeepWisdom, AI Initiative, King Abdullah University of Science and Technology, Xiamen University, The Chinese University of Hong Kong, Shenzhen, Nanjing University, University of Pennsylvania, University of California, Berkeley, The Swiss AI Lab IDSIA/USI/SUPSI -

AppAgent: Multimodal Agents as Smartphone Users [Project page] [Github]

Chi Zhang∗ Zhao Yang∗ Jiaxuan Liu∗ Yucheng Han Xin Chen Zebiao Huang Bin Fu Gang Yu†

Tencent

-

KALM: Knowledgeable Agents by Offline Reinforcement Learning from Large Language Model Rollouts [NeurIPS 2024] [Project Page]

Jing-Cheng Pang, Si-Hang Yang, Kaiyuan Li, Jiaji Zhang, Xiong-Hui Chen, Nan Tang, Yang Yu

1Nanjing University, 2Polixir.ai -

Learning Interactive Real-World Simulators [ICLR 2024 (Outstanding Papers)] [Project Page]

Sherry Yang1,2, Yilun Du3, Kamyar Ghasemipour2, Jonathan Tompson2, Leslie Kaelbling3, Dale Schuurmans2, Pieter Abbeel1

1UC Berkeley, 2Google DeepMind, 3MIT -

Robust agents learn causal world models [ICLR 2024]

Jonathan Richens*, Tom Everitt

Google DeepMind -

Embodied Multi-Modal Agent trained by an LLM from a Parallel TextWorld [CVPR 2024] [Github]

Yijun Yang1,5,4, Tianyi Zhou2, Kanxue Li3, Dapeng Tao3, Lvsong Li4, Li Shen4, Xiaodong He4, Jing Jiang5, Yuhui Shi1

1Southern University of Science and Technology, 2University of Maryland, College Park, 3Yunnan University, 4JD Explore Academy, 5University of Technology Sydney -

Leveraging Pre-trained Large Language Models to Construct and Utilize World Models for Model-based Task Planning [NeurIPS 2023] [Project Page][Github]

Lin Guan1, Karthik Valmeekam1, Sarath Sreedharan2, Subbarao Kambhampati1

1School of Computing & AI, Arizona State University, Tempe, AZ; 2Department of Computer Science, Colorado State University, Fort Collins, CO -

Eureka: Human-Level Reward Design via Coding Large Language Models [NeurIPS 2023 Workshop ALOE Spotlight] [Project page] [Github]

Jason Ma1,2, William Liang2, Guanzhi Wang1,3, De-An Huang1, Osbert Bastani2, Dinesh Jayaraman2, Yuke Zhu1,4, Linxi "Jim" Fan1, Anima Anandkumar1,3

1NVIDIA; 2UPenn; 3Caltech; 4UT Austin -

RLAdapter: Bridging Large Language Models to Reinforcement Learning in Open Worlds [arXiv 2023]

-

Can Language Agents Be Alternatives to PPO? A Preliminary Empirical Study on OpenAI Gym [arXiv 2023]

-

RoboGPT: An intelligent agent of making embodied long-term decisions for daily instruction tasks [arXiv 2023]

-

Aligning Agents like Large Language Models [arXiv 2023]

-

AMAGO: Scalable In-Context Reinforcement Learning for Adaptive Agents [ICLR 2024 spotlight]

-

STARLING: Self-supervised Training of Text-based Reinforcement Learning Agent with Large Language Models [arXiv 2023]

-

Text2Reward: Dense Reward Generation with Language Models for Reinforcement Learning [ICLR 2024 spotlight]

-

Leveraging Large Language Models for Optimised Coordination in Textual Multi-Agent Reinforcement Learning [arXiv 2023]

-

Online Continual Learning for Interactive Instruction Following Agents [ICLR 2024]

-

ADAPTER-RL: Adaptation of Any Agent using Reinforcement Learning [arXiv 2023]

-

Informing Reinforcement Learning Agents by Grounding Natural Language to Markov Decision Processes [arXiv 2023]

-

Learning to Model the World with Language [arXiv 2023]

-

MAMBA: an Effective World Model Approach for Meta-Reinforcement Learning [ICLR 2024]

-

Language Reward Modulation for Pretraining Reinforcement Learning [arXiv 2023] [Github]

Ademi Adeniji, Amber Xie, Carmelo Sferrazza, Younggyo Seo, Stephen James, Pieter Abbeel

1UC Berkeley -

Guiding Pretraining in Reinforcement Learning with Large Language Models [ICML 2023]

Yuqing Du1*, Olivia Watkins1*, Zihan Wang2, Cédric Colas3,4, Trevor Darrell1, Pieter Abbeel1, Abhishek Gupta2, Jacob Andreas3

1Department of Electrical Engineering and Computer Science, University of California, Berkeley, USA, 2University of Washington, Seattle, 3Massachusetts Institute of Technology, Computer Science and Artificial Intelligence Laboratory, 4Inria, Flowers Laboratory.

-

Voyager: An Open-Ended Embodied Agent with Large Language Models [NeurIPS 2023 Workshop ALOE Spotlight] [Project page] [Github]

Guanzhi Wang1,2, Yuqi Xie3, Yunfan Jiang4, Ajay Mandlekar1, Chaowei Xiao1,5, Yuke Zhu1,3, Linxi Fan1, Anima Anandkumar1,2

1NVIDIA, 2Caltech, 3UT Austin, 4Stanford, 5UW Madison -

Agent-Pro: Learning to Evolve via Policy-Level Reflection and Optimization [ACL 2024][Github]

Wenqi Zhang, Ke Tang, Hai Wu, Mengna Wang, Yongliang Shen, Guiyang Hou, Zeqi Tan, Peng Li, Yueting Zhuang, Weiming Lu -

Self-Contrast: Better Reflection Through Inconsistent Solving Perspectives [ACL 2024]

Wenqi Zhang, Yongliang Shen, Linjuan Wu, Qiuying Peng, Jun Wang, Yueting Zhuang, Weiming Lu -

MineDreamer: Learning to Follow Instructions via Chain-of-Imagination for Simulated-World Control [arXiv 2024] [Project Page]

Enshen Zhou1,2 Yiran Qin1,3 Zhenfei Yin1,4 Yuzhou Huang3 Ruimao Zhang3 Lu Sheng2 Yu Qiao1 Jing Shao1

1Shanghai Artificial Intelligence Laboratory, 2The Chinese University of Hong Kong, Shenzhen, 3Beihang University, 4The University of Sydney -

MP5: A Multi-modal Open-ended Embodied System in Minecraft via Active Perception [CVPR 2024] [Project Page]

Yiran Qin1,2 Enshen Zhou1,3 Qichang Liu1,4 Zhenfei Yin1,5 Lu Sheng3 Ruimao Zhang2 Yu Qiao1 Jing Shao1

1Shanghai Artificial Intelligence Laboratory, 2The Chinese University of Hong Kong, Shenzhen, 3Beihang University, 4Tsinghua University, 5The University of Sydney -

Code-as-Monitor: Constraint-aware Visual Programming for Reactive and Proactive Robotic Failure Detection [CVPR 2025] [Project Page]

Enshen Zhou1* Qi Su2* Cheng Chi3*; Zhizheng Zhang4 Zhongyuan Wang3 Tiejun Huang2,3 Lu Sheng1; He Wang2,3,4;

1Beihang University, 2Peking University, 3Beijing Academy of Artificial Intelligence, 4GalBot -

RILA: Reflective and Imaginative Language Agent for Zero-Shot Semantic Audio-Visual Navigation [CVPR 2024]

Zeyuan Yang1, Jiageng Liu, Peihao Chen2, Anoop Cherian3, Tim Marks, Jonathan Le Roux4, Chuang Gan5

1Tsinghua University, 2South China University of Technology, 3Mitsubishi Electric Research Labs (MERL), 4Mitsubishi Electric Research Labs, 5MIT-IBM Watson AI Lab -

Towards General Computer Control: A Multimodal Agent for Red Dead Redemption II as a Case Study [arXiv 2024] [Project Page] [Code]

Weihao Tan2, Ziluo Ding1, Wentao Zhang2, Boyu Li1, Bohan Zhou3, Junpeng Yue3, Haochong Xia2, Jiechuan Jiang3, Longtao Zheng2, Xinrun Xu1, Yifei Bi1, Pengjie Gu2,

1Beijing Academy of Artificial Intelligence (BAAI), China; 2Nanyang Technological University, Singapore; 3School of Computer Science, Peking University, China -

See and Think: Embodied Agent in Virtual Environment [arXiv 2023]

Zhonghan Zhao1*, Wenhao Chai2*, Xuan Wang1*, Li Boyi1, Shengyu Hao1, Shidong Cao1, Tian Ye3, Jenq-Neng Hwang2, Gaoang Wang1

1Zhejiang University, 2University of Washington, 3Hong Kong University of Science and Technology (GZ) -

Agent Instructs Large Language Models to be General Zero-Shot Reasoners [arXiv 2023]

Nicholas Crispino1, Kyle Montgomery1, Fankun Zeng1, Dawn Song2, Chenguang Wang1

1Washington University in St. Louis, 2UC Berkeley -

JARVIS-1: Open-world Multi-task Agents with Memory-Augmented Multimodal Language Models [NeurIPS 2023] [Project Page]

Zihao Wang1,2 Shaofei Cai1,2 Anji Liu3 Yonggang Jin4 Jinbing Hou4 Bowei Zhang5 Haowei Lin1,2 Zhaofeng He4 Zilong Zheng6 Yaodong Yang1 Xiaojian Ma6† Yitao Liang1†

1Institute for Artificial Intelligence, Peking University, 2School of Intelligence Science and Technology, Peking University, 3Computer Science Department, University of California, Los Angeles, 4Beijing University of Posts and Telecommunications, 5School of Electronics Engineering and Computer Science, Peking University, 6Beijing Institute for General Artificial Intelligence (BIGAI) -

Describe, Explain, Plan and Select: Interactive Planning with Large Language Models Enables Open-World Multi-Task Agents [NeurIPS 2023]

Zihao Wang1,2 Shaofei Cai1,2 Guanzhou Chen3 Anji Liu4 Xiaojian Ma4 Yitao Liang1,5†

1Institute for Artificial Intelligence, Peking University, 2School of Intelligence Science and Technology, Peking University, 3School of Computer Science, Beijing University of Posts and Telecommunications, 4Computer Science Department, University of California, Los Angeles, 5Beijing Institute for General Artificial Intelligence (BIGAI) -

CAMEL: Communicative Agents for “Mind” Exploration of Large Scale Language Model Society [NeurIPS 2023] [Github] [Project page]

Guohao Li, Hasan Abed Al Kader Hammoud, Hani Itani, Dmitrii Khizbullin, Bernard Ghanem

1King Abdullah University of Science and Technology (KAUST) -

Language Models as Zero-Shot Planners: Extracting Actionable Knowledge for Embodied Agents [arXiv 2022] [Github] [Project page]

Wenlong Huang1, Pieter Abbeel1, Deepak Pathak2, Igor Mordatch3

1UC Berkeley, 2Carnegie Mellon University, 3Google -

FILM: Following Instructions in Language with Modular Methods [ICLR 2022] [Github] [Project page]

So Yeon Min1, Devendra Singh Chaplot2, Pradeep Ravikumar1, Yonatan Bisk1, Ruslan Salakhutdinov1

1Carnegie Mellon University 2Facebook AI Research -

Embodied Task Planning with Large Language Models [arXiv 2023] [Github] [Project page] [Demo] [Huggingface Model]

Zhenyu Wu1, Ziwei Wang2,3, Xiuwei Xu2,3, Jiwen Lu2,3, Haibin Yan1*

1School of Automation, Beijing University of Posts and Telecommunications, 2Department of Automation, Tsinghua University, 3Beijing National Research Center for Information Science and Technology -

SPRING: GPT-4 Out-performs RL Algorithms by Studying Papers and Reasoning [arXiv 2023]

Yue Wu1,4*, Shrimai Prabhumoye2, So Yeon Min1, Yonatan Bisk1, Ruslan Salakhutdinov1, Amos Azaria3, Tom Mitchell1, Yuanzhi Li1,4

1Carnegie Mellon University, 2NVIDIA, 3Ariel University, 4Microsoft Research -

PONI: Potential Functions for ObjectGoal Navigation with Interaction-free Learning [CVPR 2022 (Oral)] [Project page] [Github]

Santhosh Kumar Ramakrishnan1,2, Devendra Singh Chaplot1, Ziad Al-Halah2, Jitendra Malik1,3, Kristen Grauman1,2

1Facebook AI Research, 2UT Austin, 3UC Berkeley -

Moving Forward by Moving Backward: Embedding Action Impact over Action Semantics [ICLR 2023] [Project page] [Github]

Kuo-Hao Zeng1, Luca Weihs2, Roozbeh Mottaghi1, Ali Farhadi1

1Paul G. Allen School of Computer Science & Engineering, University of Washington, 2PRIOR @ Allen Institute for AI -

Modeling Dynamic Environments with Scene Graph Memory [ICML 2023]

Andrey Kurenkov1, Michael Lingelbach1, Tanmay Agarwal1, Emily Jin1, Chengshu Li1, Ruohan Zhang1, Li Fei-Fei1, Jiajun Wu1, Silvio Savarese2, Roberto Martín-Martín3

1Department of Computer Science, Stanford University 2Salesforce AI Research 3Department of Computer Science, University of Texas at Austin. -

Reasoning with Language Model is Planning with World Model [arXiv 2023]

Shibo Hao∗♣, Yi Gu∗♣, Haodi Ma♢, Joshua Jiahua Hong♣, Zhen Wang♣♠, Daisy Zhe Wang♢, Zhiting Hu♣

♣UC San Diego, ♢University of Florida, ♠Mohamed bin Zayed University of Artificial Intelligence -

Do As I Can, Not As I Say: Grounding Language in Robotic Affordances [arXiv 2022]

Robotics at Google, Everyday Robots -

Do Embodied Agents Dream of Pixelated Sheep?: Embodied Decision Making using Language Guided World Modelling [ICML 2023]

Kolby Nottingham1 Prithviraj Ammanabrolu2 Alane Suhr2 Yejin Choi3,2 Hannaneh Hajishirzi3,2 Sameer Singh1,2 Roy Fox1

1Department of Computer Science, University of California Irvine 2Allen Institute for Artificial Intelligence 3Paul G. Allen School of Computer Science -

Context-Aware Planning and Environment-Aware Memory for Instruction Following Embodied Agents [ICCV 2023]

Byeonghwi Kim Jinyeon Kim Yuyeong Kim1,* Cheolhong Min Jonghyun Choi†

Yonsei University 1Gwangju Institute of Science and Technology -

Inner Monologue: Embodied Reasoning through Planning with Language Models [CoRL 2022] [Project page]

Robotics at Google -

Language Models Meet World Models: Embodied Experiences Enhance Language Models [arXiv 2023]

[Twitter]

Jiannan Xiang∗♠, Tianhua Tao∗♠, Yi Gu♠, Tianmin Shu♢, Zirui Wang♠, Zichao Yang♡, Zhiting Hu♠

♠UC San Diego, ♣UIUC, ♢MIT, ♡Carnegie Mellon University -

AlphaBlock: Embodied Finetuning for Vision-Language Reasoning in Robot Manipulation [arXiv 2023] [Video]

Chuhao Jin1*, Wenhui Tan1*, Jiange Yang2*, Bei Liu3†, Ruihua Song1, Limin Wang2, Jianlong Fu3†

1Renmin University of China, 2Nanjing University, 3Microsoft Research -

A Persistent Spatial Semantic Representation for High-level Natural Language Instruction Execution [CoRL 2021]

[Project page] [Poster]

Valts Blukis1,2, Chris Paxton1, Dieter Fox1,3, Animesh Garg1,4, Yoav Artzi2

1NVIDIA 2Cornell University 3University of Washington 4University of Toronto, Vector Institute -

LLM-Planner: Few-Shot Grounded Planning for Embodied Agents with Large Language Models [ICCV 2023] [Project page] [Github]

Chan Hee Song1, Jiaman Wu1, Clayton Washington1, Brian M. Sadler2, Wei-Lun Chao1, Yu Su1

1The Ohio State University, 2DEVCOM ARL -

Code as Policies: Language Model Programs for Embodied Control [arXiv 2023] [Project page] [Github] [Blog] [Colab]

Jacky Liang, Wenlong Huang, Fei Xia, Peng Xu, Karol Hausman, Brian Ichter, Pete Florence, Andy Zeng

Robotics at Google -

3D-LLM: Injecting the 3D World into Large Language Models [arXiv 2023]

1Yining Hong, 2Haoyu Zhen, 3Peihao Chen, 4Shuhong Zheng, 5Yilun Du, 6Zhenfang Chen, 6,7Chuang Gan

1UCLA, 2SJTU, 3SCUT, 4UIUC, 5MIT, 6MIT-IBM Watson AI Lab, 7UMass Amherst -

VoxPoser: Composable 3D Value Maps for Robotic Manipulation with Language Models [arXiv 2023] [Project page] [Online Demo]

Wenlong Huang1, Chen Wang1, Ruohan Zhang1, Yunzhu Li1,2, Jiajun Wu1, Li Fei-Fei1

1Stanford University 2University of Illinois Urbana-Champaign -

PaLM-E: An Embodied Multimodal Language Model [ICML 2023] [Project page]

1Robotics at Google 2TU Berlin 3Google Research -

Large Language Models as Commonsense Knowledge for Large-Scale Task Planning [arXiv 2023]

Zirui Zhao, Wee Sun Lee, David Hsu

School of Computing, National University of Singapore -

An Embodied Generalist Agent in 3D World [ICML 2024]

Jiangyong Huang, Silong Yong, Xiaojian Ma, Xiongkun Linghu, Puhao Li, Yan Wang, Qing Li, Song-Chun Zhu, Baoxiong Jia, Siyuan Huang

Beijing Institute for General Artificial Intelligence (BIGAI)

-

Building Cooperative Embodied Agents Modularly with Large Language Models [ICLR 2024] [Project page] [Github]

Hongxin Zhang1*, Weihua Du2*, Jiaming Shan3, Qinhong Zhou1, Yilun Du4, Joshua B. Tenenbaum4, Tianmin Shu4, Chuang Gan1,5

1University of Massachusetts Amherst, 2Tsinghua University, 3Shanghai Jiao Tong University, 4MIT, 5MIT-IBM Watson AI Lab -

War and Peace (WarAgent): Large Language Model-based Multi-Agent Simulation of World Wars [arXiv 2023]

Wenyue Hua1*, Lizhou Fan2*, Lingyao Li2, Kai Mei1, Jianchao Ji1, Yingqiang Ge1, Libby Hemphill2, Yongfeng Zhang1

1Rutgers University, 2University of Michigan -

MindAgent: Emergent Gaming Interaction [arXiv 2023]

Ran Gong*1† Qiuyuan Huang*2‡ Xiaojian Ma*1 Hoi Vo3 Zane Durante†4 Yusuke Noda3 Zilong Zheng5 Song-Chun Zhu1,5,6,7,8 Demetri Terzopoulos1 Li Fei-Fei4 Jianfeng Gao2

1UCLA; 2Microsoft Research, Redmond; 3Xbox Team, Microsoft; 4Stanford; 5BIGAI; 6PKU; 7THU; 8UCLA -

Demonstration-free Autonomous Reinforcement Learning via Implicit and Bidirectional Curriculum [ICML 2023]

Jigang Kim*1,2 Daesol Cho*1,2 H. Jin Kim1,3

1Seoul National University, 2Artificial Intelligence Institute of Seoul National University (AIIS), 3Automation and Systems Research Institute (ASRI).

Note: This paper mainly focuses on reinforcement learning for Embodied AI. -

Adaptive Coordination in Social Embodied Rearrangement [ICML 2023]

Andrew Szot1,2 Unnat Jain1 Dhruv Batra1,2 Zsolt Kira2 Ruta Desai1 Akshara Rai1

1Meta AI 2Georgia Institute of Technology.

-

IndoorSim-to-OutdoorReal: Learning to Navigate Outdoors without any Outdoor Experience [arXiv 2023]

Joanne Truong1,2, April Zitkovich1, Sonia Chernova2, Dhruv Batra2,3, Tingnan Zhang1, Jie Tan1, Wenhao Yu1

1Robotics at Google 2Georgia Institute of Technology 3Meta AI -

ESC: Exploration with Soft Commonsense Constraints for Zero-shot Object Navigation [ICML 2023]

Kaiwen Zhou1, Kaizhi Zheng1, Connor Pryor1, Yilin Shen2, Hongxia Jin2, Lise Getoor1, Xin Eric Wang1

1University of California, Santa Cruz 2Samsung Research America. -

NavGPT: Explicit Reasoning in Vision-and-Language Navigation with Large Language Models [arXiv 2023]

Gengze Zhou1 Yicong Hong2 Qi Wu1

1The University of Adelaide 2The Australian National University -

Instruct2Act: Mapping Multi-modality Instructions to Robotic Actions with Large Language Model [arXiv 2023] [Github]

Siyuan Huang1,2 Zhengkai Jiang4 Hao Dong3 Yu Qiao2 Peng Gao2 Hongsheng Li5

1Shanghai Jiao Tong University, 2Shanghai AI Laboratory, 3CFCS, School of CS, PKU, 4University of Chinese Academy of Sciences, 5The Chinese University of Hong Kong

-

DetGPT: Detect What You Need via Reasoning [arXiv 2023]

Renjie Pi1∗ Jiahui Gao2* Shizhe Diao1∗ Rui Pan1 Hanze Dong1 Jipeng Zhang1 Lewei Yao1 Jianhua Han3 Hang Xu2 Lingpeng Kong2 Tong Zhang1

1The Hong Kong University of Science and Technology 2The University of Hong Kong 3Shanghai Jiao Tong University

-

LLM-Grounder: Open-Vocabulary 3D Visual Grounding with Large Language Model as an Agent [arXiv 2023]

Jianing Yang1, Xuweiyi Chen1, Shengyi Qian1, Nikhil Madaan, Madhavan Iyengar1, David F. Fouhey1,2, Joyce Chai1

1University of Michigan, 2New York University -

3D-VisTA: Pre-trained Transformer for 3D Vision and Text Alignment [ICCV 2023]

Ziyu Zhu, Xiaojian Ma, Yixin Chen, Zhidong Deng, Siyuan Huang, Qing Li

Beijing Institute for General Artificial Intelligence (BIGAI)

-

Grounding Large Language Models in Interactive Environments with Online Reinforcement Learning [ICML 2023]

Thomas Carta1*, Clément Romac1,2, Thomas Wolf2, Sylvain Lamprier3, Olivier Sigaud4, Pierre-Yves Oudeyer1

1Inria (Flowers), University of Bordeaux, 2Hugging Face, 3Univ Angers, LERIA, SFR MATHSTIC, F-49000, 4Sorbonne University, ISIR -

Learning Affordance Landscapes for Interaction Exploration in 3D Environments [NeurIPS 2020]

[Project page]

Tushar Nagarajan, Kristen Grauman

UT Austin and Facebook AI Research, UT Austin and Facebook AI Research -

Embodied Question Answering in Photorealistic Environments with Point Cloud Perception [CVPR 2019 (oral)] [Slides]

Erik Wijmans1†, Samyak Datta1, Oleksandr Maksymets2†, Abhishek Das1, Georgia Gkioxari2, Stefan Lee1, Irfan Essa1, Devi Parikh1,2, Dhruv Batra1,2

1Georgia Institute of Technology, 2Facebook AI Research -

Multi-Target Embodied Question Answering [CVPR 2019]

Licheng Yu1, Xinlei Chen3, Georgia Gkioxari3, Mohit Bansal1, Tamara L. Berg1,3, Dhruv Batra2,3

1University of North Carolina at Chapel Hill 2Georgia Tech 3Facebook AI -

Neural Modular Control for Embodied Question Answering [CoRL 2018 (Spotlight)] [Project page] [Github]

Abhishek Das1, Georgia Gkioxari2, Stefan Lee1, Devi Parikh1,2, Dhruv Batra1,2

1Georgia Institute of Technology 2Facebook AI Research -

Embodied Question Answering [CVPR 2018 (oral)] [Project page] [Github]

Abhishek Das1, Samyak Datta1, Georgia Gkioxari2, Stefan Lee1, Devi Parikh2,1, Dhruv Batra2

1Georgia Institute of Technology, 2Facebook AI Research

-

A Simple Approach for Visual Room Rearrangement: 3D Mapping and Semantic Search [ICLR 2023]

1Brandon Trabucco, 2Gunnar A Sigurdsson, 2Robinson Piramuthu, 2,3Gaurav S. Sukhatme, 1Ruslan Salakhutdinov

1CMU, 2Amazon Alexa AI, 3University of Southern California

-

SmartPlay: A Benchmark for LLMs as Intelligent Agents [ICLR 2024] [Github]

Yue Wu1,2, Xuan Tang1, Tom Mitchell1, Yuanzhi Li1,2

1Carnegie Mellon University, 2Microsoft Research -

RoboGen: Towards Unleashing Infinite Data for Automated Robot Learning via Generative Simulation [arXiv 2023] [Project page] [Github]

Yufei Wang1, Zhou Xian1, Feng Chen2, Tsun-Hsuan Wang3, Yian Wang4, Katerina Fragkiadaki1, Zackory Erickson1, David Held1, Chuang Gan4,5

1CMU, 2Tsinghua IIIS, 3MIT CSAIL, 4UMass Amherst, 5MIT-IBM AI Lab -

ALFWorld: Aligning Text and Embodied Environments for Interactive Learning [ICLR 2021] [Project page] [Github]

Mohit Shridhar† Xingdi Yuan♡ Marc-Alexandre Côté♡ Yonatan Bisk‡ Adam Trischler♡ Matthew Hausknecht♣

†University of Washington ♡Microsoft Research, Montréal ‡Carnegie Mellon University ♣Microsoft Research -

ALFRED: A Benchmark for Interpreting Grounded Instructions for Everyday Tasks [CVPR 2020] [Project page] [Github]

Mohit Shridhar1 Jesse Thomason1 Daniel Gordon1 Yonatan Bisk1,2,3 Winson Han3 Roozbeh Mottaghi1,3 Luke Zettlemoyer1 Dieter Fox1,4

1Paul G. Allen School of Computer Sci. & Eng., Univ. of Washington, 2Language Technologies Institute @ Carnegie Mellon University, 3Allen Institute for AI, 4NVIDIA -

VIMA: Robot Manipulation with Multimodal Prompts [ICML 2023] [Project page] [Github] [VIMA-Bench]

Yunfan Jiang1 Agrim Gupta1† Zichen Zhang2† Guanzhi Wang3,4† Yongqiang Dou5 Yanjun Chen1 Li Fei-Fei1 Anima Anandkumar3,4 Yuke Zhu3,6‡ Linxi Fan3‡ -

SQA3D: Situated Question Answering in 3D Scenes [ICLR 2023] [Project page] [Slides] [Github]

Xiaojian Ma2 Silong Yong1,3* Zilong Zheng1 Qing Li1 Yitao Liang1,4 Song-Chun Zhu1,2,3,4 Siyuan Huang1

1Beijing Institute for General Artificial Intelligence (BIGAI) 2UCLA 3Tsinghua University 4Peking University -

IQA: Visual Question Answering in Interactive Environments [CVPR 2018] [Github] [Demo video (YouTube)]

Daniel Gordon1 Aniruddha Kembhavi2 Mohammad Rastegari2,4 Joseph Redmon1 Dieter Fox1,3 Ali Farhadi1,2

1Paul G. Allen School of Computer Science, University of Washington 2Allen Institute for Artificial Intelligence 3Nvidia 4Xnor.ai -

Env-QA: A Video Question Answering Benchmark for Comprehensive Understanding of Dynamic Environments [ICCV 2021] [Project page] [Github]

Difei Gao1,2, Ruiping Wang1,2,3, Ziyi Bai1,2, Xilin Chen1,

1Key Laboratory of Intelligent Information Processing of Chinese Academy of Sciences (CAS), Institute of Computing Technology, CAS, 2University of Chinese Academy of Sciences, 3Beijing Academy of Artificial Intelligence

-

LEGENT: Open Platform for Embodied Agents [ACL 2024] [Project page] [Github]

Tsinghua University -

AI2-THOR: An Interactive 3D Environment for Visual AI [arXiv 2022] [Project page] [Github]

Allen Institute for AI, University of Washington, Stanford University, Carnegie Mellon University -

iGibson, a Simulation Environment for Interactive Tasks in Large Realistic Scenes [IROS 2021] [Project page] [Github]

Bokui Shen*, Fei Xia* et al. -

Habitat: A Platform for Embodied AI Research [ICCV 2019] [Project page] [Habitat-Sim] [Habitat-Lab] [Habitat Challenge]

Facebook AI Research, Facebook Reality Labs, Georgia Institute of Technology, Simon Fraser University, Intel Labs, UC Berkeley -

Habitat 2.0: Training Home Assistants to Rearrange their Habitat [NeurIPS 2021] [Project page]

Facebook AI Research, Georgia Tech, Intel Research, Simon Fraser University, UC Berkeley

-

Least-to-Most Prompting Enables Complex Reasoning in Large Language Models [ICLR 2023]

Google Research, Brain Team -

ReAct: Synergizing Reasoning and Acting in Language Models [ICLR 2023]

Shunyu Yao1∗, Jeffrey Zhao2, Dian Yu2, Nan Du2, Izhak Shafran2, Karthik Narasimhan1, Yuan Cao2

1Department of Computer Science, Princeton University, 2Google Research, Brain Team -

Algorithm of Thoughts: Enhancing Exploration of Ideas in Large Language Models [arXiv 2023]

Virginia Tech, Microsoft -

Graph of Thoughts: Solving Elaborate Problems with Large Language Models [arXiv 2023]

ETH Zurich, Cledar, Warsaw University of Technology -

Tree of Thoughts: Deliberate Problem Solving with Large Language Models [arXiv 2023]

Shunyu Yao1, Dian Yu2, Jeffrey Zhao2, Izhak Shafran2, Thomas L. Griffiths1, Yuan Cao2, Karthik Narasimhan1

1Princeton University, 2Google DeepMind -

Chain-of-Thought Prompting Elicits Reasoning in Large Language Models [NeurIPS 2022]

Jason Wei, Xuezhi Wang, Dale Schuurmans, Maarten Bosma, Brian Ichter, Fei Xia, Ed H. Chi, Quoc V. Le, Denny Zhou

Google Research, Brain Team -

MINEDOJO: Building Open-Ended Embodied Agents with Internet-Scale Knowledge [NeurIPS 2022] [Github]

[Project page] [Knowledge Base]

Linxi Fan1, Guanzhi Wang2∗, Yunfan Jiang3*, Ajay Mandlekar1, Yuncong Yang4, Haoyi Zhu5, Andrew Tang4, De-An Huang1, Yuke Zhu1,6†, Anima Anandkumar1,2†

1NVIDIA, 2Caltech, 3Stanford, 4Columbia, 5SJTU, 6UT Austin -

Distilling Internet-Scale Vision-Language Models into Embodied Agents [ICML 2023]

Theodore Sumers1∗ Kenneth Marino2 Arun Ahuja2 Rob Fergus2 Ishita Dasgupta2 -

LISA: Reasoning Segmentation via Large Language Model [arXiv 2023] [Github] [Huggingface Models] [Dataset] [Online Demo]

Xin Lai1 Zhuotao Tian2 Yukang Chen1 Yanwei Li1 Yuhui Yuan3 Shu Liu2 Jiaya Jia1,2

1The Chinese University of Hong Kong 2SmartMore 3MSRA

[1] Trend pic from this repo.

[2] Figure from this paper: The Rise and Potential of Large Language Model Based Agents: A Survey.

For Tasks:

Click tags to check more tools for each tasksFor Jobs:

Alternative AI tools for Awesome-Embodied-Agent-with-LLMs

Similar Open Source Tools

Awesome-Embodied-Agent-with-LLMs

This repository, named Awesome-Embodied-Agent-with-LLMs, is a curated list of research related to Embodied AI or agents with Large Language Models. It includes various papers, surveys, and projects focusing on topics such as self-evolving agents, advanced agent applications, LLMs with RL or world models, planning and manipulation, multi-agent learning and coordination, vision and language navigation, detection, 3D grounding, interactive embodied learning, rearrangement, benchmarks, simulators, and more. The repository provides a comprehensive collection of resources for individuals interested in exploring the intersection of embodied agents and large language models.

awesome-and-novel-works-in-slam

This repository contains a curated list of cutting-edge works in Simultaneous Localization and Mapping (SLAM). It includes research papers, projects, and tools related to various aspects of SLAM, such as 3D reconstruction, semantic mapping, novel algorithms, large-scale mapping, and more. The repository aims to showcase the latest advancements in SLAM technology and provide resources for researchers and practitioners in the field.

MedLLMsPracticalGuide

This repository serves as a practical guide for Medical Large Language Models (Medical LLMs) and provides resources, surveys, and tools for building, fine-tuning, and utilizing LLMs in the medical domain. It covers a wide range of topics including pre-training, fine-tuning, downstream biomedical tasks, clinical applications, challenges, future directions, and more. The repository aims to provide insights into the opportunities and challenges of LLMs in medicine and serve as a practical resource for constructing effective medical LLMs.

unilm

The 'unilm' repository is a collection of tools, models, and architectures for Foundation Models and General AI, focusing on tasks such as NLP, MT, Speech, Document AI, and Multimodal AI. It includes various pre-trained models, such as UniLM, InfoXLM, DeltaLM, MiniLM, AdaLM, BEiT, LayoutLM, WavLM, VALL-E, and more, designed for tasks like language understanding, generation, translation, vision, speech, and multimodal processing. The repository also features toolkits like s2s-ft for sequence-to-sequence fine-tuning and Aggressive Decoding for efficient sequence-to-sequence decoding. Additionally, it offers applications like TrOCR for OCR, LayoutReader for reading order detection, and XLM-T for multilingual NMT.

Fueling-Ambitions-Via-Book-Discoveries

Fueling-Ambitions-Via-Book-Discoveries is an Advanced Machine Learning & AI Course designed for students, professionals, and AI researchers. The course integrates rigorous theoretical foundations with practical coding exercises, so that learners develop a deep understanding of AI algorithms and their applications in finance, healthcare, robotics, NLP, cybersecurity, and more. Inspired by MIT, Stanford, and Harvard’s AI programs, it combines academic research rigor with industry-standard practices used by AI engineers at companies like Google, OpenAI, Facebook AI, DeepMind, and Tesla. Learners study 50+ AI techniques drawn from top Machine Learning & Deep Learning books, code from scratch with real-world datasets, projects, and case studies, and practice ML Engineering & AI Deployment using Django & Streamlit. The course also offers industry-relevant projects to build a strong AI portfolio.

Embodied-AI-Guide

Embodied-AI-Guide is a comprehensive guide for beginners to understand Embodied AI, focusing on the path of entry and useful information in the field. It covers topics such as Reinforcement Learning, Imitation Learning, Large Language Model for Robotics, 3D Vision, Control, Benchmarks, and provides resources for building cognitive understanding. The repository aims to help newcomers quickly establish knowledge in the field of Embodied AI.

Awesome-LLM-Reasoning-Openai-o1-Survey

The repository 'Awesome LLM Reasoning Openai-o1 Survey' provides a collection of survey papers and related works on OpenAI o1, focusing on topics such as LLM reasoning, self-play reinforcement learning, complex logic reasoning, and scaling law. It includes papers from various institutions and researchers, showcasing advancements in reasoning bootstrapping, reasoning scaling law, self-play learning, step-wise and process-based optimization, and applications beyond math. The repository serves as a valuable resource for researchers interested in exploring the intersection of language models and reasoning techniques.

gen-ai-experiments

Gen-AI-Experiments is a structured collection of Jupyter notebooks and AI experiments designed to guide users through various AI tools, frameworks, and models. It offers valuable resources for both beginners and experienced practitioners, covering topics such as AI agents, model testing, RAG systems, real-world applications, and open-source tools. The repository includes folders with curated libraries, AI agents, experiments, LLM testing, open-source libraries, RAG experiments, and educhain experiments, each focusing on different aspects of AI development and application.

Awesome-LLM-Interpretability

Awesome-LLM-Interpretability is a curated list of materials related to LLM (Large Language Models) interpretability, covering tutorials, code libraries, surveys, videos, papers, and blogs. It includes resources on transformer mechanistic interpretability, visualization, interventions, probing, fine-tuning, feature representation, learning dynamics, knowledge editing, hallucination detection, and redundancy analysis. The repository aims to provide a comprehensive overview of tools, techniques, and methods for understanding and interpreting the inner workings of large language models.

Awesome-World-Models

This repository is a curated list of papers related to World Models for General Video Generation, Embodied AI, and Autonomous Driving. It includes foundation papers, blog posts, technical reports, surveys, benchmarks, and specific world models for different applications. The repository serves as a valuable resource for researchers and practitioners interested in world models and their applications in robotics and AI.

AiLearning-Theory-Applying

This repository provides a comprehensive guide to understanding and applying artificial intelligence (AI) theory, including basic knowledge, machine learning, deep learning, and natural language processing (BERT). It features detailed explanations, annotated code, and datasets to help users grasp the concepts and implement them in practice. The repository is continuously updated to ensure the latest information and best practices are covered.

ai-agent-papers

The AI Agents Papers repository provides a curated collection of papers focusing on AI agents, covering topics such as agent capabilities, applications, architectures, and presentations. It includes a variety of papers on ideation, decision making, long-horizon tasks, learning, memory-based agents, self-evolving agents, and more. The repository serves as a valuable resource for researchers and practitioners interested in AI agent technologies and advancements.

Awesome_Test_Time_LLMs

This repository focuses on test-time computing, exploring various strategies such as test-time adaptation, modifying the input, editing the representation, calibrating the output, test-time reasoning, and search strategies. It covers topics like self-supervised test-time training, in-context learning, activation steering, nearest neighbor models, reward modeling, and multimodal reasoning. The repository provides resources including papers and code for researchers and practitioners interested in enhancing the reasoning capabilities of large language models.

LLM-Navigation

LLM-Navigation is a repository dedicated to documenting learning records related to large models, including basic knowledge, prompt engineering, building effective agents, model expansion capabilities, security measures against prompt injection, and applications in various fields such as AI agent control, browser automation, financial analysis, 3D modeling, and tool navigation using MCP servers. The repository aims to organize and collect information for personal learning and self-improvement through AI exploration.

For similar jobs

weave

Weave is a toolkit for developing Generative AI applications, built by Weights & Biases. With Weave, you can log and debug language model inputs, outputs, and traces; build rigorous, apples-to-apples evaluations for language model use cases; and organize all the information generated across the LLM workflow, from experimentation to evaluations to production. Weave aims to bring rigor, best-practices, and composability to the inherently experimental process of developing Generative AI software, without introducing cognitive overhead.

LLMStack

LLMStack is a no-code platform for building generative AI agents, workflows, and chatbots. It allows users to connect their own data, internal tools, and GPT-powered models without any coding experience. LLMStack can be deployed to the cloud or on-premise and can be accessed via HTTP API or triggered from Slack or Discord.

VisionCraft

The VisionCraft API is a free API for using over 100 different AI models, from images to sound.

kaito

Kaito is an operator that automates the AI/ML inference model deployment in a Kubernetes cluster. It manages large model files using container images, avoids tuning deployment parameters to fit GPU hardware by providing preset configurations, auto-provisions GPU nodes based on model requirements, and hosts large model images in the public Microsoft Container Registry (MCR) if the license allows. Using Kaito, the workflow of onboarding large AI inference models in Kubernetes is largely simplified.

PyRIT

PyRIT is an open access automation framework designed to empower security professionals and ML engineers to red team foundation models and their applications. It automates AI Red Teaming tasks to allow operators to focus on more complicated and time-consuming tasks and can also identify security harms such as misuse (e.g., malware generation, jailbreaking), and privacy harms (e.g., identity theft). The goal is to allow researchers to have a baseline of how well their model and entire inference pipeline is doing against different harm categories and to be able to compare that baseline to future iterations of their model. This allows them to have empirical data on how well their model is doing today, and detect any degradation of performance based on future improvements.

tabby

Tabby is a self-hosted AI coding assistant, offering an open-source and on-premises alternative to GitHub Copilot. It is self-contained, with no need for a DBMS or cloud service; it exposes an OpenAPI interface that is easy to integrate with existing infrastructure (e.g., a Cloud IDE); and it supports consumer-grade GPUs.

spear

SPEAR (Simulator for Photorealistic Embodied AI Research) is a powerful tool for training embodied agents. It features 300 unique virtual indoor environments with 2,566 unique rooms and 17,234 unique objects that can be manipulated individually. Each environment is designed by a professional artist and features detailed geometry, photorealistic materials, and a unique floor plan and object layout. SPEAR is implemented as Unreal Engine assets and provides an OpenAI Gym interface for interacting with the environments via Python.

Magick

Magick is a groundbreaking visual AIDE (Artificial Intelligence Development Environment) for no-code data pipelines and multimodal agents. Magick can connect to other services and comes with nodes and templates well-suited for intelligent agents, chatbots, complex reasoning systems and realistic characters.