LLM-Agent-Survey

Survey on LLM Agents (Published at CoLing 2025)

Stars: 113

LLM-Agent-Survey is a comprehensive repository that provides a curated list of papers related to Large Language Model (LLM) agents. The repository categorizes papers based on LLM-Profiled Roles and includes high-quality publications from prestigious conferences and journals. It aims to offer a systematic understanding of LLM-based agents, covering topics such as tool use, planning, and feedback learning. The repository also includes unpublished papers with insightful analysis and novelty, marked for future updates. Users can explore a wide range of surveys, tool use cases, planning workflows, and benchmarks related to LLM agents.

README:

Our GitHub repository follows the selection criteria below:

- Allowing Coherent Understanding: Papers can be systematically categorized into the unified framework in my survey, according to their use of LLM-Profiled Roles (LMPRs).

  - A general survey (Accepted at CoLing 2025): A Review of Prominent Paradigms for LLM-Based Agents: Tool Use (Including RAG), Planning, and Feedback Learning

- High Quality: Papers are published at ICML, ICLR, NeurIPS, *ACL (including EMNLP), and COLING, or are unpublished papers that contain useful analysis and insightful novelty.

  - Unpublished papers are marked with 💡 and will be updated upon publication. ⭐️ STAR this repo to stay updated!

  - Paper Reviews: Links to OpenReview (if available) are always given. I often learn much more from the reviews, resonate with many of them, and use them to evaluate some rejected papers. (That's why I always like NeurIPS/ICLR papers.)

- Exhaustive Review on Search Workflows

  - A corresponding survey: A Survey on LLM Test-Time Compute via Search: Tasks, LLM Profiling, Search Algorithms, and Relevant Frameworks (an updated paper will be released on 6 Mar 2025)

Other GitHub repositories summarize related papers with less constrained selection criteria:

- AGI-Edgerunners/LLM-Agents-Papers

- zjunlp/LLMAgentPapers

- Paitesanshi/LLM-Agent-Survey

- woooodyy/llm-agent-paper-list

- Autonomous-Agents

Other GitHub repositories summarize related papers focusing on specific perspectives:

- nuster1128/LLM_Agent_Memory_Survey: Focus on memory

- teacherpeterpan/self-correction-llm-papers: Focus on feedback learning (Self Correction)

- git-disl/awesome-LLM-game-agent-papers: Focus on gaming applications

- 🎁 Surveys

- 🚀 Tool Use

- 🧠 Planning

- 🔄 Feedback Learning

- 🧩 Composition

- 🌍 World Modeling

- 📊 Benchmarks

- 📝 Citation

- A Review of Prominent Paradigms for LLM-Based Agents: Tool Use (Including RAG), Planning, and Feedback Learning, CoLing 2025 [paper]

- A Survey on Large Language Model based Autonomous Agents, Frontiers of Computer Science 2024 [paper] | [code]

- Augmented Language Models: a Survey, TMLR [paper]

- Understanding the planning of LLM agents: A survey, arXiv [paper] 💡

- The Rise and Potential of Large Language Model Based Agents: A Survey, arXiv [paper] 💡

- A Survey on the Memory Mechanism of Large Language Model based Agents, arXiv [paper] 💡

- ReAct: Synergizing Reasoning and Acting in Language Models, ICLR 2023 [paper]

- Toolformer: Language Models Can Teach Themselves to Use Tools, NeurIPS 2023 [paper]

- HuggingGPT: Solving AI Tasks with ChatGPT and its Friends in Hugging Face, NeurIPS 2023 [paper]

- API-Bank: A Benchmark for Tool-Augmented LLMs, EMNLP 2023 [paper]

- ToolkenGPT: Augmenting Frozen Language Models with Massive Tools via Tool Embeddings, NeurIPS 2023 [paper]

- MultiTool-CoT: GPT-3 Can Use Multiple External Tools with Chain of Thought Prompting, ACL 2023 [paper]

- ChatCoT: Tool-Augmented Chain-of-Thought Reasoning on Chat-based Large Language Models, EMNLP 2023 [paper]

- ART: Automatic multi-step reasoning and tool-use for large language models, arXiv.2303.09014 [paper] 💡

- TALM: Tool Augmented Language Models, arXiv.2205.12255 [paper] 💡

- On the Tool Manipulation Capability of Open-source Large Language Models, arXiv.2305.16504 [paper] 💡

- Large Language Models as Tool Makers, arXiv.2305.17126 [paper] 💡

- GEAR: Augmenting Language Models with Generalizable and Efficient Tool Resolution, arXiv.2307.08775 [paper] 💡

- ToolLLM: Facilitating Large Language Models to Master 16000+ Real-world APIs, arXiv.2307.16789 [paper] 💡

- Tool Documentation Enables Zero-Shot Tool-Usage with Large Language Models, arXiv.2308.00675 [paper] 💡

- MINT: Evaluating LLMs in Multi-turn Interaction with Tools and Language Feedback, arXiv.2309.10691 [paper] 💡

- Natural Language Embedded Programs for Hybrid Language Symbolic Reasoning, arXiv.2309.10814 [paper] 💡

- On the Planning Abilities of Large Language Models -- A Critical Investigation, NeurIPS 2023 [paper]

Details are in the page (on the way to being published).

- Alphazero-like Tree-Search can guide large language model decoding and training, ICML 2024 [paper]

  - Search Algorithm: MCTS

- Language Agent Tree Search Unifies Reasoning, Acting, and Planning in Language Models, ICML 2024 [paper]

  - Search Algorithm: MCTS

- When is Tree Search Useful for LLM Planning? It Depends on the Discriminator, ACL 2024 [paper]

  - Search Algorithm: MCTS

- Everything of Thoughts: Defying the Law of Penrose Triangle for Thought Generation, ACL findings 2024 [paper]

  - Search Algorithm: MCTS

- Tree-of-Traversals: A Zero-Shot Reasoning Algorithm for Augmenting Black-box Language Models with Knowledge Graphs, ACL 2024 [paper]

  - Search Algorithm: BFS/DFS

- LLM-A*: Large Language Model Enhanced Incremental Heuristic Search on Path Planning, EMNLP findings 2024 [paper] | [code]

  - Search Algorithm: A*

- LLM Reasoners: New Evaluation, Library, and Analysis of Step-by-Step Reasoning with Large Language Models, COLM 2024 [paper] | [code]

- Language Agent Tree Search Unifies Reasoning Acting and Planning in Language Models, arXiv.2310.04406 [paper] 💡

- Large Language Model Guided Tree-of-Thought, arXiv.2305.08291 [paper]💡

- Tree Search for Language Model Agents, Under Review [paper] 💡

  - Search Algorithm: Best-First Search

- Q*: Improving Multi-step Reasoning for LLMs with Deliberative Planning, Under Review [paper] 💡

  - Search Algorithm: A*

- Planning with Large Language Models for Code Generation, ICLR 2023 [paper]

  - Search Algorithm: MCTS

- Tree of Thoughts: Deliberate Problem Solving with Large Language Models, NeurIPS 2023 [paper]

  - Search Algorithm: BFS/DFS

- LLM-MCTS: Large Language Models as Commonsense Knowledge for Large-Scale Task Planning, NeurIPS 2023 [paper] | [code]

  - Search Algorithm: MCTS

- Self-Evaluation Guided Beam Search for Reasoning, NeurIPS 2023 [paper]

  - Search Algorithm: BFS/DFS

- PathFinder: Guided Search over Multi-Step Reasoning Paths, NeurIPS 2023 R0-FoMo [paper]

  - Search Algorithm: Beam Search

- Plan, Verify and Switch: Integrated Reasoning with Diverse X-of-Thoughts, EMNLP 2023 [paper]

- RAP: Reasoning with Language Model is Planning with World Model, EMNLP 2023 [paper]

  - Search Algorithm: MCTS

- Prompt-Based Monte-Carlo Tree Search for Goal-oriented Dialogue Policy Planning, EMNLP 2023 [paper]

  - Search Algorithm: MCTS

- Monte Carlo Thought Search: Large Language Model Querying for Complex Scientific Reasoning in Catalyst Design, EMNLP findings 2023 [paper]

  - Search Algorithm: MCTS

- Agent Q: Advanced Reasoning and Learning for Autonomous AI Agents, arXiv.2408.07199 [paper] 💡

  - Search Algorithm: MCTS

- HuggingGPT: Solving AI Tasks with ChatGPT and its Friends in Hugging Face, NeurIPS 2023 [paper] | [code]

- Least-to-Most Prompting Enables Complex Reasoning in Large Language Models, NeurIPS 2023 [paper]

- Leveraging Pre-trained Large Language Models to Construct and Utilize World Models for Model-based Task Planning, NeurIPS 2023 [paper] | [code]

- On the Planning Abilities of Large Language Models - A Critical Investigation, NeurIPS 2023 [paper] | [code]

- PlanBench: An Extensible Benchmark for Evaluating Large Language Models on Planning and Reasoning about Change, NeurIPS 2023 [paper] | [code]

- LLM+P: Empowering Large Language Models with Optimal Planning Proficiency, arXiv.2304.11477 [paper]💡

- Reflexion: Language Agents with Verbal Reinforcement Learning, NeurIPS 2023 [paper]

- Self-Refine: Iterative Refinement with Self-Feedback, NeurIPS 2023 [paper]

- SelfCheck: Using LLMs to Zero-Shot Check Their Own Step-by-Step Reasoning, ICLR 2024 [paper] | [code]

- Learning From Correctness Without Prompting Makes LLM Efficient Reasoner, COLM 2024 [paper]

- Learning From Mistakes Makes LLM Better Reasoner, arXiv [paper] | [code]💡

- LLM-based Rewriting of Inappropriate Argumentation using Reinforcement Learning from Machine Feedback, ACL 2024 [paper]

- AdaPlanner: Adaptive Planning from Feedback with Language Models, NeurIPS 2023 [paper]

- CRITIC: Large Language Models Can Self-Correct with Tool-Interactive Critiquing, ICLR 2024 [paper]

- ISR-LLM: Iterative Self-Refined Large Language Model for Long-Horizon Sequential Task Planning, arXiv.2308.13724 [paper] 💡

- ToolChain: Efficient Action Space Navigation in Large Language Models with A* Search, ICLR 2024 [paper]

- TPTU: Task Planning and Tool Usage of Large Language Model-based AI Agents, FMDM @ NeurIPS 2023 [paper]

- TPTU-v2: Boosting Task Planning and Tool Usage of Large Language Model-based Agents in Real-world Systems, LLMAgents @ ICLR 2024 [paper]

- Can Language Models Serve as Text-Based World Simulators?, ACL 2024 [paper] | [code]

- Making Large Language Models into World Models with Precondition and Effect Knowledge, arXiv [paper] 💡

- Leveraging Pre-trained Large Language Models to Construct and Utilize World Models for Model-based Task Planning, NeurIPS 2023 [paper] | [code]

- ByteSized32: A Corpus and Challenge Task for Generating Task-Specific World Models Expressed as Text Games, EMNLP 2023 [paper] | [code]

- MetaTool Benchmark: Deciding Whether to Use Tools and Which to Use, arXiv.2310.03128 [paper] 💡

- TaskBench: Benchmarking Large Language Models for Task Automation, arXiv.2311.18760 [paper] 💡

- Large Language Models Still Can't Plan (A Benchmark for LLMs on Planning and Reasoning about Change), NeurIPS 2023 [paper]

If you find our work helpful, you can cite this paper as:

@inproceedings{li2024review,
  title={A Review of Prominent Paradigms for LLM-Based Agents: Tool Use (Including RAG), Planning, and Feedback Learning},
  author={Li, Xinzhe},
  booktitle = "Proceedings of the 31st International Conference on Computational Linguistics",
  year = "2025",
}

@article{li2025survey,
  title={A Survey on LLM Test-Time Compute via Search: Tasks, LLM Profiling, Search Algorithms, and Relevant Frameworks},
  author={Li, Xinzhe},
  journal={arXiv preprint arXiv:2501.10069},
  year={2025}
}

Click tags to check more tools for each tasksFor Jobs:

Alternative AI tools for LLM-Agent-Survey

Similar Open Source Tools

LLM-Agent-Survey

LLM-Agent-Survey is a comprehensive repository that provides a curated list of papers related to Large Language Model (LLM) agents. The repository categorizes papers based on LLM-Profiled Roles and includes high-quality publications from prestigious conferences and journals. It aims to offer a systematic understanding of LLM-based agents, covering topics such as tool use, planning, and feedback learning. The repository also includes unpublished papers with insightful analysis and novelty, marked for future updates. Users can explore a wide range of surveys, tool use cases, planning workflows, and benchmarks related to LLM agents.

LLM-Tool-Survey

This repository contains a collection of papers related to tool learning with large language models (LLMs). The papers are organized according to the survey paper 'Tool Learning with Large Language Models: A Survey'. The survey focuses on the benefits and implementation of tool learning with LLMs, covering aspects such as task planning, tool selection, tool calling, response generation, benchmarks, evaluation, challenges, and future directions in the field. It aims to provide a comprehensive understanding of tool learning with LLMs and inspire further exploration in this emerging area.

rllm

rLLM (relationLLM) is a Pytorch library for Relational Table Learning (RTL) with LLMs. It breaks down state-of-the-art GNNs, LLMs, and TNNs as standardized modules and facilitates novel model building in a 'combine, align, and co-train' way using these modules. The library is LLM-friendly, processes various graphs as multiple tables linked by foreign keys, introduces new relational table datasets, and is supported by students and teachers from Shanghai Jiao Tong University and Tsinghua University.

ABigSurveyOfLLMs

ABigSurveyOfLLMs is a repository that compiles surveys on Large Language Models (LLMs) to provide a comprehensive overview of the field. It includes surveys on various aspects of LLMs such as transformers, alignment, prompt learning, data management, evaluation, societal issues, safety, misinformation, attributes of LLMs, efficient LLMs, learning methods for LLMs, multimodal LLMs, knowledge-based LLMs, extension of LLMs, LLMs applications, and more. The repository aims to help individuals quickly understand the advancements and challenges in the field of LLMs through a collection of recent surveys and research papers.

Awesome-LLM-in-Social-Science

Awesome-LLM-in-Social-Science is a repository that compiles papers evaluating Large Language Models (LLMs) from a social science perspective. It includes papers on evaluating, aligning, and simulating LLMs, as well as enhancing tools in social science research. The repository categorizes papers based on their focus on attitudes, opinions, values, personality, morality, and more. It aims to contribute to discussions on the potential and challenges of using LLMs in social science research.

LLM-on-Tabular-Data-Prediction-Table-Understanding-Data-Generation

This repository serves as a comprehensive survey on the application of Large Language Models (LLMs) on tabular data, focusing on tasks such as prediction, data generation, and table understanding. It aims to consolidate recent progress in this field by summarizing key techniques, metrics, datasets, models, and optimization approaches. The survey identifies strengths, limitations, unexplored territories, and gaps in the existing literature, providing insights for future research directions. It also offers code and dataset references to empower readers with the necessary tools and knowledge to address challenges in this rapidly evolving domain.

MedLLMsPracticalGuide

This repository serves as a practical guide for Medical Large Language Models (Medical LLMs) and provides resources, surveys, and tools for building, fine-tuning, and utilizing LLMs in the medical domain. It covers a wide range of topics including pre-training, fine-tuning, downstream biomedical tasks, clinical applications, challenges, future directions, and more. The repository aims to provide insights into the opportunities and challenges of LLMs in medicine and serve as a practical resource for constructing effective medical LLMs.

ai-agent-papers

The AI Agents Papers repository provides a curated collection of papers focusing on AI agents, covering topics such as agent capabilities, applications, architectures, and presentations. It includes a variety of papers on ideation, decision making, long-horizon tasks, learning, memory-based agents, self-evolving agents, and more. The repository serves as a valuable resource for researchers and practitioners interested in AI agent technologies and advancements.

awesome-tool-llm

This repository focuses on exploring tools that enhance the performance of language models for various tasks. It provides a structured list of literature relevant to tool-augmented language models, covering topics such as tool basics, tool use paradigm, scenarios, advanced methods, and evaluation. The repository includes papers, preprints, and books that discuss the use of tools in conjunction with language models for tasks like reasoning, question answering, mathematical calculations, accessing knowledge, interacting with the world, and handling non-textual modalities.

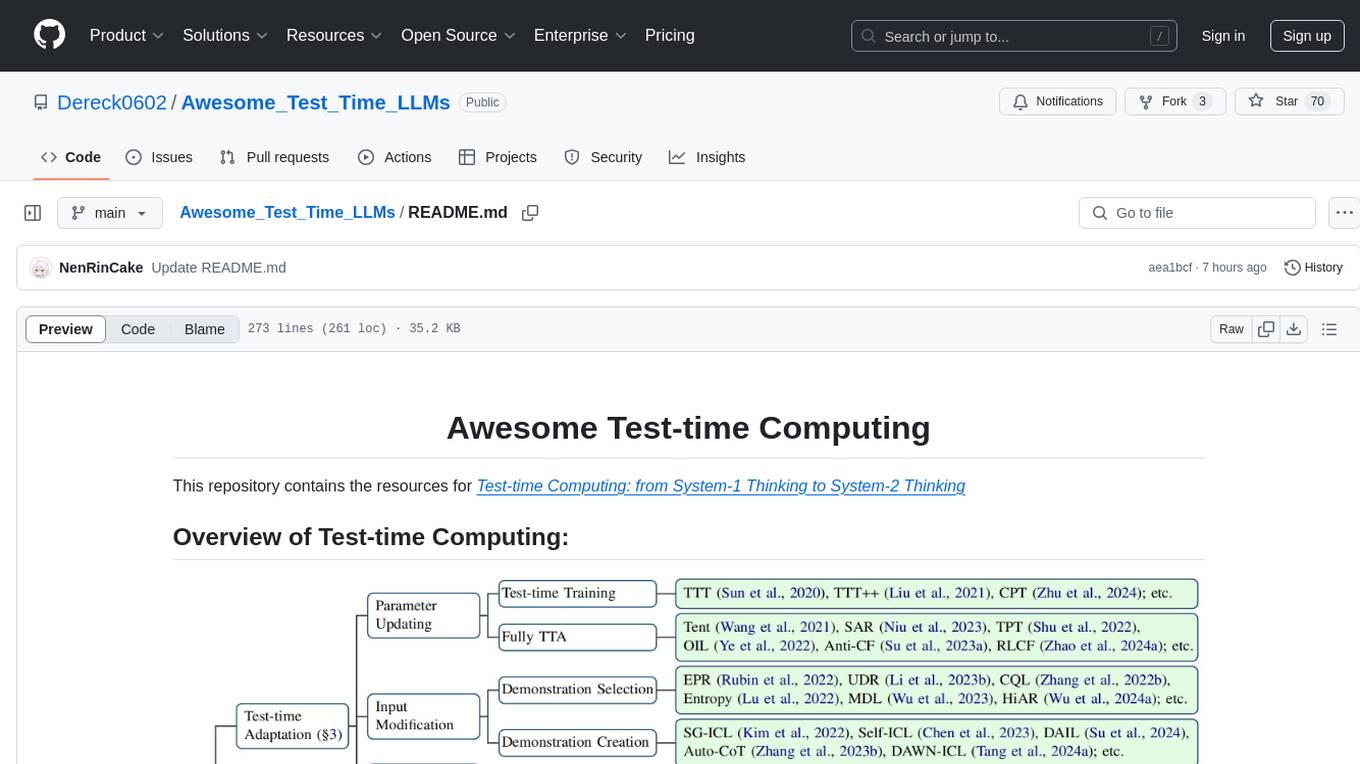

Awesome_Test_Time_LLMs

This repository focuses on test-time computing, exploring various strategies such as test-time adaptation, modifying the input, editing the representation, calibrating the output, test-time reasoning, and search strategies. It covers topics like self-supervised test-time training, in-context learning, activation steering, nearest neighbor models, reward modeling, and multimodal reasoning. The repository provides resources including papers and code for researchers and practitioners interested in enhancing the reasoning capabilities of large language models.

DriveLM

DriveLM is a multimodal AI model that enables autonomous driving by combining computer vision and natural language processing. It is designed to understand and respond to complex driving scenarios using visual and textual information. DriveLM can perform various tasks related to driving, such as object detection, lane keeping, and decision-making. It is trained on a massive dataset of images and text, which allows it to learn the relationships between visual cues and driving actions. DriveLM is a powerful tool that can help to improve the safety and efficiency of autonomous vehicles.

GPTSwarm

GPTSwarm is a graph-based framework for LLM-based agents that enables the creation of LLM-based agents from graphs and facilitates the customized and automatic self-organization of agent swarms with self-improvement capabilities. The library includes components for domain-specific operations, graph-related functions, LLM backend selection, memory management, and optimization algorithms to enhance agent performance and swarm efficiency. Users can quickly run predefined swarms or utilize tools like the file analyzer. GPTSwarm supports local LM inference via LM Studio, allowing users to run with a local LLM model. The framework has been accepted by ICML2024 and offers advanced features for experimentation and customization.

llms-interview-questions

This repository contains a comprehensive collection of 63 must-know Large Language Models (LLMs) interview questions. It covers topics such as the architecture of LLMs, transformer models, attention mechanisms, training processes, encoder-decoder frameworks, differences between LLMs and traditional statistical language models, handling context and long-term dependencies, transformers for parallelization, applications of LLMs, sentiment analysis, language translation, conversation AI, chatbots, and more. The readme provides detailed explanations, code examples, and insights into utilizing LLMs for various tasks.

MM-RLHF

MM-RLHF is a comprehensive project for aligning Multimodal Large Language Models (MLLMs) with human preferences. It includes a high-quality MLLM alignment dataset, a Critique-Based MLLM reward model, a novel alignment algorithm MM-DPO, and benchmarks for reward models and multimodal safety. The dataset covers image understanding, video understanding, and safety-related tasks with model-generated responses and human-annotated scores. The reward model generates critiques of candidate texts before assigning scores for enhanced interpretability. MM-DPO is an alignment algorithm that achieves performance gains with simple adjustments to the DPO framework. The project enables consistent performance improvements across 10 dimensions and 27 benchmarks for open-source MLLMs.

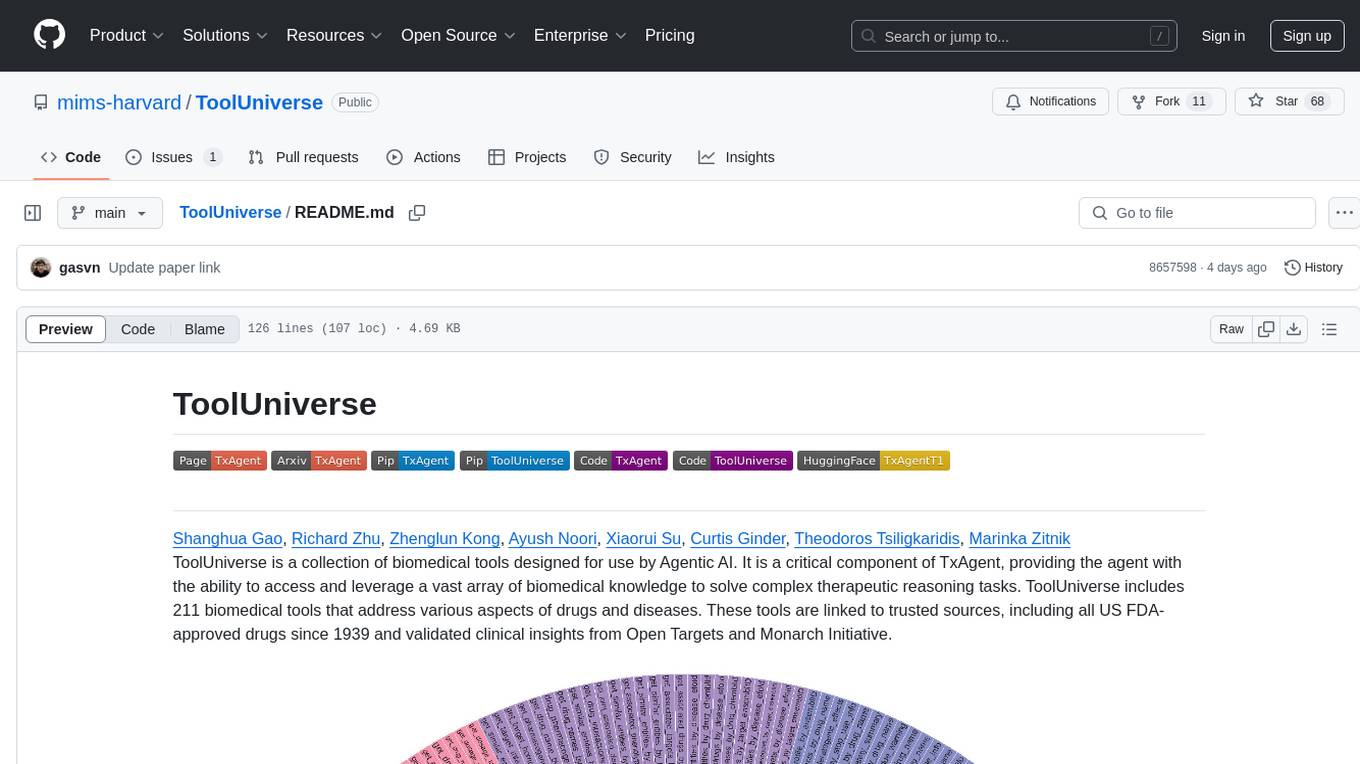

ToolUniverse

ToolUniverse is a collection of 211 biomedical tools designed for Agentic AI, providing access to biomedical knowledge for solving therapeutic reasoning tasks. The tools cover various aspects of drugs and diseases, linked to trusted sources like US FDA-approved drugs since 1939, Open Targets, and Monarch Initiative.

tensorzero

TensorZero is an open-source platform that helps LLM applications graduate from API wrappers into defensible AI products. It enables a data & learning flywheel for LLMs by unifying inference, observability, optimization, and experimentation. The platform includes a high-performance model gateway, structured schema-based inference, observability, experimentation, and data warehouse for analytics. TensorZero Recipes optimize prompts and models, and the platform supports experimentation features and GitOps orchestration for deployment.

For similar tasks

Awesome-LLM-RAG

This repository, Awesome-LLM-RAG, aims to record advanced papers on Retrieval Augmented Generation (RAG) in Large Language Models (LLMs). It serves as a resource hub for researchers interested in promoting their work related to LLM RAG by updating paper information through pull requests. The repository covers various topics such as workshops, tutorials, papers, surveys, benchmarks, retrieval-enhanced LLMs, RAG instruction tuning, RAG in-context learning, RAG embeddings, RAG simulators, RAG search, RAG long-text and memory, RAG evaluation, RAG optimization, and RAG applications.

Awesome_LLM_System-PaperList

Since the emergence of chatGPT in 2022, the acceleration of Large Language Model has become increasingly important. Here is a list of papers on LLMs inference and serving.

LLM-Tool-Survey

This repository contains a collection of papers related to tool learning with large language models (LLMs). The papers are organized according to the survey paper 'Tool Learning with Large Language Models: A Survey'. The survey focuses on the benefits and implementation of tool learning with LLMs, covering aspects such as task planning, tool selection, tool calling, response generation, benchmarks, evaluation, challenges, and future directions in the field. It aims to provide a comprehensive understanding of tool learning with LLMs and inspire further exploration in this emerging area.

Awesome-CVPR2024-ECCV2024-AIGC

A Collection of Papers and Codes for CVPR 2024 AIGC. This repository compiles and organizes research papers and code related to CVPR 2024 and ECCV 2024 AIGC (Artificial Intelligence and Graphics Computing). It serves as a valuable resource for individuals interested in the latest advancements in the field of computer vision and artificial intelligence. Users can find a curated list of papers and accompanying code repositories for further exploration and research. The repository encourages collaboration and contributions from the community through stars, forks, and pull requests.

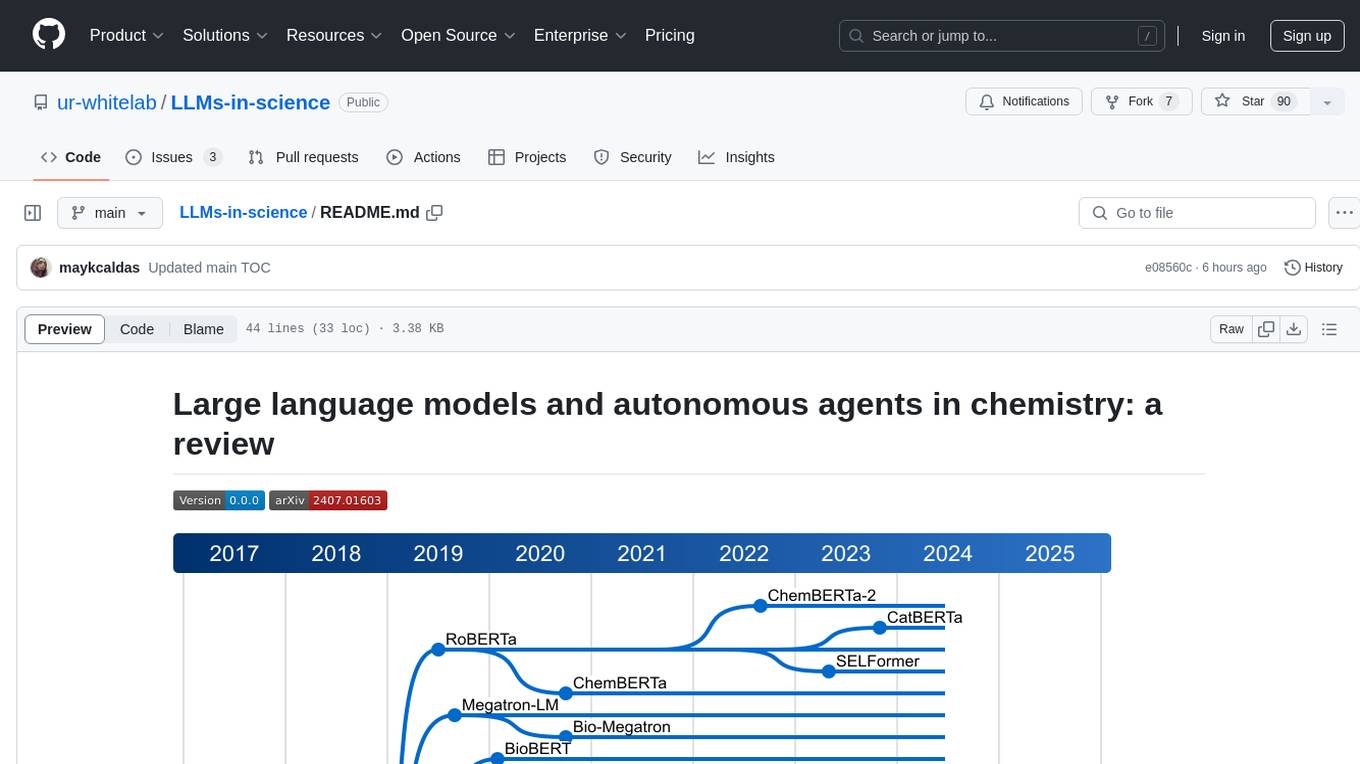

LLMs-in-science

The 'LLMs-in-science' repository is a collaborative environment for organizing papers related to large language models (LLMs) and autonomous agents in the field of chemistry. The goal is to discuss trend topics, challenges, and the potential for supporting scientific discovery in the context of artificial intelligence. The repository aims to maintain a systematic structure of the field and welcomes contributions from the community to keep the content up-to-date and relevant.

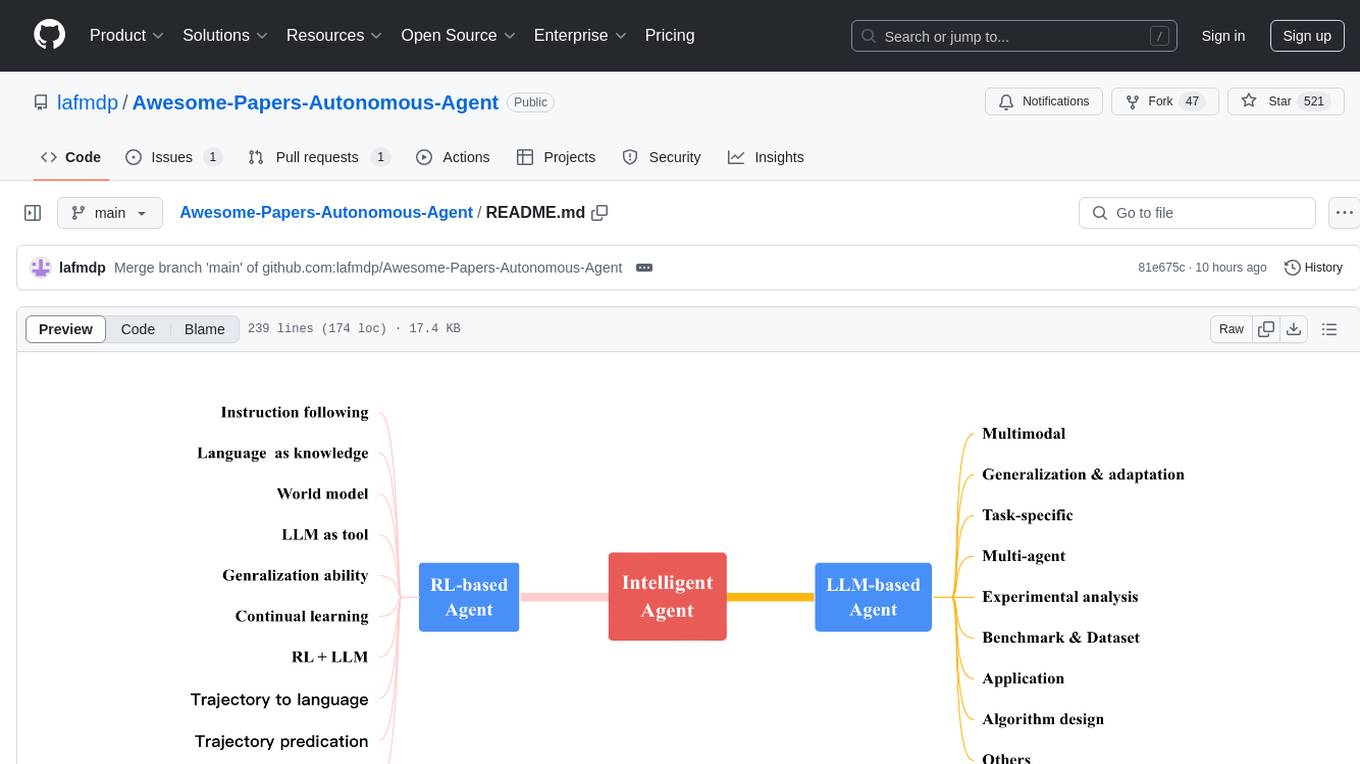

Awesome-Papers-Autonomous-Agent

Awesome-Papers-Autonomous-Agent is a curated collection of recent papers focusing on autonomous agents, specifically interested in RL-based agents and LLM-based agents. The repository aims to provide a comprehensive resource for researchers and practitioners interested in intelligent agents that can achieve goals, acquire knowledge, and continually improve. The collection includes papers on various topics such as instruction following, building agents based on world models, using language as knowledge, leveraging LLMs as a tool, generalization across tasks, continual learning, combining RL and LLM, transformer-based policies, trajectory to language, trajectory prediction, multimodal agents, training LLMs for generalization and adaptation, task-specific designing, multi-agent systems, experimental analysis, benchmarking, applications, algorithm design, and combining with RL.

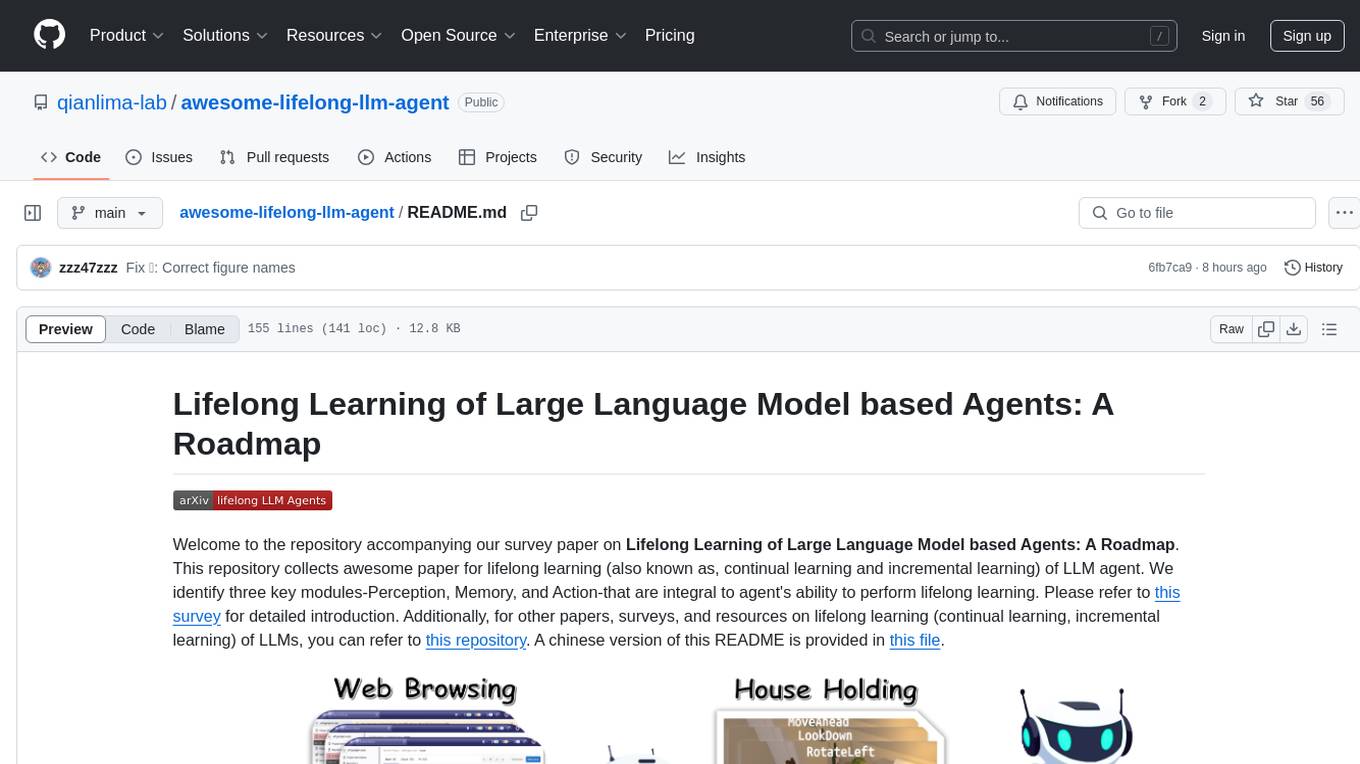

awesome-lifelong-llm-agent

This repository is a collection of papers and resources related to Lifelong Learning of Large Language Model (LLM) based Agents. It focuses on continual learning and incremental learning of LLM agents, identifying key modules such as Perception, Memory, and Action. The repository serves as a roadmap for understanding lifelong learning in LLM agents and provides a comprehensive overview of related research and surveys.

LLM-Agent-Survey

LLM-Agent-Survey is a comprehensive repository that provides a curated list of papers related to Large Language Model (LLM) agents. The repository categorizes papers based on LLM-Profiled Roles and includes high-quality publications from prestigious conferences and journals. It aims to offer a systematic understanding of LLM-based agents, covering topics such as tool use, planning, and feedback learning. The repository also includes unpublished papers with insightful analysis and novelty, marked for future updates. Users can explore a wide range of surveys, tool use cases, planning workflows, and benchmarks related to LLM agents.

For similar jobs

weave

Weave is a toolkit for developing Generative AI applications, built by Weights & Biases. With Weave, you can log and debug language model inputs, outputs, and traces; build rigorous, apples-to-apples evaluations for language model use cases; and organize all the information generated across the LLM workflow, from experimentation to evaluations to production. Weave aims to bring rigor, best-practices, and composability to the inherently experimental process of developing Generative AI software, without introducing cognitive overhead.

LLMStack

LLMStack is a no-code platform for building generative AI agents, workflows, and chatbots. It allows users to connect their own data, internal tools, and GPT-powered models without any coding experience. LLMStack can be deployed to the cloud or on-premise and can be accessed via HTTP API or triggered from Slack or Discord.

VisionCraft

The VisionCraft API is a free API for using over 100 different AI models. From images to sound.

kaito

Kaito is an operator that automates the AI/ML inference model deployment in a Kubernetes cluster. It manages large model files using container images, avoids tuning deployment parameters to fit GPU hardware by providing preset configurations, auto-provisions GPU nodes based on model requirements, and hosts large model images in the public Microsoft Container Registry (MCR) if the license allows. Using Kaito, the workflow of onboarding large AI inference models in Kubernetes is largely simplified.

PyRIT

PyRIT is an open access automation framework designed to empower security professionals and ML engineers to red team foundation models and their applications. It automates AI Red Teaming tasks to allow operators to focus on more complicated and time-consuming tasks and can also identify security harms such as misuse (e.g., malware generation, jailbreaking), and privacy harms (e.g., identity theft). The goal is to allow researchers to have a baseline of how well their model and entire inference pipeline is doing against different harm categories and to be able to compare that baseline to future iterations of their model. This allows them to have empirical data on how well their model is doing today, and detect any degradation of performance based on future improvements.

tabby

Tabby is a self-hosted AI coding assistant, offering an open-source and on-premises alternative to GitHub Copilot. It boasts several key features: * Self-contained, with no need for a DBMS or cloud service. * OpenAPI interface, easy to integrate with existing infrastructure (e.g Cloud IDE). * Supports consumer-grade GPUs.

spear

SPEAR (Simulator for Photorealistic Embodied AI Research) is a powerful tool for training embodied agents. It features 300 unique virtual indoor environments with 2,566 unique rooms and 17,234 unique objects that can be manipulated individually. Each environment is designed by a professional artist and features detailed geometry, photorealistic materials, and a unique floor plan and object layout. SPEAR is implemented as Unreal Engine assets and provides an OpenAI Gym interface for interacting with the environments via Python.

Magick

Magick is a groundbreaking visual AIDE (Artificial Intelligence Development Environment) for no-code data pipelines and multimodal agents. Magick can connect to other services and comes with nodes and templates well-suited for intelligent agents, chatbots, complex reasoning systems and realistic characters.