Everything-LLMs-And-Robotics

The world's largest GitHub Repository for LLMs + Robotics

Stars: 718

The Everything-LLMs-And-Robotics repository is the world's largest GitHub repository focusing on the intersection of Large Language Models (LLMs) and Robotics. It provides educational resources, research papers, project demos, and Twitter threads related to LLMs, Robotics, and their combination. The repository covers topics such as reasoning, planning, manipulation, instructions and navigation, simulation frameworks, perception, and more, showcasing the latest advancements in the field.

README:

The world's largest GitHub Repository for the intersection of LLMs (multimodal included!) + Robotics

Heavily Inspired by Awesome-LLM-Robotics

If you want to make a change to this repository, click here.

Why I made this: Go here.

- Education: LLMs

- Education: Robotics

- Education: LLMs + Robotics

- Research: Reasoning

- Research: Planning

- Research: Manipulation

- Research: Instructions and Navigation

- Research: Simulation Frameworks

- Research: Perception

- Project Demos

- Thoughtful Twitter Threads

- Citation

- START HERE: "Transformers from Scratch", Brandon Rohrer, [Website]

- Stanford Transformers Class: "CS25: Transformers United", Stanford, 2022, [Website]

- Andrej Karpathy GPT Tutorial: "Let's build GPT: from scratch, in code, spelled out.", Andrej Karpathy, 2023, [YouTube Video]

- AI-Enabled Robotics Class: "CS199: Stanford Robotics Independent Study", Stanford, 2023, [Website]

- Google's 2022 Research: "Google Research, 2022 & beyond: Robotics", Google, 2023, [Website]

- Controlling Robots Via Large Language Models: "Controlling Robots Via Large Language Models", Sanjiban Choudhury, CS 4756/5756, Cornell, 2023, [Slides]

- AutoTAMP: "AutoTAMP: Autoregressive Task and Motion Planning with LLMs as Translators and Checkers", arXiv, June 2023. [Paper]

- LLM Designs Robots: "Can Large Language Models Design a Robot?", arXiv, Mar 2023. [Paper]

- PaLM-E: "PaLM-E: An Embodied Multimodal Language Model", arXiv, Mar 2023. [Paper] [Website] [Demo]

- RT-1: "RT-1: Robotics Transformer for Real-World Control at Scale", arXiv, Dec 2022. [Paper] [Code] [Website]

- ProgPrompt: "Generating Situated Robot Task Plans using Large Language Models", arXiv, Sept 2022. [Paper] [Code Doesn't Really Exist here] [Website]

- Code-As-Policies: "Code as Policies: Language Model Programs for Embodied Control", arXiv, Sept 2022. [Paper] [Code] [Website]

- Say-Can: "Do As I Can, Not As I Say: Grounding Language in Robotic Affordances", arXiv, Apr 2022. [Paper] [Code] [Website]

- Socratic: "Socratic Models: Composing Zero-Shot Multimodal Reasoning with Language", arXiv, Apr 2022. [Paper] [Code] [Website]

- PIGLeT: "PIGLeT: Language Grounding Through Neuro-Symbolic Interaction in a 3D World", ACL, Jun 2021. [Paper] [Code] [Website]

- LLM-GROP: "Task and Motion Planning with Large Language Models for Object Rearrangement", arXiv, Mar 2023. [Paper]

- Bio Lab Task Planning: "LLMs can generate robotic scripts from goal-oriented instructions in biological laboratory automation", arXiv, April 2023. [Paper]

- PromptCraft Robotics: "ChatGPT for Robotics: Design Principles and Model Abilities", Microsoft, 2023. [Paper] [Website] [Code]

- CLAIRify: "Errors are Useful Prompts: Instruction Guided Task Programming with Verifier-Assisted Iterative Prompting", arXiv, March 2023. [Paper] [Code] [Website]

- LM-Nav: "Robotic Navigation with Large Pre-Trained Models of Language, Vision, and Action", arXiv, July 2022. [Paper] [PyTorch Code] [Website]

- InnerMonologue: "Inner Monologue: Embodied Reasoning through Planning with Language Models", arXiv, July 2022. [Paper] [Website]

- Housekeep: "Housekeep: Tidying Virtual Households using Commonsense Reasoning", arXiv, May 2022. [Paper] [PyTorch Code] [Website]

- LID: "Pre-Trained Language Models for Interactive Decision-Making", arXiv, Feb 2022. [Paper] [PyTorch Code] [Website]

- ZSP: "Language Models as Zero-Shot Planners: Extracting Actionable Knowledge for Embodied Agents", ICML, Jan 2022. [Paper] [PyTorch Code] [Website]

- MOO: "Open-World Object Manipulation using Pre-trained Vision-Language Models", arXiv, March 2023. [Paper] [Website]

- TidyBot: "TidyBot: Personalized Robot Assistance with Large Language Models", arXiv, May 2023. [Paper] [Website]

- DIAL: "Robotic Skill Acquisition via Instruction Augmentation with Vision-Language Models", arXiv, Nov 2022. [Paper] [Website]

- CLIP-Fields: "CLIP-Fields: Weakly Supervised Semantic Fields for Robotic Memory", arXiv, Oct 2022. [Paper] [PyTorch Code] [Website]

- VIMA: "VIMA: General Robot Manipulation with Multimodal Prompts", arXiv, Oct 2022. [Paper] [PyTorch Code] [Website]

- Perceiver-Actor: "A Multi-Task Transformer for Robotic Manipulation", CoRL, Sep 2022. [Paper] [PyTorch Code] [Website]

- LaTTe: "LaTTe: Language Trajectory TransformEr", arXiv, Aug 2022. [Paper] [TensorFlow Code] [Website]

- Robots Enact Malignant Stereotypes: "Robots Enact Malignant Stereotypes", FAccT, Jun 2022. [Paper] [Website] [Washington Post] [Wired] (code access on request)

- ATLA: "Leveraging Language for Accelerated Learning of Tool Manipulation", CoRL, Jun 2022. [Paper]

- ZeST: "Can Foundation Models Perform Zero-Shot Task Specification For Robot Manipulation?", L4DC, Apr 2022. [Paper]

- LSE-NGU: "Semantic Exploration from Language Abstractions and Pretrained Representations", arXiv, Apr 2022. [Paper]

- Embodied-CLIP: "Simple but Effective: CLIP Embeddings for Embodied AI", CVPR, Nov 2021. [Paper] [PyTorch Code]

- CLIPort: "CLIPort: What and Where Pathways for Robotic Manipulation", CoRL, Sept 2021. [Paper] [PyTorch Code] [Website]

- Text2Motion: "Text2Motion: From Natural Language Instructions to Feasible Plans", arXiv, Mar 2023. [Paper]

- ChatGPT Robot Collaboration: "Improved Trust in Human-Robot Collaboration with ChatGPT", arXiv, April 2023. [Paper]

- ADAPT: "ADAPT: Vision-Language Navigation with Modality-Aligned Action Prompts", CVPR, May 2022. [Paper]

- Pre-Trained Vision Models for Control: "The Unsurprising Effectiveness of Pre-Trained Vision Models for Control", ICML, Mar 2022. [Paper] [PyTorch Code] [Website]

- CoW: "CLIP on Wheels: Zero-Shot Object Navigation as Object Localization and Exploration", arXiv, Mar 2022. [Paper]

- Recurrent VLN-BERT: "A Recurrent Vision-and-Language BERT for Navigation", CVPR, Jun 2021. [Paper] [PyTorch Code]

- VLN-BERT: "Improving Vision-and-Language Navigation with Image-Text Pairs from the Web", ECCV, Apr 2020. [Paper] [PyTorch Code]

- Interactive Language: "Interactive Language: Talking to Robots in Real Time", arXiv, Oct 2022. [Paper] [Website]

- MineDojo: "MineDojo: Building Open-Ended Embodied Agents with Internet-Scale Knowledge", arXiv, Jun 2022. [Paper] [Code] [Website] [Open Database]

- Habitat 2.0: "Habitat 2.0: Training Home Assistants to Rearrange their Habitat", NeurIPS, Dec 2021. [Paper] [Code] [Website]

- BEHAVIOR: "BEHAVIOR: Benchmark for Everyday Household Activities in Virtual, Interactive, and Ecological Environments", CoRL, Nov 2021. [Paper] [Code] [Website]

- iGibson 1.0: "iGibson 1.0: a Simulation Environment for Interactive Tasks in Large Realistic Scenes", IROS, Sep 2021. [Paper] [Code] [Website]

- ALFRED: "ALFRED: A Benchmark for Interpreting Grounded Instructions for Everyday Tasks", CVPR, Jun 2020. [Paper] [Code] [Website]

- BabyAI: "BabyAI: A Platform to Study the Sample Efficiency of Grounded Language Learning", ICLR, May 2019. [Paper] [Code]

- Matcha agent: "Chat with the Environment: Interactive Multimodal Perception Using Large Language Models", IROS 2023. [Paper] [Poster] [Code] [Video] [Website]

- LGX: "Can an Embodied Agent Find Your "Cat-shaped Mug"? LLM-Based Zero-Shot Object Navigation", arXiv, Mar 2023. [Paper]

- Robots Acquire Skills With VLMs: "Robotic Skill Acquisition via Instruction Augmentation with Vision-Language Models", arXiv, Nov 2022. [Paper]

- From Occlusion to Insight: "From Occlusion to Insight: Object Search in Semantic Shelves using Large Language Models", arXiv, Feb 2023. [Paper]

- RobotGPT Pt.2: "Twitter Video Of Voice-Input LLM-Powered Robot Arm", Orangewood Labs, 2023, [Video]

- SPOT GPT: "Boston Dynamics Integration of ChatGPT into SPOT Robot", Boston Dynamics, 2023, [Video]

- RobotGPT: "Orangewood Labs RoboGPT Demo", Orangewood Labs, 2023, [Video]

- Mona: "Vitruvian Works Robot Demonstration", Vitruvian Works, 2023, [Video]

- Ameca: "Ameca Expressions with GPT-3 / 4", Engineered Arts, 2023, [Video]

- Sarcastic Robot: "Sarcastic Robot powered by GPT-4", Gabrael Levine (Hackathon Project), 2023, [Video]

- DroneFormer: "DroneFormer: Controlling UAVs with natural language!", Brian Wu (Hackathon Project), Stanford University, 2023, [Video]

- Bitter Lesson 2.0: @hausman_k, 2023 [Thread]

If you find this repository useful, please consider citing this list:

@misc{rintamaki2023everythingllmsandroboticsrepo,
  title={Everything-LLMs-And-Robotics},
  author={Jacob Rintamaki},
  journal={GitHub repository},
  url={https://github.com/jrin771/Everything-LLMs-And-Robotics},
  year={2023},
}


Alternative AI tools for Everything-LLMs-And-Robotics

Similar Open Source Tools

Everything-LLMs-And-Robotics

The Everything-LLMs-And-Robotics repository is the world's largest GitHub repository focusing on the intersection of Large Language Models (LLMs) and Robotics. It provides educational resources, research papers, project demos, and Twitter threads related to LLMs, Robotics, and their combination. The repository covers topics such as reasoning, planning, manipulation, instructions and navigation, simulation frameworks, perception, and more, showcasing the latest advancements in the field.

Awesome-LLM-Robotics

This repository contains a curated list of **papers using Large Language/Multi-Modal Models for Robotics/RL**. Template from awesome-Implicit-NeRF-Robotics Please feel free to send me pull requests or email to add papers! If you find this repository useful, please consider citing and STARing this list. Feel free to share this list with others! ## Overview * Surveys * Reasoning * Planning * Manipulation * Instructions and Navigation * Simulation Frameworks * Citation

Awesome-Robotics-3D

Awesome-Robotics-3D is a curated list of 3D Vision papers related to Robotics domain, focusing on large models like LLMs/VLMs. It includes papers on Policy Learning, Pretraining, VLM and LLM, Representations, and Simulations, Datasets, and Benchmarks. The repository is maintained by Zubair Irshad and welcomes contributions and suggestions for adding papers. It serves as a valuable resource for researchers and practitioners in the field of Robotics and Computer Vision.

Paper-Reading-ConvAI

Paper-Reading-ConvAI is a repository that contains a list of papers, datasets, and resources related to Conversational AI, mainly encompassing dialogue systems and natural language generation. This repository is constantly updating.

Awesome-Quantization-Papers

This repo contains a comprehensive paper list of **Model Quantization** for efficient deep learning on AI conferences/journals/arXiv. As a highlight, we categorize the papers in terms of model structures and application scenarios, and label the quantization methods with keywords.

ai-agent-papers

The AI Agents Papers repository provides a curated collection of papers focusing on AI agents, covering topics such as agent capabilities, applications, architectures, and presentations. It includes a variety of papers on ideation, decision making, long-horizon tasks, learning, memory-based agents, self-evolving agents, and more. The repository serves as a valuable resource for researchers and practitioners interested in AI agent technologies and advancements.

LLM-Tool-Survey

This repository contains a collection of papers related to tool learning with large language models (LLMs). The papers are organized according to the survey paper 'Tool Learning with Large Language Models: A Survey'. The survey focuses on the benefits and implementation of tool learning with LLMs, covering aspects such as task planning, tool selection, tool calling, response generation, benchmarks, evaluation, challenges, and future directions in the field. It aims to provide a comprehensive understanding of tool learning with LLMs and inspire further exploration in this emerging area.

ABigSurveyOfLLMs

ABigSurveyOfLLMs is a repository that compiles surveys on Large Language Models (LLMs) to provide a comprehensive overview of the field. It includes surveys on various aspects of LLMs such as transformers, alignment, prompt learning, data management, evaluation, societal issues, safety, misinformation, attributes of LLMs, efficient LLMs, learning methods for LLMs, multimodal LLMs, knowledge-based LLMs, extension of LLMs, LLMs applications, and more. The repository aims to help individuals quickly understand the advancements and challenges in the field of LLMs through a collection of recent surveys and research papers.

MedLLMsPracticalGuide

This repository serves as a practical guide for Medical Large Language Models (Medical LLMs) and provides resources, surveys, and tools for building, fine-tuning, and utilizing LLMs in the medical domain. It covers a wide range of topics including pre-training, fine-tuning, downstream biomedical tasks, clinical applications, challenges, future directions, and more. The repository aims to provide insights into the opportunities and challenges of LLMs in medicine and serve as a practical resource for constructing effective medical LLMs.

unilm

The 'unilm' repository is a collection of tools, models, and architectures for Foundation Models and General AI, focusing on tasks such as NLP, MT, Speech, Document AI, and Multimodal AI. It includes various pre-trained models, such as UniLM, InfoXLM, DeltaLM, MiniLM, AdaLM, BEiT, LayoutLM, WavLM, VALL-E, and more, designed for tasks like language understanding, generation, translation, vision, speech, and multimodal processing. The repository also features toolkits like s2s-ft for sequence-to-sequence fine-tuning and Aggressive Decoding for efficient sequence-to-sequence decoding. Additionally, it offers applications like TrOCR for OCR, LayoutReader for reading order detection, and XLM-T for multilingual NMT.

univer

Univer is an isomorphic full-stack framework designed for creating and editing spreadsheets, documents, and slides across web and server. It is highly extensible, high-performance, and can be embedded into applications. Univer offers a wide range of features including formulas, conditional formatting, data validation, collaborative editing, printing, import & export, and more. It supports multiple languages and provides a distraction-free editing experience with a clean interface. Univer is suitable for data analysts, software developers, project managers, content creators, and educators.

awesome_LLM-harmful-fine-tuning-papers

This repository is a comprehensive survey of harmful fine-tuning attacks and defenses for large language models (LLMs). It provides a curated list of must-read papers on the topic, covering various aspects such as alignment stage defenses, fine-tuning stage defenses, post-fine-tuning stage defenses, mechanical studies, benchmarks, and attacks/defenses for federated fine-tuning. The repository aims to keep researchers updated on the latest developments in the field and offers insights into the vulnerabilities and safeguards related to fine-tuning LLMs.

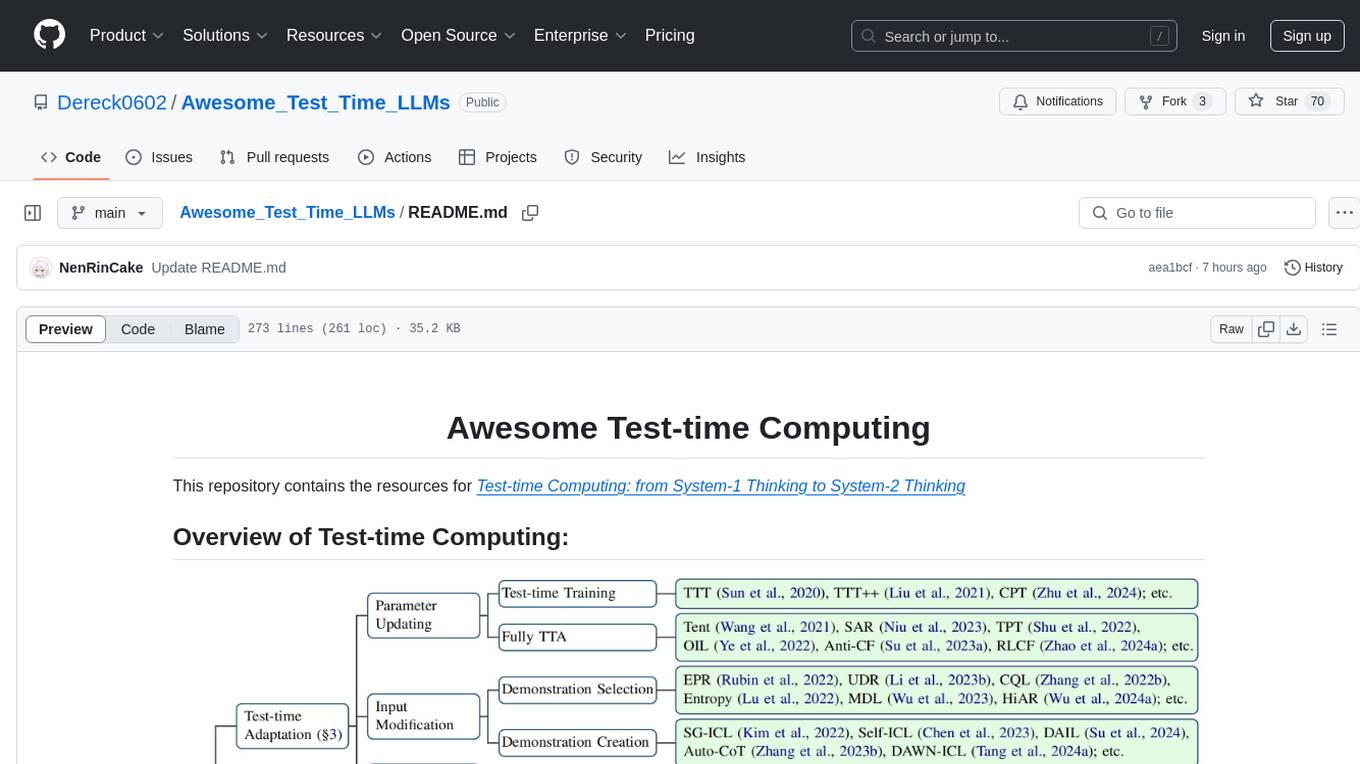

Awesome_Test_Time_LLMs

This repository focuses on test-time computing, exploring various strategies such as test-time adaptation, modifying the input, editing the representation, calibrating the output, test-time reasoning, and search strategies. It covers topics like self-supervised test-time training, in-context learning, activation steering, nearest neighbor models, reward modeling, and multimodal reasoning. The repository provides resources including papers and code for researchers and practitioners interested in enhancing the reasoning capabilities of large language models.

LearnPrompt

LearnPrompt is a permanent, free, open-source AIGC course platform that currently supports various tools like ChatGPT, Agent, Midjourney, Runway, Stable Diffusion, AI digital humans, AI voice & music, and large model fine-tuning. The platform offers features such as multilingual support, comment sections, daily selections, and submissions. Users can explore different modules, including sound cloning, RAG, GPT-SoVits, and OpenAI Sora world model. The platform aims to continuously update and provide tutorials, examples, and knowledge systems related to AI technologies.

llm-misinformation-survey

The 'llm-misinformation-survey' repository is dedicated to the survey on combating misinformation in the age of Large Language Models (LLMs). It explores the opportunities and challenges of utilizing LLMs to combat misinformation, providing insights into the history of combating misinformation, current efforts, and future outlook. The repository serves as a resource hub for the initiative 'LLMs Meet Misinformation' and welcomes contributions of relevant research papers and resources. The goal is to facilitate interdisciplinary efforts in combating LLM-generated misinformation and promoting the responsible use of LLMs in fighting misinformation.

For similar tasks

Everything-LLMs-And-Robotics

The Everything-LLMs-And-Robotics repository is the world's largest GitHub repository focusing on the intersection of Large Language Models (LLMs) and Robotics. It provides educational resources, research papers, project demos, and Twitter threads related to LLMs, Robotics, and their combination. The repository covers topics such as reasoning, planning, manipulation, instructions and navigation, simulation frameworks, perception, and more, showcasing the latest advancements in the field.

awesome-robotics-ai-companies

A curated list of companies in the robotics and artificially intelligent agents industry, including large companies, stable start-ups, non-profits, and government research labs. The list covers companies working on autonomous vehicles, robotics, artificial intelligence, machine learning, computer vision, and more. It aims to showcase industry innovators and important players in the field of robotics and AI.

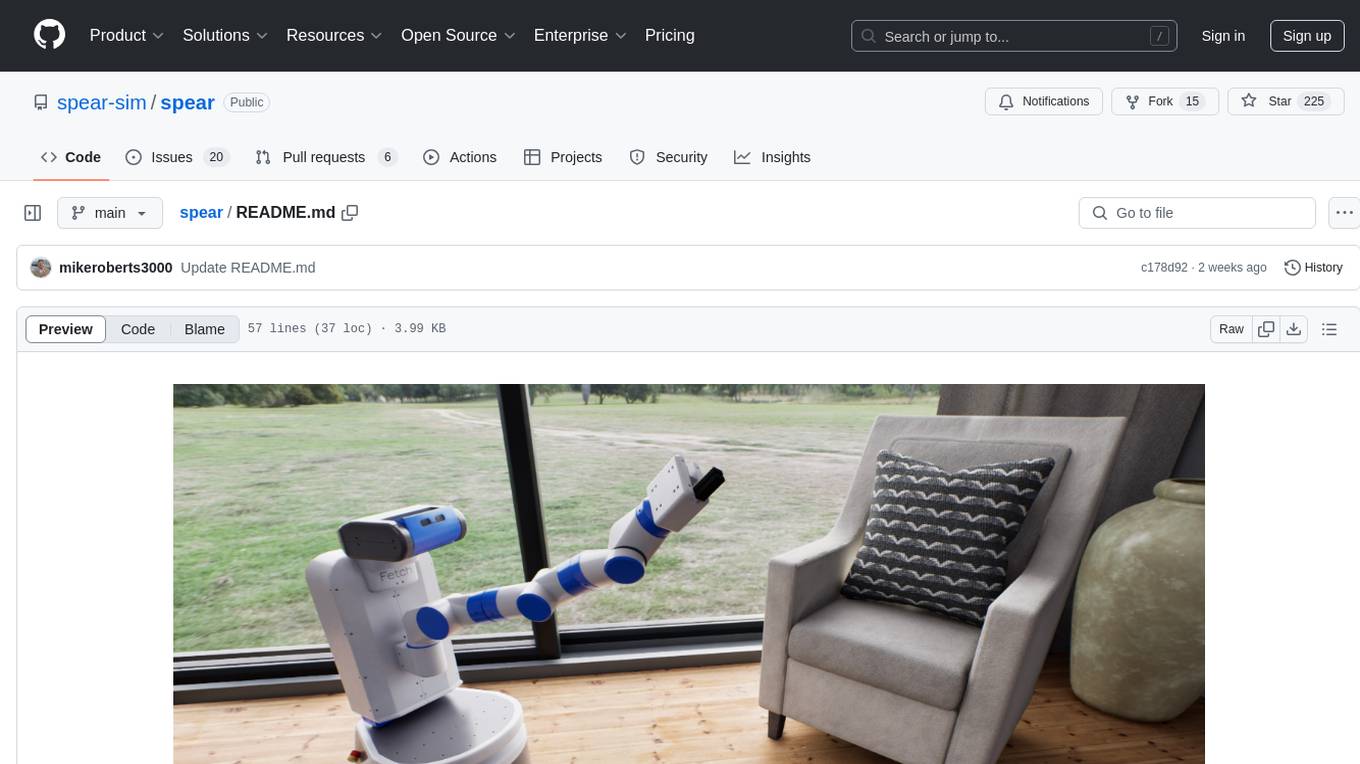

spear

SPEAR is a Simulator for Photorealistic Embodied AI Research that addresses limitations in existing simulators by offering 300 unique virtual indoor environments with detailed geometry, photorealistic materials, and unique floor plans. It provides an OpenAI Gym interface for interaction via Python, released under an MIT License. The simulator was developed with support from the Intelligent Systems Lab at Intel and Kujiale.

anthrax-ai

AnthraxAI is a Vulkan-based game engine that allows users to create and develop 3D games. The engine provides features such as scene selection, camera movement, object manipulation, debugging tools, audio playback, and real-time shader code updates. Users can build and configure the project using CMake and compile shaders using the glslc compiler. The engine supports building on both Linux and Windows platforms, with specific dependencies for each. Visual Studio Code integration is available for building and debugging the project, with instructions provided in the readme for setting up the workspace and required extensions.

lerobotdepot

LeRobotDepot is a repository listing open-source hardware, components, and 3D-printable projects compatible with the LeRobot library. It helps users discover, build, and contribute to affordable robotics solutions powered by AI. The repository includes various robot arms, grippers, cameras, and accessories, along with detailed information on pricing, compatibility, and additional components. Users can find kits for assembling arms, wrist cameras, haptic sensors, and other modules. The repository also features mobile arms, bi-manual arms, humanoid robots, and task kits for specific tasks like push T task and handling a toaster. Additionally, there are resources for teleoperation, cameras, and common accessories like self-fusing silicone rubber for increasing grip friction.
