Awesome-Robotics-3D

A curated list of 3D vision papers related to robotics in the era of large models (i.e., LLMs/VLMs), inspired by awesome-computer-vision. It includes papers, code, and related websites.

Stars: 474

Awesome-Robotics-3D is a curated list of 3D vision papers related to robotics, focusing on large models such as LLMs and VLMs. It covers Policy Learning, Pretraining, VLM and LLM, Representations, and Simulations, Datasets and Benchmarks. The repository is maintained by Zubair Irshad, who welcomes contributions and suggestions for papers to add. It is intended for researchers and practitioners in robotics and computer vision.

README:

This repo contains a curated list of 3D vision papers related to robotics in the era of large models (i.e., LLMs/VLMs), inspired by awesome-computer-vision.

Please feel free to send me pull requests or email to add papers!

If you find this repository useful, please consider citing 📝 and STARing ⭐ this list.

Feel free to share this list with others! List curated and maintained by Zubair Irshad. If you have any questions, please get in touch!

Other relevant survey papers:

- "When LLMs step into the 3D World: A Survey and Meta-Analysis of 3D Tasks via Multi-modal Large Language Models", arXiv, May 2024. [Paper]

- "A Comprehensive Study of 3-D Vision-Based Robot Manipulation", TCYB 2021. [Paper]

- Policy Learning

- Pretraining

- VLM and LLM

- Representations

- Simulations, Datasets and Benchmarks

- Citation

- 3D Diffuser Actor: "Policy Diffusion with 3D Scene Representations", arXiv, Feb 2024. [Paper] [Webpage] [Code]

- 3D Diffusion Policy: "Generalizable Visuomotor Policy Learning via Simple 3D Representations", RSS 2024. [Paper] [Webpage] [Code]

- DNAct: "Diffusion Guided Multi-Task 3D Policy Learning", arXiv, Mar 2024. [Paper] [Webpage]

- ManiCM: "Real-time 3D Diffusion Policy via Consistency Model for Robotic Manipulation", arXiv, Jun 2024. [Paper] [Webpage] [Code]

- HDP: "Hierarchical Diffusion Policy for Kinematics-Aware Multi-Task Robotic Manipulation", CVPR 2024. [Paper] [Webpage] [Code]

- Imagination Policy: "Using Generative Point Cloud Models for Learning Manipulation Policies", arXiv, Jun 2024. [Paper] [Webpage]

- PCWM: "Point Cloud Models Improve Visual Robustness in Robotic Learners", ICRA 2024. [Paper] [Webpage]

- RVT: "Robotic View Transformer for 3D Object Manipulation", CoRL 2023. [Paper] [Webpage] [Code]

- Act3D: "3D Feature Field Transformers for Multi-Task Robotic Manipulation", CoRL 2023. [Paper] [Webpage] [Code]

- VIHE: "Transformer-Based 3D Object Manipulation Using Virtual In-Hand View", arXiv, Mar 2024. [Paper] [Webpage] [Code]

- SGRv2: "Leveraging Locality to Boost Sample Efficiency in Robotic Manipulation", arXiv, Jun 2024. [Paper] [Webpage]

- Sigma-Agent: "Contrastive Imitation Learning for Language-guided Multi-Task Robotic Manipulation", arXiv, June 2024. [Paper]

- RVT-2: "Learning Precise Manipulation from Few Demonstrations", RSS 2024. [Paper] [Webpage] [Code]

- SAM-E: "Leveraging Visual Foundation Model with Sequence Imitation for Embodied Manipulation", ICML 2024. [Paper] [Webpage] [Code]

- RISE: "3D Perception Makes Real-World Robot Imitation Simple and Effective", arXiv, Apr 2024. [Paper] [Webpage] [Code]

- PolarNet: "3D Point Clouds for Language-Guided Robotic Manipulation", CoRL 2023. [Paper] [Webpage] [Code]

- ChainedDiffuser: "Unifying Trajectory Diffusion and Keypose Prediction for Robotic Manipulation", CoRL 2023. [Paper] [Webpage] [Code]

- Pointcloud_RL: "On the Efficacy of 3D Point Cloud Reinforcement Learning", arXiv, June 2023. [Paper] [Code]

- Perceiver-Actor: "A Multi-Task Transformer for Robotic Manipulation", CoRL 2022. [Paper] [Webpage] [Code]

- CLIPort: "What and Where Pathways for Robotic Manipulation", CoRL 2021. [Paper] [Webpage] [Code]

- 3D-MVP: "3D Multiview Pretraining for Robotic Manipulation", arXiv, June 2024. [Paper] [Webpage]

- DexArt: "Benchmarking Generalizable Dexterous Manipulation with Articulated Objects", CVPR 2023. [Paper] [Webpage] [Code]

- RoboUniView: "Visual-Language Model with Unified View Representation for Robotic Manipulation", arXiv, Jun 2023. [Paper] [Website] [Code]

- SUGAR: "Pre-training 3D Visual Representations for Robotics", CVPR 2024. [Paper] [Webpage] [Code]

- DPR: "Visual Robotic Manipulation with Depth-Aware Pretraining", arXiv, Jan 2024. [Paper]

- MV-MWM: "Multi-View Masked World Models for Visual Robotic Manipulation", ICML 2023. [Paper] [Code]

- Point Cloud Matters: "Rethinking the Impact of Different Observation Spaces on Robot Learning", arXiv, Feb 2024. [Paper] [Code]

- RL3D: "Visual Reinforcement Learning with Self-Supervised 3D Representations", IROS 2023. [Paper] [Website] [Code]

- ShapeLLM: "Universal 3D Object Understanding for Embodied Interaction", ECCV 2024. [Paper/PDF] [Code] [Website]

- 3D-VLA: "3D Vision-Language-Action Generative World Model", ICML 2024. [Paper] [Website] [Code]

- RoboPoint: "A Vision-Language Model for Spatial Affordance Prediction for Robotics", CoRL 2024. [Paper] [Website]

- Open6DOR: "Benchmarking Open-instruction 6-DoF Object Rearrangement and A VLM-based Approach", IROS 2024. [Paper] [Website] [Code]

- ReasoningGrasp: "Reasoning Grasping via Multimodal Large Language Model", CoRL 2024. [Paper]

- SpatialVLM: "Endowing Vision-Language Models with Spatial Reasoning Capabilities", CVPR 2024. [Paper] [Website] [Code]

- SpatialRGPT: "Grounded Spatial Reasoning in Vision Language Model", arXiv, June 2024. [Paper] [Website]

- Scene-LLM: "Extending Language Model for 3D Visual Understanding and Reasoning", arXiv, Mar 2024. [Paper]

- ManipLLM: "Embodied Multimodal Large Language Model for Object-Centric Robotic Manipulation", CVPR 2024. [Paper] [Website] [Code]

- Manipulate-Anything: "Automating Real-World Robots using Vision-Language Models", CoRL 2024. [Paper] [Website]

- MOKA: "Open-Vocabulary Robotic Manipulation through Mark-Based Visual Prompting", RSS 2024. [Paper] [Website] [Code]

- Agent3D-Zero: "An Agent for Zero-shot 3D Understanding", arXiv, Mar 2024. [Paper] [Website] [Code]

- MultiPLY: "A Multisensory Object-Centric Embodied Large Language Model in 3D World", CVPR 2024. [Paper] [Website] [Code]

- ThinkGrasp: "A Vision-Language System for Strategic Part Grasping in Clutter", arXiv, Jul 2024. [Paper] [Website]

- VoxPoser: "Composable 3D Value Maps for Robotic Manipulation with Language Models", CoRL 2023. [Paper] [Website] [Code]

- Dream2Real: "Zero-Shot 3D Object Rearrangement with Vision-Language Models", ICRA 2024. [Paper] [Website] [Code]

- LEO: "An Embodied Generalist Agent in 3D World", ICML 2024. [Paper] [Website] [Code]

- SpatialPIN: "Enhancing Spatial Reasoning Capabilities of Vision-Language Models through Prompting and Interacting 3D Priors", arXiv, Mar 2024. [Paper] [Website]

- SpatialBot: "Precise Spatial Understanding with Vision Language Models", arXiv, Jun 2024. [Paper] [Code]

- COME-robot: "Closed-Loop Open-Vocabulary Mobile Manipulation with GPT-4V", arXiv, Apr 2024. [Paper] [Website]

- 3D-LLM: "Injecting the 3D World into Large Language Models", NeurIPS 2023. [Paper] [Website] [Code]

- VLMaps: "Visual Language Maps for Robot Navigation", ICRA 2023. [Paper] [Website] [Code]

- MoMa-LLM: "Language-Grounded Dynamic Scene Graphs for Interactive Object Search with Mobile Manipulation", RA-L 2024. [Paper] [Website] [Code]

- LGrasp6D: "Language-Driven 6-DoF Grasp Detection Using Negative Prompt Guidance", ECCV 2024. [Paper] [Website]

- OpenAD: "Open-Vocabulary Affordance Detection in 3D Point Clouds", IROS 2023. [Paper] [Website] [Code]

- 3DAPNet: "Language-Conditioned Affordance-Pose Detection in 3D Point Clouds", ICRA 2024. [Paper] [Website] [Code]

- OpenKD: "Open-Vocabulary Affordance Detection using Knowledge Distillation and Text-Point Correlation", ICRA 2024. [Paper] [Code]

- PARIS3D: "Reasoning Based 3D Part Segmentation Using Large Multimodal Model", ECCV 2024. [Paper] [Code]

- RoVi-Aug: "Robot and Viewpoint Augmentation for Cross-Embodiment Robot Learning", CoRL 2024. [Paper] [Webpage]

- Vista: "View-Invariant Policy Learning via Zero-Shot Novel View Synthesis", CoRL 2024. [Paper] [Webpage] [Code]

- GraspSplats: "Efficient Manipulation with 3D Feature Splatting", CoRL 2024. [Paper] [Webpage] [Code]

- RAM: "Retrieval-Based Affordance Transfer for Generalizable Zero-Shot Robotic Manipulation", CoRL 2024. [Paper] [Webpage] [Code]

- Language-Embedded Gaussian Splats (LEGS): "Incrementally Building Room-Scale Representations with a Mobile Robot", IROS 2024. [Paper] [Webpage]

- Splat-MOVER: "Multi-Stage, Open-Vocabulary Robotic Manipulation via Editable Gaussian Splatting", arXiv, May 2024. [Paper] [Webpage]

- GNFactor: "Multi-Task Real Robot Learning with Generalizable Neural Feature Fields", CoRL 2023. [Paper] [Webpage] [Code]

- ManiGaussian: "Dynamic Gaussian Splatting for Multi-task Robotic Manipulation", ECCV 2024. [Paper] [Webpage] [Code]

- GaussianGrasper: "3D Language Gaussian Splatting for Open-vocabulary Robotic Grasping", arXiv, Mar 2024. [Paper] [Webpage] [Code]

- ORION: "Vision-based Manipulation from Single Human Video with Open-World Object Graphs", arXiv, May 2024. [Paper] [Webpage]

- ConceptGraphs: "Open-Vocabulary 3D Scene Graphs for Perception and Planning", ICRA 2024. [Paper] [Webpage] [Code]

- SparseDFF: "Sparse-View Feature Distillation for One-Shot Dexterous Manipulation", ICLR 2024. [Paper] [Webpage]

- GROOT: "Learning Generalizable Manipulation Policies with Object-Centric 3D Representations", CoRL 2023. [Paper] [Webpage] [Code]

- Distilled Feature Fields: "Enable Few-Shot Language-Guided Manipulation", CoRL 2023. [Paper] [Webpage] [Code]

- SGR: "A Universal Semantic-Geometric Representation for Robotic Manipulation", CoRL 2023. [Paper] [Webpage] [Code]

- OVMM: "Open-vocabulary Mobile Manipulation in Unseen Dynamic Environments with 3D Semantic Maps", arXiv, Jun 2024. [Paper]

- CLIP-Fields: "Weakly Supervised Semantic Fields for Robotic Memory", RSS 2023. [Paper] [Webpage] [Code]

- NeRF in the Palm of Your Hand: "Corrective Augmentation for Robotics via Novel-View Synthesis", CVPR 2023. [Paper] [Webpage]

- JCR: "Unifying Scene Representation and Hand-Eye Calibration with 3D Foundation Models", arXiv, Apr 2024. [Paper] [Code]

- D3Fields: "Dynamic 3D Descriptor Fields for Zero-Shot Generalizable Robotic Manipulation", arXiv, Sep 2023. [Paper] [Webpage] [Code]

- SayPlan: "Grounding Large Language Models using 3D Scene Graphs for Scalable Robot Task Planning", CoRL 2023. [Paper] [Webpage]

- Dex-NeRF: "Using a Neural Radiance Field to Grasp Transparent Objects", CoRL 2021. [Paper] [Webpage]

- The Colosseum: "A Benchmark for Evaluating Generalization for Robotic Manipulation", RSS 2024. [Paper] [Website] [Code]

- OpenEQA: "Embodied Question Answering in the Era of Foundation Models", CVPR 2024. [Paper] [Website] [Code]

- DROID: "A Large-Scale In-the-Wild Robot Manipulation Dataset", RSS 2024. [Paper] [Website] [Code]

- RH20T: "A Comprehensive Robotic Dataset for Learning Diverse Skills in One-Shot", ICRA 2024. [Paper] [Website] [Code]

- Gen2Sim: "Scaling up Robot Learning in Simulation with Generative Models", ICRA 2024. [Paper] [Website] [Code]

- BEHAVIOR Vision Suite: "Customizable Dataset Generation via Simulation", CVPR 2024. [Paper] [Website] [Code]

- RoboCasa: "Large-Scale Simulation of Everyday Tasks for Generalist Robots", RSS 2024. [Paper] [Website] [Code]

- ARNOLD: "A Benchmark for Language-Grounded Task Learning With Continuous States in Realistic 3D Scenes", ICCV 2023. [Paper] [Webpage] [Code]

- VIMA: "General Robot Manipulation with Multimodal Prompts", ICML 2023. [Paper] [Website] [Code]

- ManiSkill2: "A Unified Benchmark for Generalizable Manipulation Skills", ICLR 2023. [Paper] [Website] [Code]

- Robo360: "A 3D Omnispective Multi-Material Robotic Manipulation Dataset", arXiv, Dec 2023. [Paper]

- AR2-D2: "Training a Robot Without a Robot", CoRL 2023. [Paper] [Website] [Code]

- Habitat 2.0: "Training Home Assistants to Rearrange their Habitat", NeurIPS 2021. [Paper] [Website] [Code]

- VL-Grasp: "A 6-DoF Interactive Grasp Policy for Language-Oriented Objects in Cluttered Indoor Scenes", IROS 2023. [Paper] [Code]

- OCID-Ref: "A 3D Robotic Dataset with Embodied Language for Clutter Scene Grounding", NAACL 2021. [Paper] [Code]

- ManipulaTHOR: "A Framework for Visual Object Manipulation", CVPR 2021. [Paper] [Website] [Code]

- RoboTHOR: "An Open Simulation-to-Real Embodied AI Platform", CVPR 2020. [Paper] [Website] [Code]

- HabiCrowd: "A High Performance Simulator for Crowd-Aware Visual Navigation", IROS 2024. [Paper] [Website] [Code]

If you find this repository useful, please consider citing this list:

@misc{irshad2024roboticd3D,
  title = {Awesome Robotics 3D - A curated list of resources on 3D vision papers relating to robotics},
  author = {Muhammad Zubair Irshad},
  journal = {GitHub repository},
  url = {https://github.com/zubair-irshad/Awesome-Robotics-3D},
  year = {2024},
}

Similar Open Source Tools

Awesome-Robotics-3D

Awesome-Robotics-3D is a curated list of 3D Vision papers related to Robotics domain, focusing on large models like LLMs/VLMs. It includes papers on Policy Learning, Pretraining, VLM and LLM, Representations, and Simulations, Datasets, and Benchmarks. The repository is maintained by Zubair Irshad and welcomes contributions and suggestions for adding papers. It serves as a valuable resource for researchers and practitioners in the field of Robotics and Computer Vision.

Awesome-LLM-Robotics

This repository contains a curated list of **papers using Large Language/Multi-Modal Models for Robotics/RL**. Template from awesome-Implicit-NeRF-Robotics Please feel free to send me pull requests or email to add papers! If you find this repository useful, please consider citing and STARing this list. Feel free to share this list with others! ## Overview * Surveys * Reasoning * Planning * Manipulation * Instructions and Navigation * Simulation Frameworks * Citation

awesome-LLM-game-agent-papers

This repository provides a comprehensive survey of research papers on large language model (LLM)-based game agents. LLMs are powerful AI models that can understand and generate human language, and they have shown great promise for developing intelligent game agents. This survey covers a wide range of topics, including adventure games, crafting and exploration games, simulation games, competition games, cooperation games, communication games, and action games. For each topic, the survey provides an overview of the state-of-the-art research, as well as a discussion of the challenges and opportunities for future work.

awesome-and-novel-works-in-slam

This repository contains a curated list of cutting-edge works in Simultaneous Localization and Mapping (SLAM). It includes research papers, projects, and tools related to various aspects of SLAM, such as 3D reconstruction, semantic mapping, novel algorithms, large-scale mapping, and more. The repository aims to showcase the latest advancements in SLAM technology and provide resources for researchers and practitioners in the field.

Paper-Reading-ConvAI

Paper-Reading-ConvAI is a repository that contains a list of papers, datasets, and resources related to Conversational AI, mainly encompassing dialogue systems and natural language generation. This repository is constantly updating.

llm-misinformation-survey

The 'llm-misinformation-survey' repository is dedicated to the survey on combating misinformation in the age of Large Language Models (LLMs). It explores the opportunities and challenges of utilizing LLMs to combat misinformation, providing insights into the history of combating misinformation, current efforts, and future outlook. The repository serves as a resource hub for the initiative 'LLMs Meet Misinformation' and welcomes contributions of relevant research papers and resources. The goal is to facilitate interdisciplinary efforts in combating LLM-generated misinformation and promoting the responsible use of LLMs in fighting misinformation.

Awesome-World-Models

This repository is a curated list of papers related to World Models for General Video Generation, Embodied AI, and Autonomous Driving. It includes foundation papers, blog posts, technical reports, surveys, benchmarks, and specific world models for different applications. The repository serves as a valuable resource for researchers and practitioners interested in world models and their applications in robotics and AI.

awesome-llm-attributions

This repository focuses on unraveling the sources that large language models tap into for attribution or citation. It delves into the origins of facts, their utilization by the models, the efficacy of attribution methodologies, and challenges tied to ambiguous knowledge reservoirs, biases, and pitfalls of excessive attribution.

Awesome-LLMs-in-Graph-tasks

This repository is a collection of papers on leveraging Large Language Models (LLMs) in Graph Tasks. It provides a comprehensive overview of how LLMs can enhance graph-related tasks by combining them with traditional Graph Neural Networks (GNNs). The integration of LLMs with GNNs allows for capturing both structural and contextual aspects of nodes in graph data, leading to more powerful graph learning. The repository includes summaries of various models that leverage LLMs to assist in graph-related tasks, along with links to papers and code repositories for further exploration.

Efficient_Foundation_Model_Survey

Efficient Foundation Model Survey is a comprehensive analysis of resource-efficient large language models (LLMs) and multimodal foundation models. The survey covers algorithmic and systemic innovations to support the growth of large models in a scalable and environmentally sustainable way. It explores cutting-edge model architectures, training/serving algorithms, and practical system designs. The goal is to provide insights on tackling resource challenges posed by large foundation models and inspire future breakthroughs in the field.

LLM-Agents-Papers

A repository that lists papers related to Large Language Model (LLM) based agents. The repository covers various topics including survey, planning, feedback & reflection, memory mechanism, role playing, game playing, tool usage & human-agent interaction, benchmark & evaluation, environment & platform, agent framework, multi-agent system, and agent fine-tuning. It provides a comprehensive collection of research papers on LLM-based agents, exploring different aspects of AI agent architectures and applications.

prompt-in-context-learning

An Open-Source Engineering Guide for Prompt-in-context-learning from EgoAlpha Lab. 📝 Papers | ⚡️ Playground | 🛠 Prompt Engineering | 🌍 ChatGPT Prompt | ⛳ LLMs Usage Guide > **⭐️ Shining ⭐️:** This is fresh, daily-updated resources for in-context learning and prompt engineering. As Artificial General Intelligence (AGI) is approaching, let’s take action and become a super learner so as to position ourselves at the forefront of this exciting era and strive for personal and professional greatness. The resources include: _🎉Papers🎉_: The latest papers about _In-Context Learning_ , _Prompt Engineering_ , _Agent_ , and _Foundation Models_. _🎉Playground🎉_: Large language models(LLMs)that enable prompt experimentation. _🎉Prompt Engineering🎉_: Prompt techniques for leveraging large language models. _🎉ChatGPT Prompt🎉_: Prompt examples that can be applied in our work and daily lives. _🎉LLMs Usage Guide🎉_: The method for quickly getting started with large language models by using LangChain. In the future, there will likely be two types of people on Earth (perhaps even on Mars, but that's a question for Musk): - Those who enhance their abilities through the use of AIGC; - Those whose jobs are replaced by AI automation. 💎EgoAlpha: Hello! human👤, are you ready?

For similar tasks

Awesome-Robotics-3D

Awesome-Robotics-3D is a curated list of 3D Vision papers related to Robotics domain, focusing on large models like LLMs/VLMs. It includes papers on Policy Learning, Pretraining, VLM and LLM, Representations, and Simulations, Datasets, and Benchmarks. The repository is maintained by Zubair Irshad and welcomes contributions and suggestions for adding papers. It serves as a valuable resource for researchers and practitioners in the field of Robotics and Computer Vision.

For similar jobs

LitServe

LitServe is a high-throughput serving engine designed for deploying AI models at scale. It generates an API endpoint for models, handles batching, streaming, and autoscaling across CPU/GPUs. LitServe is built for enterprise scale with a focus on minimal, hackable code-base without bloat. It supports various model types like LLMs, vision, time-series, and works with frameworks like PyTorch, JAX, Tensorflow, and more. The tool allows users to focus on model performance rather than serving boilerplate, providing full control and flexibility.

Lidar_AI_Solution

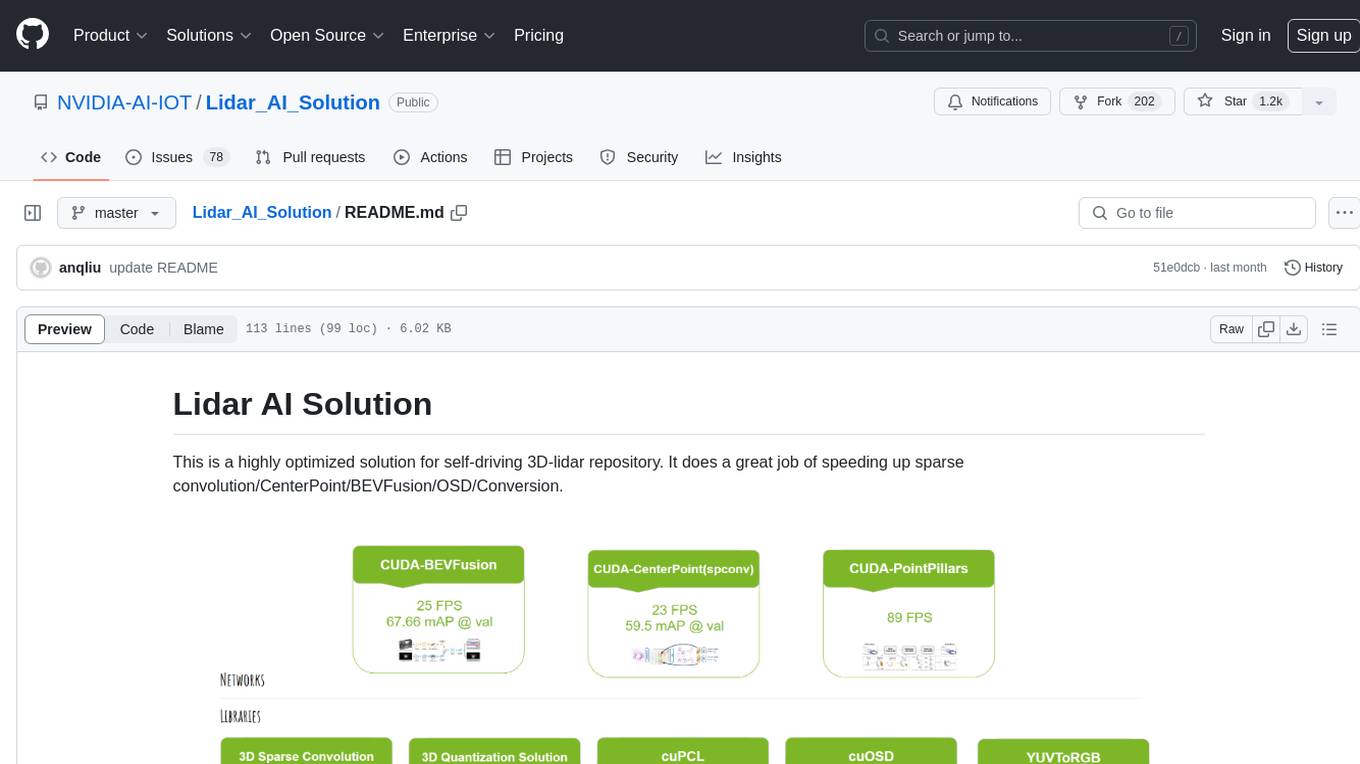

Lidar AI Solution is a highly optimized repository for self-driving 3D lidar, providing solutions for sparse convolution, BEVFusion, CenterPoint, OSD, and Conversion. It includes CUDA and TensorRT implementations for various tasks such as 3D sparse convolution, BEVFusion, CenterPoint, PointPillars, V2XFusion, cuOSD, cuPCL, and YUV to RGB conversion. The repository offers easy-to-use solutions, high accuracy, low memory usage, and quantization options for different tasks related to self-driving technology.

generative-ai-sagemaker-cdk-demo

This repository showcases how to deploy generative AI models from Amazon SageMaker JumpStart using the AWS CDK. Generative AI is a type of AI that can create new content and ideas, such as conversations, stories, images, videos, and music. The repository provides a detailed guide on deploying image and text generative AI models, utilizing pre-trained models from SageMaker JumpStart. The web application is built on Streamlit and hosted on Amazon ECS with Fargate. It interacts with the SageMaker model endpoints through Lambda functions and Amazon API Gateway. The repository also includes instructions on setting up the AWS CDK application, deploying the stacks, using the models, and viewing the deployed resources on the AWS Management Console.

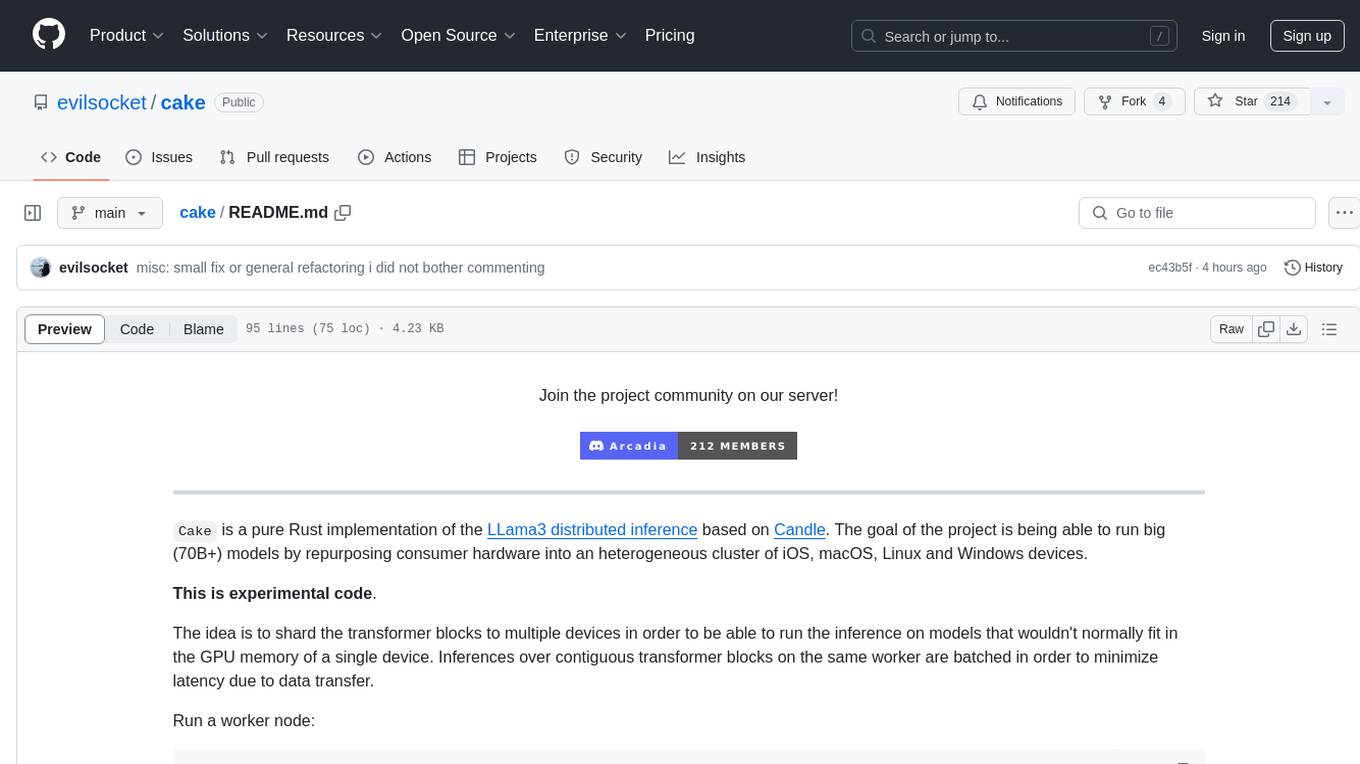

cake

cake is a pure Rust implementation of the llama3 LLM distributed inference based on Candle. The project aims to enable running large models on consumer hardware clusters of iOS, macOS, Linux, and Windows devices by sharding transformer blocks. It allows running inferences on models that wouldn't fit in a single device's GPU memory by batching contiguous transformer blocks on the same worker to minimize latency. The tool provides a way to optimize memory and disk space by splitting the model into smaller bundles for workers, ensuring they only have the necessary data. cake supports various OS, architectures, and accelerations, with different statuses for each configuration.

Awesome-Robotics-3D

Awesome-Robotics-3D is a curated list of 3D Vision papers related to Robotics domain, focusing on large models like LLMs/VLMs. It includes papers on Policy Learning, Pretraining, VLM and LLM, Representations, and Simulations, Datasets, and Benchmarks. The repository is maintained by Zubair Irshad and welcomes contributions and suggestions for adding papers. It serves as a valuable resource for researchers and practitioners in the field of Robotics and Computer Vision.

tensorzero

TensorZero is an open-source platform that helps LLM applications graduate from API wrappers into defensible AI products. It enables a data & learning flywheel for LLMs by unifying inference, observability, optimization, and experimentation. The platform includes a high-performance model gateway, structured schema-based inference, observability, experimentation, and data warehouse for analytics. TensorZero Recipes optimize prompts and models, and the platform supports experimentation features and GitOps orchestration for deployment.

vector-inference

This repository provides an easy-to-use solution for running inference servers on Slurm-managed computing clusters using vLLM. All scripts in this repository run natively on the Vector Institute cluster environment. Users can deploy models as Slurm jobs, check server status and performance metrics, and shut down models. The repository also supports launching custom models with specific configurations. Additionally, users can send inference requests and set up an SSH tunnel to run inference from a local device.

rhesis

Rhesis is a comprehensive test management platform designed for Gen AI teams, offering tools to create, manage, and execute test cases for generative AI applications. It ensures the robustness, reliability, and compliance of AI systems through features like test set management, automated test generation, edge case discovery, compliance validation, integration capabilities, and performance tracking. The platform is open source, emphasizing community-driven development, transparency, extensible architecture, and democratizing AI safety. It includes components such as backend services, frontend applications, SDK for developers, worker services, chatbot applications, and Polyphemus for uncensored LLM service. Rhesis enables users to address challenges unique to testing generative AI applications, such as non-deterministic outputs, hallucinations, edge cases, ethical concerns, and compliance requirements.