awesome-llm-attributions

A Survey of Attributions for Large Language Models

Stars: 152

This repository focuses on unraveling the sources that large language models tap into for attribution or citation. It delves into the origins of facts, their utilization by the models, the efficacy of attribution methodologies, and challenges tied to ambiguous knowledge reservoirs, biases, and pitfalls of excessive attribution.

README:

A Survey of Large Language Models Attribution [ArXiv preprint]

Open-domain dialogue systems, driven by large language models, have changed the way we use conversational AI. However, these systems often produce content that may not be reliable. In traditional open-domain settings, the focus is mostly on the answer’s relevance or accuracy rather than on whether the answer is attributable to the retrieved documents. A QA model with high accuracy does not necessarily achieve high attribution.

Attribution refers to the capacity of a model, such as an LLM, to generate and provide evidence, often in the form of references or citations, that substantiates the claims or statements it produces. This evidence is derived from identifiable sources, so that the claims can be logically inferred from a foundational corpus and are comprehensible and verifiable by a general audience. The primary purposes of attribution are (1) enabling users to validate the claims made by the model; (2) promoting the generation of text that closely aligns with the cited sources, which improves accuracy and reduces misinformation or hallucination; and (3) establishing a structured framework for evaluating the completeness and relevance of the supporting evidence with respect to the presented claims.
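The point that accuracy and attribution can diverge is easy to see in a small example. The Python sketch below is illustrative only: `Answer`, `supports`, `accuracy`, `attribution`, and the data are hypothetical, and `supports` is a toy word-overlap check standing in for the NLI models or human judgements that attribution evaluations typically rely on.

```python
from dataclasses import dataclass


@dataclass
class Answer:
    text: str          # the model's answer
    gold: str          # reference answer, used only for accuracy
    cited: list[str]   # passages the model cites as evidence


def supports(passage: str, claim: str) -> bool:
    """Toy stand-in for an entailment judge (assumption): every word of the
    claim must appear in the passage. Real evaluations use NLI or humans."""
    passage_words = set(passage.lower().split())
    return all(word in passage_words for word in claim.lower().split())


def accuracy(answers: list[Answer]) -> float:
    # Fraction of answers that match the reference answer.
    return sum(a.text.lower() == a.gold.lower() for a in answers) / len(answers)


def attribution(answers: list[Answer]) -> float:
    # Fraction of answers supported by at least one cited passage.
    return sum(any(supports(p, a.text) for p in a.cited) for a in answers) / len(answers)


answers = [
    Answer("paris", "paris", ["paris is the capital of france"]),
    Answer("1969", "1969", ["apollo 11 launched in july"]),  # correct but unsupported
]
print(accuracy(answers), attribution(answers))  # 1.0 0.5
```

Both answers are correct, but only one is backed by its citation, so the two scores differ.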

In this repository, we focus on unraveling the sources that these systems tap into for attribution or citation. We delve into the origins of these facts, how the models utilize them, and the efficacy of these attribution methods, and we grapple with challenges tied to ambiguous knowledge reservoirs, inherent biases, and the pitfalls of excessive attribution.

✨ Work in progress. We welcome contributions from the NLP community via PRs and issues.

- [2021/07] Rethinking Search: Making Domain Experts out of Dilettantes. Donald Metzler et al. arXiv. [paper]

- [2021/12] Measuring Attribution in Natural Language Generation Models. H Rashkin et al. CL. [paper]

- [2022/11] The attribution problem with generative AI. Anna Rogers. [blog]

- [2023/07] Citation: A Key to Building Responsible and Accountable Large Language Models. Jie Huang et al. arXiv. [paper]

- [2023/10] Establishing Trustworthiness: Rethinking Tasks and Model Evaluation. Robert Litschko et al. arXiv. [paper]

- [2023/11] Unifying Corroborative and Contributive Attributions in Large Language Models. Theodora Worledge et al. NeurIPS ATTRIB Workshop 2023. [paper]

- [2024/03] Reliable, Adaptable, and Attributable Language Models with Retrieval. Akari Asai et al. arXiv. [paper]

- [2018/11] The Fact Extraction and VERification (FEVER) Shared Task. James Thorne et al. EMNLP'18. [paper]

- [2021/08] A Survey on Automated Fact-Checking. Zhijiang Guo et al. TACL'22. [paper]

- [2021/10] Truthful AI: Developing and governing AI that does not lie. Owain Evans et al. arXiv. [paper]

- [2021/05] Evaluating Attribution in Dialogue Systems: The BEGIN Benchmark. Nouha Dziri et al. TACL'22. [paper] [code]

- [2023/10] Explainable Claim Verification via Knowledge-Grounded Reasoning with Large Language Models. Haoran Wang et al. Findings of EMNLP'23. [paper]

- [2022/07] Improving Wikipedia Verifiability with AI. Fabio Petroni et al. arXiv. [paper] [code]

- [2022/12] Foveate, Attribute, and Rationalize: Towards Physically Safe and Trustworthy AI. Alex Mei et al. Findings of ACL'23. [paper]

- [2023/07] Inseq: An Interpretability Toolkit for Sequence Generation Models. Gabriele Sarti et al. ACL Demo'23. [paper] [library]

- [2023/10] Quantifying the Plausibility of Context Reliance in Neural Machine Translation. Gabriele Sarti et al. arXiv. [paper]

- [2017/06] A unified view of gradient-based attribution methods for Deep Neural Networks. Marco Ancona et al. arXiv. [paper]

- [2021/03] Towards multi-modal causability with Graph Neural Networks enabling information fusion for explainable AI. Andreas Holzinger et al. arXiv. [paper]

- [2023/03] Retrieving Multimodal Information for Augmented Generation: A Survey. Ruochen Zhao et al. arXiv. [paper]

- [2023/07] Improving Explainability of Disentangled Representations using Multipath-Attribution Mappings. Lukas Klein et al. arXiv. [paper]

- [2023/07] Visual Explanations of Image-Text Representations via Multi-Modal Information Bottleneck Attribution. Ying Wang et al. arXiv. [paper]

- [2023/07] MAEA: Multimodal Attribution for Embodied AI. Vidhi Jain et al. arXiv. [paper]

- [2023/10] Rephrase, Augment, Reason: Visual Grounding of Questions for Vision-Language Models. Archiki Prasad et al. arXiv. [paper] [code]

- [2019/11] Transforming Wikipedia into Augmented Data for Query-Focused Summarization. Haichao Zhu et al. TASLP. [paper]

- [2023/04] WebBrain: Learning to Generate Factually Correct Articles for Queries by Grounding on Large Web Corpus. Hongjin Qian et al. arXiv. [paper]

- [2022/02] Transformer Memory as a Differentiable Search Index. Yi Tay et al. NeurIPS'22. [paper]

- [2023/08] Optimizing Factual Accuracy in Text Generation through Dynamic Knowledge Selection. Hongjin Qian et al. arXiv. [paper]

- [2023/02] The ROOTS Search Tool: Data Transparency for LLMs. Aleksandra Piktus et al. arXiv. [paper]

- [2022/05] ORCA: Interpreting Prompted Language Models via Locating Supporting Data Evidence in the Ocean of Pretraining Data. Xiaochuang Han et al. arXiv. [paper]

- [2022/05] Understanding In-Context Learning via Supportive Pretraining Data. Xiaochuang Han et al. arXiv. [paper]

- [2022/07] [link the fine-tuned LLM to its pre-trained base model] Matching Pairs: Attributing Fine-Tuned Models to their Pre-Trained Large Language Models. Myles Foley et al. ACL 2023. [paper]

- [2021/04] Retrieval augmentation reduces hallucination in conversation. Kurt Shuster et al. arXiv. [paper]

- [2020/07] Leveraging Passage Retrieval with Generative Models for Open Domain Question Answering. Gautier Izacard et al. arXiv. [paper]

- [2021/12] Improving language models by retrieving from trillions of tokens. Sebastian Borgeaud et al. arXiv. [paper]

- [2022/12] Rethinking with Retrieval: Faithful Large Language Model Inference. Hangfeng He et al. arXiv. [paper]

- [2022/12] CiteBench: A benchmark for Scientific Citation Text Generation. Martin Funkquist et al. arXiv. [paper]

- [2023/04] WebBrain: Learning to Generate Factually Correct Articles for Queries by Grounding on Large Web Corpus. Hongjin Qian et al. arXiv. [paper] [code]

- [2023/05] Enabling Large Language Models to Generate Text with Citations. Tianyu Gao et al. arXiv. [paper] [code]

- [2023/07] HAGRID: A Human-LLM Collaborative Dataset for Generative Information-Seeking with Attribution. Ehsan Kamalloo et al. arXiv. [paper] [code]

- [2023/09] ExpertQA: Expert-Curated Questions and Attributed Answers. Chaitanya Malaviya et al. arXiv. [paper] [code]

- [2023/11] SEMQA: Semi-Extractive Multi-Source Question Answering. Tal Schuster et al. arXiv. [paper] [code]

- [2024/01] Benchmarking Large Language Models in Complex Question Answering Attribution using Knowledge Graphs. Nan Hu et al. arXiv. [paper]

- [2024/05] WebCiteS: Attributed Query-Focused Summarization on Chinese Web Search Results with Citations. Haolin Deng et al. ACL'24. [paper]

- [2023/05] "According to ..." Prompting Language Models Improves Quoting from Pre-Training Data. Orion Weller et al. arXiv. [paper]

- [2023/07] Credible Without Credit: Domain Experts Assess Generative Language Models. Denis Peskoff et al. ACL 2023. [paper]

- [2023/09] ChatGPT Hallucinates when Attributing Answers. Guido Zuccon et al. arXiv. [paper]

- [2023/09] Towards Reliable and Fluent Large Language Models: Incorporating Feedback Learning Loops in QA Systems. Dongyub Lee et al. arXiv. [paper]

- [2023/09] Retrieving Evidence from EHRs with LLMs: Possibilities and Challenges. Hiba Ahsan et al. arXiv. [paper]

- [2023/10] Learning to Plan and Generate Text with Citations. Anonymous et al. OpenReview, ICLR 2024. [paper]

- [2023/10] 1-PAGER: One Pass Answer Generation and Evidence Retrieval. Palak Jain et al. arXiv. [paper]

- [2024/02] How well do LLMs cite relevant medical references? An evaluation framework and analyses. Kevin Wu et al. arXiv. [paper]

- [2024/04] Source-Aware Training Enables Knowledge Attribution in Language Models. Muhammad Khalifa et al. arXiv. [paper]

- [2024/04] CoTAR: Chain-of-Thought Attribution Reasoning with Multi-level Granularity. Moshe Berchansky et al. arXiv. [paper]

- [2024/07] Improving Retrieval Augmented Language Model with Self-Reasoning. Xia et al. arXiv. [paper]

- [2023/04] Search-in-the-Chain: Towards the Accurate, Credible and Traceable Content Generation for Complex Knowledge-intensive Tasks. Shicheng Xu et al. arXiv. [paper]

- [2023/05] Mitigating Language Model Hallucination with Interactive Question-Knowledge Alignment. Shuo Zhang et al. arXiv. [paper]

- [2023/03] SmartBook: AI-Assisted Situation Report Generation. Revanth Gangi Reddy et al. arXiv. [paper]

- [2023/10] Self-RAG: Learning to Retrieve, Generate, and Critique through Self-Reflection. Akari Asai et al. arXiv. [paper] [homepage]

- [2023/11] LLatrieval: LLM-Verified Retrieval for Verifiable Generation. Xiaonan Li et al. arXiv. [paper] [code]

- [2023/11] Effective Large Language Model Adaptation for Improved Grounding. Xi Ye et al. arXiv. [paper]

- [2024/01] Towards Verifiable Text Generation with Evolving Memory and Self-Reflection. Hao Sun et al. arXiv. [paper]

- [2024/02] Training Language Models to Generate Text with Citations via Fine-grained Rewards. Chengyu Huang et al. arXiv. [paper]

- [2024/03] Improving Attributed Text Generation of Large Language Models via Preference Learning. Dongfang Li et al. arXiv. [paper]

- [2022/10] RARR: Researching and Revising What Language Models Say, Using Language Models. Luyu Gao et al. arXiv. [paper]

- [2023/04] The Internal State of an LLM Knows When It's Lying. Amos Azaria et al. arXiv. [paper]

- [2023/05] Do Language Models Know When They're Hallucinating References? Ayush Agrawal et al. arXiv. [paper]

- [2023/05] Complex Claim Verification with Evidence Retrieved in the Wild. Jifan Chen et al. arXiv. [paper] [code]

- [2023/06] Retrieving Supporting Evidence for LLMs Generated Answers. Siqing Huo et al. arXiv. [paper]

- [2024/06] CaLM: Contrasting Large and Small Language Models to Verify Grounded Generation. I-Hung Hsu et al. arXiv. [paper]

- [2022/01] LaMDA: Language Models for Dialog Applications. Romal Thoppilan et al. arXiv. [paper]

- [2021/12] WebGPT: Browser-assisted question-answering with human feedback. Reiichiro Nakano, Jacob Hilton, Suchir Balaji et al. arXiv. [paper]

- [2022/03] GopherCite - Teaching language models to support answers with verified quotes. Jacob Menick et al. arXiv. [paper]

- [2022/09] Improving alignment of dialogue agents via targeted human judgements. Amelia Glaese et al. arXiv. [paper]

- [2023/05] WebCPM: Interactive Web Search for Chinese Long-form Question Answering. Yujia Qin et al. arXiv. [paper]

- [2022/07] Improving Wikipedia Verifiability with AI. Fabio Petroni et al. arXiv. [paper]

- [2022/12] Attributed Question Answering: Evaluation and Modeling for Attributed Large Language Models. B Bohnet et al. arXiv. [paper] [code]

- [2023/04] Evaluating Verifiability in Generative Search Engines. Nelson F. Liu et al. arXiv. [paper] [annotated data]

- [2023/05] WiCE: Real-World Entailment for Claims in Wikipedia. Ryo Kamoi et al. arXiv. [paper]

- [2023/05] Evaluating and Modeling Attribution for Cross-Lingual Question Answering. Benjamin Muller et al. arXiv. [paper]

- [2023/05] FActScore: Fine-grained Atomic Evaluation of Factual Precision in Long Form Text Generation. Sewon Min et al. arXiv. [paper] [code]

- [2023/05] Automatic Evaluation of Attribution by Large Language Models. X Yue et al. arXiv. [paper] [code]

- [2023/07] FacTool: Factuality Detection in Generative AI -- A Tool Augmented Framework for Multi-Task and Multi-Domain Scenarios. I-Chun Chern et al. arXiv. [paper] [code]

- [2023/09] Quantifying and Attributing the Hallucination of Large Language Models via Association Analysis. Li Du et al. arXiv. [paper]

- [2023/10] Towards Verifiable Generation: A Benchmark for Knowledge-aware Language Model Attribution. Xinze Li et al. arXiv. [paper]

- [2023/10] Understanding Retrieval Augmentation for Long-Form Question Answering. Hung-Ting Chen et al. arXiv. [paper]

- [2023/11] Enhancing Medical Text Evaluation with GPT-4. Yiqing Xie et al. arXiv. [paper]

a. Hallucination of attribution, i.e., is the attribution faithful to the content it is meant to support?

b. Inability to attribute the model's own parametric knowledge.

c. Validity of the knowledge source (source trustworthiness): faithfulness ≠ factuality.

d. Bias in attribution methods.

e. Over-attribution & under-attribution

f. Knowledge conflict
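Over- and under-attribution (item e) can be quantified in several ways. One sketch, loosely modeled on the citation recall and precision metrics of Gao et al. (Enabling Large Language Models to Generate Text with Citations), treats a statement whose citations do not jointly support it as under-attributed, and a citation that neither supports the statement on its own nor is needed by the other citations as over-attributed. The `entails` function is a hypothetical word-overlap stand-in for an NLI model, and all names here are illustrative.

```python
def entails(premise: str, claim: str) -> bool:
    """Toy entailment judge (assumption): every word of the claim appears
    in the premise. A real evaluation would use an NLI model instead."""
    premise_words = set(premise.lower().split())
    return all(word in premise_words for word in claim.lower().split())


def citation_recall(claim: str, cited: list[str]) -> int:
    """1 if the claim has citations and they jointly support it, else 0.
    A score of 0 signals under-attribution."""
    return int(bool(cited) and entails(" ".join(cited), claim))


def citation_precision(claim: str, cited: list[str]) -> list[int]:
    """Per-citation scores. A citation scores 0 (over-attribution) when it
    neither supports the claim alone nor is needed by the other citations.
    If the citations jointly fail to support the claim, all of them score 0."""
    if not citation_recall(claim, cited):
        return [0] * len(cited)
    scores = []
    for i, passage in enumerate(cited):
        others = cited[:i] + cited[i + 1:]
        supports_alone = entails(passage, claim)
        needed_by_others = not citation_recall(claim, others)
        scores.append(int(supports_alone or needed_by_others))
    return scores


claim = "marie curie won two nobel prizes"
cited = [
    "marie curie won nobel prizes",
    "she won two",
    "the eiffel tower is in paris",  # irrelevant citation
]
print(citation_recall(claim, cited))     # 1
print(citation_precision(claim, cited))  # [1, 1, 0]
print(citation_recall("curie was born in warsaw", []))  # 0: no citation at all
```

The first two passages are each necessary, so both keep their credit; the third adds nothing and is flagged as an over-attributed citation.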

@misc{li2023llmattribution,
  title={A Survey of Large Language Models Attribution},
  author={Dongfang Li and Zetian Sun and Xinshuo Hu and Zhenyu Liu and Ziyang Chen and Baotian Hu and Aiguo Wu and Min Zhang},
  year={2023},
  eprint={2311.03731},
  archivePrefix={arXiv},
  primaryClass={cs.CL},
  howpublished={\url{https://github.com/HITsz-TMG/awesome-llm-attributions}},
}

For surveys on hallucination, please refer to:

- Siren's Song in the AI Ocean: A Survey on Hallucination in Large Language Models

- Cognitive Mirage: A Review of Hallucinations in Large Language Models

- A Survey of Hallucination in Large Foundation Models

- Dongfang Li

- Zetian Sun

- Xinshuo Hu

- Zhenyu Liu


Alternative AI tools for awesome-llm-attributions

Similar Open Source Tools


llm-misinformation-survey

The 'llm-misinformation-survey' repository is dedicated to the survey on combating misinformation in the age of Large Language Models (LLMs). It explores the opportunities and challenges of utilizing LLMs to combat misinformation, providing insights into the history of combating misinformation, current efforts, and future outlook. The repository serves as a resource hub for the initiative 'LLMs Meet Misinformation' and welcomes contributions of relevant research papers and resources. The goal is to facilitate interdisciplinary efforts in combating LLM-generated misinformation and promoting the responsible use of LLMs in fighting misinformation.

LLM4IR-Survey

LLM4IR-Survey is a collection of papers related to large language models for information retrieval, organized according to the survey paper 'Large Language Models for Information Retrieval: A Survey'. It covers various aspects such as query rewriting, retrievers, rerankers, readers, search agents, and more, providing insights into the integration of large language models with information retrieval systems.

Knowledge-Conflicts-Survey

Knowledge Conflicts for LLMs: A Survey is a repository containing a survey paper that investigates three types of knowledge conflicts: context-memory conflict, inter-context conflict, and intra-memory conflict within Large Language Models (LLMs). The survey reviews the causes, behaviors, and possible solutions to these conflicts, providing a comprehensive analysis of the literature in this area. The repository includes detailed information on the types of conflicts, their causes, behavior analysis, and mitigating solutions, offering insights into how conflicting knowledge affects LLMs and how to address these conflicts.

awesome-LLM-game-agent-papers

This repository provides a comprehensive survey of research papers on large language model (LLM)-based game agents. LLMs are powerful AI models that can understand and generate human language, and they have shown great promise for developing intelligent game agents. This survey covers a wide range of topics, including adventure games, crafting and exploration games, simulation games, competition games, cooperation games, communication games, and action games. For each topic, the survey provides an overview of the state-of-the-art research, as well as a discussion of the challenges and opportunities for future work.

Awesome-LLM-Robotics

This repository contains a curated list of **papers using Large Language/Multi-Modal Models for Robotics/RL**. Template from awesome-Implicit-NeRF-Robotics. Please feel free to send me pull requests or email to add papers! If you find this repository useful, please consider citing and STARing this list. Feel free to share this list with others! Overview: Surveys, Reasoning, Planning, Manipulation, Instructions and Navigation, Simulation Frameworks, Citation.

awesome-deeplogic

Awesome deep logic is a curated list of papers and resources focusing on integrating symbolic logic into deep neural networks. It includes surveys, tutorials, and research papers that explore the intersection of logic and deep learning. The repository aims to provide valuable insights and knowledge on how logic can be used to enhance reasoning, knowledge regularization, weak supervision, and explainability in neural networks.

awesome_LLM-harmful-fine-tuning-papers

This repository is a comprehensive survey of harmful fine-tuning attacks and defenses for large language models (LLMs). It provides a curated list of must-read papers on the topic, covering various aspects such as alignment stage defenses, fine-tuning stage defenses, post-fine-tuning stage defenses, mechanical studies, benchmarks, and attacks/defenses for federated fine-tuning. The repository aims to keep researchers updated on the latest developments in the field and offers insights into the vulnerabilities and safeguards related to fine-tuning LLMs.

Awesome-Robotics-3D

Awesome-Robotics-3D is a curated list of 3D Vision papers related to Robotics domain, focusing on large models like LLMs/VLMs. It includes papers on Policy Learning, Pretraining, VLM and LLM, Representations, and Simulations, Datasets, and Benchmarks. The repository is maintained by Zubair Irshad and welcomes contributions and suggestions for adding papers. It serves as a valuable resource for researchers and practitioners in the field of Robotics and Computer Vision.

Awesome-TimeSeries-SpatioTemporal-LM-LLM

Awesome-TimeSeries-SpatioTemporal-LM-LLM is a curated list of Large (Language) Models and Foundation Models for Temporal Data, including Time Series, Spatio-temporal, and Event Data. The repository aims to summarize recent advances in Large Models and Foundation Models for Time Series and Spatio-Temporal Data with resources such as papers, code, and data. It covers various applications like General Time Series Analysis, Transportation, Finance, Healthcare, Event Analysis, Climate, Video Data, and more. The repository also includes related resources, surveys, and papers on Large Language Models, Foundation Models, and their applications in AIOps.

ABigSurveyOfLLMs

ABigSurveyOfLLMs is a repository that compiles surveys on Large Language Models (LLMs) to provide a comprehensive overview of the field. It includes surveys on various aspects of LLMs such as transformers, alignment, prompt learning, data management, evaluation, societal issues, safety, misinformation, attributes of LLMs, efficient LLMs, learning methods for LLMs, multimodal LLMs, knowledge-based LLMs, extension of LLMs, LLMs applications, and more. The repository aims to help individuals quickly understand the advancements and challenges in the field of LLMs through a collection of recent surveys and research papers.

Call-for-Reviewers

The `Call-for-Reviewers` repository aims to collect the latest 'call for reviewers' links from various top CS/ML/AI conferences and journals. It provides an opportunity for individuals in the computer science, machine learning, and artificial intelligence fields to gain review experience for applying for NIW/H1B/EB1 or enhancing their CV. The repository helps users stay updated with the latest research trends and engage with the academic community.

Everything-LLMs-And-Robotics

The Everything-LLMs-And-Robotics repository is the world's largest GitHub repository focusing on the intersection of Large Language Models (LLMs) and Robotics. It provides educational resources, research papers, project demos, and Twitter threads related to LLMs, Robotics, and their combination. The repository covers topics such as reasoning, planning, manipulation, instructions and navigation, simulation frameworks, perception, and more, showcasing the latest advancements in the field.

For similar tasks

composio

Composio is a production-ready toolset for AI agents that enables users to integrate AI agents with various agentic tools effortlessly. It provides support for over 100 tools across different categories, including popular software like GitHub, Notion, Linear, Gmail, Slack, and more. Composio ensures managed authorization with support for six different authentication protocols, offering better agentic accuracy and ease of use. Users can easily extend Composio with additional tools, frameworks, and authorization protocols. The toolset is designed to be embeddable and pluggable, allowing for seamless integration and consistent user experience.

AirLine

AirLine is a learnable edge-based line detection algorithm designed for various robotic tasks such as scene recognition, 3D reconstruction, and SLAM. It offers a novel approach to extracting line segments directly from edges, enhancing generalization ability for unseen environments. The algorithm balances efficiency and accuracy through a region-grow algorithm and local edge voting scheme for line parameterization. AirLine demonstrates state-of-the-art precision with significant runtime acceleration compared to other learning-based methods, making it ideal for low-power robots.


OpenFactVerification

Loki is an open-source tool designed to automate the process of verifying the factuality of information. It provides a comprehensive pipeline for dissecting long texts into individual claims, assessing their worthiness for verification, generating queries for evidence search, crawling for evidence, and ultimately verifying the claims. This tool is especially useful for journalists, researchers, and anyone interested in the factuality of information.

MiniCheck

MiniCheck is an efficient fact-checking tool designed to verify claims against grounding documents using large language models. It provides a sentence-level fact-checking model that can be used to evaluate the consistency of claims with the provided documents. MiniCheck offers different models, including Bespoke-MiniCheck-7B, which is state-of-the-art and commercially usable. The tool enables users to fact-check multi-sentence claims by breaking them down into individual sentences for optimal performance. It also supports automatic prefix caching for faster inference when repeatedly fact-checking the same document with different claims.

For similar jobs

prometheus-eval

Prometheus-Eval is a repository dedicated to evaluating large language models (LLMs) in generation tasks. It provides state-of-the-art language models like Prometheus 2 (7B & 8x7B) for assessing in pairwise ranking formats and achieving high correlation scores with benchmarks. The repository includes tools for training, evaluating, and using these models, along with scripts for fine-tuning on custom datasets. Prometheus aims to address issues like fairness, controllability, and affordability in evaluations by simulating human judgments and proprietary LM-based assessments.

cladder

CLadder is a repository containing the CLadder dataset for evaluating causal reasoning in language models. The dataset consists of yes/no questions in natural language that require statistical and causal inference to answer. It includes fields such as question_id, given_info, question, answer, reasoning, and metadata like query_type and rung. The dataset also provides prompts for evaluating language models and example questions with associated reasoning steps. Additionally, it offers dataset statistics, data variants, and code setup instructions for using the repository.

awesome-llm-unlearning

This repository tracks the latest research on machine unlearning in large language models (LLMs). It offers a comprehensive list of papers, datasets, and resources relevant to the topic.

COLD-Attack

COLD-Attack is a framework designed for controllable jailbreaks on large language models (LLMs). It formulates the controllable attack generation problem and utilizes the Energy-based Constrained Decoding with Langevin Dynamics (COLD) algorithm to automate the search of adversarial LLM attacks with control over fluency, stealthiness, sentiment, and left-right-coherence. The framework includes steps for energy function formulation, Langevin dynamics sampling, and decoding process to generate discrete text attacks. It offers diverse jailbreak scenarios such as fluent suffix attacks, paraphrase attacks, and attacks with left-right-coherence.

Awesome-LLM-in-Social-Science

Awesome-LLM-in-Social-Science is a repository that compiles papers evaluating Large Language Models (LLMs) from a social science perspective. It includes papers on evaluating, aligning, and simulating LLMs, as well as enhancing tools in social science research. The repository categorizes papers based on their focus on attitudes, opinions, values, personality, morality, and more. It aims to contribute to discussions on the potential and challenges of using LLMs in social science research.


context-cite

ContextCite is a tool for attributing statements generated by LLMs back to specific parts of the context. It allows users to analyze and understand the sources of information used by language models in generating responses. By providing attributions, users can gain insights into how the model makes decisions and where the information comes from.
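The idea of attributing a response to parts of its context can be illustrated with leave-one-out ablation: remove one source at a time and measure how much the response's score drops. This is a simpler technique and not ContextCite's own method. `toy_score` is a hypothetical stand-in for the language model's likelihood of the response given the context, and every name here is illustrative.

```python
def toy_score(context: list[str], response: str) -> float:
    """Hypothetical stand-in for the model's likelihood of `response` given
    `context`: the fraction of response words that appear in the context."""
    context_words = set(" ".join(context).lower().split())
    response_words = response.lower().split()
    return sum(word in context_words for word in response_words) / len(response_words)


def leave_one_out(context: list[str], response: str) -> list[float]:
    """Score each source by how much the response score drops without it."""
    full = toy_score(context, response)
    return [
        full - toy_score(context[:i] + context[i + 1:], response)
        for i in range(len(context))
    ]


context = [
    "the moon orbits earth",
    "cats like fish",
    "earth orbits the sun",
]
response = "the moon orbits earth"
print(leave_one_out(context, response))  # [0.25, 0.0, 0.0]
```

Only the first source is credited. The third source overlaps with the response but receives zero credit because the first already covers those words, which illustrates a known limitation of leave-one-out: redundant sources can receive little or no credit.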

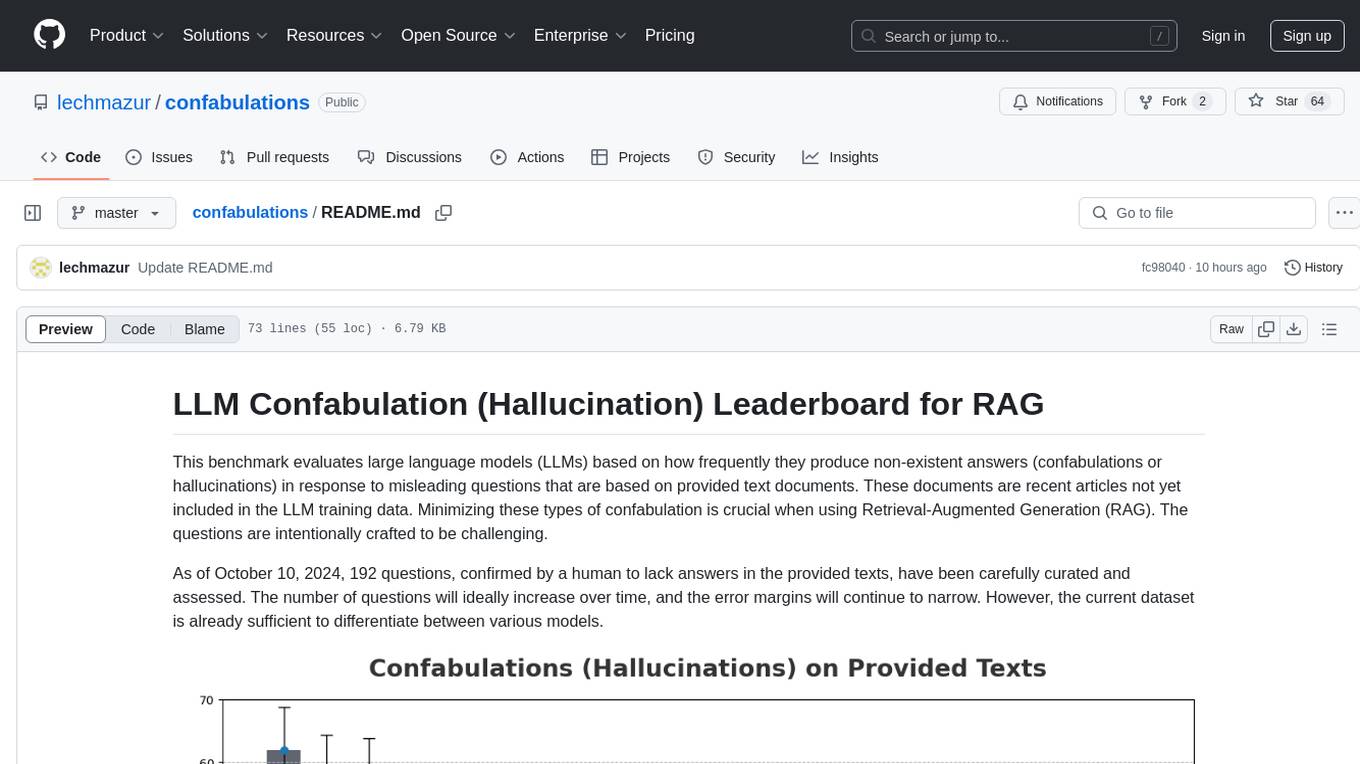

confabulations

LLM Confabulation Leaderboard evaluates large language models based on confabulations and non-response rates to challenging questions. It includes carefully curated questions with no answers in provided texts, aiming to differentiate between various models. The benchmark combines confabulation and non-response rates for comprehensive ranking, offering insights into model performance and tendencies. Additional notes highlight the meticulous human verification process, challenges faced by LLMs in generating valid responses, and the use of temperature settings. Updates and other benchmarks are also mentioned, providing a holistic view of the evaluation landscape.