cake

Distributed LLM and StableDiffusion inference for mobile, desktop and server.

Stars: 2374

cake is a pure Rust implementation of the llama3 LLM distributed inference based on Candle. The project aims to enable running large models on consumer hardware clusters of iOS, macOS, Linux, and Windows devices by sharding transformer blocks. It allows running inferences on models that wouldn't fit in a single device's GPU memory by batching contiguous transformer blocks on the same worker to minimize latency. The tool provides a way to optimize memory and disk space by splitting the model into smaller bundles for workers, ensuring they only have the necessary data. cake supports various OS, architectures, and accelerations, with different statuses for each configuration.

README:

Join the project community on our server!

Cake is a Rust framework for distributed inference of large models like LLama3 and Stable Diffusion based on Candle. The goal of the project is being able to run big (70B+) models by repurposing consumer hardware into an heterogeneous cluster of iOS, Android, macOS, Linux and Windows devices, effectively leveraging planned obsolescence as a tool to make AI more accessible and democratic.

⚠ This is experimental code that's being actively developed and changed very quickly, expect bugs ⚠

The idea is to shard the transformer blocks to multiple devices in order to be able to run the inference on models that wouldn't normally fit in the GPU memory of a single device. Inferences over contiguous transformer blocks on the same worker are batched in order to minimize latency due to data transfer.

| OS | Architectures | Acceleration | Status |

|---|---|---|---|

| GNU/Linux | arm, arm64, x86_64 | - | ✅ |

| GNU/Linux | arm, arm64, x86_64 | CUDA | ✅ |

| GNU/Linux | arm, arm64, x86_64 | BLAS | ✅ |

| Windows | x86_64 | BLAS | untested |

| Windows | x86_64 | CUDA | ✅ |

| macOS | x86_64 | - | ✅ |

| macOS | aarch64 | - | ✅ |

| macOS | aarch64 | Metal | ✅ |

| Android | arm, arm64, x86_64 | - | ✅ |

| Android | arm, arm64, x86_64 | CUDA | untested |

| iOS / iPadOS | aarch64 | - | ✅ |

| iOS / iPadOS | aarch64 | Metal | 🛠️ 90% done, WIP |

| Web | - | WebGPU | in theory possible, not done |

CUDA >= 12.2 is required for CUDA accelerated systems.

With Rust installed, you can build the core library and the CLI utilities with different accelerations.

Without acceleration (will use CPU):

cargo build --releaseWith Metal acceleration for Apple Silicon:

cargo build --release --features metalWith CUDA acceleration:

cargo build --release --features cudaTo generate the iOS bindings in the app that can then be compiled and deployed via XCode:

make iosRun a worker node:

cake-cli --model /path/to/Meta-Llama-3-8B \ # model path, read below on how to optimize model size for workers

--mode worker \ # run as worker

--name worker0 \ # worker name in topology file

--topology topology.yml \ # topology

--address 0.0.0.0:10128 # bind addressRun a master node with an OpenAI compatible REST API:

cake-cli --model /path/to/Meta-Llama-3-8B \ # model path

--api 0.0.0.0:8080 \ # API bind address

--topology topology.yml # topology fileWhere topology.yml determines which layers are served by which worker (you can find a list of all the layers of a model in its tensor index file):

linux_server_1:

host: 'linux_server.host:10128'

description: 'NVIDIA Titan X Pascal (12GB)'

layers:

- 'model.layers.0-5'

linux_server_2:

host: 'linux_server2.host:10128'

description: 'NVIDIA GeForce 3080 (10GB)'

layers:

- 'model.layers.6-16'

iphone:

host: 'iphone.host:10128'

description: 'iPhone 15 Pro Max'

layers:

- 'model.layers.17'

ipad:

host: 'ipad.host:10128'

description: 'iPad'

layers:

- 'model.layers.18-19'

macbook:

host: 'macbook.host:10128'

description: 'M1 Max'

layers:

- 'model.layers.20-31'You can now interact with the cluster by:

curl http://master-ip:8080/api/v1/chat/completions \ ~

-H "Content-Type: application/json" \

-d '{

"messages": [

{

"role": "system",

"content": "You are a helpful AI assistant."

},

{

"role": "user",

"content": "Why is the sky blue?"

}

]

}'As a memory and disk space optimization, you might want to give the worker only the data it actually needs from the model instead of the whole folder, in which case you can use the cake-split-model utility. For instance to generate a smaller version of the llama3 safetensors, you can:

cake-split-model --model-path path/to/Meta-Llama-3-8B \ # source model to split

--topology path/to/topology.yml \ # topology file

--output output-folder-name # output folder where all the workers data bundles will be savedThis will create a smaller folder with only the required layers tensors and the topology file for the specific worker. Remember to also copy other model contents (config.json, tokenizer.json, etc) in the worker bundle before deploying it.

Define the model parts inside topology.yml:

wsl2_on_windows:

host: 192.168.1.2:10128

description: NVIDIA RTX 4090 24GB

layers:

- unet

macbook:

host: 192.168.1.3:10128

description: Macbook M2

layers:

- clip

- vaeRun a worker node:

cake-cli --model /path/to/hf/cache \ # The cache dir for huggingface models

--mode worker \ # run as worker

--name wsl2_on_windows \ # worker name in topology file

--model-type image-model \ # use image-model for SD, text-model or skip for LLM

--topology topology.yml \ # topology

--address 0.0.0.0:10128 # bind addressThe model could be switched between SD1.5, SD2.1, SDXL and SDXL Turbo by specifying more command line arguments.

The model files will be downloaded from Huggingface automatically if not found in the local cache directory.

Run a master node with REST API:

cake-cli --model /path/to/hf/cache \ # The cache dir for huggingface models

--api 0.0.0.0:8080 \ # API bind address

--model-type image-model \ # use image-model for SD, text-model or skip for LLM

--topology topology.yml # topology fileGenerate images using the cluster:

curl http://master-ip:8080/api/v1/image \ ~

-H "Content-Type: application/json" \

-d '{

"image_args": {

"sd-image-prompt": "An old man sitting on the chair at seaside",

"sd-num-samples": 1,

"sd-image-seed": 2439383

}

}'More control arguments could be found inside the codes.

Released under the GPL 3 license. To see the licenses of the project dependencies, install cargo license with cargo install cargo-license and then run cargo license.

For Tasks:

Click tags to check more tools for each tasksFor Jobs:

Alternative AI tools for cake

Similar Open Source Tools

cake

cake is a pure Rust implementation of the llama3 LLM distributed inference based on Candle. The project aims to enable running large models on consumer hardware clusters of iOS, macOS, Linux, and Windows devices by sharding transformer blocks. It allows running inferences on models that wouldn't fit in a single device's GPU memory by batching contiguous transformer blocks on the same worker to minimize latency. The tool provides a way to optimize memory and disk space by splitting the model into smaller bundles for workers, ensuring they only have the necessary data. cake supports various OS, architectures, and accelerations, with different statuses for each configuration.

text-embeddings-inference

Text Embeddings Inference (TEI) is a toolkit for deploying and serving open source text embeddings and sequence classification models. TEI enables high-performance extraction for popular models like FlagEmbedding, Ember, GTE, and E5. It implements features such as no model graph compilation step, Metal support for local execution on Macs, small docker images with fast boot times, token-based dynamic batching, optimized transformers code for inference using Flash Attention, Candle, and cuBLASLt, Safetensors weight loading, and production-ready features like distributed tracing with Open Telemetry and Prometheus metrics.

google-cloud-gcp-openai-api

This project provides a drop-in replacement REST API for Google Cloud Vertex AI (PaLM 2, Codey, Gemini) that is compatible with the OpenAI API specifications. It aims to make Google Cloud Platform Vertex AI more accessible by translating OpenAI API calls to Vertex AI. The software is developed in Python and based on FastAPI and LangChain, designed to be simple and customizable for individual needs. It includes step-by-step guides for deployment, supports various OpenAI API services, and offers configuration through environment variables. Additionally, it provides examples for running locally and usage instructions consistent with the OpenAI API format.

moatless-tools

Moatless Tools is a hobby project focused on experimenting with using Large Language Models (LLMs) to edit code in large existing codebases. The project aims to build tools that insert the right context into prompts and handle responses effectively. It utilizes an agentic loop functioning as a finite state machine to transition between states like Search, Identify, PlanToCode, ClarifyChange, and EditCode for code editing tasks.

runpod-worker-comfy

runpod-worker-comfy is a serverless API tool that allows users to run any ComfyUI workflow to generate an image. Users can provide input images as base64-encoded strings, and the generated image can be returned as a base64-encoded string or uploaded to AWS S3. The tool is built on Ubuntu + NVIDIA CUDA and provides features like built-in checkpoints and VAE models. Users can configure environment variables to upload images to AWS S3 and interact with the RunPod API to generate images. The tool also supports local testing and deployment to Docker hub using Github Actions.

mLoRA

mLoRA (Multi-LoRA Fine-Tune) is an open-source framework for efficient fine-tuning of multiple Large Language Models (LLMs) using LoRA and its variants. It allows concurrent fine-tuning of multiple LoRA adapters with a shared base model, efficient pipeline parallelism algorithm, support for various LoRA variant algorithms, and reinforcement learning preference alignment algorithms. mLoRA helps save computational and memory resources when training multiple adapters simultaneously, achieving high performance on consumer hardware.

LEADS

LEADS is a lightweight embedded assisted driving system designed to simplify the development of instrumentation, control, and analysis systems for racing cars. It is written in Python and C/C++ with impressive performance. The system is customizable and provides abstract layers for component rearrangement. It supports hardware components like Raspberry Pi and Arduino, and can adapt to various hardware types. LEADS offers a modular structure with a focus on flexibility and lightweight design. It includes robust safety features, modern GUI design with dark mode support, high performance on different platforms, and powerful ESC systems for traction control and braking. The system also supports real-time data sharing, live video streaming, and AI-enhanced data analysis for driver training. LEADS VeC Remote Analyst enables transparency between the driver and pit crew, allowing real-time data sharing and analysis. The system is designed to be user-friendly, adaptable, and efficient for racing car development.

AQLM

AQLM is the official PyTorch implementation for Extreme Compression of Large Language Models via Additive Quantization. It includes prequantized AQLM models without PV-Tuning and PV-Tuned models for LLaMA, Mistral, and Mixtral families. The repository provides inference examples, model details, and quantization setups. Users can run prequantized models using Google Colab examples, work with different model families, and install the necessary inference library. The repository also offers detailed instructions for quantization, fine-tuning, and model evaluation. AQLM quantization involves calibrating models for compression, and users can improve model accuracy through finetuning. Additionally, the repository includes information on preparing models for inference and contributing guidelines.

skyvern

Skyvern automates browser-based workflows using LLMs and computer vision. It provides a simple API endpoint to fully automate manual workflows, replacing brittle or unreliable automation solutions. Traditional approaches to browser automations required writing custom scripts for websites, often relying on DOM parsing and XPath-based interactions which would break whenever the website layouts changed. Instead of only relying on code-defined XPath interactions, Skyvern adds computer vision and LLMs to the mix to parse items in the viewport in real-time, create a plan for interaction and interact with them. This approach gives us a few advantages: 1. Skyvern can operate on websites it’s never seen before, as it’s able to map visual elements to actions necessary to complete a workflow, without any customized code 2. Skyvern is resistant to website layout changes, as there are no pre-determined XPaths or other selectors our system is looking for while trying to navigate 3. Skyvern leverages LLMs to reason through interactions to ensure we can cover complex situations. Examples include: 1. If you wanted to get an auto insurance quote from Geico, the answer to a common question “Were you eligible to drive at 18?” could be inferred from the driver receiving their license at age 16 2. If you were doing competitor analysis, it’s understanding that an Arnold Palmer 22 oz can at 7/11 is almost definitely the same product as a 23 oz can at Gopuff (even though the sizes are slightly different, which could be a rounding error!) Want to see examples of Skyvern in action? Jump to #real-world-examples-of- skyvern

paxml

Pax is a framework to configure and run machine learning experiments on top of Jax.

r2ai

r2ai is a tool designed to run a language model locally without internet access. It can be used to entertain users or assist in answering questions related to radare2 or reverse engineering. The tool allows users to prompt the language model, index large codebases, slurp file contents, embed the output of an r2 command, define different system-level assistant roles, set environment variables, and more. It is accessible as an r2lang-python plugin and can be scripted from various languages. Users can use different models, adjust query templates dynamically, load multiple models, and make them communicate with each other.

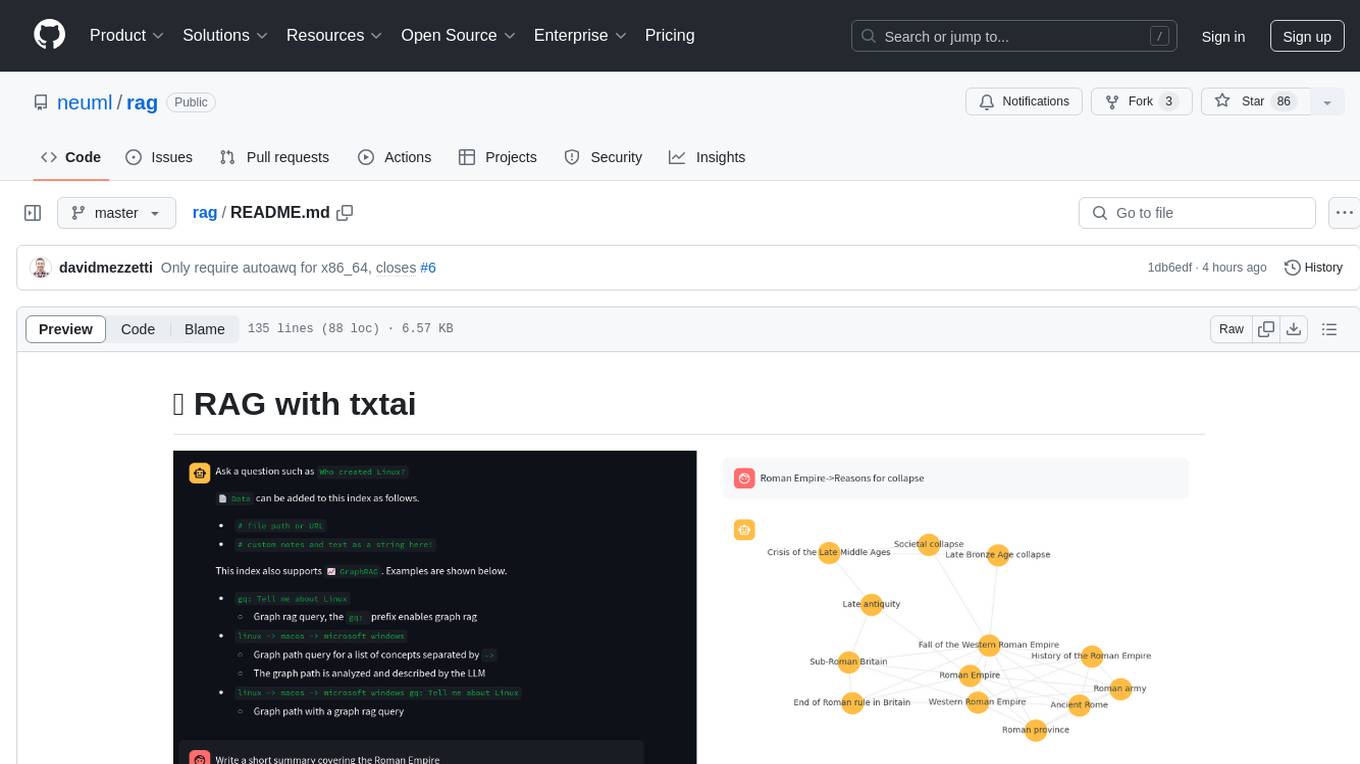

rag

RAG with txtai is a Retrieval Augmented Generation (RAG) Streamlit application that helps generate factually correct content by limiting the context in which a Large Language Model (LLM) can generate answers. It supports two categories of RAG: Vector RAG, where context is supplied via a vector search query, and Graph RAG, where context is supplied via a graph path traversal query. The application allows users to run queries, add data to the index, and configure various parameters to control its behavior.

stable-diffusion-webui

Stable Diffusion WebUI Docker Image allows users to run Automatic1111 WebUI in a docker container locally or in the cloud. The images do not bundle models or third-party configurations, requiring users to use a provisioning script for container configuration. It supports NVIDIA CUDA, AMD ROCm, and CPU platforms, with additional environment variables for customization and pre-configured templates for Vast.ai and Runpod.io. The service is password protected by default, with options for version pinning, startup flags, and service management using supervisorctl.

Construction-Hazard-Detection

Construction-Hazard-Detection is an AI-driven tool focused on improving safety at construction sites by utilizing the YOLOv8 model for object detection. The system identifies potential hazards like overhead heavy loads and steel pipes, providing real-time analysis and warnings. Users can configure the system via a YAML file and run it using Docker. The primary dataset used for training is the Construction Site Safety Image Dataset enriched with additional annotations. The system logs are accessible within the Docker container for debugging, and notifications are sent through the LINE messaging API when hazards are detected.

chatgpt-cli

ChatGPT CLI provides a powerful command-line interface for seamless interaction with ChatGPT models via OpenAI and Azure. It features streaming capabilities, extensive configuration options, and supports various modes like streaming, query, and interactive mode. Users can manage thread-based context, sliding window history, and provide custom context from any source. The CLI also offers model and thread listing, advanced configuration options, and supports GPT-4, GPT-3.5-turbo, and Perplexity's models. Installation is available via Homebrew or direct download, and users can configure settings through default values, a config.yaml file, or environment variables.

thepipe

The Pipe is a multimodal-first tool for feeding files and web pages into vision-language models such as GPT-4V. It is best for LLM and RAG applications that require a deep understanding of tricky data sources. The Pipe is available as a hosted API at thepi.pe, or it can be set up locally.

For similar tasks

cake

cake is a pure Rust implementation of the llama3 LLM distributed inference based on Candle. The project aims to enable running large models on consumer hardware clusters of iOS, macOS, Linux, and Windows devices by sharding transformer blocks. It allows running inferences on models that wouldn't fit in a single device's GPU memory by batching contiguous transformer blocks on the same worker to minimize latency. The tool provides a way to optimize memory and disk space by splitting the model into smaller bundles for workers, ensuring they only have the necessary data. cake supports various OS, architectures, and accelerations, with different statuses for each configuration.

llm-export

llm-export is a tool for exporting llm models to onnx and mnn formats. It has features such as passing onnxruntime correctness tests, optimizing the original code to support dynamic shapes, reducing constant parts, optimizing onnx models using OnnxSlim for performance improvement, and exporting lora weights to onnx and mnn formats. Users can clone the project locally, clone the desired LLM project locally, and use LLMExporter to export the model. The tool supports various export options like exporting the entire model as one onnx model, exporting model segments as multiple models, exporting model vocabulary to a text file, exporting specific model layers like Embedding and lm_head, testing the model with queries, validating onnx model consistency with onnxruntime, converting onnx models to mnn models, and more. Users can specify export paths, skip optimization steps, and merge lora weights before exporting.

llm-deploy

LLM-Deploy focuses on the theory and practice of model/LLM reasoning and deployment, aiming to be your partner in mastering the art of LLM reasoning and deployment. Whether you are a newcomer to this field or a senior professional seeking to deepen your skills, you can find the key path to successfully deploy large language models here. The project covers reasoning and deployment theories, model and service optimization practices, and outputs from experienced engineers. It serves as a valuable resource for algorithm engineers and individuals interested in reasoning deployment.

djl

Deep Java Library (DJL) is an open-source, high-level, engine-agnostic Java framework for deep learning. It is designed to be easy to get started with and simple to use for Java developers. DJL provides a native Java development experience and allows users to integrate machine learning and deep learning models with their Java applications. The framework is deep learning engine agnostic, enabling users to switch engines at any point for optimal performance. DJL's ergonomic API interface guides users with best practices to accomplish deep learning tasks, such as running inference and training neural networks.

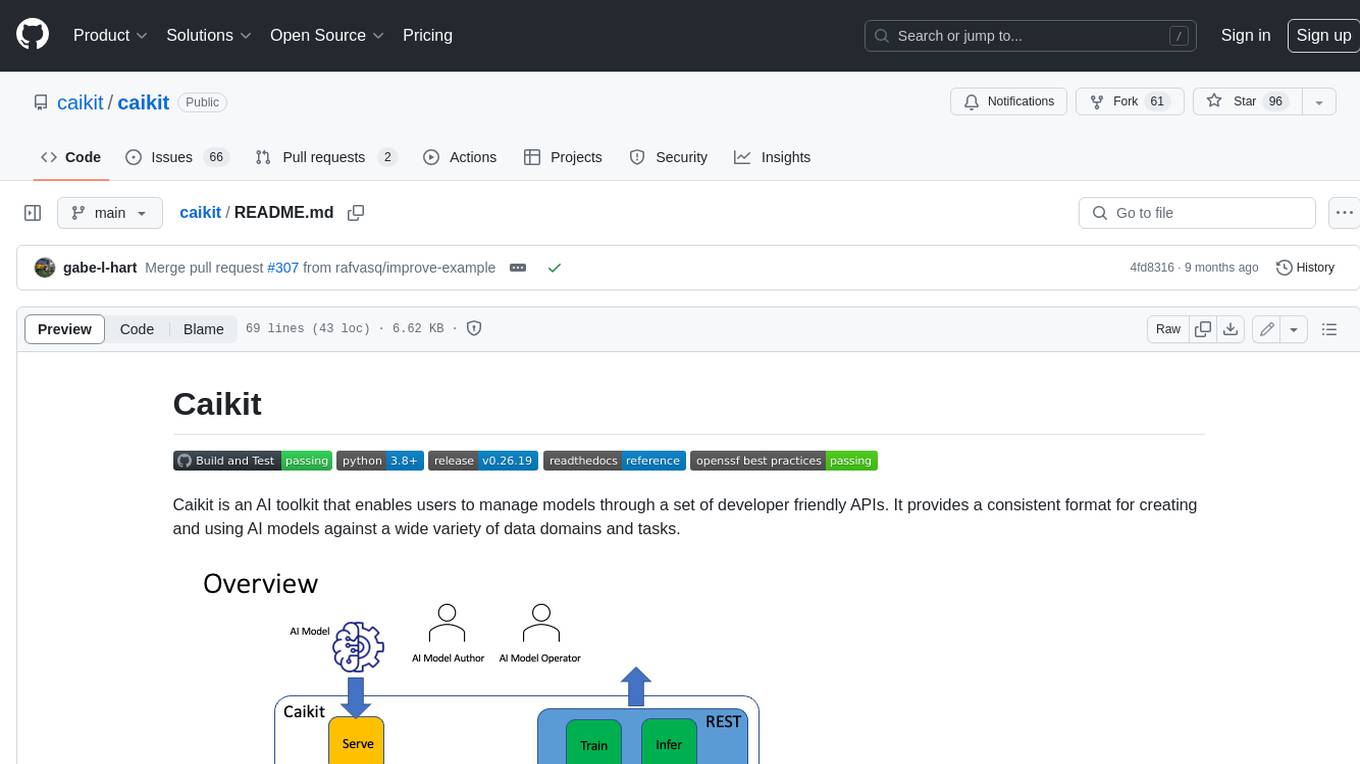

caikit

Caikit is an AI toolkit that enables users to manage models through a set of developer friendly APIs. It provides a consistent format for creating and using AI models against a wide variety of data domains and tasks.

agents

The LiveKit Agent Framework is designed for building real-time, programmable participants that run on servers. Easily tap into LiveKit WebRTC sessions and process or generate audio, video, and data streams. The framework includes plugins for common workflows, such as voice activity detection and speech-to-text. Agents integrates seamlessly with LiveKit server, offloading job queuing and scheduling responsibilities to it. This eliminates the need for additional queuing infrastructure. Agent code developed on your local machine can scale to support thousands of concurrent sessions when deployed to a server in production.

llm-finetuning

llm-finetuning is a repository that provides a serverless twist to the popular axolotl fine-tuning library using Modal's serverless infrastructure. It allows users to quickly fine-tune any LLM model with state-of-the-art optimizations like Deepspeed ZeRO, LoRA adapters, Flash attention, and Gradient checkpointing. The repository simplifies the fine-tuning process by not exposing all CLI arguments, instead allowing users to specify options in a config file. It supports efficient training and scaling across multiple GPUs, making it suitable for production-ready fine-tuning jobs.

LeanCopilot

Lean Copilot is a tool that enables the use of large language models (LLMs) in Lean for proof automation. It provides features such as suggesting tactics/premises, searching for proofs, and running inference of LLMs. Users can utilize built-in models from LeanDojo or bring their own models to run locally or on the cloud. The tool supports platforms like Linux, macOS, and Windows WSL, with optional CUDA and cuDNN for GPU acceleration. Advanced users can customize behavior using Tactic APIs and Model APIs. Lean Copilot also allows users to bring their own models through ExternalGenerator or ExternalEncoder. The tool comes with caveats such as occasional crashes and issues with premise selection and proof search. Users can get in touch through GitHub Discussions for questions, bug reports, feature requests, and suggestions. The tool is designed to enhance theorem proving in Lean using LLMs.

For similar jobs

LitServe

LitServe is a high-throughput serving engine designed for deploying AI models at scale. It generates an API endpoint for models, handles batching, streaming, and autoscaling across CPU/GPUs. LitServe is built for enterprise scale with a focus on minimal, hackable code-base without bloat. It supports various model types like LLMs, vision, time-series, and works with frameworks like PyTorch, JAX, Tensorflow, and more. The tool allows users to focus on model performance rather than serving boilerplate, providing full control and flexibility.

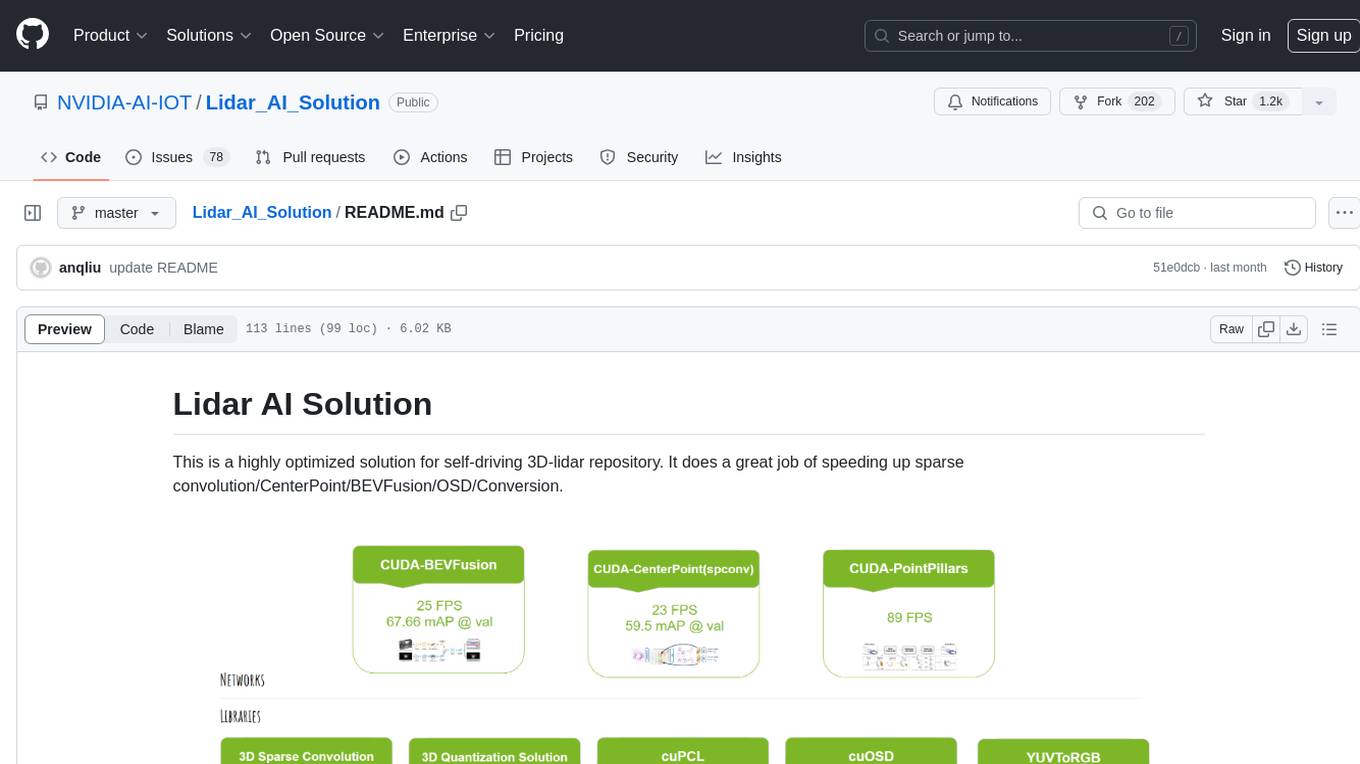

Lidar_AI_Solution

Lidar AI Solution is a highly optimized repository for self-driving 3D lidar, providing solutions for sparse convolution, BEVFusion, CenterPoint, OSD, and Conversion. It includes CUDA and TensorRT implementations for various tasks such as 3D sparse convolution, BEVFusion, CenterPoint, PointPillars, V2XFusion, cuOSD, cuPCL, and YUV to RGB conversion. The repository offers easy-to-use solutions, high accuracy, low memory usage, and quantization options for different tasks related to self-driving technology.

generative-ai-sagemaker-cdk-demo

This repository showcases how to deploy generative AI models from Amazon SageMaker JumpStart using the AWS CDK. Generative AI is a type of AI that can create new content and ideas, such as conversations, stories, images, videos, and music. The repository provides a detailed guide on deploying image and text generative AI models, utilizing pre-trained models from SageMaker JumpStart. The web application is built on Streamlit and hosted on Amazon ECS with Fargate. It interacts with the SageMaker model endpoints through Lambda functions and Amazon API Gateway. The repository also includes instructions on setting up the AWS CDK application, deploying the stacks, using the models, and viewing the deployed resources on the AWS Management Console.

cake

cake is a pure Rust implementation of the llama3 LLM distributed inference based on Candle. The project aims to enable running large models on consumer hardware clusters of iOS, macOS, Linux, and Windows devices by sharding transformer blocks. It allows running inferences on models that wouldn't fit in a single device's GPU memory by batching contiguous transformer blocks on the same worker to minimize latency. The tool provides a way to optimize memory and disk space by splitting the model into smaller bundles for workers, ensuring they only have the necessary data. cake supports various OS, architectures, and accelerations, with different statuses for each configuration.

Awesome-Robotics-3D

Awesome-Robotics-3D is a curated list of 3D Vision papers related to Robotics domain, focusing on large models like LLMs/VLMs. It includes papers on Policy Learning, Pretraining, VLM and LLM, Representations, and Simulations, Datasets, and Benchmarks. The repository is maintained by Zubair Irshad and welcomes contributions and suggestions for adding papers. It serves as a valuable resource for researchers and practitioners in the field of Robotics and Computer Vision.

tensorzero

TensorZero is an open-source platform that helps LLM applications graduate from API wrappers into defensible AI products. It enables a data & learning flywheel for LLMs by unifying inference, observability, optimization, and experimentation. The platform includes a high-performance model gateway, structured schema-based inference, observability, experimentation, and data warehouse for analytics. TensorZero Recipes optimize prompts and models, and the platform supports experimentation features and GitOps orchestration for deployment.

vector-inference

This repository provides an easy-to-use solution for running inference servers on Slurm-managed computing clusters using vLLM. All scripts in this repository run natively on the Vector Institute cluster environment. Users can deploy models as Slurm jobs, check server status and performance metrics, and shut down models. The repository also supports launching custom models with specific configurations. Additionally, users can send inference requests and set up an SSH tunnel to run inference from a local device.

rhesis

Rhesis is a comprehensive test management platform designed for Gen AI teams, offering tools to create, manage, and execute test cases for generative AI applications. It ensures the robustness, reliability, and compliance of AI systems through features like test set management, automated test generation, edge case discovery, compliance validation, integration capabilities, and performance tracking. The platform is open source, emphasizing community-driven development, transparency, extensible architecture, and democratizing AI safety. It includes components such as backend services, frontend applications, SDK for developers, worker services, chatbot applications, and Polyphemus for uncensored LLM service. Rhesis enables users to address challenges unique to testing generative AI applications, such as non-deterministic outputs, hallucinations, edge cases, ethical concerns, and compliance requirements.