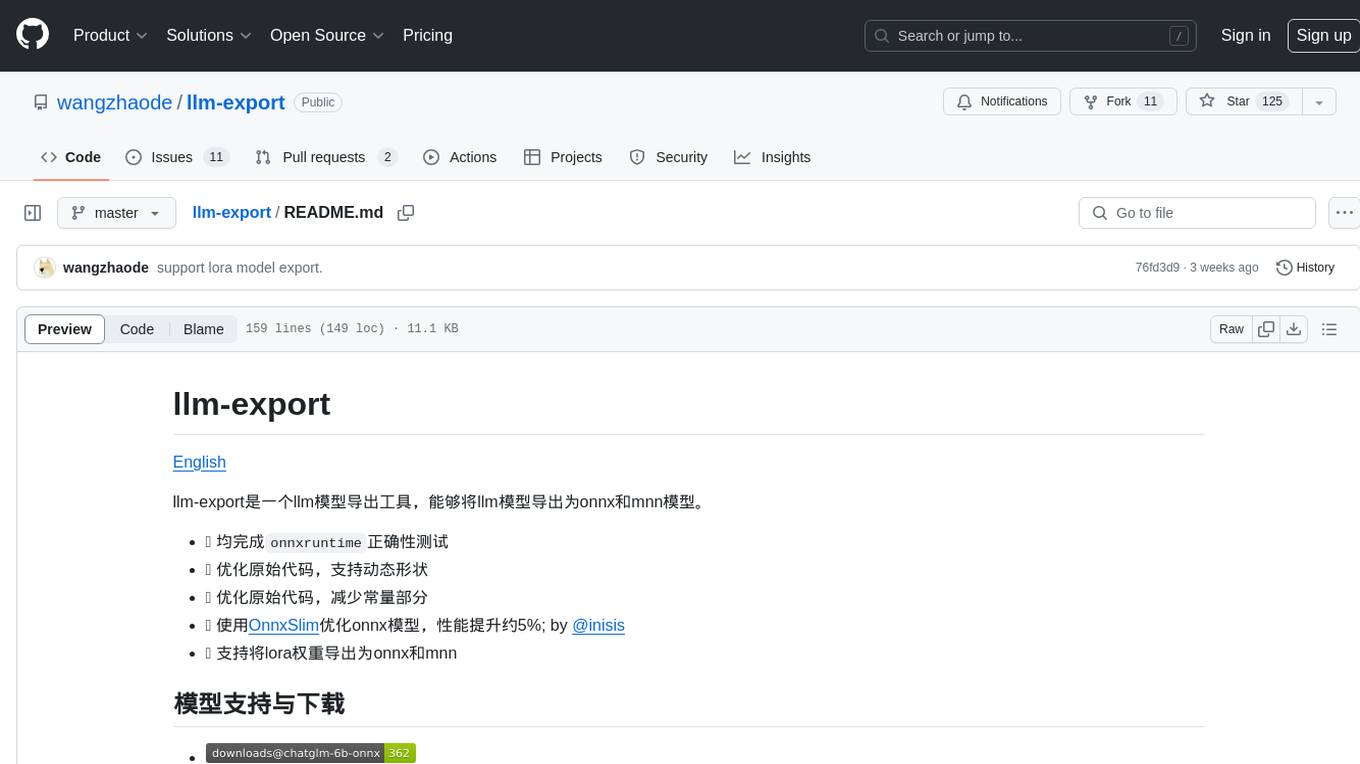

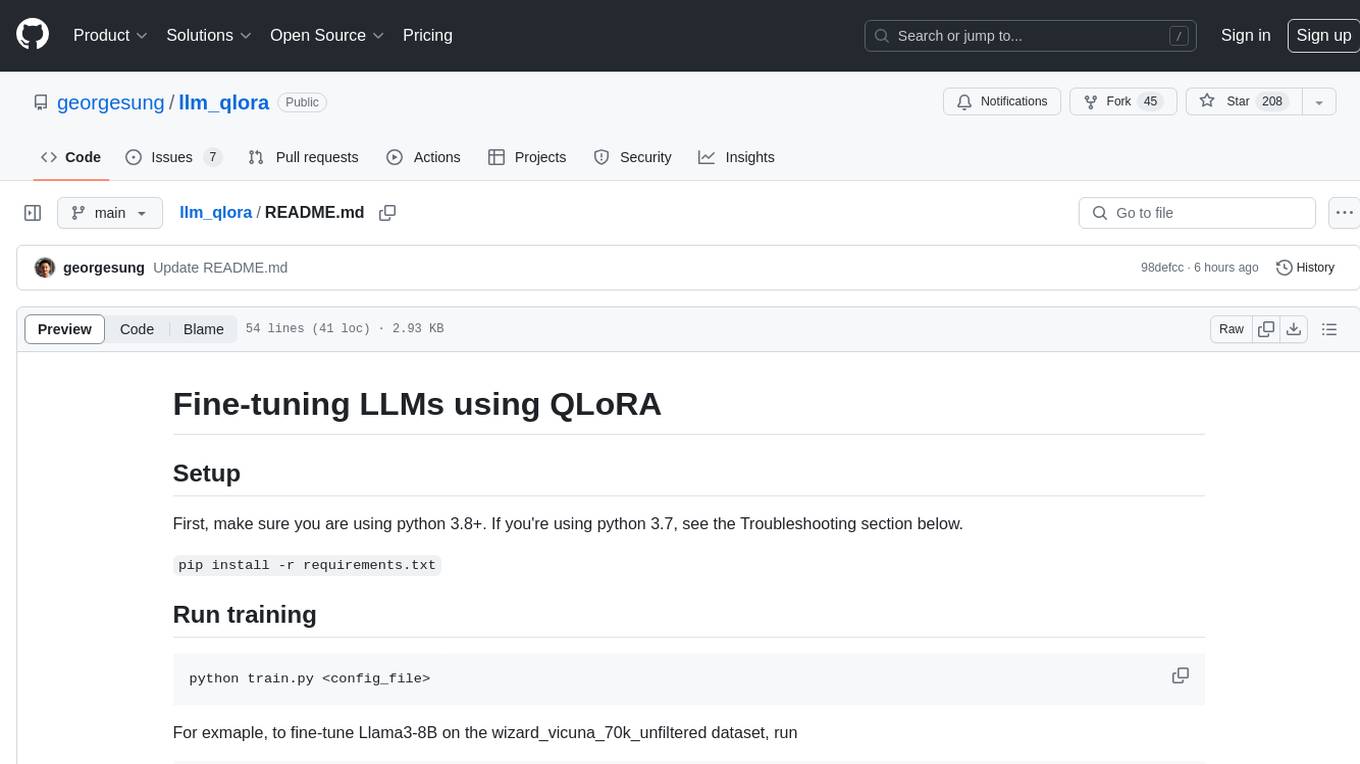

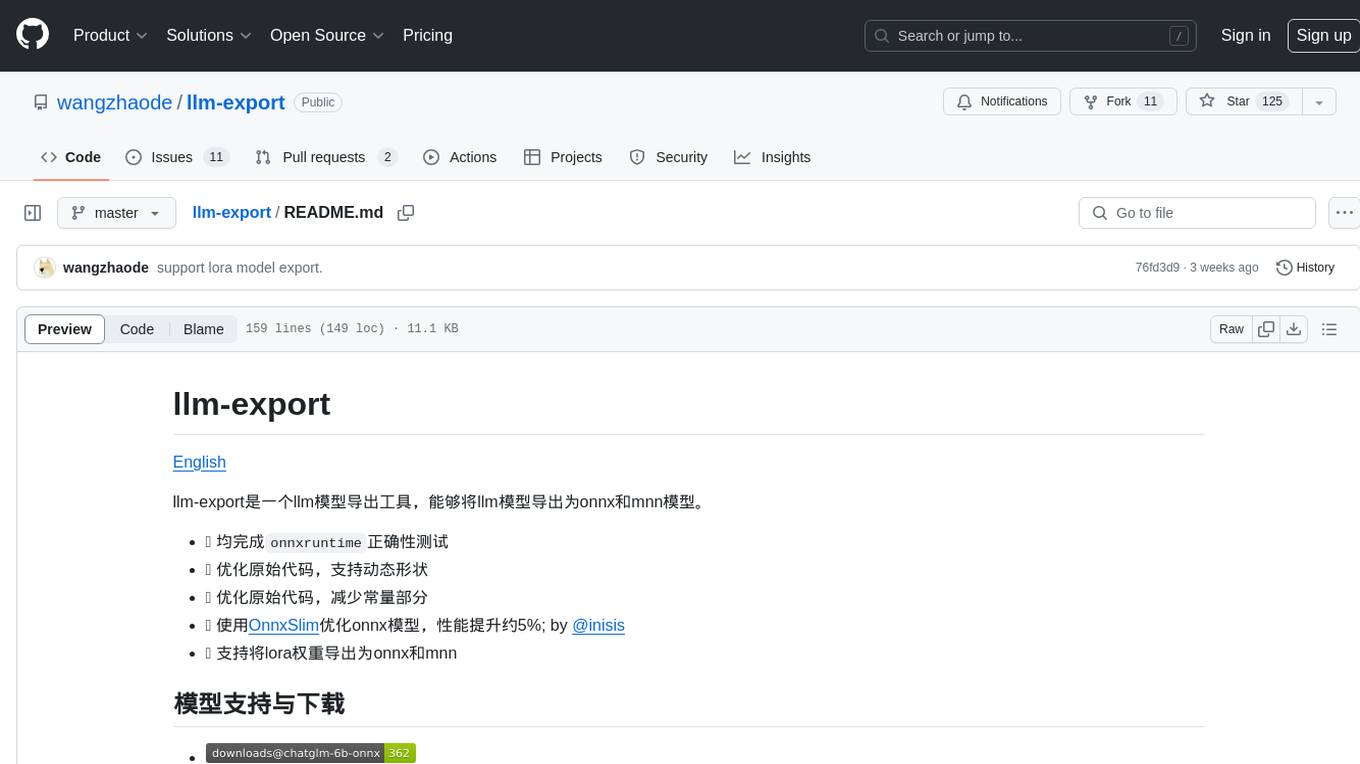

llm-export

llm-export can export llm model to onnx.

Stars: 255

llm-export is a tool for exporting llm models to onnx and mnn formats. It has features such as passing onnxruntime correctness tests, optimizing the original code to support dynamic shapes, reducing constant parts, optimizing onnx models using OnnxSlim for performance improvement, and exporting lora weights to onnx and mnn formats. Users can clone the project locally, clone the desired LLM project locally, and use LLMExporter to export the model. The tool supports various export options like exporting the entire model as one onnx model, exporting model segments as multiple models, exporting model vocabulary to a text file, exporting specific model layers like Embedding and lm_head, testing the model with queries, validating onnx model consistency with onnxruntime, converting onnx models to mnn models, and more. Users can specify export paths, skip optimization steps, and merge lora weights before exporting.

README:

llm-export是一个llm模型导出工具,能够将llm模型导出为onnx和mnn模型。

- 🚀 优化原始代码,支持动态形状

- 🚀 优化原始代码,减少常量部分

- 🚀 使用OnnxSlim优化onnx模型,性能提升约5%; by @inisis

- 🚀 支持将lora权重导出为onnx和mnn

- 🚀 MNN推理代码mnn-llm

- 🚀 Onnx推理代码onnx-llm, OnnxLLM

# pip install

pip install llmexport

# git install

pip install git+https://github.com/wangzhaode/llm-export@master

# local install

git clone https://github.com/wangzhaode/llm-export && cd llm-export/

pip install .- 下载模型

git clone https://huggingface.co/Qwen/Qwen2-1.5B-Instruct

# 如果huggingface下载慢可以使用modelscope

git clone https://modelscope.cn/qwen/Qwen2-1.5B-Instruct.git- 测试模型

# 测试文本输入

llmexport --path Qwen2-1.5B-Instruct --test "你好"

# 测试图像文本

llmexport --path Qwen2-VL-2B-Instruct --test "<img>https://qianwen-res.oss-cn-beijing.aliyuncs.com/Qwen-VL/assets/demo.jpeg</img>介绍一下图片里的内容"- 导出模型

# 将Qwen2-1.5B-Instruct导出为onnx模型

llmexport --path Qwen2-1.5B-Instruct --export onnx

# 将Qwen2-1.5B-Instruct导出为mnn模型, 量化参数为4bit, blokc-wise = 128

llmexport --path Qwen2-1.5B-Instruct --export mnn --quant_bit 4 --quant_block 128- 支持将模型为onnx或mnn模型,使用

--export onnx或--export mnn - 支持对模型进行对话测试,使用

--test $query会返回llm的回复内容 - 默认会使用onnx-slim对onnx模型进行优化,跳过该步骤使用

--skip_slim - 制定量化bit数使用

--quant_bit;量化的block大小使用--quant_block - 使用

--lm_quant_bit来制定lm_head层权重的量化bit数,不指定则使用--quant_bit的量化bit数 - 支持使用自己编译的

MNNConvert,使用--mnnconvert - 支持

awq量化 - 支持使用

GPTQ量化的模型权重 - 支持LoRA权重的合并/分离导出,使用

--lora_path和--lora_split

usage: llmexport.py [-h] --path PATH [--type TYPE] [--tokenizer_path TOKENIZER_PATH] [--lora_path LORA_PATH] [--gptq_path GPTQ_PATH] [--dst_path DST_PATH]

[--verbose] [--test TEST] [--export EXPORT] [--onnx_slim] [--quant_bit QUANT_BIT] [--quant_block QUANT_BLOCK] [--lm_quant_bit LM_QUANT_BIT]

[--mnnconvert MNNCONVERT] [--ppl] [--awq] [--sym] [--tie_embed] [--lora_split]

llm_exporter

options:

-h, --help show this help message and exit

--path PATH path(`str` or `os.PathLike`):

Can be either:

- A string, the *model id* of a pretrained model like `THUDM/chatglm-6b`. [TODO]

- A path to a *directory* clone from repo like `../chatglm-6b`.

--type TYPE type(`str`, *optional*):

The pretrain llm model type.

--tokenizer_path TOKENIZER_PATH

tokenizer path, defaut is `None` mean using `--path` value.

--lora_path LORA_PATH

lora path, defaut is `None` mean not apply lora.

--gptq_path GPTQ_PATH

gptq path, defaut is `None` mean not apply gptq.

--dst_path DST_PATH export onnx/mnn model to path, defaut is `./model`.

--verbose Whether or not to print verbose.

--test TEST test model inference with query `TEST`.

--export EXPORT export model to an onnx/mnn model.

--onnx_slim Whether or not to use onnx-slim.

--quant_bit QUANT_BIT

mnn quant bit, 4 or 8, default is 4.

--quant_block QUANT_BLOCK

mnn quant block, default is 0 mean channle-wise.

--lm_quant_bit LM_QUANT_BIT

mnn lm_head quant bit, 4 or 8, default is `quant_bit`.

--mnnconvert MNNCONVERT

local mnnconvert path, if invalid, using pymnn.

--ppl Whether or not to get all logits of input tokens.

--awq Whether or not to use awq quant.

--sym Whether or not to using symmetric quant (without zeropoint), defualt is False.

--tie_embed Whether or not to using tie_embedding, defualt is False.

--lora_split Whether or not export lora split, defualt is False.

For Tasks:

Click tags to check more tools for each tasksFor Jobs:

Alternative AI tools for llm-export

Similar Open Source Tools

llm-export

llm-export is a tool for exporting llm models to onnx and mnn formats. It has features such as passing onnxruntime correctness tests, optimizing the original code to support dynamic shapes, reducing constant parts, optimizing onnx models using OnnxSlim for performance improvement, and exporting lora weights to onnx and mnn formats. Users can clone the project locally, clone the desired LLM project locally, and use LLMExporter to export the model. The tool supports various export options like exporting the entire model as one onnx model, exporting model segments as multiple models, exporting model vocabulary to a text file, exporting specific model layers like Embedding and lm_head, testing the model with queries, validating onnx model consistency with onnxruntime, converting onnx models to mnn models, and more. Users can specify export paths, skip optimization steps, and merge lora weights before exporting.

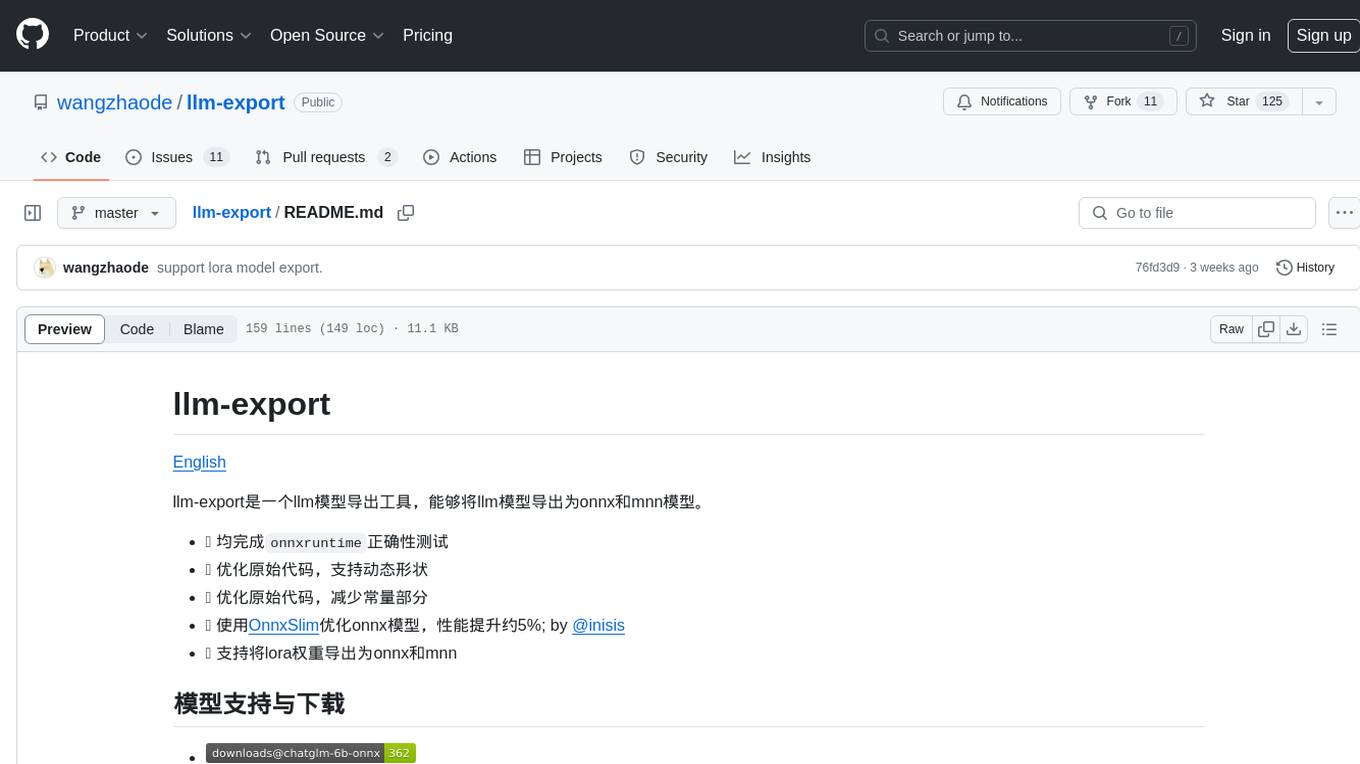

no-cost-ai

No-cost-ai is a repository dedicated to providing a comprehensive list of free AI models and tools for developers, researchers, and curious builders. It serves as a living index for accessing state-of-the-art AI models without any cost. The repository includes information on various AI applications such as chat interfaces, media generation, voice and music tools, AI IDEs, and developer APIs and platforms. Users can find links to free models, their limits, and usage instructions. Contributions to the repository are welcome, and users are advised to use the listed services at their own risk due to potential changes in models, limitations, and reliability of free services.

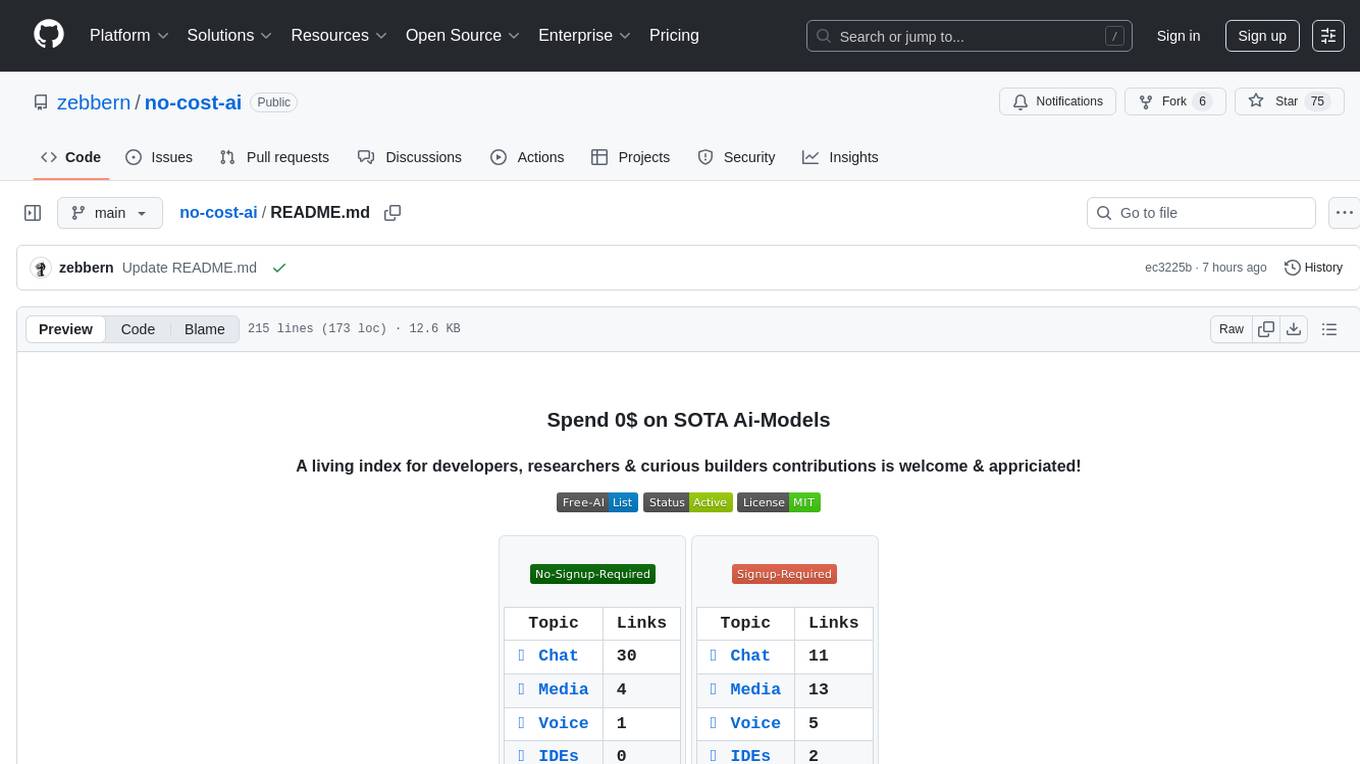

open-llms

Open LLMs is a repository containing various Large Language Models licensed for commercial use. It includes models like T5, GPT-NeoX, UL2, Bloom, Cerebras-GPT, Pythia, Dolly, and more. These models are designed for tasks such as transfer learning, language understanding, chatbot development, code generation, and more. The repository provides information on release dates, checkpoints, papers/blogs, parameters, context length, and licenses for each model. Contributions to the repository are welcome, and it serves as a resource for exploring the capabilities of different language models.

Github-Ranking-AI

This repository provides a list of the most starred and forked repositories on GitHub. It is updated automatically and includes information such as the project name, number of stars, number of forks, language, number of open issues, description, and last commit date. The repository is divided into two sections: LLM and chatGPT. The LLM section includes repositories related to large language models, while the chatGPT section includes repositories related to the chatGPT chatbot.

Awesome-LLM-Eval

Awesome-LLM-Eval: a curated list of tools, benchmarks, demos, papers for Large Language Models (like ChatGPT, LLaMA, GLM, Baichuan, etc) Evaluation on Language capabilities, Knowledge, Reasoning, Fairness and Safety.

aidea-server

AIdea Server is an open-source Golang-based server that integrates mainstream large language models and drawing models. It supports various functionalities including OpenAI's GPT-3.5 and GPT-4, Anthropic's Claude instant and Claude 2.1, Google's Gemini Pro, as well as Chinese models like Tongyi Qianwen, Wenxin Yiyuan, and more. It also supports open-source large models like Yi 34B, Llama2, and AquilaChat 7B. Additionally, it provides features for text-to-image, super-resolution, coloring black and white images, generating art fonts and QR codes, among others.

VoiceBench

VoiceBench is a repository containing code and data for benchmarking LLM-Based Voice Assistants. It includes a leaderboard with rankings of various voice assistant models based on different evaluation metrics. The repository provides setup instructions, datasets, evaluation procedures, and a curated list of awesome voice assistants. Users can submit new voice assistant results through the issue tracker for updates on the ranking list.

LLamaTuner

LLamaTuner is a repository for the Efficient Finetuning of Quantized LLMs project, focusing on building and sharing instruction-following Chinese baichuan-7b/LLaMA/Pythia/GLM model tuning methods. The project enables training on a single Nvidia RTX-2080TI and RTX-3090 for multi-round chatbot training. It utilizes bitsandbytes for quantization and is integrated with Huggingface's PEFT and transformers libraries. The repository supports various models, training approaches, and datasets for supervised fine-tuning, LoRA, QLoRA, and more. It also provides tools for data preprocessing and offers models in the Hugging Face model hub for inference and finetuning. The project is licensed under Apache 2.0 and acknowledges contributions from various open-source contributors.

LightMem

LightMem is a lightweight and efficient memory management framework designed for Large Language Models and AI Agents. It provides a simple yet powerful memory storage, retrieval, and update mechanism to help you quickly build intelligent applications with long-term memory capabilities. The framework is minimalist in design, ensuring minimal resource consumption and fast response times. It offers a simple API for easy integration into applications with just a few lines of code. LightMem's modular architecture supports custom storage engines and retrieval strategies, making it flexible and extensible. It is compatible with various cloud APIs like OpenAI and DeepSeek, as well as local models such as Ollama and vLLM.

Awesome-Tabular-LLMs

This repository is a collection of papers on Tabular Large Language Models (LLMs) specialized for processing tabular data. It includes surveys, models, and applications related to table understanding tasks such as Table Question Answering, Table-to-Text, Text-to-SQL, and more. The repository categorizes the papers based on key ideas and provides insights into the advancements in using LLMs for processing diverse tables and fulfilling various tabular tasks based on natural language instructions.

go-cyber

Cyber is a superintelligence protocol that aims to create a decentralized and censorship-resistant internet. It uses a novel consensus mechanism called CometBFT and a knowledge graph to store and process information. Cyber is designed to be scalable, secure, and efficient, and it has the potential to revolutionize the way we interact with the internet.

are-copilots-local-yet

Current trends and state of the art for using open & local LLM models as copilots to complete code, generate projects, act as shell assistants, automatically fix bugs, and more. This document is a curated list of local Copilots, shell assistants, and related projects, intended to be a resource for those interested in a survey of the existing tools and to help developers discover the state of the art for projects like these.

search2ai

S2A allows your large model API to support networking, searching, news, and web page summarization. It currently supports OpenAI, Gemini, and Moonshot (non-streaming). The large model will determine whether to connect to the network based on your input, and it will not connect to the network for searching every time. You don't need to install any plugins or replace keys. You can directly replace the custom address in your commonly used third-party client. You can also deploy it yourself, which will not affect other functions you use, such as drawing and voice.

llm-deploy

LLM-Deploy focuses on the theory and practice of model/LLM reasoning and deployment, aiming to be your partner in mastering the art of LLM reasoning and deployment. Whether you are a newcomer to this field or a senior professional seeking to deepen your skills, you can find the key path to successfully deploy large language models here. The project covers reasoning and deployment theories, model and service optimization practices, and outputs from experienced engineers. It serves as a valuable resource for algorithm engineers and individuals interested in reasoning deployment.

Awesome-LLM-3D

This repository is a curated list of papers related to 3D tasks empowered by Large Language Models (LLMs). It covers tasks such as 3D understanding, reasoning, generation, and embodied agents. The repository also includes other Foundation Models like CLIP and SAM to provide a comprehensive view of the area. It is actively maintained and updated to showcase the latest advances in the field. Users can find a variety of research papers and projects related to 3D tasks and LLMs in this repository.

TRACE

TRACE is a temporal grounding video model that utilizes causal event modeling to capture videos' inherent structure. It presents a task-interleaved video LLM model tailored for sequential encoding/decoding of timestamps, salient scores, and textual captions. The project includes various model checkpoints for different stages and fine-tuning on specific datasets. It provides evaluation codes for different tasks like VTG, MVBench, and VideoMME. The repository also offers annotation files and links to raw videos preparation projects. Users can train the model on different tasks and evaluate the performance based on metrics like CIDER, METEOR, SODA_c, F1, mAP, Hit@1, etc. TRACE has been enhanced with trace-retrieval and trace-uni models, showing improved performance on dense video captioning and general video understanding tasks.

For similar tasks

llm_qlora

LLM_QLoRA is a repository for fine-tuning Large Language Models (LLMs) using QLoRA methodology. It provides scripts for training LLMs on custom datasets, pushing models to HuggingFace Hub, and performing inference. Additionally, it includes models trained on HuggingFace Hub, a blog post detailing the QLoRA fine-tuning process, and instructions for converting and quantizing models. The repository also addresses troubleshooting issues related to Python versions and dependencies.

llm-export

llm-export is a tool for exporting llm models to onnx and mnn formats. It has features such as passing onnxruntime correctness tests, optimizing the original code to support dynamic shapes, reducing constant parts, optimizing onnx models using OnnxSlim for performance improvement, and exporting lora weights to onnx and mnn formats. Users can clone the project locally, clone the desired LLM project locally, and use LLMExporter to export the model. The tool supports various export options like exporting the entire model as one onnx model, exporting model segments as multiple models, exporting model vocabulary to a text file, exporting specific model layers like Embedding and lm_head, testing the model with queries, validating onnx model consistency with onnxruntime, converting onnx models to mnn models, and more. Users can specify export paths, skip optimization steps, and merge lora weights before exporting.

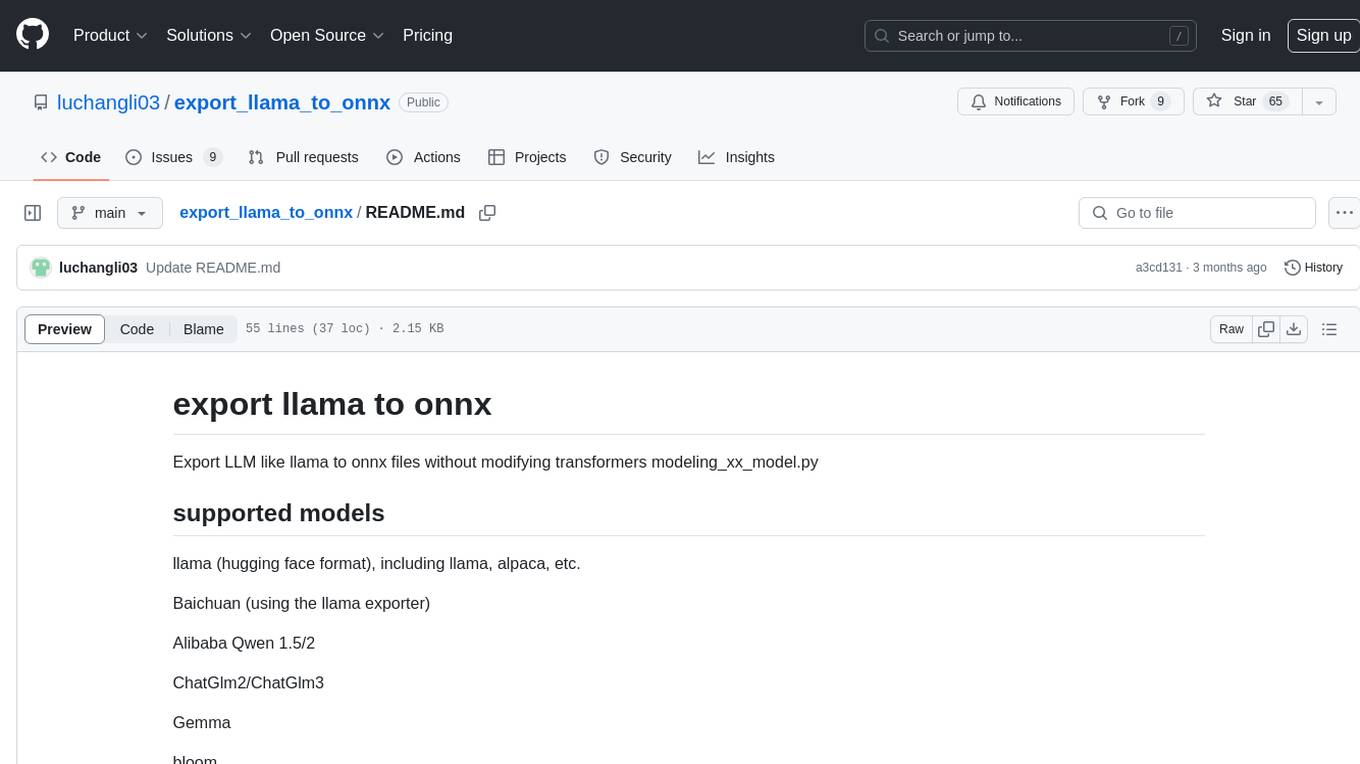

export_llama_to_onnx

Export LLM like llama to ONNX files without modifying transformers modeling_xx_model.py. Supported models include llama (Hugging Face format), Baichuan, Alibaba Qwen 1.5/2, ChatGlm2/ChatGlm3, and Gemma. Usage examples provided for exporting different models to ONNX files. Various arguments can be used to configure the export process. Note on uninstalling/disabling FlashAttention and xformers before model conversion. Recommendations for handling kv_cache format and simplifying large ONNX models. Disclaimer regarding correctness of exported models and consequences of usage.

fish-identification

Fishial.ai is a project focused on training and validating scripts for fish segmentation and classification models. It includes various scripts for automatic training with different loss functions, dataset manipulation, and model setup using Detectron2 API. The project also provides tools for converting classification models to TorchScript format and creating training datasets. The models available include MaskRCNN for fish segmentation and various versions of ResNet18 for fish classification with different class counts and features. The project aims to facilitate fish identification and analysis through machine learning techniques.

fastc

Fastc is a tool focused on CPU execution, using efficient models for embedding generation and cosine similarity classification. It allows for efficient multi-classifier execution without extra overhead. Users can easily train text classifiers, export models, publish to HuggingFace, load existing models, make class predictions, use instruct templates, and launch an inference server. The tool provides an HTTP API for text classification with JSON payloads and supports multiple languages for language identification.

uzu

uzu is a high-performance inference engine for AI models on Apple Silicon. It features a simple, high-level API, hybrid architecture for GPU kernel computation, unified model configurations, traceable computations, and utilizes unified memory on Apple devices. The tool provides a CLI mode for running models, supports its own model format, and offers prebuilt Swift and TypeScript frameworks for bindings. Users can quickly start by adding the uzu dependency to their Cargo.toml and creating an inference Session with a specific model and configuration. Performance benchmarks show metrics for various models on Apple M2, highlighting the tokens/s speed for each model compared to llama.cpp with bf16/f16 precision.

cake

cake is a pure Rust implementation of the llama3 LLM distributed inference based on Candle. The project aims to enable running large models on consumer hardware clusters of iOS, macOS, Linux, and Windows devices by sharding transformer blocks. It allows running inferences on models that wouldn't fit in a single device's GPU memory by batching contiguous transformer blocks on the same worker to minimize latency. The tool provides a way to optimize memory and disk space by splitting the model into smaller bundles for workers, ensuring they only have the necessary data. cake supports various OS, architectures, and accelerations, with different statuses for each configuration.

llm-deploy

LLM-Deploy focuses on the theory and practice of model/LLM reasoning and deployment, aiming to be your partner in mastering the art of LLM reasoning and deployment. Whether you are a newcomer to this field or a senior professional seeking to deepen your skills, you can find the key path to successfully deploy large language models here. The project covers reasoning and deployment theories, model and service optimization practices, and outputs from experienced engineers. It serves as a valuable resource for algorithm engineers and individuals interested in reasoning deployment.

For similar jobs

weave

Weave is a toolkit for developing Generative AI applications, built by Weights & Biases. With Weave, you can log and debug language model inputs, outputs, and traces; build rigorous, apples-to-apples evaluations for language model use cases; and organize all the information generated across the LLM workflow, from experimentation to evaluations to production. Weave aims to bring rigor, best-practices, and composability to the inherently experimental process of developing Generative AI software, without introducing cognitive overhead.

LLMStack

LLMStack is a no-code platform for building generative AI agents, workflows, and chatbots. It allows users to connect their own data, internal tools, and GPT-powered models without any coding experience. LLMStack can be deployed to the cloud or on-premise and can be accessed via HTTP API or triggered from Slack or Discord.

VisionCraft

The VisionCraft API is a free API for using over 100 different AI models. From images to sound.

kaito

Kaito is an operator that automates the AI/ML inference model deployment in a Kubernetes cluster. It manages large model files using container images, avoids tuning deployment parameters to fit GPU hardware by providing preset configurations, auto-provisions GPU nodes based on model requirements, and hosts large model images in the public Microsoft Container Registry (MCR) if the license allows. Using Kaito, the workflow of onboarding large AI inference models in Kubernetes is largely simplified.

PyRIT

PyRIT is an open access automation framework designed to empower security professionals and ML engineers to red team foundation models and their applications. It automates AI Red Teaming tasks to allow operators to focus on more complicated and time-consuming tasks and can also identify security harms such as misuse (e.g., malware generation, jailbreaking), and privacy harms (e.g., identity theft). The goal is to allow researchers to have a baseline of how well their model and entire inference pipeline is doing against different harm categories and to be able to compare that baseline to future iterations of their model. This allows them to have empirical data on how well their model is doing today, and detect any degradation of performance based on future improvements.

tabby

Tabby is a self-hosted AI coding assistant, offering an open-source and on-premises alternative to GitHub Copilot. It boasts several key features: * Self-contained, with no need for a DBMS or cloud service. * OpenAPI interface, easy to integrate with existing infrastructure (e.g Cloud IDE). * Supports consumer-grade GPUs.

spear

SPEAR (Simulator for Photorealistic Embodied AI Research) is a powerful tool for training embodied agents. It features 300 unique virtual indoor environments with 2,566 unique rooms and 17,234 unique objects that can be manipulated individually. Each environment is designed by a professional artist and features detailed geometry, photorealistic materials, and a unique floor plan and object layout. SPEAR is implemented as Unreal Engine assets and provides an OpenAI Gym interface for interacting with the environments via Python.

Magick

Magick is a groundbreaking visual AIDE (Artificial Intelligence Development Environment) for no-code data pipelines and multimodal agents. Magick can connect to other services and comes with nodes and templates well-suited for intelligent agents, chatbots, complex reasoning systems and realistic characters.