Best AI tools for "Convert Model"

20 - AI Tool Sites

DraftAid

DraftAid is an AI-powered drawing automation tool that streamlines the fabrication drawing process, reducing the time from weeks to minutes. It integrates seamlessly with existing CAD software and offers extensive customization options to align with specific project requirements, delivering consistently accurate and high-quality drawings.

ImagineMe

ImagineMe is a personal AI art generator that allows users to create stunning art of themselves from a simple text description. The application uses AI models to convert text into corresponding images, enabling users to visualize themselves in various scenarios. ImagineMe offers an easy, affordable, and magical way to create personalized art.

FluxImg AI Image Generator

FluxImg.com is a state-of-the-art AI image generator tool that utilizes advanced AI models to convert text prompts into high-quality, detail-rich images. Users can easily create customized images by inputting descriptive text and further customize the generated images to suit their needs. The tool offers various image size options and supports a wide range of styles and types, including abstract art, realistic scenes, portraits, landscapes, logos, and illustrations. FluxImg.com stands out for its unparalleled image quality, user-friendly interface, and advanced features like Flux.1 Pro and Flux.1 Schnell for enhanced control and rapid iterations.

Token Counter

Token Counter is an AI tool designed to convert text input into tokens for various AI models. It helps users accurately determine the token count and associated costs when working with AI models. By providing insights into tokenization strategies and cost structures, Token Counter streamlines the process of utilizing advanced technologies.
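As a rough illustration of the token-count-and-cost idea described above, here is a minimal Python sketch. It is not Token Counter's implementation: the regex splitter is a crude stand-in for a real model tokenizer, and the model names and prices in `PRICE_PER_1K_TOKENS` are hypothetical values chosen only for the example.

```python
import re

# Hypothetical price table in USD per 1,000 input tokens (not real vendor pricing).
PRICE_PER_1K_TOKENS = {"example-small": 0.0005, "example-large": 0.01}


def rough_tokenize(text):
    """Split text into word and punctuation pieces (a crude stand-in for a real tokenizer)."""
    return re.findall(r"\w+|[^\w\s]", text)


def estimate_cost(text, model):
    """Return (token_count, estimated_cost_usd) for the given hypothetical model."""
    n_tokens = len(rough_tokenize(text))
    cost = n_tokens / 1000 * PRICE_PER_1K_TOKENS[model]
    return n_tokens, cost


if __name__ == "__main__":
    count, cost = estimate_cost("Hello, world!", "example-large")
    print(count, cost)
```

Real tokenizers split text into subword units, so actual counts will generally differ from this word-level approximation.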

Voicepen

Voicepen is an AI-powered tool that converts audio recordings into high-quality blog posts. It uses advanced speech recognition and natural language processing technologies to accurately transcribe and format your audio content into well-written, SEO-optimized blog posts. With Voicepen, you can easily create engaging and informative blog content without spending hours writing and editing.

Ragobble

Ragobble is an audio-to-LLM data tool that converts audio files into text data that can be used to train large language models (LLMs). With Ragobble, you can quickly create high-quality training data for your LLM projects.

Make your image 3D

This website provides a tool that allows users to convert 2D images into 3D images. The tool uses artificial intelligence to extract depth information from the image, which is then used to create a 3D model. The resulting 3D model can be embedded into a website or shared via a link.
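One common way to turn per-pixel depth into 3D geometry is to back-project each pixel through a pinhole camera model. The sketch below shows that general technique in Python; whether this website uses it is an assumption, and the function name, the `focal` parameter, and the centered principal point are all illustrative choices.

```python
def depth_to_points(depth, focal=1.0):
    """Back-project a row-major depth map into (x, y, z) points using a pinhole camera model.

    Assumes the principal point sits at the image centre and that `focal`
    is expressed in pixel units. Both are illustrative assumptions.
    """
    height, width = len(depth), len(depth[0])
    cx, cy = (width - 1) / 2, (height - 1) / 2
    points = []
    for v, row in enumerate(depth):
        for u, z in enumerate(row):
            # Scale the pixel offset from the centre by depth to get metric x and y.
            points.append(((u - cx) * z / focal, (v - cy) * z / focal, z))
    return points


if __name__ == "__main__":
    for point in depth_to_points([[1.0, 2.0], [3.0, 4.0]]):
        print(point)
```

The resulting point list could then be meshed or rendered by a 3D viewer; that step is outside the scope of this sketch.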

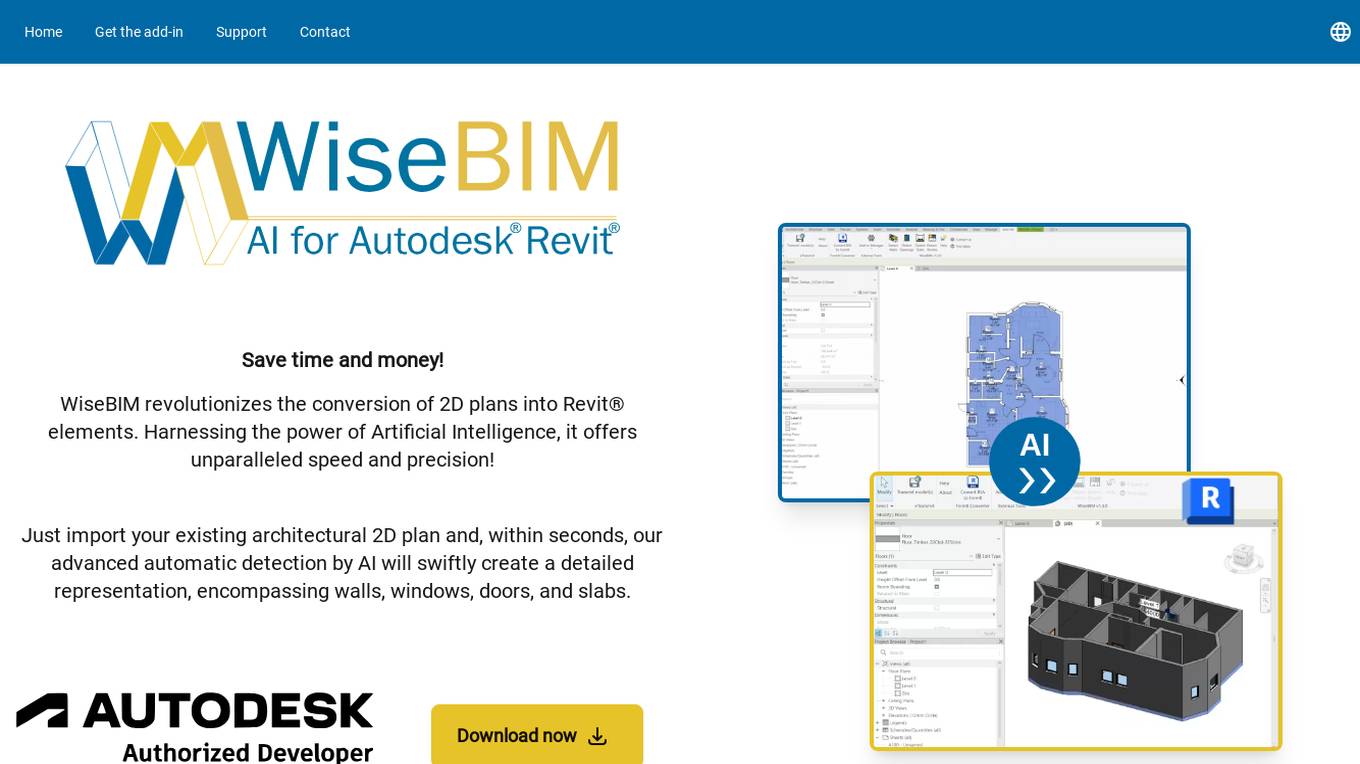

WiseBIM

WiseBIM is an AI tool designed for Autodesk Revit users to streamline the conversion of 2D plans into detailed Revit elements. It uses AI to create Revit models from architectural plans quickly and accurately. By automating the detection and creation of Revit elements, the tool helps engineering offices, architects, construction companies, owners, and facility and property managers save time and money.

GetWebsite.Report

GetWebsite.Report is an innovative web service that leverages state-of-the-art AI models to analyze and optimize landing pages across five main categories: user interface, user experience, visual design, content, and SEO. It provides actionable insights to enhance the performance and effectiveness of digital presence. The tool offers personalized recommendations to improve conversion rates, SEO, usability, and messaging. It is rated 4.8/5 by 290+ users and comes with a 100% money-back guarantee if not satisfied. GetWebsite.Report is designed to be adaptable across diverse industries, offering practical advice and resources for optimizing user experience and search visibility.

TED SMRZR

TED SMRZR is a web application that converts TEDx Talks into short summaries. It uses AI models to fetch the transcript from the TEDx video, punctuate the transcribed data, and then summarize it. The summarized talks are then translated into different languages and compared to similar TEDx Talks for deep insights. TED SMRZR provides nicely punctuated TED Talks to read and short summaries for all the available TED Talks. Users can also select multiple Talks and compare their summaries.

Files2Prompt

Files2Prompt is a free online tool that allows you to convert files to text prompts for large language models (LLMs) like ChatGPT, Claude, and Gemini. With Files2Prompt, you can easily generate prompts from various file formats, including Markdown, JSON, and XML. The converted prompts can be used to ask questions, generate text, translate languages, write different kinds of creative content, and more.
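The file-to-prompt idea can be sketched in a few lines of Python. This is not Files2Prompt's actual output format: the `--- FILE: ... ---` delimiters and the function names `load_files` and `build_prompt` are hypothetical, chosen only to show how file contents might be labeled and combined with a question.

```python
from pathlib import Path


def load_files(paths):
    """Read each path as UTF-8 text and return a {file_name: contents} mapping."""
    return {Path(p).name: Path(p).read_text(encoding="utf-8") for p in paths}


def build_prompt(files, question):
    """Wrap each file in a labeled delimiter block, then append the question."""
    parts = []
    for name, body in files.items():
        parts.append(f"--- FILE: {name} ---\n{body.rstrip()}\n--- END FILE ---")
    parts.append(f"Question: {question}")
    return "\n\n".join(parts)


if __name__ == "__main__":
    demo = {"notes.md": "# Title\n", "data.json": "{}"}
    print(build_prompt(demo, "What do these files contain?"))
```

Labeling each file by name lets the model refer back to a specific file in its answer.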

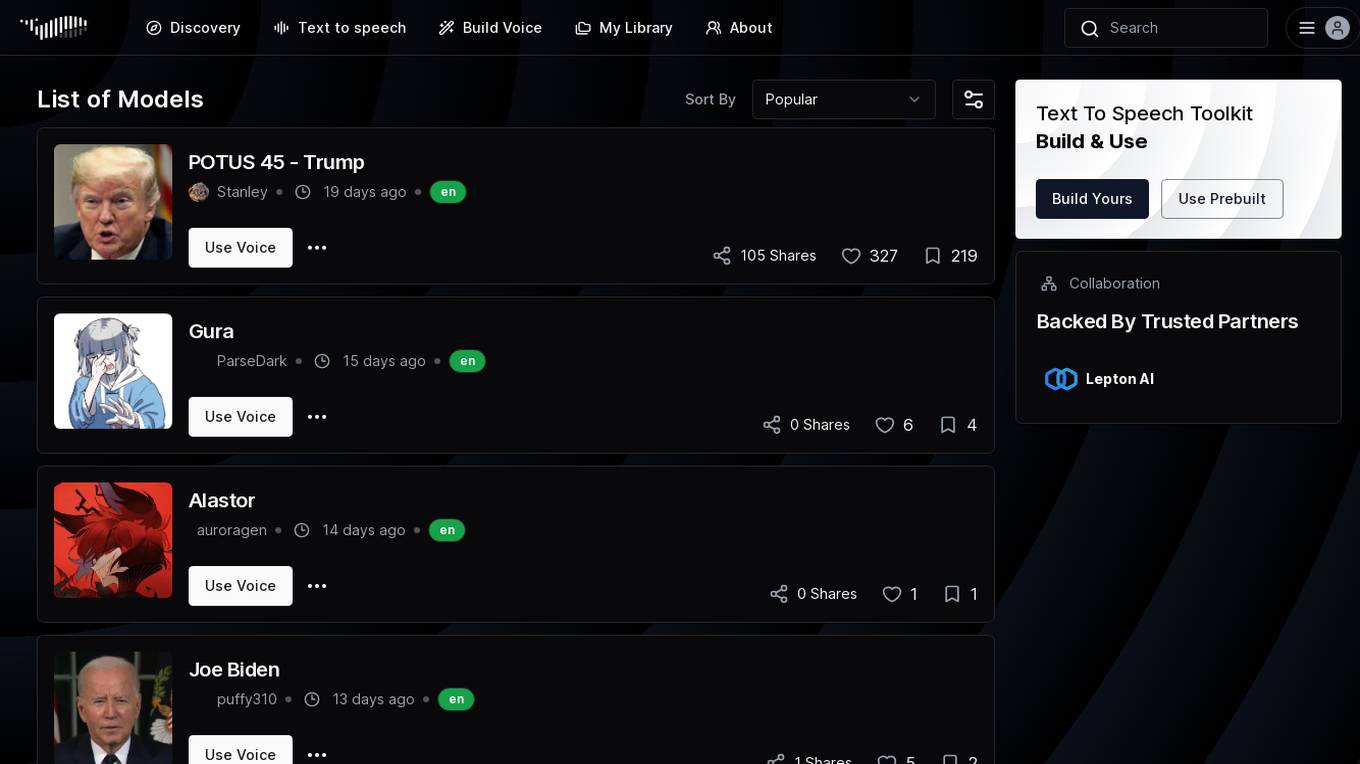

Fish Audio

Fish Audio is an AI-powered audio generation tool that allows users to convert text into speech. With a user-friendly interface, it offers a range of models for generating high-quality voices. Users can build their own voice models or use prebuilt ones, and collaborate with others. Backed by trusted partners, Fish Audio leverages Lepton AI's top models to provide a seamless experience for creating audio content.

CodeConvert AI

CodeConvert AI is an online tool that allows users to convert code across 25+ programming languages with a simple click of a button. It offers high-quality code conversion using advanced AI models, eliminating the need for manual rewriting. Users can convert code without the hassle of downloading or installing any software, ensuring privacy and security as the tool does not retain user input or generated output code. CodeConvert AI provides unlimited usage on paid plans and supports a wide range of programming languages, making it a valuable resource for developers looking to save time and effort in code conversion.

Kombai

Kombai is an AI tool designed to code email and web designs like humans. It uses deep learning and heuristics models to interpret UI designs and generate high-quality HTML, CSS, or React code with human-like names for classes and components. Kombai aims to help developers save time by automating the process of writing UI code based on design files without the need for tagging, naming, or grouping elements. The tool is currently in 'public research preview' and is free for individual developers to use.

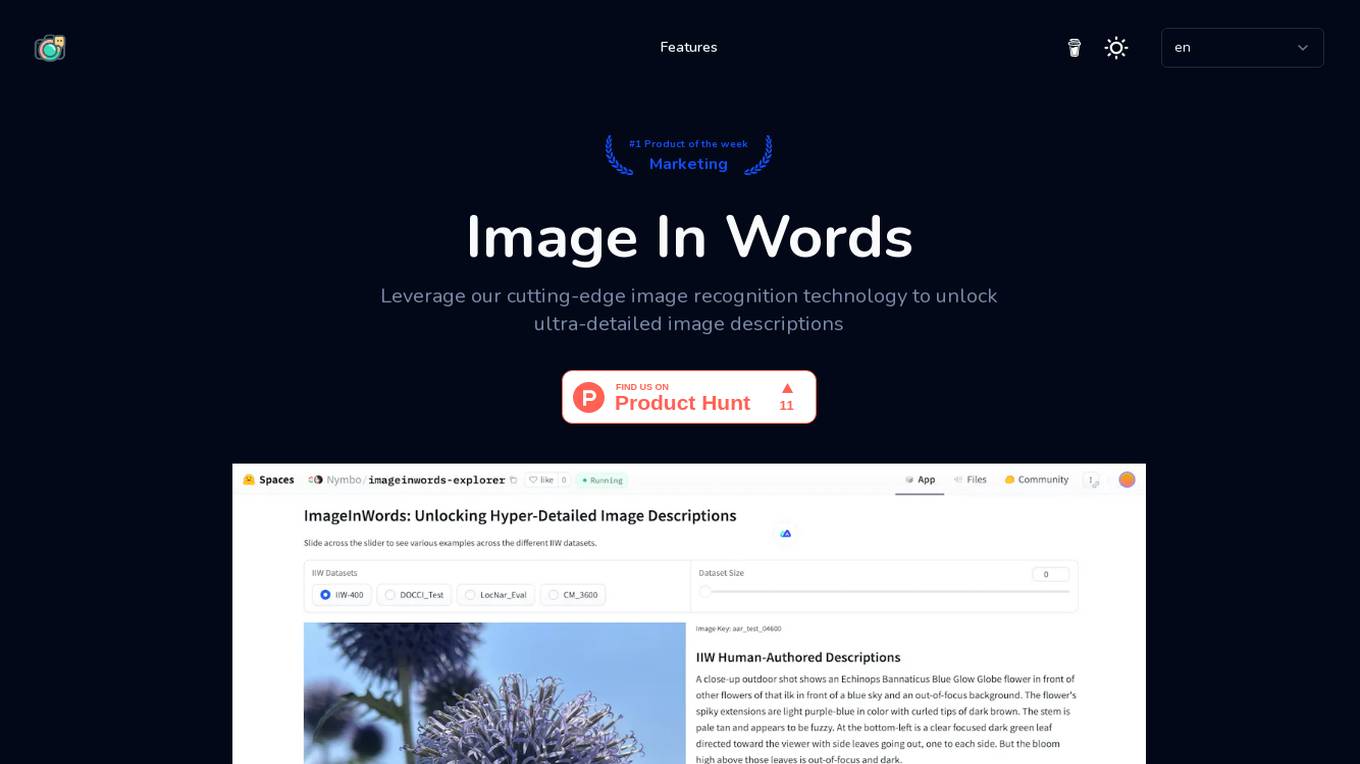

Image In Words

Image In Words is a generative model designed for scenarios that require generating ultra-detailed text from images. It leverages cutting-edge image recognition technology to provide high-quality and natural image descriptions. The framework ensures detailed and accurate descriptions, improves model performance, reduces fictional content, enhances visual-language reasoning capabilities, and has wide applications across various fields. Image In Words supports English and has been trained using approximately 100,000 hours of English data. It has demonstrated high quality and naturalness in various tests.

Glyf

Glyf is an AI-powered 3D design tool that allows users to create stunning 3D art and designs with just a few words. With Glyf, you can convert simple 3D designs into high-quality pieces of art or create new designs from scratch using AI. Glyf is perfect for artists, designers, and anyone who wants to create beautiful 3D content.

SpeechText.AI

SpeechText.AI is a powerful artificial intelligence software for speech to text conversion and audio transcription. It offers accurate transcriptions of audio and video files using domain-specific speech recognition technology. The application provides various features to transcribe, edit, and export audio content in different formats. With state-of-the-art deep neural network models, SpeechText.AI achieves close to human accuracy in converting audio to text. The tool is widely used for transcription of interviews, medical data, conference calls, podcasts, and more, catering to various industries such as finance, healthcare, legal, and HR.

EaseMate AI

EaseMate AI is an all-in-one AI assistant platform that integrates leading AI models like GPT, Gemini, DeepSeek, Nano Banana, Veo 3, Sora 2, Kling, and more. It offers a wide range of features for study, work, and creativity, including AI image and video generation, math solver, AI writer, chatbot, PDF tools, and more. Users can access various AI tools for free to enhance productivity, learning, and content creation.

Transgate

Transgate is an AI-powered speech-to-text conversion tool that allows users to convert audio/video files to text with high accuracy and efficiency. It offers a pay-as-you-go model, supports over 50 languages, and guarantees 98%+ accuracy. Transgate is designed to boost productivity by minimizing costs and eliminating manual transcription tasks, catering to industries like AI/ML, medical, legal, education, consulting, and market research.

PNGAI

PNGAI is a free online AI PNG generator powered by Flux that creates PNG images in a few clicks. Users describe the image they want, and the tool quickly generates diverse visuals, making it suited to designers, artists, and content creators. Features include Text to PNG Generator, Image Remix, Image to Describe, and an Easy-to-Use PNG AI interface. PNGAI uses Flux as its core image-generation model to deliver high-quality images.

4 - Open Source AI Tools

llm_qlora

LLM_QLoRA is a repository for fine-tuning Large Language Models (LLMs) using QLoRA methodology. It provides scripts for training LLMs on custom datasets, pushing models to HuggingFace Hub, and performing inference. Additionally, it includes models trained on HuggingFace Hub, a blog post detailing the QLoRA fine-tuning process, and instructions for converting and quantizing models. The repository also addresses troubleshooting issues related to Python versions and dependencies.

llm-export

llm-export is a tool for exporting LLMs to ONNX and MNN formats. Its features include passing onnxruntime correctness tests, optimizing the original code to support dynamic shapes, reducing constant parts, optimizing ONNX models with OnnxSlim for better performance, and exporting LoRA weights to ONNX and MNN formats. To use it, clone the project locally, clone the desired LLM project locally, and use LLMExporter to export the model. Export options include exporting the entire model as a single ONNX model, exporting model segments as multiple models, exporting the model vocabulary to a text file, exporting specific layers such as Embedding and lm_head, testing the model with queries, validating ONNX model consistency with onnxruntime, and converting ONNX models to MNN models. Users can also specify export paths, skip optimization steps, and merge LoRA weights before exporting.

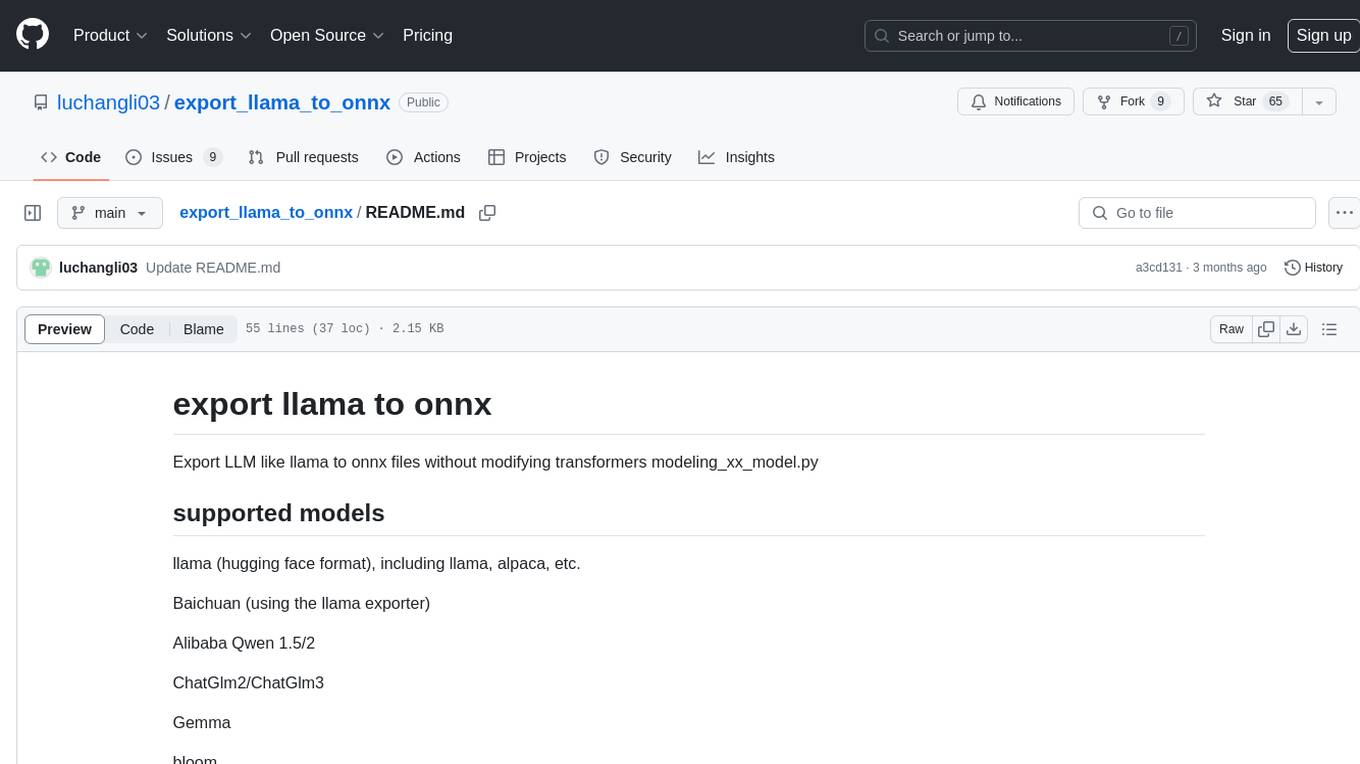

export_llama_to_onnx

Exports LLMs such as llama to ONNX files without modifying the transformers modeling_xx_model.py files. Supported models include llama (Hugging Face format), Baichuan, Alibaba Qwen 1.5/2, ChatGlm2/ChatGlm3, and Gemma. The repository provides usage examples for exporting different models to ONNX files, along with arguments for configuring the export process. It notes that FlashAttention and xformers should be uninstalled or disabled before model conversion, gives recommendations for handling the kv_cache format and simplifying large ONNX models, and includes a disclaimer regarding the correctness of exported models and the consequences of their use.

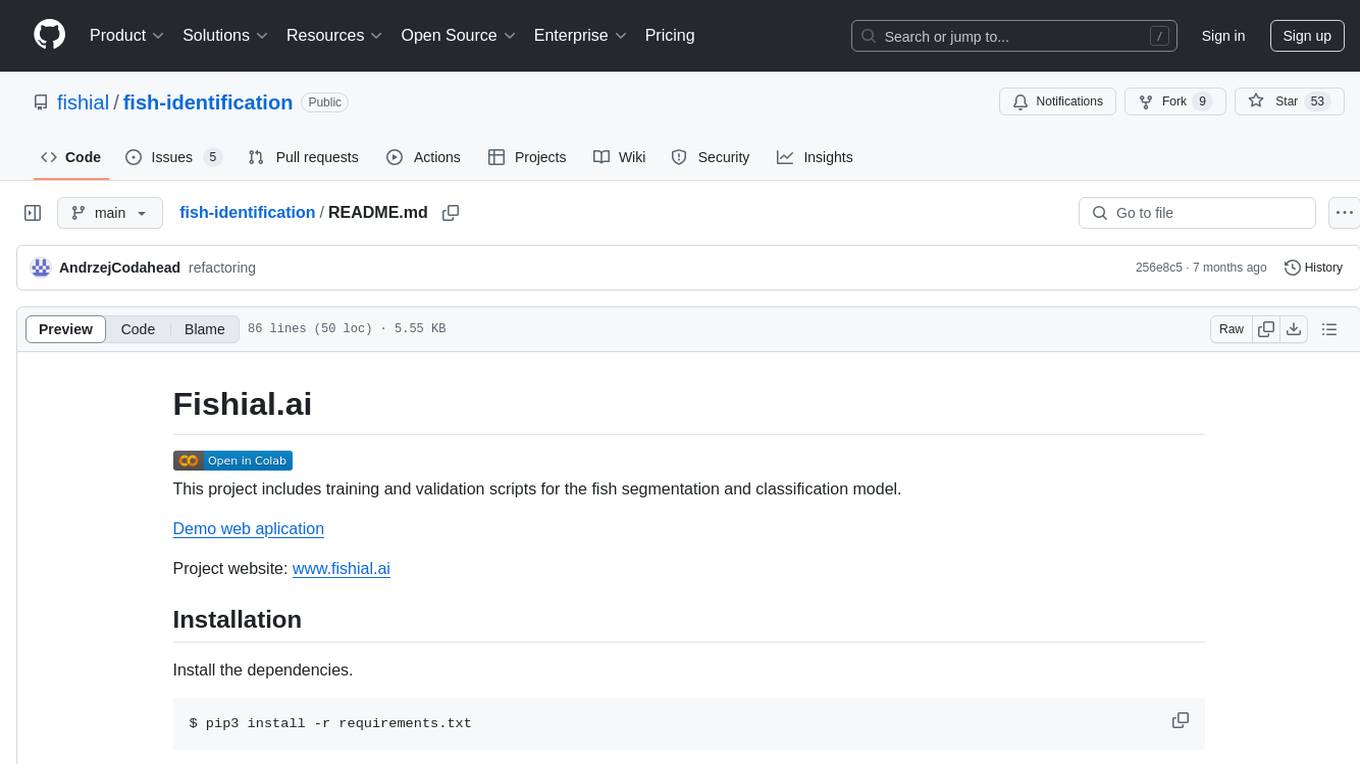

fish-identification

Fishial.ai is a project that provides scripts for training and validating fish segmentation and classification models. It includes scripts for automatic training with different loss functions, dataset manipulation, and model setup using the Detectron2 API. The project also provides tools for converting classification models to TorchScript format and creating training datasets. The available models include MaskRCNN for fish segmentation and several versions of ResNet18 for fish classification with different class counts and features. The project aims to facilitate fish identification and analysis through machine learning techniques.

20 - OpenAI GPTs

Black Female Headshot Generator AI

Make Black female headshots from a description or convert photos into headshots. Your online headshot generator.

Text to DB Schema

Convert application descriptions to consumable DB schemas or create-table SQL statements

LiDAR GPT - LAStools Comprehensive Expert

Expert in LAStools with in-depth command line knowledge.

Size Wizard

Find the right size clothes. I convert your measurements into sizes of different standards. Say “hello” in your language to start.

Malevich GPT - Emoji to Art 🤯 -> 🎨

Convert emotions and feelings to evocative abstract art. Share your daily mood with text or emoji and I will help you create a masterpiece.

Global Salary Converter (PPP adjusted)

Convert salaries across countries, adjusted for Purchasing Power Parity (PPP)
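A PPP-adjusted conversion is usually done by expressing the salary in international dollars and then converting to the target country's currency. The Python sketch below shows that arithmetic; it is not this GPT's implementation, and the country codes and factors in `PPP_FACTORS` are made-up values (local currency units per international dollar) used only for illustration.

```python
# Made-up PPP conversion factors: local currency units per international dollar.
PPP_FACTORS = {"A": 1.0, "B": 20.0, "C": 0.5}


def convert_salary_ppp(amount, source, target, factors=PPP_FACTORS):
    """Convert a salary from the source country's currency to the PPP-equivalent
    amount in the target country's currency."""
    international_dollars = amount / factors[source]
    return international_dollars * factors[target]


if __name__ == "__main__":
    # 100,000 units in country A has the same purchasing power as this many units in country B.
    print(convert_salary_ppp(100_000, "A", "B"))
```

Unlike a market exchange rate, this conversion answers what salary would buy an equivalent basket of goods in the target country.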

Quotes CloudArt

I can convert your favorite quotes into a word cloud with a specified shape.

Athena Notes AI

I convert transcripts into detailed meeting notes with insights, summaries, and action items, plus a downloadable MS Word file.

Screenshot To Code GPT

Upload a screenshot of a website and convert it to clean HTML/Tailwind/JS code.

CondenserPRO: 1-page condensed papers

Convert 20-page articles, reports, and white papers to a one-pager with maximum information fidelity. Summaries so good, you'll never want to read the original first! Upload your PDF and say 'GO'.

LaTeX Picture & Document Transcriber

Convert pictures of your handwritten notes, or documents in any format, into usable LaTeX code. Start by uploading what you need to convert.

Formal to Informal Text Converter AI

I convert and turn formal text to informal style instantly. Simply put your formal text below and click Enter! Perfect for sentences, paragraphs, and daily messages.

Law Document

Convert simple documents and notes into supported legal terminology. Copyright (C) 2024, Sourceduty - All Rights Reserved.