Best AI tools for< Deep Learning Specialist >

Infographic

20 - AI tool Sites

Caffe

Caffe is a deep learning framework developed by Berkeley AI Research (BAIR) and community contributors. It is designed for speed, modularity, and expressiveness, allowing users to define models and optimization through configuration without hard-coding. Caffe supports both CPU and GPU training, making it suitable for research experiments and industry deployment. The framework is extensible, actively developed, and tracks the state-of-the-art in code and models. Caffe is widely used in academic research, startup prototypes, and large-scale industrial applications in vision, speech, and multimedia.

Clarion Analytics

Clarion Analytics is a leading AI tool that provides bespoke AI solutions for businesses of all sizes. Their expert team empowers clients with Deep Learning, Computer Vision, and Large Language Models to tackle complex visual and language challenges. They offer services such as AI Consulting & Strategy, Data and ML Engineering, AI Software Development, and Generative AI solutions, delivering tailored strategies for business growth and efficiency.

SentiSight.ai

SentiSight.ai is a machine learning platform for image recognition solutions, offering services such as object detection, image segmentation, image classification, image similarity search, image annotation, computer vision consulting, and intelligent automation consulting. Users can access pre-trained models, background removal, NSFW detection, text recognition, and image recognition API. The platform provides tools for image labeling, project management, and training tutorials for various image recognition models. SentiSight.ai aims to streamline the image annotation process, empower users to build and train their own models, and deploy them for online or offline use.

Berkeley Artificial Intelligence Research (BAIR) Lab

The Berkeley Artificial Intelligence Research (BAIR) Lab is a renowned research lab at UC Berkeley focusing on computer vision, machine learning, natural language processing, planning, control, and robotics. With over 50 faculty members and 300 graduate students, BAIR conducts research on fundamental advances in AI and interdisciplinary themes like multi-modal deep learning and human-compatible AI.

DataTeams

DataTeams is an AI-powered platform that simplifies the process of hiring top Data and AI talent for enterprises and startups. It helps in finding skill-specific, pre-vetted Engineers & Analysts for Data Science, Analytics, Data Engineering, Deep Learning & AI across various industry verticals. The platform uses a combination of AI and manual efforts to screen applicants, conduct interviews, and select the best profiles for clients. DataTeams offers a flexible approach to hiring, from engaging contract professionals within hours to allowing clients to try out candidates before making formal offers.

Ultralytics YOLO

Ultralytics YOLO is an advanced real-time object detection and image segmentation model that leverages cutting-edge advancements in deep learning and computer vision. It offers unparalleled performance in terms of speed and accuracy, making it suitable for various applications and easily adaptable to different hardware platforms. The comprehensive Ultralytics Docs provide resources to help users understand and utilize its features and capabilities, catering to both seasoned machine learning practitioners and newcomers to the field.

Maximo AI

Maximo AI is an all-in-one AI solution designed for trading and content creation. It offers a comprehensive set of tools and features to assist users in making informed decisions and creating engaging content. With Maximo AI, users can leverage artificial intelligence to optimize trading strategies and enhance content creation processes. The platform is user-friendly and intuitive, making it suitable for both beginners and experienced professionals in the trading and content creation industries.

Fotogram.ai

Fotogram.ai is an AI-powered image editing tool that offers a wide range of features to enhance and transform your photos. With Fotogram.ai, users can easily apply filters, adjust colors, remove backgrounds, add effects, and retouch images with just a few clicks. The tool uses advanced AI algorithms to provide professional-level editing capabilities to users of all skill levels. Whether you are a photographer looking to streamline your workflow or a social media enthusiast wanting to create stunning visuals, Fotogram.ai has you covered.

Likely.AI

Likely.AI is an AI-powered platform designed for the real estate industry, offering innovative solutions to enhance database management, marketing content creation, and predictive analytics. The platform utilizes advanced AI models to predict likely sellers, update contact information, and trigger automated notifications, ensuring real estate professionals stay ahead of the competition. With features like contact enrichment, predictive modeling, 24/7 contact monitoring, and AI-driven marketing content generation, Likely.AI revolutionizes how real estate businesses operate and engage with their clients. The platform aims to streamline workflows, improve lead generation, and maximize ROI for users in the residential real estate sector.

RTB House

RTB House is a global leader in online ad campaigns, offering a range of AI-powered solutions to help businesses drive sales and engage with customers. Their technology leverages deep learning to optimize ad campaigns, providing personalized retargeting, branding, and fraud protection. RTB House works with agencies and clients across various industries, including fashion, electronics, travel, and multi-category retail.

EnterpriseAI

EnterpriseAI is an advanced computing platform that focuses on the intersection of high-performance computing (HPC) and artificial intelligence (AI). The platform provides in-depth coverage of the latest developments, trends, and innovations in the AI-enabled computing landscape. EnterpriseAI offers insights into various sectors such as financial services, government, healthcare, life sciences, energy, manufacturing, retail, and academia. The platform covers a wide range of topics including AI applications, security, data storage, networking, and edge/IoT technologies.

DaVinciFace

DaVinciFace is an AI software that utilizes Deep Learning technology to generate DaVinci-styled portraits from any human face photo. The application is based on Generative Adversarial Network (GAN) technology with over 500 million parameters for training the network, providing users with a DaVinci's style portrait in less than 2 minutes. DaVinciFace ensures privacy by deleting photos from its database after generating the portrait and does not publish any user photos without explicit consent.

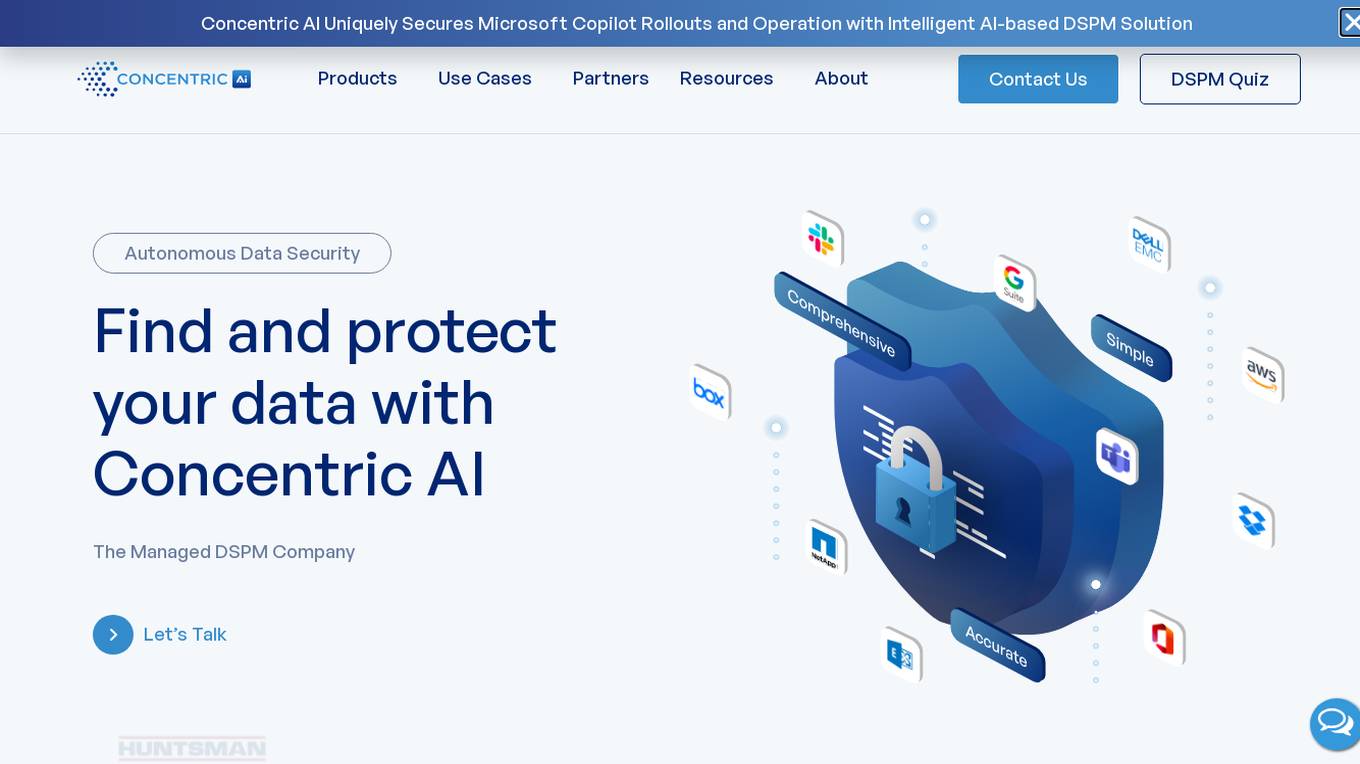

Concentric AI

Concentric AI is a Managed Data Security Posture Management tool that utilizes Semantic Intelligence to provide comprehensive data security solutions. The platform offers features such as autonomous data discovery, data risk identification, centralized remediation, easy deployment, and data security posture management. Concentric AI helps organizations protect sensitive data, prevent data loss, and ensure compliance with data security regulations. The tool is designed to simplify data governance and enhance data security across various data repositories, both in the cloud and on-premises.

Neosmart

Neosmart is an AI tool that provides insights and serves as a bridge to AI technology. The platform offers a wide range of resources, including articles, reports, and courses, to help users understand and leverage artificial intelligence in various industries. Neosmart covers topics such as AI applications in healthcare, marketing, legal, education, and more. It also features news updates, interviews with experts, and case studies to keep users informed about the latest trends and developments in the AI field.

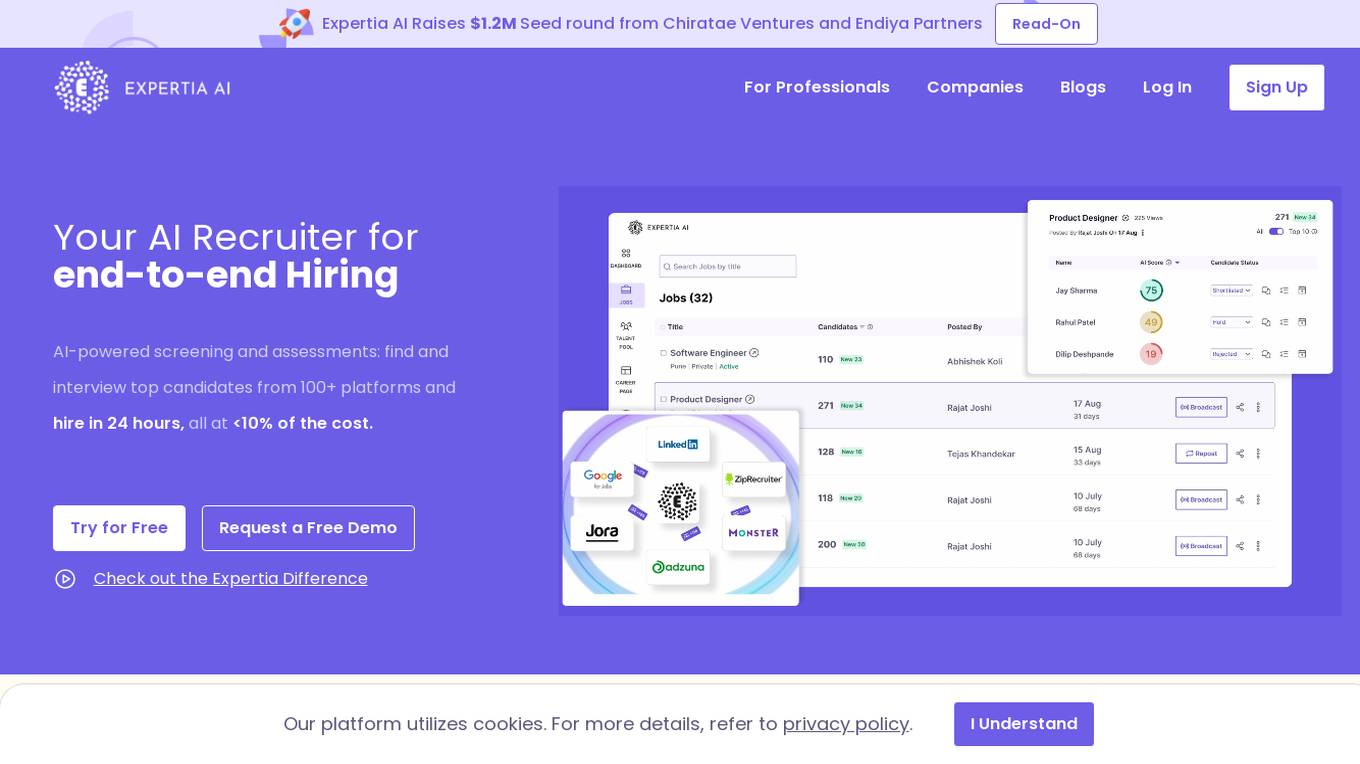

Expertia AI

Expertia AI is an AI-powered hiring partner that leverages advanced algorithms and machine learning to streamline the recruitment process. It offers a comprehensive suite of tools to assist HR professionals in sourcing, screening, and selecting top talent efficiently. By automating repetitive tasks and providing data-driven insights, Expertia AI helps companies make informed hiring decisions and improve overall recruitment outcomes.

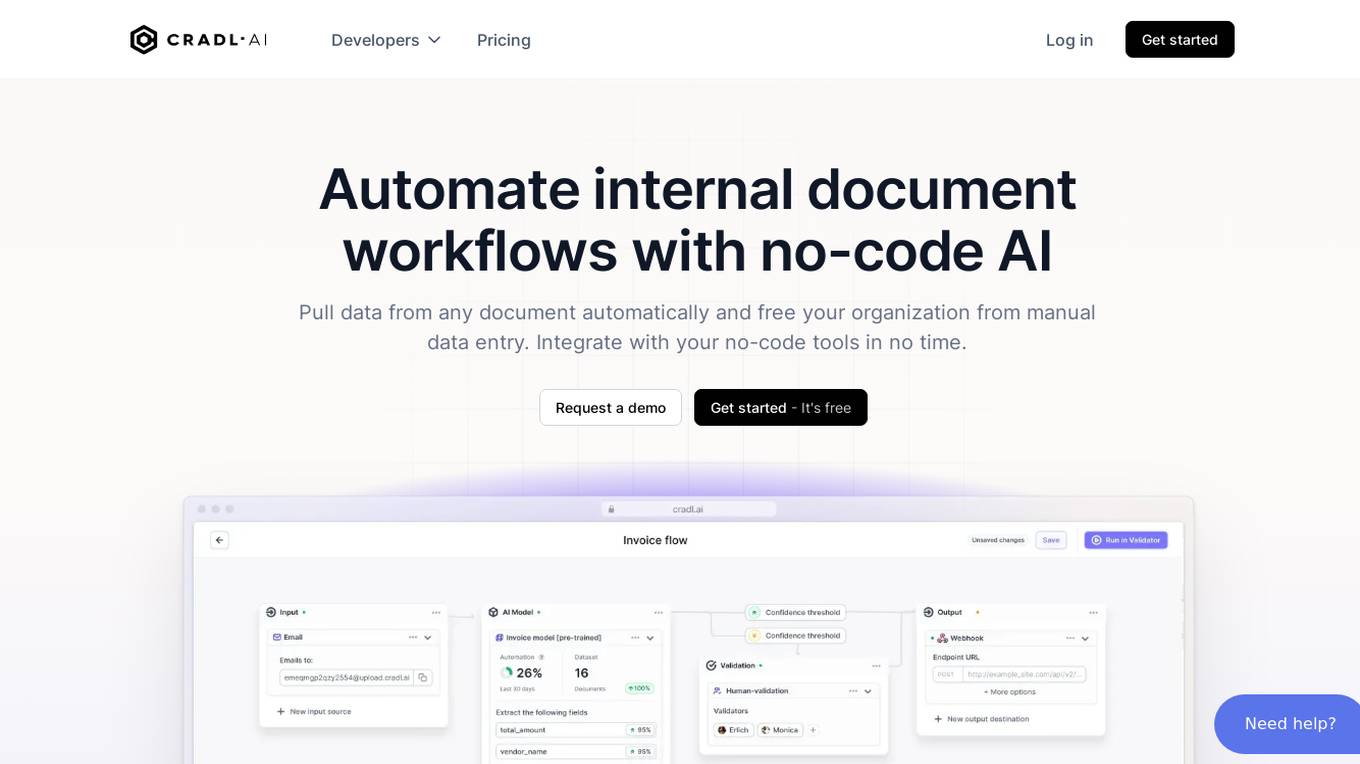

Cradl AI

Cradl AI is an AI-powered tool designed to automate document workflows with no-code AI. It enables users to extract data from any document automatically, integrate with no-code tools, and build custom AI models through an easy-to-use interface. The tool empowers automation teams across industries by extracting data from complex document layouts, regardless of language or structure. Cradl AI offers features such as line item extraction, fine-tuning AI models, human-in-the-loop validation, and seamless integration with automation tools. It is trusted by organizations for business-critical document automation, providing enterprise-level features like encrypted transmission, GDPR compliance, secure data handling, and auto-scaling.

Vize.ai

Vize.ai is a custom image recognition API provided by Ximilar, a leading company in Visual AI and Search. The tool offers powerful artificial intelligence capabilities with high accuracy using deep learning algorithms. It allows users to easily set up and implement cutting-edge vision automation without any development costs. Vize.ai enables users to train custom neural networks to recognize specific images and provides a scalable solution with continuous improvements in machine learning algorithms. The tool features an intuitive interface that requires no machine learning or coding knowledge, making it accessible for a wide range of users across industries.

VeroCloud

VeroCloud is a platform offering tailored solutions for AI, HPC, and scalable growth. It provides cost-effective cloud solutions with guaranteed uptime, performance efficiency, and cost-saving models. Users can deploy HPC workloads seamlessly, configure environments as needed, and access optimized environments for GPU Cloud, HPC Compute, and Tally on Cloud. VeroCloud supports globally distributed endpoints, public and private image repos, and deployment of containers on secure cloud. The platform also allows users to create and customize templates for seamless deployment across computing resources.

The AI Guild

The AI Guild is Europe's leading practitioner community in various AI-related fields such as Analytics Engineering, Data Science, Machine Learning, NLP, and more. It offers career support, exclusive connections, technical skills profiles, and growth opportunities for its members. Additionally, the AI Guild provides services for companies, including support in evaluating use cases, deploying to production, and scaling infrastructure.

Salesforce AI Blog

Salesforce AI Blog is an AI tool that focuses on various AI research topics such as accountability, accuracy, AI agents, AI coding, AI ethics, AI object detection, deep learning, forecasting, generative AI, and more. The blog showcases cutting-edge research, advancements, and projects in the field of artificial intelligence. It also highlights the work of Salesforce Research team members and their contributions to the AI community.

22 - Open Source Tools

zeta

Zeta is a tool designed to build state-of-the-art AI models faster by providing modular, high-performance, and scalable building blocks. It addresses the common issues faced while working with neural nets, such as chaotic codebases, lack of modularity, and low performance modules. Zeta emphasizes usability, modularity, and performance, and is currently used in hundreds of models across various GitHub repositories. It enables users to prototype, train, optimize, and deploy the latest SOTA neural nets into production. The tool offers various modules like FlashAttention, SwiGLUStacked, RelativePositionBias, FeedForward, BitLinear, PalmE, Unet, VisionEmbeddings, niva, FusedDenseGELUDense, FusedDropoutLayerNorm, MambaBlock, Film, hyper_optimize, DPO, and ZetaCloud for different tasks in AI model development.

ludwig

Ludwig is a declarative deep learning framework designed for scale and efficiency. It is a low-code framework that allows users to build custom AI models like LLMs and other deep neural networks with ease. Ludwig offers features such as optimized scale and efficiency, expert level control, modularity, and extensibility. It is engineered for production with prebuilt Docker containers, support for running with Ray on Kubernetes, and the ability to export models to Torchscript and Triton. Ludwig is hosted by the Linux Foundation AI & Data.

tensorrtllm_backend

The TensorRT-LLM Backend is a Triton backend designed to serve TensorRT-LLM models with Triton Inference Server. It supports features like inflight batching, paged attention, and more. Users can access the backend through pre-built Docker containers or build it using scripts provided in the repository. The backend can be used to create models for tasks like tokenizing, inferencing, de-tokenizing, ensemble modeling, and more. Users can interact with the backend using provided client scripts and query the server for metrics related to request handling, memory usage, KV cache blocks, and more. Testing for the backend can be done following the instructions in the 'ci/README.md' file.

llm-export

llm-export is a tool for exporting llm models to onnx and mnn formats. It has features such as passing onnxruntime correctness tests, optimizing the original code to support dynamic shapes, reducing constant parts, optimizing onnx models using OnnxSlim for performance improvement, and exporting lora weights to onnx and mnn formats. Users can clone the project locally, clone the desired LLM project locally, and use LLMExporter to export the model. The tool supports various export options like exporting the entire model as one onnx model, exporting model segments as multiple models, exporting model vocabulary to a text file, exporting specific model layers like Embedding and lm_head, testing the model with queries, validating onnx model consistency with onnxruntime, converting onnx models to mnn models, and more. Users can specify export paths, skip optimization steps, and merge lora weights before exporting.

NeMo-Framework-Launcher

The NeMo Framework Launcher is a cloud-native tool designed for launching end-to-end NeMo Framework training jobs. It focuses on foundation model training for generative AI models, supporting large language model pretraining with techniques like model parallelism, tensor, pipeline, sequence, distributed optimizer, mixed precision training, and more. The tool scales to thousands of GPUs and can be used for training LLMs on trillions of tokens. It simplifies launching training jobs on cloud service providers or on-prem clusters, generating submission scripts, organizing job results, and supporting various model operations like fine-tuning, evaluation, export, and deployment.

AI-resources

AI-resources is a repository containing links to various resources for learning Artificial Intelligence. It includes video lectures, courses, tutorials, and open-source libraries related to deep learning, reinforcement learning, machine learning, and more. The repository categorizes resources for beginners, average users, and advanced users/researchers, providing a comprehensive collection of materials to enhance knowledge and skills in AI.

Liger-Kernel

Liger Kernel is a collection of Triton kernels designed for LLM training, increasing training throughput by 20% and reducing memory usage by 60%. It includes Hugging Face Compatible modules like RMSNorm, RoPE, SwiGLU, CrossEntropy, and FusedLinearCrossEntropy. The tool works with Flash Attention, PyTorch FSDP, and Microsoft DeepSpeed, aiming to enhance model efficiency and performance for researchers, ML practitioners, and curious novices.

prajna

Prajna is an open-source programming language specifically developed for building more modular, automated, and intelligent artificial intelligence infrastructure. It aims to cater to various stages of AI research, training, and deployment by providing easy access to CPU, GPU, and various TPUs for AI computing. Prajna features just-in-time compilation, GPU/heterogeneous programming support, tensor computing, syntax improvements, and user-friendly interactions through main functions, Repl, and Jupyter, making it suitable for algorithm development and deployment in various scenarios.

marlin

Marlin is a highly optimized FP16xINT4 matmul kernel designed for large language model (LLM) inference, offering close to ideal speedups up to batchsizes of 16-32 tokens. It is suitable for larger-scale serving, speculative decoding, and advanced multi-inference schemes like CoT-Majority. Marlin achieves optimal performance by utilizing various techniques and optimizations to fully leverage GPU resources, ensuring efficient computation and memory management.

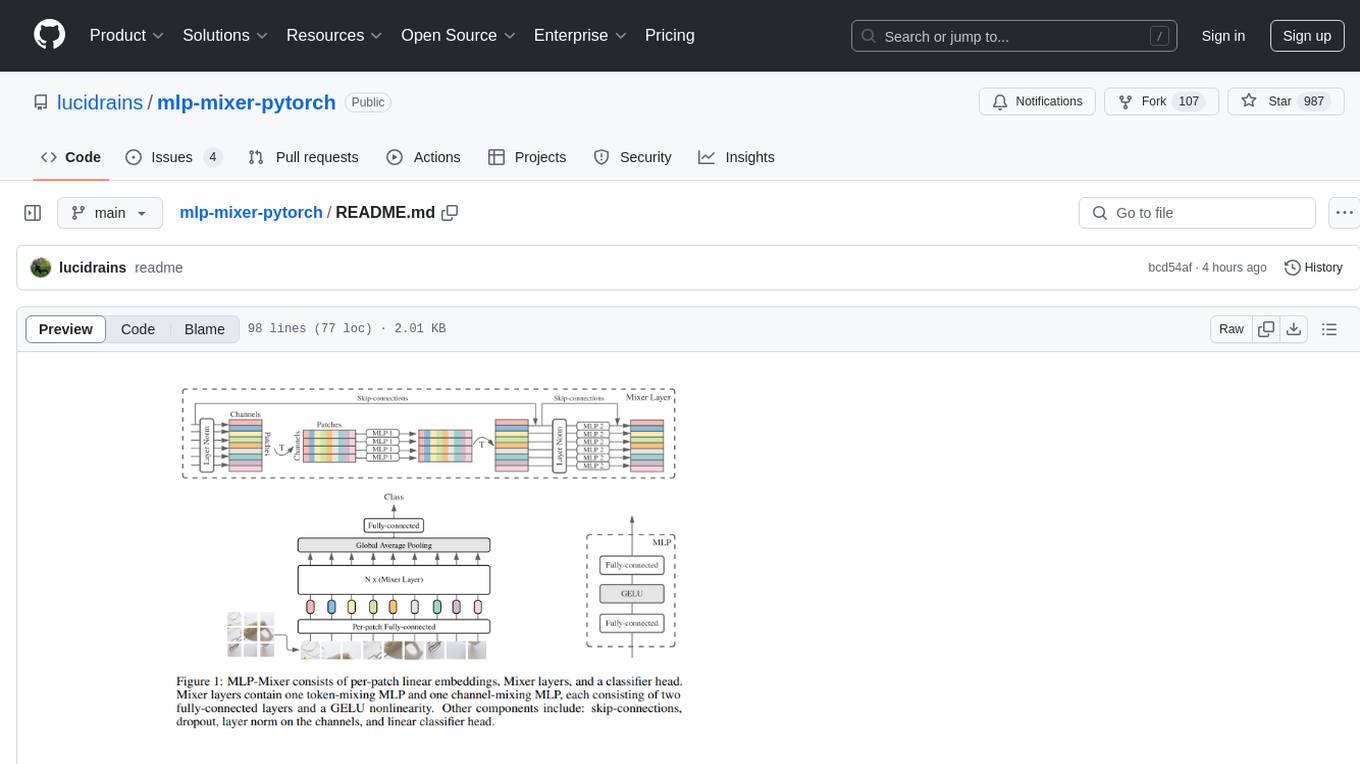

mlp-mixer-pytorch

MLP Mixer - Pytorch is an all-MLP solution for vision tasks, developed by Google AI, implemented in Pytorch. It provides an architecture that does not require convolutions or attention mechanisms, offering an alternative approach for image and video processing. The tool is designed to handle tasks related to image classification and video recognition, utilizing multi-layer perceptrons (MLPs) for feature extraction and classification. Users can easily install the tool using pip and integrate it into their Pytorch projects to experiment with MLP-based vision models.

DeepLearing-Interview-Awesome-2024

DeepLearning-Interview-Awesome-2024 is a repository that covers various topics related to deep learning, computer vision, big models (LLMs), autonomous driving, smart healthcare, and more. It provides a collection of interview questions with detailed explanations sourced from recent academic papers and industry developments. The repository is aimed at assisting individuals in academic research, work innovation, and job interviews. It includes six major modules covering topics such as large language models (LLMs), computer vision models, common problems in computer vision and perception algorithms, deep learning basics and frameworks, as well as specific tasks like 3D object detection, medical image segmentation, and more.

flux-fine-tuner

This is a Cog training model that creates LoRA-based fine-tunes for the FLUX.1 family of image generation models. It includes features such as automatic image captioning during training, image generation using LoRA, uploading fine-tuned weights to Hugging Face, automated test suite for continuous deployment, and Weights and biases integration. The tool is designed for users to fine-tune Flux models on Replicate for image generation tasks.

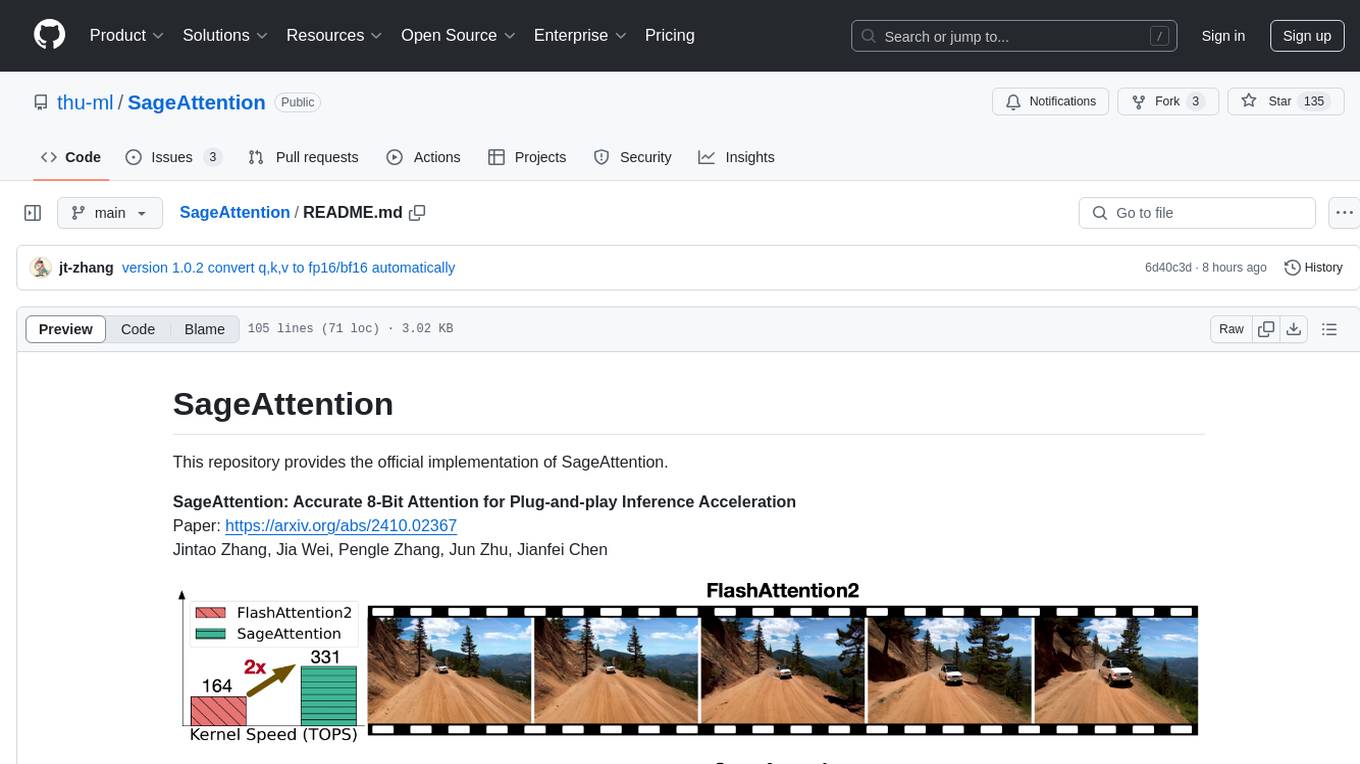

SageAttention

SageAttention is an official implementation of an accurate 8-bit attention mechanism for plug-and-play inference acceleration. It is optimized for RTX4090 and RTX3090 GPUs, providing performance improvements for specific GPU architectures. The tool offers a technique called 'smooth_k' to ensure accuracy in processing FP16/BF16 data. Users can easily replace 'scaled_dot_product_attention' with SageAttention for faster video processing.

ai-algorithms

This repository is a work in progress that contains first-principle implementations of groundbreaking AI algorithms using various deep learning frameworks. Each implementation is accompanied by supporting research papers, aiming to provide comprehensive educational resources for understanding and implementing foundational AI algorithms from scratch.

TokenFormer

TokenFormer is a fully attention-based neural network architecture that leverages tokenized model parameters to enhance architectural flexibility. It aims to maximize the flexibility of neural networks by unifying token-token and token-parameter interactions through the attention mechanism. The architecture allows for incremental model scaling and has shown promising results in language modeling and visual modeling tasks. The codebase is clean, concise, easily readable, state-of-the-art, and relies on minimal dependencies.

Gaudi-tutorials

The Intel Gaudi Tutorials repository contains source files for tutorials on using PyTorch and PyTorch Lightning on the Intel Gaudi AI Processor. The tutorials cater to users from beginner to advanced levels and cover various tasks such as fine-tuning models, running inference, and setting up DeepSpeed for training large language models. Users need access to an Intel Gaudi 2 Accelerator card or node, run the Intel Gaudi PyTorch Docker image, clone the tutorial repository, install Jupyterlab, and run the Jupyterlab server to follow along with the tutorials.

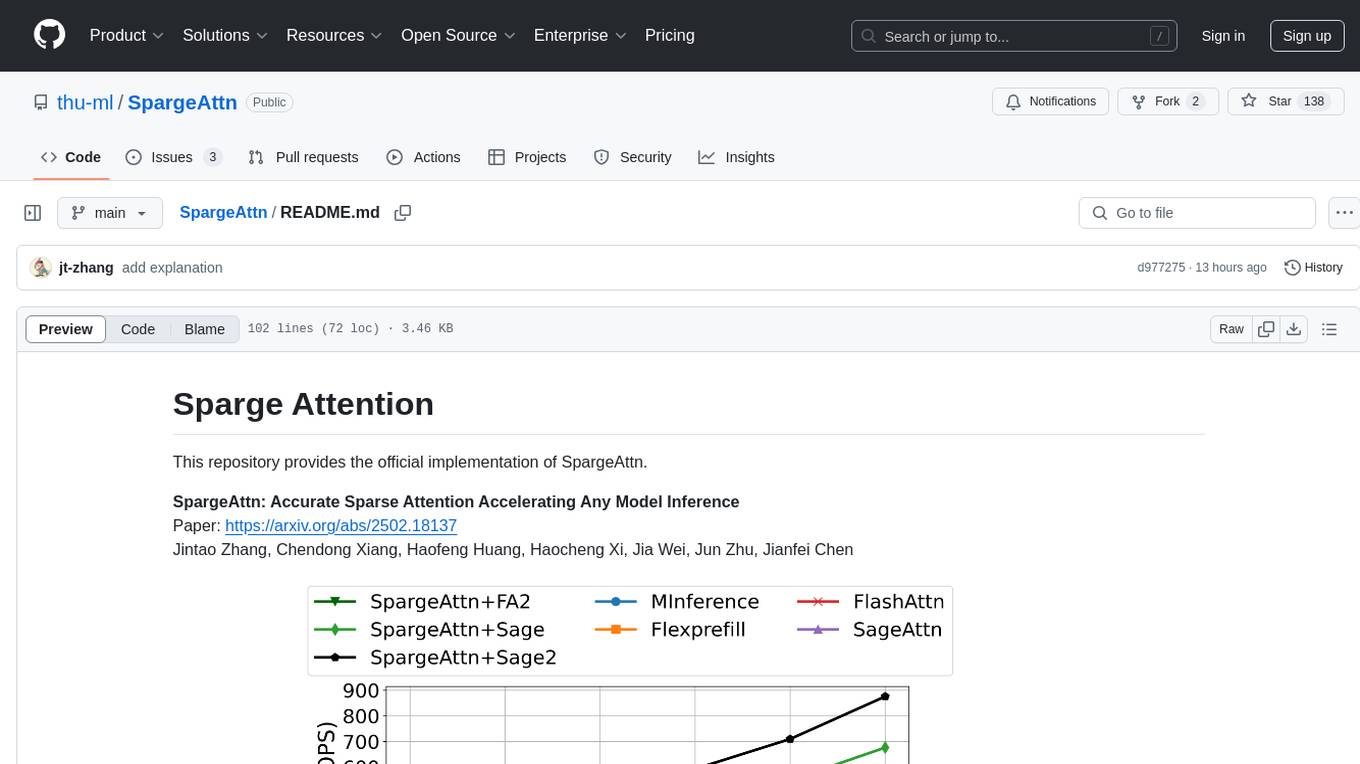

SpargeAttn

SpargeAttn is an official implementation designed for accelerating any model inference by providing accurate sparse attention. It offers a significant speedup in model performance while maintaining quality. The tool is based on SageAttention and SageAttention2, providing options for different levels of optimization. Users can easily install the package and utilize the available APIs for their specific needs. SpargeAttn is particularly useful for tasks requiring efficient attention mechanisms in deep learning models.

amazon-sagemaker-llm-fine-tuning-remote-decorator

This repository provides interactive fine-tuning of Foundation Models with Amazon SageMaker Training using the @remote decorator. It showcases the use of SageMaker AI capabilities for Small/Large Language Models fine-tuning by employing different distribution techniques like FSDP and DDP. Users can run the repository from Amazon SageMaker Studio or a local IDE. The notebooks cover various supervised and self-supervised fine-tuning scenarios for different models, along with instructions for updating configurations based on the AWS region and Python version compatibility.

RLinf

RLinf is a flexible and scalable open-source infrastructure designed for post-training foundation models via reinforcement learning. It provides a robust backbone for next-generation training, supporting open-ended learning, continuous generalization, and limitless possibilities in intelligence development. The tool offers unique features like Macro-to-Micro Flow, flexible execution modes, auto-scheduling strategy, embodied agent support, and fast adaptation for mainstream VLA models. RLinf is fast with hybrid mode and automatic online scaling strategy, achieving significant throughput improvement and efficiency. It is also flexible and easy to use with multiple backend integrations, adaptive communication, and built-in support for popular RL methods. The roadmap includes system-level enhancements and application-level extensions to support various training scenarios and models. Users can get started with complete documentation, quickstart guides, key design principles, example gallery, advanced features, and guidelines for extending the framework. Contributions are welcome, and users are encouraged to cite the GitHub repository and acknowledge the broader open-source community.

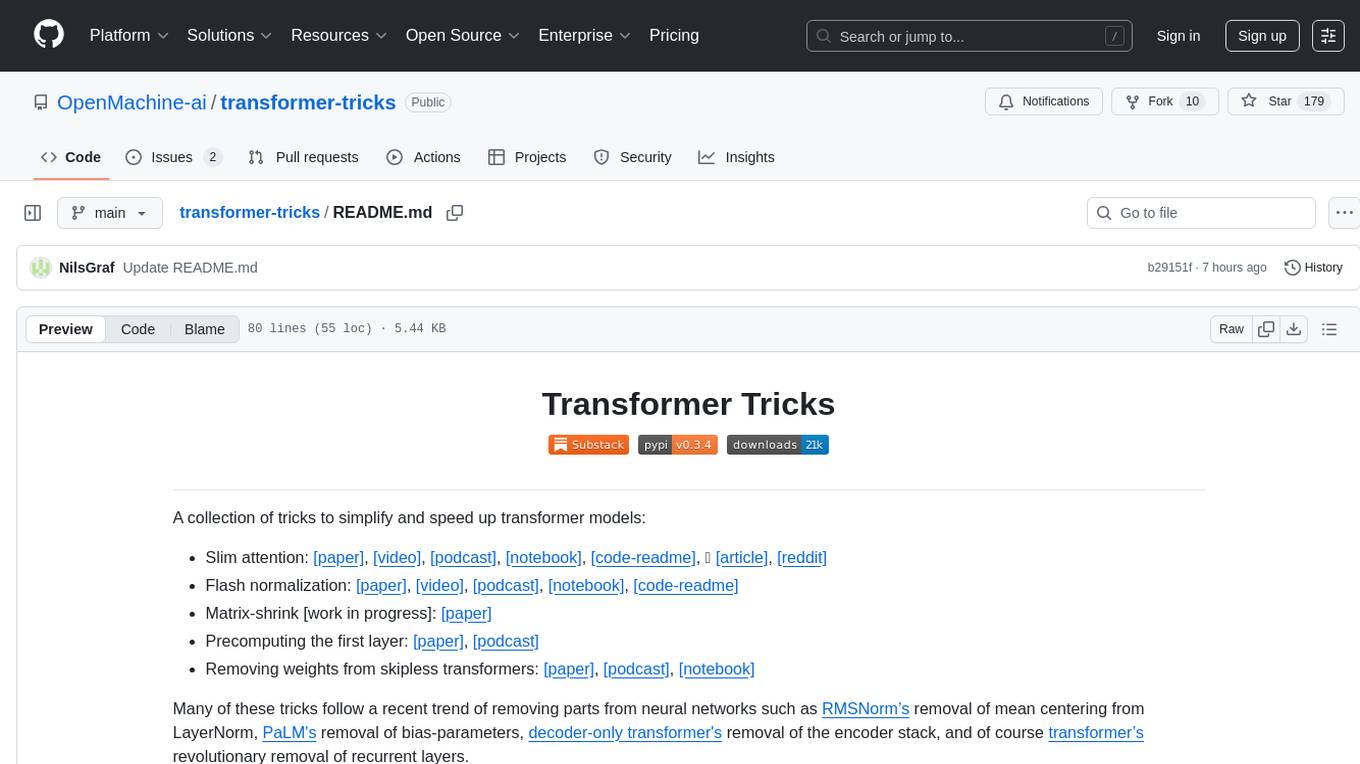

transformer-tricks

A collection of tricks to simplify and speed up transformer models by removing parts from neural networks. Includes Flash normalization, slim attention, matrix-shrink, precomputing the first layer, and removing weights from skipless transformers. Follows recent trends in neural network optimization.

MegatronApp

MegatronApp is a toolchain built around the Megatron-LM training framework, offering performance tuning, slow-node detection, and training-process visualization. It includes modules like MegaScan for anomaly detection, MegaFBD for forward-backward decoupling, MegaDPP for dynamic pipeline planning, and MegaScope for visualization. The tool aims to enhance large-scale distributed training by providing valuable capabilities and insights.

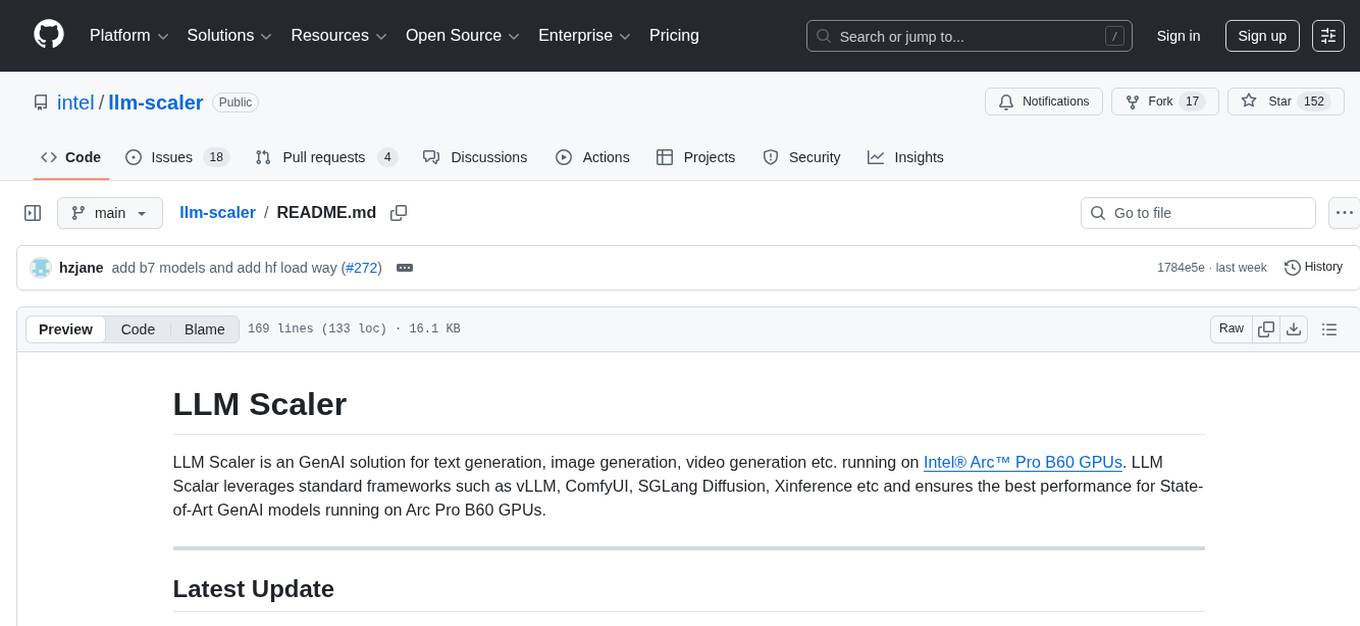

llm-scaler

LLM Scaler is a GenAI solution for text, image, and video generation running on Intel® Arc™ Pro B60 GPUs. It leverages standard frameworks such as vLLM, ComfyUI, SGLang Diffusion, Xinference, etc., ensuring optimal performance for State-of-Art GenAI models on Arc Pro B60 GPUs.

20 - OpenAI Gpts

PINN Design Pattern Specialist

Expert in physics-informed neural networks for practitioners

2nd Year Pharmacy

To provide a comprehensive AI-assisted learning experience for 2nd-year pharmacy students, aiming to enhance understanding, retention, and application of pharmaceutical knowledge.

Spicy QuestionMaster

Devious, charming host, embracing desires and instant gratification.

Angry Bot

Welcome to the world of the Angry Robot! You'll face an irate robot that you must appease. Can you successfully complete all 9 levels ?