Efficient-LLMs-Survey

[TMLR 2024] Efficient Large Language Models: A Survey

This repository provides a systematic and comprehensive review of efficient LLMs research. We organize the literature in a taxonomy consisting of three main categories, covering distinct yet interconnected efficient LLMs topics from **model-centric**, **data-centric**, and **framework-centric** perspectives, respectively. We hope our survey and this GitHub repository can serve as valuable resources to help researchers and practitioners gain a systematic understanding of the research developments in efficient LLMs and inspire them to contribute to this important and exciting field.

README:

Efficient Large Language Models: A Survey [arXiv] (Version 1: 12/06/2023; Version 2: 12/23/2023; Version 3: 01/31/2024; Version 4: 05/23/2024, camera ready version of Transactions on Machine Learning Research)

Zhongwei Wan1, Xin Wang1, Che Liu2, Samiul Alam1, Yu Zheng3, Jiachen Liu4, Zhongnan Qu5, Shen Yan6, Yi Zhu7, Quanlu Zhang8, Mosharaf Chowdhury4, Mi Zhang1.

1The Ohio State University, 2Imperial College London, 3Michigan State University, 4University of Michigan, 5Amazon AWS AI, 6Google Research, 7Boson AI, 8Microsoft Research Asia.

⚡News: Our survey was officially accepted by Transactions on Machine Learning Research (TMLR) in May 2024. The camera-ready version is available at: [OpenReview]

@article{wan2023efficient,
  title={Efficient large language models: A survey},
  author={Wan, Zhongwei and Wang, Xin and Liu, Che and Alam, Samiul and Zheng, Yu and others},
  journal={arXiv preprint arXiv:2312.03863},
  volume={1},
  year={2023},
  publisher={no}
}

This repository is maintained by tuidan ([email protected]), SUSTechBruce ([email protected]), samiul272 ([email protected]), and mi-zhang ([email protected]). We welcome feedback, suggestions, and contributions that can help improve this survey and repository so as to make them valuable resources to benefit the entire community.

We will actively maintain this repository by incorporating new research as it emerges. If you have suggestions regarding our taxonomy, find papers we have missed, or know of an arXiv preprint listed here that has since been accepted at a venue, feel free to send us an email or submit a pull request using the following markdown format.

Paper Title, <ins>Conference/Journal/Preprint, Year</ins> [[pdf](link)] [[other resources](link)].

Large Language Models (LLMs) have demonstrated remarkable capabilities in many important tasks and have the potential to make a substantial impact on our society. Such capabilities, however, come with considerable resource demands, highlighting the strong need to develop effective techniques for addressing the efficiency challenges posed by LLMs. In this survey, we provide a systematic and comprehensive review of efficient LLMs research. We organize the literature in a taxonomy consisting of three main categories, covering distinct yet interconnected efficient LLMs topics from model-centric, data-centric, and framework-centric perspectives, respectively. We hope our survey and this GitHub repository can serve as valuable resources to help researchers and practitioners gain a systematic understanding of the research developments in efficient LLMs and inspire them to contribute to this important and exciting field.

Although LLMs are leading the next wave of the AI revolution, their remarkable capabilities come at the cost of substantial resource demands. Figure 1 (left) illustrates the relationship between model performance and model training time in terms of GPU hours for the LLaMA series, where the size of each circle is proportional to the number of model parameters. As shown, although larger models achieve better performance, the number of GPU hours used for training them grows exponentially as model sizes scale up. In addition to training, inference also contributes significantly to the operational cost of LLMs. Figure 2 (right) depicts the relationship between model performance and inference throughput. Similarly, scaling up the model size enables better performance but comes at the cost of lower inference throughput (higher inference latency), making it challenging for these models to reach a broader customer base and more diverse applications in a cost-effective way. The high resource demands of LLMs highlight the strong need to develop techniques to enhance the efficiency of LLMs. As shown in Figure 2, compared to LLaMA-1-33B, Mistral-7B, which uses grouped-query attention and sliding window attention to speed up inference, achieves comparable performance and much higher throughput. This advantage highlights the feasibility and significance of designing efficiency techniques for LLMs.
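As a rough illustration of why grouped-query attention and sliding window attention help at inference time, the sketch below estimates the per-sequence KV-cache size under three settings. It is a back-of-the-envelope example, not code from the survey: the function names are hypothetical, and the layer count, head counts, head dimension, window size, and 16-bit cache values are assumed purely for illustration. It also assumes the cache only retains the most recent `window` tokens when sliding window attention is used.

```python
# Illustrative sketch only. All configuration values are assumptions made
# for this example; they are not taken from the survey.

def kv_cache_bytes(n_layers, n_kv_heads, head_dim, cached_tokens, bytes_per_value=2):
    """Bytes needed to cache keys and values for one sequence."""
    # Factor 2 accounts for storing both keys and values.
    return 2 * n_layers * n_kv_heads * head_dim * cached_tokens * bytes_per_value


def sliding_window_mask(seq_len, window):
    """Causal mask: query i may attend to key j iff i - window < j <= i."""
    return [[(j <= i) and (j > i - window) for j in range(seq_len)]
            for i in range(seq_len)]


# Hypothetical 32-layer model with head dimension 128.
seq_len, window = 16384, 4096

# Multi-head attention: one KV head per query head (32 assumed).
mha = kv_cache_bytes(32, n_kv_heads=32, head_dim=128, cached_tokens=seq_len)
# Grouped-query attention: query heads share 8 KV heads (assumed).
gqa = kv_cache_bytes(32, n_kv_heads=8, head_dim=128, cached_tokens=seq_len)
# GQA plus a sliding window: only the last `window` tokens stay cached.
gqa_swa = kv_cache_bytes(32, n_kv_heads=8, head_dim=128,
                         cached_tokens=min(seq_len, window))

print(f"multi-head attention : {mha / 2**30:.2f} GiB")
print(f"grouped-query (8 KV) : {gqa / 2**30:.2f} GiB")
print(f"GQA + sliding window : {gqa_swa / 2**30:.2f} GiB")
```

Under these assumed values, sharing KV heads cuts the cache by the ratio of query heads to KV heads, and bounding the cached tokens by the window makes the cache size independent of the sequence length once the sequence exceeds the window.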

- 🤖 Model-Centric Methods

- 🔢 Data-Centric Methods

- 🧑‍💻 System-Level Efficiency Optimization and LLM Frameworks

- I-LLM: Efficient Integer-Only Inference for Fully-Quantized Low-Bit Large Language Models, arXiv, 2024 [Paper]

- IntactKV: Improving Large Language Model Quantization by Keeping Pivot Tokens Intact, arXiv, 2024 [Paper]

- OmniQuant: Omnidirectionally Calibrated Quantization for Large Language Models, ICLR, 2024 [Paper] [Code]

- OneBit: Towards Extremely Low-bit Large Language Models, arXiv, 2024 [Paper]

- GPTQ: Accurate Quantization for Generative Pre-trained Transformers, ICLR, 2023 [Paper] [Code]

- QuIP: 2-Bit Quantization of Large Language Models With Guarantees, arXiv, 2023 [Paper] [Code]

- AWQ: Activation-aware Weight Quantization for LLM Compression and Acceleration, arXiv, 2023 [Paper] [Code]

- OWQ: Lessons Learned from Activation Outliers for Weight Quantization in Large Language Models, arXiv, 2023 [Paper] [Code]

- SpQR: A Sparse-Quantized Representation for Near-Lossless LLM Weight Compression, arXiv, 2023 [Paper] [Code]

- FineQuant: Unlocking Efficiency with Fine-Grained Weight-Only Quantization for LLMs, NeurIPS-ENLSP, 2023 [Paper]

- LLM.int8(): 8-bit Matrix Multiplication for Transformers at Scale, NeurIPS, 2022 [Paper] [Code]

- Optimal Brain Compression: A Framework for Accurate Post-Training Quantization and Pruning, NeurIPS, 2022 [Paper] [Code]

- QuantEase: Optimization-based Quantization for Language Models, arXiv, 2023 [Paper] [Code]

- Rotation and Permutation for Advanced Outlier Management and Efficient Quantization of LLMs, NeurIPS, 2024 [Paper]

- OmniQuant: Omnidirectionally Calibrated Quantization for Large Language Models, ICLR, 2024 [Paper] [Code]

- Intriguing Properties of Quantization at Scale, NeurIPS, 2023 [Paper]

- ZeroQuant-V2: Exploring Post-training Quantization in LLMs from Comprehensive Study to Low Rank Compensation, arXiv, 2023 [Paper] [Code]

- ZeroQuant-FP: A Leap Forward in LLMs Post-Training W4A8 Quantization Using Floating-Point Formats, NeurIPS-ENLSP, 2023 [Paper] [Code]

- OliVe: Accelerating Large Language Models via Hardware-friendly Outlier-Victim Pair Quantization, ISCA, 2023 [Paper] [Code]

- RPTQ: Reorder-based Post-training Quantization for Large Language Models, arXiv, 2023 [Paper] [Code]

- Outlier Suppression+: Accurate Quantization of Large Language Models by Equivalent and Optimal Shifting and Scaling, arXiv, 2023 [Paper] [Code]

- QLLM: Accurate and Efficient Low-Bitwidth Quantization for Large Language Models, arXiv, 2023 [Paper]

- SmoothQuant: Accurate and Efficient Post-Training Quantization for Large Language Models, ICML, 2023 [Paper] [Code]

- ZeroQuant: Efficient and Affordable Post-Training Quantization for Large-Scale Transformers, NeurIPS, 2022 [Paper]

- Evaluating Quantized Large Language Models, arXiv, 2024 [Paper]

- The Era of 1-bit LLMs: All Large Language Models are in 1.58 Bits, arXiv, 2024 [Paper]

- FP8-LM: Training FP8 Large Language Models, arXiv, 2023 [Paper]

- Training and Inference of Large Language Models using 8-bit Floating Point, arXiv, 2023 [Paper]

- BitNet: Scaling 1-bit Transformers for Large Language Models, arXiv, 2023 [Paper]

- LLM-QAT: Data-Free Quantization Aware Training for Large Language Models, arXiv, 2023 [Paper] [Code]

- Compression of Generative Pre-trained Language Models via Quantization, ACL, 2022 [Paper]

- Compact Language Models via Pruning and Knowledge Distillation, arXiv, 2024 [Paper]

- A Deeper Look at Depth Pruning of LLMs, arXiv, 2024 [Paper]

- Perplexed by Perplexity: Perplexity-Based Data Pruning With Small Reference Models, arXiv, 2024 [Paper]

- Plug-and-Play: An Efficient Post-training Pruning Method for Large Language Models, ICLR, 2024 [Paper]

- BESA: Pruning Large Language Models with Blockwise Parameter-Efficient Sparsity Allocation, arXiv, 2024 [Paper]

- ShortGPT: Layers in Large Language Models are More Redundant Than You Expect, arXiv, 2024 [Paper]

- NutePrune: Efficient Progressive Pruning with Numerous Teachers for Large Language Models, arXiv, 2024 [Paper]

- SliceGPT: Compress Large Language Models by Deleting Rows and Columns, ICLR, 2024 [Paper] [Code]

- LoRAShear: Efficient Large Language Model Structured Pruning and Knowledge Recovery, arXiv, 2023 [Paper]

- LLM-Pruner: On the Structural Pruning of Large Language Models, NeurIPS, 2023 [Paper] [Code]

- Sheared LLaMA: Accelerating Language Model Pre-training via Structured Pruning, NeurIPS-ENLSP, 2023 [Paper] [Code]

- LoRAPrune: Pruning Meets Low-Rank Parameter-Efficient Fine-Tuning, arXiv, 2023 [Paper]

- LoRAP: Transformer Sub-Layers Deserve Differentiated Structured Compression for Large Language Models, ICML, 2024 [Paper] [Code]

- MaskLLM: Learnable Semi-Structured Sparsity for Large Language Models, NeurIPS, 2024 [Paper]

- Dynamic Sparse No Training: Training-Free Fine-tuning for Sparse LLMs, ICLR, 2024 [Paper]

- SparseGPT: Massive Language Models Can Be Accurately Pruned in One-Shot, ICML, 2023 [Paper] [Code]

- A Simple and Effective Pruning Approach for Large Language Models, arXiv, 2023 [Paper] [Code]

- One-Shot Sensitivity-Aware Mixed Sparsity Pruning for Large Language Models, arXiv, 2023 [Paper]

- SVD-LLM: Singular Value Decomposition for Large Language Model Compression, arXiv, 2024 [Paper] [Code]

- ASVD: Activation-aware Singular Value Decomposition for Compressing Large Language Models, arXiv, 2023 [Paper] [Code]

- Language Model Compression with Weighted Low-Rank Factorization, ICLR, 2022 [Paper]

- TensorGPT: Efficient Compression of the Embedding Layer in LLMs based on the Tensor-Train Decomposition, arXiv, 2023 [Paper]

- LoSparse: Structured Compression of Large Language Models based on Low-Rank and Sparse Approximation, ICML, 2023 [Paper] [Code]

- DDK: Distilling Domain Knowledge for Efficient Large Language Models, arXiv, 2024 [Paper]

- Rethinking Kullback-Leibler Divergence in Knowledge Distillation for Large Language Models, arXiv, 2024 [Paper]

- DistiLLM: Towards Streamlined Distillation for Large Language Models, arXiv, 2024 [Paper] [Code]

- Towards the Law of Capacity Gap in Distilling Language Models, arXiv, 2023 [Paper] [Code]

- Baby Llama: Knowledge Distillation from an Ensemble of Teachers Trained on a Small Dataset with no Performance Penalty, arXiv, 2023 [Paper]

- Knowledge Distillation of Large Language Models, arXiv, 2023 [Paper] [Code]

- GKD: Generalized Knowledge Distillation for Auto-regressive Sequence Models, arXiv, 2023 [Paper]

- Propagating Knowledge Updates to LMs Through Distillation, arXiv, 2023 [Paper] [Code]

- Less is More: Task-aware Layer-wise Distillation for Language Model Compression, ICML, 2023 [Paper]

- Token-Scaled Logit Distillation for Ternary Weight Generative Language Models, arXiv, 2023 [Paper]

- Zephyr: Direct Distillation of LM Alignment, arXiv, 2023 [Paper]

- Instruction Tuning with GPT-4, arXiv, 2023 [Paper] [Code]

- Lion: Adversarial Distillation of Closed-Source Large Language Model, arXiv, 2023 [Paper] [Code]

- Specializing Smaller Language Models towards Multi-Step Reasoning, ICML, 2023 [Paper] [Code]

- Distilling Step-by-Step! Outperforming Larger Language Models with Less Training Data and Smaller Model Sizes, ACL, 2023 [Paper]

- Large Language Models Are Reasoning Teachers, ACL, 2023 [Paper] [Code]

- SCOTT: Self-Consistent Chain-of-Thought Distillation, ACL, 2023 [Paper] [Code]

- Symbolic Chain-of-Thought Distillation: Small Models Can Also "Think" Step-by-Step, ACL, 2023 [Paper]

- Distilling Reasoning Capabilities into Smaller Language Models, ACL, 2023 [Paper] [Code]

- In-context Learning Distillation: Transferring Few-shot Learning Ability of Pre-trained Language Models, arXiv, 2022 [Paper]

- Explanations from Large Language Models Make Small Reasoners Better, arXiv, 2022 [Paper]

- DISCO: Distilling Counterfactuals with Large Language Models, arXiv, 2022 [Paper] [Code]

- MobiLlama: Towards Accurate and Lightweight Fully Transparent GPT, arXiv, 2024 [Paper]

- Bfloat16 Processing for Neural Networks, ARITH, 2019 [Paper]

- A Study of BFLOAT16 for Deep Learning Training, arXiv, 2019 [Paper]

- Mixed Precision Training, ICLR, 2018 [Paper]

- LEMON: Lossless Model Expansion, ICLR, 2024 [Paper]

- Preparing Lessons for Progressive Training on Language Models, AAAI, 2024 [Paper]

- Learning to Grow Pretrained Models for Efficient Transformer Training, ICLR, 2023 [Paper] [Code]

- 2x Faster Language Model Pre-training via Masked Structural Growth, arXiv, 2023 [Paper]

- Reusing Pretrained Models by Multi-linear Operators for Efficient Training, NeurIPS, 2023 [Paper]

- FLM-101B: An Open LLM and How to Train It with $100K Budget, arXiv, 2023 [Paper] [Code]

- Knowledge Inheritance for Pre-trained Language Models, NAACL, 2022 [Paper] [Code]

- Staged Training for Transformer Language Models, ICML, 2022 [Paper] [Code]

- DeepNet: Scaling Transformers to 1,000 Layers, arXiv, 2022 [Paper] [Code]

- ZerO Initialization: Initializing Neural Networks with only Zeros and Ones, TMLR, 2022 [Paper] [Code]

- ReZero is All You Need: Fast Convergence at Large Depth, UAI, 2021 [Paper] [Code]

- Batch Normalization Biases Residual Blocks Towards the Identity Function in Deep Networks, NeurIPS, 2020 [Paper]

- Improving Transformer Optimization Through Better Initialization, ICML, 2020 [Paper] [Code]

- Fixup Initialization: Residual Learning without Normalization, ICLR, 2019 [Paper]

- On Weight Initialization in Deep Neural Networks, arXiv, 2017 [Paper]

- Towards Optimal Learning of Language Models, arXiv, 2024 [Paper] [Code]

- Symbolic Discovery of Optimization Algorithms, arXiv, 2023 [Paper]

- Sophia: A Scalable Stochastic Second-order Optimizer for Language Model Pre-training, arXiv, 2023 [Paper] [Code]

- OpenDelta: A Plug-and-play Library for Parameter-efficient Adaptation of Pre-trained Models, ACL Demo, 2023 [Paper] [Code]

- LLM-Adapters: An Adapter Family for Parameter-Efficient Fine-Tuning of Large Language Models, EMNLP, 2023 [Paper] [Code]

- Compacter: Efficient Low-Rank Hypercomplex Adapter Layers, NeurIPS, 2021 [Paper] [Code]

- Few-Shot Parameter-Efficient Fine-Tuning is Better and Cheaper than In-Context Learning, NeurIPS, 2022 [Paper] [Code]

- Meta-Adapters: Parameter Efficient Few-shot Fine-tuning through Meta-Learning, AutoML, 2022 [Paper]

- AdaMix: Mixture-of-Adaptations for Parameter-efficient Model Tuning, EMNLP, 2022 [Paper] [Code]

- SparseAdapter: An Easy Approach for Improving the Parameter-Efficiency of Adapters, EMNLP, 2022 [Paper] [Code]

- HydraLoRA: An Asymmetric LoRA Architecture for Efficient Fine-Tuning, NeurIPS, 2024 [Paper]

- LOFIT: Localized Fine-tuning on LLM Representations, arXiv, 2024 [Paper]

- Mixture-of-Subspaces in Low-Rank Adaptation, arXiv, 2024 [Paper] [Code]

- MEFT: Memory-Efficient Fine-Tuning through Sparse Adapter, ACL, 2024 [Paper]

- LoRA Meets Dropout under a Unified Framework, arXiv, 2024 [Paper]

- STAR: Constraint LoRA with Dynamic Active Learning for Data-Efficient Fine-Tuning of Large Language Models, arXiv, 2024 [Paper]

- LoRA+: Efficient Low Rank Adaptation of Large Models, arXiv, 2024 [Paper]

- LoRA-FA: Memory-efficient Low-rank Adaptation for Large Language Models Fine-tuning, arXiv, 2023 [Paper]

- LoraHub: Efficient Cross-Task Generalization via Dynamic LoRA Composition, arXiv, 2023 [Paper] [Code]

- LongLoRA: Efficient Fine-tuning of Long-Context Large Language Models, arXiv, 2023 [Paper] [Code]

- Multi-Head Adapter Routing for Cross-Task Generalization, NeurIPS, 2023 [Paper] [Code]

- Adaptive Budget Allocation for Parameter-Efficient Fine-Tuning, ICLR, 2023 [Paper]

- DyLoRA: Parameter-Efficient Tuning of Pretrained Models using Dynamic Search-Free Low Rank Adaptation, EACL, 2023 [Paper] [Code]

- Tied-LoRA: Enhancing Parameter Efficiency of LoRA with Weight Tying, arXiv, 2023 [Paper]

- LoRA: Low-Rank Adaptation of Large Language Models, ICLR, 2022 [Paper] [Code]

- LLaMA-Adapter: Efficient Fine-tuning of Language Models with Zero-init Attention, arXiv, 2023 [Paper] [Code]

- Prefix-Tuning: Optimizing Continuous Prompts for Generation, ACL, 2021 [Paper] [Code]

- Compress, Then Prompt: Improving Accuracy-Efficiency Trade-off of LLM Inference with Transferable Prompt, arXiv, 2023 [Paper]

- GPT Understands, Too, AI Open, 2023 [Paper] [Code]

- Multi-Task Pre-Training of Modular Prompt for Few-Shot Learning, ACL, 2023 [Paper] [Code]

- Multitask Prompt Tuning Enables Parameter-Efficient Transfer Learning, ICLR, 2023 [Paper]

- PPT: Pre-trained Prompt Tuning for Few-shot Learning, ACL, 2022 [Paper] [Code]

- Parameter-Efficient Prompt Tuning Makes Generalized and Calibrated Neural Text Retrievers, EMNLP-Findings, 2022 [Paper] [Code]

- P-Tuning v2: Prompt Tuning Can Be Comparable to Finetuning Universally Across Scales and Tasks, ACL-Short, 2022 [Paper] [Code]

- The Power of Scale for Parameter-Efficient Prompt Tuning, EMNLP, 2021 [Paper]

- A Study of Optimizations for Fine-tuning Large Language Models, arXiv, 2024 [Paper]

- Sparse Matrix in Large Language Model Fine-tuning, arXiv, 2024 [Paper]

- GaLore: Memory-Efficient LLM Training by Gradient Low-Rank Projection, arXiv, 2024 [Paper]

- ReFT: Representation Finetuning for Language Models, arXiv, 2024 [Paper]

- LISA: Layerwise Importance Sampling for Memory-Efficient Large Language Model Fine-Tuning, arXiv, 2024 [Paper]

- BitDelta: Your Fine-Tune May Only Be Worth One Bit, arXiv, 2024 [Paper]

- Winner-Take-All Column Row Sampling for Memory Efficient Adaptation of Language Model, NeurIPS, 2023 [Paper] [Code]

- Memory-Efficient Selective Fine-Tuning, ICML Workshop, 2023 [Paper]

- Full Parameter Fine-tuning for Large Language Models with Limited Resources, arXiv, 2023 [Paper] [Code]

- Fine-Tuning Language Models with Just Forward Passes, NeurIPS, 2023 [Paper] [Code]

- Memory-Efficient Fine-Tuning of Compressed Large Language Models via sub-4-bit Integer Quantization, NeurIPS, 2023 [Paper]

- LoftQ: LoRA-Fine-Tuning-Aware Quantization for Large Language Models, arXiv, 2023 [Paper] [Code]

- QA-LoRA: Quantization-Aware Low-Rank Adaptation of Large Language Models, arXiv, 2023 [Paper] [Code]

- QLoRA: Efficient Finetuning of Quantized LLMs, NeurIPS, 2023 [Paper] [Code1] [Code2]

- Let the Expert Stick to His Last: Expert-Specialized Fine-Tuning for Sparse Architectural Large Language Models, arXiv, 2024 [Paper]

- CLLMs: Consistency Large Language Models, arXiv, 2024 [Paper]

- Encode Once and Decode in Parallel: Efficient Transformer Decoding, arXiv, 2024 [Paper]

- MagicDec: Breaking the Latency-Throughput Tradeoff for Long Context Generation with Speculative Decoding, arXiv, 2024 [Paper]

- DeFT: Decoding with Flash Tree-attention for Efficient Tree-structured LLM Inference, arXiv, 2024 [Paper]

- LayerSkip: Enabling Early Exit Inference and Self-Speculative Decoding, arXiv, 2024 [Paper]

- TriForce: Lossless Acceleration of Long Sequence Generation with Hierarchical Speculative Decoding, arXiv, 2024 [Paper]

- REST: Retrieval-Based Speculative Decoding, arXiv, 2024 [Paper]

- Tandem Transformers for Inference Efficient LLMs, arXiv, 2024 [Paper]

- PaSS: Parallel Speculative Sampling, NeurIPS Workshop, 2023 [Paper]

- Accelerating Transformer Inference for Translation via Parallel Decoding, ACL, 2023 [Paper] [Code]

- Medusa: Simple Framework for Accelerating LLM Generation with Multiple Decoding Heads, Blog, 2023 [Blog] [Code]

- Fast Inference from Transformers via Speculative Decoding, ICML, 2023 [Paper]

- Accelerating LLM Inference with Staged Speculative Decoding, ICML Workshop, 2023 [Paper]

- Accelerating Large Language Model Decoding with Speculative Sampling, arXiv, 2023 [Paper]

- Speculative Decoding with Big Little Decoder, NeurIPS, 2023 [Paper] [Code]

- SpecInfer: Accelerating Generative LLM Serving with Speculative Inference and Token Tree Verification, arXiv, 2023 [Paper] [Code]

- Inference with Reference: Lossless Acceleration of Large Language Models, arXiv, 2023 [Paper] [Code]

- SEED: Accelerating Reasoning Tree Construction via Scheduled Speculative Decoding, arXiv, 2024 [Paper]

- SCOPE: Optimizing Key-Value Cache Compression in Long-context Generation, arXiv, 2024 [Paper]

- VL-Cache: Sparsity and Modality-Aware KV Cache Compression for Vision-Language Model Inference Acceleration, arXiv, 2024 [Paper]

- MInference 1.0: Accelerating Pre-filling for Long-Context LLMs via Dynamic Sparse Attention, arXiv, 2024 [Paper]

- KVSharer: Efficient Inference via Layer-Wise Dissimilar KV Cache Sharing, arXiv, 2024 [Paper]

- DuoAttention: Efficient Long-Context LLM Inference with Retrieval and Streaming Heads, arXiv, 2024 [Paper]

- LazyLLM: Dynamic Token Pruning for Efficient Long Context LLM Inference, arXiv, 2024 [Paper]

- Palu: Compressing KV-Cache with Low-Rank Projection, arXiv, 2024 [Paper] [Code]

- LOOK-M: Look-Once Optimization in KV Cache for Efficient Multimodal Long-Context Inference, arXiv, 2024 [Paper]

- D2O: Dynamic Discriminative Operations for Efficient Generative Inference of Large Language Models, arXiv, 2024 [Paper]

- QUEST: Query-Aware Sparsity for Efficient Long-Context LLM Inference, ICML, 2024 [Paper]

- Reducing Transformer Key-Value Cache Size with Cross-Layer Attention, arXiv, 2024 [Paper]

- SnapKV: LLM Knows What You are Looking for Before Generation, arXiv, 2024 [Paper]

- Anchor-based Large Language Models, arXiv, 2024 [Paper]

- KVQuant: Towards 10 Million Context Length LLM Inference with KV Cache Quantization, arXiv, 2024 [Paper]

- GEAR: An Efficient KV Cache Compression Recipe for Near-Lossless Generative Inference of LLM, arXiv, 2024 [Paper]

- Dynamic Memory Compression: Retrofitting LLMs for Accelerated Inference, arXiv, 2024 [Paper]

- No Token Left Behind: Reliable KV Cache Compression via Importance-Aware Mixed Precision Quantization, arXiv, 2024 [Paper]

- Get More with LESS: Synthesizing Recurrence with KV Cache Compression for Efficient LLM Inference, arXiv, 2024 [Paper]

- WKVQuant: Quantizing Weight and Key/Value Cache for Large Language Models Gains More, arXiv, 2024 [Paper]

- On the Efficacy of Eviction Policy for Key-Value Constrained Generative Language Model Inference, arXiv, 2024 [Paper]

- KIVI: A Tuning-Free Asymmetric 2bit Quantization for KV Cache, arXiv, 2024 [Paper] [Code]

- Model Tells You What to Discard: Adaptive KV Cache Compression for LLMs, ICLR, 2024 [Paper]

- SkipDecode: Autoregressive Skip Decoding with Batching and Caching for Efficient LLM Inference, arXiv, 2023 [Paper]

- H2O: Heavy-Hitter Oracle for Efficient Generative Inference of Large Language Models, NeurIPS, 2023 [Paper]

- Scissorhands: Exploiting the Persistence of Importance Hypothesis for LLM KV Cache Compression at Test Time, NeurIPS, 2023 [Paper]

- Dynamic Context Pruning for Efficient and Interpretable Autoregressive Transformers, arXiv, 2023 [Paper]

- LoMA: Lossless Compressed Memory Attention, arXiv, 2024 [Paper]

- MobileLLM: Optimizing Sub-billion Parameter Language Models for On-Device Use Cases, arXiv, 2024 [Paper]

- GQA: Training Generalized Multi-Query Transformer Models from Multi-Head Checkpoints, EMNLP, 2023 [Paper]

- Fast Transformer Decoding: One Write-Head is All You Need, arXiv, 2019 [Paper]

- Nyströmformer: A Nyström-Based Algorithm for Approximating Self-Attention, AAAI, 2021 [Paper] [Code]

- Funnel-Transformer: Filtering out Sequential Redundancy for Efficient Language Processing, NeurIPS, 2020 [Paper] [Code]

- Set Transformer: A Framework for Attention-based Permutation-Invariant Neural Networks, ICML, 2019 [Paper]

- Loki: Low-Rank Keys for Efficient Sparse Attention, ICML Workshop, 2023 [Paper]

- Sumformer: Universal Approximation for Efficient Transformers, ICML Workshop, 2023 [Paper]

- FLuRKA: Fast fused Low-Rank & Kernel Attention, arXiv, 2023 [Paper]

- Scatterbrain: Unifying Sparse and Low-rank Attention, NeurIPS, 2021 [Paper] [Code]

- Rethinking Attention with Performers, ICLR, 2021 [Paper] [Code]

- Random Feature Attention, ICLR, 2021 [Paper]

- Linformer: Self-Attention with Linear Complexity, arXiv, 2020 [Paper] [Code]

- Lightweight and Efficient End-to-End Speech Recognition Using Low-Rank Transformer, ICASSP, 2020 [Paper]

- Transformers are RNNs: Fast Autoregressive Transformers with Linear Attention, ICML, 2020 [Paper] [Code]

- Simple Linear Attention Language Models Balance the Recall-Throughput Tradeoff, arXiv, 2024 [Paper]

- Lightning Attention-2: A Free Lunch for Handling Unlimited Sequence Lengths in Large Language Models, arXiv, 2024 [Paper] [Code]

- Faster Causal Attention Over Large Sequences Through Sparse Flash Attention, ICML Workshop, 2023 [Paper]

- Poolingformer: Long Document Modeling with Pooling Attention, ICML, 2021 [Paper]

- Big Bird: Transformers for Longer Sequences, NeurIPS, 2020 [Paper] [Code]

- Longformer: The Long-Document Transformer, arXiv, 2020 [Paper] [Code]

- Blockwise Self-Attention for Long Document Understanding, EMNLP, 2020 [Paper] [Code]

- Generating Long Sequences with Sparse Transformers, arXiv, 2019 [Paper]

- MoA: Mixture of Sparse Attention for Automatic Large Language Model Compression, arXiv, 2024 [Paper]

- HyperAttention: Long-context Attention in Near-Linear Time, arXiv, 2023 [Paper] [Code]

- ClusterFormer: Neural Clustering Attention for Efficient and Effective Transformer, ACL, 2022 [Paper]

- Reformer: The Efficient Transformer, ICLR, 2020 [Paper] [Code]

- Sparse Sinkhorn Attention, ICML, 2020 [Paper]

- Fast Transformers with Clustered Attention, NeurIPS, 2020 [Paper] [Code]

- Efficient Content-Based Sparse Attention with Routing Transformers, TACL, 2020 [Paper] [Code]

- Self-MoE: Towards Compositional Large Language Models with Self-Specialized Experts, arXiv, 2024 [Paper]

- Lory: Fully Differentiable Mixture-of-Experts for Autoregressive Language Model Pre-training, arXiv, 2024 [Paper]

- JetMoE: Reaching Llama2 Performance with 0.1M Dollars, arXiv, 2024 [Paper]

- An Expert is Worth One Token: Synergizing Multiple Expert LLMs as Generalist via Expert Token Routing, arXiv, 2024 [Paper]

- Mixture-of-Depths: Dynamically Allocating Compute in Transformer-Based Language Models, arXiv, 2024 [Paper]

- Branch-Train-MiX: Mixing Expert LLMs into a Mixture-of-Experts LLM, arXiv, 2024 [Paper]

- Mixtral of Experts, arXiv, 2024 [Paper] [Code]

- Mistral 7B, arXiv, 2023 [Paper] [Code]

- PanGu-Σ: Towards Trillion Parameter Language Model with Sparse Heterogeneous Computing, arXiv, 2023 [Paper]

- Switch Transformers: Scaling to Trillion Parameter Models with Simple and Efficient Sparsity, JMLR, 2022 [Paper] [Code]

- Efficient Large Scale Language Modeling with Mixtures of Experts, EMNLP, 2022 [Paper] [Code]

- BASE Layers: Simplifying Training of Large, Sparse Models, ICML, 2021 [Paper] [Code]

- GShard: Scaling Giant Models with Conditional Computation and Automatic Sharding, ICLR, 2021 [Paper]

- SEER-MoE: Sparse Expert Efficiency through Regularization for Mixture-of-Experts, arXiv, 2024 [Paper]

- Scaling Laws for Fine-Grained Mixture of Experts, arXiv, 2024 [Paper]

- Lifelong Language Pretraining with Distribution-Specialized Experts, ICML, 2023 [Paper]

- Mixture-of-Experts Meets Instruction Tuning: A Winning Combination for Large Language Models, arXiv, 2023 [Paper]

- Mixture-of-Experts with Expert Choice Routing, NeurIPS, 2022 [Paper]

- StableMoE: Stable Routing Strategy for Mixture of Experts, ACL, 2022 [Paper] [Code]

- On the Representation Collapse of Sparse Mixture of Experts, NeurIPS, 2022 [Paper]

- Two Stones Hit One Bird: Bilevel Positional Encoding for Better Length Extrapolation, ICML, 2024 [Paper]

- ∞-Bench: Extending Long Context Evaluation Beyond 100K Tokens, arXiv, 2024 [Paper]

- Resonance RoPE: Improving Context Length Generalization of Large Language Models, arXiv, 2024 [Paper] [Code]

- LongRoPE: Extending LLM Context Window Beyond 2 Million Tokens, arXiv, 2024 [Paper]

- E^2-LLM: Efficient and Extreme Length Extension of Large Language Models, arXiv, 2024 [Paper]

- Scaling Laws of RoPE-based Extrapolation, arXiv, 2023 [Paper]

- A Length-Extrapolatable Transformer, ACL, 2023 [Paper] [Code]

- Extending Context Window of Large Language Models via Positional Interpolation, arXiv, 2023 [Paper]

- NTK Interpolation, Blog, 2023 [Reddit post]

- YaRN: Efficient Context Window Extension of Large Language Models, arXiv, 2023 [Paper] [Code]

- CLEX: Continuous Length Extrapolation for Large Language Models, arXiv, 2023 [Paper] [Code]

- PoSE: Efficient Context Window Extension of LLMs via Positional Skip-wise Training, arXiv, 2023 [Paper] [Code]

- Functional Interpolation for Relative Positions Improves Long Context Transformers, arXiv, 2023 [Paper]

- Train Short, Test Long: Attention with Linear Biases Enables Input Length Extrapolation, ICLR, 2022 [Paper] [Code]

- Exploring Length Generalization in Large Language Models, NeurIPS, 2022 [Paper]

- Retentive Network: A Successor to Transformer for Large Language Models, arXiv, 2023 [Paper] [Code]

- Recurrent Memory Transformer, NeurIPS, 2022 [Paper] [Code]

- Block-Recurrent Transformers, NeurIPS, 2022 [Paper] [Code]

- ∞-former: Infinite Memory Transformer, ACL, 2022 [Paper] [Code]

- Memformer: A Memory-Augmented Transformer for Sequence Modeling, AACL-Findings, 2020 [Paper] [Code]

- Transformer-XL: Attentive Language Models Beyond a Fixed-Length Context, ACL, 2019 [Paper] [Code]

- XL3M: A Training-free Framework for LLM Length Extension Based on Segment-wise Inference, arXiv, 2024 [Paper]

- TransformerFAM: Feedback Attention is Working Memory, arXiv, 2024 [Paper]

- Naive Bayes-based Context Extension for Large Language Models, NAACL, 2024 [Paper]

- Leave No Context Behind: Efficient Infinite Context Transformers with Infini-attention, arXiv, 2024 [Paper]

- Training LLMs over Neurally Compressed Text, arXiv, 2024 [Paper]

- LM-Infinite: Zero-Shot Extreme Length Generalization for Large Language Models, arXiv, 2024 [Paper]

- Training-Free Long-Context Scaling of Large Language Models, arXiv, 2024 [Paper] [Code]

- Long-Context Language Modeling with Parallel Context Encoding, arXiv, 2024 [Paper] [Code]

- Soaring from 4K to 400K: Extending LLM’s Context with Activation Beacon, arXiv, 2024 [Paper] [Code]

- LLM Maybe LongLM: Self-Extend LLM Context Window Without Tuning, arXiv, 2024 [Paper] [Code]

- Extending Context Window of Large Language Models via Semantic Compression, arXiv, 2023 [Paper]

- Efficient Streaming Language Models with Attention Sinks, arXiv, 2023 [Paper] [Code]

- Parallel Context Windows for Large Language Models, ACL, 2023 [Paper] [Code]

- LongNet: Scaling Transformers to 1,000,000,000 Tokens, arXiv, 2023 [Paper] [Code]

- Efficient Long-Text Understanding with Short-Text Models, TACL, 2023 [Paper] [Code]

- InfLLM: Unveiling the Intrinsic Capacity of LLMs for Understanding Extremely Long Sequences with Training-Free Memory, arXiv, 2024 [Paper]

- Landmark Attention: Random-Access Infinite Context Length for Transformers, arXiv, 2023 [Paper] [Code]

- Augmenting Language Models with Long-Term Memory, NeurIPS, 2023 [Paper]

- Unlimiformer: Long-Range Transformers with Unlimited Length Input, NeurIPS, 2023 [Paper] [Code]

- Focused Transformer: Contrastive Training for Context Scaling, NeurIPS, 2023 [Paper] [Code]

- Retrieval meets Long Context Large Language Models, arXiv, 2023 [Paper]

- Memorizing Transformers, ICLR, 2022 [Paper] [Code]

- Transformers are SSMs: Generalized Models and Efficient Algorithms Through Structured State Space Duality, arXiv, 2024 [Paper]

- MoE-Mamba: Efficient Selective State Space Models with Mixture of Experts, arXiv, 2024 [Paper]

- DenseMamba: State Space Models with Dense Hidden Connection for Efficient Large Language Models, arXiv, 2024 [Paper] [Code]

- MambaByte: Token-free Selective State Space Model, arXiv, 2024 [Paper]

- Sparse Modular Activation for Efficient Sequence Modeling, NeurIPS, 2023 [Paper] [Code]

- Mamba: Linear-Time Sequence Modeling with Selective State Spaces, arXiv, 2023 [Paper] [Code]

- Hungry Hungry Hippos: Towards Language Modeling with State Space Models, ICLR, 2023 [Paper] [Code]

- Long Range Language Modeling via Gated State Spaces, ICLR, 2023 [Paper]

- Block-State Transformers, NeurIPS, 2023 [Paper]

- Efficiently Modeling Long Sequences with Structured State Spaces, ICLR, 2022 [Paper] [Code]

- Diagonal State Spaces are as Effective as Structured State Spaces, NeurIPS, 2022 [Paper] [Code]

- Differential Transformer, arXiv, 2024 [Paper]

- Scalable MatMul-free Language Modeling, arXiv, 2024 [Paper]

- You Only Cache Once: Decoder-Decoder Architectures for Language Models, arXiv, 2024 [Paper]

- MEGALODON: Efficient LLM Pretraining and Inference with Unlimited Context Length, arXiv, 2024 [Paper]

- DiJiang: Efficient Large Language Models through Compact Kernelization, arXiv, 2024 [Paper]

- Griffin: Mixing Gated Linear Recurrences with Local Attention for Efficient Language Models, arXiv, 2024 [Paper]

- PanGu-π: Enhancing Language Model Architectures via Nonlinearity Compensation, arXiv, 2023 [Paper]

- RWKV: Reinventing RNNs for the Transformer Era, EMNLP-Findings, 2023 [Paper]

- Hyena Hierarchy: Towards Larger Convolutional Language Models, arXiv, 2023 [Paper]

- MEGABYTE: Predicting Million-byte Sequences with Multiscale Transformers, arXiv, 2023 [Paper]

- MATES: Model-Aware Data Selection for Efficient Pretraining with Data Influence Models, arXiv, 2024 [Paper]

- DoReMi: Optimizing Data Mixtures Speeds Up Language Model Pretraining, NeurIPS, 2023 [Paper]

- Data Selection for Language Models via Importance Resampling, NeurIPS, 2023 [Paper] [Code]

- NLP From Scratch Without Large-Scale Pretraining: A Simple and Efficient Framework, ICML, 2022 [Paper] [Code]

- Span Selection Pre-training for Question Answering, ACL, 2020 [Paper] [Code]

- Show, Don’t Tell: Aligning Language Models with Demonstrated Feedback, arXiv, 2024 [Paper]

- Synthetic Data (Almost) from Scratch: Generalized Instruction Tuning for Language Models, arXiv, 2024 [Paper]

- AutoMathText: Autonomous Data Selection with Language Models for Mathematical Texts, arXiv, 2024 [Paper] [Code]

- What Makes Good Data for Alignment? A Comprehensive Study of Automatic Data Selection in Instruction Tuning, ICLR, 2024 [Paper] [Code]

- How to Train Data-Efficient LLMs, arXiv, 2024 [Paper]

- LESS: Selecting Influential Data for Targeted Instruction Tuning, arXiv, 2024 [Paper] [Code]

- Superfiltering: Weak-to-Strong Data Filtering for Fast Instruction-Tuning, arXiv, 2024 [Paper] [Code]

- One Shot Learning as Instruction Data Prospector for Large Language Models, arXiv, 2023 [Paper]

- MoDS: Model-oriented Data Selection for Instruction Tuning, arXiv, 2023 [Paper] [Code]

- From Quantity to Quality: Boosting LLM Performance with Self-Guided Data Selection for Instruction Tuning, arXiv, 2023 [Paper] [Code]

- Instruction Mining: When Data Mining Meets Large Language Model Finetuning, arXiv, 2023 [Paper]

- Data-Efficient Finetuning Using Cross-Task Nearest Neighbors, ACL, 2023 [Paper] [Code]

- Data Selection for Fine-tuning Large Language Models Using Transferred Shapley Values, ACL SRW, 2023 [Paper] [Code]

- Maybe Only 0.5% Data is Needed: A Preliminary Exploration of Low Training Data Instruction Tuning, arXiv, 2023 [Paper]

- AlpaGasus: Training A Better Alpaca with Fewer Data, arXiv, 2023 [Paper] [Code]

- LIMA: Less Is More for Alignment, arXiv, 2023 [Paper]

- Unified Demonstration Retriever for In-Context Learning, ACL, 2023 [Paper] [Code]

- Large Language Models Are Latent Variable Models: Explaining and Finding Good Demonstrations for In-Context Learning, NeurIPS, 2023 [Paper] [Code]

- In-Context Learning with Iterative Demonstration Selection, arXiv, 2022 [Paper]

- Dr.ICL: Demonstration-Retrieved In-context Learning, arXiv, 2022 [Paper]

- Learning to Retrieve In-Context Examples for Large Language Models, arXiv, 2022 [Paper]

- Finding Supporting Examples for In-Context Learning, arXiv, 2022 [Paper]

- Self-Adaptive In-Context Learning: An Information Compression Perspective for In-Context Example Selection and Ordering, ACL, 2023 [Paper] [Code]

- Selective Annotation Makes Language Models Better Few-Shot Learners, ICLR, 2023 [Paper] [Code]

- What Makes Good In-Context Examples for GPT-3? DeeLIO, 2022 [Paper]

- Learning To Retrieve Prompts for In-Context Learning, NAACL-HLT, 2022 [Paper] [Code]

- Active Example Selection for In-Context Learning, EMNLP, 2022 [Paper] [Code]

- Rethinking the Role of Demonstrations: What makes In-context Learning Work? EMNLP, 2022 [Paper] [Code]

- Fantastically Ordered Prompts and Where to Find Them: Overcoming Few-Shot Prompt Order Sensitivity, ACL, 2022 [Paper]

- Large Language Models as Optimizers, arXiv, 2023 [Paper]

- Instruction Induction: From Few Examples to Natural Language Task Descriptions, ACL, 2023 [Paper] [Code]

- Large Language Models Are Human-Level Prompt Engineers, ICLR, 2023 [Paper] [Code]

- TeGit: Generating High-Quality Instruction-Tuning Data with Text-Grounded Task Design, arXiv, 2023 [Paper]

- Self-Instruct: Aligning Language Model with Self Generated Instructions, ACL, 2023 [Paper] [Code]

- Training Language Models to Self-Correct via Reinforcement Learning, arXiv, 2024 [Paper]

- Scaling LLM Test-Time Compute Optimally can be More Effective than Scaling Model Parameters, arXiv, 2024 [Paper]

- Learning to Reason with LLMs, Website, 2024 [Html]

- Quiet-STaR: Language Models Can Teach Themselves to Think Before Speaking, arXiv, 2024 [Paper]

- From Explicit CoT to Implicit CoT: Learning to Internalize CoT Step by Step, ICLR, 2024 [Paper]

- Automatic Chain of Thought Prompting in Large Language Models, ICLR, 2023 [Paper] [Code]

- Measuring and Narrowing the Compositionality Gap in Language Models, EMNLP, 2023 [Paper] [Code]

- ReAct: Synergizing Reasoning and Acting in Language Models, ICLR, 2023 [Paper] [Code]

- Least-to-Most Prompting Enables Complex Reasoning in Large Language Models, ICLR, 2023 [Paper]

- Graph of Thoughts: Solving Elaborate Problems with Large Language Models, arXiv, 2023 [Paper] [Code]

- Tree of Thoughts: Deliberate Problem Solving with Large Language Models, NeurIPS, 2023 [Paper] [Code]

- Self-Consistency Improves Chain of Thought Reasoning in Language Models, ICLR, 2023 [Paper]

- Contrastive Chain-of-Thought Prompting, arXiv, 2023 [Paper] [Code]

- Everything of Thoughts: Defying the Law of Penrose Triangle for Thought Generation, arXiv, 2023 [Paper]

- Chain-of-Thought Prompting Elicits Reasoning in Large Language Models, NeurIPS, 2022 [Paper]

- Better & Faster Large Language Models via Multi-token Prediction, arXiv, 2024 [Paper]

- Skeleton-of-Thought: Large Language Models Can Do Parallel Decoding, arXiv, 2023 [Paper] [Code]

- LLMLingua-2: Data Distillation for Efficient and Faithful Task-Agnostic Prompt Compression, arXiv, 2024 [Paper]

- PCToolkit: A Unified Plug-and-Play Prompt Compression Toolkit of Large Language Models, arXiv, 2024 [Paper]

- Compressed Context Memory For Online Language Model Interaction, ICLR, 2024 [Paper]

- Learning to Compress Prompts with Gist Tokens, arXiv, 2023 [Paper]

- Adapting Language Models to Compress Contexts, EMNLP, 2023 [Paper] [Code]

- In-context Autoencoder for Context Compression in a Large Language Model, arXiv, 2023 [Paper] [Code]

- LongLLMLingua: Accelerating and Enhancing LLMs in Long Context Scenarios via Prompt Compression, arXiv, 2023 [Paper] [Code]

- Discrete Prompt Compression with Reinforcement Learning, arXiv, 2023 [Paper]

- Nugget 2D: Dynamic Contextual Compression for Scaling Decoder-only Language Models, arXiv, 2023 [Paper]

- TempLM: Distilling Language Models into Template-Based Generators, arXiv, 2022 [Paper] [Code]

- PromptGen: Automatically Generate Prompts using Generative Models, NAACL Findings, 2022 [Paper]

- AutoPrompt: Eliciting Knowledge from Language Models with Automatically Generated Prompts, EMNLP, 2020 [Paper] [Code]

- MegaScale: Scaling Large Language Model Training to More Than 10,000 GPUs, arXiv, 2024 [Paper]

- CoLLiE: Collaborative Training of Large Language Models in an Efficient Way, EMNLP, 2023 [Paper] [Code]

- An Efficient 2D Method for Training Super-Large Deep Learning Models, IPDPS, 2023 [Paper] [Code]

- PyTorch FSDP: Experiences on Scaling Fully Sharded Data Parallel, VLDB, 2023 [Paper]

- Bamboo: Making Preemptible Instances Resilient for Affordable Training, NSDI, 2023 [Paper] [Code]

- Oobleck: Resilient Distributed Training of Large Models Using Pipeline Templates, SOSP, 2023 [Paper] [Code]

- Varuna: Scalable, Low-cost Training of Massive Deep Learning Models, EuroSys, 2022 [Paper] [Code]

- Unity: Accelerating DNN Training Through Joint Optimization of Algebraic Transformations and Parallelization, OSDI, 2022 [Paper] [Code]

- Tesseract: Parallelize the Tensor Parallelism Efficiently, ICPP, 2022 [Paper]

- Alpa: Automating Inter- and Intra-Operator Parallelism for Distributed Deep Learning, OSDI, 2022 [Paper] [Code]

- Maximizing Parallelism in Distributed Training for Huge Neural Networks, arXiv, 2021 [Paper]

- Megatron-LM: Training Multi-Billion Parameter Language Models Using Model Parallelism, arXiv, 2020 [Paper]

- Efficient Large-Scale Language Model Training on GPU Clusters Using Megatron-LM, SC, 2021 [Paper] [Code]

- ZeRO-Infinity: Breaking the GPU Memory Wall for Extreme Scale Deep Learning, SC, 2021 [Paper]

- ZeRO-Offload: Democratizing Billion-Scale Model Training, USENIX ATC, 2021 [Paper] [Code]

- ZeRO: Memory Optimizations Toward Training Trillion Parameter Models, SC, 2020 [Paper] [Code]

- LUT TENSOR CORE: Lookup Table Enables Efficient Low-Bit LLM Inference Acceleration, arXiv, 2024 [Paper]

- TurboTransformers: An Efficient GPU Serving System for Transformer Models, PPoPP, 2021 [Paper]

- Orca: A Distributed Serving System for Transformer-Based Generative Models, OSDI, 2022 [Paper]

- FlexGen: High-Throughput Generative Inference of Large Language Models with a Single GPU, ICML, 2023 [Paper] [Code]

- Efficiently Scaling Transformer Inference, MLSys, 2023 [Paper]

- DeepSpeed-Inference: Enabling Efficient Inference of Transformer Models at Unprecedented Scale, SC, 2022 [Paper]

- Efficient Memory Management for Large Language Model Serving with PagedAttention, SOSP, 2023 [Paper] [Code]

- S-LoRA: Serving Thousands of Concurrent LoRA Adapters, arXiv, 2023 [Paper] [Code]

- Petals: Collaborative Inference and Fine-tuning of Large Models, arXiv, 2023 [Paper]

- SpotServe: Serving Generative Large Language Models on Preemptible Instances, arXiv, 2023 [Paper]

- KV-Runahead: Scalable Causal LLM Inference by Parallel Key-Value Cache Generation, ICML, 2024 [Paper]

- CacheGen: KV Cache Compression and Streaming for Fast Language Model Serving, arXiv, 2024 [Paper]

- Predictive Pipelined Decoding: A Compute-Latency Trade-off for Exact LLM Decoding, TMLR, 2024 [Paper]

- Flash-LLM: Enabling Cost-Effective and Highly-Efficient Large Generative Model Inference with Unstructured Sparsity, arXiv, 2023 [Paper]

- S3: Increasing GPU Utilization during Generative Inference for Higher Throughput, arXiv, 2023 [Paper]

- Fast Distributed Inference Serving for Large Language Models, arXiv, 2023 [Paper]

- Response Length Perception and Sequence Scheduling: An LLM-Empowered LLM Inference Pipeline, arXiv, 2023 [Paper]

- SARATHI: Efficient LLM Inference by Piggybacking Decodes with Chunked Prefills, arXiv, 2023 [Paper]

- FrugalGPT: How to Use Large Language Models While Reducing Cost and Improving Performance, arXiv, 2023 [Paper]

- Prompt Cache: Modular Attention Reuse for Low-Latency Inference, arXiv, 2023 [Paper]

- Fairness in Serving Large Language Models, arXiv, 2023 [Paper]

- FlashAttention-3: Fast and Accurate Attention with Asynchrony and Low-precision, arXiv, 2024 [Paper]

- FlashAttention: Fast and Memory-Efficient Exact Attention with IO-Awareness, NeurIPS, 2022 [Paper] [Code]

- FlashAttention-2: Faster Attention with Better Parallelism and Work Partitioning, arXiv, 2023 [Paper] [Code]

- Flash-Decoding for Long-Context Inference, Blog, 2023 [Blog]

- FlashDecoding++: Faster Large Language Model Inference on GPUs, arXiv, 2023 [Paper]

- PowerInfer: Fast Large Language Model Serving with a Consumer-grade GPU, arXiv, 2023 [Paper] [Code]

- LLM in a flash: Efficient Large Language Model Inference with Limited Memory, arXiv, 2023 [Paper]

- Chiplet Cloud: Building AI Supercomputers for Serving Large Generative Language Models, arXiv, 2023 [Paper]

- EdgeMoE: Fast On-Device Inference of MoE-based Large Language Models, arXiv, 2022 [Paper]

| | Efficient Training | Efficient Inference | Efficient Fine-Tuning |
|---|---|---|---|
| DeepSpeed [Code] | ✅ | ✅ | ✅ |
| Megatron [Code] | ✅ | ✅ | ✅ |
| ColossalAI [Code] | ✅ | ✅ | ✅ |
| Nanotron [Code] | ✅ | ✅ | ✅ |
| MegaBlocks [Code] | ✅ | ✅ | ✅ |
| FairScale [Code] | ✅ | ✅ | ✅ |
| Pax [Code] | ✅ | ✅ | ✅ |
| Composer [Code] | ✅ | ✅ | ✅ |
| OpenLLM [Code] | ❌ | ✅ | ✅ |
| LLM-Foundry [Code] | ❌ | ✅ | ✅ |
| vLLM [Code] | ❌ | ✅ | ❌ |
| TensorRT-LLM [Code] | ❌ | ✅ | ❌ |
| TGI [Code] | ❌ | ✅ | ❌ |
| RayLLM [Code] | ❌ | ✅ | ❌ |
| MLC LLM [Code] | ❌ | ✅ | ❌ |
| Sax [Code] | ❌ | ✅ | ❌ |
| Mosec [Code] | ❌ | ✅ | ❌ |

For Tasks:

Click tags to check more tools for each tasksFor Jobs:

Alternative AI tools for Efficient-LLMs-Survey

Similar Open Source Tools

Efficient-LLMs-Survey

This repository provides a systematic and comprehensive review of efficient LLMs research. We organize the literature in a taxonomy consisting of three main categories, covering distinct yet interconnected efficient LLMs topics from **model-centric** , **data-centric** , and **framework-centric** perspective, respectively. We hope our survey and this GitHub repository can serve as valuable resources to help researchers and practitioners gain a systematic understanding of the research developments in efficient LLMs and inspire them to contribute to this important and exciting field.

Awesome-LLM4RS-Papers

This paper list is about Large Language Model-enhanced Recommender System. It also contains some related works. Keywords: recommendation system, large language models

Recommendation-Systems-without-Explicit-ID-Features-A-Literature-Review

This repository is a collection of papers and resources related to recommendation systems, focusing on foundation models, transferable recommender systems, large language models, and multimodal recommender systems. It explores questions such as the necessity of ID embeddings, the shift from matching to generating paradigms, and the future of multimodal recommender systems. The papers cover various aspects of recommendation systems, including pretraining, user representation, dataset benchmarks, and evaluation methods. The repository aims to provide insights and advancements in the field of recommendation systems through literature reviews, surveys, and empirical studies.

Awesome-LLM-Compression

Awesome LLM compression research papers and tools to accelerate LLM training and inference.

Awesome-TimeSeries-SpatioTemporal-LM-LLM

Awesome-TimeSeries-SpatioTemporal-LM-LLM is a curated list of Large (Language) Models and Foundation Models for Temporal Data, including Time Series, Spatio-temporal, and Event Data. The repository aims to summarize recent advances in Large Models and Foundation Models for Time Series and Spatio-Temporal Data with resources such as papers, code, and data. It covers various applications like General Time Series Analysis, Transportation, Finance, Healthcare, Event Analysis, Climate, Video Data, and more. The repository also includes related resources, surveys, and papers on Large Language Models, Foundation Models, and their applications in AIOps.

Awesome-LLMs-on-device

Welcome to the ultimate hub for on-device Large Language Models (LLMs)! This repository is your go-to resource for all things related to LLMs designed for on-device deployment. Whether you're a seasoned researcher, an innovative developer, or an enthusiastic learner, this comprehensive collection of cutting-edge knowledge is your gateway to understanding, leveraging, and contributing to the exciting world of on-device LLMs.

Awesome-LLM-Post-training

The Awesome-LLM-Post-training repository is a curated collection of influential papers, code implementations, benchmarks, and resources related to Large Language Models (LLMs) Post-Training Methodologies. It covers various aspects of LLMs, including reasoning, decision-making, reinforcement learning, reward learning, policy optimization, explainability, multimodal agents, benchmarks, tutorials, libraries, and implementations. The repository aims to provide a comprehensive overview and resources for researchers and practitioners interested in advancing LLM technologies.

LLM-Tool-Survey

This repository contains a collection of papers related to tool learning with large language models (LLMs). The papers are organized according to the survey paper 'Tool Learning with Large Language Models: A Survey'. The survey focuses on the benefits and implementation of tool learning with LLMs, covering aspects such as task planning, tool selection, tool calling, response generation, benchmarks, evaluation, challenges, and future directions in the field. It aims to provide a comprehensive understanding of tool learning with LLMs and inspire further exploration in this emerging area.

Awesome-Text2SQL

Awesome Text2SQL is a curated repository containing tutorials and resources for Large Language Models, Text2SQL, Text2DSL, Text2API, Text2Vis, and more. It provides guidelines on converting natural language questions into structured SQL queries, with a focus on NL2SQL. The repository includes information on various models, datasets, evaluation metrics, fine-tuning methods, libraries, and practice projects related to Text2SQL. It serves as a comprehensive resource for individuals interested in working with Text2SQL and related technologies.

LLM-Agent-Survey

LLM-Agent-Survey is a comprehensive repository that provides a curated list of papers related to Large Language Model (LLM) agents. The repository categorizes papers based on LLM-Profiled Roles and includes high-quality publications from prestigious conferences and journals. It aims to offer a systematic understanding of LLM-based agents, covering topics such as tool use, planning, and feedback learning. The repository also includes unpublished papers with insightful analysis and novelty, marked for future updates. Users can explore a wide range of surveys, tool use cases, planning workflows, and benchmarks related to LLM agents.

awesome-ai4db-paper

The 'awesome-ai4db-paper' repository is a curated paper list focusing on AI for database (AI4DB) theory, frameworks, resources, and tools for data engineers. It includes a collection of research papers related to learning-based query optimization, training data set preparation, cardinality estimation, query-driven approaches, data-driven techniques, hybrid methods, pretraining models, plan hints, cost models, SQL embedding, join order optimization, query rewriting, end-to-end systems, text-to-SQL conversion, traditional database technologies, storage solutions, learning-based index design, and a learning-based configuration advisor. The repository aims to provide a comprehensive resource for individuals interested in AI applications in the field of database management.

llm-continual-learning-survey

This repository is an updating survey for Continual Learning of Large Language Models (CL-LLMs), providing a comprehensive overview of various aspects related to the continual learning of large language models. It covers topics such as continual pre-training, domain-adaptive pre-training, continual fine-tuning, model refinement, model alignment, multimodal LLMs, and miscellaneous aspects. The survey includes a collection of relevant papers, each focusing on different areas within the field of continual learning of large language models.

AwesomeLLM4APR

Awesome LLM for APR is a repository dedicated to exploring the capabilities of Large Language Models (LLMs) in Automated Program Repair (APR). It provides a comprehensive collection of research papers, tools, and resources related to using LLMs for various scenarios such as repairing semantic bugs, security vulnerabilities, syntax errors, programming problems, static warnings, self-debugging, type errors, web UI tests, smart contracts, hardware bugs, performance bugs, API misuses, crash bugs, test case repairs, formal proofs, GitHub issues, code reviews, motion planners, human studies, and patch correctness assessments. The repository serves as a valuable reference for researchers and practitioners interested in leveraging LLMs for automated program repair.

awesome-llm-security

Awesome LLM Security is a curated collection of tools, documents, and projects related to Large Language Model (LLM) security. It covers various aspects of LLM security including white-box, black-box, and backdoor attacks, defense mechanisms, platform security, and surveys. The repository provides resources for researchers and practitioners interested in understanding and safeguarding LLMs against adversarial attacks. It also includes a list of tools specifically designed for testing and enhancing LLM security.

Awesome-LLM4Graph-Papers

A collection of papers and resources about Large Language Models (LLM) for Graph Learning (Graph). Integrating LLMs with graph learning techniques to enhance performance in graph learning tasks. Categorizes approaches based on four primary paradigms and nine secondary-level categories. Valuable for research or practice in self-supervised learning for recommendation systems.

For similar tasks

Efficient-LLMs-Survey

This repository provides a systematic and comprehensive review of efficient LLMs research. We organize the literature in a taxonomy consisting of three main categories, covering distinct yet interconnected efficient LLMs topics from **model-centric** , **data-centric** , and **framework-centric** perspective, respectively. We hope our survey and this GitHub repository can serve as valuable resources to help researchers and practitioners gain a systematic understanding of the research developments in efficient LLMs and inspire them to contribute to this important and exciting field.

Awesome_GPT_Super_Prompting

Awesome_GPT_Super_Prompting is a repository that provides resources related to Jailbreaks, Leaks, Injections, Libraries, Attack, Defense, and Prompt Engineering. It includes information on ChatGPT Jailbreaks, GPT Assistants Prompt Leaks, GPTs Prompt Injection, LLM Prompt Security, Super Prompts, Prompt Hack, Prompt Security, Ai Prompt Engineering, and Adversarial Machine Learning. The repository contains curated lists of repositories, tools, and resources related to GPTs, prompt engineering, prompt libraries, and secure prompting. It also offers insights into Cyber-Albsecop GPT Agents and Super Prompts for custom GPT usage.

inspect_ai

Inspect AI is a framework developed by the UK AI Safety Institute for evaluating large language models. It offers various built-in components for prompt engineering, tool usage, multi-turn dialog, and model graded evaluations. Users can extend Inspect by adding new elicitation and scoring techniques through additional Python packages. The tool aims to provide a comprehensive solution for assessing the performance and safety of language models.

ai-starter-kit

SambaNova AI Starter Kits is a collection of open-source examples and guides designed to facilitate the deployment of AI-driven use cases for developers and enterprises. The kits cover various categories such as Data Ingestion & Preparation, Model Development & Optimization, Intelligent Information Retrieval, and Advanced AI Capabilities. Users can obtain a free API key using SambaNova Cloud or deploy models using SambaStudio. Most examples are written in Python but can be applied to any programming language. The kits provide resources for tasks like text extraction, fine-tuning embeddings, prompt engineering, question-answering, image search, post-call analysis, and more.

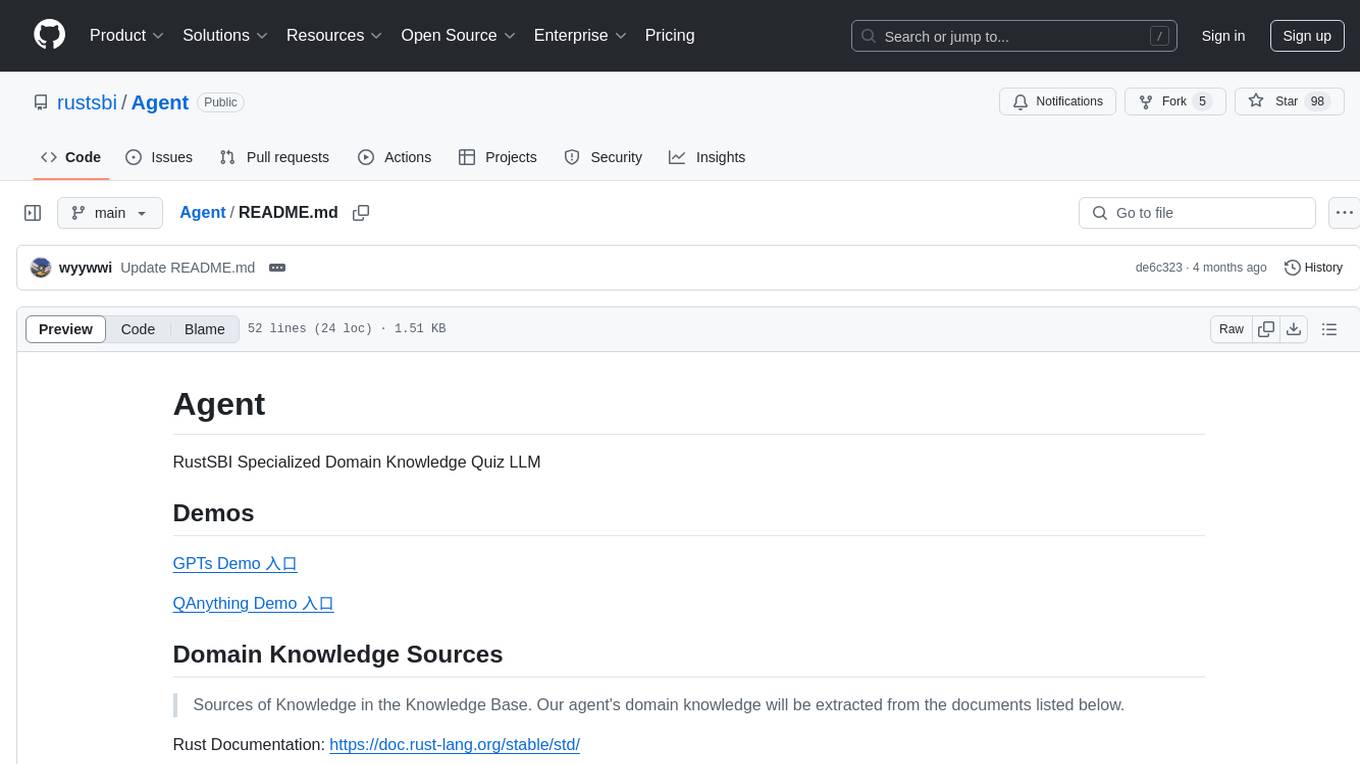

Agent

Agent is a RustSBI specialized domain knowledge quiz LLM tool that extracts domain knowledge from various sources such as Rust Documentation, RISC-V Documentation, Bouffalo Docs, Bouffalo SDK, and Xiangshan Docs. It also provides resources for LLM prompt engineering and RAG engineering, including guides and existing projects related to retrieval-augmented generation (RAG) systems.

aimet

AIMET is a library that provides advanced model quantization and compression techniques for trained neural network models. It provides features that have been proven to improve run-time performance of deep learning neural network models with lower compute and memory requirements and minimal impact to task accuracy. AIMET is designed to work with PyTorch, TensorFlow and ONNX models. We also host the AIMET Model Zoo - a collection of popular neural network models optimized for 8-bit inference. We also provide recipes for users to quantize floating point models using AIMET.

AutoGPTQ

AutoGPTQ is an easy-to-use LLM quantization package with user-friendly APIs, based on GPTQ algorithm (weight-only quantization). It provides a simple and efficient way to quantize large language models (LLMs) to reduce their size and computational cost while maintaining their performance. AutoGPTQ supports a wide range of LLM models, including GPT-2, GPT-J, OPT, and BLOOM. It also supports various evaluation tasks, such as language modeling, sequence classification, and text summarization. With AutoGPTQ, users can easily quantize their LLM models and deploy them on resource-constrained devices, such as mobile phones and embedded systems.

hqq

HQQ is a fast and accurate model quantizer that skips the need for calibration data. It's super simple to implement (just a few lines of code for the optimizer). It can crunch through quantizing the Llama2-70B model in only 4 minutes! 🚀

For similar jobs

Efficient-LLMs-Survey

This repository provides a systematic and comprehensive review of efficient LLMs research. We organize the literature in a taxonomy consisting of three main categories, covering distinct yet interconnected efficient LLMs topics from **model-centric** , **data-centric** , and **framework-centric** perspective, respectively. We hope our survey and this GitHub repository can serve as valuable resources to help researchers and practitioners gain a systematic understanding of the research developments in efficient LLMs and inspire them to contribute to this important and exciting field.

hqq

HQQ is a fast and accurate model quantizer that skips the need for calibration data. It's super simple to implement (just a few lines of code for the optimizer). It can crunch through quantizing the Llama2-70B model in only 4 minutes! 🚀

promptfoo

Promptfoo is a tool for testing and evaluating LLM output quality. With promptfoo, you can build reliable prompts, models, and RAGs with benchmarks specific to your use-case, speed up evaluations with caching, concurrency, and live reloading, score outputs automatically by defining metrics, use as a CLI, library, or in CI/CD, and use OpenAI, Anthropic, Azure, Google, HuggingFace, open-source models like Llama, or integrate custom API providers for any LLM API.

ComfyUI-IF_AI_tools

ComfyUI-IF_AI_tools is a set of custom nodes for ComfyUI that allows you to generate prompts using a local Large Language Model (LLM) via Ollama. This tool enables you to enhance your image generation workflow by leveraging the power of language models.

log10

Log10 is a one-line Python integration to manage your LLM data. It helps you log both closed and open-source LLM calls, compare and identify the best models and prompts, store feedback for fine-tuning, collect performance metrics such as latency and usage, and perform analytics and monitor compliance for LLM powered applications. Log10 offers various integration methods, including a python LLM library wrapper, the Log10 LLM abstraction, and callbacks, to facilitate its use in both existing production environments and new projects. Pick the one that works best for you. Log10 also provides a copilot that can help you with suggestions on how to optimize your prompt, and a feedback feature that allows you to add feedback to your completions. Additionally, Log10 provides prompt provenance, session tracking and call stack functionality to help debug prompt chains. With Log10, you can use your data and feedback from users to fine-tune custom models with RLHF, and build and deploy more reliable, accurate and efficient self-hosted models. Log10 also supports collaboration, allowing you to create flexible groups to share and collaborate over all of the above features.

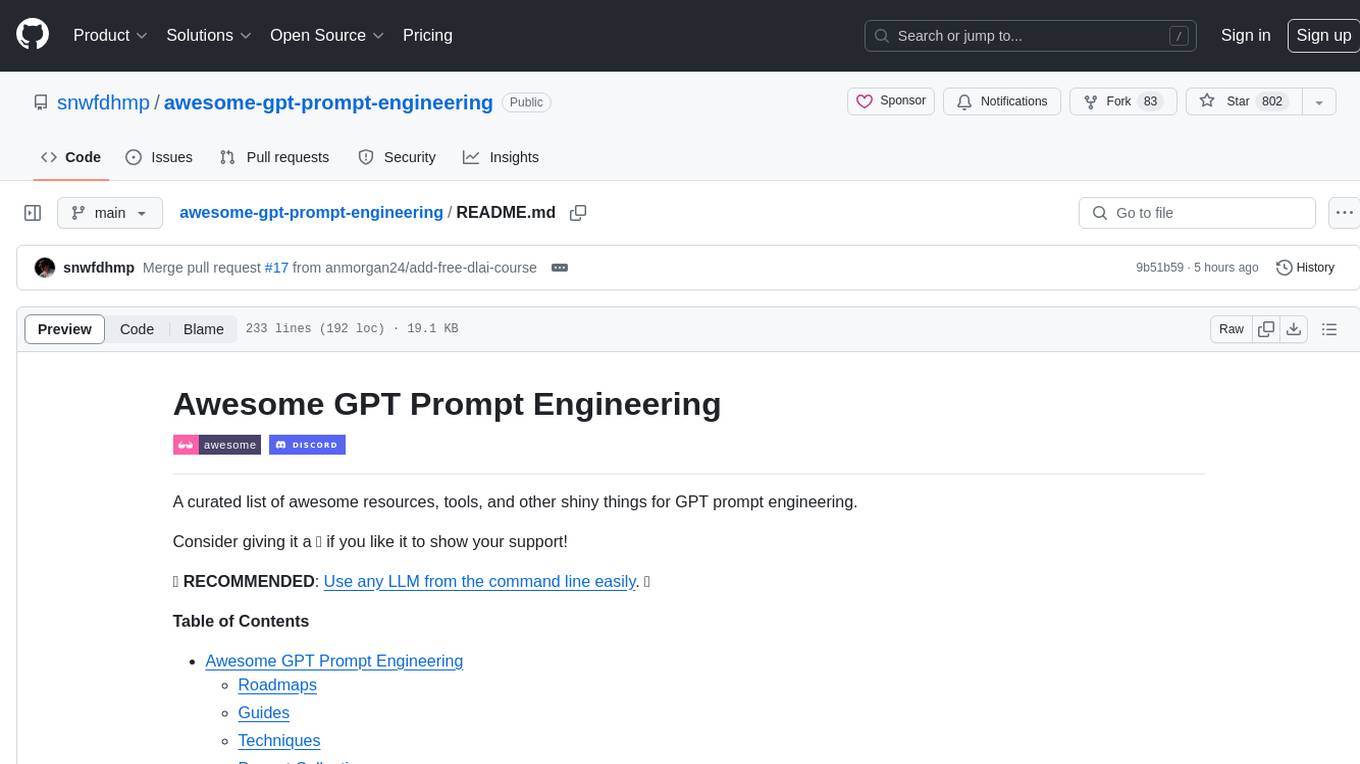

awesome-gpt-prompt-engineering

Awesome GPT Prompt Engineering is a curated list of resources, tools, and shiny things for GPT prompt engineering. It includes roadmaps, guides, techniques, prompt collections, papers, books, communities, prompt generators, Auto-GPT related tools, prompt injection information, ChatGPT plug-ins, prompt engineering job offers, and AI links directories. The repository aims to provide a comprehensive guide for prompt engineering enthusiasts, covering various aspects of working with GPT models and improving communication with AI tools.